[GitHub] spark issue #17864: [SPARK-20604][ML] Allow imputer to handle numeric types

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/17864 Thanks for following up on this, Felix. Still waiting for an agreement on this... Would like to have more direction on this. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18870: [SPARK-19270][FOLLOW-UP][ML] PySpark GLR model.summary s...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18870 LGTM. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18831: [SPARK-21622][ML][SparkR] Support offset in SparkR GLM

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18831 Thanks both for the comments. Yes, I think it's be to keep this PR on offset and we can address the other improvements later. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18831: [SPARK-21622][ML][SparkR] Support offset in Spark...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18831#discussion_r131386220

--- Diff: R/pkg/tests/fulltests/test_mllib_regression.R ---

@@ -173,6 +173,14 @@ test_that("spark.glm summary", {

expect_equal(stats$df.residual, rStats$df.residual)

expect_equal(stats$aic, rStats$aic)

+ # Test spark.glm works with offset

+ training <- suppressWarnings(createDataFrame(iris))

+ stats <- summary(spark.glm(training, Sepal_Width ~ Sepal_Length +

Species,

+ family = poisson(), offsetCol =

"Petal_Length"))

+ rStats <- suppressWarnings(summary(glm(Sepal.Width ~ Sepal.Length +

Species,

+data = iris, family = poisson(), offset =

iris$Petal.Length)))

--- End diff --

Then do you want to make the change for weight as well?

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18831: [SPARK-21622][ML][SparkR] Support offset in SparkR GLM

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18831 Thanks for your comments, Felix. Addressed all issues. @yanboliang Could you take a quick look? --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18831: [SPARK-21622][ML][SparkR] Support offset in SparkR GLM

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18831 Jenkins, retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18831: [SPARK-21622][ML][SparkR] Support offset in Spark...

GitHub user actuaryzhang opened a pull request: https://github.com/apache/spark/pull/18831 [SPARK-21622][ML][SparkR] Support offset in SparkR GLM ## What changes were proposed in this pull request? Support offset in SparkR GLM #16699 You can merge this pull request into a Git repository by running: $ git pull https://github.com/actuaryzhang/spark sparkROffset Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18831.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18831 commit 6ec068e5f48d393d539f4600bca3cbd1ea7d65a3 Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-08-03T06:37:41Z add offset to SparkR --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18809: [SPARK-21602][R] Add map_keys and map_values functions t...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18809 LGTM --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #16630: [SPARK-19270][ML] Add summary table to GLM summary

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/16630 Made a new commit to address the comments. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #16630: [SPARK-19270][ML] Add summary table to GLM summar...

Github user actuaryzhang commented on a diff in the pull request: https://github.com/apache/spark/pull/16630#discussion_r127853762 --- Diff: mllib/src/main/scala/org/apache/spark/ml/regression/GeneralizedLinearRegression.scala --- @@ -452,6 +452,8 @@ object GeneralizedLinearRegression extends DefaultParamsReadable[GeneralizedLine private[regression] val epsilon: Double = 1E-16 + private[regression] val Intercept: String = "(Intercept)" --- End diff -- Removed. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #16630: [SPARK-19270][ML] Add summary table to GLM summary

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/16630 @yanboliang Thanks for the suggestions. I have made a new commit that addresses your comments. In the new version, I used an array of tuple to represent the coefficient matrix. I used tuple because I have mixed type of string and double (it's necessary to store the feature names since they also depend on whether there is intercept). I then wrote a `showString` function similar to that in the `DataSet` class that compiles all summary info into a string, and defined show methods to print out the estimated model. The output is very similar to that in R except that I did not show the residuals and significance levels. Please let me know your thoughts on this update. Below is an example of the call and the output: ``` model.summary.show() +---+++--+--+ |Feature|Estimate|StdError|TValue|PValue| +---+++--+--+ |(Intercept)| 0.790| 4.013| 0.197| 0.862| | features_0| 0.226| 2.115| 0.107| 0.925| | features_1| 0.468| 0.582| 0.804| 0.506| +---+++--+--+ (Dispersion parameter for gaussian family taken to be 14.516) Null deviance: 46.800 on 2 degrees of freedom Residual deviance: 29.032 on 2 degrees of freedom AIC: 30.984 ``` --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #16630: [SPARK-19270][ML] Add summary table to GLM summar...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/16630#discussion_r127844484

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/regression/GeneralizedLinearRegression.scala

---

@@ -1441,4 +1460,33 @@ class GeneralizedLinearRegressionTrainingSummary

private[regression] (

"No p-value available for this GeneralizedLinearRegressionModel")

}

}

+

+ /**

+ * Summary table with feature name, coefficient, standard error,

+ * tValue and pValue.

+ */

+ @Since("2.2.0")

+ lazy val summaryTable: DataFrame = {

--- End diff --

Updated it as `coefficientMatrix`.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #16630: [SPARK-19270][ML] Add summary table to GLM summar...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/16630#discussion_r127844472

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/regression/GeneralizedLinearRegression.scala

---

@@ -1441,4 +1460,33 @@ class GeneralizedLinearRegressionTrainingSummary

private[regression] (

"No p-value available for this GeneralizedLinearRegressionModel")

}

}

+

+ /**

+ * Summary table with feature name, coefficient, standard error,

+ * tValue and pValue.

+ */

+ @Since("2.2.0")

--- End diff --

Done

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #16630: [SPARK-19270][ML] Add summary table to GLM summar...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/16630#discussion_r127844463

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/regression/GeneralizedLinearRegression.scala

---

@@ -1187,6 +1189,23 @@ class GeneralizedLinearRegressionSummary

private[regression] (

@Since("2.2.0")

lazy val numInstances: Long = predictions.count()

+

+ /**

+ * Name of features. If the name cannot be retrieved from attributes,

+ * set default names to feature column name with numbered suffix "_0",

"_1", and so on.

+ */

+ @Since("2.2.0")

+ lazy val featureNames: Array[String] = {

--- End diff --

Made it `private[ml]` since it is used in the R wrapper.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #16630: [SPARK-19270][ML] Add summary table to GLM summary

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/16630 @yanboliang Could you take a look? --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18534: [SPARK-21310][ML][PySpark] Expose offset in PySpark

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18534 @yanboliang --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18534: [SPARK-21310][ML][PySpark] Expose offset in PySpa...

GitHub user actuaryzhang opened a pull request: https://github.com/apache/spark/pull/18534 [SPARK-21310][ML][PySpark] Expose offset in PySpark ## What changes were proposed in this pull request? Add offset to PySpark in GLM as in #16699. ## How was this patch tested? Python test You can merge this pull request into a Git repository by running: $ git pull https://github.com/actuaryzhang/spark pythonOffset Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18534.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18534 commit f523149709f33c9bd805f24589f6651675cc6359 Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-07-05T05:33:02Z add offset to pyspark --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18481: [SPARK-20889][SparkR] Grouped documentation for W...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18481#discussion_r125349671

--- Diff: R/pkg/R/functions.R ---

@@ -2875,9 +2875,9 @@ setMethod("ifelse",

#' @details

#' \code{cume_dist}: Returns the cumulative distribution of values within

a window partition,

#' i.e. the fraction of rows that are below the current row:

-#' number of values before (and including) x / total number of rows in the

partition.

+#' (number of values before and including x) / (total number of rows in

the partition)

#' This is equivalent to the \code{CUME_DIST} function in SQL.

--- End diff --

This is not a formula, right? Thought this is pretty clear. Not sure what

is the ask. I can just add a period after the `partition)`.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18481: [SPARK-20889][SparkR] Grouped documentation for WINDOW c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18481 jenkins, retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18481: [SPARK-20889][SparkR] Grouped documentation for WINDOW c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18481 OK, docs are now updated as you suggested. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18495: [SPARK-21275][ML] Update GLM test to use supporte...

GitHub user actuaryzhang opened a pull request: https://github.com/apache/spark/pull/18495 [SPARK-21275][ML] Update GLM test to use supportedFamilyNames ## What changes were proposed in this pull request? Update GLM test to use supportedFamilyNames as suggested here: https://github.com/apache/spark/pull/16699#discussion-diff-100574976R855 You can merge this pull request into a Git repository by running: $ git pull https://github.com/actuaryzhang/spark mlGlmTest2 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18495.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18495 commit 4fe7641c200dffe416ef6bd84c87f778bba5c799 Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-07-01T00:12:55Z Update GLM test to use supportedFamilyNames --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18495: [SPARK-21275][ML] Update GLM test to use supportedFamily...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18495 @yanboliang --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18493: [SPARK-20889][SparkR][Followup] Clean up grouped doc for...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18493 We are done for this doc update effort after this one :) --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18493: [SPARK-20889][SparkR][Followup] Clean up grouped ...

GitHub user actuaryzhang opened a pull request: https://github.com/apache/spark/pull/18493 [SPARK-20889][SparkR][Followup] Clean up grouped doc for column methods ## What changes were proposed in this pull request? Add doc for methods that were left out, and fix various style and consistency issues. You can merge this pull request into a Git repository by running: $ git pull https://github.com/actuaryzhang/spark sparkRDocCleanup Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18493.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18493 commit 700e73c16fdfca4cc66605b28c6521d8d55f82de Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-06-30T07:05:13Z add doc for spark_partition_id commit c97caa91f9b264b8393850ac2c75440602d457b5 Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-06-30T07:16:20Z add doc for window commit 2ea1d0ab8c60a59f6b235ac771fa5a72dc48f9fe Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-06-30T07:24:58Z fix issue in example commit 1e45874517a773e3de7c718a3b74f95682b2f0cb Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-06-30T20:49:20Z fix style --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18481: [SPARK-20889][SparkR] Grouped documentation for W...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18481#discussion_r125112816

--- Diff: R/pkg/R/generics.R ---

@@ -1013,9 +1013,9 @@ setGeneric("create_map", function(x, ...) {

standardGeneric("create_map") })

#' @name NULL

setGeneric("hash", function(x, ...) { standardGeneric("hash") })

-#' @param x empty. Should be used with no argument.

--- End diff --

added.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18481: [SPARK-20889][SparkR] Grouped documentation for W...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18481#discussion_r125112517

--- Diff: R/pkg/R/functions.R ---

@@ -3083,11 +3011,10 @@ setMethod("rank",

column(jc)

})

-# Expose rank() in the R base package

-#' @param x a numeric, complex, character or logical vector.

-#' @param ... additional argument(s) passed to the method.

-#' @name rank

-#' @rdname rank

+#' @details

+#' \code{rank}: Exposes \code{rank()} in the R base package. In this case,

\code{x}

--- End diff --

Ye, actually we don need to doc this. We only need to add an alias and that

should satisfy the R requirement. User can still use the base doc.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18481: [SPARK-20889][SparkR] Grouped documentation for W...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18481#discussion_r125112058

--- Diff: R/pkg/R/functions.R ---

@@ -2844,27 +2869,16 @@ setMethod("ifelse",

## Window functions##

-#' cume_dist

-#'

-#' Window function: returns the cumulative distribution of values within a

window partition,

+#' @details

+#' \code{cume_dist}: Returns the cumulative distribution of values within

a window partition,

#' i.e. the fraction of rows that are below the current row.

-#'

-#' N = total number of rows in the partition

-#' cume_dist(x) = number of values before (and including) x / N

-#'

+#' N = total number of rows in the partition

--- End diff --

Fixed with better doc.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18481: [SPARK-20889][SparkR] Grouped documentation for W...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18481#discussion_r125112069

--- Diff: R/pkg/R/functions.R ---

@@ -2903,34 +2907,16 @@ setMethod("dense_rank",

column(jc)

})

-#' lag

-#'

-#' Window function: returns the value that is \code{offset} rows before

the current row, and

+#' @details

+#' \code{lag}: Returns the value that is \code{offset} rows before the

current row, and

#' \code{defaultValue} if there is less than \code{offset} rows before the

current row. For example,

#' an \code{offset} of one will return the previous row at any given point

in the window partition.

-#'

#' This is equivalent to the \code{LAG} function in SQL.

#'

-#' @param x the column as a character string or a Column to compute on.

-#' @param offset the number of rows back from the current row from which

to obtain a value.

-#' If not specified, the default is 1.

#' @param defaultValue (optional) default to use when the offset row does

not exist.

--- End diff --

Done.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18481: [SPARK-20889][SparkR] Grouped documentation for W...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18481#discussion_r125111820

--- Diff: R/pkg/R/functions.R ---

@@ -200,6 +200,31 @@ NULL

#' head(select(tmp, sort_array(tmp$v1, asc = FALSE)))}

NULL

+#' Window functions for Column operations

+#'

+#' Window functions defined for \code{Column}.

+#'

+#' @param x In \code{lag} and \code{lead}, it is the column as a character

string or a Column

+#' to compute on. In \code{ntile}, it is the number of ntile

groups.

+#' @param offset In \code{lag}, the number of rows back from the current

row from which to obtain

+#' a value. In \code{lead}, the number of rows after the

current row from which to

+#' obtain a value. If not specified, the default is 1.

+#' @param ... additional argument(s).

+#' @name column_window_functions

+#' @rdname column_window_functions

+#' @family window functions

+#' @examples

+#' \dontrun{

+#' # Dataframe used throughout this doc

+#' df <- createDataFrame(cbind(model = rownames(mtcars), mtcars))

+#' ws <- orderBy(windowPartitionBy("am"), "hp")

+#' tmp <- mutate(df, dist = over(cume_dist(), ws), dense_rank =

over(dense_rank(), ws),

--- End diff --

OK. added back

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18481: [SPARK-20889][SparkR] Grouped documentation for WINDOW c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18481 jenkins, retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18481: [SPARK-20889][SparkR] Grouped documentation for WINDOW c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18481 Ahh, forgot about the window functions. This is actually the last set... @felixcheung @HyukjinKwon    --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18481: [SPARK-20889][SparkR] Grouped documentation for W...

GitHub user actuaryzhang opened a pull request: https://github.com/apache/spark/pull/18481 [SPARK-20889][SparkR] Grouped documentation for WINDOW column methods ## What changes were proposed in this pull request? Grouped documentation for column window methods. You can merge this pull request into a Git repository by running: $ git pull https://github.com/actuaryzhang/spark sparkRDocWindow Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18481.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18481 commit e7d19a3da6c580575734e696d7be76bd08d4bae1 Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-06-30T06:44:44Z update doc for window functions --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18458: [SPARK-20889][SparkR] Grouped documentation for COLLECTI...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18458 @felixcheung This is the last set of this doc update. Once it gets in, I will do another pass to fix any styles or consistency issue. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #16699: [SPARK-18710][ML] Add offset in GLM

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/16699#discussion_r124869366

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/regression/GeneralizedLinearRegression.scala

---

@@ -961,14 +1008,16 @@ class GeneralizedLinearRegressionModel private[ml] (

}

override protected def transformImpl(dataset: Dataset[_]): DataFrame = {

--- End diff --

Thanks for summarizing the different cases. I think this is worth a deeper

discussion as follow-up work. Let me work on this in another PR.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #16699: [SPARK-18710][ML] Add offset in GLM

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/16699 Made a new commit that fixes the issues you pointed out. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18422: [SPARK-20889][SparkR] Grouped documentation for N...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18422#discussion_r124719484

--- Diff: R/pkg/R/functions.R ---

@@ -3554,21 +3493,17 @@ setMethod("grouping_id",

column(jc)

})

-#' input_file_name

-#'

-#' Creates a string column with the input file name for a given row

+#' @details

+#' \code{input_file_name}: Creates a string column with the input file

name for a given row.

#'

-#' @rdname input_file_name

-#' @name input_file_name

-#' @family non-aggregate functions

-#' @aliases input_file_name,missing-method

+#' @rdname column_nonaggregate_functions

+#' @aliases input_file_name input_file_name,missing-method

#' @export

#' @examples

-#' \dontrun{

-#' df <- read.text("README.md")

#'

-#' head(select(df, input_file_name()))

-#' }

+#' \dontrun{

+#' tmp <- read.text("README.md")

--- End diff --

To avoid overwriting the dataframe example `df` used throughout the doc.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18422: [SPARK-20889][SparkR] Grouped documentation for N...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18422#discussion_r124719362

--- Diff: R/pkg/R/functions.R ---

@@ -824,32 +835,23 @@ setMethod("initcap",

column(jc)

})

-#' is.nan

-#'

-#' Return true if the column is NaN, alias for \link{isnan}

-#'

-#' @param x Column to compute on.

+#' @details

+#' \code{is.nan}: Alias for \link{isnan}.

--- End diff --

OK, swapped the order.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18422: [SPARK-20889][SparkR] Grouped documentation for N...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18422#discussion_r124719101

--- Diff: R/pkg/R/functions.R ---

@@ -132,23 +132,40 @@ NULL

#' df <- createDataFrame(as.data.frame(Titanic, stringsAsFactors = FALSE))}

NULL

-#' lit

+#' Non-aggregate functions for Column operations

#'

-#' A new \linkS4class{Column} is created to represent the literal value.

-#' If the parameter is a \linkS4class{Column}, it is returned unchanged.

+#' Non-aggregate functions defined for \code{Column}.

#'

-#' @param x a literal value or a Column.

+#' @param x Column to compute on. In \code{lit}, it is a literal value or

a Column.

+#' In \code{monotonically_increasing_id}, it should be empty.

+#' @param y Column to compute on.

+#' @param ... additional argument(s). In \code{expr}, it contains an

expression character

+#'object to be parsed.

+#' @name column_nonaggregate_functions

+#' @rdname column_nonaggregate_functions

+#' @seealso coalesce,SparkDataFrame-method

#' @family non-aggregate functions

-#' @rdname lit

-#' @name lit

+#' @examples

+#' \dontrun{

+#' # Dataframe used throughout this doc

+#' df <- createDataFrame(cbind(model = rownames(mtcars), mtcars))}

+NULL

+

+#' @details

+#' \code{lit}: A new \linkS4class{Column} is created to represent the

literal value.

+#' If the parameter is a \linkS4class{Column}, it is returned unchanged.

+#'

+#' @rdname column_nonaggregate_functions

#' @export

-#' @aliases lit,ANY-method

+#' @aliases lit lit,ANY-method

#' @examples

+#'

#' \dontrun{

-#' lit(df$name)

-#' select(df, lit("x"))

-#' select(df, lit("2015-01-01"))

-#'}

+#' tmp <- mutate(df, v1 = lit(df$mpg), v2 = lit("x"), v3 =

lit("2015-01-01"),

+#' v4 = negate(df$mpg), v5 = expr('length(model)'),

+#' v6 = greatest(df$vs, df$am), v7 = least(df$vs, df$am),

+#' v8 = column("mpg"))

--- End diff --

See L2796.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18422: [SPARK-20889][SparkR] Grouped documentation for N...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18422#discussion_r124719080

--- Diff: R/pkg/R/functions.R ---

@@ -2819,20 +2775,26 @@ setMethod("unix_timestamp", signature(x = "Column",

format = "character"),

jc <- callJStatic("org.apache.spark.sql.functions",

"unix_timestamp", x@jc, format)

column(jc)

})

-#' when

-#'

-#' Evaluates a list of conditions and returns one of multiple possible

result expressions.

+

+#' @details

+#' \code{when}: Evaluates a list of conditions and returns one of multiple

possible result expressions.

#' For unmatched expressions null is returned.

#'

+#' @rdname column_nonaggregate_functions

#' @param condition the condition to test on. Must be a Column expression.

#' @param value result expression.

-#' @family non-aggregate functions

-#' @rdname when

-#' @name when

-#' @aliases when,Column-method

-#' @seealso \link{ifelse}

+#' @aliases when when,Column-method

#' @export

-#' @examples \dontrun{when(df$age == 2, df$age + 1)}

+#' @examples

+#'

+#' \dontrun{

+#' tmp <- mutate(df, mpg_na = otherwise(when(df$mpg > 20, df$mpg),

lit(NaN)),

+#' mpg2 = ifelse(df$mpg > 20 & df$am > 0, 0, 1),

+#' mpg3 = ifelse(df$mpg > 20, df$mpg, 20.0))

+#' head(tmp)

+#' tmp <- mutate(tmp, ind_na1 = is.nan(tmp$mpg_na), ind_na2 =

isnan(tmp$mpg_na))

+#' head(select(tmp, coalesce(tmp$mpg_na, tmp$mpg)))

+#' head(select(tmp, nanvl(tmp$mpg_na, tmp$hp)))}

--- End diff --

@felixcheung Examples for `coalesce` and `nanvl` are here.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18422: [SPARK-20889][SparkR] Grouped documentation for N...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18422#discussion_r124718828

--- Diff: R/pkg/R/functions.R ---

@@ -132,23 +132,40 @@ NULL

#' df <- createDataFrame(as.data.frame(Titanic, stringsAsFactors = FALSE))}

NULL

-#' lit

+#' Non-aggregate functions for Column operations

#'

-#' A new \linkS4class{Column} is created to represent the literal value.

-#' If the parameter is a \linkS4class{Column}, it is returned unchanged.

+#' Non-aggregate functions defined for \code{Column}.

#'

-#' @param x a literal value or a Column.

+#' @param x Column to compute on. In \code{lit}, it is a literal value or

a Column.

+#' In \code{monotonically_increasing_id}, it should be empty.

+#' @param y Column to compute on.

+#' @param ... additional argument(s). In \code{expr}, it contains an

expression character

+#'object to be parsed.

+#' @name column_nonaggregate_functions

+#' @rdname column_nonaggregate_functions

+#' @seealso coalesce,SparkDataFrame-method

#' @family non-aggregate functions

-#' @rdname lit

-#' @name lit

+#' @examples

+#' \dontrun{

+#' # Dataframe used throughout this doc

+#' df <- createDataFrame(cbind(model = rownames(mtcars), mtcars))}

+NULL

+

+#' @details

+#' \code{lit}: A new \linkS4class{Column} is created to represent the

literal value.

--- End diff --

updated.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18422: [SPARK-20889][SparkR] Grouped documentation for N...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18422#discussion_r124718755

--- Diff: R/pkg/R/functions.R ---

@@ -132,23 +132,40 @@ NULL

#' df <- createDataFrame(as.data.frame(Titanic, stringsAsFactors = FALSE))}

NULL

-#' lit

+#' Non-aggregate functions for Column operations

#'

-#' A new \linkS4class{Column} is created to represent the literal value.

-#' If the parameter is a \linkS4class{Column}, it is returned unchanged.

+#' Non-aggregate functions defined for \code{Column}.

#'

-#' @param x a literal value or a Column.

+#' @param x Column to compute on. In \code{lit}, it is a literal value or

a Column.

+#' In \code{monotonically_increasing_id}, it should be empty.

+#' @param y Column to compute on.

+#' @param ... additional argument(s). In \code{expr}, it contains an

expression character

--- End diff --

Right, thanks for catching this.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18422: [SPARK-20889][SparkR] Grouped documentation for N...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18422#discussion_r124718681

--- Diff: R/pkg/R/functions.R ---

@@ -132,23 +132,40 @@ NULL

#' df <- createDataFrame(as.data.frame(Titanic, stringsAsFactors = FALSE))}

NULL

-#' lit

+#' Non-aggregate functions for Column operations

#'

-#' A new \linkS4class{Column} is created to represent the literal value.

-#' If the parameter is a \linkS4class{Column}, it is returned unchanged.

+#' Non-aggregate functions defined for \code{Column}.

#'

-#' @param x a literal value or a Column.

+#' @param x Column to compute on. In \code{lit}, it is a literal value or

a Column.

+#' In \code{monotonically_increasing_id}, it should be empty.

--- End diff --

Yes, I was just copying from the old doc.

I now remove this and add `The method should be used with no argument.` to

the two individual methods.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

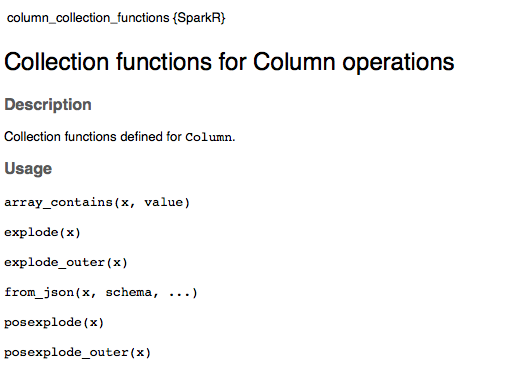

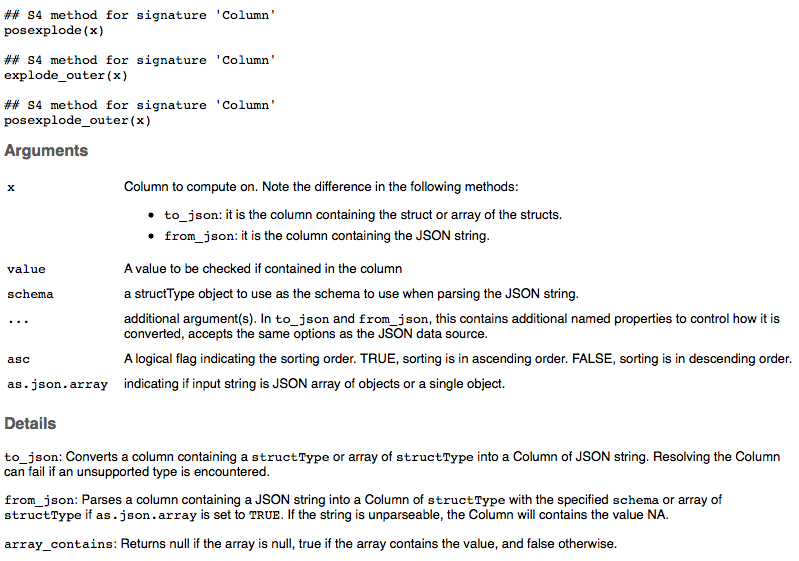

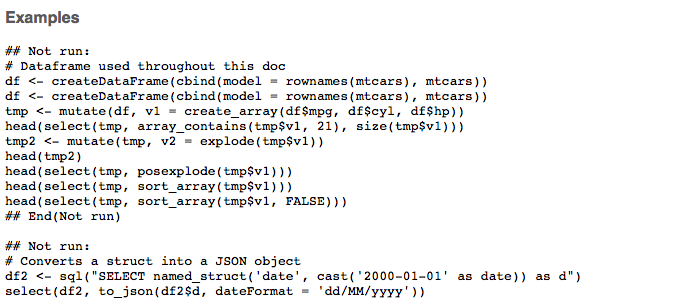

[GitHub] spark pull request #18458: [SPARK-20889][SparkR] Grouped documentation for C...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18458#discussion_r124716253

--- Diff: R/pkg/R/functions.R ---

@@ -2156,28 +2178,23 @@ setMethod("date_format", signature(y = "Column", x

= "character"),

column(jc)

})

-#' from_json

-#'

-#' Parses a column containing a JSON string into a Column of

\code{structType} with the specified

-#' \code{schema} or array of \code{structType} if \code{as.json.array} is

set to \code{TRUE}.

-#' If the string is unparseable, the Column will contains the value NA.

+#' @details

+#' \code{from_json}: Parses a column containing a JSON string into a

Column of \code{structType}

+#' with the specified \code{schema} or array of \code{structType} if

\code{as.json.array} is set

+#' to \code{TRUE}. If the string is unparseable, the Column will contains

the value NA.

#'

-#' @param x Column containing the JSON string.

+#' @rdname column_collection_functions

#' @param schema a structType object to use as the schema to use when

parsing the JSON string.

#' @param as.json.array indicating if input string is JSON array of

objects or a single object.

-#' @param ... additional named properties to control how the json is

parsed, accepts the same

-#'options as the JSON data source.

-#'

-#' @family non-aggregate functions

-#' @rdname from_json

-#' @name from_json

-#' @aliases from_json,Column,structType-method

+#' @aliases from_json from_json,Column,structType-method

#' @export

#' @examples

+#'

#' \dontrun{

-#' schema <- structType(structField("name", "string"),

-#' select(df, from_json(df$value, schema, dateFormat = "dd/MM/"))

-#'}

+#' df2 <- sql("SELECT named_struct('name', 'Bob') as people")

+#' df2 <- mutate(df2, people_json = to_json(df2$people))

+#' schema <- structType(structField("name", "string"))

+#' head(select(df2, from_json(df2$people_json, schema)))}

--- End diff --

Thanks for catching this. Added an example.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18458: [SPARK-20889][SparkR] Grouped documentation for C...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18458#discussion_r124715019

--- Diff: R/pkg/R/functions.R ---

@@ -2156,28 +2178,23 @@ setMethod("date_format", signature(y = "Column", x

= "character"),

column(jc)

})

-#' from_json

-#'

-#' Parses a column containing a JSON string into a Column of

\code{structType} with the specified

-#' \code{schema} or array of \code{structType} if \code{as.json.array} is

set to \code{TRUE}.

-#' If the string is unparseable, the Column will contains the value NA.

+#' @details

+#' \code{from_json}: Parses a column containing a JSON string into a

Column of \code{structType}

+#' with the specified \code{schema} or array of \code{structType} if

\code{as.json.array} is set

+#' to \code{TRUE}. If the string is unparseable, the Column will contains

the value NA.

--- End diff --

Corrected the typo. Will consider updating `null` & `NA` in the future :)

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18448: [SPARK-20889][SparkR] Grouped documentation for M...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18448#discussion_r124714226

--- Diff: R/pkg/R/functions.R ---

@@ -132,6 +132,27 @@ NULL

#' df <- createDataFrame(as.data.frame(Titanic, stringsAsFactors = FALSE))}

NULL

+#' Miscellaneous functions for Column operations

+#'

+#' Miscellaneous functions defined for \code{Column}.

+#'

+#' @param x Column to compute on. In \code{sha2}, it is one of 224, 256,

384, or 512.

+#' @param y Column to compute on.

+#' @param ... additional columns.

--- End diff --

updated now.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

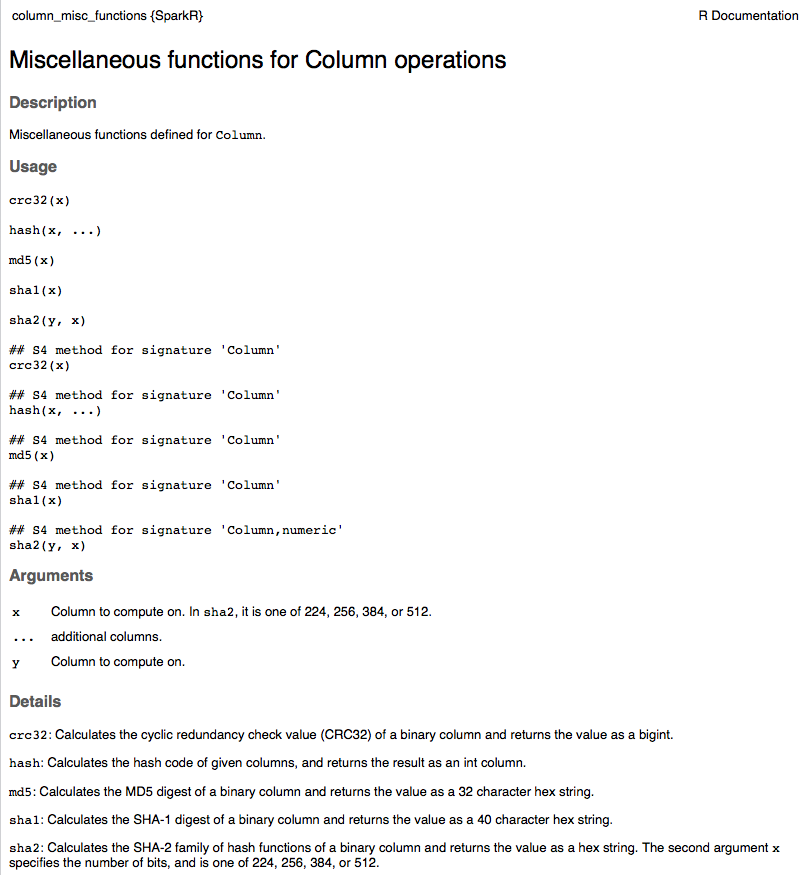

[GitHub] spark pull request #18448: [SPARK-20889][SparkR] Grouped documentation for M...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18448#discussion_r124714065

--- Diff: R/pkg/R/functions.R ---

@@ -132,6 +132,27 @@ NULL

#' df <- createDataFrame(as.data.frame(Titanic, stringsAsFactors = FALSE))}

NULL

+#' Miscellaneous functions for Column operations

+#'

+#' Miscellaneous functions defined for \code{Column}.

+#'

+#' @param x Column to compute on. In \code{sha2}, it is one of 224, 256,

384, or 512.

+#' @param y Column to compute on.

--- End diff --

I think roxygen automatically chooses the order of the arguments based on

the order they appear in the file, and ignores the order we specify. So even if

I move `y` before `x` here, in the generated doc, `x` will still appear before

`y`. Indeed, as you can see from the screenshot, `...` appears before `y`.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18422: [SPARK-20889][SparkR] Grouped documentation for NONAGGRE...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18422 jenkins, retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18448: [SPARK-20889][SparkR] Grouped documentation for MISC col...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18448  --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18458: [SPARK-20889][SparkR] Grouped documentation for COLLECTI...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18458 @felixcheung @HyukjinKwon    --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18458: [SPARK-20889][SparkR] Grouped documentation for C...

GitHub user actuaryzhang opened a pull request: https://github.com/apache/spark/pull/18458 [SPARK-20889][SparkR] Grouped documentation for COLLECTOIN column methods ## What changes were proposed in this pull request? Grouped documentation for column collection methods. You can merge this pull request into a Git repository by running: $ git pull https://github.com/actuaryzhang/spark sparkRDocCollection Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18458.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18458 commit 9bdc739483ec1d0493eda1dbb0e4eef761c31929 Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-06-28T17:18:12Z update doc for collection functions --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18458: [SPARK-20889][SparkR] Grouped documentation for COLLECTO...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18458 Last part of this doc update. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18448: [SPARK-20889][SparkR] Grouped documentation for MISC col...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18448 jenkins, test this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18366: [SPARK-20889][SparkR] Grouped documentation for STRING c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18366 jenkins, retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18448: [SPARK-20889][SparkR] Grouped documentation for MISC col...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18448 @felixcheung @HyukjinKwon Easiest group to update by far. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18448: [SPARK-20889][SparkR] Grouped documentation for M...

GitHub user actuaryzhang opened a pull request: https://github.com/apache/spark/pull/18448 [SPARK-20889][SparkR] Grouped documentation for MISC column methods ## What changes were proposed in this pull request? Grouped documentation for string misc methods. You can merge this pull request into a Git repository by running: $ git pull https://github.com/actuaryzhang/spark sparkRDocMisc Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18448.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18448 commit 00d8bd8e1be5d27b1b07991540a60aff046e247b Author: actuaryzhang <actuaryzhan...@gmail.com> Date: 2017-06-28T06:23:56Z update doc for column misc functions --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18366: [SPARK-20889][SparkR] Grouped documentation for STRING c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18366 I see what you mean. Updated now. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18366: [SPARK-20889][SparkR] Grouped documentation for STRING c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18366 You mean add `See 'details'` to the doc of `x`? If so, yes. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #16699: [SPARK-18710][ML] Add offset in GLM

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/16699 Got it. I should pay more attention to that mailing list from now on :) --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18371: [SPARK-20889][SparkR] Grouped documentation for M...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18371#discussion_r124457521

--- Diff: R/pkg/R/functions.R ---

@@ -41,14 +41,21 @@ NULL

#' @param x Column to compute on. In \code{shiftLeft}, \code{shiftRight}

and \code{shiftRightUnsigned},

#' this is the number of bits to shift.

#' @param y Column to compute on.

-#' @param ... additional argument(s). For example, it could be used to

pass additional Columns.

--- End diff --

Right, `...` is not used in any of these functions here.

But it is still documented because one of the generic methods `bround` has

it.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18366: [SPARK-20889][SparkR] Grouped documentation for STRING c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18366 OK. Incorporated your suggested changes now. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #16699: [SPARK-18710][ML] Add offset in GLM

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/16699 Not sure what this error msg means, but it seems unrelated to this PR. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #16699: [SPARK-18710][ML] Add offset in GLM

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/16699 jenkins, retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #12414: [SPARK-14657][SPARKR][ML] RFormula w/o intercept should ...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/12414 LGTM once it clears Jenkins. Thanks. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18366: [SPARK-20889][SparkR] Grouped documentation for STRING c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18366 @felixcheung @HyukjinKwon Anything else needed for this one? --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #16699: [SPARK-18710][ML] Add offset in GLM

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/16699 @yanboliang Thanks much for the review. The new commit includes everything you suggested except implementing `WeightLeastSquares` interface for `OffsetInstance`. Please see my incline comments above. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #16699: [SPARK-18710][ML] Add offset in GLM

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/16699#discussion_r124403889

--- Diff:

mllib/src/test/scala/org/apache/spark/ml/regression/GeneralizedLinearRegressionSuite.scala

---

@@ -798,77 +798,184 @@ class GeneralizedLinearRegressionSuite

}

}

- test("glm summary: gaussian family with weight") {

+ test("generalized linear regression with offset") {

/*

- R code:

+ R code:

+ library(statmod)

- A <- matrix(c(0, 1, 2, 3, 5, 7, 11, 13), 4, 2)

- b <- c(17, 19, 23, 29)

- w <- c(1, 2, 3, 4)

- df <- as.data.frame(cbind(A, b))

- */

-val datasetWithWeight = Seq(

- Instance(17.0, 1.0, Vectors.dense(0.0, 5.0).toSparse),

- Instance(19.0, 2.0, Vectors.dense(1.0, 7.0)),

- Instance(23.0, 3.0, Vectors.dense(2.0, 11.0)),

- Instance(29.0, 4.0, Vectors.dense(3.0, 13.0))

+ df <- as.data.frame(matrix(c(

+0.2, 1.0, 2.0, 0.0, 5.0,

+0.5, 2.1, 0.5, 1.0, 2.0,

+0.9, 0.4, 1.0, 2.0, 1.0,

+0.7, 0.7, 0.0, 3.0, 3.0), 4, 5, byrow = TRUE))

+ families <- list(gaussian, binomial, poisson, Gamma, tweedie(1.5))

+ f1 <- V1 ~ -1 + V4 + V5

+ f2 <- V1 ~ V4 + V5

+ for (f in c(f1, f2)) {

+for (fam in families) {

+ model <- glm(f, df, family = fam, weights = V2, offset = V3)

+ print(as.vector(coef(model)))

+}

+ }

+ [1] 0.5169222 -0.334

+ [1] 0.9419107 -0.6864404

+ [1] 0.1812436 -0.6568422

+ [1] -0.2869094 0.7857710

+ [1] 0.1055254 0.2979113

+ [1] -0.05990345 0.53188982 -0.32118415

+ [1] -0.2147117 0.9911750 -0.6356096

+ [1] -1.5616130 0.6646470 -0.3192581

+ [1] 0.3390397 -0.3406099 0.6870259

+ [1] 0.3665034 0.1039416 0.1484616

+*/

+val dataset = Seq(

+ OffsetInstance(0.2, 1.0, 2.0, Vectors.dense(0.0, 5.0)),

+ OffsetInstance(0.5, 2.1, 0.5, Vectors.dense(1.0, 2.0)),

+ OffsetInstance(0.9, 0.4, 1.0, Vectors.dense(2.0, 1.0)),

+ OffsetInstance(0.7, 0.7, 0.0, Vectors.dense(3.0, 3.0))

).toDF()

+

+val expected = Seq(

+ Vectors.dense(0, 0.5169222, -0.334),

+ Vectors.dense(0, 0.9419107, -0.6864404),

+ Vectors.dense(0, 0.1812436, -0.6568422),

+ Vectors.dense(0, -0.2869094, 0.785771),

+ Vectors.dense(0, 0.1055254, 0.2979113),

+ Vectors.dense(-0.05990345, 0.53188982, -0.32118415),

+ Vectors.dense(-0.2147117, 0.991175, -0.6356096),

+ Vectors.dense(-1.561613, 0.664647, -0.3192581),

+ Vectors.dense(0.3390397, -0.3406099, 0.6870259),

+ Vectors.dense(0.3665034, 0.1039416, 0.1484616))

+

+import GeneralizedLinearRegression._

+

+var idx = 0

+

+for (fitIntercept <- Seq(false, true)) {

+ for (family <- Seq("gaussian", "binomial", "poisson", "gamma",

"tweedie")) {

+val trainer = new GeneralizedLinearRegression().setFamily(family)

+ .setFitIntercept(fitIntercept).setOffsetCol("offset")

+ .setWeightCol("weight").setLinkPredictionCol("linkPrediction")

+if (family == "tweedie") trainer.setVariancePower(1.5)

+val model = trainer.fit(dataset)

+val actual = Vectors.dense(model.intercept, model.coefficients(0),

model.coefficients(1))

+assert(actual ~= expected(idx) absTol 1e-4, s"Model mismatch: GLM

with family = $family," +

+ s" and fitIntercept = $fitIntercept.")

+

+val familyLink = FamilyAndLink(trainer)

+model.transform(dataset).select("features", "offset",

"prediction", "linkPrediction")

+ .collect().foreach {

+ case Row(features: DenseVector, offset: Double, prediction1:

Double,

+ linkPrediction1: Double) =>

+val eta = BLAS.dot(features, model.coefficients) +

model.intercept + offset

+val prediction2 = familyLink.fitted(eta)

+val linkPrediction2 = eta

+assert(prediction1 ~= prediction2 relTol 1E-5, "Prediction

mismatch: GLM with " +

+ s"family = $family, and fitIntercept = $fitIntercept.")

+assert(linkPrediction1 ~= linkPrediction2 relTol 1E-5, "Link

Prediction mismatch: " +

+ s"GLM with family = $family, and fitIntercept =

$fitIntercept.")

+}

+

+idx += 1

+ }

+}

+ }

+

+ test("generalize

[GitHub] spark pull request #16699: [SPARK-18710][ML] Add offset in GLM

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/16699#discussion_r124402141

--- Diff:

mllib/src/test/scala/org/apache/spark/ml/optim/IterativelyReweightedLeastSquaresSuite.scala

---

@@ -169,29 +169,29 @@ class IterativelyReweightedLeastSquaresSuite extends

SparkFunSuite with MLlibTes

object IterativelyReweightedLeastSquaresSuite {

def BinomialReweightFunc(

- instance: Instance,

+ instance: OffsetInstance,

model: WeightedLeastSquaresModel): (Double, Double) = {

-val eta = model.predict(instance.features)

+val eta = model.predict(instance.features) + instance.offset

val mu = 1.0 / (1.0 + math.exp(-1.0 * eta))

-val z = eta + (instance.label - mu) / (mu * (1.0 - mu))

+val z = eta - instance.offset + (instance.label - mu) / (mu * (1.0 -

mu))

--- End diff --

Indeed this is the correct implementation: in the IRWLS, we only include

offset when computing `mu` and use `Xb` (without offset) when updating the

working label. To see this clearly, one would have to derive the IRWLS. But for

a quick reference, below is R's implementation:

```

eta <- drop(x %*% start)

mu <- linkinv(eta <- eta + offset)

z <- (eta - offset)[good] + (y - mu)[good]/mu.eta.val[good]

w <- sqrt((weights[good] * mu.eta.val[good]^2)/variance(mu)[good])

fit <- .Call(C_Cdqrls, x[good, , drop = FALSE] *

w, z * w, min(1e-07, control$epsilon/1000), check = FALSE)

```

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #16699: [SPARK-18710][ML] Add offset in GLM