svn commit: r29904 - in /dev/spark/2.4.1-SNAPSHOT-2018_10_05_22_02-a2991d2-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Sat Oct 6 05:16:47 2018 New Revision: 29904 Log: Apache Spark 2.4.1-SNAPSHOT-2018_10_05_22_02-a2991d2 docs [This commit notification would consist of 1472 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.] - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

spark git commit: [SPARK-25646][K8S] Fix docker-image-tool.sh on dev build.

Repository: spark

Updated Branches:

refs/heads/branch-2.4 0a70afdc0 -> a2991d233

[SPARK-25646][K8S] Fix docker-image-tool.sh on dev build.

The docker file was referencing a path that only existed in the

distribution tarball; it needs to be parameterized so that the

right path can be used in a dev build.
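The parameterization described above can be sketched in plain Java. This is only an illustration of the selection logic visible in the diff, not the actual shell code: the class `DockerBuildArgs` and the helper `k8sTestsArgs` are hypothetical names, while the two path values are taken from the diff.

```java
import java.util.ArrayList;
import java.util.List;

final class DockerBuildArgs {
    // Default declared in the Dockerfile (ARG k8s_tests): layout of the distribution tarball.
    static final String DIST_TESTS = "kubernetes/tests";
    // Value passed by docker-image-tool.sh in the dev-build branch of the diff.
    static final String DEV_TESTS = "resource-managers/kubernetes/integration-tests/tests";

    // Hypothetical helper: extra docker arguments needed for the k8s_tests build arg.
    static List<String> k8sTestsArgs(boolean devBuild) {
        List<String> args = new ArrayList<>();
        if (devBuild) {
            args.add("--build-arg");
            args.add("k8s_tests=" + DEV_TESTS);
        }
        // For a distribution build nothing is passed, so the ARG default applies.
        return args;
    }

    public static void main(String[] args) {
        System.out.println(k8sTestsArgs(true));
        System.out.println(k8sTestsArgs(false));
    }
}
```

Keeping the distribution path as the `ARG` default means existing distribution builds need no extra argument; only the dev build overrides it.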

Tested on local dev build.

Closes #22634 from vanzin/SPARK-25646.

Authored-by: Marcelo Vanzin

Signed-off-by: Dongjoon Hyun

(cherry picked from commit 58287a39864db463eeef17d1152d664be021d9ef)

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/a2991d23

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/a2991d23

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/a2991d23

Branch: refs/heads/branch-2.4

Commit: a2991d23348bd1f4ecc33e5c762ccd12bb65f5cd

Parents: 0a70afd

Author: Marcelo Vanzin

Authored: Fri Oct 5 21:15:16 2018 -0700

Committer: Dongjoon Hyun

Committed: Fri Oct 5 21:18:12 2018 -0700

--

bin/docker-image-tool.sh | 2 ++

.../kubernetes/docker/src/main/dockerfiles/spark/Dockerfile | 3 ++-

2 files changed, 4 insertions(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/a2991d23/bin/docker-image-tool.sh

--

diff --git a/bin/docker-image-tool.sh b/bin/docker-image-tool.sh

index d637105..228494d 100755

--- a/bin/docker-image-tool.sh

+++ b/bin/docker-image-tool.sh

@@ -54,6 +54,8 @@ function build {

img_path=$IMG_PATH

--build-arg

spark_jars=assembly/target/scala-$SPARK_SCALA_VERSION/jars

+ --build-arg

+ k8s_tests=resource-managers/kubernetes/integration-tests/tests

)

else

# Not passed as an argument to docker, but used to validate the Spark

directory.

http://git-wip-us.apache.org/repos/asf/spark/blob/a2991d23/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

--

diff --git

a/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

b/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

index 7ae57bf..1c4dcd5 100644

--- a/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

+++ b/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

@@ -19,6 +19,7 @@ FROM openjdk:8-alpine

ARG spark_jars=jars

ARG img_path=kubernetes/dockerfiles

+ARG k8s_tests=kubernetes/tests

# Before building the docker image, first build and make a Spark distribution

following

# the instructions in http://spark.apache.org/docs/latest/building-spark.html.

@@ -43,7 +44,7 @@ COPY bin /opt/spark/bin

COPY sbin /opt/spark/sbin

COPY ${img_path}/spark/entrypoint.sh /opt/

COPY examples /opt/spark/examples

-COPY kubernetes/tests /opt/spark/tests

+COPY ${k8s_tests} /opt/spark/tests

COPY data /opt/spark/data

ENV SPARK_HOME /opt/spark


spark git commit: [SPARK-25646][K8S] Fix docker-image-tool.sh on dev build.

Repository: spark

Updated Branches:

refs/heads/master 2c6f4d61b -> 58287a398

[SPARK-25646][K8S] Fix docker-image-tool.sh on dev build.

The docker file was referencing a path that only existed in the

distribution tarball; it needs to be parameterized so that the

right path can be used in a dev build.

Tested on local dev build.

Closes #22634 from vanzin/SPARK-25646.

Authored-by: Marcelo Vanzin

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/58287a39

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/58287a39

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/58287a39

Branch: refs/heads/master

Commit: 58287a39864db463eeef17d1152d664be021d9ef

Parents: 2c6f4d6

Author: Marcelo Vanzin

Authored: Fri Oct 5 21:15:16 2018 -0700

Committer: Dongjoon Hyun

Committed: Fri Oct 5 21:15:16 2018 -0700

--

bin/docker-image-tool.sh | 2 ++

.../kubernetes/docker/src/main/dockerfiles/spark/Dockerfile | 3 ++-

2 files changed, 4 insertions(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/58287a39/bin/docker-image-tool.sh

--

diff --git a/bin/docker-image-tool.sh b/bin/docker-image-tool.sh

index d637105..228494d 100755

--- a/bin/docker-image-tool.sh

+++ b/bin/docker-image-tool.sh

@@ -54,6 +54,8 @@ function build {

img_path=$IMG_PATH

--build-arg

spark_jars=assembly/target/scala-$SPARK_SCALA_VERSION/jars

+ --build-arg

+ k8s_tests=resource-managers/kubernetes/integration-tests/tests

)

else

# Not passed as an argument to docker, but used to validate the Spark

directory.

http://git-wip-us.apache.org/repos/asf/spark/blob/58287a39/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

--

diff --git

a/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

b/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

index 7ae57bf..1c4dcd5 100644

--- a/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

+++ b/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/Dockerfile

@@ -19,6 +19,7 @@ FROM openjdk:8-alpine

ARG spark_jars=jars

ARG img_path=kubernetes/dockerfiles

+ARG k8s_tests=kubernetes/tests

# Before building the docker image, first build and make a Spark distribution

following

# the instructions in http://spark.apache.org/docs/latest/building-spark.html.

@@ -43,7 +44,7 @@ COPY bin /opt/spark/bin

COPY sbin /opt/spark/sbin

COPY ${img_path}/spark/entrypoint.sh /opt/

COPY examples /opt/spark/examples

-COPY kubernetes/tests /opt/spark/tests

+COPY ${k8s_tests} /opt/spark/tests

COPY data /opt/spark/data

ENV SPARK_HOME /opt/spark


svn commit: r29903 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_05_20_02-2c6f4d6-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Sat Oct 6 03:17:28 2018 New Revision: 29903 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_05_20_02-2c6f4d6 docs [This commit notification would consist of 1485 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: [SPARK-25610][SQL][TEST] Improve execution time of DatasetCacheSuite: cache UDF result correctly

Repository: spark

Updated Branches:

refs/heads/master bbd038d24 -> 2c6f4d61b

[SPARK-25610][SQL][TEST] Improve execution time of DatasetCacheSuite: cache UDF

result correctly

## What changes were proposed in this pull request?

In this test case, we verify that the result of a UDF is cached when

the underlying data frame is cached and that the UDF is not evaluated again

when the cached data frame is used.

To reduce the runtime, we:

1) Use a single-partition dataframe, so the total execution time of the UDF is more

deterministic.

2) Cut down the size of the dataframe from 10 rows to 2.

3) Reduce the sleep time in the UDF from 5 seconds to 2 seconds.

4) Reduce the `failAfter` limit from 3 seconds to 2 seconds.

With the above changes, it takes about 4 seconds to cache the first dataframe, and

the subsequent check takes a few hundred milliseconds.

The new runtime for 5 consecutive runs of this test is as follows:

```

[info] - cache UDF result correctly (4 seconds, 906 milliseconds)

[info] - cache UDF result correctly (4 seconds, 281 milliseconds)

[info] - cache UDF result correctly (4 seconds, 288 milliseconds)

[info] - cache UDF result correctly (4 seconds, 355 milliseconds)

[info] - cache UDF result correctly (4 seconds, 280 milliseconds)

```
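The behaviour this test checks can be illustrated with a plain-Java analogy. This is a sketch, not Spark code: `CachedUdfSketch`, `materialize`, `sum`, and `sequentialSleepMillis` are hypothetical names, the "UDF" counts calls instead of sleeping, and the timing estimate assumes the rows of a single partition are evaluated one after another.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntUnaryOperator;

final class CachedUdfSketch {
    // Number of times the stand-in UDF has been invoked.
    static int evaluations = 0;

    // Stand-in for the expensive UDF: counts calls instead of sleeping.
    static final IntUnaryOperator EXPENSIVE = x -> { evaluations++; return x; };

    // "Caching" here simply materializes the UDF results once into a list.
    static List<Integer> materialize(int rows) {
        List<Integer> out = new ArrayList<>();
        for (int a = 0; a < rows; a++) {
            out.add(EXPENSIVE.applyAsInt(a));
        }
        return out;
    }

    // Aggregating over the materialized values does not call the UDF again.
    static int sum(List<Integer> cached) {
        int total = 0;
        for (int b : cached) {
            total += b;
        }
        return total;
    }

    // Rough estimate of the time spent sleeping, assuming sequential evaluation.
    static long sequentialSleepMillis(int rows, long sleepMillisPerRow) {
        return rows * sleepMillisPerRow;
    }

    public static void main(String[] args) {
        List<Integer> cached = materialize(2);
        System.out.println(sum(cached));
        System.out.println(sum(cached));
        System.out.println(evaluations);                    // 2: one call per row, none on re-read
        System.out.println(sequentialSleepMillis(2, 2000)); // 4000
    }
}
```

Under that assumption, 2 rows at 2 seconds each give about 4 seconds for the caching step, which is in line with the timings listed above.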

## How was this patch tested?

This is a test fix.

Closes #22638 from dilipbiswal/SPARK-25610.

Authored-by: Dilip Biswal

Signed-off-by: gatorsmile

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/2c6f4d61

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/2c6f4d61

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/2c6f4d61

Branch: refs/heads/master

Commit: 2c6f4d61bbf7f0267a7309b4a236047f830bd6ee

Parents: bbd038d

Author: Dilip Biswal

Authored: Fri Oct 5 17:25:28 2018 -0700

Committer: gatorsmile

Committed: Fri Oct 5 17:25:28 2018 -0700

--

.../test/scala/org/apache/spark/sql/DatasetCacheSuite.scala| 6 +++---

1 file changed, 3 insertions(+), 3 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/2c6f4d61/sql/core/src/test/scala/org/apache/spark/sql/DatasetCacheSuite.scala

--

diff --git

a/sql/core/src/test/scala/org/apache/spark/sql/DatasetCacheSuite.scala

b/sql/core/src/test/scala/org/apache/spark/sql/DatasetCacheSuite.scala

index 5c6a021..fef6ddd 100644

--- a/sql/core/src/test/scala/org/apache/spark/sql/DatasetCacheSuite.scala

+++ b/sql/core/src/test/scala/org/apache/spark/sql/DatasetCacheSuite.scala

@@ -127,8 +127,8 @@ class DatasetCacheSuite extends QueryTest with

SharedSQLContext with TimeLimits

}

test("cache UDF result correctly") {

-val expensiveUDF = udf({x: Int => Thread.sleep(5000); x})

-val df = spark.range(0, 10).toDF("a").withColumn("b", expensiveUDF($"a"))

+val expensiveUDF = udf({x: Int => Thread.sleep(2000); x})

+val df = spark.range(0, 2).toDF("a").repartition(1).withColumn("b",

expensiveUDF($"a"))

val df2 = df.agg(sum(df("b")))

df.cache()

@@ -136,7 +136,7 @@ class DatasetCacheSuite extends QueryTest with

SharedSQLContext with TimeLimits

assertCached(df2)

// udf has been evaluated during caching, and thus should not be

re-evaluated here

-failAfter(3 seconds) {

+failAfter(2 seconds) {

df2.collect()

}


spark git commit: [SPARK-25653][TEST] Add tag ExtendedHiveTest for HiveSparkSubmitSuite

Repository: spark

Updated Branches:

refs/heads/master 1c9486c1a -> bbd038d24

[SPARK-25653][TEST] Add tag ExtendedHiveTest for HiveSparkSubmitSuite

## What changes were proposed in this pull request?

The total run time of `HiveSparkSubmitSuite` is about 10 minutes.

Since the related code is stable, tag the suite with `ExtendedHiveTest`.

## How was this patch tested?

Unit test.

Closes #22642 from gengliangwang/addTagForHiveSparkSubmitSuite.

Authored-by: Gengliang Wang

Signed-off-by: gatorsmile

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/bbd038d2

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/bbd038d2

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/bbd038d2

Branch: refs/heads/master

Commit: bbd038d2436c17ff519c08630a016f3ec796a282

Parents: 1c9486c

Author: Gengliang Wang

Authored: Fri Oct 5 17:03:24 2018 -0700

Committer: gatorsmile

Committed: Fri Oct 5 17:03:24 2018 -0700

--

.../scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/bbd038d2/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala

--

diff --git

a/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala

b/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala

index a676cf6..f839e89 100644

---

a/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala

+++

b/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala

@@ -33,11 +33,13 @@ import org.apache.spark.sql.execution.command.DDLUtils

import org.apache.spark.sql.expressions.Window

import org.apache.spark.sql.hive.test.{TestHive, TestHiveContext}

import org.apache.spark.sql.types.{DecimalType, StructType}

+import org.apache.spark.tags.ExtendedHiveTest

import org.apache.spark.util.{ResetSystemProperties, Utils}

/**

* This suite tests spark-submit with applications using HiveContext.

*/

+@ExtendedHiveTest

class HiveSparkSubmitSuite

extends SparkSubmitTestUtils

with Matchers

@@ -46,8 +48,6 @@ class HiveSparkSubmitSuite

override protected val enableAutoThreadAudit = false

- // TODO: rewrite these or mark them as slow tests to be run sparingly

-

override def beforeEach() {

super.beforeEach()

}


spark git commit: [SPARK-25635][SQL][BUILD] Support selective direct encoding in native ORC write

Repository: spark

Updated Branches:

refs/heads/master a433fbcee -> 1c9486c1a

[SPARK-25635][SQL][BUILD] Support selective direct encoding in native ORC write

## What changes were proposed in this pull request?

Before ORC 1.5.3, `orc.dictionary.key.threshold` and

`hive.exec.orc.dictionary.key.size.threshold` were applied to all columns.

This has been a big hurdle to enabling dictionary encoding. From ORC 1.5.3,

`orc.column.encoding.direct` is added to enforce direct encoding selectively

in a column-wise manner. This PR aims to add that feature by upgrading ORC

from 1.5.2 to 1.5.3.

The following are the patches in ORC 1.5.3; this feature is the only one

directly related to Spark.

```

ORC-406: ORC: Char(n) and Varchar(n) writers truncate to n bytes & corrupts multi-byte data (gopalv)

ORC-403: [C++] Add checks to avoid invalid offsets in InputStream

ORC-405: Remove calcite as a dependency from the benchmarks.

ORC-375: Fix libhdfs on gcc7 by adding #include two places.

ORC-383: Parallel builds fails with ConcurrentModificationException

ORC-382: Apache rat exclusions + add rat check to travis

ORC-401: Fix incorrect quoting in specification.

ORC-385: Change RecordReader to extend Closeable.

ORC-384: [C++] fix memory leak when loading non-ORC files

ORC-391: [c++] parseType does not accept underscore in the field name

ORC-397: Allow selective disabling of dictionary encoding. Original patch was by Mithun Radhakrishnan.

ORC-389: Add ability to not decode Acid metadata columns

```

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22622 from dongjoon-hyun/SPARK-25635.

Authored-by: Dongjoon Hyun

Signed-off-by: gatorsmile

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/1c9486c1

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/1c9486c1

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/1c9486c1

Branch: refs/heads/master

Commit: 1c9486c1acceb73e5cc6f1fa684b6d992e187a9a

Parents: a433fbc

Author: Dongjoon Hyun

Authored: Fri Oct 5 16:42:06 2018 -0700

Committer: gatorsmile

Committed: Fri Oct 5 16:42:06 2018 -0700

--

dev/deps/spark-deps-hadoop-2.6 | 6 +-

dev/deps/spark-deps-hadoop-2.7 | 6 +-

dev/deps/spark-deps-hadoop-3.1 | 6 +-

pom.xml | 2 +-

.../datasources/orc/OrcSourceSuite.scala| 75

.../spark/sql/hive/orc/HiveOrcSourceSuite.scala | 8 +++

6 files changed, 93 insertions(+), 10 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/1c9486c1/dev/deps/spark-deps-hadoop-2.6

--

diff --git a/dev/deps/spark-deps-hadoop-2.6 b/dev/deps/spark-deps-hadoop-2.6

index 2dcab85..22e86ef 100644

--- a/dev/deps/spark-deps-hadoop-2.6

+++ b/dev/deps/spark-deps-hadoop-2.6

@@ -153,9 +153,9 @@ objenesis-2.5.1.jar

okhttp-3.8.1.jar

okio-1.13.0.jar

opencsv-2.3.jar

-orc-core-1.5.2-nohive.jar

-orc-mapreduce-1.5.2-nohive.jar

-orc-shims-1.5.2.jar

+orc-core-1.5.3-nohive.jar

+orc-mapreduce-1.5.3-nohive.jar

+orc-shims-1.5.3.jar

oro-2.0.8.jar

osgi-resource-locator-1.0.1.jar

paranamer-2.8.jar

http://git-wip-us.apache.org/repos/asf/spark/blob/1c9486c1/dev/deps/spark-deps-hadoop-2.7

--

diff --git a/dev/deps/spark-deps-hadoop-2.7 b/dev/deps/spark-deps-hadoop-2.7

index d1d695c..19dd786 100644

--- a/dev/deps/spark-deps-hadoop-2.7

+++ b/dev/deps/spark-deps-hadoop-2.7

@@ -154,9 +154,9 @@ objenesis-2.5.1.jar

okhttp-3.8.1.jar

okio-1.13.0.jar

opencsv-2.3.jar

-orc-core-1.5.2-nohive.jar

-orc-mapreduce-1.5.2-nohive.jar

-orc-shims-1.5.2.jar

+orc-core-1.5.3-nohive.jar

+orc-mapreduce-1.5.3-nohive.jar

+orc-shims-1.5.3.jar

oro-2.0.8.jar

osgi-resource-locator-1.0.1.jar

paranamer-2.8.jar

http://git-wip-us.apache.org/repos/asf/spark/blob/1c9486c1/dev/deps/spark-deps-hadoop-3.1

--

diff --git a/dev/deps/spark-deps-hadoop-3.1 b/dev/deps/spark-deps-hadoop-3.1

index e9691eb..ea0f487 100644

--- a/dev/deps/spark-deps-hadoop-3.1

+++ b/dev/deps/spark-deps-hadoop-3.1

@@ -172,9 +172,9 @@ okhttp-2.7.5.jar

okhttp-3.8.1.jar

okio-1.13.0.jar

opencsv-2.3.jar

-orc-core-1.5.2-nohive.jar

-orc-mapreduce-1.5.2-nohive.jar

-orc-shims-1.5.2.jar

+orc-core-1.5.3-nohive.jar

+orc-mapreduce-1.5.3-nohive.jar

+orc-shims-1.5.3.jar

oro-2.0.8.jar

osgi-resource-locator-1.0.1.jar

paranamer-2.8.jar

http://git-wip-us.apache.org/repos/asf/spark/blob/1c9486c1/pom.xml

--

diff --git a/pom.xml b/pom.xml

index c5eea6a..79af5d6 100644

--- a/pom.xml

+++ b/pom.xml

@@ -131,7 +131,7 @@

1.2.1 10.12.1.1 1.10.0

svn commit: r29902 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_05_16_03-a433fbc-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 5 23:17:20 2018 New Revision: 29902 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_05_16_03-a433fbc docs [This commit notification would consist of 1485 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: [SPARK-25626][SQL][TEST] Improve the test execution time of HiveClientSuites

Repository: spark

Updated Branches:

refs/heads/master 7dcc90fbb -> a433fbcee

[SPARK-25626][SQL][TEST] Improve the test execution time of HiveClientSuites

## What changes were proposed in this pull request?

Improve the runtime by reducing the number of partitions created in the test.

The number of partitions is reduced from 288 (3 * 24 * 4) to 60 (3 * 5 * 4).
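The partition counts follow from the `testPartitionCount` expressions in the diff (`3 * 24 * 4` before, `3 * 5 * 4` after). A minimal Java sketch that enumerates the same (ds, h, chunk) combinations as the test's for-comprehension; the class and method names are hypothetical and the string format is only for illustration:

```java
import java.util.ArrayList;
import java.util.List;

final class PartitionCountSketch {
    // Enumerates (ds, h, chunk) combinations for hours 0..maxHour inclusive.
    static List<String> partitions(int maxHour) {
        String[] chunks = {"aa", "ab", "ba", "bb"};
        List<String> result = new ArrayList<>();
        for (int ds = 20170101; ds <= 20170103; ds++) {
            for (int h = 0; h <= maxHour; h++) {
                for (String chunk : chunks) {
                    result.add("ds=" + ds + "/h=" + h + "/chunk=" + chunk);
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(partitions(23).size()); // 3 * 24 * 4 = 288
        System.out.println(partitions(4).size());  // 3 * 5 * 4 = 60
    }
}
```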

Here are the test times for the `getPartitionsByFilter returns all partitions`

test on my laptop.

```

[info] - 0.13: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (4 seconds, 230 milliseconds)

[info] - 0.14: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (3 seconds, 576 milliseconds)

[info] - 1.0: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (3 seconds, 495 milliseconds)

[info] - 1.1: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (6 seconds, 728 milliseconds)

[info] - 1.2: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (7 seconds, 260 milliseconds)

[info] - 2.0: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (8 seconds, 270 milliseconds)

[info] - 2.1: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (6 seconds, 856 milliseconds)

[info] - 2.2: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (7 seconds, 587 milliseconds)

[info] - 2.3: getPartitionsByFilter returns all partitions when

hive.metastore.try.direct.sql=false (7 seconds, 230 milliseconds)

```

## How was this patch tested?

Test only.

Closes #22644 from dilipbiswal/SPARK-25626.

Authored-by: Dilip Biswal

Signed-off-by: gatorsmile

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/a433fbce

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/a433fbce

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/a433fbce

Branch: refs/heads/master

Commit: a433fbcee66904d1b7fa98ab053e2bdf81e5e4f2

Parents: 7dcc90f

Author: Dilip Biswal

Authored: Fri Oct 5 14:39:30 2018 -0700

Committer: gatorsmile

Committed: Fri Oct 5 14:39:30 2018 -0700

--

.../spark/sql/hive/client/HiveClientSuite.scala | 70 ++--

1 file changed, 35 insertions(+), 35 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/a433fbce/sql/hive/src/test/scala/org/apache/spark/sql/hive/client/HiveClientSuite.scala

--

diff --git

a/sql/hive/src/test/scala/org/apache/spark/sql/hive/client/HiveClientSuite.scala

b/sql/hive/src/test/scala/org/apache/spark/sql/hive/client/HiveClientSuite.scala

index fa9f753..7a325bf 100644

---

a/sql/hive/src/test/scala/org/apache/spark/sql/hive/client/HiveClientSuite.scala

+++

b/sql/hive/src/test/scala/org/apache/spark/sql/hive/client/HiveClientSuite.scala

@@ -32,7 +32,7 @@ class HiveClientSuite(version: String)

private val tryDirectSqlKey =

HiveConf.ConfVars.METASTORE_TRY_DIRECT_SQL.varname

- private val testPartitionCount = 3 * 24 * 4

+ private val testPartitionCount = 3 * 5 * 4

private def init(tryDirectSql: Boolean): HiveClient = {

val storageFormat = CatalogStorageFormat(

@@ -51,7 +51,7 @@ class HiveClientSuite(version: String)

val partitions =

for {

ds <- 20170101 to 20170103

-h <- 0 to 23

+h <- 0 to 4

chunk <- Seq("aa", "ab", "ba", "bb")

} yield CatalogTablePartition(Map(

"ds" -> ds.toString,

@@ -92,7 +92,7 @@ class HiveClientSuite(version: String)

testMetastorePartitionFiltering(

attr("ds") <=> 20170101,

20170101 to 20170103,

- 0 to 23,

+ 0 to 4,

"aa" :: "ab" :: "ba" :: "bb" :: Nil)

}

@@ -100,7 +100,7 @@ class HiveClientSuite(version: String)

testMetastorePartitionFiltering(

attr("ds") === 20170101,

20170101 to 20170101,

- 0 to 23,

+ 0 to 4,

"aa" :: "ab" :: "ba" :: "bb" :: Nil)

}

@@ -118,7 +118,7 @@ class HiveClientSuite(version: String)

testMetastorePartitionFiltering(

attr("chunk") === "aa",

20170101 to 20170103,

- 0 to 23,

+ 0 to 4,

"aa" :: Nil)

}

@@ -126,7 +126,7 @@ class HiveClientSuite(version: String)

testMetastorePartitionFiltering(

attr("chunk").cast(IntegerType) === 1,

20170101 to 20170103,

- 0 to 23,

+ 0 to 4,

"aa" :: "ab" :: "ba" :: "bb" :: Nil)

}

@@ -134,7 +134,7 @@ class HiveClientSuite(version: String)

testMetastorePartitionFiltering(

attr("chunk").cast(BooleanType) === true,

20170101 to 20170103,

- 0 to 23,

+ 0 to 4,

"aa" :: "ab" :: "ba" :: "bb" :: Nil)

}

@@ -142,23 +142,23 @@ class

svn commit: r29901 - in /dev/spark/2.4.1-SNAPSHOT-2018_10_05_14_03-0a70afd-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 5 21:17:19 2018 New Revision: 29901 Log: Apache Spark 2.4.1-SNAPSHOT-2018_10_05_14_03-0a70afd docs [This commit notification would consist of 1472 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

svn commit: r29900 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_05_12_03-7dcc90f-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 5 19:17:42 2018 New Revision: 29900 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_05_12_03-7dcc90f docs [This commit notification would consist of 1485 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: [SPARK-25644][SS] Fix java foreachBatch in DataStreamWriter

Repository: spark

Updated Branches:

refs/heads/branch-2.4 2c700ee30 -> 0a70afdc0

[SPARK-25644][SS] Fix java foreachBatch in DataStreamWriter

## What changes were proposed in this pull request?

The java `foreachBatch` API in `DataStreamWriter` should accept

`java.lang.Long` rather than `scala.Long`.
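From the Java side, a generic type argument has to be a reference type, so a Java caller implements the callback with `java.lang.Long`. The sketch below shows that shape in self-contained Java. It does not use Spark: the nested `VoidFunction2` interface is a hypothetical stand-in for `org.apache.spark.api.java.function.VoidFunction2`, and this `foreachBatch` is an illustrative static method, not the `DataStreamWriter` API.

```java
final class ForeachBatchSketch {
    // Hypothetical stand-in for Spark's Java-friendly two-argument callback.
    interface VoidFunction2<T1, T2> {
        void call(T1 v1, T2 v2) throws Exception;
    }

    // Records the batch id seen by the callback, for demonstration only.
    static long lastBatchId = -1;

    // Illustrative method whose callback takes the boxed java.lang.Long.
    static <T> void foreachBatch(T batch, long batchId, VoidFunction2<T, Long> function)
            throws Exception {
        // The primitive long is autoboxed to java.lang.Long at the call.
        function.call(batch, batchId);
    }

    public static void main(String[] args) throws Exception {
        foreachBatch("rows", 7L, new VoidFunction2<String, Long>() {
            @Override
            public void call(String v1, Long v2) {
                lastBatchId = v2; // unboxed back to long
            }
        });
        System.out.println(lastBatchId); // 7
    }
}
```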

## How was this patch tested?

New java test.

Closes #22633 from zsxwing/fix-java-foreachbatch.

Authored-by: Shixiong Zhu

Signed-off-by: Shixiong Zhu

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/0a70afdc

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/0a70afdc

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/0a70afdc

Branch: refs/heads/branch-2.4

Commit: 0a70afdc08d76f84c59ec50f2f92144f54271602

Parents: 2c700ee

Author: Shixiong Zhu

Authored: Fri Oct 5 10:45:15 2018 -0700

Committer: Shixiong Zhu

Committed: Fri Oct 5 11:18:49 2018 -0700

--

.../spark/sql/streaming/DataStreamWriter.scala | 2 +-

.../JavaDataStreamReaderWriterSuite.java| 89

2 files changed, 90 insertions(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/0a70afdc/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

--

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

index 735fd17..4eb2918 100644

---

a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

+++

b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

@@ -379,7 +379,7 @@ final class DataStreamWriter[T] private[sql](ds:

Dataset[T]) {

* @since 2.4.0

*/

@InterfaceStability.Evolving

- def foreachBatch(function: VoidFunction2[Dataset[T], Long]):

DataStreamWriter[T] = {

+ def foreachBatch(function: VoidFunction2[Dataset[T], java.lang.Long]):

DataStreamWriter[T] = {

foreachBatch((batchDs: Dataset[T], batchId: Long) =>

function.call(batchDs, batchId))

}

http://git-wip-us.apache.org/repos/asf/spark/blob/0a70afdc/sql/core/src/test/java/test/org/apache/spark/sql/streaming/JavaDataStreamReaderWriterSuite.java

--

diff --git

a/sql/core/src/test/java/test/org/apache/spark/sql/streaming/JavaDataStreamReaderWriterSuite.java

b/sql/core/src/test/java/test/org/apache/spark/sql/streaming/JavaDataStreamReaderWriterSuite.java

new file mode 100644

index 000..48cdb26

--- /dev/null

+++

b/sql/core/src/test/java/test/org/apache/spark/sql/streaming/JavaDataStreamReaderWriterSuite.java

@@ -0,0 +1,89 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package test.org.apache.spark.sql.streaming;

+

+import java.io.File;

+

+import org.junit.After;

+import org.junit.Before;

+import org.junit.Test;

+

+import org.apache.spark.api.java.function.VoidFunction2;

+import org.apache.spark.sql.Dataset;

+import org.apache.spark.sql.ForeachWriter;

+import org.apache.spark.sql.SparkSession;

+import org.apache.spark.sql.streaming.StreamingQuery;

+import org.apache.spark.sql.test.TestSparkSession;

+import org.apache.spark.util.Utils;

+

+public class JavaDataStreamReaderWriterSuite {

+ private SparkSession spark;

+ private String input;

+

+ @Before

+ public void setUp() {

+spark = new TestSparkSession();

+input = Utils.createTempDir(System.getProperty("java.io.tmpdir"),

"input").toString();

+ }

+

+ @After

+ public void tearDown() {

+try {

+ Utils.deleteRecursively(new File(input));

+} finally {

+ spark.stop();

+ spark = null;

+}

+ }

+

+ @Test

+ public void testForeachBatchAPI() {

+StreamingQuery query = spark

+ .readStream()

+ .textFile(input)

+ .writeStream()

+ .foreachBatch(new VoidFunction2<Dataset<String>, Long>() {

+@Override

+public void call(Dataset<String> v1, Long v2) throws Exception {}

+ })

+ .start();

+query.stop();

+ }

+

+ @Test

+ public

spark git commit: [SPARK-25644][SS] Fix java foreachBatch in DataStreamWriter

Repository: spark

Updated Branches:

refs/heads/master 434ada12a -> 7dcc90fbb

[SPARK-25644][SS] Fix java foreachBatch in DataStreamWriter

## What changes were proposed in this pull request?

The java `foreachBatch` API in `DataStreamWriter` should accept

`java.lang.Long` rather than `scala.Long`.

## How was this patch tested?

New java test.

Closes #22633 from zsxwing/fix-java-foreachbatch.

Authored-by: Shixiong Zhu

Signed-off-by: Shixiong Zhu

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/7dcc90fb

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/7dcc90fb

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/7dcc90fb

Branch: refs/heads/master

Commit: 7dcc90fbb8dc75077819a5d8c42652f0c84424b5

Parents: 434ada1

Author: Shixiong Zhu

Authored: Fri Oct 5 10:45:15 2018 -0700

Committer: Shixiong Zhu

Committed: Fri Oct 5 10:45:15 2018 -0700

--

.../spark/sql/streaming/DataStreamWriter.scala | 2 +-

.../JavaDataStreamReaderWriterSuite.java| 89

2 files changed, 90 insertions(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/7dcc90fb/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

--

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

index e9a1521..b23e86a 100644

---

a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

+++

b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamWriter.scala

@@ -380,7 +380,7 @@ final class DataStreamWriter[T] private[sql](ds:

Dataset[T]) {

* @since 2.4.0

*/

@InterfaceStability.Evolving

- def foreachBatch(function: VoidFunction2[Dataset[T], Long]):

DataStreamWriter[T] = {

+ def foreachBatch(function: VoidFunction2[Dataset[T], java.lang.Long]):

DataStreamWriter[T] = {

foreachBatch((batchDs: Dataset[T], batchId: Long) =>

function.call(batchDs, batchId))

}

http://git-wip-us.apache.org/repos/asf/spark/blob/7dcc90fb/sql/core/src/test/java/test/org/apache/spark/sql/streaming/JavaDataStreamReaderWriterSuite.java

--

diff --git

a/sql/core/src/test/java/test/org/apache/spark/sql/streaming/JavaDataStreamReaderWriterSuite.java

b/sql/core/src/test/java/test/org/apache/spark/sql/streaming/JavaDataStreamReaderWriterSuite.java

new file mode 100644

index 000..48cdb26

--- /dev/null

+++

b/sql/core/src/test/java/test/org/apache/spark/sql/streaming/JavaDataStreamReaderWriterSuite.java

@@ -0,0 +1,89 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package test.org.apache.spark.sql.streaming;

+

+import java.io.File;

+

+import org.junit.After;

+import org.junit.Before;

+import org.junit.Test;

+

+import org.apache.spark.api.java.function.VoidFunction2;

+import org.apache.spark.sql.Dataset;

+import org.apache.spark.sql.ForeachWriter;

+import org.apache.spark.sql.SparkSession;

+import org.apache.spark.sql.streaming.StreamingQuery;

+import org.apache.spark.sql.test.TestSparkSession;

+import org.apache.spark.util.Utils;

+

+public class JavaDataStreamReaderWriterSuite {

+ private SparkSession spark;

+ private String input;

+

+ @Before

+ public void setUp() {

+spark = new TestSparkSession();

+input = Utils.createTempDir(System.getProperty("java.io.tmpdir"),

"input").toString();

+ }

+

+ @After

+ public void tearDown() {

+try {

+ Utils.deleteRecursively(new File(input));

+} finally {

+ spark.stop();

+ spark = null;

+}

+ }

+

+ @Test

+ public void testForeachBatchAPI() {

+StreamingQuery query = spark

+ .readStream()

+ .textFile(input)

+ .writeStream()

+ .foreachBatch(new VoidFunction2<Dataset<String>, Long>() {

+@Override

+public void call(Dataset<String> v1, Long v2) throws Exception {}

+ })

+ .start();

+query.stop();

+ }

+

+ @Test

+ public void

svn commit: r29898 - in /dev/spark/2.4.1-SNAPSHOT-2018_10_05_10_02-2c700ee-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 5 17:17:28 2018 New Revision: 29898 Log: Apache Spark 2.4.1-SNAPSHOT-2018_10_05_10_02-2c700ee docs [This commit notification would consist of 1472 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

svn commit: r29892 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_05_04_02-434ada1-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 5 11:17:07 2018 New Revision: 29892 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_05_04_02-434ada1 docs [This commit notification would consist of 1485 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

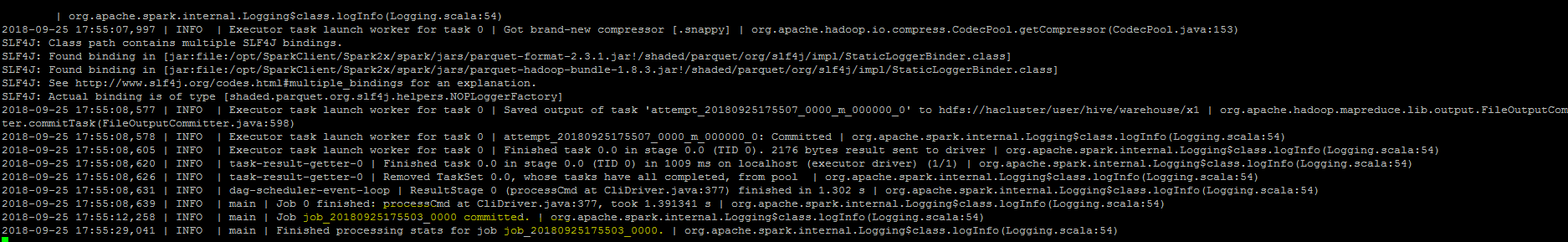

spark git commit: [SPARK-25521][SQL] Job id showing null in the logs when insert into command Job is finished.

Repository: spark

Updated Branches:

refs/heads/branch-2.4 c9bb83a7d -> 2c700ee30

[SPARK-25521][SQL] Job id showing null in the logs when insert into command Job

is finished.

## What changes were proposed in this pull request?

As part of the insert command in FileFormatWriter, a job context is created to
handle the write operation. While initializing the job context through the
setupJob() API in HadoopMapReduceCommitProtocol, the job ID is set in the
JobContext configuration. Because FileFormatWriter reads the job ID directly
from the MapReduce JobContext, the ID comes back as null when the log message
is written. As a fix, the job ID should be read from the configuration of the
MapReduce JobContext.

## How was this patch tested?

Manually, verified the logs after the changes.

Closes #22572 from sujith71955/master_log_issue.

Authored-by: s71955

Signed-off-by: Wenchen Fan

(cherry picked from commit 459700727fadf3f35a211eab2ffc8d68a4a1c39a)

Signed-off-by: Wenchen Fan

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/2c700ee3

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/2c700ee3

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/2c700ee3

Branch: refs/heads/branch-2.4

Commit: 2c700ee30d7fe7c7fdc7dbfe697ef5f41bd17215

Parents: c9bb83a

Author: s71955

Authored: Fri Oct 5 13:09:16 2018 +0800

Committer: Wenchen Fan

Committed: Fri Oct 5 16:51:59 2018 +0800

--

.../spark/sql/execution/datasources/FileFormatWriter.scala | 6 +++---

1 file changed, 3 insertions(+), 3 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/2c700ee3/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala

--

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala

b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala

index 7c6ab4b..774fe38 100644

---

a/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala

+++

b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala

@@ -183,15 +183,15 @@ object FileFormatWriter extends Logging {

val commitMsgs = ret.map(_.commitMsg)

committer.commitJob(job, commitMsgs)

- logInfo(s"Job ${job.getJobID} committed.")

+ logInfo(s"Write Job ${description.uuid} committed.")

processStats(description.statsTrackers, ret.map(_.summary.stats))

- logInfo(s"Finished processing stats for job ${job.getJobID}.")

+ logInfo(s"Finished processing stats for write job ${description.uuid}.")

// return a set of all the partition paths that were updated during this

job

ret.map(_.summary.updatedPartitions).reduceOption(_ ++

_).getOrElse(Set.empty)

} catch { case cause: Throwable =>

- logError(s"Aborting job ${job.getJobID}.", cause)

+ logError(s"Aborting job ${description.uuid}.", cause)

committer.abortJob(job)

throw new SparkException("Job aborted.", cause)

}


spark git commit: [SPARK-17952][SQL] Nested Java beans support in createDataFrame

Repository: spark

Updated Branches:

refs/heads/master ab1650d29 -> 434ada12a

[SPARK-17952][SQL] Nested Java beans support in createDataFrame

## What changes were proposed in this pull request?

When constructing a DataFrame from a Java bean, using nested beans throws an

error despite

[documentation](http://spark.apache.org/docs/latest/sql-programming-guide.html#inferring-the-schema-using-reflection)

stating otherwise. This PR aims to add that support.

This PR does not yet add nested beans support in array or List fields. This can

be added later or in another PR.

## How was this patch tested?

Nested bean was added to the appropriate unit test.

Also manually tested in Spark shell on code emulating the referenced JIRA:

```

scala> import scala.beans.BeanProperty

import scala.beans.BeanProperty

scala> class SubCategory(@BeanProperty var id: String, @BeanProperty var name: String) extends Serializable

defined class SubCategory

scala> class Category(@BeanProperty var id: String, @BeanProperty var subCategory: SubCategory) extends Serializable

defined class Category

scala> import scala.collection.JavaConverters._

import scala.collection.JavaConverters._

scala> spark.createDataFrame(Seq(new Category("s-111", new

SubCategory("sc-111", "Sub-1"))).asJava, classOf[Category])

java.lang.IllegalArgumentException: The value (SubCategory@65130cf2) of the type (SubCategory) cannot be converted to struct

at

org.apache.spark.sql.catalyst.CatalystTypeConverters$StructConverter.toCatalystImpl(CatalystTypeConverters.scala:262)

at

org.apache.spark.sql.catalyst.CatalystTypeConverters$StructConverter.toCatalystImpl(CatalystTypeConverters.scala:238)

at

org.apache.spark.sql.catalyst.CatalystTypeConverters$CatalystTypeConverter.toCatalyst(CatalystTypeConverters.scala:103)

at

org.apache.spark.sql.catalyst.CatalystTypeConverters$$anonfun$createToCatalystConverter$2.apply(CatalystTypeConverters.scala:396)

at

org.apache.spark.sql.SQLContext$$anonfun$beansToRows$1$$anonfun$apply$1.apply(SQLContext.scala:1108)

at

org.apache.spark.sql.SQLContext$$anonfun$beansToRows$1$$anonfun$apply$1.apply(SQLContext.scala:1108)

at

scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at

scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at

scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.mutable.ArrayOps$ofRef.map(ArrayOps.scala:186)

at

org.apache.spark.sql.SQLContext$$anonfun$beansToRows$1.apply(SQLContext.scala:1108)

at

org.apache.spark.sql.SQLContext$$anonfun$beansToRows$1.apply(SQLContext.scala:1106)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at scala.collection.Iterator$class.toStream(Iterator.scala:1320)

at scala.collection.AbstractIterator.toStream(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toSeq(TraversableOnce.scala:298)

at scala.collection.AbstractIterator.toSeq(Iterator.scala:1334)

at org.apache.spark.sql.SparkSession.createDataFrame(SparkSession.scala:423)

... 51 elided

```

New behavior:

```

scala> spark.createDataFrame(Seq(new Category("s-111", new

SubCategory("sc-111", "Sub-1"))).asJava, classOf[Category])

res0: org.apache.spark.sql.DataFrame = [id: string, subCategory: struct<id: string, name: string>]

scala> res0.show()

+-----+---------------+

|   id|    subCategory|

+-----+---------------+

|s-111|[sc-111, Sub-1]|

+-----+---------------+

```
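The nested conversion that this PR enables can be sketched without Spark by walking bean properties recursively with `java.beans.Introspector`. This is only an illustration of the idea, not the code added to `SQLContext.scala`: `beanToRow`, `isLeaf`, and the two bean classes are hypothetical names, rows are modeled as plain `List<Object>` values, and the set of leaf types is an assumption of the sketch. Like the PR, it does not descend into array or `List` fields.

```java
import java.beans.BeanInfo;
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

public class NestedBeanSketch {
  public static class SubCategory {
    private final String id;
    private final String name;

    public SubCategory(String id, String name) {
      this.id = id;
      this.name = name;
    }

    public String getId() { return id; }
    public String getName() { return name; }
  }

  public static class Category {
    private final String id;
    private final SubCategory subCategory;

    public Category(String id, SubCategory subCategory) {
      this.id = id;
      this.subCategory = subCategory;
    }

    public String getId() { return id; }
    public SubCategory getSubCategory() { return subCategory; }
  }

  // Assumption of this sketch: these are the only values stored as-is.
  static boolean isLeaf(Object value) {
    return value == null || value instanceof String || value instanceof Number
        || value instanceof Boolean || value instanceof Character;
  }

  /** Reads every readable property; non-leaf values are converted recursively. */
  public static List<Object> beanToRow(Object bean) {
    try {
      // Stop at Object so the synthetic "class" property is not included.
      BeanInfo info = Introspector.getBeanInfo(bean.getClass(), Object.class);
      List<Object> row = new ArrayList<>();
      for (PropertyDescriptor property : info.getPropertyDescriptors()) {
        Method getter = property.getReadMethod();
        if (getter == null) {
          continue; // write-only property, nothing to read
        }
        Object value = getter.invoke(bean);
        row.add(isLeaf(value) ? value : beanToRow(value));
      }
      return row;
    } catch (IntrospectionException | ReflectiveOperationException e) {
      throw new IllegalStateException("Cannot read bean " + bean.getClass().getName(), e);
    }
  }

  public static void main(String[] args) {
    Category category = new Category("s-111", new SubCategory("sc-111", "Sub-1"));
    System.out.println(beanToRow(category));
  }
}
```

The sketch treats a nested bean as a nested row, which corresponds to the struct column in the output above. It does not guard against cyclic bean references.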

Closes #22527 from michalsenkyr/SPARK-17952.

Authored-by: Michal Senkyr

Signed-off-by: Takuya UESHIN

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/434ada12

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/434ada12

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/434ada12

Branch: refs/heads/master

Commit: 434ada12a06d1d2d3cb19c4eac5a52f330bb236c

Parents: ab1650d

Author: Michal Senkyr

Authored: Fri Oct 5 17:48:52 2018 +0900

Committer: Takuya UESHIN

Committed: Fri Oct 5 17:48:52 2018 +0900

--

.../scala/org/apache/spark/sql/SQLContext.scala | 29 +--

.../apache/spark/sql/JavaDataFrameSuite.java| 30 +++-

2 files changed, 50 insertions(+), 9 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/434ada12/sql/core/src/main/scala/org/apache/spark/sql/SQLContext.scala

--

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/SQLContext.scala

b/sql/core/src/main/scala/org/apache/spark/sql/SQLContext.scala

index af60184..dfb12f2 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/SQLContext.scala

+++

spark git commit: [SPARK-24601] Update Jackson to 2.9.6

Repository: spark

Updated Branches:

refs/heads/master 459700727 -> ab1650d29

[SPARK-24601] Update Jackson to 2.9.6

Hi all,

Jackson is incompatible with upstream versions, so this change bumps the
Jackson version to a more recent one. I ran into some issues with Azure
CosmosDB, which uses a more recent version of Jackson. This can be fixed by
adding exclusions, and then it works without any issues, so there are no
breaking changes in the APIs.

I would also consider bumping the version of Jackson in Spark. I suggest
keeping the dependencies up to date, since this issue will come up more
frequently in the future.

## What changes were proposed in this pull request?

Bump Jackson to 2.9.6

## How was this patch tested?

Compiled and tested it locally to see if anything broke.

Please review http://spark.apache.org/contributing.html before opening a pull

request.

Closes #21596 from Fokko/fd-bump-jackson.

Authored-by: Fokko Driesprong

Signed-off-by: hyukjinkwon

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/ab1650d2

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/ab1650d2

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/ab1650d2

Branch: refs/heads/master

Commit: ab1650d2938db4901b8c28df945d6a0691a19d31

Parents: 4597007

Author: Fokko Driesprong

Authored: Fri Oct 5 16:40:08 2018 +0800

Committer: hyukjinkwon

Committed: Fri Oct 5 16:40:08 2018 +0800

--

.../deploy/rest/SubmitRestProtocolMessage.scala | 2 +-

.../apache/spark/rdd/RDDOperationScope.scala| 2 +-

.../scala/org/apache/spark/status/KVUtils.scala | 2 +-

.../status/api/v1/JacksonMessageWriter.scala| 2 +-

.../org/apache/spark/status/api/v1/api.scala| 3 ++

dev/deps/spark-deps-hadoop-2.6 | 16 +-

dev/deps/spark-deps-hadoop-2.7 | 16 +-

dev/deps/spark-deps-hadoop-3.1 | 16 +-

pom.xml | 7 ++---

.../expressions/JsonExpressionsSuite.scala | 7 +

.../datasources/json/JsonBenchmarks.scala | 33 +++-

11 files changed, 59 insertions(+), 47 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/ab1650d2/core/src/main/scala/org/apache/spark/deploy/rest/SubmitRestProtocolMessage.scala

--

diff --git

a/core/src/main/scala/org/apache/spark/deploy/rest/SubmitRestProtocolMessage.scala

b/core/src/main/scala/org/apache/spark/deploy/rest/SubmitRestProtocolMessage.scala

index ef5a7e3..97b689c 100644

---

a/core/src/main/scala/org/apache/spark/deploy/rest/SubmitRestProtocolMessage.scala

+++

b/core/src/main/scala/org/apache/spark/deploy/rest/SubmitRestProtocolMessage.scala

@@ -36,7 +36,7 @@ import org.apache.spark.util.Utils

* (2) the Spark version of the client / server

* (3) an optional message

*/

-@JsonInclude(Include.NON_NULL)

+@JsonInclude(Include.NON_ABSENT)

@JsonAutoDetect(getterVisibility = Visibility.ANY, setterVisibility =

Visibility.ANY)

@JsonPropertyOrder(alphabetic = true)

private[rest] abstract class SubmitRestProtocolMessage {

http://git-wip-us.apache.org/repos/asf/spark/blob/ab1650d2/core/src/main/scala/org/apache/spark/rdd/RDDOperationScope.scala

--

diff --git a/core/src/main/scala/org/apache/spark/rdd/RDDOperationScope.scala

b/core/src/main/scala/org/apache/spark/rdd/RDDOperationScope.scala

index 53d69ba..3abb2d8 100644

--- a/core/src/main/scala/org/apache/spark/rdd/RDDOperationScope.scala

+++ b/core/src/main/scala/org/apache/spark/rdd/RDDOperationScope.scala

@@ -41,7 +41,7 @@ import org.apache.spark.internal.Logging

* There is no particular relationship between an operation scope and a stage

or a job.

* A scope may live inside one stage (e.g. map) or span across multiple jobs

(e.g. take).

*/

-@JsonInclude(Include.NON_NULL)

+@JsonInclude(Include.NON_ABSENT)

@JsonPropertyOrder(Array("id", "name", "parent"))

private[spark] class RDDOperationScope(

val name: String,

http://git-wip-us.apache.org/repos/asf/spark/blob/ab1650d2/core/src/main/scala/org/apache/spark/status/KVUtils.scala

--

diff --git a/core/src/main/scala/org/apache/spark/status/KVUtils.scala

b/core/src/main/scala/org/apache/spark/status/KVUtils.scala

index 99b1843..45348be 100644

--- a/core/src/main/scala/org/apache/spark/status/KVUtils.scala

+++ b/core/src/main/scala/org/apache/spark/status/KVUtils.scala

@@ -42,7 +42,7 @@ private[spark] object KVUtils extends Logging {

private[spark] class KVStoreScalaSerializer extends KVStoreSerializer {

mapper.registerModule(DefaultScalaModule)

-

svn commit: r29888 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_05_00_02-4597007-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 5 07:17:16 2018 New Revision: 29888 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_05_00_02-4597007 docs [This commit notification would consist of 1485 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]