Stephen-Robin opened a new issue #2195:

URL: https://github.com/apache/iceberg/issues/2195

There is a high probability of data loss after compaction through some

rewrite data files test. After compacting a table ,some rows will be lost.

The reproduction process is as follows:

1) create a new iceberg table

create table test (id int, age int) using iceberg;

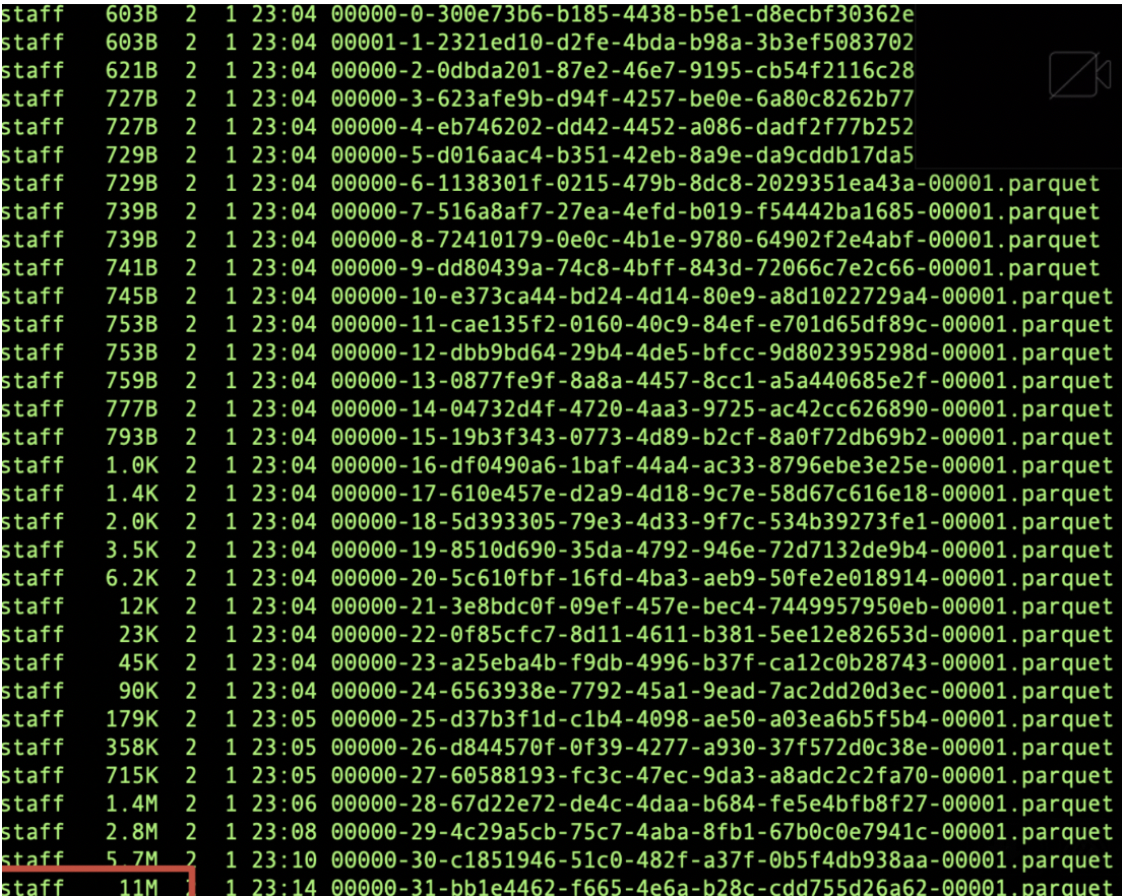

2) Write initial data, Keep writing data until the generated file size is

more than 10M(splitTargetSize), Which is 11M in this example

insert into test values (1,2),(3,4);

insert into test select * from test;

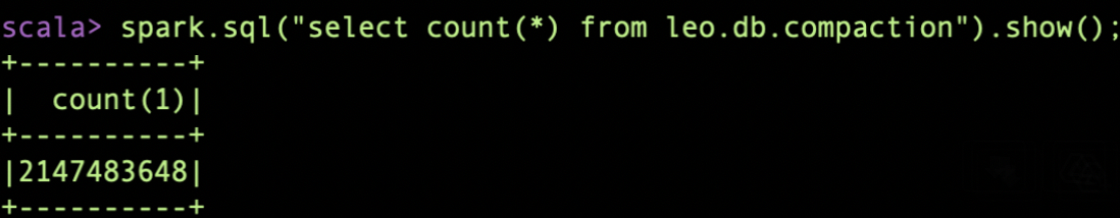

3) count the number of rows in the table: `select count(*) from table`

4) execute compaction

The compaction params like splitTargetSize are 10M and other parameters

such as splitOpenFileCost(4M) are default values.

`Actions.forTable(table).rewriteDataFiles.targetSizeInBytes(10 * 10 *

1024).execute()`

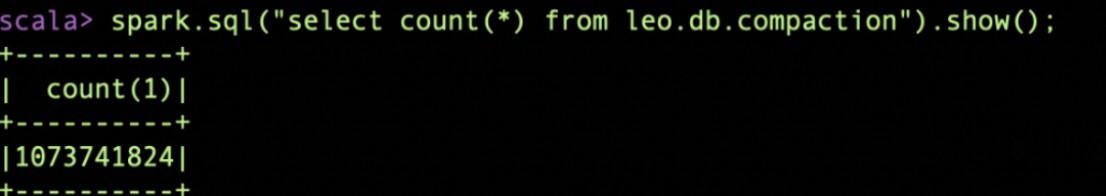

5) count the number of rows in the table: `select count(*) from table`

Actually, The origin 11M file has been split into a 10M file A and a 1M

file B after bin pack. However the CombinedScanTask with only one FileScanTask

will be filtered out.

When RewriteFiles.commit() is executed finally, because the 1M file B

exists, the 11M manifest corresponding files will be deleted (status set 2).

Regardless of whether the remaining 1M file B was successfully written, we

lost the 10M file A.

The result shows that the number of rows was exactly half missing, because

the 11M parquet file was missing in this example, and the remaining 1M file B

did not rewrite the new data file because the origin Parquet row group split

point was not found.

In my opinion, the Iceberg RewriteFile operation not only combines small

files, but also needs to split large files.

Flink may need to deal with more small file merging cases, in addition to

small file merging, Spark also need to consider as much as possible the

reasonable segmentation of large files. In either case, it is necessary to

ensure the reliability of the data

Please anyone confirm if this is a problem, If there is a problem, I have

tried to fix and submit a pr right away.

Thanks

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

[email protected]

---------------------------------------------------------------------

To unsubscribe, e-mail: [email protected]

For additional commands, e-mail: [email protected]