[GitHub] gaodayue opened a new pull request #6242: fix incorrect check of maxSemiJoinRowsInMemory

gaodayue opened a new pull request #6242: fix incorrect check of maxSemiJoinRowsInMemory URL: https://github.com/apache/incubator-druid/pull/6242 We should check maxSemiJoinRowsInMemory on right table's output rows count instead of number of values inside one row. Added a test case for that. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gaodayue opened a new issue #6243: semi join query throws AssertionError

gaodayue opened a new issue #6243: semi join query throws AssertionError URL: https://github.com/apache/incubator-druid/issues/6243 Example query: ```sql SELECT COUNT(*) FROM ( SELECT DISTINCT dim2 FROM druid.foo WHERE SUBSTRING(dim2, 1, 1) IN ( SELECT SUBSTRING(dim1, 1, 1) FROM druid.foo WHERE dim1 <> '' ) AND __time >= '2000-01-01' AND __time < '2002-01-01' ) ``` When outer query contains AND filters like above, druid throws AssertionError when constructing Filter. ``` java.lang.AssertionError: AND(AND(>=($0, 2000-01-01 00:00:00), <($0, 2002-01-01 00:00:00)), OR(=(SUBSTRING($3, 1, 1), '1'), =(SUBSTRING($3, 1, 1), '2'), =(SUBSTRING($3, 1, 1), 'a'), =(SUBSTRING($3, 1, 1), 'd'))) at org.apache.calcite.rel.core.Filter.(Filter.java:74) at org.apache.calcite.rel.logical.LogicalFilter.(LogicalFilter.java:71) at org.apache.calcite.rel.logical.LogicalFilter.copy(LogicalFilter.java:136) at org.apache.calcite.rel.logical.LogicalFilter.copy(LogicalFilter.java:48) at io.druid.sql.calcite.rel.DruidSemiJoin.getLeftRelWithFilter(DruidSemiJoin.java:345) ``` The reason is calcite requires filter condition to be flattened, while DruidSemiJoin#getLeftRelWithFilter may construct unflatten filter. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gaodayue opened a new pull request #6244: fix AssertionError of semi join query

gaodayue opened a new pull request #6244: fix AssertionError of semi join query URL: https://github.com/apache/incubator-druid/pull/6244 Fixed #6243 This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] fjy closed pull request #6191: SQL: Support more result formats, add columns header.

fjy closed pull request #6191: SQL: Support more result formats, add columns

header.

URL: https://github.com/apache/incubator-druid/pull/6191

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/docs/content/ingestion/index.md b/docs/content/ingestion/index.md

index 02fa1cc548e..378b308d9e9 100644

--- a/docs/content/ingestion/index.md

+++ b/docs/content/ingestion/index.md

@@ -147,7 +147,7 @@ This is a special variation of the JSON ParseSpec that

lower cases all the colum

CSV ParseSpec

-Use this with the String Parser to load CSV. Strings are parsed using the

net.sf.opencsv library.

+Use this with the String Parser to load CSV. Strings are parsed using the

com.opencsv library.

| Field | Type | Description | Required |

|---|--|-|--|

diff --git a/docs/content/querying/sql.md b/docs/content/querying/sql.md

index ffd655a76f6..0f15b936129 100644

--- a/docs/content/querying/sql.md

+++ b/docs/content/querying/sql.md

@@ -339,10 +339,6 @@ of configuration.

You can make Druid SQL queries using JSON over HTTP by posting to the endpoint

`/druid/v2/sql/`. The request should

be a JSON object with a "query" field, like `{"query" : "SELECT COUNT(*) FROM

data_source WHERE foo = 'bar'"}`.

-Results are available in two formats: "object" (the default; a JSON array of

JSON objects), and "array" (a JSON array

-of JSON arrays). In "object" form, each row's field names will match the

column names from your SQL query. In "array"

-form, each row's values are returned in the order specified in your SQL query.

-

You can use _curl_ to send SQL queries from the command-line:

```bash

@@ -353,9 +349,8 @@ $ curl -XPOST -H'Content-Type: application/json'

http://BROKER:8082/druid/v2/sql

[{"TheCount":24433}]

```

-Metadata is available over the HTTP API by querying the ["INFORMATION_SCHEMA"

tables](#retrieving-metadata).

-

-Finally, you can also provide [connection context

parameters](#connection-context) by adding a "context" map, like:

+There are a variety of [connection context parameters](#connection-context)

you can provide by adding a "context" map,

+like:

```json

{

@@ -366,6 +361,45 @@ Finally, you can also provide [connection context

parameters](#connection-contex

}

```

+Metadata is available over the HTTP API by querying [system

tables](#retrieving-metadata).

+

+ Responses

+

+All Druid SQL HTTP responses include a "X-Druid-Column-Names" header with a

JSON-encoded array of columns that

+will appear in the result rows and an "X-Druid-Column-Types" header with a

JSON-encoded array of

+[types](#data-types-and-casts).

+

+For the result rows themselves, Druid SQL supports a variety of result

formats. You can

+specify these by adding a "resultFormat" parameter, like:

+

+```json

+{

+ "query" : "SELECT COUNT(*) FROM data_source WHERE foo = 'bar' AND __time >

TIMESTAMP '2000-01-01 00:00:00'",

+ "resultFormat" : "object"

+}

+```

+

+The supported result formats are:

+

+|Format|Description|Content-Type|

+|--|---||

+|`object`|The default, a JSON array of JSON objects. Each object's field names

match the columns returned by the SQL query, and are provided in the same order

as the SQL query.|application/json|

+|`array`|JSON array of JSON arrays. Each inner array has elements matching the

columns returned by the SQL query, in order.|application/json|

+|`objectLines`|Like "object", but the JSON objects are separated by newlines

instead of being wrapped in a JSON array. This can make it easier to parse the

entire response set as a stream, if you do not have ready access to a streaming

JSON parser. To make it possible to detect a truncated response, this format

includes a trailer of one blank line.|text/plain|

+|`arrayLines`|Like "array", but the JSON arrays are separated by newlines

instead of being wrapped in a JSON array. This can make it easier to parse the

entire response set as a stream, if you do not have ready access to a streaming

JSON parser. To make it possible to detect a truncated response, this format

includes a trailer of one blank line.|text/plain|

+|`csv`|Comma-separated values, with one row per line. Individual field values

may be escaped by being surrounded in double quotes. If double quotes appear in

a field value, they will be escaped by replacing them with double-double-quotes

like `""this""`. To make it possible to detect a truncated response, this

format includes a trailer of one blank line.|text/csv|

+

+Errors that occur before the response body is sent will be reported in JSON,

with an HTTP 500 status code, in the

+same format as [native Druid query errors](../querying#query-errors). If an

error occurs while the response body is

+being sent, at that point it is too late

[GitHub] fjy closed pull request #6240: [Backport] SQL: Fix precision of TIMESTAMP types.

fjy closed pull request #6240: [Backport] SQL: Fix precision of TIMESTAMP types.

URL: https://github.com/apache/incubator-druid/pull/6240

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git

a/sql/src/main/java/io/druid/sql/calcite/expression/OperatorConversions.java

b/sql/src/main/java/io/druid/sql/calcite/expression/OperatorConversions.java

index c129388d2a8..f75186d4ab5 100644

--- a/sql/src/main/java/io/druid/sql/calcite/expression/OperatorConversions.java

+++ b/sql/src/main/java/io/druid/sql/calcite/expression/OperatorConversions.java

@@ -20,6 +20,7 @@

package io.druid.sql.calcite.expression;

import com.google.common.base.Preconditions;

+import io.druid.sql.calcite.planner.Calcites;

import io.druid.sql.calcite.planner.PlannerContext;

import io.druid.sql.calcite.table.RowSignature;

import org.apache.calcite.rex.RexCall;

@@ -131,18 +132,16 @@ public OperatorBuilder kind(final SqlKind kind)

public OperatorBuilder returnType(final SqlTypeName typeName)

{

- this.returnTypeInference = ReturnTypes.explicit(typeName);

+ this.returnTypeInference = ReturnTypes.explicit(

+ factory -> Calcites.createSqlType(factory, typeName)

+ );

return this;

}

public OperatorBuilder nullableReturnType(final SqlTypeName typeName)

{

this.returnTypeInference = ReturnTypes.explicit(

- factory ->

- factory.createTypeWithNullability(

- factory.createSqlType(typeName),

- true

- )

+ factory -> Calcites.createSqlTypeWithNullability(factory, typeName,

true)

);

return this;

}

diff --git a/sql/src/main/java/io/druid/sql/calcite/planner/Calcites.java

b/sql/src/main/java/io/druid/sql/calcite/planner/Calcites.java

index bf39def562b..dfcfad96868 100644

--- a/sql/src/main/java/io/druid/sql/calcite/planner/Calcites.java

+++ b/sql/src/main/java/io/druid/sql/calcite/planner/Calcites.java

@@ -32,10 +32,13 @@

import io.druid.sql.calcite.schema.DruidSchema;

import io.druid.sql.calcite.schema.InformationSchema;

import org.apache.calcite.jdbc.CalciteSchema;

+import org.apache.calcite.rel.type.RelDataType;

+import org.apache.calcite.rel.type.RelDataTypeFactory;

import org.apache.calcite.rex.RexLiteral;

import org.apache.calcite.rex.RexNode;

import org.apache.calcite.schema.Schema;

import org.apache.calcite.schema.SchemaPlus;

+import org.apache.calcite.sql.SqlCollation;

import org.apache.calcite.sql.SqlKind;

import org.apache.calcite.sql.type.SqlTypeName;

import org.apache.calcite.util.ConversionUtil;

@@ -163,6 +166,46 @@ public static StringComparator

getStringComparatorForValueType(ValueType valueTy

}

}

+ /**

+ * Like RelDataTypeFactory.createSqlType, but creates types that align best

with how Druid represents them.

+ */

+ public static RelDataType createSqlType(final RelDataTypeFactory

typeFactory, final SqlTypeName typeName)

+ {

+return createSqlTypeWithNullability(typeFactory, typeName, false);

+ }

+

+ /**

+ * Like RelDataTypeFactory.createSqlTypeWithNullability, but creates types

that align best with how Druid

+ * represents them.

+ */

+ public static RelDataType createSqlTypeWithNullability(

+ final RelDataTypeFactory typeFactory,

+ final SqlTypeName typeName,

+ final boolean nullable

+ )

+ {

+final RelDataType dataType;

+

+switch (typeName) {

+ case TIMESTAMP:

+// Our timestamps are down to the millisecond (precision = 3).

+dataType = typeFactory.createSqlType(typeName, 3);

+break;

+ case CHAR:

+ case VARCHAR:

+dataType = typeFactory.createTypeWithCharsetAndCollation(

+typeFactory.createSqlType(typeName),

+Calcites.defaultCharset(),

+SqlCollation.IMPLICIT

+);

+break;

+ default:

+dataType = typeFactory.createSqlType(typeName);

+}

+

+return typeFactory.createTypeWithNullability(dataType, nullable);

+ }

+

/**

* Calcite expects "TIMESTAMP" types to be an instant that has the expected

local time fields if printed as UTC.

*

diff --git a/sql/src/main/java/io/druid/sql/calcite/planner/DruidPlanner.java

b/sql/src/main/java/io/druid/sql/calcite/planner/DruidPlanner.java

index 8779d793e06..4a9feae0cd2 100644

--- a/sql/src/main/java/io/druid/sql/calcite/planner/DruidPlanner.java

+++ b/sql/src/main/java/io/druid/sql/calcite/planner/DruidPlanner.java

@@ -321,7 +321,7 @@ private PlannerResult planExplanation(

return new PlannerResult(

resultsSupplier,

typeFactory.createStructType(

-ImmutableList.of(typeFactory.createSqlType(SqlTypeName.VARCHAR)),

+ImmutableList.of(Calcites.createSqlType(typeFactory,

[GitHub] gianm commented on issue #6206: Fix NPE in KafkaSupervisor.checkpointTaskGroup

gianm commented on issue #6206: Fix NPE in KafkaSupervisor.checkpointTaskGroup URL: https://github.com/apache/incubator-druid/pull/6206#issuecomment-416115263 @jihoonson Got it, could you please merge master into this branch in order to get that? This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gianm closed issue #6021: NPE in KafkaSupervisor.checkpointTaskGroup

gianm closed issue #6021: NPE in KafkaSupervisor.checkpointTaskGroup URL: https://github.com/apache/incubator-druid/issues/6021 This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gianm closed pull request #6206: Fix NPE in KafkaSupervisor.checkpointTaskGroup

gianm closed pull request #6206: Fix NPE in KafkaSupervisor.checkpointTaskGroup

URL: https://github.com/apache/incubator-druid/pull/6206

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git

a/extensions-core/kafka-indexing-service/src/main/java/io/druid/indexing/kafka/supervisor/KafkaSupervisor.java

b/extensions-core/kafka-indexing-service/src/main/java/io/druid/indexing/kafka/supervisor/KafkaSupervisor.java

index 8c9bb599ada..8e12f591461 100644

---

a/extensions-core/kafka-indexing-service/src/main/java/io/druid/indexing/kafka/supervisor/KafkaSupervisor.java

+++

b/extensions-core/kafka-indexing-service/src/main/java/io/druid/indexing/kafka/supervisor/KafkaSupervisor.java

@@ -146,6 +146,8 @@

*/

private class TaskGroup

{

+final int groupId;

+

// This specifies the partitions and starting offsets for this task group.

It is set on group creation from the data

// in [partitionGroups] and never changes during the lifetime of this task

group, which will live until a task in

// this task group has completed successfully, at which point this will be

destroyed and a new task group will be

@@ -161,11 +163,13 @@

final String baseSequenceName;

TaskGroup(

+int groupId,

ImmutableMap partitionOffsets,

Optional minimumMessageTime,

Optional maximumMessageTime

)

{

+ this.groupId = groupId;

this.partitionOffsets = partitionOffsets;

this.minimumMessageTime = minimumMessageTime;

this.maximumMessageTime = maximumMessageTime;

@@ -187,9 +191,21 @@ int addNewCheckpoint(Map checkpoint)

private static class TaskData

{

+@Nullable

volatile TaskStatus status;

+@Nullable

volatile DateTime startTime;

volatile Map currentOffsets = new HashMap<>();

+

+@Override

+public String toString()

+{

+ return "TaskData{" +

+ "status=" + status +

+ ", startTime=" + startTime +

+ ", currentOffsets=" + currentOffsets +

+ '}';

+}

}

// Map<{group ID}, {actively reading task group}>; see documentation for

TaskGroup class

@@ -718,8 +734,8 @@ public void handle() throws ExecutionException,

InterruptedException

log.info("Already checkpointed with offsets [%s]",

checkpoints.lastEntry().getValue());

return;

}

-final Map newCheckpoint =

checkpointTaskGroup(taskGroupId, false).get();

-taskGroups.get(taskGroupId).addNewCheckpoint(newCheckpoint);

+final Map newCheckpoint =

checkpointTaskGroup(taskGroup, false).get();

+taskGroup.addNewCheckpoint(newCheckpoint);

log.info("Handled checkpoint notice, new checkpoint is [%s] for

taskGroup [%s]", newCheckpoint, taskGroupId);

}

}

@@ -785,10 +801,13 @@ void resetInternal(DataSourceMetadata dataSourceMetadata)

:

currentMetadata.getKafkaPartitions()

.getPartitionOffsetMap()

.get(resetPartitionOffset.getKey());

- final TaskGroup partitionTaskGroup =

taskGroups.get(getTaskGroupIdForPartition(resetPartitionOffset.getKey()));

- if (partitionOffsetInMetadataStore != null ||

- (partitionTaskGroup != null &&

partitionTaskGroup.partitionOffsets.get(resetPartitionOffset.getKey())

-

.equals(resetPartitionOffset.getValue( {

+ final TaskGroup partitionTaskGroup = taskGroups.get(

+ getTaskGroupIdForPartition(resetPartitionOffset.getKey())

+ );

+ final boolean isSameOffset = partitionTaskGroup != null

+ &&

partitionTaskGroup.partitionOffsets.get(resetPartitionOffset.getKey())

+

.equals(resetPartitionOffset.getValue());

+ if (partitionOffsetInMetadataStore != null || isSameOffset) {

doReset = true;

break;

}

@@ -1012,7 +1031,7 @@ private void discoverTasks() throws ExecutionException,

InterruptedException, Ti

List futureTaskIds = Lists.newArrayList();

List> futures = Lists.newArrayList();

List tasks = taskStorage.getActiveTasks();

-final Set taskGroupsToVerify = new HashSet<>();

+final Map taskGroupsToVerify = new HashMap<>();

for (Task task : tasks) {

if (!(task instanceof KafkaIndexTask) ||

!dataSource.equals(task.getDataSource())) {

@@ -1119,6 +1138,7 @@ public Boolean apply(KafkaIndexTask.Status status)

k -> {

[GitHub] gianm opened a new pull request #6245: [Backport] Fix NPE in KafkaSupervisor.checkpointTaskGroup

gianm opened a new pull request #6245: [Backport] Fix NPE in KafkaSupervisor.checkpointTaskGroup URL: https://github.com/apache/incubator-druid/pull/6245 Backport of #6206 to 0.12.3. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] fjy closed pull request #6229: [Backport] SQL: Finalize aggregations for inner queries when necessary.

fjy closed pull request #6229: [Backport] SQL: Finalize aggregations for inner

queries when necessary.

URL: https://github.com/apache/incubator-druid/pull/6229

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git

a/extensions-core/histogram/src/main/java/io/druid/query/aggregation/histogram/sql/QuantileSqlAggregator.java

b/extensions-core/histogram/src/main/java/io/druid/query/aggregation/histogram/sql/QuantileSqlAggregator.java

index b9c8d3d3d46..28c0d65b10d 100644

---

a/extensions-core/histogram/src/main/java/io/druid/query/aggregation/histogram/sql/QuantileSqlAggregator.java

+++

b/extensions-core/histogram/src/main/java/io/druid/query/aggregation/histogram/sql/QuantileSqlAggregator.java

@@ -48,6 +48,7 @@

import org.apache.calcite.sql.type.SqlTypeFamily;

import org.apache.calcite.sql.type.SqlTypeName;

+import javax.annotation.Nullable;

import java.util.ArrayList;

import java.util.List;

@@ -62,6 +63,7 @@ public SqlAggFunction calciteFunction()

return FUNCTION_INSTANCE;

}

+ @Nullable

@Override

public Aggregation toDruidAggregation(

final PlannerContext plannerContext,

@@ -70,7 +72,8 @@ public Aggregation toDruidAggregation(

final String name,

final AggregateCall aggregateCall,

final Project project,

- final List existingAggregations

+ final List existingAggregations,

+ final boolean finalizeAggregations

)

{

final DruidExpression input = Expressions.toDruidExpression(

diff --git

a/sql/src/main/java/io/druid/sql/calcite/aggregation/DimensionExpression.java

b/sql/src/main/java/io/druid/sql/calcite/aggregation/DimensionExpression.java

index d5da02d37b7..abc697c59e7 100644

---

a/sql/src/main/java/io/druid/sql/calcite/aggregation/DimensionExpression.java

+++

b/sql/src/main/java/io/druid/sql/calcite/aggregation/DimensionExpression.java

@@ -20,13 +20,13 @@

package io.druid.sql.calcite.aggregation;

import com.google.common.collect.ImmutableList;

-import io.druid.java.util.common.StringUtils;

import io.druid.math.expr.ExprMacroTable;

import io.druid.query.dimension.DefaultDimensionSpec;

import io.druid.query.dimension.DimensionSpec;

import io.druid.segment.VirtualColumn;

import io.druid.segment.column.ValueType;

import io.druid.sql.calcite.expression.DruidExpression;

+import io.druid.sql.calcite.planner.Calcites;

import javax.annotation.Nullable;

import java.util.List;

@@ -85,7 +85,7 @@ public DimensionSpec toDimensionSpec()

@Nullable

public String getVirtualColumnName()

{

-return expression.isSimpleExtraction() ? null : StringUtils.format("%s:v",

outputName);

+return expression.isSimpleExtraction() ? null :

Calcites.makePrefixedName(outputName, "v");

}

@Override

diff --git

a/sql/src/main/java/io/druid/sql/calcite/aggregation/SqlAggregator.java

b/sql/src/main/java/io/druid/sql/calcite/aggregation/SqlAggregator.java

index e6983ffb87a..dcf2c4e8d20 100644

--- a/sql/src/main/java/io/druid/sql/calcite/aggregation/SqlAggregator.java

+++ b/sql/src/main/java/io/druid/sql/calcite/aggregation/SqlAggregator.java

@@ -53,6 +53,9 @@

* @param project project that should be applied before

aggregation; may be null

* @param existingAggregations existing aggregations for this query; useful

for re-using aggregations. May be safely

* ignored if you do not want to re-use existing

aggregations.

+ * @param finalizeAggregations true if this query should include explicit

finalization for all of its

+ * aggregators, where required. This is set for

subqueries where Druid's native query

+ * layer does not do this automatically.

*

* @return aggregation, or null if the call cannot be translated

*/

@@ -64,6 +67,7 @@ Aggregation toDruidAggregation(

String name,

AggregateCall aggregateCall,

Project project,

- List existingAggregations

+ List existingAggregations,

+ boolean finalizeAggregations

);

}

diff --git

a/sql/src/main/java/io/druid/sql/calcite/aggregation/builtin/ApproxCountDistinctSqlAggregator.java

b/sql/src/main/java/io/druid/sql/calcite/aggregation/builtin/ApproxCountDistinctSqlAggregator.java

index 161c3ef9c31..0abdb8a7206 100644

---

a/sql/src/main/java/io/druid/sql/calcite/aggregation/builtin/ApproxCountDistinctSqlAggregator.java

+++

b/sql/src/main/java/io/druid/sql/calcite/aggregation/builtin/ApproxCountDistinctSqlAggregator.java

@@ -22,9 +22,9 @@

import com.google.common.collect.ImmutableList;

import com.google.common.collect.Iterables;

import io.druid.java.util.common.ISE;

-import io.druid.java.util.common.StringUtils;

import io.druid.query.aggregation.AggregatorFactory;

import

[GitHub] fjy closed pull request #6236: Fix all inspection errors currently reported.

fjy closed pull request #6236: Fix all inspection errors currently reported.

URL: https://github.com/apache/incubator-druid/pull/6236

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/api/src/test/java/io/druid/timeline/DataSegmentTest.java

b/api/src/test/java/io/druid/timeline/DataSegmentTest.java

index fc5bd282d8e..43b95598c25 100644

--- a/api/src/test/java/io/druid/timeline/DataSegmentTest.java

+++ b/api/src/test/java/io/druid/timeline/DataSegmentTest.java

@@ -23,7 +23,6 @@

import com.fasterxml.jackson.databind.ObjectMapper;

import com.google.common.collect.ImmutableList;

import com.google.common.collect.ImmutableMap;

-import com.google.common.collect.Lists;

import com.google.common.collect.RangeSet;

import com.google.common.collect.Sets;

import io.druid.TestObjectMapper;

@@ -40,6 +39,7 @@

import org.junit.Before;

import org.junit.Test;

+import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collections;

import java.util.List;

@@ -238,7 +238,7 @@ public void testBucketMonthComparator()

makeDataSegment("test2", "2011-02-02/2011-02-03", "a"),

};

-List shuffled = Lists.newArrayList(sortedOrder);

+List shuffled = new ArrayList<>(Arrays.asList(sortedOrder));

Collections.shuffle(shuffled);

Set theSet =

Sets.newTreeSet(DataSegment.bucketMonthComparator());

diff --git

a/benchmarks/src/main/java/io/druid/benchmark/query/timecompare/TimeCompareBenchmark.java

b/benchmarks/src/main/java/io/druid/benchmark/query/timecompare/TimeCompareBenchmark.java

index 97522d5d921..ccf8e3f6fc0 100644

---

a/benchmarks/src/main/java/io/druid/benchmark/query/timecompare/TimeCompareBenchmark.java

+++

b/benchmarks/src/main/java/io/druid/benchmark/query/timecompare/TimeCompareBenchmark.java

@@ -22,7 +22,6 @@

import com.fasterxml.jackson.databind.InjectableValues;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.google.common.collect.Lists;

-import com.google.common.collect.Maps;

import com.google.common.io.Files;

import io.druid.benchmark.datagen.BenchmarkDataGenerator;

import io.druid.benchmark.datagen.BenchmarkSchemaInfo;

@@ -96,6 +95,7 @@

import java.io.IOException;

import java.util.ArrayList;

import java.util.Collections;

+import java.util.HashMap;

import java.util.LinkedHashMap;

import java.util.List;

import java.util.Map;

@@ -117,7 +117,7 @@

@Param({"100"})

private int threshold;

- protected static final Map scriptDoubleSum =

Maps.newHashMap();

+ protected static final Map scriptDoubleSum = new HashMap<>();

static {

scriptDoubleSum.put("fnAggregate", "function aggregate(current, a) {

return current + a }");

scriptDoubleSum.put("fnReset", "function reset() { return 0 }");

@@ -427,10 +427,7 @@ private IncrementalIndex makeIncIndex()

@OutputTimeUnit(TimeUnit.MICROSECONDS)

public void queryMultiQueryableIndexTopN(Blackhole blackhole)

{

-Sequence> queryResult = topNRunner.run(

-QueryPlus.wrap(topNQuery),

-Maps.newHashMap()

-);

+Sequence> queryResult =

topNRunner.run(QueryPlus.wrap(topNQuery), new HashMap<>());

List> results = queryResult.toList();

for (Result result : results) {

@@ -446,7 +443,7 @@ public void queryMultiQueryableIndexTimeseries(Blackhole

blackhole)

{

Sequence> queryResult = timeseriesRunner.run(

QueryPlus.wrap(timeseriesQuery),

-Maps.newHashMap()

+new HashMap<>()

);

List> results = queryResult.toList();

diff --git

a/indexing-service/src/main/java/io/druid/indexing/common/task/batch/parallel/ParallelIndexSupervisorTask.java

b/indexing-service/src/main/java/io/druid/indexing/common/task/batch/parallel/ParallelIndexSupervisorTask.java

index 438e0f1d81f..b0e0ed24714 100644

---

a/indexing-service/src/main/java/io/druid/indexing/common/task/batch/parallel/ParallelIndexSupervisorTask.java

+++

b/indexing-service/src/main/java/io/druid/indexing/common/task/batch/parallel/ParallelIndexSupervisorTask.java

@@ -268,7 +268,7 @@ private TaskStatus runParallel(TaskToolbox toolbox) throws

Exception

return TaskStatus.fromCode(getId(), runner.run());

}

- private TaskStatus runSequential(TaskToolbox toolbox) throws Exception

+ private TaskStatus runSequential(TaskToolbox toolbox)

{

return new IndexTask(

getId(),

diff --git

a/indexing-service/src/test/java/io/druid/indexing/overlord/autoscaling/EC2AutoScalerTest.java

b/indexing-service/src/test/java/io/druid/indexing/overlord/autoscaling/EC2AutoScalerTest.java

index d34c9660632..68daf7f762b 100644

---

a/indexing-service/src/test/java/io/druid/indexing/overlord/autoscaling/EC2AutoScalerTest.java

+++

b/indexing-service/src/test/java/io/druid/indexing/overlord/autoscaling/EC2AutoScalerTest.java

@@

[GitHub] gianm commented on issue #6206: Fix NPE in KafkaSupervisor.checkpointTaskGroup

gianm commented on issue #6206: Fix NPE in KafkaSupervisor.checkpointTaskGroup URL: https://github.com/apache/incubator-druid/pull/6206#issuecomment-416085168 Merged master into this branch to get the fixes from #6236. Let's see how this goes. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] fjy closed pull request #6228: [Backport] Support projection after sorting in SQL

fjy closed pull request #6228: [Backport] Support projection after sorting in

SQL

URL: https://github.com/apache/incubator-druid/pull/6228

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git

a/sql/src/main/java/io/druid/sql/calcite/aggregation/Aggregation.java

b/sql/src/main/java/io/druid/sql/calcite/aggregation/Aggregation.java

index 2532c8d7f82..09436b96e9d 100644

--- a/sql/src/main/java/io/druid/sql/calcite/aggregation/Aggregation.java

+++ b/sql/src/main/java/io/druid/sql/calcite/aggregation/Aggregation.java

@@ -36,6 +36,7 @@

import io.druid.sql.calcite.table.RowSignature;

import javax.annotation.Nullable;

+import java.util.Collections;

import java.util.List;

import java.util.Objects;

import java.util.Set;

@@ -112,7 +113,7 @@ public static Aggregation create(final AggregatorFactory

aggregatorFactory)

public static Aggregation create(final PostAggregator postAggregator)

{

-return new Aggregation(ImmutableList.of(), ImmutableList.of(),

postAggregator);

+return new Aggregation(Collections.emptyList(), Collections.emptyList(),

postAggregator);

}

public static Aggregation create(

diff --git a/sql/src/main/java/io/druid/sql/calcite/rel/DruidQuery.java

b/sql/src/main/java/io/druid/sql/calcite/rel/DruidQuery.java

index bca4481992f..5503f50adf9 100644

--- a/sql/src/main/java/io/druid/sql/calcite/rel/DruidQuery.java

+++ b/sql/src/main/java/io/druid/sql/calcite/rel/DruidQuery.java

@@ -89,6 +89,7 @@

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

+import java.util.OptionalInt;

import java.util.TreeSet;

import java.util.stream.Collectors;

@@ -105,9 +106,11 @@

private final DimFilter filter;

private final SelectProjection selectProjection;

private final Grouping grouping;

+ private final SortProject sortProject;

+ private final DefaultLimitSpec limitSpec;

private final RowSignature outputRowSignature;

private final RelDataType outputRowType;

- private final DefaultLimitSpec limitSpec;

+

private final Query query;

public DruidQuery(

@@ -128,15 +131,22 @@ public DruidQuery(

this.selectProjection = computeSelectProjection(partialQuery,

plannerContext, sourceRowSignature);

this.grouping = computeGrouping(partialQuery, plannerContext,

sourceRowSignature, rexBuilder);

+final RowSignature sortingInputRowSignature;

+

if (this.selectProjection != null) {

- this.outputRowSignature = this.selectProjection.getOutputRowSignature();

+ sortingInputRowSignature = this.selectProjection.getOutputRowSignature();

} else if (this.grouping != null) {

- this.outputRowSignature = this.grouping.getOutputRowSignature();

+ sortingInputRowSignature = this.grouping.getOutputRowSignature();

} else {

- this.outputRowSignature = sourceRowSignature;

+ sortingInputRowSignature = sourceRowSignature;

}

-this.limitSpec = computeLimitSpec(partialQuery, this.outputRowSignature);

+this.sortProject = computeSortProject(partialQuery, plannerContext,

sortingInputRowSignature, grouping);

+

+// outputRowSignature is used only for scan and select query, and thus

sort and grouping must be null

+this.outputRowSignature = sortProject == null ? sortingInputRowSignature :

sortProject.getOutputRowSignature();

+

+this.limitSpec = computeLimitSpec(partialQuery, sortingInputRowSignature);

this.query = computeQuery();

}

@@ -235,7 +245,7 @@ private static Grouping computeGrouping(

)

{

final Aggregate aggregate = partialQuery.getAggregate();

-final Project postProject = partialQuery.getPostProject();

+final Project aggregateProject = partialQuery.getAggregateProject();

if (aggregate == null) {

return null;

@@ -265,49 +275,27 @@ private static Grouping computeGrouping(

plannerContext

);

-if (postProject == null) {

+if (aggregateProject == null) {

return Grouping.create(dimensions, aggregations, havingFilter,

aggregateRowSignature);

} else {

- final List rowOrder = new ArrayList<>();

-

- int outputNameCounter = 0;

- for (final RexNode postAggregatorRexNode : postProject.getChildExps()) {

-// Attempt to convert to PostAggregator.

-final DruidExpression postAggregatorExpression =

Expressions.toDruidExpression(

-plannerContext,

-aggregateRowSignature,

-postAggregatorRexNode

-);

-

-if (postAggregatorExpression == null) {

- throw new CannotBuildQueryException(postProject,

postAggregatorRexNode);

-}

-

-if (postAggregatorDirectColumnIsOk(aggregateRowSignature,

postAggregatorExpression, postAggregatorRexNode)) {

- // Direct column access, without any type cast as far as

[GitHub] fjy closed pull request #6230: [Backport] Fix four bugs with numeric dimension output types.

fjy closed pull request #6230: [Backport] Fix four bugs with numeric dimension

output types.

URL: https://github.com/apache/incubator-druid/pull/6230

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/processing/src/main/java/io/druid/query/groupby/GroupByQuery.java

b/processing/src/main/java/io/druid/query/groupby/GroupByQuery.java

index 1f9b45e62eb..7d3dbc0844b 100644

--- a/processing/src/main/java/io/druid/query/groupby/GroupByQuery.java

+++ b/processing/src/main/java/io/druid/query/groupby/GroupByQuery.java

@@ -58,6 +58,7 @@

import io.druid.query.ordering.StringComparators;

import io.druid.query.spec.LegacySegmentSpec;

import io.druid.query.spec.QuerySegmentSpec;

+import io.druid.segment.DimensionHandlerUtils;

import io.druid.segment.VirtualColumn;

import io.druid.segment.VirtualColumns;

import io.druid.segment.column.Column;

@@ -377,7 +378,7 @@ public boolean determineApplyLimitPushDown()

final List orderedFieldNames = new ArrayList<>();

final Set dimsInOrderBy = new HashSet<>();

final List needsReverseList = new ArrayList<>();

-final List isNumericField = new ArrayList<>();

+final List dimensionTypes = new ArrayList<>();

final List comparators = new ArrayList<>();

for (OrderByColumnSpec orderSpec : limitSpec.getColumns()) {

@@ -389,7 +390,7 @@ public boolean determineApplyLimitPushDown()

dimsInOrderBy.add(dimIndex);

needsReverseList.add(needsReverse);

final ValueType type = dimensions.get(dimIndex).getOutputType();

-isNumericField.add(ValueType.isNumeric(type));

+dimensionTypes.add(type);

comparators.add(orderSpec.getDimensionComparator());

}

}

@@ -399,7 +400,7 @@ public boolean determineApplyLimitPushDown()

orderedFieldNames.add(dimensions.get(i).getOutputName());

needsReverseList.add(false);

final ValueType type = dimensions.get(i).getOutputType();

-isNumericField.add(ValueType.isNumeric(type));

+dimensionTypes.add(type);

comparators.add(StringComparators.LEXICOGRAPHIC);

}

}

@@ -416,7 +417,7 @@ public int compare(Row lhs, Row rhs)

return compareDimsForLimitPushDown(

orderedFieldNames,

needsReverseList,

- isNumericField,

+ dimensionTypes,

comparators,

lhs,

rhs

@@ -434,7 +435,7 @@ public int compare(Row lhs, Row rhs)

final int cmp = compareDimsForLimitPushDown(

orderedFieldNames,

needsReverseList,

- isNumericField,

+ dimensionTypes,

comparators,

lhs,

rhs

@@ -463,7 +464,7 @@ public int compare(Row lhs, Row rhs)

return compareDimsForLimitPushDown(

orderedFieldNames,

needsReverseList,

- isNumericField,

+ dimensionTypes,

comparators,

lhs,

rhs

@@ -530,28 +531,12 @@ public int compare(Row lhs, Row rhs)

private static int compareDims(List dimensions, Row lhs, Row

rhs)

{

for (DimensionSpec dimension : dimensions) {

- final int dimCompare;

- if (dimension.getOutputType() == ValueType.LONG) {

-dimCompare = Long.compare(

-((Number) lhs.getRaw(dimension.getOutputName())).longValue(),

-((Number) rhs.getRaw(dimension.getOutputName())).longValue()

-);

- } else if (dimension.getOutputType() == ValueType.FLOAT) {

-dimCompare = Float.compare(

-((Number) lhs.getRaw(dimension.getOutputName())).floatValue(),

-((Number) rhs.getRaw(dimension.getOutputName())).floatValue()

-);

- } else if (dimension.getOutputType() == ValueType.DOUBLE) {

-dimCompare = Double.compare(

-((Number) lhs.getRaw(dimension.getOutputName())).doubleValue(),

-((Number) rhs.getRaw(dimension.getOutputName())).doubleValue()

-);

- } else {

-dimCompare = ((Ordering) Comparators.naturalNullsFirst()).compare(

-lhs.getRaw(dimension.getOutputName()),

-rhs.getRaw(dimension.getOutputName())

-);

- }

+ //noinspection unchecked

+ final int dimCompare = DimensionHandlerUtils.compareObjectsAsType(

+ lhs.getRaw(dimension.getOutputName()),

+ rhs.getRaw(dimension.getOutputName()),

+ dimension.getOutputType()

+ );

if (dimCompare != 0) {

return dimCompare;

}

@@ -563,7 +548,7 @@ private static int compareDims(List

dimensions, Row lhs, Row rhs)

private static int

[GitHub] gianm commented on issue #6255: Heavy GC activities after upgrading to 0.12

gianm commented on issue #6255: Heavy GC activities after upgrading to 0.12 URL: https://github.com/apache/incubator-druid/issues/6255#issuecomment-416616545 Great catch! Btw, I think you meant to link to #4704 instead of #4707. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

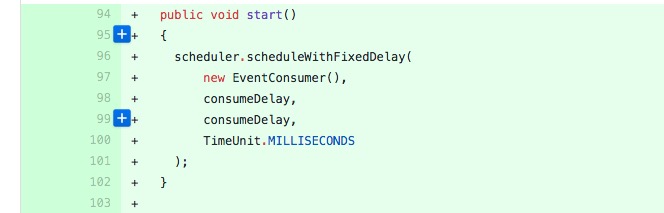

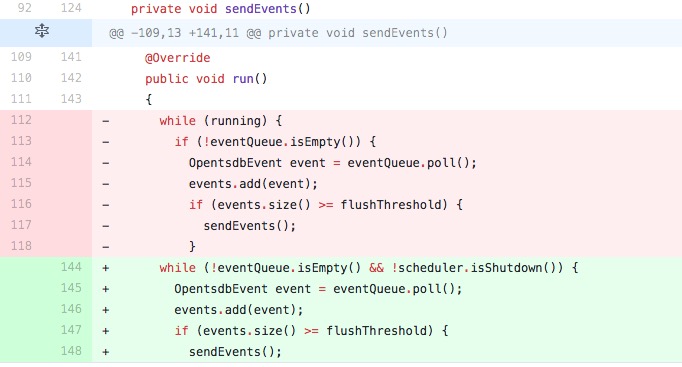

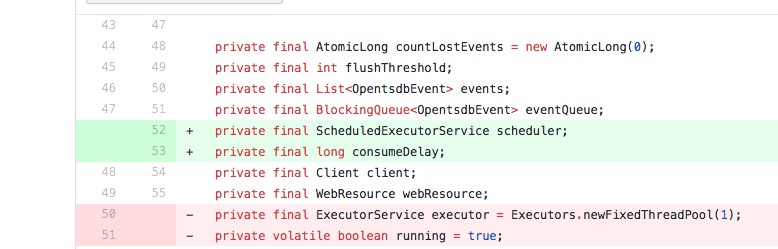

[GitHub] gianm commented on a change in pull request #6254: fix opentsdb emitter occupy 100%(#6247)

gianm commented on a change in pull request #6254: fix opentsdb emitter occupy

100%(#6247)

URL: https://github.com/apache/incubator-druid/pull/6254#discussion_r213366682

##

File path:

extensions-contrib/opentsdb-emitter/src/main/java/io/druid/emitter/opentsdb/OpentsdbSender.java

##

@@ -110,12 +119,15 @@ private void sendEvents()

public void run()

{

while (running) {

-if (!eventQueue.isEmpty()) {

- OpentsdbEvent event = eventQueue.poll();

+try {

+ OpentsdbEvent event = eventQueue.take();

events.add(event);

- if (events.size() >= flushThreshold) {

-sendEvents();

- }

+}

+catch (InterruptedException e) {

+ log.error(e, "consumer take event failed!");

+}

+if (events.size() >= flushThreshold) {

+ sendEvents();

Review comment:

`take` will block until an item becomes available, so I think it is okay.

This function will spend most of its time waiting on `take` rather than in a

spin loop.

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gaodayue commented on issue #6255: Heavy GC activities after upgrading to 0.12

gaodayue commented on issue #6255: Heavy GC activities after upgrading to 0.12 URL: https://github.com/apache/incubator-druid/issues/6255#issuecomment-416583814 In order to find out which class puts too much pressure on garbage collector, I captured flight record (60s) before and after upgrade and compare them. In our cluster, each node is constantly serving ~30 queries per second, so the workload should be the same before and after. Here are the clues I found from the record. First, TLAB and non-TLAB allocation rate are 64MB/s and 284KB/s before upgrade, but 107MB/s and 100MB/s after upgrade. That is non-TLAB allocated memory is 362 times more. https://user-images.githubusercontent.com/1198446/44724021-31e35100-ab04-11e8-81d8-04057c744e5f.png;> https://user-images.githubusercontent.com/1198446/44724030-37d93200-ab04-11e8-9f3f-cdc6a0ada1eb.png;> Second, nearly 99% of the memory is allocated inside `RowBasedKeySerde`'s constructor. It's initializing forward and reverse dictionary. https://user-images.githubusercontent.com/1198446/44724736-2f81f680-ab06-11e8-898f-2d58848a0111.png;> From [the code](https://github.com/apache/incubator-druid/blob/0.12.0/processing/src/main/java/io/druid/query/groupby/epinephelinae/RowBasedGrouperHelper.java#L993) we can see, the initial dictionary capacity is 1. So every SpillGrouper needs to allocate at least two 1-sized dictionary. We have `processing.numThreads` set to 31 and QPS around 30, therefore each second we're creating 1860's 1-sized dictionary. Most of our groupby queries returns just a few rows, set initial dictionary size to 1 is too big. This bug was introduced by #4707. Before 0.12, [initial dictionary is empty](https://github.com/apache/incubator-druid/blob/0.11.0/processing/src/main/java/io/druid/query/groupby/epinephelinae/RowBasedGrouperHelper.java#L907). #4707 changes it to 1. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gaodayue opened a new pull request #6256: RowBasedKeySerde should use empty dictionary in constructor

gaodayue opened a new pull request #6256: RowBasedKeySerde should use empty dictionary in constructor URL: https://github.com/apache/incubator-druid/pull/6256 Fixes #6255 This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gianm commented on issue #6253: Multiple datasources with multiple topic in one spec file

gianm commented on issue #6253: Multiple datasources with multiple topic in one spec file URL: https://github.com/apache/incubator-druid/issues/6253#issuecomment-416635332 Hi @Harish346, currently the model is 1 supervisor and 1 set of tasks per datasource. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] QiuMM commented on a change in pull request #6254: fix opentsdb emitter occupy 100%(#6247)

QiuMM commented on a change in pull request #6254: fix opentsdb emitter occupy

100%(#6247)

URL: https://github.com/apache/incubator-druid/pull/6254#discussion_r213365894

##

File path:

extensions-contrib/opentsdb-emitter/src/main/java/io/druid/emitter/opentsdb/OpentsdbSender.java

##

@@ -110,12 +119,15 @@ private void sendEvents()

public void run()

{

while (running) {

-if (!eventQueue.isEmpty()) {

- OpentsdbEvent event = eventQueue.poll();

+try {

+ OpentsdbEvent event = eventQueue.take();

events.add(event);

- if (events.size() >= flushThreshold) {

-sendEvents();

- }

+}

+catch (InterruptedException e) {

+ log.error(e, "consumer take event failed!");

+}

+if (events.size() >= flushThreshold) {

+ sendEvents();

Review comment:

The while loop still always be running! Problem have not been solved.

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] QiuMM commented on issue #6254: fix opentsdb emitter occupy 100%(#6247)

QiuMM commented on issue #6254: fix opentsdb emitter occupy 100%(#6247) URL: https://github.com/apache/incubator-druid/pull/6254#issuecomment-416634103 It have been solved in #6251 . This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] QiuMM commented on a change in pull request #6254: fix opentsdb emitter occupy 100%(#6247)

QiuMM commented on a change in pull request #6254: fix opentsdb emitter occupy

100%(#6247)

URL: https://github.com/apache/incubator-druid/pull/6254#discussion_r213372654

##

File path:

extensions-contrib/opentsdb-emitter/src/main/java/io/druid/emitter/opentsdb/OpentsdbSender.java

##

@@ -110,12 +119,15 @@ private void sendEvents()

public void run()

{

while (running) {

-if (!eventQueue.isEmpty()) {

- OpentsdbEvent event = eventQueue.poll();

+try {

+ OpentsdbEvent event = eventQueue.take();

events.add(event);

- if (events.size() >= flushThreshold) {

-sendEvents();

- }

+}

+catch (InterruptedException e) {

+ log.error(e, "consumer take event failed!");

+}

+if (events.size() >= flushThreshold) {

+ sendEvents();

Review comment:

Sorry, you are right, but I do not think this is a elegant solution.

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug commented on issue #6219: Add optional `name` to top level of FilteredAggregatorFactory

himanshug commented on issue #6219: Add optional `name` to top level of FilteredAggregatorFactory URL: https://github.com/apache/incubator-druid/pull/6219#issuecomment-416659072 @drcrallen I added the `compatibility` label to this PR for the catch that you [mentioned](https://github.com/apache/incubator-druid/pull/6219#discussion_r212738542). It should be covered in the release notes. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] jihoonson commented on issue #6124: KafkaIndexTask can delete published segments on restart

jihoonson commented on issue #6124: KafkaIndexTask can delete published segments on restart URL: https://github.com/apache/incubator-druid/issues/6124#issuecomment-416669984 @gianm I think it makes sense to close this issue since the title is about kafka tasks deleting pushed segments. However, the tasks would still fail in the same scenario which can make users confused. Do you think we need to file this in another issue? This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug commented on issue #6212: fix TaskQueue-HRTR deadlock

himanshug commented on issue #6212: fix TaskQueue-HRTR deadlock URL: https://github.com/apache/incubator-druid/pull/6212#issuecomment-416659850 @gianm sure, thanks. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug closed issue #2406: [proposal]datasketches lib based quantiles/histogram support in druid

himanshug closed issue #2406: [proposal]datasketches lib based quantiles/histogram support in druid URL: https://github.com/apache/incubator-druid/issues/2406 This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gianm commented on issue #6124: KafkaIndexTask can delete published segments on restart

gianm commented on issue #6124: KafkaIndexTask can delete published segments on restart URL: https://github.com/apache/incubator-druid/issues/6124#issuecomment-416667501 Got it, I think we can close this then. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug commented on a change in pull request #5280: add "subtotalsSpec" attribute to groupBy query

himanshug commented on a change in pull request #5280: add "subtotalsSpec"

attribute to groupBy query

URL: https://github.com/apache/incubator-druid/pull/5280#discussion_r213413936

##

File path: docs/content/querying/groupbyquery.md

##

@@ -113,6 +114,94 @@ improve performance.

See [Multi-value dimensions](multi-value-dimensions.html) for more details.

+### More on subtotalsSpec

+you can have a groupBy query that looks something like below...

+

+```json

+{

+"type": "groupBy",

+ ...

+ ...

+"dimenstions": [

+ {

+ "type" : "default",

+ "dimension" : "d1col",

+ "outputName": "D1"

+ },

+ {

+ "type" : "extraction",

+ "dimension" : "d2col",

+ "outputName" : "D2",

+ "extractionFn" : extraction_func

+ },

+ {

+ "type":"lookup",

+ "dimension":"d3col",

+ "outputName":"D3",

+ "name":"my_lookup"

+ }

+],

+...

+...

+"subtotalsSpec":[ ["D1", "D2", D3"], ["D1", "D3"], ["D3"]],

+..

+

+}

+```

+

+Response returned would be equivalent to concatenating result of 3 groupBy

queries with "dimensions" field being ["D1", "D2", D3"], ["D1", "D3"] and

["D3"] with appropriate `DimensionSpec` json blob as used in above query.

+Response for above query would look something like below...

+

+```json

+[

+ {

+"version" : "v1",

+"timestamp" : "t1",

+"event" : { "D1": "..", "D2": "..", "D3": ".." }

+}

+ },

+{

+"version" : "v1",

+"timestamp" : "t2",

+"event" : { "D1": "..", "D2": "..", "D3": ".." }

+}

+ },

+ ...

+ ...

+

+ {

+"version" : "v1",

+"timestamp" : "t1",

+"event" : { "D1": "..", "D3": ".." }

+}

+ },

+{

+"version" : "v1",

+"timestamp" : "t2",

+"event" : { "D1": "..", "D3": ".." }

+}

+ },

+ ...

+ ...

+

+ {

+"version" : "v1",

+"timestamp" : "t1",

+"event" : { "D3": ".." }

+}

+ },

+{

+"version" : "v1",

+"timestamp" : "t2",

+"event" : { "D3": ".." }

+}

+ },

+...

+]

+```

+

+Note that "subtotalsSpec" must contain subsets of "outputName" from various

`DimensionSpec` json blobs in `dimensions` attribute and also ordering of

dimensions inside subtotal spec must be same as that inside top level

"dimensions" attribute e.g. ["D2", "D1"] subtotal spec is not valid as it is

not in same order.

Review comment:

deleted

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug commented on a change in pull request #5280: add "subtotalsSpec" attribute to groupBy query

himanshug commented on a change in pull request #5280: add "subtotalsSpec" attribute to groupBy query URL: https://github.com/apache/incubator-druid/pull/5280#discussion_r213413907 ## File path: docs/content/querying/groupbyquery.md ## @@ -113,6 +114,94 @@ improve performance. See [Multi-value dimensions](multi-value-dimensions.html) for more details. +### More on subtotalsSpec +you can have a groupBy query that looks something like below... Review comment: thanks for writing above, added/replaced. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gianm closed issue #6124: KafkaIndexTask can delete published segments on restart

gianm closed issue #6124: KafkaIndexTask can delete published segments on restart URL: https://github.com/apache/incubator-druid/issues/6124 This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] jihoonson commented on issue #6256: RowBasedKeySerde should use empty dictionary in constructor

jihoonson commented on issue #6256: RowBasedKeySerde should use empty dictionary in constructor URL: https://github.com/apache/incubator-druid/pull/6256#issuecomment-416668830 Hi @gaodayue, I left a comment on https://github.com/apache/incubator-druid/issues/6255. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] jihoonson commented on issue #6255: Heavy GC activities after upgrading to 0.12

jihoonson commented on issue #6255: Heavy GC activities after upgrading to 0.12 URL: https://github.com/apache/incubator-druid/issues/6255#issuecomment-416668676 @gaodayue thank you for catching this. Could you share some more details of your use case? I wonder especially how large the dictionary was. I set the initial size of dictionary map to 1 because I thought `SpillingGrouper` isn't supposed to be used for small dictionary. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug commented on issue #6255: Heavy GC activities after upgrading to 0.12

himanshug commented on issue #6255: Heavy GC activities after upgrading to 0.12 URL: https://github.com/apache/incubator-druid/issues/6255#issuecomment-416662430 #4704 did run the benchmarks for performance, unfortunately benchmarks don't report memory allocation or else it would be noticed while #4704 was under development. it would be nice if benchmarks could report some stats on memory as well. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] QiuMM edited a comment on issue #6254: fix opentsdb emitter occupy 100%(#6247)

QiuMM edited a comment on issue #6254: fix opentsdb emitter occupy 100%(#6247) URL: https://github.com/apache/incubator-druid/pull/6254#issuecomment-416634103 I have opened #6251 to solve same issue. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug commented on a change in pull request #5280: add "subtotalsSpec" attribute to groupBy query

himanshug commented on a change in pull request #5280: add "subtotalsSpec"

attribute to groupBy query

URL: https://github.com/apache/incubator-druid/pull/5280#discussion_r213413800

##

File path: docs/content/querying/groupbyquery.md

##

@@ -113,6 +114,94 @@ improve performance.

See [Multi-value dimensions](multi-value-dimensions.html) for more details.

+### More on subtotalsSpec

+you can have a groupBy query that looks something like below...

+

+```json

+{

+"type": "groupBy",

+ ...

+ ...

+"dimenstions": [

Review comment:

:)

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug commented on a change in pull request #5280: add "subtotalsSpec" attribute to groupBy query

himanshug commented on a change in pull request #5280: add "subtotalsSpec" attribute to groupBy query URL: https://github.com/apache/incubator-druid/pull/5280#discussion_r213413760 ## File path: docs/content/querying/groupbyquery.md ## @@ -70,6 +70,7 @@ There are 11 main parts to a groupBy query: |aggregations|See [Aggregations](../querying/aggregations.html)|no| |postAggregations|See [Post Aggregations](../querying/post-aggregations.html)|no| |intervals|A JSON Object representing ISO-8601 Intervals. This defines the time ranges to run the query over.|yes| +|subtotalsSpec| A JSON array of arrays to return additional result sets for groupings of subsets of top level `dimensions`. It is described later in more detail.|no| Review comment: sure This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] b-slim commented on a change in pull request #6251: fix opentsdb emitter always be running

b-slim commented on a change in pull request #6251: fix opentsdb emitter always be running URL: https://github.com/apache/incubator-druid/pull/6251#discussion_r213459729 ## File path: docs/content/development/extensions-contrib/opentsdb-emitter.md ## @@ -18,10 +18,11 @@ All the configuration parameters for the opentsdb emitter are under `druid.emitt ||---|-|---| |`druid.emitter.opentsdb.host`|The host of the OpenTSDB server.|yes|none| |`druid.emitter.opentsdb.port`|The port of the OpenTSDB server.|yes|none| -|`druid.emitter.opentsdb.connectionTimeout`|Connection timeout(in milliseconds).|no|2000| -|`druid.emitter.opentsdb.readTimeout`|Read timeout(in milliseconds).|no|2000| +|`druid.emitter.opentsdb.connectionTimeout`|`Jersey client` connection timeout(in milliseconds).|no|2000| +|`druid.emitter.opentsdb.readTimeout`|`Jersey client` read timeout(in milliseconds).|no|2000| |`druid.emitter.opentsdb.flushThreshold`|Queue flushing threshold.(Events will be sent as one batch)|no|100| |`druid.emitter.opentsdb.maxQueueSize`|Maximum size of the queue used to buffer events.|no|1000| +|`druid.emitter.opentsdb.consumeDelay`|Queue consuming delay(in milliseconds).|no|1| Review comment: can we add a short description about what this means like what happen if increased or decreased ? and maybe why 10second is a good default? This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gianm opened a new pull request #6257: SQL: Fix post-aggregator naming logic for sort-project. (#6250)

gianm opened a new pull request #6257: SQL: Fix post-aggregator naming logic

for sort-project. (#6250)

URL: https://github.com/apache/incubator-druid/pull/6257

The old code assumes that post-aggregator prefixes are one character

long followed by numbers. This isn't always true (we may pad with

underscores to avoid conflicts). Instead, the new code uses a different

base prefix for sort-project postaggregators ("s" instead of "p") and

uses the usual Calcites.findUnusedPrefix function to avoid conflicts.

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] jon-wei commented on a change in pull request #6258: Don't let catch/finally suppress main exception in IncrementalPublishingKafkaIndexTaskRunner

jon-wei commented on a change in pull request #6258: Don't let catch/finally

suppress main exception in IncrementalPublishingKafkaIndexTaskRunner

URL: https://github.com/apache/incubator-druid/pull/6258#discussion_r213468683

##

File path:

extensions-core/kafka-indexing-service/src/main/java/io/druid/indexing/kafka/IncrementalPublishingKafkaIndexTaskRunner.java

##

@@ -706,21 +727,38 @@ public void onFailure(Throwable t)

}

catch (Exception e) {

// (3) catch all other exceptions thrown for the whole ingestion steps

including the final publishing.

- Futures.allAsList(publishWaitList).cancel(true);

- Futures.allAsList(handOffWaitList).cancel(true);

- appenderator.closeNow();

+ caughtExceptionOuter = e;

+ try {

+Futures.allAsList(publishWaitList).cancel(true);

+Futures.allAsList(handOffWaitList).cancel(true);

+if (appenderator != null) {

+ appenderator.closeNow();

+}

+ }

+ catch (Exception e2) {

+e.addSuppressed(e2);

+ }

throw e;

}

finally {

- if (driver != null) {

-driver.close();

+ try {

+if (driver != null) {

+ driver.close();

+}

+if (chatHandlerProvider.isPresent()) {

+ chatHandlerProvider.get().unregister(task.getId());

+}

+

+toolbox.getDruidNodeAnnouncer().unannounce(discoveryDruidNode);

+toolbox.getDataSegmentServerAnnouncer().unannounce();

}

- if (chatHandlerProvider.isPresent()) {

-chatHandlerProvider.get().unregister(task.getId());

+ catch (Exception e) {

+if (caughtExceptionOuter != null) {

+ caughtExceptionOuter.addSuppressed(e);

Review comment:

it's in the finally block, so if caughtExceptionOuter is not null it was

already thrown earlier

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] jihoonson commented on a change in pull request #6258: Don't let catch/finally suppress main exception in IncrementalPublishingKafkaIndexTaskRunner

jihoonson commented on a change in pull request #6258: Don't let catch/finally

suppress main exception in IncrementalPublishingKafkaIndexTaskRunner

URL: https://github.com/apache/incubator-druid/pull/6258#discussion_r213467270

##

File path:

extensions-core/kafka-indexing-service/src/main/java/io/druid/indexing/kafka/IncrementalPublishingKafkaIndexTaskRunner.java

##

@@ -706,21 +727,38 @@ public void onFailure(Throwable t)

}

catch (Exception e) {

// (3) catch all other exceptions thrown for the whole ingestion steps

including the final publishing.

- Futures.allAsList(publishWaitList).cancel(true);

- Futures.allAsList(handOffWaitList).cancel(true);

- appenderator.closeNow();

+ caughtExceptionOuter = e;

+ try {

+Futures.allAsList(publishWaitList).cancel(true);

+Futures.allAsList(handOffWaitList).cancel(true);

+if (appenderator != null) {

+ appenderator.closeNow();

+}

+ }

+ catch (Exception e2) {

+e.addSuppressed(e2);

+ }

throw e;

}

finally {

- if (driver != null) {

-driver.close();

+ try {

+if (driver != null) {

+ driver.close();

+}

+if (chatHandlerProvider.isPresent()) {

+ chatHandlerProvider.get().unregister(task.getId());

+}

+

+toolbox.getDruidNodeAnnouncer().unannounce(discoveryDruidNode);

+toolbox.getDataSegmentServerAnnouncer().unannounce();

}

- if (chatHandlerProvider.isPresent()) {

-chatHandlerProvider.get().unregister(task.getId());

+ catch (Exception e) {

+if (caughtExceptionOuter != null) {

+ caughtExceptionOuter.addSuppressed(e);

Review comment:

`throw caughtExceptionOuter`?

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] jihoonson commented on a change in pull request #6258: Don't let catch/finally suppress main exception in IncrementalPublishingKafkaIndexTaskRunner

jihoonson commented on a change in pull request #6258: Don't let catch/finally

suppress main exception in IncrementalPublishingKafkaIndexTaskRunner

URL: https://github.com/apache/incubator-druid/pull/6258#discussion_r213467072

##

File path:

extensions-core/kafka-indexing-service/src/main/java/io/druid/indexing/kafka/IncrementalPublishingKafkaIndexTaskRunner.java

##

@@ -616,12 +618,22 @@ public void onFailure(Throwable t)

}

catch (Exception e) {

// (1) catch all exceptions while reading from kafka

+caughtExceptionInner = e;

log.error(e, "Encountered exception in run() before persisting.");

throw e;

}

finally {

log.info("Persisting all pending data");

-driver.persist(committerSupplier.get()); // persist pending data

+try {

+ driver.persist(committerSupplier.get()); // persist pending data

+}

+catch (Exception e) {

+ if (caughtExceptionInner != null) {

+caughtExceptionInner.addSuppressed(e);

Review comment:

`throw caughtExceptionInner`?

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] himanshug commented on a change in pull request #5872: add method getRequiredColumns for DimFilter

himanshug commented on a change in pull request #5872: add method getRequiredColumns for DimFilter URL: https://github.com/apache/incubator-druid/pull/5872#discussion_r213452493 ## File path: processing/src/main/java/io/druid/query/filter/DimFilter.java ## @@ -75,4 +77,9 @@ * determine for this DimFilter. */ RangeSet getDimensionRangeSet(String dimension); + + /** + * @return a HashSet that represents all columns' name which the DimFilter required to do filter. + */ + HashSet getRequiredColumns(); Review comment: Can we have the return type be `Set` instead so that single column cases can just return `ImmutableSet.of(col)` ? This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] gianm commented on issue #6254: fix opentsdb emitter occupy 100%(#6247)

gianm commented on issue #6254: fix opentsdb emitter occupy 100%(#6247) URL: https://github.com/apache/incubator-druid/pull/6254#issuecomment-416729102 What are the pros and cons of this approach vs. the approach in #6251? (Sorry, I haven't had a chance to study how the OpenTSDB emitter works.) This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] jon-wei opened a new pull request #6258: Don't let catch/finally suppress main exception in IncrementalPublishingKafkaIndexTaskRunner