[GitHub] [airflow] bhavaniravi commented on issue #9625: Git-sync retry for worker pods

bhavaniravi commented on issue #9625: URL: https://github.com/apache/airflow/issues/9625#issuecomment-652794305 On approval as a valid feature, I would love to work on this :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] bhavaniravi opened a new issue #9625: Git-sync retry for worker pods

bhavaniravi opened a new issue #9625: URL: https://github.com/apache/airflow/issues/9625 **Description** When Airflow runs with KubernetesExecutor and `git-mode`, it needs a `GIT_SYNC_MAX_SYNC_FAILURES` option. **Use case / motivation** When a task runs with `git-mode` and KubernetesExecutor against a self-hosted Bitbucket or GitLab, the pod fails if it cannot connect to the code hosting service, which marks the task as failed. While `GIT_SYNC_MAX_SYNC_FAILURES` can be set as suggested by the [Kubernetes git-sync repo](https://github.com/kubernetes/git-sync), there is no option to set it for Airflow workers via config.
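The request in #9625 amounts to passing one more environment variable to the git-sync container of each worker pod. A minimal sketch of what that could look like follows. The helper `git_sync_env` and its `max_sync_failures` parameter are hypothetical, not existing Airflow code or configuration; only the `GIT_SYNC_*` variable names come from the git-sync project, and the value is passed through unchanged.

```python
def git_sync_env(repo, branch="master", max_sync_failures=0):
    """Build a Kubernetes-style env list for a git-sync container.

    Hypothetical helper for illustration; Airflow has no such function.
    """
    return [
        {"name": "GIT_SYNC_REPO", "value": repo},
        {"name": "GIT_SYNC_BRANCH", "value": branch},
        # The option requested in the issue, passed through as a string.
        {"name": "GIT_SYNC_MAX_SYNC_FAILURES", "value": str(max_sync_failures)},
    ]


env = git_sync_env("https://gitlab.example.com/team/dags.git", max_sync_failures=5)
for entry in env:
    print(entry["name"], "=", entry["value"])
```

How the value would be exposed in `airflow.cfg` is left open here, since the issue does not propose a config key name.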

[airflow] tag nightly-master updated (87d83a1 -> 63a8c79)

This is an automated email from the ASF dual-hosted git repository.

github-bot pushed a change to tag nightly-master
in repository https://gitbox.apache.org/repos/asf/airflow.git.

*** WARNING: tag nightly-master was modified! ***

from 87d83a1 (commit)
to 63a8c79 (commit)
from 87d83a1 Fix regression in SQLThresholdCheckOperator (#9312)
add 58edc38 Fix typo in the word 'available' (#9599)
add 7a54418 Move XCom tests to tests/models/test_xcom.py (#9601)
add 2d3677f Fix typo in tutorial.rst (#9605)
add 8bd15ef Switches to Helm Chart for Kubernetes tests (#9468)
add f3e1f9a Update Breeze documentation (#9608)
add 48a8316 Fix quarantined tests - TestCliWebServer (#9598)
add 65855e5 Add docs to change Colors on the Webserver (#9607)
add 9e305d6 Change default auth for experimental backend to deny_all (#9611)
add a3a52c7 Removes importlib usage - it's not needed (fails on Airflow 1.10) (#9613)
add 1655fa9 Restrict changing XCom values from the Webserver (#9614)
add 7ef7f58 Update docs about the change to default auth for experimental API (#9617)
add bc3f48c Change 'initiate' to 'initialize' in installation.rst (#9619)
add 05c88cb Replace old Variables View Screenshot with new (#9620)
add 63a8c79 Replace old SubDag zoom screenshot with new (#9621)

No new revisions were added by this update.

Summary of changes:
.github/workflows/ci.yml                           | 27 +-
BREEZE.rst                                         | 401 -
CI.rst                                             | 2 +-
Dockerfile                                         | 4 +
IMAGES.rst                                         | 3 +
TESTING.rst                                        | 67 ++--
UPDATING.md                                        | 21 ++
airflow/cli/commands/webserver_command.py          | 26 +-
airflow/config_templates/config.yml                | 6 +-
airflow/config_templates/default_airflow.cfg       | 6 +-
airflow/kubernetes/pod_launcher.py                 | 2 +-
.../cncf/kubernetes/operators/kubernetes_pod.py    | 5 +-
airflow/www/views.py                               | 4 +-
breeze                                             | 51 ++-
breeze-complete                                    | 14 +-
chart/README.md                                    | 5 +-
chart/requirements.lock                            | 4 +-
chart/templates/_helpers.yaml                      | 4 +-
chart/templates/configmap.yaml                     | 2 +
chart/templates/rbac/pod-launcher-role.yaml        | 2 +-
chart/templates/rbac/pod-launcher-rolebinding.yaml | 4 +-
docs/howto/customize-state-colors-ui.rst           | 70
docs/howto/index.rst                               | 1 +
docs/img/change-ui-colors/dags-page-new.png        | Bin 0 -> 483599 bytes
docs/img/change-ui-colors/dags-page-old.png        | Bin 0 -> 493009 bytes
docs/img/change-ui-colors/graph-view-new.png       | Bin 0 -> 56973 bytes
docs/img/change-ui-colors/graph-view-old.png       | Bin 0 -> 54884 bytes
docs/img/change-ui-colors/tree-view-new.png        | Bin 0 -> 36934 bytes
docs/img/change-ui-colors/tree-view-old.png        | Bin 0 -> 21601 bytes
docs/img/subdag_zoom.png                           | Bin 150185 -> 255915 bytes
docs/img/variable_hidden.png                       | Bin 154299 -> 121301 bytes
docs/installation.rst                              | 6 +-
docs/security.rst                                  | 18 +-
docs/tutorial.rst                                  | 2 +-
kubernetes_tests/test_kubernetes_executor.py       | 40 +-
requirements/requirements-python3.6.txt            | 16 +-
requirements/requirements-python3.7.txt            | 16 +-
requirements/requirements-python3.8.txt            | 16 +-
requirements/setup-3.6.md5                         | 2 +-
requirements/setup-3.7.md5                         | 2 +-
requirements/setup-3.8.md5                         | 2 +-
scripts/ci/ci_build_production_images.sh           | 25 --
scripts/ci/ci_count_changed_files.sh               | 2 +-
scripts/ci/ci_deploy_app_to_kubernetes.sh          | 16 +-
scripts/ci/ci_docs.sh                              | 2 +-
scripts/ci/ci_flake8.sh                            | 2 +-
scripts/ci/ci_generate_requirements.sh             | 2 +-
scripts/ci/ci_load_image_to_kind.sh                | 7 +-
scripts/ci/ci_mypy.sh                              | 2 +-
scripts/ci/ci_perform_kind_cluster_operation.sh    | 6 +-
scripts/ci/ci_prepare_backport_packages.sh         | 2 +-
scripts/ci/ci_prepare_backport_readme.sh           | 2 +-
scripts/ci/ci_pylint_main.sh                       | 2 +-
scripts/ci/ci_pylint_tests.sh                      | 2 +-
scripts/ci/ci_refresh_pylint_todo.sh               | 2 +-
scripts/ci/ci_run_airflow_testing.sh               | 2 +-

[GitHub] [airflow] houqp commented on pull request #9502: generate go client from openapi spec

houqp commented on pull request #9502: URL: https://github.com/apache/airflow/pull/9502#issuecomment-652739173 @potiuk @mik-laj github actions are passing now, ready for another round of review.

[GitHub] [airflow] houqp commented on a change in pull request #9502: generate go client from openapi spec

houqp commented on a change in pull request #9502:

URL: https://github.com/apache/airflow/pull/9502#discussion_r448700155

##

File path: chart/values.yaml

##

@@ -223,12 +223,12 @@ webserver:

extraNetworkPolicies: []

resources: {}

-# limits:

-# cpu: 100m

-# memory: 128Mi

-# requests:

-# cpu: 100m

-# memory: 128Mi

+ # limits:

Review comment:

fixed yaml lint warning reported in ci run

[GitHub] [airflow-client-go] houqp commented on a change in pull request #1: Add generated go client

houqp commented on a change in pull request #1: URL: https://github.com/apache/airflow-client-go/pull/1#discussion_r448693291 ## File path: README.md ## @@ -1 +1,62 @@ -# Airflow API Client for Go + +Airflow Go client += + +Go Airflow OpenAPI client generated from [openapi spec](https://github.com/apache/airflow/tree/master/clients). Review comment: @turbaszek I updated the link to point to `https://github.com/apache/airflow/blob/master/clients/gen/go.sh`, which will be a valid link once https://github.com/apache/airflow/pull/9502 gets merged. I think linking to the actual automation script might be better here, since there is no guarantee that future versions of the client will be generated from the same v1.yaml spec. It also gives users more context on how this client is actually generated, so it's easier for them to contribute changes back to the airflow repo.

[GitHub] [airflow] ephraimbuddy opened a new pull request #9624: Move StackdriverTaskHandler to the provider package

ephraimbuddy opened a new pull request #9624: URL: https://github.com/apache/airflow/pull/9624 --- This PR fixes one of the issues listed in #9386 The `StackdriverTaskHandler` class from `airflow.utils.log.stackdriver_task_handler` was moved to `airflow.providers.google.cloud.log.stackdriver_task_handler`. Make sure to mark the boxes below before creating PR: [x] - [ ] Description above provides context of the change - [ ] Unit tests coverage for changes (not needed for documentation changes) - [ ] Target Github ISSUE in description if exists - [ ] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)" - [ ] Relevant documentation is updated including usage instructions. - [ ] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example). --- In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed. In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x). In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md). Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information.

[GitHub] [airflow] ephraimbuddy opened a new pull request #9623: Move ElasticsearchTaskHandler to the provider package

ephraimbuddy opened a new pull request #9623: URL: https://github.com/apache/airflow/pull/9623 --- This PR fixes one of the issues listed in #9386 The `ElasticsearchTaskHandler` class from `airflow.utils.log.es_task_handler` was moved to `airflow.providers.elasticsearch.log.es_task_handler`. Make sure to mark the boxes below before creating PR: [x] - [ ] Description above provides context of the change - [ ] Unit tests coverage for changes (not needed for documentation changes) - [ ] Target Github ISSUE in description if exists - [ ] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)" - [ ] Relevant documentation is updated including usage instructions. - [ ] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

[GitHub] [airflow] kaxil opened a new pull request #9622: Fix docstrings in exceptions.py

kaxil opened a new pull request #9622: URL: https://github.com/apache/airflow/pull/9622 Fix incorrect docstrings --- Make sure to mark the boxes below before creating PR: [x] - [x] Description above provides context of the change - [x] Unit tests coverage for changes (not needed for documentation changes) - [x] Target Github ISSUE in description if exists - [x] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)" - [x] Relevant documentation is updated including usage instructions. - [ ] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

[airflow] 09/10: Add __repr__ for DagTag so tags display properly in /dagmodel/show (#8719)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit c99194a6d4bc4327061257aa249e9d50aebfaf1e

Author: Xiaodong DENG

AuthorDate: Tue May 5 20:32:18 2020 +0200

Add __repr__ for DagTag so tags display properly in /dagmodel/show (#8719)

(cherry picked from commit c717d12f47c604082afc106b7a4a1f71d91f73e2)

---

airflow/models/dag.py| 3 +++

tests/models/test_dag.py | 12 +++-

2 files changed, 14 insertions(+), 1 deletion(-)

diff --git a/airflow/models/dag.py b/airflow/models/dag.py

index e24c164..94c6d2e 100644

--- a/airflow/models/dag.py

+++ b/airflow/models/dag.py

@@ -1711,6 +1711,9 @@ class DagTag(Base):

     name = Column(String(100), primary_key=True)

     dag_id = Column(String(ID_LEN), ForeignKey('dag.dag_id'), primary_key=True)

+    def __repr__(self):

+        return self.name

+

 class DagModel(Base):

diff --git a/tests/models/test_dag.py b/tests/models/test_dag.py

index 5d9d05d..b711a8b 100644

--- a/tests/models/test_dag.py

+++ b/tests/models/test_dag.py

@@ -36,7 +36,7 @@ from mock import patch

from airflow import models, settings

from airflow.configuration import conf

from airflow.exceptions import AirflowException, AirflowDagCycleException

-from airflow.models import DAG, DagModel, TaskInstance as TI

+from airflow.models import DAG, DagModel, DagTag, TaskInstance as TI

from airflow.operators.bash_operator import BashOperator

from airflow.operators.dummy_operator import DummyOperator

from airflow.operators.subdag_operator import SubDagOperator

@@ -46,6 +46,7 @@ from airflow.utils.db import create_session

from airflow.utils.state import State

from airflow.utils.weight_rule import WeightRule

from tests.models import DEFAULT_DATE

+from tests.test_utils.db import clear_db_dags

class DagTest(unittest.TestCase):

@@ -657,6 +658,15 @@ class DagTest(unittest.TestCase):

         self.assertEqual(prev_local.isoformat(), "2018-03-24T03:00:00+01:00")

         self.assertEqual(prev.isoformat(), "2018-03-24T02:00:00+00:00")

+    def test_dagtag_repr(self):

+        clear_db_dags()

+        dag = DAG('dag-test-dagtag', start_date=DEFAULT_DATE, tags=['tag-1', 'tag-2'])

+        dag.sync_to_db()

+        with create_session() as session:

+            self.assertEqual({'tag-1', 'tag-2'},

+                             {repr(t) for t in session.query(DagTag).filter(

+                                 DagTag.dag_id == 'dag-test-dagtag').all()})

+

     @patch('airflow.models.dag.timezone.utcnow')

     def test_sync_to_db(self, mock_now):

         dag = DAG(
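
The effect of the `__repr__` added in this commit can be seen without a database. The sketch below uses two plain stand-in classes (`TagWithoutRepr` and `TagWithRepr` are hypothetical names, not the SQLAlchemy `DagTag` model) to show why a list of tags renders readably once `__repr__` returns the tag name: Python formats list elements with `repr()`.

```python
class TagWithoutRepr:
    """Stand-in for a model that relies on the default object repr."""

    def __init__(self, name):
        self.name = name


class TagWithRepr:
    """Stand-in for a model whose repr is the tag name, as in the commit."""

    def __init__(self, name):
        self.name = name

    def __repr__(self):
        return self.name


with_repr = str([TagWithRepr("tag-1"), TagWithRepr("tag-2")])
without_repr = str([TagWithoutRepr("tag-1")])

print(with_repr)     # [tag-1, tag-2]
print(without_repr)  # default repr: class name plus a memory address
```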

[airflow] 08/10: Update version_added of configs added in 1.10.11

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 84fc6b482e4fe563f42f60914fe35cc744283715
Author: Kaxil Naik
AuthorDate: Thu Jul 2 00:33:42 2020 +0100

Update version_added of configs added in 1.10.11
---
airflow/config_templates/config.yml | 8
1 file changed, 4 insertions(+), 4 deletions(-)

diff --git a/airflow/config_templates/config.yml b/airflow/config_templates/config.yml
index 0d52426..3dd0a58 100644
--- a/airflow/config_templates/config.yml
+++ b/airflow/config_templates/config.yml
@@ -705,7 +705,7 @@
       description: |
         If set to True, Airflow will track files in plugins_folder directory. When it detects changes, then reload the gunicorn.
-      version_added: ~
+      version_added: 1.10.11
       type: boolean
       example: ~
       default: "False"
@@ -1749,7 +1749,7 @@
     - name: pod_template_file
       description: |
         Path to the YAML pod file. If set, all other kubernetes-related fields are ignored.
-      version_added: ~
+      version_added: 1.10.11
       type: string
       example: ~
       default: ""
@@ -1776,7 +1776,7 @@
       description: |
         If False (and delete_worker_pods is True), failed worker pods will not be deleted so users can investigate them.
-      version_added: ~
+      version_added: 1.10.11
       type: string
       example: ~
       default: "False"
@@ -1847,7 +1847,7 @@
     - name: dags_volume_mount_point
       description: |
         For either git sync or volume mounted DAGs, the worker will mount the volume in this path
-      version_added: ~
+      version_added: 1.10.11
       type: string
       example: ~
       default: ""

[airflow] 04/10: Replace old Variables View Screenshot with new (#9620)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 0610df17ecb4ab6d740d09f5ed25d364798acffd
Author: Kaxil Naik
AuthorDate: Wed Jul 1 23:51:18 2020 +0100

Replace old Variables View Screenshot with new (#9620)

(cherry picked from commit 05c88cb392e63e9f12f05915b94d216f22b652dd)
---
docs/img/variable_hidden.png | Bin 154299 -> 121301 bytes
1 file changed, 0 insertions(+), 0 deletions(-)

diff --git a/docs/img/variable_hidden.png b/docs/img/variable_hidden.png
index e081ca3..d982b92 100644
Binary files a/docs/img/variable_hidden.png and b/docs/img/variable_hidden.png differ

[airflow] 05/10: Restrict changing XCom values from the Webserver (#9614)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 30325f8e87beb19148f29aa9d3aafc7439c9958a

Author: Kaxil Naik

AuthorDate: Wed Jul 1 22:13:10 2020 +0100

Restrict changing XCom values from the Webserver (#9614)

(cherry-picked from 1655fa9253ba8f61ccda77780a9e94766c15f565)

---

UPDATING.md | 6 ++

airflow/www/views.py | 2 ++

airflow/www_rbac/views.py | 4 +---

3 files changed, 9 insertions(+), 3 deletions(-)

diff --git a/UPDATING.md b/UPDATING.md

index ec193f9..61734bb 100644

--- a/UPDATING.md

+++ b/UPDATING.md

@@ -89,6 +89,12 @@ the previous behaviour on a new install by setting this in your airflow.cfg:

auth_backend = airflow.api.auth.backend.default

```

+### XCom Values can no longer be added or changed from the Webserver

+

+Since XCom values can contain pickled data, we would no longer allow adding or

+changing XCom values from the UI.

+

+

## Airflow 1.10.10

### Setting Empty string to a Airflow Variable will return an empty string

diff --git a/airflow/www/views.py b/airflow/www/views.py

index a3293c8..abd1b9e 100644

--- a/airflow/www/views.py

+++ b/airflow/www/views.py

@@ -2754,6 +2754,8 @@ class VariableView(wwwutils.DataProfilingMixin, AirflowModelView):

 class XComView(wwwutils.SuperUserMixin, AirflowModelView):

+    can_create = False

+    can_edit = False

     verbose_name = "XCom"

     verbose_name_plural = "XComs"

diff --git a/airflow/www_rbac/views.py b/airflow/www_rbac/views.py

index 67a7493..96d4079 100644

--- a/airflow/www_rbac/views.py

+++ b/airflow/www_rbac/views.py

@@ -2233,12 +2233,10 @@ class XComModelView(AirflowModelView):

     datamodel = AirflowModelView.CustomSQLAInterface(XCom)

-    base_permissions = ['can_add', 'can_list', 'can_edit', 'can_delete']

+    base_permissions = ['can_list', 'can_delete']

     search_columns = ['key', 'value', 'timestamp', 'execution_date', 'task_id', 'dag_id']

     list_columns = ['key', 'value', 'timestamp', 'execution_date', 'task_id', 'dag_id']

-    add_columns = ['key', 'value', 'execution_date', 'task_id', 'dag_id']

-    edit_columns = ['key', 'value', 'execution_date', 'task_id', 'dag_id']

     base_order = ('execution_date', 'desc')

     base_filters = [['dag_id', DagFilter, lambda: []]]
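
The UPDATING.md entry in this commit gives pickled data as the reason for removing add/edit access to XCom values. As a generic illustration of that risk (plain Python, not Airflow code; the `Payload` class is hypothetical), the sketch below shows that unpickling can invoke a callable chosen by whoever produced the bytes. A harmless `eval` of an arithmetic expression stands in for what could be any importable function.

```python
import pickle


class Payload:
    """Hypothetical object whose pickled form runs a callable when loaded."""

    def __reduce__(self):
        # pickle records "call eval('6 * 7')" rather than any object state.
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())

# Loading the bytes executes the recorded call; the Payload class itself
# is not needed on the loading side.
result = pickle.loads(blob)
print(result)  # 42
```

Anyone able to write such a value through a web form could therefore cause code to run wherever the value is later unpickled, which is consistent with the motivation stated in the commit.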

[airflow] 03/10: Change 'initiate' to 'initialize' in installation.rst (#9619)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit e5662b96466408f5ed9472cee41e737a150471df
Author: Kaxil Naik
AuthorDate: Wed Jul 1 23:34:25 2020 +0100

Change 'initiate' to 'initialize' in installation.rst (#9619)

`Initiating Airflow Database` -> `Initializing Airflow Database`

(cherry picked from commit bc3f48c96603dbd2a94a33f05dd816097ccab3f1)
---
docs/installation.rst | 6 +++---
1 file changed, 3 insertions(+), 3 deletions(-)

diff --git a/docs/installation.rst b/docs/installation.rst
index e7c8970..d5652cb 100644
--- a/docs/installation.rst
+++ b/docs/installation.rst
@@ -180,10 +180,10 @@ Here's the list of the subpackages and what they enable:
 | vertica | ``pip install 'apache-airflow[vertica]'`` | Vertica hook support as an Airflow backend |
 +-+-+--+

-Initiating Airflow Database
-'''''''''''''''''''''''''''
+Initializing Airflow Database
+'''''''''''''''''''''''''''''

-Airflow requires a database to be initiated before you can run tasks. If
+Airflow requires a database to be initialized before you can run tasks. If
 you're just experimenting and learning Airflow, you can stick with the
 default SQLite option. If you don't want to use SQLite, then take a look at
 :doc:`howto/initialize-database` to setup a different database.

[airflow] 02/10: Add docs to change Colors on the Webserver (#9607)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 127104b7e4388be2d0e0b64e4316980d9b6928a9

Author: Kaxil Naik

AuthorDate: Wed Jul 1 16:17:29 2020 +0100

Add docs to change Colors on the Webserver (#9607)

Feature was added in https://github.com/apache/airflow/pull/9520

(cherry picked from commit 65855e558f9b070004ba817e8cf94fd834d2a67a)

---

docs/howto/customize-state-colors-ui.rst | 70 +++

docs/howto/index.rst | 1 +

docs/img/change-ui-colors/dags-page-new.png | Bin 0 -> 483599 bytes

docs/img/change-ui-colors/dags-page-old.png | Bin 0 -> 493009 bytes

docs/img/change-ui-colors/graph-view-new.png | Bin 0 -> 56973 bytes

docs/img/change-ui-colors/graph-view-old.png | Bin 0 -> 54884 bytes

docs/img/change-ui-colors/tree-view-new.png | Bin 0 -> 36934 bytes

docs/img/change-ui-colors/tree-view-old.png | Bin 0 -> 21601 bytes

8 files changed, 71 insertions(+)

diff --git a/docs/howto/customize-state-colors-ui.rst b/docs/howto/customize-state-colors-ui.rst

new file mode 100644

index 000..c856950

--- /dev/null

+++ b/docs/howto/customize-state-colors-ui.rst

@@ -0,0 +1,70 @@

+ .. Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ .. http://www.apache.org/licenses/LICENSE-2.0

+

+ .. Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+

+Customizing state colours in UI

+===

+

+.. versionadded:: 1.10.11

+

+To change the colors for TaskInstance/DagRun State in the Airflow Webserver, perform the

+following steps:

+

+1. Create ``airflow_local_settings.py`` file and put in on ``$PYTHONPATH`` or

+to ``$AIRFLOW_HOME/config`` folder. (Airflow adds ``$AIRFLOW_HOME/config`` on ``PYTHONPATH`` when

+Airflow is initialized)

+

+2. Add the following contents to ``airflow_local_settings.py`` file. Change the colors to whatever you

+would like.

+

+.. code-block:: python

+

+ STATE_COLORS = {

+"queued": 'darkgray',

+"running": '#01FF70',

+"success": '#2ECC40',

+"failed": 'firebrick',

+"up_for_retry": 'yellow',

+"up_for_reschedule": 'turquoise',

+"upstream_failed": 'orange',

+"skipped": 'darkorchid',

+"scheduled": 'tan',

+ }

+

+

+

+3. Restart Airflow Webserver.

+

+Screenshots

+---

+

+Before

+^^

+

+.. image:: ../img/change-ui-colors/dags-page-old.png

+

+.. image:: ../img/change-ui-colors/graph-view-old.png

+

+.. image:: ../img/change-ui-colors/tree-view-old.png

+

+After

+^^

+

+.. image:: ../img/change-ui-colors/dags-page-new.png

+

+.. image:: ../img/change-ui-colors/graph-view-new.png

+

+.. image:: ../img/change-ui-colors/tree-view-new.png

diff --git a/docs/howto/index.rst b/docs/howto/index.rst

index ae20c91..2b91c77 100644

--- a/docs/howto/index.rst

+++ b/docs/howto/index.rst

@@ -32,6 +32,7 @@ configuring an Airflow environment.

set-config

initialize-database

operator/index

+customize-state-colors-ui

custom-operator

connection/index

secure-connections

diff --git a/docs/img/change-ui-colors/dags-page-new.png b/docs/img/change-ui-colors/dags-page-new.png

new file mode 100644

index 000..d2ffe1f

Binary files /dev/null and b/docs/img/change-ui-colors/dags-page-new.png differ

diff --git a/docs/img/change-ui-colors/dags-page-old.png b/docs/img/change-ui-colors/dags-page-old.png

new file mode 100644

index 000..5078d01

Binary files /dev/null and b/docs/img/change-ui-colors/dags-page-old.png differ

diff --git a/docs/img/change-ui-colors/graph-view-new.png b/docs/img/change-ui-colors/graph-view-new.png

new file mode 100644

index 000..b367461

Binary files /dev/null and b/docs/img/change-ui-colors/graph-view-new.png differ

diff --git a/docs/img/change-ui-colors/graph-view-old.png b/docs/img/change-ui-colors/graph-view-old.png

new file mode 100644

index 000..ceaf8d4

Binary files /dev/null and b/docs/img/change-ui-colors/graph-view-old.png differ

diff --git a/docs/img/change-ui-colors/tree-view-new.png b/docs/img/change-ui-colors/tree-view-new.png

new file mode 100644

index 000..6a5b2d7

Binary files /dev/null and b/docs/img/change-ui-colors/tree-view-new.png differ

diff
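
The how-to document added in this commit asks users to define a `STATE_COLORS` dictionary in `airflow_local_settings.py`. Since a misspelled state key is easy to miss, a small check such as the following could be run before restarting the webserver. This is only a sketch: `KNOWN_STATES` is copied from the nine keys shown in the documentation example and may not be the full set of states Airflow accepts, and `unknown_states` is a hypothetical helper, not part of Airflow.

```python
# State names copied from the STATE_COLORS example in the new how-to document.
KNOWN_STATES = {
    "queued",
    "running",
    "success",
    "failed",
    "up_for_retry",
    "up_for_reschedule",
    "upstream_failed",
    "skipped",
    "scheduled",
}


def unknown_states(state_colors):
    """Return the keys of state_colors that are not in KNOWN_STATES, sorted."""
    return sorted(set(state_colors) - KNOWN_STATES)


# Example settings with one deliberately misspelled key.
STATE_COLORS = {"queued": "darkgray", "sucess": "#2ECC40"}
print(unknown_states(STATE_COLORS))  # ['sucess']
```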

[airflow] 06/10: Replace old SubDag zoom screenshot with new (#9621)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 0663b4ecce46b5ea95febca345f5605cb232786a
Author: Kaxil Naik
AuthorDate: Thu Jul 2 00:18:08 2020 +0100

Replace old SubDag zoom screenshot with new (#9621)

(cherry picked from commit 63a8c79aa9f2dfc3f0802e19b98b0c0b0f6b7858)
---
docs/img/subdag_zoom.png | Bin 150185 -> 255915 bytes
1 file changed, 0 insertions(+), 0 deletions(-)

diff --git a/docs/img/subdag_zoom.png b/docs/img/subdag_zoom.png
index 08fcf5c..fe5ce5a 100644
Binary files a/docs/img/subdag_zoom.png and b/docs/img/subdag_zoom.png differ

[airflow] 10/10: Add Changelog for 1.10.11

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 9a212d11eb4f96c38fac8d6bfc7c0ded0493fa42
Author: Kaxil Naik
AuthorDate: Wed Jul 1 19:40:29 2020 +0100

Add Changelog for 1.10.11
---
.pre-commit-config.yaml | 3 +-
CHANGELOG.txt | 198
2 files changed, 200 insertions(+), 1 deletion(-)

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml
index 469c606..1b7d88a 100644
--- a/.pre-commit-config.yaml
+++ b/.pre-commit-config.yaml
@@ -245,7 +245,8 @@ repos:
           (?x)
           ^airflow/contrib/hooks/cassandra_hook.py$|
           ^airflow/operators/hive_stats_operator.py$|
-          ^tests/contrib/hooks/test_cassandra_hook.py
+          ^tests/contrib/hooks/test_cassandra_hook.py|
+          ^CHANGELOG.txt
       - id: dont-use-safe-filter
         language: pygrep
         name: Don't use safe in templates
diff --git a/CHANGELOG.txt b/CHANGELOG.txt
index a8aa353..81be084 100644
--- a/CHANGELOG.txt
+++ b/CHANGELOG.txt
@@ -1,3 +1,201 @@
+Airflow 1.10.11, 2020-07-05
+---------------------------
+
+New Features
+""""""""""""
+
+- Add task instance mutation hook (#8852)
+- Allow changing Task States Colors (#9520)
+- Add support for AWS Secrets Manager as Secrets Backend (#8186)
+- Add airflow info command to the CLI (#8704)
+- Add Local Filesystem Secret Backend (#8596)
+- Add Airflow config CLI command (#8694)
+- Add Support for Python 3.8 (#8836)(#8823)
+- Allow K8S worker pod to be configured from JSON/YAML file (#6230)
+- Add quarterly to crontab presets (#6873)
+- Add support for ephemeral storage on KubernetesPodOperator (#6337)
+- Add AirflowFailException to fail without any retry (#7133)
+- Add SQL Branch Operator (#8942)
+
+Bug Fixes
+"""""""""
+
+- Use NULL as dag.description default value (#7593)
+- BugFix: DAG trigger via UI error in RBAC UI (#8411)
+- Fix logging issue when running tasks (#9363)
+- Fix JSON encoding error in DockerOperator (#8287)
+- Fix alembic crash due to typing import (#6547)
+- Correctly restore upstream_task_ids when deserializing Operators (#8775)
+- Correctly store non-default Nones in serialized tasks/dags (#8772)
+- Correctly deserialize dagrun_timeout field on DAGs (#8735)
+- Fix tree view if config contains " (#9250)
+- Fix Dag Run UI execution date with timezone cannot be saved issue (#8902)
+- Fix Migration for MSSQL (#8385)
+- RBAC ui: Fix missing Y-axis labels with units in plots (#8252)
+- RBAC ui: Fix missing task runs being rendered as circles instead (#8253)
+- Fix: DagRuns page renders the state column with artifacts in old UI (#9612)
+- Fix task and dag stats on home page (#8865)
+- Fix the trigger_dag api in the case of nested subdags (#8081)
+- UX Fix: Prevent undesired text selection with DAG title selection in Chrome (#8912)
+- Fix connection add/edit for spark (#8685)
+- Fix retries causing constraint violation on MySQL with DAG Serialization (#9336)
+- [AIRFLOW-4472] Use json.dumps/loads for templating lineage data (#5253)
+- Restrict google-cloud-texttospeach to

[airflow] branch v1-10-test updated (0e4c3a2 -> 9a212d1)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a change to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git. discard 0e4c3a2 Update version_added of configs added in 1.10.11 discard 011282e fixup! fixup! Add Changelog for 1.10.11 discard efe86e5 Update docs about the change to default auth for experimental API (#9617) discard 3c8fb4a Replace old SubDag zoom screenshot with new (#9621) discard ccf47ae Restrict changing XCom values from the Webserver (#9614) discard a177e5d Replace old Variables View Screenshot with new (#9620) discard cc3f09f Change 'initiate' to 'initialize' in installation.rst (#9619) discard 670c7d4 Add docs to change Colors on the Webserver (#9607) discard d56fdb4 fixup! Add Changelog for 1.10.11 discard 89329c4 Change default auth for experimental backend to deny_all (#9611) omit fae2e63 Add Changelog for 1.10.11 new 92c5f46 Change default auth for experimental backend to deny_all (#9611) new 127104b Add docs to change Colors on the Webserver (#9607) new e5662b9 Change 'initiate' to 'initialize' in installation.rst (#9619) new 0610df1 Replace old Variables View Screenshot with new (#9620) new 30325f8 Restrict changing XCom values from the Webserver (#9614) new 0663b4e Replace old SubDag zoom screenshot with new (#9621) new 0d520f2 Update docs about the change to default auth for experimental API (#9617) new 84fc6b4 Update version_added of configs added in 1.10.11 new c99194a Add __repr__ for DagTag so tags display properly in /dagmodel/show (#8719) new 9a212d1 Add Changelog for 1.10.11 This update added new revisions after undoing existing revisions. That is to say, some revisions that were in the old version of the branch are not in the new version. 
This situation occurs when a user --force pushes a change and generates a repository containing something like this:

 * -- * -- B -- O -- O -- O   (0e4c3a2)
            \
             N -- N -- N   refs/heads/v1-10-test (9a212d1)

You should already have received notification emails for all of the O revisions, and so the following emails describe only the N revisions from the common base, B.

Any revisions marked "omit" are not gone; other references still refer to them. Any revisions marked "discard" are gone forever.

The 10 revisions listed above as "new" are entirely new to this repository and will be described in separate emails. The revisions listed as "add" were already present in the repository and have only been added to this reference.

Summary of changes:

 CHANGELOG.txt            |  1 +
 airflow/models/dag.py    |  3 +++
 tests/models/test_dag.py | 12 +++-
 3 files changed, 15 insertions(+), 1 deletion(-)

[airflow] 01/10: Change default auth for experimental backend to deny_all (#9611)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 92c5f46405497f4d9d9ce6d5cd3544b998be3714

Author: Ash Berlin-Taylor

AuthorDate: Wed Jul 1 17:04:35 2020 +0100

Change default auth for experimental backend to deny_all (#9611)

In a move that should surprise no one, a number of users do not read,

and leave the API wide open by default. Safe is better than powned

(cherry picked from commit 9e305d6b810a2a21e2591a80a80ec41acb3afed0)

---

UPDATING.md | 16

airflow/config_templates/config.yml | 6 --

airflow/config_templates/default_airflow.cfg | 6 --

3 files changed, 24 insertions(+), 4 deletions(-)

diff --git a/UPDATING.md b/UPDATING.md

index 3dfda58..ec193f9 100644

--- a/UPDATING.md

+++ b/UPDATING.md

@@ -73,6 +73,22 @@ Before 1.10.11 it was possible to edit DagRun State in the

`/admin/dagrun/` page

In Airflow 1.10.11+, the user can only choose the states from the list.

+### Experimental API will deny all request by default.

+

+The previous default setting was to allow all API requests without

authentication, but this poses security

+risks to users who miss this fact. This changes the default for new installs

to deny all requests by default.

+

+**Note**: This will not change the behavior for existing installs, please

update check your airflow.cfg

+

+If you wish to have the experimental API work, and aware of the risks of

enabling this without authentication

+(or if you have your own authentication layer in front of Airflow) you can get

+the previous behaviour on a new install by setting this in your airflow.cfg:

+

+```

+[api]

+auth_backend = airflow.api.auth.backend.default

+```

+

## Airflow 1.10.10

### Setting Empty string to a Airflow Variable will return an empty string

diff --git a/airflow/config_templates/config.yml

b/airflow/config_templates/config.yml

index f632cd5..0d52426 100644

--- a/airflow/config_templates/config.yml

+++ b/airflow/config_templates/config.yml

@@ -524,11 +524,13 @@

options:

- name: auth_backend

description: |

-How to authenticate users of the API

+How to authenticate users of the API. See

+https://airflow.apache.org/docs/stable/security.html for possible

values.

+("airflow.api.auth.backend.default" allows all requests for historic

reasons)

version_added: ~

type: string

example: ~

- default: "airflow.api.auth.backend.default"

+ default: "airflow.api.auth.backend.deny_all"

- name: lineage

description: ~

options:

diff --git a/airflow/config_templates/default_airflow.cfg

b/airflow/config_templates/default_airflow.cfg

index a061d46..63bd3cb 100644

--- a/airflow/config_templates/default_airflow.cfg

+++ b/airflow/config_templates/default_airflow.cfg

@@ -274,8 +274,10 @@ endpoint_url = http://localhost:8080

fail_fast = False

[api]

-# How to authenticate users of the API

-auth_backend = airflow.api.auth.backend.default

+# How to authenticate users of the API. See

+# https://airflow.apache.org/docs/stable/security.html for possible values.

+# ("airflow.api.auth.backend.default" allows all requests for historic reasons)

+auth_backend = airflow.api.auth.backend.deny_all

[lineage]

# what lineage backend to use

[airflow] 07/10: Update docs about the change to default auth for experimental API (#9617)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a commit to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git commit 0d520f28c6232d94d1aae6118641ae4a06fae44f Author: Kaxil Naik AuthorDate: Wed Jul 1 22:59:13 2020 +0100 Update docs about the change to default auth for experimental API (#9617) (cherry picked from commit 7ef7f5880dfefc6e33cb7bf331927aa08e1bb438) --- docs/security.rst | 18 +++--- 1 file changed, 15 insertions(+), 3 deletions(-) diff --git a/docs/security.rst b/docs/security.rst index 863a454..3817c7f 100644 --- a/docs/security.rst +++ b/docs/security.rst @@ -159,15 +159,27 @@ only the dags which it is owner of, unless it is a superuser. API Authentication -- -Authentication for the API is handled separately to the Web Authentication. The default is to not -require any authentication on the API i.e. wide open by default. This is not recommended if your -Airflow webserver is publicly accessible, and you should probably use the ``deny all`` backend: +Authentication for the API is handled separately to the Web Authentication. The default is to +deny all requests: .. code-block:: ini [api] auth_backend = airflow.api.auth.backend.deny_all +.. versionchanged:: 1.10.11 + +In Airflow <1.10.11, the default setting was to allow all API requests without authentication, but this +posed security risks for if the Webserver is publicly accessible. + +If you wish to have the experimental API work, and aware of the risks of enabling this without authentication +(or if you have your own authentication layer in front of Airflow) you can set the following in ``airflow.cfg``: + +.. code-block:: ini + +[api] +auth_backend = airflow.api.auth.backend.default + Two "real" methods for authentication are currently supported for the API. To enabled Password authentication, set the following in the configuration:
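
The docs change above says that new installs now deny all API requests, and that an existing `airflow.cfg` keeps whatever `auth_backend` it already names. A minimal sketch of how one might check a config file for the open backend, using only the standard library. The helper names `api_auth_backend` and `is_wide_open` are hypothetical and not part of Airflow. Falling back to `deny_all` when the key is absent is an assumption based on the new 1.10.11 default.

```python
import configparser

# Backend names as they appear in the diff above.
OPEN_BACKEND = "airflow.api.auth.backend.default"
DENY_BACKEND = "airflow.api.auth.backend.deny_all"


def api_auth_backend(cfg_text, fallback=DENY_BACKEND):
    """Return the [api] auth_backend value from airflow.cfg-style text.

    Hypothetical helper: if the section or key is missing, assume the
    1.10.11 default (deny_all) applies.
    """
    # Interpolation is disabled so literal '%' characters in other
    # settings do not raise errors while parsing.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    return parser.get("api", "auth_backend", fallback=fallback)


def is_wide_open(cfg_text):
    """True when the config explicitly selects the allow-all backend."""
    return api_auth_backend(cfg_text) == OPEN_BACKEND
```

To check a real file, read it first, for example `is_wide_open(open(path).read())`, where `path` points at the `airflow.cfg` in use. A `True` result means the experimental API accepts unauthenticated requests unless another layer sits in front of it.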

[airflow] branch v1-10-test updated: Update version_added of configs added in 1.10.11

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a commit to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git The following commit(s) were added to refs/heads/v1-10-test by this push: new 0e4c3a2 Update version_added of configs added in 1.10.11 0e4c3a2 is described below commit 0e4c3a266d7b8f657a678a0ef78b7798ffbb6adc Author: Kaxil Naik AuthorDate: Thu Jul 2 00:33:42 2020 +0100 Update version_added of configs added in 1.10.11 --- airflow/config_templates/config.yml | 8 1 file changed, 4 insertions(+), 4 deletions(-) diff --git a/airflow/config_templates/config.yml b/airflow/config_templates/config.yml index 0d52426..3dd0a58 100644 --- a/airflow/config_templates/config.yml +++ b/airflow/config_templates/config.yml @@ -705,7 +705,7 @@ description: | If set to True, Airflow will track files in plugins_folder directory. When it detects changes, then reload the gunicorn. - version_added: ~ + version_added: 1.10.11 type: boolean example: ~ default: "False" @@ -1749,7 +1749,7 @@ - name: pod_template_file description: | Path to the YAML pod file. If set, all other kubernetes-related fields are ignored. - version_added: ~ + version_added: 1.10.11 type: string example: ~ default: "" @@ -1776,7 +1776,7 @@ description: | If False (and delete_worker_pods is True), failed worker pods will not be deleted so users can investigate them. - version_added: ~ + version_added: 1.10.11 type: string example: ~ default: "False" @@ -1847,7 +1847,7 @@ - name: dags_volume_mount_point description: | For either git sync or volume mounted DAGs, the worker will mount the volume in this path - version_added: ~ + version_added: 1.10.11 type: string example: ~ default: ""

[airflow] 02/07: Change 'initiate' to 'initialize' in installation.rst (#9619)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a commit to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git commit cc3f09f1a85bbecf51657def6c027d80bda21663 Author: Kaxil Naik AuthorDate: Wed Jul 1 23:34:25 2020 +0100 Change 'initiate' to 'initialize' in installation.rst (#9619) `Initiating Airflow Database` -> `Initializing Airflow Database` (cherry picked from commit bc3f48c96603dbd2a94a33f05dd816097ccab3f1) --- docs/installation.rst | 6 +++--- 1 file changed, 3 insertions(+), 3 deletions(-) diff --git a/docs/installation.rst b/docs/installation.rst index e7c8970..d5652cb 100644 --- a/docs/installation.rst +++ b/docs/installation.rst @@ -180,10 +180,10 @@ Here's the list of the subpackages and what they enable: | vertica | ``pip install 'apache-airflow[vertica]'`` | Vertica hook support as an Airflow backend | +-+-+--+ -Initiating Airflow Database -''' +Initializing Airflow Database +' -Airflow requires a database to be initiated before you can run tasks. If +Airflow requires a database to be initialized before you can run tasks. If you're just experimenting and learning Airflow, you can stick with the default SQLite option. If you don't want to use SQLite, then take a look at :doc:`howto/initialize-database` to setup a different database.

[airflow] branch v1-10-test updated (d56fdb4 -> 011282e)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a change to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git. from d56fdb4 fixup! Add Changelog for 1.10.11 new 670c7d4 Add docs to change Colors on the Webserver (#9607) new cc3f09f Change 'initiate' to 'initialize' in installation.rst (#9619) new a177e5d Replace old Variables View Screenshot with new (#9620) new ccf47ae Restrict changing XCom values from the Webserver (#9614) new 3c8fb4a Replace old SubDag zoom screenshot with new (#9621) new efe86e5 Update docs about the change to default auth for experimental API (#9617) new 011282e fixup! fixup! Add Changelog for 1.10.11 The 7 revisions listed above as "new" are entirely new to this repository and will be described in separate emails. The revisions listed as "add" were already present in the repository and have only been added to this reference. Summary of changes: CHANGELOG.txt| 6 +++ UPDATING.md | 6 +++ airflow/www/views.py | 2 + airflow/www_rbac/views.py| 4 +- docs/howto/customize-state-colors-ui.rst | 70 +++ docs/howto/index.rst | 1 + docs/img/change-ui-colors/dags-page-new.png | Bin 0 -> 483599 bytes docs/img/change-ui-colors/dags-page-old.png | Bin 0 -> 493009 bytes docs/img/change-ui-colors/graph-view-new.png | Bin 0 -> 56973 bytes docs/img/change-ui-colors/graph-view-old.png | Bin 0 -> 54884 bytes docs/img/change-ui-colors/tree-view-new.png | Bin 0 -> 36934 bytes docs/img/change-ui-colors/tree-view-old.png | Bin 0 -> 21601 bytes docs/img/subdag_zoom.png | Bin 150185 -> 255915 bytes docs/img/variable_hidden.png | Bin 154299 -> 121301 bytes docs/installation.rst| 6 +-- docs/security.rst| 18 +-- 16 files changed, 104 insertions(+), 9 deletions(-) create mode 100644 docs/howto/customize-state-colors-ui.rst create mode 100644 docs/img/change-ui-colors/dags-page-new.png create mode 100644 docs/img/change-ui-colors/dags-page-old.png create mode 100644 docs/img/change-ui-colors/graph-view-new.png 
create mode 100644 docs/img/change-ui-colors/graph-view-old.png create mode 100644 docs/img/change-ui-colors/tree-view-new.png create mode 100644 docs/img/change-ui-colors/tree-view-old.png

[airflow] 03/07: Replace old Variables View Screenshot with new (#9620)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a commit to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git commit a177e5d4f4cd1d3d53a2897900fc964d1a6a752a Author: Kaxil Naik AuthorDate: Wed Jul 1 23:51:18 2020 +0100 Replace old Variables View Screenshot with new (#9620) (cherry picked from commit 05c88cb392e63e9f12f05915b94d216f22b652dd) --- docs/img/variable_hidden.png | Bin 154299 -> 121301 bytes 1 file changed, 0 insertions(+), 0 deletions(-) diff --git a/docs/img/variable_hidden.png b/docs/img/variable_hidden.png index e081ca3..d982b92 100644 Binary files a/docs/img/variable_hidden.png and b/docs/img/variable_hidden.png differ

[airflow] 07/07: fixup! fixup! Add Changelog for 1.10.11

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a commit to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git commit 011282e55527d877fbd892f09e6790c527384c5b Author: Kaxil Naik AuthorDate: Thu Jul 2 00:27:08 2020 +0100 fixup! fixup! Add Changelog for 1.10.11 --- CHANGELOG.txt | 6 ++ 1 file changed, 6 insertions(+) diff --git a/CHANGELOG.txt b/CHANGELOG.txt index bbf7ef9..f9e4dad 100644 --- a/CHANGELOG.txt +++ b/CHANGELOG.txt @@ -142,6 +142,7 @@ Improvements - Merging multiple sql operators (#9124) - Adds hive as extra in pyhive dependency (#9075) - Change default auth for experimental backend to deny_all (#9611) +- Restrict changing XCom values from the Webserver (#9614) Doc only changes @@ -187,6 +188,11 @@ Doc only changes - Remove non-existent chart value from readme (#9511) - Fix typo in helm chart upgrade command for 2.0 (#9484) - Don't use the term "whitelist" - language matters (#9174) +- Add docs to change Colors on the Webserver (#9607) +- Change 'initiate' to 'initialize' in installation.rst (#9619) +- Replace old Variables View Screenshot with new (#9620) +- Replace old SubDag zoom screenshot with new (#9621) +- Update docs about the change to default auth for experimental API (#9617) Airflow 1.10.10, 2020-04-09

[airflow] 04/07: Restrict changing XCom values from the Webserver (#9614)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit ccf47ae7548740a5f65443cbca85b22884548cc7

Author: Kaxil Naik

AuthorDate: Wed Jul 1 22:13:10 2020 +0100

Restrict changing XCom values from the Webserver (#9614)

(cherry-picked from 1655fa9253ba8f61ccda77780a9e94766c15f565)

---

UPDATING.md | 6 ++

airflow/www/views.py | 2 ++

airflow/www_rbac/views.py | 4 +---

3 files changed, 9 insertions(+), 3 deletions(-)

diff --git a/UPDATING.md b/UPDATING.md

index ec193f9..61734bb 100644

--- a/UPDATING.md

+++ b/UPDATING.md

@@ -89,6 +89,12 @@ the previous behaviour on a new install by setting this in

your airflow.cfg:

auth_backend = airflow.api.auth.backend.default

```

+### XCom Values can no longer be added or changed from the Webserver

+

+Since XCom values can contain pickled data, we would no longer allow adding or

+changing XCom values from the UI.

+

+

## Airflow 1.10.10

### Setting Empty string to a Airflow Variable will return an empty string

diff --git a/airflow/www/views.py b/airflow/www/views.py

index a3293c8..abd1b9e 100644

--- a/airflow/www/views.py

+++ b/airflow/www/views.py

@@ -2754,6 +2754,8 @@ class VariableView(wwwutils.DataProfilingMixin,

AirflowModelView):

class XComView(wwwutils.SuperUserMixin, AirflowModelView):

+can_create = False

+can_edit = False

verbose_name = "XCom"

verbose_name_plural = "XComs"

diff --git a/airflow/www_rbac/views.py b/airflow/www_rbac/views.py

index 67a7493..96d4079 100644

--- a/airflow/www_rbac/views.py

+++ b/airflow/www_rbac/views.py

@@ -2233,12 +2233,10 @@ class XComModelView(AirflowModelView):

datamodel = AirflowModelView.CustomSQLAInterface(XCom)

-base_permissions = ['can_add', 'can_list', 'can_edit', 'can_delete']

+base_permissions = ['can_list', 'can_delete']

search_columns = ['key', 'value', 'timestamp', 'execution_date',

'task_id', 'dag_id']

list_columns = ['key', 'value', 'timestamp', 'execution_date', 'task_id',

'dag_id']

-add_columns = ['key', 'value', 'execution_date', 'task_id', 'dag_id']

-edit_columns = ['key', 'value', 'execution_date', 'task_id', 'dag_id']

base_order = ('execution_date', 'desc')

base_filters = [['dag_id', DagFilter, lambda: []]]
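
The UPDATING.md note in this commit gives pickled data as the reason for removing add/edit on XCom values. The sketch below illustrates general Python `pickle` behaviour and is not Airflow code: unpickling can call any callable the byte stream names. The `Payload` class is hypothetical, and `sorted` is a harmless stand-in for what an attacker would choose.

```python
import pickle


class Payload:
    """Hypothetical object whose pickled form calls a function on load."""

    def __reduce__(self):
        # pickle records "call this callable with these arguments".
        # A malicious value could name something like os.system here;
        # sorted() is used only because it is harmless.
        return (sorted, ([3, 1, 2],))


blob = pickle.dumps(Payload())

# Loading the bytes runs sorted([3, 1, 2]); the result is a plain list,
# not a Payload instance.
result = pickle.loads(blob)
```

Because loading the value is enough to trigger the call, a form that accepts arbitrary XCom bytes would let whoever can submit it choose what runs when the value is later unpickled.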

[airflow] 01/07: Add docs to change Colors on the Webserver (#9607)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch v1-10-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 670c7d4ffbcda101a5ac451aa1aa8a88a054e30f

Author: Kaxil Naik

AuthorDate: Wed Jul 1 16:17:29 2020 +0100

Add docs to change Colors on the Webserver (#9607)

Feature was added in https://github.com/apache/airflow/pull/9520

(cherry picked from commit 65855e558f9b070004ba817e8cf94fd834d2a67a)

---

docs/howto/customize-state-colors-ui.rst | 70 +++

docs/howto/index.rst | 1 +

docs/img/change-ui-colors/dags-page-new.png | Bin 0 -> 483599 bytes

docs/img/change-ui-colors/dags-page-old.png | Bin 0 -> 493009 bytes

docs/img/change-ui-colors/graph-view-new.png | Bin 0 -> 56973 bytes

docs/img/change-ui-colors/graph-view-old.png | Bin 0 -> 54884 bytes

docs/img/change-ui-colors/tree-view-new.png | Bin 0 -> 36934 bytes

docs/img/change-ui-colors/tree-view-old.png | Bin 0 -> 21601 bytes

8 files changed, 71 insertions(+)

diff --git a/docs/howto/customize-state-colors-ui.rst

b/docs/howto/customize-state-colors-ui.rst

new file mode 100644

index 000..c856950

--- /dev/null

+++ b/docs/howto/customize-state-colors-ui.rst

@@ -0,0 +1,70 @@

+ .. Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ .. http://www.apache.org/licenses/LICENSE-2.0

+

+ .. Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+

+Customizing state colours in UI

+===

+

+.. versionadded:: 1.10.11

+

+To change the colors for TaskInstance/DagRun State in the Airflow Webserver,

perform the

+following steps:

+

+1. Create ``airflow_local_settings.py`` file and put in on ``$PYTHONPATH`` or

+to ``$AIRFLOW_HOME/config`` folder. (Airflow adds ``$AIRFLOW_HOME/config``

on ``PYTHONPATH`` when

+Airflow is initialized)

+

+2. Add the following contents to ``airflow_local_settings.py`` file. Change

the colors to whatever you

+would like.

+

+.. code-block:: python

+

+ STATE_COLORS = {

+"queued": 'darkgray',

+"running": '#01FF70',

+"success": '#2ECC40',

+"failed": 'firebrick',

+"up_for_retry": 'yellow',

+"up_for_reschedule": 'turquoise',

+"upstream_failed": 'orange',

+"skipped": 'darkorchid',

+"scheduled": 'tan',

+ }

+

+

+

+3. Restart Airflow Webserver.

+

+Screenshots

+---

+

+Before

+^^

+

+.. image:: ../img/change-ui-colors/dags-page-old.png

+

+.. image:: ../img/change-ui-colors/graph-view-old.png

+

+.. image:: ../img/change-ui-colors/tree-view-old.png

+

+After

+^^

+

+.. image:: ../img/change-ui-colors/dags-page-new.png

+

+.. image:: ../img/change-ui-colors/graph-view-new.png

+

+.. image:: ../img/change-ui-colors/tree-view-new.png

diff --git a/docs/howto/index.rst b/docs/howto/index.rst

index ae20c91..2b91c77 100644

--- a/docs/howto/index.rst

+++ b/docs/howto/index.rst

@@ -32,6 +32,7 @@ configuring an Airflow environment.

set-config

initialize-database

operator/index

+customize-state-colors-ui

custom-operator

connection/index

secure-connections

diff --git a/docs/img/change-ui-colors/dags-page-new.png

b/docs/img/change-ui-colors/dags-page-new.png

new file mode 100644

index 000..d2ffe1f

Binary files /dev/null and b/docs/img/change-ui-colors/dags-page-new.png differ

diff --git a/docs/img/change-ui-colors/dags-page-old.png

b/docs/img/change-ui-colors/dags-page-old.png

new file mode 100644

index 000..5078d01

Binary files /dev/null and b/docs/img/change-ui-colors/dags-page-old.png differ

diff --git a/docs/img/change-ui-colors/graph-view-new.png

b/docs/img/change-ui-colors/graph-view-new.png

new file mode 100644

index 000..b367461

Binary files /dev/null and b/docs/img/change-ui-colors/graph-view-new.png differ

diff --git a/docs/img/change-ui-colors/graph-view-old.png

b/docs/img/change-ui-colors/graph-view-old.png

new file mode 100644

index 000..ceaf8d4

Binary files /dev/null and b/docs/img/change-ui-colors/graph-view-old.png differ

diff --git a/docs/img/change-ui-colors/tree-view-new.png

b/docs/img/change-ui-colors/tree-view-new.png

new file mode 100644

index 000..6a5b2d7

Binary files /dev/null and b/docs/img/change-ui-colors/tree-view-new.png differ

diff
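
The how-to added in this commit asks users to define `STATE_COLORS` in `airflow_local_settings.py`. A small sketch of a sanity check one could run on such a mapping before restarting the webserver. The function `check_state_colors` and both regular expressions are hypothetical and not part of Airflow. The set of states is only what the documented example lists, and the color check is a rough heuristic, not a full CSS validator.

```python
import re

# States that appear in the documented STATE_COLORS example.
DOCUMENTED_STATES = {
    "queued", "running", "success", "failed", "up_for_retry",
    "up_for_reschedule", "upstream_failed", "skipped", "scheduled",
}

# Rough heuristics: '#RGB' / '#RRGGBB' hex values, or a bare color name.
HEX_COLOR = re.compile(r"^#(?:[0-9a-fA-F]{3}|[0-9a-fA-F]{6})$")
NAMED_COLOR = re.compile(r"^[a-zA-Z]+$")


def check_state_colors(state_colors):
    """Return a list of human-readable problems found in the mapping."""
    problems = []
    for state, color in state_colors.items():
        if state not in DOCUMENTED_STATES:
            problems.append(f"unknown state: {state}")
        if not (HEX_COLOR.match(color) or NAMED_COLOR.match(color)):
            problems.append(f"suspicious color for {state}: {color}")
    return problems
```

An empty list means the keys match the documented states and every value looks like a hex code or a color name. It does not confirm that the browser recognises the name.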

[airflow] 05/07: Replace old SubDag zoom screenshot with new (#9621)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a commit to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git commit 3c8fb4a89f9827baa82d1cf6403ca253959bc4bd Author: Kaxil Naik AuthorDate: Thu Jul 2 00:18:08 2020 +0100 Replace old SubDag zoom screenshot with new (#9621) (cherry picked from commit 63a8c79aa9f2dfc3f0802e19b98b0c0b0f6b7858) --- docs/img/subdag_zoom.png | Bin 150185 -> 255915 bytes 1 file changed, 0 insertions(+), 0 deletions(-) diff --git a/docs/img/subdag_zoom.png b/docs/img/subdag_zoom.png index 08fcf5c..fe5ce5a 100644 Binary files a/docs/img/subdag_zoom.png and b/docs/img/subdag_zoom.png differ

[airflow] 06/07: Update docs about the change to default auth for experimental API (#9617)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a commit to branch v1-10-test in repository https://gitbox.apache.org/repos/asf/airflow.git commit efe86e55f6af52f0eb0457625d7b92193b88a296 Author: Kaxil Naik AuthorDate: Wed Jul 1 22:59:13 2020 +0100 Update docs about the change to default auth for experimental API (#9617) (cherry picked from commit 7ef7f5880dfefc6e33cb7bf331927aa08e1bb438) --- docs/security.rst | 18 +++--- 1 file changed, 15 insertions(+), 3 deletions(-) diff --git a/docs/security.rst b/docs/security.rst index 863a454..3817c7f 100644 --- a/docs/security.rst +++ b/docs/security.rst @@ -159,15 +159,27 @@ only the dags which it is owner of, unless it is a superuser. API Authentication -- -Authentication for the API is handled separately to the Web Authentication. The default is to not -require any authentication on the API i.e. wide open by default. This is not recommended if your -Airflow webserver is publicly accessible, and you should probably use the ``deny all`` backend: +Authentication for the API is handled separately to the Web Authentication. The default is to +deny all requests: .. code-block:: ini [api] auth_backend = airflow.api.auth.backend.deny_all +.. versionchanged:: 1.10.11 + +In Airflow <1.10.11, the default setting was to allow all API requests without authentication, but this +posed security risks for if the Webserver is publicly accessible. + +If you wish to have the experimental API work, and aware of the risks of enabling this without authentication +(or if you have your own authentication layer in front of Airflow) you can set the following in ``airflow.cfg``: + +.. code-block:: ini + +[api] +auth_backend = airflow.api.auth.backend.default + Two "real" methods for authentication are currently supported for the API. To enabled Password authentication, set the following in the configuration:

[GitHub] [airflow] vanka56 commented on pull request #9472: Add drop_partition functionality for HiveMetastoreHook

vanka56 commented on pull request #9472: URL: https://github.com/apache/airflow/pull/9472#issuecomment-652692779 @jhtimmins @turbaszek all static checks have passed now. is it good now? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[airflow] branch master updated (05c88cb -> 63a8c79)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/airflow.git. from 05c88cb Replace old Variables View Screenshot with new (#9620) add 63a8c79 Replace old SubDag zoom screenshot with new (#9621) No new revisions were added by this update. Summary of changes: docs/img/subdag_zoom.png | Bin 150185 -> 255915 bytes 1 file changed, 0 insertions(+), 0 deletions(-)

[GitHub] [airflow] kaxil merged pull request #9621: Replace old SubDag zoom screenshot with new

kaxil merged pull request #9621: URL: https://github.com/apache/airflow/pull/9621

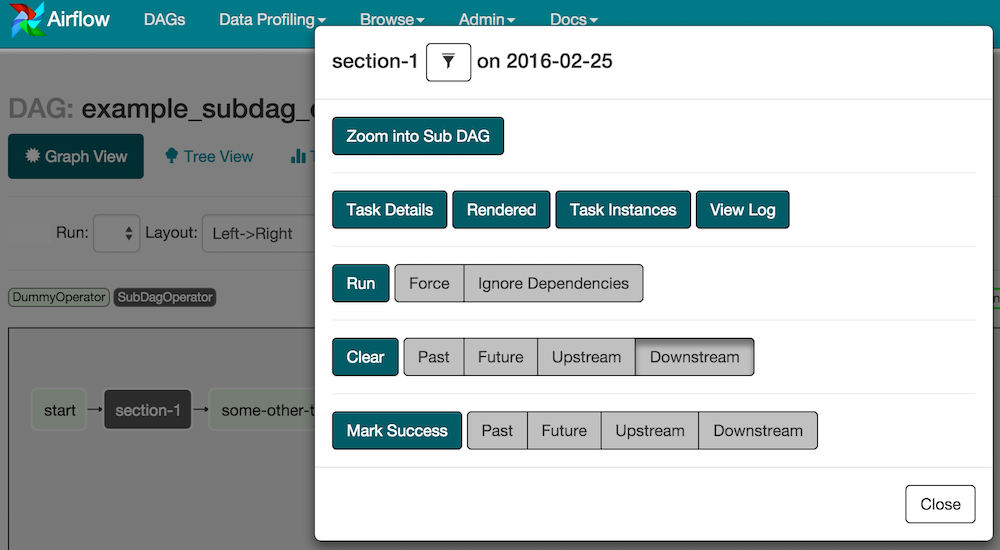

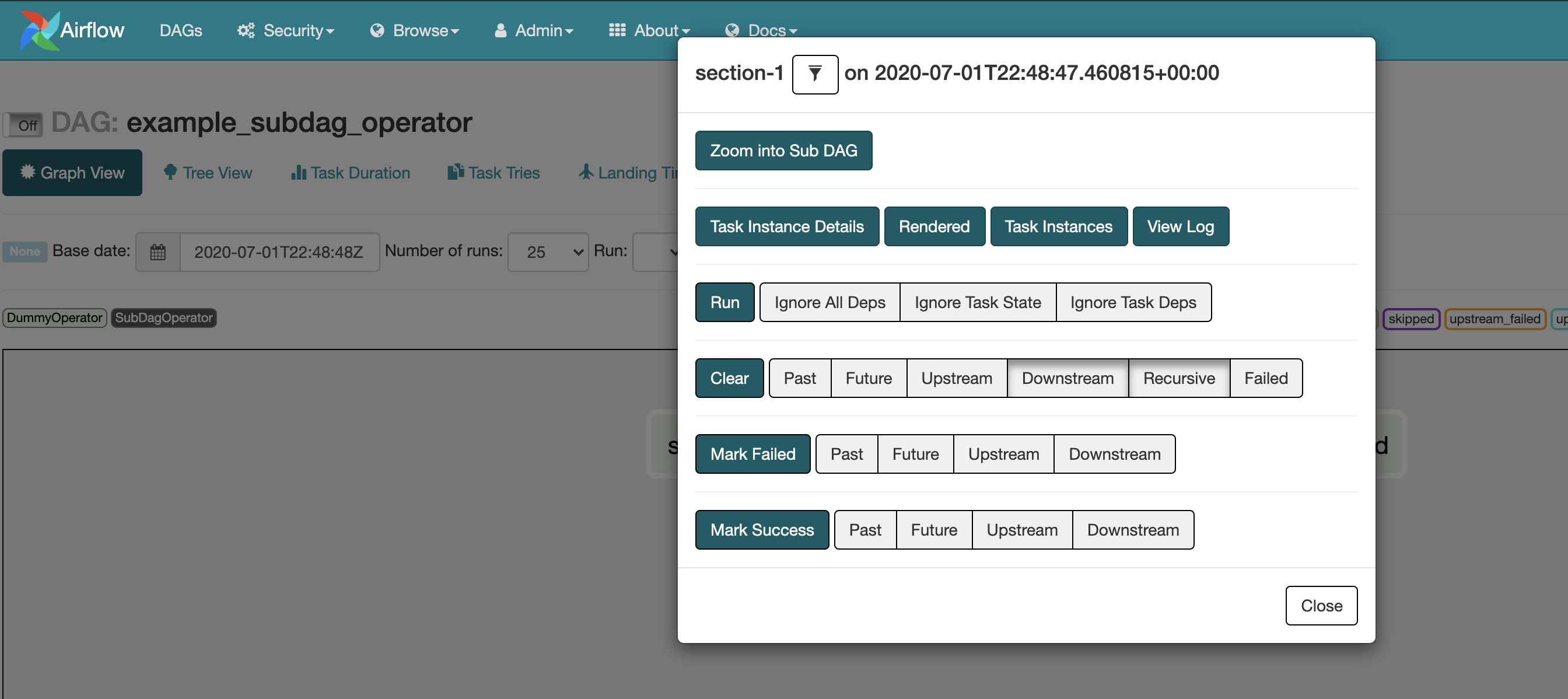

[GitHub] [airflow] kaxil opened a new pull request #9621: Replace old SubDag zoom screenshot with new

kaxil opened a new pull request #9621: URL: https://github.com/apache/airflow/pull/9621

Replace old SubDag zoom screenshot with new

**Before**: [screenshot]

**After**: [screenshot]

---
Make sure to mark the boxes below before creating PR: [x]

- [x] Description above provides context of the change
- [x] Unit tests coverage for changes (not needed for documentation changes)
- [x] Target Github ISSUE in description if exists
- [x] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)"
- [x] Relevant documentation is updated including usage instructions.
- [x] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

---
In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed.
In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).
In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md).
Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information.

[airflow] branch master updated: Replace old Variables View Screenshot with new (#9620)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/airflow.git The following commit(s) were added to refs/heads/master by this push: new 05c88cb Replace old Variables View Screenshot with new (#9620) 05c88cb is described below commit 05c88cb392e63e9f12f05915b94d216f22b652dd Author: Kaxil Naik AuthorDate: Wed Jul 1 23:51:18 2020 +0100 Replace old Variables View Screenshot with new (#9620) --- docs/img/variable_hidden.png | Bin 154299 -> 121301 bytes 1 file changed, 0 insertions(+), 0 deletions(-) diff --git a/docs/img/variable_hidden.png b/docs/img/variable_hidden.png index e081ca3..d982b92 100644 Binary files a/docs/img/variable_hidden.png and b/docs/img/variable_hidden.png differ

[GitHub] [airflow] subkanthi commented on issue #8471: Add integration with Google Calendar API

subkanthi commented on issue #8471: URL: https://github.com/apache/airflow/issues/8471#issuecomment-652681463 @mik-laj , is this something I can take on, Im trying to find a good first issue, I have been using airflow for a few years and have written custom operators. Just thought will start contributing.

[GitHub] [airflow] kaxil opened a new pull request #9620: Replace old Variables View Screenshot with new

kaxil opened a new pull request #9620: URL: https://github.com/apache/airflow/pull/9620 Replace old Variables View Screenshot with new

[GitHub] [airflow] kaxil merged pull request #9619: Change 'initiate' to 'initialize' in installation.rst

kaxil merged pull request #9619: URL: https://github.com/apache/airflow/pull/9619

[airflow] branch master updated (7ef7f58 -> bc3f48c)

This is an automated email from the ASF dual-hosted git repository. kaxilnaik pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/airflow.git. from 7ef7f58 Update docs about the change to default auth for experimental API (#9617) add bc3f48c Change 'initiate' to 'initialize' in installation.rst (#9619) No new revisions were added by this update. Summary of changes: docs/installation.rst | 6 +++--- 1 file changed, 3 insertions(+), 3 deletions(-)

[GitHub] [airflow] mik-laj commented on pull request #9354: Task logging handlers can provide custom log links

mik-laj commented on pull request #9354: URL: https://github.com/apache/airflow/pull/9354#issuecomment-652676572 @turbaszek Do you want to add anything else?

[GitHub] [airflow] kaxil opened a new pull request #9619: Change 'initiate' to 'initialize' in installation.rst

kaxil opened a new pull request #9619: URL: https://github.com/apache/airflow/pull/9619 `Initiating Airflow Database` -> `Initializing Airflow Database`

[GitHub] [airflow] vuppalli commented on issue #9418: Deprecated AI Platform Operators and Runtimes in Example DAG

vuppalli commented on issue #9418: URL: https://github.com/apache/airflow/issues/9418#issuecomment-652672779 Thank you for the information! I created a PR for this issue here: https://github.com/apache/airflow/pull/9618.

[GitHub] [airflow] vuppalli commented on pull request #9618: Fix typos, older versions, and deprecated operators with AI platform example DAG

vuppalli commented on pull request #9618: URL: https://github.com/apache/airflow/pull/9618#issuecomment-652672627 @mik-laj You mentioned that this DAG does not have accompanying tests. How should I go about addressing the Unit tests coverage bullet above? Additionally, should I make this a draft PR while I work on the corresponding documentation?

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #9618: Fix typos, older versions, and deprecated operators with AI platform example DAG

boring-cyborg[bot] commented on pull request #9618:
URL: https://github.com/apache/airflow/pull/9618#issuecomment-652670230

Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything, please check our Contribution Guide (https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst)

Here are some useful points:
- Pay attention to the quality of your code (flake8, pylint and type annotations). Our [pre-commits](https://github.com/apache/airflow/blob/master/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that.
- In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/master/docs/howto/custom-operator.rst) Consider adding an example DAG that shows how users should use it.
- Consider using [Breeze environment](https://github.com/apache/airflow/blob/master/BREEZE.rst) for testing locally, it’s a heavy docker but it ships with a working Airflow and a lot of integrations.
- Be patient and persistent. It might take some time to get a review or get the final approval from Committers.
- Please follow [ASF Code of Conduct](https://www.apache.org/foundation/policies/conduct) for all communication including (but not limited to) comments on Pull Requests, Mailing list and Slack.
- Be sure to read the [Airflow Coding style](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#coding-style-and-best-practices).

Apache Airflow is a community-driven project and together we are making it better.

In case of doubts contact the developers at:
Mailing List: d...@airflow.apache.org
Slack: https://apache-airflow-slack.herokuapp.com/

[GitHub] [airflow] vuppalli opened a new pull request #9618: Fix typos, older versions, and deprecated operators with AI platform example DAG

vuppalli opened a new pull request #9618:
URL: https://github.com/apache/airflow/pull/9618

The AI platform example DAG had typos, was using older versions, and had deprecated operators. This PR is an attempt to fix these problems and corresponds to [this Github issue](https://github.com/apache/airflow/issues/9418). The relevant documentation will be updated soon (most likely next week), which corresponds to [this Github issue](https://github.com/apache/airflow/issues/8207). I will address any comments next Monday (07/06) since we have US holidays for the rest of the week. I look forward to getting feedback!

---
Make sure to mark the boxes below before creating PR: [x]

- [x] Description above provides context of the change
- [ ] Unit tests coverage for changes (not needed for documentation changes)
- [x] Target Github ISSUE in description if exists
- [x] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)"
- [ ] Relevant documentation is updated including usage instructions.
- [x] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

---
In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed.
In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).
In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md).
Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information.

[GitHub] [airflow] kaxil merged pull request #9617: Update docs about the change to default auth for experimental API

kaxil merged pull request #9617: URL: https://github.com/apache/airflow/pull/9617

[airflow] branch master updated: Update docs about the change to default auth for experimental API (#9617)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/master by this push:
new 7ef7f58 Update docs about the change to default auth for experimental API (#9617)
7ef7f58 is described below

commit 7ef7f5880dfefc6e33cb7bf331927aa08e1bb438
Author: Kaxil Naik
AuthorDate: Wed Jul 1 22:59:13 2020 +0100

Update docs about the change to default auth for experimental API (#9617)
---
 docs/security.rst | 18 +++---
 1 file changed, 15 insertions(+), 3 deletions(-)

diff --git a/docs/security.rst b/docs/security.rst
index c8f6e1a..1e820d3 100644
--- a/docs/security.rst
+++ b/docs/security.rst
@@ -63,15 +63,27 @@ OAuth, OpenID, LDAP, REMOTE_USER. You can configure in ``webserver_config.py``.
 API Authentication
 --
-Authentication for the API is handled separately to the Web Authentication. The default is to not
-require any authentication on the API i.e. wide open by default. This is not recommended if your
-Airflow webserver is publicly accessible, and you should probably use the ``deny all`` backend:
+Authentication for the API is handled separately to the Web Authentication. The default is to
+deny all requests:
 .. code-block:: ini
 [api]
 auth_backend = airflow.api.auth.backend.deny_all
+.. versionchanged:: 1.10.11
+
+In Airflow <1.10.11, the default setting was to allow all API requests without authentication, but this
+posed security risks for if the Webserver is publicly accessible.
+
+If you wish to have the experimental API work, and aware of the risks of enabling this without authentication
+(or if you have your own authentication layer in front of Airflow) you can set the following in ``airflow.cfg``:
+
+.. code-block:: ini
+
+[api]
+auth_backend = airflow.api.auth.backend.default
+
 Kerberos authentication is currently supported for the API.
 To enable Kerberos authentication, set the following in the configuration:

[GitHub] [airflow] mik-laj commented on a change in pull request #9531: Support .airflowignore for plugins

mik-laj commented on a change in pull request #9531:
URL: https://github.com/apache/airflow/pull/9531#discussion_r448633985

##
File path: airflow/plugins_manager.py
##
@@ -164,34 +165,31 @@ def load_plugins_from_plugin_directory():
     global plugins  # pylint: disable=global-statement
     log.debug("Loading plugins from directory: %s", settings.PLUGINS_FOLDER)
-    # Crawl through the plugins folder to find AirflowPlugin derivatives
-    for root, _, files in os.walk(settings.PLUGINS_FOLDER, followlinks=True):  # noqa # pylint: disable=too-many-nested-blocks
-        for f in files:
-            filepath = os.path.join(root, f)
-            try:
-                if not os.path.isfile(filepath):
-                    continue
-                mod_name, file_ext = os.path.splitext(
-                    os.path.split(filepath)[-1])
-                if file_ext != '.py':
-                    continue
-
-                log.debug('Importing plugin module %s', filepath)
-
-                loader = importlib.machinery.SourceFileLoader(mod_name, filepath)
-                spec = importlib.util.spec_from_loader(mod_name, loader)
-                mod = importlib.util.module_from_spec(spec)
-                sys.modules[spec.name] = mod
-                loader.exec_module(mod)
-                for mod_attr_value in list(mod.__dict__.values()):
-                    if is_valid_plugin(mod_attr_value):
-                        plugin_instance = mod_attr_value()
-                        plugins.append(plugin_instance)
-            except Exception as e:  # pylint: disable=broad-except
-                log.exception(e)
-                path = filepath or str(f)
-                log.error('Failed to import plugin %s', path)
-                import_errors[path] = str(e)
+    for file_path in find_path_from_directory(
+            settings.PLUGINS_FOLDER, ".airflowignore"):
+
+        if not os.path.isfile(file_path):
+            continue
+        mod_name, file_ext = os.path.splitext(os.path.split(file_path)[-1])
+        if file_ext != '.py':
+            continue

Review comment:

```suggestion
```

Should this not be part of the find_path_from_directory function? I would like to see that there is no code in the plugins_manager.py file that is responsible for the file selection. Plugins_manager should only load the module. WDYT?
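
To make the suggestion concrete, here is a minimal sketch of a file-selection helper that does all of the filtering itself, so that the caller only has to load modules. It is not Airflow's `find_path_from_directory`: the name `find_python_files` is hypothetical, and the sketch assumes a single ignore file at the top of the directory whose non-empty, non-comment lines are regular expressions matched against paths relative to that directory.

```python
import os
import re


def find_python_files(base_dir, ignore_file_name=".airflowignore"):
    """Yield paths of .py files under base_dir that no ignore pattern matches.

    Hypothetical helper for illustration only. Assumption: one ignore file at
    the top of base_dir, one regular expression per line, '#' starts a comment.
    """
    patterns = []
    ignore_path = os.path.join(base_dir, ignore_file_name)
    if os.path.isfile(ignore_path):
        with open(ignore_path) as ignore_file:
            for line in ignore_file:
                # Drop trailing comments and surrounding whitespace.
                line = line.split("#", 1)[0].strip()
                if line:
                    patterns.append(re.compile(line))

    for root, _, files in os.walk(base_dir, followlinks=True):
        for name in files:
            # Extension check lives here, not in the plugin loader.
            if os.path.splitext(name)[1] != ".py":
                continue
            file_path = os.path.join(root, name)
            rel_path = os.path.relpath(file_path, base_dir)
            if any(pattern.search(rel_path) for pattern in patterns):
                continue
            yield file_path
```

With a helper of this shape, the loop in `load_plugins_from_plugin_directory` would no longer need its own `os.path.isfile` and `file_ext != '.py'` checks; it would iterate over the yielded paths and import each one.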

[GitHub] [airflow] kaxil closed issue #8214: "Adding DAG and Tasks documentation" section rendered twice in Tutorial on website