[GitHub] [airflow] binnisb commented on issue #29127: Use latest azure-mgmt-containerinstance (10.0.0) which depricates network_profile and adds subnet_ids

binnisb commented on issue #29127: URL: https://github.com/apache/airflow/issues/29127#issuecomment-1403205812 I can do that if I get it working for our use case. I have 2 weeks, so if I haven't opened a PR by then I will not have time to do it. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] Aakcht commented on issue #21891: hive provider support for python 3.9

Aakcht commented on issue #21891: URL: https://github.com/apache/airflow/issues/21891#issuecomment-1403197225 I didn't personally test it, but I don't see any issues related to hive provider and python 3.10 so I suspect it should work fine (since the latest airflow versions support python 3.10).

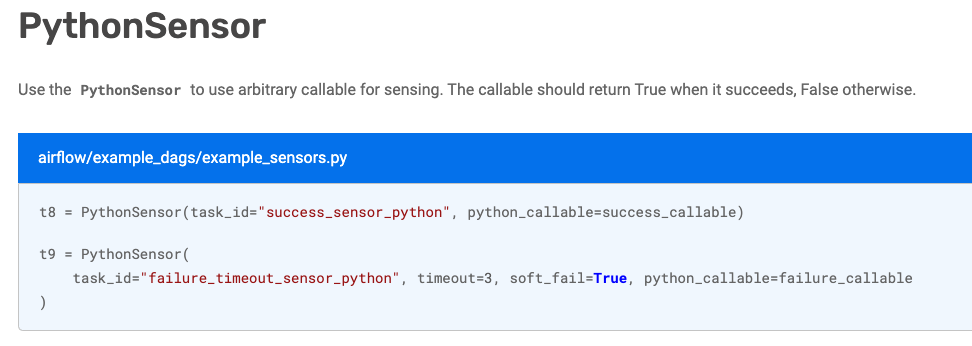

[GitHub] [airflow] BasPH commented on a diff in pull request #29143: Demonstrate usage of the PythonSensor

BasPH commented on code in PR #29143:
URL: https://github.com/apache/airflow/pull/29143#discussion_r1086270155

##
docs/apache-airflow/howto/operator/python.rst:
##
@@ -225,11 +225,29 @@ Jinja templating can be used in same way as described for the PythonOperator.
 PythonSensor
-Use the :class:`~airflow.sensors.python.PythonSensor` to use arbitrary callable for sensing. The callable
-should return True when it succeeds, False otherwise.
+A PythonSensor waits for a certain condition to be ``True``, for example to wait for a file to exist. The
+PythonSensor is available via ``@task.sensor`` and ``airflow.sensors.python.PythonSensor``. The callable
+should return a boolean ``True`` or ``False``, indicating whether a condition is met. For example:

Review Comment:
Meh not really, I feel this page exists to give an overview of which operators/sensors exist to orchestrate arbitrary Python functions, but not to show nitty-gritty details.

[GitHub] [airflow] sergei3000 commented on issue #21891: hive provider support for python 3.9

sergei3000 commented on issue #21891: URL: https://github.com/apache/airflow/issues/21891#issuecomment-1403184373 Hi, does all this mean the Hive provider will work for Python 3.10 as well?

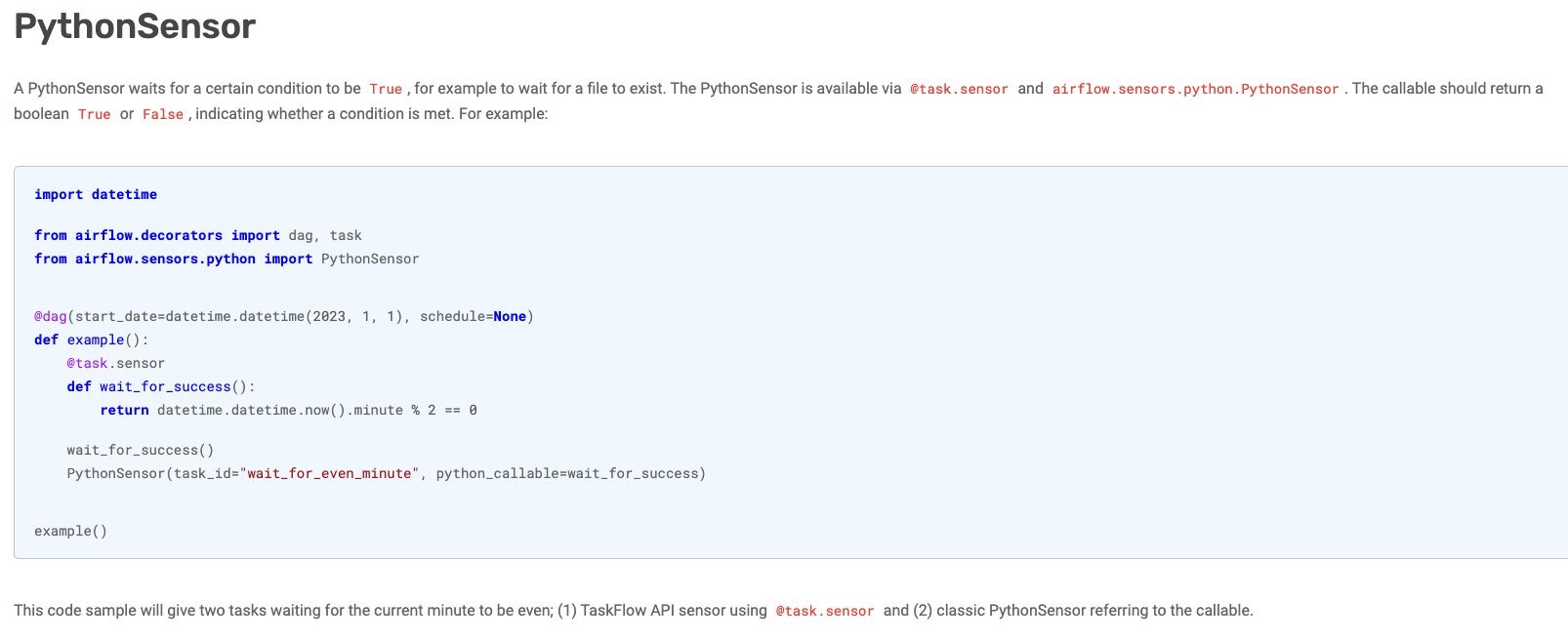

[GitHub] [airflow] BasPH commented on a diff in pull request #29143: Demonstrate usage of the PythonSensor

BasPH commented on code in PR #29143:
URL: https://github.com/apache/airflow/pull/29143#discussion_r1086267570

##
docs/apache-airflow/howto/operator/python.rst:
##
@@ -225,11 +225,29 @@ Jinja templating can be used in same way as described for the PythonOperator.
 PythonSensor
-Use the :class:`~airflow.sensors.python.PythonSensor` to use arbitrary callable for sensing. The callable
-should return True when it succeeds, False otherwise.
+A PythonSensor waits for a certain condition to be ``True``, for example to wait for a file to exist. The
+PythonSensor is available via ``@task.sensor`` and ``airflow.sensors.python.PythonSensor``. The callable
+should return a boolean ``True`` or ``False``, indicating whether a condition is met. For example:
-.. exampleinclude:: /../../airflow/example_dags/example_sensors.py
-    :language: python
-    :dedent: 4
-    :start-after: [START example_python_sensors]
-    :end-before: [END example_python_sensors]
+.. code-block:: python
+
+    import datetime
+
+    from airflow.decorators import dag, task
+    from airflow.sensors.python import PythonSensor
+
+
+    @dag(start_date=datetime.datetime(2023, 1, 1), schedule=None)
+    def example():
+        @task.sensor
+        def wait_for_success():
+            return datetime.datetime.now().minute % 2 == 0
+
+        wait_for_success()
+        PythonSensor(task_id="wait_for_even_minute", python_callable=wait_for_success)

Review Comment:
I tested on 2.5.1 and it just works: [image]

I could split this into separate functions because it does look a little odd to reference a taskflow-decorated function from a PythonSensor class?
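
The sensing contract described in the proposed docs text (a callable that returns `True` or `False`, polled until the condition is met) can be illustrated without Airflow. This is only a minimal sketch: `poke_until_true`, `poke_interval` and `timeout` are hypothetical names chosen for the illustration, not Airflow's API, and a real sensor does considerably more (rescheduling, soft-fail, and so on).

```python
import time
from typing import Callable


def poke_until_true(
    python_callable: Callable[[], bool],
    poke_interval: float = 1.0,
    timeout: float = 10.0,
) -> int:
    """Call python_callable repeatedly until it returns a truthy value.

    Returns the number of pokes that were needed. Raises TimeoutError if
    another poke would not fit before `timeout` seconds have elapsed.
    """
    deadline = time.monotonic() + timeout
    pokes = 0
    while True:
        pokes += 1
        if python_callable():
            return pokes
        if time.monotonic() + poke_interval > deadline:
            raise TimeoutError(f"condition not met after {pokes} pokes")
        time.sleep(poke_interval)


# Illustrative condition (hypothetical): becomes true on the third check.
attempts = {"count": 0}


def third_time_lucky() -> bool:
    attempts["count"] += 1
    return attempts["count"] >= 3
```

Calling `poke_until_true(third_time_lucky, poke_interval=0.01)` returns after three pokes; a callable that never returns a truthy value ends in `TimeoutError`.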

[GitHub] [airflow] o-nikolas commented on pull request #27947: refactored Amazon Redshift-data functionality into the hook

o-nikolas commented on PR #27947: URL: https://github.com/apache/airflow/pull/27947#issuecomment-1403147389 Hey @yehoshuadimarsky, Any updates on this one, specifically regarding the feedback from Daniel?

[GitHub] [airflow] o-nikolas commented on a diff in pull request #28187: Add IAM authentication to Amazon Redshift Connection by AWS Connection

o-nikolas commented on code in PR #28187:
URL: https://github.com/apache/airflow/pull/28187#discussion_r1086234925

##
airflow/providers/amazon/aws/hooks/redshift_sql.py:
##
@@ -62,6 +80,9 @@ def _get_conn_params(self) -> dict[str, str | int]:
         conn_params: dict[str, str | int] = {}
+        if conn.extra_dejson.get("iam", False):

Review Comment:
@IAL32 thoughts on this?


[GitHub] [airflow] o-nikolas commented on pull request #28282: Mark stepIds for cancel in EmrAddStepsOperator

o-nikolas commented on PR #28282: URL: https://github.com/apache/airflow/pull/28282#issuecomment-1403134136 Hey @swapz-z, What are your thoughts on the above feedback from Elad, Vincent and Dennis? Also @dacort, would you like to weigh in?

[GitHub] [airflow] o-nikolas commented on pull request #28321: Add support in AWS Batch Operator for multinode jobs

o-nikolas commented on PR #28321: URL: https://github.com/apache/airflow/pull/28321#issuecomment-1403132380 Hey @camilleanne, Any plans to pick this one up again?

[GitHub] [airflow] o-nikolas commented on pull request #28338: New AWS sensor — DynamoDBValueSensor

o-nikolas commented on PR #28338: URL: https://github.com/apache/airflow/pull/28338#issuecomment-1403128044 Hey @mrichman, Any plans to make more progress on this one?

[GitHub] [airflow] o-nikolas commented on a diff in pull request #28827: Add option to wait for completion on the EmrCreateJobFlowOperator

o-nikolas commented on code in PR #28827:
URL: https://github.com/apache/airflow/pull/28827#discussion_r1086224824

##
airflow/providers/amazon/aws/operators/emr.py:
##
@@ -538,42 +544,76 @@ def __init__(
         emr_conn_id: str | None = "emr_default",
         job_flow_overrides: str | dict[str, Any] | None = None,
         region_name: str | None = None,
+        wait_for_completion: bool = False,
+        waiter_countdown: int | None = None,
+        waiter_check_interval_seconds: int = 60,
         **kwargs,
     ):
         super().__init__(**kwargs)
         self.aws_conn_id = aws_conn_id
         self.emr_conn_id = emr_conn_id
         self.job_flow_overrides = job_flow_overrides or {}
         self.region_name = region_name
+        self.wait_for_completion = wait_for_completion
+        self.waiter_countdown = waiter_countdown
+        self.waiter_check_interval_seconds = waiter_check_interval_seconds
+
+        self._job_flow_id: str | None = None
-    def execute(self, context: Context) -> str:
-        emr = EmrHook(
+    @cached_property
+    def _emr_hook(self) -> EmrHook:
+        """Create and return an EmrHook."""
+        return EmrHook(
             aws_conn_id=self.aws_conn_id, emr_conn_id=self.emr_conn_id, region_name=self.region_name
         )
+    def execute(self, context: Context) -> str | None:
         self.log.info(
-            "Creating JobFlow using aws-conn-id: %s, emr-conn-id: %s", self.aws_conn_id, self.emr_conn_id
+            "Creating job flow using aws_conn_id: %s, emr_conn_id: %s", self.aws_conn_id, self.emr_conn_id
         )
         if isinstance(self.job_flow_overrides, str):
             job_flow_overrides: dict[str, Any] = ast.literal_eval(self.job_flow_overrides)
             self.job_flow_overrides = job_flow_overrides
         else:
             job_flow_overrides = self.job_flow_overrides
-        response = emr.create_job_flow(job_flow_overrides)
+        response = self._emr_hook.create_job_flow(job_flow_overrides)
         if not response["ResponseMetadata"]["HTTPStatusCode"] == 200:
-            raise AirflowException(f"JobFlow creation failed: {response}")
+            raise AirflowException(f"Job flow creation failed: {response}")
         else:
-            job_flow_id = response["JobFlowId"]
-            self.log.info("JobFlow with id %s created", job_flow_id)
+            self._job_flow_id = response["JobFlowId"]
+            self.log.info("Job flow with id %s created", self._job_flow_id)
             EmrClusterLink.persist(
                 context=context,
                 operator=self,
-                region_name=emr.conn_region_name,
-                aws_partition=emr.conn_partition,
-                job_flow_id=job_flow_id,
+                region_name=self._emr_hook.conn_region_name,
+                aws_partition=self._emr_hook.conn_partition,
+                job_flow_id=self._job_flow_id,
             )
-            return job_flow_id
+
+            if self.wait_for_completion:
+                # Didn't use a boto-supplied waiter because those don't support waiting for WAITING state.
+                # https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/emr.html#waiters
+                waiter(
+                    get_state_callable=self._emr_hook.get_conn().describe_cluster,
+                    get_state_args={"ClusterId": self._job_flow_id},
+                    parse_response=["Cluster", "Status", "State"],
+                    # Cluster will be in WAITING after finishing if KeepJobFlowAliveWhenNoSteps is True
+                    desired_state={"WAITING", "TERMINATED"},
+                    failure_states={"TERMINATED_WITH_ERRORS"},
+                    object_type="job flow",
+                    action="finished",
+                    countdown=self.waiter_countdown,
+                    check_interval_seconds=self.waiter_check_interval_seconds,
+                )
+
+            return self._job_flow_id
+
+    def on_kill(self) -> None:
+        """Terminate job flow."""
+        if self._job_flow_id:
+            self.log.info("Terminating job flow %s", self._job_flow_id)
+            self._emr_hook.terminate_job_flow(self._job_flow_id)

Review Comment:

Hey @BasPH, thoughts on this suggested change? Otherwise the PR looks good
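
The `waiter(...)` call in the diff above polls `describe_cluster` until the cluster reaches a desired or a failure state. The helper's implementation is not shown in this thread, so the following is only a rough sketch of that polling pattern under stated assumptions: `wait_for_state` and its `sleep` parameter are hypothetical, the keyword names merely mirror those visible in the diff, and the exceptions raised here are stand-ins rather than what the provider actually raises.

```python
import time
from typing import Any, Callable, Dict, List, Optional, Set


def wait_for_state(
    get_state_callable: Callable[..., Dict[str, Any]],
    get_state_args: Dict[str, Any],
    parse_response: List[str],
    desired_state: Set[str],
    failure_states: Set[str],
    countdown: Optional[float] = None,
    check_interval_seconds: float = 60,
    sleep: Callable[[float], None] = time.sleep,  # injectable for tests (assumption)
) -> str:
    """Poll get_state_callable until a desired or failure state is seen."""
    remaining = countdown
    while True:
        # Walk the nested response, e.g. ["Cluster", "Status", "State"].
        state: Any = get_state_callable(**get_state_args)
        for key in parse_response:
            state = state[key]

        if state in desired_state:
            return state
        if state in failure_states:
            raise RuntimeError(f"reached failure state {state}")

        # countdown=None means wait indefinitely.
        if remaining is not None:
            remaining -= check_interval_seconds
            if remaining <= 0:
                raise TimeoutError(f"still in state {state} when countdown expired")
        sleep(check_interval_seconds)
```

Passing a set for `desired_state` is what lets a single call accept both `WAITING` and `TERMINATED`, which is the case the code comment in the diff calls out.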


[GitHub] [airflow] o-nikolas commented on pull request #29053: introduce base class for EKS sensors

o-nikolas commented on PR #29053: URL: https://github.com/apache/airflow/pull/29053#issuecomment-1403123198 @ferruzzi as our resident EKS expert, do you have time to take a look?

[airflow] branch main updated: Fixes to how DebugExecutor handles sensors (#28528)

This is an automated email from the ASF dual-hosted git repository.

onikolas pushed a commit to branch main
in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:
     new a35ec9549d Fixes to how DebugExecutor handles sensors (#28528)
a35ec9549d is described below

commit a35ec9549dc0b4b311f126f3022309ec5b33fa62
Author: Raphaël Vandon <114772123+vandonr-...@users.noreply.github.com>
AuthorDate: Tue Jan 24 21:40:10 2023 -0800

    Fixes to how DebugExecutor handles sensors (#28528)

    * move fix to ready_to_reschedule
    * replace check on debug exec with new property

    Co-authored-by: Tzu-ping Chung
---
 airflow/executors/debug_executor.py | 6 ++
 airflow/ti_deps/deps/ready_to_reschedule.py | 15 +++
 2 files changed, 17 insertions(+), 4 deletions(-)

diff --git a/airflow/executors/debug_executor.py b/airflow/executors/debug_executor.py
index 18ba0f8798..4355bd1dcf 100644
--- a/airflow/executors/debug_executor.py
+++ b/airflow/executors/debug_executor.py
@@ -25,6 +25,7 @@ DebugExecutor.
 from __future__ import annotations
 import threading
+import time
 from typing import Any
 from airflow.configuration import conf
@@ -120,6 +121,11 @@ class DebugExecutor(BaseExecutor):
         :param open_slots: Number of open slots
         """
+        if not self.queued_tasks:
+            # wait a bit if there are no tasks ready to be executed to avoid spinning too fast in the void
+            time.sleep(0.5)
+            return
+
         sorted_queue = sorted(
             self.queued_tasks.items(),
             key=lambda x: x[1][1],
diff --git a/airflow/ti_deps/deps/ready_to_reschedule.py b/airflow/ti_deps/deps/ready_to_reschedule.py
index 467f08536e..66aa5c5613 100644
--- a/airflow/ti_deps/deps/ready_to_reschedule.py
+++ b/airflow/ti_deps/deps/ready_to_reschedule.py
@@ -17,6 +17,7 @@
 # under the License.
 from __future__ import annotations
+from airflow.executors.executor_loader import ExecutorLoader
 from airflow.models.taskreschedule import TaskReschedule
 from airflow.ti_deps.deps.base_ti_dep import BaseTIDep
 from airflow.utils import timezone
@@ -44,10 +45,16 @@ class ReadyToRescheduleDep(BaseTIDep):
         from airflow.models.mappedoperator import MappedOperator
         is_mapped = isinstance(ti.task, MappedOperator)
-        if not is_mapped and not getattr(ti.task, "reschedule", False):
-            # Mapped sensors don't have the reschedule property (it can only
-            # be calculated after unmapping), so we don't check them here.
-            # They are handled below by checking TaskReschedule instead.
+        executor, _ = ExecutorLoader.import_default_executor_cls()
+        if (
+            # Mapped sensors don't have the reschedule property (it can only be calculated after unmapping),
+            # so we don't check them here. They are handled below by checking TaskReschedule instead.
+            not is_mapped
+            and not getattr(ti.task, "reschedule", False)
+            # Executors can force running in reschedule mode,
+            # in which case we ignore the value of the task property.
+            and not executor.change_sensor_mode_to_reschedule
+        ):
             yield self._passing_status(reason="Task is not in reschedule mode.")
             return
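
The new condition in `ReadyToRescheduleDep` combines three independent facts. As a reading aid, it can be isolated into a pure function; this is a sketch only, and `passes_without_reschedule_check` plus its parameter names are hypothetical, chosen to mirror the three operands in the diff.

```python
def passes_without_reschedule_check(
    is_mapped: bool,
    task_in_reschedule_mode: bool,
    executor_forces_reschedule: bool,
) -> bool:
    """Return True when the dep can pass immediately as "not in reschedule mode".

    Mirrors the three-part condition in the diff: the early exit is taken
    only if the task is not mapped, is not itself in reschedule mode, and
    the executor does not force reschedule mode.
    """
    return (
        not is_mapped
        and not task_in_reschedule_mode
        and not executor_forces_reschedule
    )
```

Before the change only the first two operands existed, so a sensor in poke mode would take the early exit even under an executor that forces reschedule mode; with the third operand that case now falls through to the `TaskReschedule` lookup.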

[GitHub] [airflow] o-nikolas merged pull request #28528: Fixes to how DebugExecutor handles sensors

o-nikolas merged PR #28528: URL: https://github.com/apache/airflow/pull/28528

[GitHub] [airflow] potiuk commented on pull request #29116: Add colors in help outputs of Airfow CLI commands #28789

potiuk commented on PR #29116: URL: https://github.com/apache/airflow/pull/29116#issuecomment-1403113821 I think performance is impacted very little in this case. The Click change was much more of a problem because Click works with Python-decorated methods and has a natural tendency to import much more, and those imports would take much more time.

[GitHub] [airflow] potiuk commented on pull request #29116: Add colors in help outputs of Airfow CLI commands #28789

potiuk commented on PR #29116: URL: https://github.com/apache/airflow/pull/29116#issuecomment-1403113028 Looks cool. You need to rebase, and fix the static checks and CLI tests (the SSM test errors will likely go away after rebase - it was broken main I think).

[GitHub] [airflow] o-nikolas commented on a diff in pull request #29147: Rename most pod_id usage to pod_name in KubernetesExecutor

o-nikolas commented on code in PR #29147:
URL: https://github.com/apache/airflow/pull/29147#discussion_r1086215221

##
airflow/executors/kubernetes_executor.py:
##
@@ -792,28 +793,31 @@ def try_adopt_task_instances(self, tis: Sequence[TaskInstance]) -> Sequence[Task
         }
         pod_list = self._list_pods(query_kwargs)
         for pod in pod_list:
-            self.adopt_launched_task(kube_client, pod, pod_ids)
+            self.adopt_launched_task(kube_client, pod, tis_to_flush_by_key)
         self._adopt_completed_pods(kube_client)
-        tis_to_flush.extend(pod_ids.values())
+        tis_to_flush.extend(tis_to_flush_by_key.values())
         return tis_to_flush
     def adopt_launched_task(
-        self, kube_client: client.CoreV1Api, pod: k8s.V1Pod, pod_ids: dict[TaskInstanceKey, k8s.V1Pod]
+        self,
+        kube_client: client.CoreV1Api,
+        pod: k8s.V1Pod,
+        tis_to_flush_by_key: dict[TaskInstanceKey, k8s.V1Pod],

Review Comment:
This method is in the `KubernetesExecutor` class proper, so I think it lands squarely in public land :grimacing:

##
airflow/executors/kubernetes_executor.py:
##
@@ -353,12 +353,12 @@ def run_next(self, next_job: KubernetesJobType) -> None:
         self.run_pod_async(pod, **self.kube_config.kube_client_request_args)
         self.log.debug("Kubernetes Job created!")
-    def delete_pod(self, pod_id: str, namespace: str) -> None:
-        """Deletes POD."""
+    def delete_pod(self, pod_name: str, namespace: str) -> None:

Review Comment:
This is an interesting grey area. We say in the new public interface docs that executors are public and we even encourage extending them:

> Airflow has a set of Executors that are considered public. You are free to extend their functionality

But this is not the `KubernetesExecutor` class itself that you're modifying; it's the job watcher singleton (which is indirectly used by the executor class via the scheduler class, which is itself passed in as a parameter to the executor).

So I could see this being a private class whose interface we don't guarantee to be consistent release to release.

I'm interested to hear what others think though.
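
If a renamed keyword such as `pod_id` does turn out to be part of a public interface, one common way to soften the change is to keep accepting the old name for a while and emit a deprecation warning. The sketch below only illustrates that general pattern; `delete_pod_compat` is a hypothetical standalone function, not the executor method, and nothing in this thread says the PR takes this approach.

```python
import warnings
from typing import Optional


def delete_pod_compat(
    pod_name: Optional[str] = None,
    namespace: str = "default",
    *,
    pod_id: Optional[str] = None,  # deprecated alias, kept for old callers
) -> str:
    """Accept the new `pod_name` keyword while tolerating the old `pod_id`."""
    if pod_id is not None:
        if pod_name is not None:
            raise TypeError("pass either pod_name or the deprecated pod_id, not both")
        warnings.warn(
            "`pod_id` is deprecated; use `pod_name` instead.",
            DeprecationWarning,
            stacklevel=2,
        )
        pod_name = pod_id
    if pod_name is None:
        raise TypeError("pod_name is required")
    # Stand-in for the real deletion call: just report what would be deleted.
    return f"{namespace}/{pod_name}"
```

Callers using `pod_name=` see no change, while callers still passing `pod_id=` keep working and get a `DeprecationWarning` pointing at their own call site.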


[GitHub] [airflow] sudeepgupta90 commented on issue #28779: Built-in ServiceMonitor for PgBouncer and StatsD

sudeepgupta90 commented on issue #28779: URL: https://github.com/apache/airflow/issues/28779#issuecomment-1403107145 The latter - adding community exporter for pgbouncer

[GitHub] [airflow] boring-cyborg[bot] commented on issue #29149: Parameter for disabling scheduling

boring-cyborg[bot] commented on issue #29149: URL: https://github.com/apache/airflow/issues/29149#issuecomment-1403094151 Thanks for opening your first issue here! Be sure to follow the issue template!

[GitHub] [airflow] jedcunningham opened a new pull request, #29148: General cleanup of things around KubernetesExecutor

jedcunningham opened a new pull request, #29148: URL: https://github.com/apache/airflow/pull/29148 The biggest change here is using `State` or `TaskInstanceState` where appropriate. Even though their strings are the same, it provides useful context, so we should use the correct enum. One could argue `State` isn't the right thing to use, but at least it's consistently wrong now :)
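
The point about enums whose "strings are the same" can be shown with a string-valued enum. This is a minimal sketch that assumes a `str`-based enum; `SketchTaskInstanceState` and `is_finished` are hypothetical stand-ins written for this illustration, not Airflow's classes.

```python
from enum import Enum


class SketchTaskInstanceState(str, Enum):
    """Hypothetical stand-in for a string-valued task state enum."""

    QUEUED = "queued"
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"


def is_finished(state: SketchTaskInstanceState) -> bool:
    # The type annotation documents which family of states is expected,
    # which a bare string cannot do.
    return state in {SketchTaskInstanceState.SUCCESS, SketchTaskInstanceState.FAILED}
```

Because the enum mixes in `str`, `SketchTaskInstanceState.RUNNING == "running"` still holds, so existing string comparisons keep working, while an unknown value such as `SketchTaskInstanceState("bogus")` fails loudly with `ValueError`.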

[GitHub] [airflow] rishabh643 opened a new issue, #29149: Parameter for disabling scheduling

rishabh643 opened a new issue, #29149:
URL: https://github.com/apache/airflow/issues/29149

### Description

Can we have a parameter in Airflow that disables scheduling for all DAGs that are present? I know one can disable DAGs individually or turn off the scheduler altogether, but I am specifically looking for a parameter through which we can disable the scheduling of all DAGs without bringing down the scheduler.

### Use case/motivation

We are creating a Disaster Recovery setup in which the primary site will be up and running, while the secondary site should be up with minimum replicas and no one should be able to trigger DAGs on the secondary. To do so, I want to set up Airflow on the secondary with this parameter enabled, so that no one can, even by mistake, enable a DAG from the UI and run it.

### Related issues

_No response_

### Are you willing to submit a PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)

[GitHub] [airflow] jedcunningham commented on a diff in pull request #29147: Rename most pod_id usage to pod_name in KubernetesExecutor

jedcunningham commented on code in PR #29147:
URL: https://github.com/apache/airflow/pull/29147#discussion_r1086193863

##
airflow/executors/kubernetes_executor.py:
##
@@ -353,12 +353,12 @@ def run_next(self, next_job: KubernetesJobType) -> None:
         self.run_pod_async(pod, **self.kube_config.kube_client_request_args)
         self.log.debug("Kubernetes Job created!")
-    def delete_pod(self, pod_id: str, namespace: str) -> None:
-        """Deletes POD."""
+    def delete_pod(self, pod_name: str, namespace: str) -> None:

Review Comment:
I guess arguably this is part of the public api?

##
airflow/executors/kubernetes_executor.py:
##
@@ -792,28 +793,31 @@ def try_adopt_task_instances(self, tis: Sequence[TaskInstance]) -> Sequence[Task
         }
         pod_list = self._list_pods(query_kwargs)
         for pod in pod_list:
-            self.adopt_launched_task(kube_client, pod, pod_ids)
+            self.adopt_launched_task(kube_client, pod, tis_to_flush_by_key)
         self._adopt_completed_pods(kube_client)
-        tis_to_flush.extend(pod_ids.values())
+        tis_to_flush.extend(tis_to_flush_by_key.values())
         return tis_to_flush
     def adopt_launched_task(
-        self, kube_client: client.CoreV1Api, pod: k8s.V1Pod, pod_ids: dict[TaskInstanceKey, k8s.V1Pod]
+        self,
+        kube_client: client.CoreV1Api,
+        pod: k8s.V1Pod,
+        tis_to_flush_by_key: dict[TaskInstanceKey, k8s.V1Pod],

Review Comment:
Maybe this one too? Ugh, this is the worst offender, because these are really TI keys.

##
airflow/executors/kubernetes_executor.py:
##
@@ -368,17 +368,17 @@ def delete_pod(self, pod_id: str, namespace: str) -> None:
             if e.status != 404:
                 raise
-    def patch_pod_executor_done(self, *, pod_id: str, namespace: str):
+    def patch_pod_executor_done(self, *, pod_name: str, namespace: str):

Review Comment:
This one isn't in a release yet, so it's fair game.


[GitHub] [airflow] jedcunningham opened a new pull request, #29147: Rename most pod_id usage to pod_name in KubernetesExecutor

jedcunningham opened a new pull request, #29147: URL: https://github.com/apache/airflow/pull/29147 We were using pod_id in a lot of places, where really it is just the pod name. I've renamed it where it is easy to do so, so things are easier to follow.

[GitHub] [airflow] josh-fell commented on a diff in pull request #29143: Demonstrate usage of the PythonSensor

josh-fell commented on code in PR #29143:
URL: https://github.com/apache/airflow/pull/29143#discussion_r1086144868

##
docs/apache-airflow/howto/operator/python.rst:
##
@@ -225,11 +225,29 @@ Jinja templating can be used in same way as described for the PythonOperator.
 PythonSensor
-Use the :class:`~airflow.sensors.python.PythonSensor` to use arbitrary callable for sensing. The callable
-should return True when it succeeds, False otherwise.
+A PythonSensor waits for a certain condition to be ``True``, for example to wait for a file to exist. The
+PythonSensor is available via ``@task.sensor`` and ``airflow.sensors.python.PythonSensor``. The callable
+should return a boolean ``True`` or ``False``, indicating whether a condition is met. For example:

Review Comment:
_Technically_ ~the `PythonSensor`~ they both can also return ~the truthy or falsy~ a non-boolean value via `PokeReturnValue` ~and, interestingly enough, [this PR](https://github.com/apache/airflow/pull/29146) was opened to mimic the same behavior in `@task.sensor`~. Not sure if you want to dive into this nuance here.

edit: Oh boy Josh, you really didn't do your homework on that comment

[GitHub] [airflow] josh-fell commented on a diff in pull request #29143: Demonstrate usage of the PythonSensor

josh-fell commented on code in PR #29143:
URL: https://github.com/apache/airflow/pull/29143#discussion_r1086143815

##
docs/apache-airflow/howto/operator/python.rst:
##
@@ -225,11 +225,29 @@ Jinja templating can be used in same way as described for the PythonOperator.
 PythonSensor
-Use the :class:`~airflow.sensors.python.PythonSensor` to use arbitrary callable for sensing. The callable
-should return True when it succeeds, False otherwise.
+A PythonSensor waits for a certain condition to be ``True``, for example to wait for a file to exist. The
+PythonSensor is available via ``@task.sensor`` and ``airflow.sensors.python.PythonSensor``. The callable
+should return a boolean ``True`` or ``False``, indicating whether a condition is met. For example:
-.. exampleinclude:: /../../airflow/example_dags/example_sensors.py
-    :language: python
-    :dedent: 4
-    :start-after: [START example_python_sensors]
-    :end-before: [END example_python_sensors]
+.. code-block:: python
+
+    import datetime
+
+    from airflow.decorators import dag, task
+    from airflow.sensors.python import PythonSensor
+
+
+    @dag(start_date=datetime.datetime(2023, 1, 1), schedule=None)
+    def example():
+        @task.sensor
+        def wait_for_success():
+            return datetime.datetime.now().minute % 2 == 0
+
+        wait_for_success()
+        PythonSensor(task_id="wait_for_even_minute", python_callable=wait_for_success)

Review Comment:
This won't function properly since the callable is now a TaskFlow function.

##
docs/apache-airflow/howto/operator/python.rst:
##
@@ -225,11 +225,29 @@ Jinja templating can be used in same way as described for the PythonOperator.
 PythonSensor
-Use the :class:`~airflow.sensors.python.PythonSensor` to use arbitrary callable for sensing. The callable
-should return True when it succeeds, False otherwise.
+A PythonSensor waits for a certain condition to be ``True``, for example to wait for a file to exist. The
+PythonSensor is available via ``@task.sensor`` and ``airflow.sensors.python.PythonSensor``. The callable
+should return a boolean ``True`` or ``False``, indicating whether a condition is met. For example:

Review Comment:
_Technically_ the `PythonSensor` can also return the truthy or falsy value via `PokeReturnValue` and, interestingly enough, [this PR](https://github.com/apache/airflow/pull/29146) was opened to mimic the same behavior in `@task.sensor`. Not sure if you want to dive into this nuance here.

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #29146: Add ability to access context in functions decorated by task.sensor

boring-cyborg[bot] commented on PR #29146:
URL: https://github.com/apache/airflow/pull/29146#issuecomment-1402983470

Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything please check our Contribution Guide (https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst)

Here are some useful points:
- Pay attention to the quality of your code (ruff, mypy and type annotations). Our [pre-commits](https://github.com/apache/airflow/blob/main/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that.
- In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/main/docs/apache-airflow/howto/custom-operator.rst) Consider adding an example DAG that shows how users should use it.
- Consider using [Breeze environment](https://github.com/apache/airflow/blob/main/BREEZE.rst) for testing locally, it's a heavy docker but it ships with a working Airflow and a lot of integrations.
- Be patient and persistent. It might take some time to get a review or get the final approval from Committers.
- Please follow [ASF Code of Conduct](https://www.apache.org/foundation/policies/conduct) for all communication including (but not limited to) comments on Pull Requests, Mailing list and Slack.
- Be sure to read the [Airflow Coding style](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#coding-style-and-best-practices).

Apache Airflow is a community-driven project and together we are making it better.

In case of doubts contact the developers at:
Mailing List: d...@airflow.apache.org
Slack: https://s.apache.org/airflow-slack

[GitHub] [airflow] Thomas-McKanna opened a new pull request, #29146: Add ability to access context in functions decorated by task.sensor

Thomas-McKanna opened a new pull request, #29146: URL: https://github.com/apache/airflow/pull/29146 Previous implementation did not properly expose Airflow context in functions decorated by `airflow.decorators.task.sensor`: https://github.com/apache/airflow/blob/1fbfd312d9d7e28e66f6ba5274421a96560fb7ba/airflow/decorators/sensor.py#L60-L61 Since `DecoratedSensorOperator` is a subclass of `PythonSensor`, I've removed the definition of `poke` so that the definition in `PythonSensor` is used: https://github.com/apache/airflow/blob/1fbfd312d9d7e28e66f6ba5274421a96560fb7ba/airflow/sensors/python.py#L68-L77 closes: #29137
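To make the reported problem concrete, here is a minimal stand-alone sketch in plain Python (no Airflow imports) of the difference between a `poke` that calls the callable with no context and one that offers the context to it. The names `select_kwargs`, `poke_without_context`, and `poke_with_context` are hypothetical and exist only for this illustration; this is not the code in `airflow/sensors/python.py`.

```python
from __future__ import annotations

import inspect
from typing import Any, Callable, Mapping


def select_kwargs(func: Callable[..., Any], context: Mapping[str, Any]) -> dict[str, Any]:
    """Pick the context entries that ``func`` can accept (hypothetical helper)."""
    params = inspect.signature(func).parameters
    # A callable that declares **kwargs receives the whole context.
    if any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values()):
        return dict(context)
    # Otherwise pass only the names the callable explicitly declares.
    return {name: context[name] for name in params if name in context}


def poke_without_context(func: Callable[..., Any], context: Mapping[str, Any]) -> bool:
    # Illustrates the reported behaviour: the context never reaches the callable.
    return bool(func())


def poke_with_context(func: Callable[..., Any], context: Mapping[str, Any]) -> bool:
    # Offers the context, filtered by the callable's signature.
    return bool(func(**select_kwargs(func, context)))


def wait_for_date(ds=None):
    """Example sensor callable that depends on the ``ds`` context value."""
    return ds == "2023-01-23"


context = {"ds": "2023-01-23", "run_id": "manual__1"}
print(poke_without_context(wait_for_date, context))
print(poke_with_context(wait_for_date, context))
```

In this sketch the callable only succeeds when `ds` is actually handed to it, which is the kind of behaviour the PR description is concerned with.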

[GitHub] [airflow] Taragolis commented on pull request #29144: rewrite invoke lamba operator tests

Taragolis commented on PR #29144: URL: https://github.com/apache/airflow/pull/29144#issuecomment-1402930439 I'm not sure about removing `moto` from this test, because it is a good framework for testing something closer to the AWS implementation. Basically it is just a wrapper around [botocore.stub.Stubber](https://botocore.amazonaws.com/v1/documentation/api/latest/reference/stubber.html) with some helpers. The downside of a mock is that you really need to be sure you didn't make any mistake while mocking; otherwise you could easily pass something that a regular AWS environment and botocore/boto won't allow.
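The concern about mocks accepting calls that a real client would reject can be shown with the standard library alone. This is a minimal sketch, assuming a hypothetical `FakeLambdaClient` class as a stand-in for a real client; only its method signature matters here.

```python
from unittest import mock


class FakeLambdaClient:
    """Hypothetical stand-in for a real client; only the signature matters."""

    def invoke(self, FunctionName, Payload=b""):
        return {"StatusCode": 200}


# A plain MagicMock accepts a misspelled keyword without complaint,
# so a test built on it can pass even though the real call would fail.
loose = mock.MagicMock()
loose.invoke(FunctionNam="typo")

# An autospecced mock checks calls against the real method signature.
strict = mock.create_autospec(FakeLambdaClient, instance=True)
try:
    strict.invoke(FunctionNam="typo")
    rejected = False
except TypeError:
    rejected = True

print(rejected)
```

Autospeccing only validates the call signature; it does not check argument values or service-side rules, which is the part that a fuller emulation such as `moto` is meant to cover.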

[GitHub] [airflow] ferruzzi commented on a diff in pull request #29144: rewrite invoke lamba operator tests

ferruzzi commented on code in PR #29144:

URL: https://github.com/apache/airflow/pull/29144#discussion_r1086120021

##

tests/providers/amazon/aws/operators/test_lambda_function.py:

##

@@ -90,65 +84,69 @@ def test_init(self):

assert lambda_operator.log_type == "None"

assert lambda_operator.aws_conn_id == "aws_conn_test"

-@staticmethod

-def create_zip(body):

-code = body

-zip_output = io.BytesIO()

-with zipfile.ZipFile(zip_output, "w", zipfile.ZIP_DEFLATED) as zip_file:

-zip_file.writestr("lambda_function.py", code)

-zip_output.seek(0)

-return zip_output.read()

-

-@staticmethod

-def create_iam_role(role_name: str):

-iam = AwsBaseHook("aws_conn_test", client_type="iam")

-resp = iam.conn.create_role(

-RoleName=role_name,

-AssumeRolePolicyDocument=json.dumps(

-{

-"Version": "2012-10-17",

-"Statement": [

-{

-"Effect": "Allow",

-"Principal": {"Service": "lambda.amazonaws.com"},

-"Action": "sts:AssumeRole",

-}

-],

-}

-),

-Description="IAM role for Lambda execution.",

+@patch(

Review Comment:

Mocks are still a bit of black magic for me, but I think the same thing

would be achieved in much shorter code using

```suggestion

@patch.object(AwsLambdaInvokeFunctionOperator, "hook", new_callable=mock.PropertyMock)

```

But I'm still wrong on occasion about when to use `@patch.object` vs `@patch`
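For readers with the same question, here is a small stand-alone sketch of `patch.object` with `PropertyMock`. `InvokeOperator` is a hypothetical class written for this example, not the Airflow operator. The practical difference is that `patch.object` takes the object and an attribute name, while `patch` takes a dotted import path as a string and resolves it when the patch starts.

```python
from unittest import mock


class InvokeOperator:
    """Hypothetical operator with a ``hook`` property, for illustration only."""

    @property
    def hook(self):
        raise RuntimeError("would create a real client")

    def execute(self):
        return self.hook.invoke("my-function")


# Replace the property on the class; reading ``operator.hook`` now returns
# ``hook_prop.return_value`` instead of running the real getter.
with mock.patch.object(InvokeOperator, "hook", new_callable=mock.PropertyMock) as hook_prop:
    hook_prop.return_value.invoke.return_value = "ok"
    result = InvokeOperator().execute()
    hook_prop.return_value.invoke.assert_called_once_with("my-function")

print(result)
```

The property has to be patched on the class rather than on an instance, because properties are looked up on the type.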


[GitHub] [airflow] Taragolis opened a new pull request, #29145: wip: Attempt to fix integration with codecov

Taragolis opened a new pull request, #29145: URL: https://github.com/apache/airflow/pull/29145 Change some settings for coverage and CMD arguments. No idea whether this helps or not, but right now codecov isn't happy about our reports.

[GitHub] [airflow] dstandish commented on pull request #29117: GCSTaskHandler may use remote log conn id

dstandish commented on PR #29117: URL: https://github.com/apache/airflow/pull/29117#issuecomment-1402916188 I think it's ok. I've tested it locally and it works, and it is only invoked if you set the conn id, so it should be ok.

[GitHub] [airflow] vandonr-amz opened a new pull request, #29144: rewrite invoke lamba operator tests

vandonr-amz opened a new pull request, #29144: URL: https://github.com/apache/airflow/pull/29144 there was one big test that was mostly testing moto's implementation, and also it was taking a long time and failing on my machine. I replaced it with a test that mocks the hook as we don't need to test anything beneath that, and added tests for failure cases. note that this operator is also tested end-to-end in the system test https://github.com/apache/airflow/blob/dd9a02940a6dde29d90184d3deb75d1dfa673aa6/tests/system/providers/amazon/aws/example_lambda.py#L102-L106

[GitHub] [airflow] jedcunningham commented on pull request #29136: Dataproc batches

jedcunningham commented on PR #29136: URL: https://github.com/apache/airflow/pull/29136#issuecomment-1402886139 Thanks for the PR @kristopherkane! Looks like static checks are failing. I'd also suggest that you [set up the pre-commit hooks](https://github.com/apache/airflow/blob/main/STATIC_CODE_CHECKS.rst#pre-commit-hooks), they help catch these issues early.

[GitHub] [airflow] github-actions[bot] commented on pull request #23560: Add advanced secrets backend configurations

github-actions[bot] commented on PR #23560: URL: https://github.com/apache/airflow/pull/23560#issuecomment-1402861209 This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.

[GitHub] [airflow] github-actions[bot] closed pull request #27247: Added extra annotations support

github-actions[bot] closed pull request #27247: Added extra annotations support URL: https://github.com/apache/airflow/pull/27247

[GitHub] [airflow] github-actions[bot] closed pull request #28056: Move GCP Sheets system tests

github-actions[bot] closed pull request #28056: Move GCP Sheets system tests URL: https://github.com/apache/airflow/pull/28056

[GitHub] [airflow] github-actions[bot] commented on pull request #28123: Fix SFTP Sensor fails to locate file

github-actions[bot] commented on PR #28123: URL: https://github.com/apache/airflow/pull/28123#issuecomment-1402861028 This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.

[airflow] branch main updated: introduce a method to convert dictionaries to boto-style key-value lists (#28816)

This is an automated email from the ASF dual-hosted git repository.

onikolas pushed a commit to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:

new 2c4928da40 introduce a method to convert dictionaries to boto-style key-value lists (#28816)

2c4928da40 is described below

commit 2c4928da40667cd4d52030b8b79419175948cb85

Author: Raphaël Vandon <114772123+vandonr-...@users.noreply.github.com>

AuthorDate: Tue Jan 24 15:45:16 2023 -0800

introduce a method to convert dictionaries to boto-style key-value lists (#28816)

* accept either dict of list for tags

---

airflow/providers/amazon/aws/hooks/s3.py           | 28 ++--
airflow/providers/amazon/aws/hooks/sagemaker.py    |  5 ++-
airflow/providers/amazon/aws/operators/rds.py      | 32 ++
airflow/providers/amazon/aws/operators/s3.py       |  4 +--
.../providers/amazon/aws/operators/sagemaker.py    |  4 +--
airflow/providers/amazon/aws/utils/tags.py         | 38 ++
tests/providers/amazon/aws/hooks/test_s3.py        |  9 +
7 files changed, 89 insertions(+), 31 deletions(-)

diff --git a/airflow/providers/amazon/aws/hooks/s3.py b/airflow/providers/amazon/aws/hooks/s3.py

index 89c9261cb6..f88274747d 100644

--- a/airflow/providers/amazon/aws/hooks/s3.py

+++ b/airflow/providers/amazon/aws/hooks/s3.py

@@ -43,6 +43,7 @@ from botocore.exceptions import ClientError

from airflow.exceptions import AirflowException

from airflow.providers.amazon.aws.exceptions import S3HookUriParseFailure

from airflow.providers.amazon.aws.hooks.base_aws import AwsBaseHook

+from airflow.providers.amazon.aws.utils.tags import format_tags

from airflow.utils.helpers import chunks

T = TypeVar("T", bound=Callable)

@@ -1063,36 +1064,43 @@ class S3Hook(AwsBaseHook):

@provide_bucket_name

def put_bucket_tagging(

self,

-tag_set: list[dict[str, str]] | None = None,

+tag_set: dict[str, str] | list[dict[str, str]] | None = None,

key: str | None = None,

value: str | None = None,

bucket_name: str | None = None,

) -> None:

"""

-Overwrites the existing TagSet with provided tags. Must provide either a TagSet or a key/value pair.

+Overwrites the existing TagSet with provided tags.

+Must provide a TagSet, a key/value pair, or both.

.. seealso::

- :external+boto3:py:meth:`S3.Client.put_bucket_tagging`

-:param tag_set: A List containing the key/value pairs for the tags.

+:param tag_set: A dictionary containing the key/value pairs for the tags,

+or a list already formatted for the API

:param key: The Key for the new TagSet entry.

:param value: The Value for the new TagSet entry.

:param bucket_name: The name of the bucket.

+

:return: None

"""

-self.log.info("S3 Bucket Tag Info:\tKey: %s\tValue: %s\tSet: %s", key, value, tag_set)

-if not tag_set:

-tag_set = []

+formatted_tags = format_tags(tag_set)

+

if key and value:

-tag_set.append({"Key": key, "Value": value})

-elif not tag_set or (key or value):

-message = "put_bucket_tagging() requires either a predefined TagSet or a key/value pair."

+formatted_tags.append({"Key": key, "Value": value})

+elif key or value:

+message = (

+"Key and Value must be specified as a pair. "

+f"Only one of the two had a value (key: '{key}', value: '{value}')"

+)

self.log.error(message)

raise ValueError(message)

+self.log.info("Tagging S3 Bucket %s with %s", bucket_name, formatted_tags)

+

try:

s3_client = self.get_conn()

-s3_client.put_bucket_tagging(Bucket=bucket_name, Tagging={"TagSet": tag_set})

+s3_client.put_bucket_tagging(Bucket=bucket_name, Tagging={"TagSet": formatted_tags})

except ClientError as e:

self.log.error(e)

raise e

diff --git a/airflow/providers/amazon/aws/hooks/sagemaker.py b/airflow/providers/amazon/aws/hooks/sagemaker.py

index c5aeb3d9ed..4c731f2051 100644

--- a/airflow/providers/amazon/aws/hooks/sagemaker.py

+++ b/airflow/providers/amazon/aws/hooks/sagemaker.py

@@ -35,6 +35,7 @@ from airflow.exceptions import AirflowException

from airflow.providers.amazon.aws.hooks.base_aws import AwsBaseHook

from airflow.providers.amazon.aws.hooks.logs import AwsLogsHook

from airflow.providers.amazon.aws.hooks.s3 import S3Hook

+from airflow.providers.amazon.aws.utils.tags import format_tags

from airflow.utils import timezone

@@ -1100,9 +1101,7 @@ class SageMakerHook(AwsBaseHook):

:return: the ARN of the pipeline execution launched.

"""

-if pipeline_params is None:

-pipeline_params = {}

-

[GitHub] [airflow] o-nikolas merged pull request #28816: introduce a method to convert dictionaries to boto-style key-value lists

o-nikolas merged PR #28816: URL: https://github.com/apache/airflow/pull/28816
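The body of `format_tags` is not part of the excerpt above, so the following is only a sketch of the idea described in the commit title: accept either a plain dictionary or an already formatted list and return the `[{"Key": ..., "Value": ...}]` shape that the tagging call expects. `to_boto_tags` and `build_tag_set` are hypothetical names; the key/value pairing check follows the `put_bucket_tagging` hunk shown in the diff.

```python
from __future__ import annotations


def to_boto_tags(tags: dict[str, str] | list[dict[str, str]] | None) -> list[dict[str, str]]:
    """Hypothetical stand-in for a dict-to-key/value-list converter."""
    if tags is None:
        return []
    if isinstance(tags, dict):
        return [{"Key": key, "Value": value} for key, value in tags.items()]
    # Assume a list is already in the API's format; copy it so the
    # caller's list is not mutated by later appends.
    return list(tags)


def build_tag_set(tag_set=None, key: str | None = None, value: str | None = None):
    """Combine a tag set with an optional extra key/value pair."""
    formatted = to_boto_tags(tag_set)
    if key and value:
        formatted.append({"Key": key, "Value": value})
    elif key or value:
        # Same rule as in the diff: key and value only make sense together.
        raise ValueError(
            "Key and Value must be specified as a pair. "
            f"Only one of the two had a value (key: '{key}', value: '{value}')"
        )
    return formatted


print(build_tag_set({"env": "dev"}, key="team", value="data"))
```

Copying the incoming list is a design choice of this sketch: it avoids the side effect of appending to a list the caller still holds.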

[GitHub] [airflow] mik-laj commented on pull request #29117: GCSTaskHandler may use remote log conn id

mik-laj commented on PR #29117: URL: https://github.com/apache/airflow/pull/29117#issuecomment-1402832503 I don't remember the details of why this caused problems and we decided to remove hook support, but I would check if the secret backends are causing problems here.

[GitHub] [airflow] vandonr-amz commented on a diff in pull request #28869: rewrite polling code for appflow hook

vandonr-amz commented on code in PR #28869:
URL: https://github.com/apache/airflow/pull/28869#discussion_r1086049943

##
airflow/providers/amazon/aws/operators/appflow.py:
##
@@ -93,6 +98,12 @@ def execute(self, context: Context) -> None:
self.connector_type = self._get_connector_type()
if self.flow_update:
self._update_flow()
+# previous code had a wait between update and run without explaining why.
+# since I don't have a way to actually test this behavior,
+# I'm reproducing it out of fear of breaking workflows.
+# It might be unnecessary.

Review Comment:
checked it with the team, and it's true for on-demand flows (see https://docs.aws.amazon.com/appflow/latest/userguide/flow-triggers.html for a description of the different types of flows). I updated the comment accordingly.
Unfortunately, we don't have infra set up to run the appflow system test (because it depends on external sources), so it's a bit hard for me to test it.

[airflow] branch main updated: GCSTaskHandler may use remote log conn id (#29117)

This is an automated email from the ASF dual-hosted git repository.

dstandish pushed a commit to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:

new b4c50dadd3 GCSTaskHandler may use remote log conn id (#29117)

b4c50dadd3 is described below

commit b4c50dadd36d66e4d222c627a61771653767afd6

Author: Daniel Standish <15932138+dstand...@users.noreply.github.com>

AuthorDate: Tue Jan 24 15:25:04 2023 -0800

GCSTaskHandler may use remote log conn id (#29117)

---

.../providers/google/cloud/log/gcs_task_handler.py | 30 +-

.../google/cloud/log/test_gcs_task_handler.py | 25 +-

2 files changed, 41 insertions(+), 14 deletions(-)

diff --git a/airflow/providers/google/cloud/log/gcs_task_handler.py b/airflow/providers/google/cloud/log/gcs_task_handler.py

index 5fbba80798..a264821093 100644

--- a/airflow/providers/google/cloud/log/gcs_task_handler.py

+++ b/airflow/providers/google/cloud/log/gcs_task_handler.py

@@ -24,6 +24,9 @@ from typing import Collection

from google.cloud import storage # type: ignore[attr-defined]

from airflow.compat.functools import cached_property

+from airflow.configuration import conf

+from airflow.exceptions import AirflowNotFoundException

+from airflow.providers.google.cloud.hooks.gcs import GCSHook

from airflow.providers.google.cloud.utils.credentials_provider import get_credentials_and_project_id

from airflow.providers.google.common.consts import CLIENT_INFO

from airflow.utils.log.file_task_handler import FileTaskHandler

@@ -72,7 +75,6 @@ class GCSTaskHandler(FileTaskHandler, LoggingMixin):

super().__init__(base_log_folder, filename_template)

self.remote_base = gcs_log_folder

self.log_relative_path = ""

-self._hook = None

self.closed = False

self.upload_on_close = True

self.gcp_key_path = gcp_key_path

@@ -80,15 +82,29 @@ class GCSTaskHandler(FileTaskHandler, LoggingMixin):

self.scopes = gcp_scopes

self.project_id = project_id

+@cached_property

+def hook(self) -> GCSHook | None:

+"""Returns GCSHook if remote_log_conn_id configured."""

+conn_id = conf.get("logging", "remote_log_conn_id", fallback=None)

+if conn_id:

+try:

+return GCSHook(gcp_conn_id=conn_id)

+except AirflowNotFoundException:

+pass

+return None

+

@cached_property

def client(self) -> storage.Client:

"""Returns GCS Client."""

-credentials, project_id = get_credentials_and_project_id(

-key_path=self.gcp_key_path,

-keyfile_dict=self.gcp_keyfile_dict,

-scopes=self.scopes,

-disable_logging=True,

-)

+if self.hook:

+credentials, project_id = self.hook.get_credentials_and_project_id()

+else:

+credentials, project_id = get_credentials_and_project_id(

+key_path=self.gcp_key_path,

+keyfile_dict=self.gcp_keyfile_dict,

+scopes=self.scopes,

+disable_logging=True,

+)

return storage.Client(

credentials=credentials,

client_info=CLIENT_INFO,

diff --git a/tests/providers/google/cloud/log/test_gcs_task_handler.py b/tests/providers/google/cloud/log/test_gcs_task_handler.py

index 049a627336..b801d1fc05 100644

--- a/tests/providers/google/cloud/log/test_gcs_task_handler.py

+++ b/tests/providers/google/cloud/log/test_gcs_task_handler.py

@@ -21,6 +21,7 @@ import tempfile

from unittest import mock

import pytest

+from pytest import param

from airflow.providers.google.cloud.log.gcs_task_handler import GCSTaskHandler

from airflow.utils.state import TaskInstanceState

@@ -59,15 +60,25 @@ class TestGCSTaskHandler:

)

yield self.gcs_task_handler

-@mock.patch(

-"airflow.providers.google.cloud.log.gcs_task_handler.get_credentials_and_project_id",

-return_value=("TEST_CREDENTIALS", "TEST_PROJECT_ID"),

-)

+@mock.patch("airflow.providers.google.cloud.log.gcs_task_handler.GCSHook")

@mock.patch("google.cloud.storage.Client")

-def test_hook(self, mock_client, mock_creds):

-return_value = self.gcs_task_handler.client

+@mock.patch("airflow.providers.google.cloud.log.gcs_task_handler.get_credentials_and_project_id")

+@pytest.mark.parametrize("conn_id", [param("", id="no-conn"), param("my_gcs_conn", id="with-conn")])

+def test_client_conn_id_behavior(self, mock_get_cred, mock_client, mock_hook, conn_id):

+"""When remote log conn id configured, hook will be used"""

+mock_hook.return_value.get_credentials_and_project_id.return_value = ("test_cred", "test_proj")

+mock_get_cred.return_value = ("test_cred", "test_proj")

+with conf_vars({("logging", "remote_log_conn_id"): conn_id}):

+

[GitHub] [airflow] dstandish merged pull request #29117: GCSTaskHandler may use remote log conn id

dstandish merged PR #29117: URL: https://github.com/apache/airflow/pull/29117
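The pattern in the merged change (use a hook for credentials when a connection id is configured, otherwise fall back to the default lookup) can be sketched without any Airflow or Google imports. `FakeHook`, `default_credentials`, and `LogHandler` below are hypothetical stand-ins, and the connection id is passed to the constructor here instead of being read from configuration.

```python
from __future__ import annotations

from functools import cached_property


class FakeHook:
    """Hypothetical hook that resolves credentials from a connection id."""

    def __init__(self, conn_id: str) -> None:
        self.conn_id = conn_id

    def get_credentials_and_project_id(self) -> tuple[str, str]:
        return (f"creds-from-{self.conn_id}", "hook-project")


def default_credentials() -> tuple[str, str]:
    """Hypothetical fallback, standing in for key-path based lookup."""
    return ("default-creds", "default-project")


class LogHandler:
    def __init__(self, conn_id: str | None) -> None:
        self._conn_id = conn_id

    @cached_property
    def hook(self) -> FakeHook | None:
        # Only build a hook when a connection id is configured.
        return FakeHook(self._conn_id) if self._conn_id else None

    @cached_property
    def credentials(self) -> tuple[str, str]:
        if self.hook:
            return self.hook.get_credentials_and_project_id()
        return default_credentials()


print(LogHandler("my_gcs_conn").credentials)
print(LogHandler("").credentials)
```

Because both members are cached properties, the hook is created lazily on first access and then reused, so a handler with no connection id never constructs one.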

[GitHub] [airflow] Taragolis commented on pull request #29087: Unquarantine receive SIGTERM on Task Runner test (second attempt)

Taragolis commented on PR #29087: URL: https://github.com/apache/airflow/pull/29087#issuecomment-1402820966 Yet another rebase

[GitHub] [airflow] travis-cook-sfdc commented on issue #28870: Support multiple buttons from `operator_extra_links`

travis-cook-sfdc commented on issue #28870: URL: https://github.com/apache/airflow/issues/28870#issuecomment-1402800228 I'm interested in potentially implementing this change, but it's not clear to me exactly what the implementation should look like. Can anyone provide a summary?

[GitHub] [airflow] Taragolis commented on pull request #29142: Decrypt SecureString value obtained by SsmHook

Taragolis commented on PR #29142: URL: https://github.com/apache/airflow/pull/29142#issuecomment-1402786587 Since we can't be sure where it is a password and where it is not, it is better to mask everything. I will add it tomorrow.

[GitHub] [airflow] zachliu closed issue #29138: Auto refreshing (or auto tail) of logs in Web UI is erroneous

zachliu closed issue #29138: Auto refreshing (or auto tail) of logs in Web UI is erroneous URL: https://github.com/apache/airflow/issues/29138

[GitHub] [airflow] zachliu commented on issue #29138: Auto refreshing (or auto tail) of logs in Web UI is erroneous

zachliu commented on issue #29138: URL: https://github.com/apache/airflow/issues/29138#issuecomment-1402776235 oops, didn't reconcile my own derived class with that [PR](https://github.com/apache/airflow/pull/26169) :sweat_smile: i have a `PatchedS3TaskHandler` to always read from local for speed and use s3 just as a backup

[GitHub] [airflow] Taragolis commented on issue #29138: Auto refreshing (or auto tail) of logs in Web UI is erroneous

Taragolis commented on issue #29138: URL: https://github.com/apache/airflow/issues/29138#issuecomment-1402766815 Just wondering, are you using `EcsRunTaskOperator` with fetching of CloudWatch logs into Airflow tasks enabled?

[GitHub] [airflow] ferruzzi commented on pull request #29142: Decrypt SecureString value obtained by SsmHook

ferruzzi commented on PR #29142:
URL: https://github.com/apache/airflow/pull/29142#issuecomment-1402759146

> > Nice catch! IMHO, if we are decoding by default then masking sounds like the right answer to me. I'm not really up to date on best practices when using SecureString though, so I'm happy to defer if someone feels otherwise.
>
> Well there is not easy answer as well as best practices. We do not know what users might store into SSM Parameter Store and how they intend to use it.
>
> If it credentials the answer straightforward, yes we should, like here:
>
> https://github.com/apache/airflow/blob/3b25168c413a8434f8f65efb09aaf949cf7adc3b/airflow/providers/amazon/aws/hooks/base_aws.py#L662-L666
>
> IMHO, In general if you create secure string you do not want to some one who does not have access to KMS keys see value. But we could mask all or nothing, that mean `postgresql+psycopg2://airflow:insecurepassword@postgres/airflow` in logs transform to `***`

It would be ideal if only the password got masked, but I think if a user is setting the parameter as a secure string, it would be better to assume more security than less.
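The two options discussed here (mask the whole value, or mask only the password) can be compared with a short standard-library sketch. `mask_all` and `mask_password` are hypothetical helpers written for this illustration, not Airflow's secrets masker; the password-only variant works only when the stored value happens to parse as a URI with credentials, which is exactly the uncertainty raised in the thread.

```python
from urllib.parse import urlsplit, urlunsplit


def mask_all(value: str) -> str:
    """Hide the entire value, regardless of what it contains."""
    return "***"


def mask_password(uri: str) -> str:
    """Hide only the password part of a URI; other values pass through."""
    parts = urlsplit(uri)
    if parts.password is None:
        # No recognisable password, so there is nothing this helper can hide.
        return uri
    userinfo, _, hostinfo = parts.netloc.rpartition("@")
    user = userinfo.split(":", 1)[0]
    return urlunsplit(parts._replace(netloc=f"{user}:***@{hostinfo}"))


uri = "postgresql+psycopg2://airflow:insecurepassword@postgres/airflow"
print(mask_all(uri))
print(mask_password(uri))
```

Note the failure mode of the password-only approach: a secret that is not a URI (for example a bare token) is returned unmasked, which supports the argument for masking everything.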

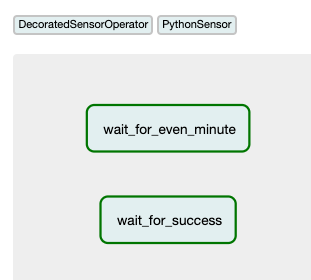

[GitHub] [airflow] BasPH opened a new pull request, #29143: Demonstrate usage of the PythonSensor

BasPH opened a new pull request, #29143:

URL: https://github.com/apache/airflow/pull/29143

Update of the https://airflow.apache.org/docs/apache-airflow/stable/howto/operator/python.html#pythonsensor paragraph. All other paragraphs in the doc also demonstrate the taskflow-style operator, but the PythonSensor paragraph did not. This PR shows both styles and adds a reproducible example.


[GitHub] [airflow] Taragolis commented on pull request #29142: Decrypt SecureString value obtained by SsmHook

Taragolis commented on PR #29142: URL: https://github.com/apache/airflow/pull/29142#issuecomment-1402747883 > Nice catch! IMHO, if we are decoding by default then masking sounds like the right answer to me. I'm not really up to date on best practices when using SecureString though, so I'm happy to defer if someone feels otherwise. Well there is not easy answer as well as best practices. We do not know what users might store into SSM Parameter Store and how they intend to use it. If it credentials the answer straightforward, yes we should, like here: https://github.com/apache/airflow/blob/3b25168c413a8434f8f65efb09aaf949cf7adc3b/airflow/providers/amazon/aws/hooks/base_aws.py#L662-L666 IMHO, In general if you create secure string you do not want to some one who does not have access to KMS keys see value. But we could mask all or nothing, that mean `postgresql+psycopg2://airflow:insecurepassword@postgres/airflow` in logs transform to `***`

[GitHub] [airflow] zachliu commented on issue #29138: Auto refreshing (or auto tail) of logs in Web UI is erroneous

zachliu commented on issue #29138: URL: https://github.com/apache/airflow/issues/29138#issuecomment-1402735638 i guess throttling would just make it refresh less frequent? the `example_sensors` dag has some long-running tasks (> 1min) that can clearly show this behavior no i'm not using remote logging, all logs are read from local

[GitHub] [airflow] Taragolis opened a new pull request, #29142: Decrypt SecureString value obtained by SsmHook

Taragolis opened a new pull request, #29142:

URL: https://github.com/apache/airflow/pull/29142

Right now, if we try to get a SecureString with `SsmHook.get_parameter_value`, we get the encrypted value, which is useless without decryption.

```python

from airflow.providers.amazon.aws.hooks.ssm import SsmHook

hook = SsmHook(aws_conn_id=None, region_name="eu-west-1")

print(hook.get_parameter_value("/airflow/config/boom"))

```

**Without this change**

```console

AQICAHiA4cbp5//uho/.../Mlb3cleB4/7XXjkh

```

**With this change**

```console

postgresql+psycopg2://airflow:insecurepassword@postgres/airflow

```

WDYT, should we also mask value if value has type `SecureString`?
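The PR diff itself is not included in this message, so the following is only an assumption about the mechanism: the SSM `get_parameter` API accepts a `WithDecryption` flag, and requesting decryption is what turns the ciphertext into the plain value. `get_parameter_value` below is a free function written for this sketch, and `StubSsmClient` is a hypothetical in-memory stand-in so the example runs without AWS access.

```python
def get_parameter_value(client, name: str) -> str:
    """Fetch a parameter and ask the service to decrypt SecureString values."""
    response = client.get_parameter(Name=name, WithDecryption=True)
    return response["Parameter"]["Value"]


class StubSsmClient:
    """Hypothetical stand-in for an SSM client, for illustration only."""

    def get_parameter(self, Name, WithDecryption=False):
        # Mimic the service: ciphertext unless decryption is requested.
        value = "plain-secret" if WithDecryption else "AQICAH...ciphertext"
        return {"Parameter": {"Name": Name, "Type": "SecureString", "Value": value}}


client = StubSsmClient()
print(get_parameter_value(client, "/airflow/config/boom"))
```

With a real client the caller also needs permission to use the KMS key that protects the parameter, which ties into the masking question above.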


[GitHub] [airflow] kristopherkane commented on issue #29109: [Google Cloud] DataprocCreateBatchOperator returns incorrect results and does not reattach

kristopherkane commented on issue #29109: URL: https://github.com/apache/airflow/issues/29109#issuecomment-1402672278

cc @MaksYermak for additional review as the original author.

[GitHub] [airflow] loulsb commented on pull request #29116: Add colors in help outputs of Airfow CLI commands #28789

loulsb commented on PR #29116: URL: https://github.com/apache/airflow/pull/29116#issuecomment-1402667103

I don't know if there is a specific tool that you use for performance testing. I ran `time airflow cheat-sheet` a bunch of times without the change and with the change, and I got this:

Without the change:

    real 0m0.381s  user 0m0.351s  sys 0m0.030s
    real 0m0.367s  user 0m0.307s  sys 0m0.060s
    real 0m0.373s  user 0m0.312s  sys 0m0.061s
    real 0m0.369s  user 0m0.329s  sys 0m0.040s
    real 0m0.375s  user 0m0.355s  sys 0m0.020s
    real 0m0.372s  user 0m0.342s  sys 0m0.030s
    real 0m0.373s  user 0m0.363s  sys 0m0.010s
    real 0m0.372s  user 0m0.372s  sys 0m0.000s

With the change:

    real 0m0.391s  user 0m0.370s  sys 0m0.020s
    real 0m0.391s  user 0m0.341s  sys 0m0.050s
    real 0m0.391s  user 0m0.351s  sys 0m0.040s
    real 0m0.392s  user 0m0.362s  sys 0m0.030s
    real 0m0.389s  user 0m0.369s  sys 0m0.020s
    real 0m0.394s  user 0m0.383s  sys 0m0.010s
    real 0m0.399s  user 0m0.379s  sys 0m0.020s
    real 0m0.392s  user 0m0.352s  sys 0m0.041s
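
To make the comparison easier to read, the "real" times reported above can be averaged. This is only a small sketch over the eight runs per variant quoted in the comment; the variable names are illustrative, and eight runs of a sub-second command are too few to treat the result as a rigorous benchmark.

```python
from statistics import mean

# Wall-clock ("real") times in seconds, copied from the runs quoted above.
without_change = [0.381, 0.367, 0.373, 0.369, 0.375, 0.372, 0.373, 0.372]
with_change = [0.391, 0.391, 0.391, 0.392, 0.389, 0.394, 0.399, 0.392]

mean_without = mean(without_change)
mean_with = mean(with_change)

# Absolute slowdown in milliseconds and relative slowdown as a fraction.
delta_ms = (mean_with - mean_without) * 1000
relative = (mean_with - mean_without) / mean_without

print(f"without: {mean_without:.4f}s  with: {mean_with:.4f}s")
print(f"difference: {delta_ms:.1f} ms ({relative:.1%})")
```

On these numbers the change adds roughly 20 ms per invocation, which is about 5% of the baseline.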

[GitHub] [airflow] Taragolis commented on issue #29131: Airflow v2.4.2 - Scheduler fails with BrokenPIPE error (Exiting gracefully upon receiving signal 15)

Taragolis commented on issue #29131: URL: https://github.com/apache/airflow/issues/29131#issuecomment-1402640500

This looks more like deployment troubleshooting than an actual issue. Converting to a discussion.

[GitHub] [airflow] Taragolis closed issue #29131: Airflow v2.4.2 - Scheduler fails with BrokenPIPE error (Exiting gracefully upon receiving signal 15)

Taragolis closed issue #29131: Airflow v2.4.2 - Scheduler fails with BrokenPIPE error (Exiting gracefully upon receiving signal 15) URL: https://github.com/apache/airflow/issues/29131

[GitHub] [airflow] Taragolis commented on issue #29138: Auto refreshing (or auto tail) of logs in Web UI is erroneous

Taragolis commented on issue #29138: URL: https://github.com/apache/airflow/issues/29138#issuecomment-1402638923

This might also be fixed by https://github.com/apache/airflow/pull/28818

Could you provide a simple DAG that reproduces this behaviour? And do you use remote logging?

[GitHub] [airflow] jedcunningham commented on a diff in pull request #29058: Optionally export `airflow db clean` data to CSV files

jedcunningham commented on code in PR #29058:

URL: https://github.com/apache/airflow/pull/29058#discussion_r1085907128

##

airflow/utils/db_cleanup.py:

##

@@ -159,6 +170,14 @@ def _do_delete(*, query, orm_model, skip_archive, session):

logger.debug("delete statement:\n%s", delete.compile())

session.execute(delete)

session.commit()

+if export_to_csv:

+if not output_path.startswith(AIRFLOW_HOME):

+output_path = os.path.join(AIRFLOW_HOME, output_path)

+os.makedirs(output_path, exist_ok=True)

+_to_csv(

+target_table=target_table, file_path=f"{output_path}/{target_table_name}.csv", session=session

+)

+skip_archive = True

Review Comment:

I definitely lean toward having it go to one place.
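
For context on why a single destination matters, the path handling in the diff above can be sketched as a standalone function. This is an illustration only: `resolve_output_path` is a hypothetical name, and `posixpath` is used in place of `os.path` so the behaviour shown does not depend on the platform.

```python
import posixpath


def resolve_output_path(output_path: str, airflow_home: str) -> str:
    """Mirror the path handling in the diff above using POSIX path rules."""
    if not output_path.startswith(airflow_home):
        # For a relative path this nests it under airflow_home. For an absolute
        # path, join() discards airflow_home and returns output_path unchanged.
        output_path = posixpath.join(airflow_home, output_path)
    return output_path


print(resolve_output_path("exports", "/opt/airflow"))  # /opt/airflow/exports
print(resolve_output_path("/opt/airflow/exports", "/opt/airflow"))  # /opt/airflow/exports
print(resolve_output_path("/tmp/exports", "/opt/airflow"))  # /tmp/exports
```

The last call shows that an absolute path outside the home directory is left as-is, so with this logic the export does not necessarily end up in one place.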


[GitHub] [airflow] jedcunningham commented on issue #28779: Built-in ServiceMonitor for PgBouncer and StatsD

jedcunningham commented on issue #28779: URL: https://github.com/apache/airflow/issues/28779#issuecomment-1402634049

Sounds good to me. Does PgBouncer have a prometheus endpoint to point at though, or would this work include adding the community exporter?

[GitHub] [airflow] o-nikolas commented on a diff in pull request #29055: [AIP-51] Executors vending CLI commands

o-nikolas commented on code in PR #29055:

URL: https://github.com/apache/airflow/pull/29055#discussion_r1085901682

##

airflow/cli/cli_parser.py:

##

@@ -17,2165 +17,35 @@

# specific language governing permissions and limitations

# under the License.

"""Command-line interface."""

+

+

from __future__ import annotations

import argparse

-import json

-import os

-import textwrap

-from argparse import Action, ArgumentError, RawTextHelpFormatter

+from argparse import Action, RawTextHelpFormatter

from functools import lru_cache

-from typing import Callable, Iterable, NamedTuple, Union

-

-import lazy_object_proxy

+from typing import Iterable

-from airflow import settings

-from airflow.cli.commands.legacy_commands import check_legacy_command

-from airflow.configuration import conf

+from airflow.cli.cli_config import (

+DAG_CLI_DICT,

+ActionCommand,

+Arg,

+CLICommand,

+DefaultHelpParser,

+GroupCommand,

+core_commands,

+)

from airflow.exceptions import AirflowException

-from airflow.executors.executor_constants import CELERY_EXECUTOR, CELERY_KUBERNETES_EXECUTOR

from airflow.executors.executor_loader import ExecutorLoader

-from airflow.utils.cli import ColorMode

from airflow.utils.helpers import partition

-from airflow.utils.module_loading import import_string

-from airflow.utils.timezone import parse as parsedate

-

-BUILD_DOCS = "BUILDING_AIRFLOW_DOCS" in os.environ

-

-

-def lazy_load_command(import_path: str) -> Callable:

-"""Create a lazy loader for command."""

-_, _, name = import_path.rpartition(".")

-

-def command(*args, **kwargs):

-func = import_string(import_path)

-return func(*args, **kwargs)

-

-command.__name__ = name

-

-return command

-

-

-class DefaultHelpParser(argparse.ArgumentParser):

-"""CustomParser to display help message."""

-

-def _check_value(self, action, value):

-"""Override _check_value and check conditionally added command."""

-if action.dest == "subcommand" and value == "celery":

-executor = conf.get("core", "EXECUTOR")

-if executor not in (CELERY_EXECUTOR, CELERY_KUBERNETES_EXECUTOR):

-executor_cls, _ = ExecutorLoader.import_executor_cls(executor)

-classes = ()

-try:

-from airflow.executors.celery_executor import CeleryExecutor

-

-classes += (CeleryExecutor,)

-except ImportError:

-message = (

-"The celery subcommand requires that you pip install the celery module. "

-"To do it, run: pip install 'apache-airflow[celery]'"

-)

-raise ArgumentError(action, message)

-try:

-from airflow.executors.celery_kubernetes_executor import CeleryKubernetesExecutor

-

-classes += (CeleryKubernetesExecutor,)

-except ImportError:

-pass

-if not issubclass(executor_cls, classes):

-message = (

-f"celery subcommand works only with CeleryExecutor, CeleryKubernetesExecutor and "

-f"executors derived from them, your current executor: {executor}, subclassed from: "

-f'{", ".join([base_cls.__qualname__ for base_cls in executor_cls.__bases__])}'

-)

-raise ArgumentError(action, message)

-if action.dest == "subcommand" and value == "kubernetes":

-try:

-import kubernetes.client # noqa: F401

-except ImportError:

-message = (

-"The kubernetes subcommand requires that you pip install the kubernetes python client. "

-"To do it, run: pip install 'apache-airflow[cncf.kubernetes]'"

-)

-raise ArgumentError(action, message)

-

-if action.choices is not None and value not in action.choices:

-check_legacy_command(action, value)

-

-super()._check_value(action, value)

-

-def error(self, message):

-"""Override error and use print_instead of print_usage."""

-self.print_help()

-self.exit(2, f"\n{self.prog} command error: {message}, see help above.\n")

-

-

-# Used in Arg to enable `None' as a distinct value from "not passed"

-_UNSET = object()

-

-

-class Arg:

-"""Class to keep information about command line argument."""