[GitHub] hellonico opened a new pull request #13624: WIP: Nightly Tests For Clojure

hellonico opened a new pull request #13624: WIP: Nightly Tests For Clojure
URL: https://github.com/apache/incubator-mxnet/pull/13624

## Description ##
This is about running the integration tests during nightly CI.

## Checklist ##
### Essentials ###
Please feel free to remove inapplicable items for your PR.
- [ ] The PR title starts with [MXNET-$JIRA_ID], where $JIRA_ID refers to the relevant [JIRA issue](https://issues.apache.org/jira/projects/MXNET/issues) created (except PRs with tiny changes)
- [ ] Changes are complete (i.e. I finished coding on this PR)
- [ ] All changes have test coverage:
  - Unit tests are added for small changes to verify correctness (e.g. adding a new operator)
  - Nightly tests are added for complicated/long-running ones (e.g. changing distributed kvstore)
  - Build tests will be added for build configuration changes (e.g. adding a new build option with NCCL)
- [ ] Code is well-documented:
  - For user-facing API changes, API doc string has been updated.
  - For new C++ functions in header files, their functionalities and arguments are documented.
  - For new examples, README.md is added to explain what the example does, the source of the dataset, expected performance on test set and reference to the original paper if applicable
  - Check the API doc at http://mxnet-ci-doc.s3-accelerate.dualstack.amazonaws.com/PR-$PR_ID/$BUILD_ID/index.html
- [ ] To the best of my knowledge, examples are either not affected by this change, or have been fixed to be compatible with this change

### Changes ###
- [ ] Feature1, tests, (and when applicable, API doc)
- [ ] Feature2, tests, (and when applicable, API doc)

## Comments ##
- If this change is a backward incompatible change, why must this change be made.
- Interesting edge cases to note here

This is an automated message from the Apache Git Service.
To respond to the message, please log on GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

With regards,
Apache Git Services

[GitHub] MyYaYa commented on issue #13001: Feature request: numpy.cumsum

MyYaYa commented on issue #13001: Feature request: numpy.cumsum URL: https://github.com/apache/incubator-mxnet/issues/13001#issuecomment-446489801 Yeah, if ndarray supports cumsum operation, some custom metrics(i.e. mAP) will benefit from that.
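
To illustrate why a cumulative sum helps with a metric such as mAP, here is a minimal sketch in plain Python. It is not MXNet code: `itertools.accumulate` stands in for the requested `cumsum` operator, the function name `average_precision` is hypothetical, and the metric is simplified by assuming every positive appears among the scored detections.

```python
from itertools import accumulate


def average_precision(scores, labels):
    """Simplified AP for one class.

    scores: confidence per detection; labels: 1 for a true positive, 0 otherwise.
    Assumption: all positives are present among the detections.
    """
    # Rank detections by descending confidence.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits = [labels[i] for i in order]
    total_pos = sum(hits)
    if total_pos == 0:
        return 0.0
    # The cumulative sum of hits is the true-positive count at each rank.
    tp_cum = list(accumulate(hits))
    # Precision at rank k (1-based) is tp_cum[k-1] / k; keep it only at positive ranks.
    precisions = [tp / (k + 1) for k, (tp, hit) in enumerate(zip(tp_cum, hits)) if hit]
    return sum(precisions) / total_pos
```

For scores `[0.9, 0.8, 0.7, 0.6]` with labels `[1, 0, 1, 0]` the precisions at the two positive ranks are 1/1 and 2/3, so the function returns 5/6. Averaging this value over classes would give a mean AP.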

[incubator-mxnet-site] branch asf-site updated: Bump the publish timestamp.

This is an automated email from the ASF dual-hosted git repository.

zhasheng pushed a commit to branch asf-site
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet-site.git

The following commit(s) were added to refs/heads/asf-site by this push:
     new 8f01f4b  Bump the publish timestamp.
8f01f4b is described below

commit 8f01f4be39e40f18e32ba8d35869675276061412
Author: mxnet-ci
AuthorDate: Wed Dec 12 06:59:42 2018 +

    Bump the publish timestamp.
---
 date.txt | 1 +
 1 file changed, 1 insertion(+)

diff --git a/date.txt b/date.txt
new file mode 100644
index 000..8e80d29
--- /dev/null
+++ b/date.txt
@@ -0,0 +1 @@
+Wed Dec 12 06:59:42 UTC 2018

[GitHub] chinakook commented on issue #8335: Performance of MXNet on Windows is lower than that on Linux by 15%-20%

chinakook commented on issue #8335: Performance of MXNet on Windows is lower than that on Linux by 15%-20% URL: https://github.com/apache/incubator-mxnet/issues/8335#issuecomment-446478064 Thx, I will try later.

[GitHub] zheng-da commented on a change in pull request #13419: [MXNET-1233] Enable dynamic shape in CachedOp

zheng-da commented on a change in pull request #13419: [MXNET-1233] Enable dynamic shape in CachedOp
URL: https://github.com/apache/incubator-mxnet/pull/13419#discussion_r240883314

##
File path: src/imperative/cached_op.cc
##
@@ -262,6 +262,29 @@ std::vector CachedOp::Gradient(
   return ret;
 }
+bool CachedOp::CheckDynamicShapeExists(const Context& default_ctx,
+                                       const std::vector& inputs) {

Review comment:

I wonder if it's better to check for operators with dynamic shape directly. Right now, the code assumes that if a computation graph can't infer shape, it contains dynamic-shape operators. It would be better to write a check that works for both CachedOp and the symbol executor. Whether a graph contains dynamic shape is a property of the computation graph itself, and we can easily check it by traversing all operators in the graph.
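
The traversal suggested in this comment can be sketched in a few lines of Python. This is only an illustration on a toy graph, not the NNVM API: the `Node` class, the `DYNAMIC_SHAPE_OPS` set, and the operator names in it are hypothetical stand-ins for however MXNet would mark a dynamic-shape operator.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical registry of operators whose output shape depends on input values.
DYNAMIC_SHAPE_OPS = {"boolean_mask"}


@dataclass
class Node:
    name: str
    op: Optional[str] = None            # None marks a variable (graph input)
    inputs: List["Node"] = field(default_factory=list)


def contains_dynamic_shape(outputs):
    """Depth-first walk from the output nodes; True if any operator is dynamic-shape."""
    seen = set()
    stack = list(outputs)
    while stack:
        node = stack.pop()
        if id(node) in seen:
            continue
        seen.add(id(node))
        if node.op in DYNAMIC_SHAPE_OPS:
            return True
        stack.extend(node.inputs)
    return False
```

Because the check depends only on the graph structure, the same function could in principle be called from either execution path, which is the point the review comment makes.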


[GitHub] zheng-da commented on a change in pull request #13419: [MXNET-1233] Enable dynamic shape in CachedOp

zheng-da commented on a change in pull request #13419: [MXNET-1233] Enable dynamic shape in CachedOp
URL: https://github.com/apache/incubator-mxnet/pull/13419#discussion_r240890932

##
File path: src/imperative/imperative_utils.cc
##
@@ -22,6 +22,114 @@
 namespace mxnet {
 namespace imperative {
+
+void NaiveRunGraph(
+    const bool retain_graph,
+    const Context& default_ctx,
+    const nnvm::IndexedGraph& idx,
+    const std::vector arrays,
+    size_t node_start, size_t node_end,
+    std::vector&& array_reqs,
+    std::vector&& ref_count,
+    std::vector *p_states,
+    const DispatchModeVector &dispatch_modes,
+    bool recording) {
+  using namespace nnvm;
+  using namespace imperative;
+  static auto& createop = nnvm::Op::GetAttr("FCreateOpState");
+  static auto& is_layer_backward = Op::GetAttr("TIsLayerOpBackward");
+  static const auto bwd_cached_op = Op::Get("_backward_CachedOp");
+
+  const auto imp = Imperative::Get();
+
+  std::vector& states = *p_states;
+
+  for (size_t i = node_start; i < node_end; ++i) {
+    const nnvm::IndexedGraph::Node& node = idx[i];
+    if (node.source->op() == nullptr) {
+      continue;
+    }
+    size_t num_outputs = node.source->num_outputs();
+    // construct `ndinputs`
+    std::vector ndinputs;
+    ndinputs.reserve(node.inputs.size());
+    for (const auto& j : node.inputs) {
+      ndinputs.emplace_back(arrays[idx.entry_id(j)]);
+      CHECK(!ndinputs.back()->is_none()) << idx[j.node_id].source->attrs.name << " " << j.index;
+    }
+    // construct `ndoutputs` and `req`
+    std::vector ndoutputs;
+    ndoutputs.reserve(num_outputs);
+    for (size_t j = 0; j < num_outputs; ++j) {
+      size_t eid = idx.entry_id(i, j);
+      ndoutputs.emplace_back(arrays[eid]);
+    }
+    // other auxiliary data
+    Context ctx = GetContext(node.source->attrs, ndinputs, ndoutputs, default_ctx);
+    auto invoke = [&](const OpStatePtr &state) {
+      DispatchMode dispatch_mode = DispatchMode::kUndefined;
+      SetShapeType(ctx, node.source->attrs, ndinputs, ndoutputs, &dispatch_mode);
+      std::vector req;
+      SetWriteInplaceReq(ndinputs, ndoutputs, &req);
+      imp->InvokeOp(ctx, node.source->attrs, ndinputs, ndoutputs, req, dispatch_mode, state);
+      for (size_t i = 0; i < ndoutputs.size(); i++) {
+        if (ndoutputs[i]->shape().ndim() == 0) {
+          ndoutputs[i]->WaitToRead();
+          ndoutputs[i]->SetShapeFromChunk();
+        }
+      }
+      if (recording) {
+        imp->RecordOp(NodeAttrs(node.source->attrs), ndinputs, ndoutputs, state);
+      }
+    };
+    if (node.source->op() == bwd_cached_op) {
+      // case 1: backward cached op
+      std::vector req;
+      req.reserve(num_outputs);
+      for (size_t j = 0; j < num_outputs; ++j) {
+        size_t eid = idx.entry_id(i, j);
+        req.push_back(array_reqs[eid]);
+        CHECK(array_reqs[eid] == kNullOp || !ndoutputs.back()->is_none());
+      }
+      const auto& cached_op = dmlc::get(node.source->attrs.parsed);
+      nnvm::Node* fwd_node = node.source->control_deps[0].get();
+      auto fwd_node_id = idx.node_id(fwd_node);
+      cached_op->Backward(retain_graph, states[fwd_node_id], ndinputs, req, ndoutputs);
+    } else if (createop.count(node.source->op())) {
+      // case 2: node is in createop

Review comment:

i think this is to handle stateful operators


[GitHub] zheng-da commented on a change in pull request #13419: [MXNET-1233] Enable dynamic shape in CachedOp

zheng-da commented on a change in pull request #13419: [MXNET-1233] Enable dynamic shape in CachedOp
URL: https://github.com/apache/incubator-mxnet/pull/13419#discussion_r240889937

##
File path: src/imperative/cached_op.cc
##
@@ -834,6 +861,61 @@ OpStatePtr CachedOp::DynamicForward(
   return op_state;
 }
+OpStatePtr CachedOp::NaiveForward(
+    const Context& default_ctx,
+    const std::vector& inputs,
+    const std::vector& outputs) {
+  using namespace nnvm;
+  using namespace imperative;
+  // Initialize
+  bool recording = Imperative::Get()->is_recording();
+  auto op_state = OpStatePtr::Create();
+  auto& runtime = op_state.get_state();
+  {
+    auto state_ptr = GetCachedOpState(default_ctx);
+    auto& state = state_ptr.get_state();
+    std::lock_guard lock(state.mutex);
+    SetForwardGraph(, recording, inputs);
+    runtime.info.fwd_graph = state.info.fwd_graph;
+  }
+  // build the indexed graph
+  nnvm::Graph& g = runtime.info.fwd_graph;
+  const auto& idx = g.indexed_graph();
+  const size_t num_inputs = idx.input_nodes().size();
+  const size_t num_entries = idx.num_node_entries();
+  std::vector ref_count = g.GetAttr >(
+      recording ? "full_ref_count" : "forward_ref_count");
+  // construct `arrays`
+  runtime.buff.resize(num_entries);
+  std::vector arrays;
+  arrays.reserve(num_entries);
+  for (auto& item : runtime.buff) {
+    arrays.push_back(&item);
+  }

Review comment:

i wonder if we should buffer arrays from the previous run?


[GitHub] zheng-da commented on a change in pull request #13419: [MXNET-1233] Enable dynamic shape in CachedOp

zheng-da commented on a change in pull request #13419: [MXNET-1233] Enable dynamic shape in CachedOp
URL: https://github.com/apache/incubator-mxnet/pull/13419#discussion_r240890307

##
File path: src/imperative/imperative_utils.cc
##
@@ -22,6 +22,114 @@
 namespace mxnet {
 namespace imperative {
+
+void NaiveRunGraph(
+    const bool retain_graph,
+    const Context& default_ctx,
+    const nnvm::IndexedGraph& idx,
+    const std::vector arrays,
+    size_t node_start, size_t node_end,
+    std::vector&& array_reqs,
+    std::vector&& ref_count,
+    std::vector *p_states,
+    const DispatchModeVector &dispatch_modes,
+    bool recording) {
+  using namespace nnvm;
+  using namespace imperative;
+  static auto& createop = nnvm::Op::GetAttr("FCreateOpState");
+  static auto& is_layer_backward = Op::GetAttr("TIsLayerOpBackward");
+  static const auto bwd_cached_op = Op::Get("_backward_CachedOp");
+
+  const auto imp = Imperative::Get();
+
+  std::vector& states = *p_states;
+
+  for (size_t i = node_start; i < node_end; ++i) {
+    const nnvm::IndexedGraph::Node& node = idx[i];
+    if (node.source->op() == nullptr) {
+      continue;
+    }
+    size_t num_outputs = node.source->num_outputs();
+    // construct `ndinputs`
+    std::vector ndinputs;
+    ndinputs.reserve(node.inputs.size());
+    for (const auto& j : node.inputs) {
+      ndinputs.emplace_back(arrays[idx.entry_id(j)]);
+      CHECK(!ndinputs.back()->is_none()) << idx[j.node_id].source->attrs.name << " " << j.index;
+    }
+    // construct `ndoutputs` and `req`
+    std::vector ndoutputs;
+    ndoutputs.reserve(num_outputs);
+    for (size_t j = 0; j < num_outputs; ++j) {
+      size_t eid = idx.entry_id(i, j);
+      ndoutputs.emplace_back(arrays[eid]);
+    }
+    // other auxiliary data
+    Context ctx = GetContext(node.source->attrs, ndinputs, ndoutputs, default_ctx);
+    auto invoke = [&](const OpStatePtr &state) {
+      DispatchMode dispatch_mode = DispatchMode::kUndefined;
+      SetShapeType(ctx, node.source->attrs, ndinputs, ndoutputs, &dispatch_mode);

Review comment:

do we still infer shape here?


[GitHub] TaoLv commented on a change in pull request #13150: support mkl log when dtype is fp32 or fp64

TaoLv commented on a change in pull request #13150: support mkl log when dtype is fp32 or fp64
URL: https://github.com/apache/incubator-mxnet/pull/13150#discussion_r240885436

##
File path: src/operator/tensor/elemwise_unary_op.h
##
@@ -348,6 +352,42 @@ class UnaryOp : public OpBase {
       LogUnimplementedOp(attrs, ctx, inputs, req, outputs);
     }
   }
+
+#if MSHADOW_USE_MKL == 1
+  static inline void MKLLog(MKL_INT size, const float* pIn, float* pOut) {
+    vsLn(size, pIn, pOut);
+  }
+
+  static inline void MKLLog(MKL_INT size, const double* pIn, double* pOut) {
+    vdLn(size, pIn, pOut);
+  }
+#endif
+
+  template
+  static void LogCompute(const nnvm::NodeAttrs& attrs,
+                         const OpContext& ctx,
+                         const std::vector& inputs,
+                         const std::vector& req,
+                         const std::vector& outputs) {
+    if (req[0] == kNullOp) return;
+    // if defined MSHADOW_USE_MKL then call mkl log when req is KWriteTo, type_flag is
+    // mshadow::kFloat32 or mshadow::kFloat64 and data size less than or equal MKL_INT_MAX
+#if MSHADOW_USE_MKL == 1
+    auto type_flag = inputs[0].type_flag_;
+    const size_t MKL_INT_MAX = (sizeof(MKL_INT) == sizeof(int)) ? INT_MAX : LLONG_MAX;
+    size_t input_size = inputs[0].Size();
+    if (req[0] == kWriteTo && (type_flag == mshadow::kFloat32
+      || type_flag == mshadow::kFloat64) && input_size <= MKL_INT_MAX) {

Review comment:

fix indent.
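
For readers skimming the hunk, the dispatch guard it introduces can be restated as a small Python sketch. This mirrors only the condition visible in the quoted diff; the function name `can_use_mkl_log`, the string constants, and the `mkl_int_bytes` parameter are illustrative assumptions, and a 4-byte `int` is assumed for the 32-bit case.

```python
INT_MAX = 2**31 - 1      # largest value of a 32-bit MKL_INT (assumes 4-byte int)
LLONG_MAX = 2**63 - 1    # largest value of a 64-bit MKL_INT


def can_use_mkl_log(req, dtype, size, mkl_int_bytes=4):
    """Return True when the quoted guard would route the log op to MKL.

    req, dtype: illustrative string stand-ins for kWriteTo and the mshadow type flags.
    """
    mkl_int_max = INT_MAX if mkl_int_bytes == 4 else LLONG_MAX
    return (
        req == "write_to"
        and dtype in ("float32", "float64")
        and size <= mkl_int_max
    )
```

Any other combination (a different write request, another dtype, or an input larger than `MKL_INT` can index) would fall through to the existing non-MKL path.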


[GitHub] TaoLv commented on a change in pull request #13150: support mkl log when dtype is fp32 or fp64

TaoLv commented on a change in pull request #13150: support mkl log when dtype is fp32 or fp64
URL: https://github.com/apache/incubator-mxnet/pull/13150#discussion_r240885471

##
File path: src/operator/tensor/elemwise_unary_op.h
##
@@ -348,6 +352,42 @@ class UnaryOp : public OpBase {
       LogUnimplementedOp(attrs, ctx, inputs, req, outputs);
     }
   }
+
+#if MSHADOW_USE_MKL == 1
+  static inline void MKLLog(MKL_INT size, const float* pIn, float* pOut) {
+    vsLn(size, pIn, pOut);
+  }
+
+  static inline void MKLLog(MKL_INT size, const double* pIn, double* pOut) {
+    vdLn(size, pIn, pOut);
+  }
+#endif
+
+  template
+  static void LogCompute(const nnvm::NodeAttrs& attrs,
+                         const OpContext& ctx,
+                         const std::vector& inputs,
+                         const std::vector& req,
+                         const std::vector& outputs) {
+    if (req[0] == kNullOp) return;
+    // if defined MSHADOW_USE_MKL then call mkl log when req is KWriteTo, type_flag is
+    // mshadow::kFloat32 or mshadow::kFloat64 and data size less than or equal MKL_INT_MAX
+#if MSHADOW_USE_MKL == 1
+    auto type_flag = inputs[0].type_flag_;
+    const size_t MKL_INT_MAX = (sizeof(MKL_INT) == sizeof(int)) ? INT_MAX : LLONG_MAX;
+    size_t input_size = inputs[0].Size();
+    if (req[0] == kWriteTo && (type_flag == mshadow::kFloat32
+      || type_flag == mshadow::kFloat64) && input_size <= MKL_INT_MAX) {
+      MSHADOW_SGL_DBL_TYPE_SWITCH(type_flag, DType, {
+        MKLLog(input_size, inputs[0].dptr(), outputs[0].dptr());
+      })

Review comment:

need `;` here.


[GitHub] TaoLv commented on a change in pull request #13150: support mkl log when dtype is fp32 or fp64

TaoLv commented on a change in pull request #13150: support mkl log when dtype is fp32 or fp64
URL: https://github.com/apache/incubator-mxnet/pull/13150#discussion_r240885737

##
File path: src/operator/tensor/elemwise_unary_op.h
##
@@ -34,6 +34,10 @@
 #include "../mxnet_op.h"
 #include "../elemwise_op_common.h"
 #include "../../ndarray/ndarray_function.h"
+#if MSHADOW_USE_MKL == 1
+#include "mkl.h"
+#endif
+#include

Review comment:

need space before `<` and put this include before `#include "./cast_storage-inl.h"`

[GitHub] pengzhao-intel commented on a change in pull request #13599: fallback to dense version for grad(reshape), grad(expand_dims)

pengzhao-intel commented on a change in pull request #13599: fallback to dense version for grad(reshape), grad(expand_dims)
URL: https://github.com/apache/incubator-mxnet/pull/13599#discussion_r240881956

##
File path: src/operator/tensor/elemwise_unary_op_basic.cc
##
@@ -236,6 +236,27 @@ NNVM_REGISTER_OP(_backward_copy)
     return std::vector{true};
   });
+NNVM_REGISTER_OP(_backward_reshape)
+.set_num_inputs(1)
+.set_num_outputs(1)
+.set_attr("TIsBackward", true)
+.set_attr("FInplaceOption",
+  [](const NodeAttrs& attrs){
+    return std::vector >{{0, 0}};
+  })
+.set_attr("FInferStorageType", ElemwiseStorageType<1, 1, false, false, false>)
+.set_attr("FCompute", UnaryOp::IdentityCompute)
+#if MXNET_USE_MKLDNN == 1
+.set_attr("TIsMKLDNN", true)
+.set_attr("FResourceRequest", [](const NodeAttrs& n) {
+  return std::vector{ResourceRequest::kTempSpace};
+})
+#endif

Review comment:

FYI, https://github.com/apache/incubator-mxnet/pull/12980 enables the forward (FW) pass of the MKL-DNN-supported reshape, but the backward (BW) pass is still WIP. @huangzhiyuan


[GitHub] pengzhao-intel edited a comment on issue #13602: Fix for import mxnet taking long time if multiple process launched

pengzhao-intel edited a comment on issue #13602: Fix for import mxnet taking long time if multiple process launched URL: https://github.com/apache/incubator-mxnet/pull/13602#issuecomment-446459523 Based on 10560's [comment](https://github.com/apache/incubator-mxnet/issues/10560#issuecomment-381514559), "It sometimes block executing in Ubuntu and always block executing in Windows" and several related issues, including import hang, are reported. Could anyone help verify the functionality of this feature? @mseth10 @azai91 @lupesko Maybe set it off by default. Any idea? @cjolivier01 could you provide more backgrounds and how auto-tuning works?

[GitHub] zheng-da commented on issue #13599: fallback to dense version for grad(reshape), grad(expand_dims)

zheng-da commented on issue #13599: fallback to dense version for grad(reshape), grad(expand_dims) URL: https://github.com/apache/incubator-mxnet/pull/13599#issuecomment-446459824 Otherwise, it looks good to me. BTW, I don't think it has anything to do with sparse.

[GitHub] pengzhao-intel commented on issue #13602: Fix for import mxnet taking long time if multiple process launched

pengzhao-intel commented on issue #13602: Fix for import mxnet taking long time if multiple process launched URL: https://github.com/apache/incubator-mxnet/pull/13602#issuecomment-446459523 Based on 10560's [comment](https://github.com/apache/incubator-mxnet/issues/10560#issuecomment-381514559), "It sometimes block executing in Ubuntu and always block executing in Windows" and several related issues, including import hang, are reported. Does anyone can help verify the funtionality of this feature? Maybe set it off by default. Any idea?

[GitHub] zheng-da commented on a change in pull request #13599: fallback to dense version for grad(reshape), grad(expand_dims)

zheng-da commented on a change in pull request #13599: fallback to dense version for grad(reshape), grad(expand_dims)
URL: https://github.com/apache/incubator-mxnet/pull/13599#discussion_r240880048

##
File path: src/operator/tensor/elemwise_unary_op_basic.cc
##
@@ -236,6 +236,27 @@ NNVM_REGISTER_OP(_backward_copy)
     return std::vector{true};
   });
+NNVM_REGISTER_OP(_backward_reshape)
+.set_num_inputs(1)
+.set_num_outputs(1)
+.set_attr("TIsBackward", true)
+.set_attr("FInplaceOption",
+  [](const NodeAttrs& attrs){
+    return std::vector >{{0, 0}};
+  })
+.set_attr("FInferStorageType", ElemwiseStorageType<1, 1, false, false, false>)
+.set_attr("FCompute", UnaryOp::IdentityCompute)
+#if MXNET_USE_MKLDNN == 1
+.set_attr("TIsMKLDNN", true)
+.set_attr("FResourceRequest", [](const NodeAttrs& n) {
+  return std::vector{ResourceRequest::kTempSpace};
+})
+#endif

Review comment:

does mkldnn support reshape?

if you want to optimize for mkldnn, you should add FComputeEx


[GitHub] pengzhao-intel commented on issue #12203: flaky test: test_operator_gpu.test_depthwise_convolution

pengzhao-intel commented on issue #12203: flaky test: test_operator_gpu.test_depthwise_convolution URL: https://github.com/apache/incubator-mxnet/issues/12203#issuecomment-446457845 @juliusshufan could you help take a look for this test case?

[GitHub] StatML commented on issue #11868: nnpack_fully_connected-inl.h:45:55: error: expected template-name before ‘<’ token > class NNPACKFullyConnectedOp : public FullyConnectedOp { >

StatML commented on issue #11868: nnpack_fully_connected-inl.h:45:55: error: expected template-name before ‘<’ token > class NNPACKFullyConnectedOp : public FullyConnectedOp { > ^
URL: https://github.com/apache/incubator-mxnet/issues/11868#issuecomment-446457593

I just tried on Ubuntu 18.04.1, this issue is still not fixed... :(


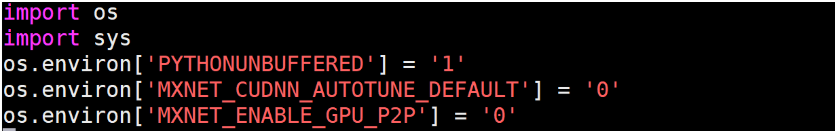

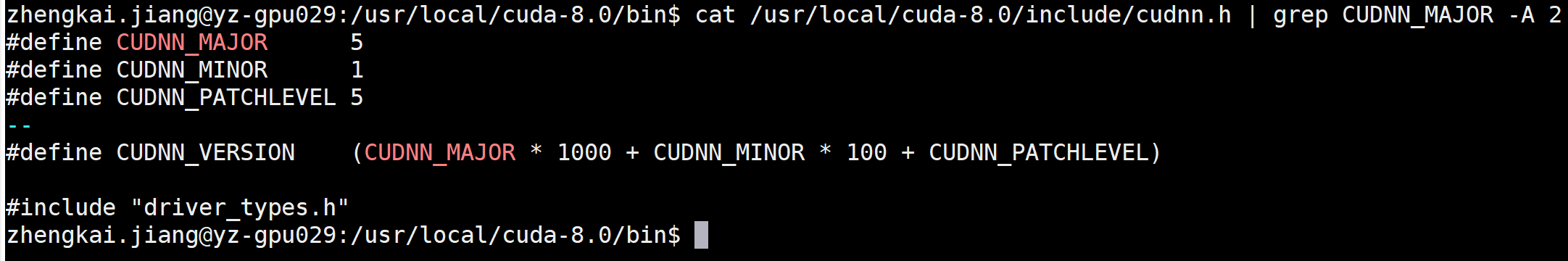

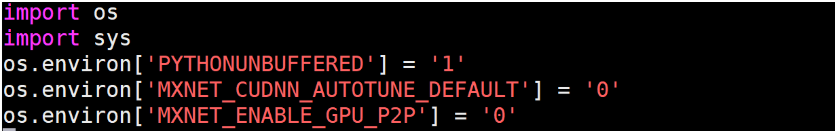

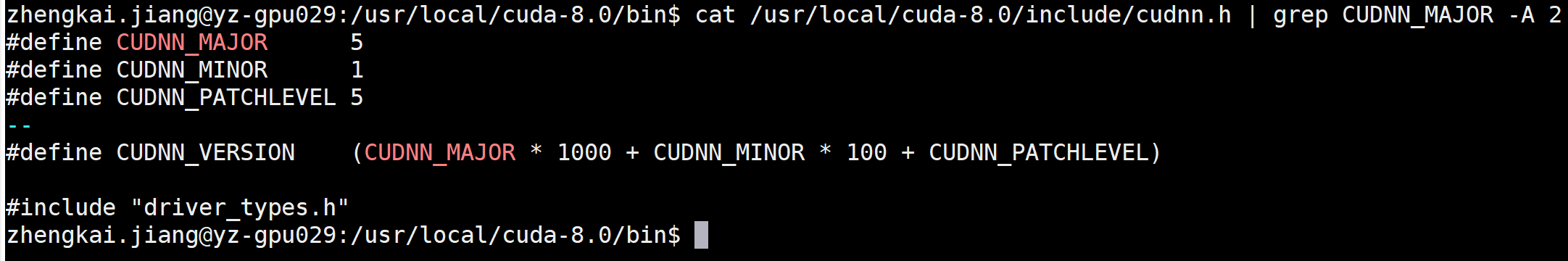

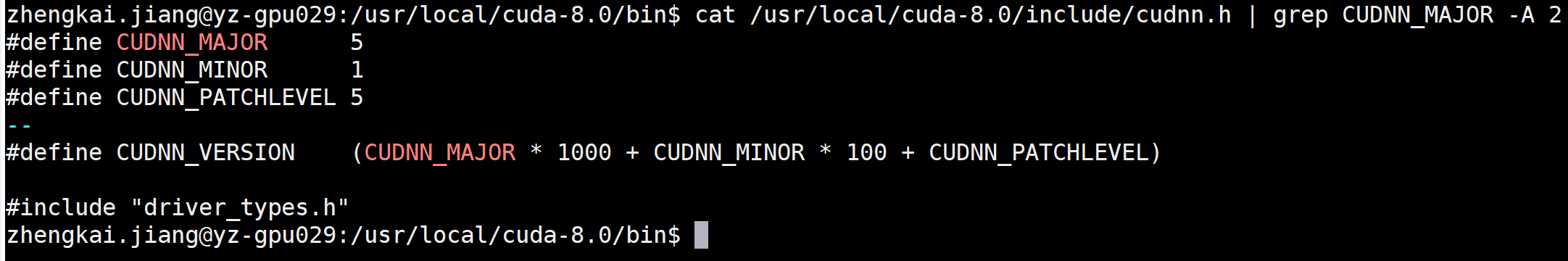

[GitHub] jiangzhengkai removed a comment on issue #13623: This convolution is not supported by cudnn

jiangzhengkai removed a comment on issue #13623: This convolution is not supported by cudnn URL: https://github.com/apache/incubator-mxnet/issues/13623#issuecomment-446449640 Hi, I'm using MXNet version 1.5.0. The log "This convolution is not supported by cudnn" is really uncomfortable. How can I suppress the warning? I also tried this; however, it does not help. CUDA version is 8.0. Here is the cuDNN version. Does version 1.5.0 need a higher version of cuDNN? Thanks!

[GitHub] jiangzhengkai commented on issue #13623: This convolution is not supported by cudnn

jiangzhengkai commented on issue #13623: This convolution is not supported by cudnn URL: https://github.com/apache/incubator-mxnet/issues/13623#issuecomment-446449640 Hi, I'm using MXNet version 1.5.0. The log "This convolution is not supported by cudnn" is really uncomfortable. How can I suppress the warning? I also tried this; however, it does not help. CUDA version is 8.0. Here is the cuDNN version. Does version 1.5.0 need a higher version of cuDNN? Thanks!

[GitHub] jiangzhengkai opened a new issue #13623: This convolution is not supported by cudnn

jiangzhengkai opened a new issue #13623: This convolution is not supported by cudnn URL: https://github.com/apache/incubator-mxnet/issues/13623 Hi, I'm using MXNet version 1.5.0. The log "This convolution is not supported by cudnn" is really uncomfortable. How can I suppress the warning? I also tried this; however, it does not help. CUDA version is 8.0. Here is the cuDNN version. Does version 1.5.0 need a higher version of cuDNN? Thanks!

[GitHub] xinyu-intel opened a new pull request #13622: [WIP]Fix SSD accuracy variance

xinyu-intel opened a new pull request #13622: [WIP]Fix SSD accuracy variance
URL: https://github.com/apache/incubator-mxnet/pull/13622

## Description ##
This PR fixes an OpenMP (omp) bug in `multibox_detection` to avoid accuracy variance in the SSD topology.

## Checklist ##
### Essentials ###
Please feel free to remove inapplicable items for your PR.
- [ ] The PR title starts with [MXNET-$JIRA_ID], where $JIRA_ID refers to the relevant [JIRA issue](https://issues.apache.org/jira/projects/MXNET/issues) created (except PRs with tiny changes)
- [ ] Changes are complete (i.e. I finished coding on this PR)
- [ ] All changes have test coverage:
  - Unit tests are added for small changes to verify correctness (e.g. adding a new operator)
  - Nightly tests are added for complicated/long-running ones (e.g. changing distributed kvstore)
  - Build tests will be added for build configuration changes (e.g. adding a new build option with NCCL)
- [ ] Code is well-documented:
  - For user-facing API changes, API doc string has been updated.
  - For new C++ functions in header files, their functionalities and arguments are documented.
  - For new examples, README.md is added to explain what the example does, the source of the dataset, expected performance on test set and reference to the original paper if applicable
  - Check the API doc at http://mxnet-ci-doc.s3-accelerate.dualstack.amazonaws.com/PR-$PR_ID/$BUILD_ID/index.html
- [ ] To the best of my knowledge, examples are either not affected by this change, or have been fixed to be compatible with this change

### Changes ###
- [ ] Feature1, tests, (and when applicable, API doc)
- [ ] Feature2, tests, (and when applicable, API doc)

## Comments ##
- If this change is a backward incompatible change, why must this change be made.
- Interesting edge cases to note here

@pengzhao-intel @TaoLv

[GitHub] TaoLv commented on issue #13150: support mkl log when dtype is fp32 or fp64

TaoLv commented on issue #13150: support mkl log when dtype is fp32 or fp64 URL: https://github.com/apache/incubator-mxnet/pull/13150#issuecomment-446430758 @XiaotaoChen since #13607 is merged, please rebase code and retrigger CI. Make sure that unit test for log operator can correctly run into MKL BLAS.

[GitHub] ifeherva commented on issue #3118: Gradient reversal layer without custom operator

ifeherva commented on issue #3118: Gradient reversal layer without custom operator URL: https://github.com/apache/incubator-mxnet/issues/3118#issuecomment-446429492 I implemented this as custom operator currently, however I think this would be of interest to others. Would it make sense to implement it in Cpp as a standard or contrib operator? If yes I can PR it.

[GitHub] TaoLv commented on issue #12922: Support Quantized Fully Connected by INT8 GEMM

TaoLv commented on issue #12922: Support Quantized Fully Connected by INT8 GEMM URL: https://github.com/apache/incubator-mxnet/pull/12922#issuecomment-446427791 Test case need be refined to make it can run into MKL BLAS.

[incubator-mxnet] branch master updated: Fix warning in waitall doc (#13618)

This is an automated email from the ASF dual-hosted git repository.

nswamy pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:
     new 9ce7eab  Fix warning in waitall doc (#13618)
9ce7eab is described below

commit 9ce7eabcbc9575128240f71f79f9f7cce1a19aa7
Author: Anirudh Subramanian
AuthorDate: Tue Dec 11 17:22:02 2018 -0800

    Fix warning in waitall doc (#13618)
---
 python/mxnet/ndarray/ndarray.py | 10 ++
 1 file changed, 6 insertions(+), 4 deletions(-)

diff --git a/python/mxnet/ndarray/ndarray.py b/python/mxnet/ndarray/ndarray.py
index 4e6d0cd..9a62620 100644
--- a/python/mxnet/ndarray/ndarray.py
+++ b/python/mxnet/ndarray/ndarray.py
@@ -157,11 +157,13 @@ def waitall():
 """Wait for all async operations to finish in MXNet.
 This function is used for benchmarking only.
+
 .. warning::
-If your code has exceptions, `waitall` can cause silent failures.
-For this reason you should avoid `waitall` in your code.
-Use it only if you are confident that your code is error free.
-Then make sure you call `wait_to_read` on all outputs after `waitall`.
+
+ If your code has exceptions, `waitall` can cause silent failures.
+ For this reason you should avoid `waitall` in your code.
+ Use it only if you are confident that your code is error free.
+ Then make sure you call `wait_to_read` on all outputs after `waitall`.
 """
 check_call(_LIB.MXNDArrayWaitAll())

[GitHub] nswamy closed pull request #13618: Fix warning in waitall doc

nswamy closed pull request #13618: Fix warning in waitall doc URL: https://github.com/apache/incubator-mxnet/pull/13618 This is a PR merged from a forked repository. As GitHub hides the original diff on merge, it is displayed below for the sake of provenance: As this is a foreign pull request (from a fork), the diff is supplied below (as it won't show otherwise due to GitHub magic): diff --git a/python/mxnet/ndarray/ndarray.py b/python/mxnet/ndarray/ndarray.py index 4e6d0cdc929..9a62620da85 100644 --- a/python/mxnet/ndarray/ndarray.py +++ b/python/mxnet/ndarray/ndarray.py @@ -157,11 +157,13 @@ def waitall(): """Wait for all async operations to finish in MXNet. This function is used for benchmarking only. + .. warning:: -If your code has exceptions, `waitall` can cause silent failures. -For this reason you should avoid `waitall` in your code. -Use it only if you are confident that your code is error free. -Then make sure you call `wait_to_read` on all outputs after `waitall`. + + If your code has exceptions, `waitall` can cause silent failures. + For this reason you should avoid `waitall` in your code. + Use it only if you are confident that your code is error free. + Then make sure you call `wait_to_read` on all outputs after `waitall`. """ check_call(_LIB.MXNDArrayWaitAll())

[GitHub] xinyu-intel commented on issue #12922: Support Quantized Fully Connected by INT8 GEMM

xinyu-intel commented on issue #12922: Support Quantized Fully Connected by INT8 GEMM URL: https://github.com/apache/incubator-mxnet/pull/12922#issuecomment-446425642 @lihaofd please rebase code and trigger MKL ci.

[GitHub] ciyongch commented on issue #13596: Fix quantize pass error when excluding a quantization supported op

ciyongch commented on issue #13596: Fix quantize pass error when excluding a quantization supported op URL: https://github.com/apache/incubator-mxnet/pull/13596#issuecomment-446422951 @roywei There is no open issue related to it so far. We found this error when trying to quantize Resnet50_v1 locally (after manually excluding, in the model script, some ops that support quantization). In this case, `NeedQuantize(e.node, excluded_nodes)` is much more accurate than `quantized_op_map.count(e.node->op())`. A test case has also been added to cover this kind of error.
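The distinction drawn in the comment above can be sketched in Python. This is a hypothetical illustration, not the actual C++ quantize pass: `Node`, `SUPPORTED_OPS`, `op_is_supported` and `need_quantize` are assumed names, and the real `NeedQuantize` may check more than is shown here. The point is only that an operator-type lookup cannot see a per-node exclusion list.

```python
from collections import namedtuple

# Hypothetical stand-in for a graph node: a unique name plus an operator type.
Node = namedtuple("Node", ["name", "op"])

# Hypothetical set of operator types that have a quantized implementation.
SUPPORTED_OPS = {"Convolution", "FullyConnected"}


def op_is_supported(node):
    """Operator-type check only, analogous to quantized_op_map.count(op)."""
    return node.op in SUPPORTED_OPS


def need_quantize(node, excluded_nodes):
    """Per-node check: the op must be supported AND the node not excluded."""
    return node.op in SUPPORTED_OPS and node.name not in excluded_nodes


conv0 = Node(name="conv0", op="Convolution")
conv1 = Node(name="conv1", op="Convolution")
excluded = {"conv0"}  # the user asked to keep this node in float precision
```

With `conv0` excluded, `op_is_supported(conv0)` still returns `True` while `need_quantize(conv0, excluded)` returns `False`, which is the kind of mismatch the fix is described as addressing.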

[incubator-mxnet-site] branch asf-site updated: Bump the publish timestamp.

This is an automated email from the ASF dual-hosted git repository. zhasheng pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/incubator-mxnet-site.git The following commit(s) were added to refs/heads/asf-site by this push: new e97e209 Bump the publish timestamp. e97e209 is described below commit e97e209c1076c972c2185c81469b05f683d7cbb3 Author: mxnet-ci AuthorDate: Wed Dec 12 00:59:46 2018 + Bump the publish timestamp. --- date.txt | 1 + 1 file changed, 1 insertion(+) diff --git a/date.txt b/date.txt new file mode 100644 index 000..fbd07f0 --- /dev/null +++ b/date.txt @@ -0,0 +1 @@ +Wed Dec 12 00:59:46 UTC 2018

[GitHub] roywei commented on issue #13588: Accelerate DGL csr neighbor sampling

roywei commented on issue #13588: Accelerate DGL csr neighbor sampling URL: https://github.com/apache/incubator-mxnet/pull/13588#issuecomment-446418476 @mxnet-label-bot add[Operator, pr-awaiting-review]

[GitHub] anirudh2290 commented on issue #13618: Fix warning in waitall doc

anirudh2290 commented on issue #13618: Fix warning in waitall doc URL: https://github.com/apache/incubator-mxnet/pull/13618#issuecomment-446418635 Preview here: http://mxnet-ci-doc.s3-accelerate.dualstack.amazonaws.com/PR-13618/1/api/python/ndarray/ndarray.html?highlight=waitall#mxnet.ndarray.waitall

[GitHub] lanking520 opened a new pull request #13621: [MXNET-1251] Basic configuration to do static-linking

lanking520 opened a new pull request #13621: [MXNET-1251] Basic configuration to do static-linking URL: https://github.com/apache/incubator-mxnet/pull/13621 ## Description ## For Ubuntu 14.04 base build to install all dependencies. @szha @zachgk ## Checklist ## ### Essentials ### Please feel free to remove inapplicable items for your PR. - [ ] The PR title starts with [MXNET-$JIRA_ID], where $JIRA_ID refers to the relevant [JIRA issue](https://issues.apache.org/jira/projects/MXNET/issues) created (except PRs with tiny changes) - [ ] Changes are complete (i.e. I finished coding on this PR) - [ ] All changes have test coverage: - Unit tests are added for small changes to verify correctness (e.g. adding a new operator) - Nightly tests are added for complicated/long-running ones (e.g. changing distributed kvstore) - Build tests will be added for build configuration changes (e.g. adding a new build option with NCCL) - [ ] Code is well-documented: - For user-facing API changes, API doc string has been updated. - For new C++ functions in header files, their functionalities and arguments are documented. - For new examples, README.md is added to explain what the example does, the source of the dataset, expected performance on test set and reference to the original paper if applicable - Check the API doc at http://mxnet-ci-doc.s3-accelerate.dualstack.amazonaws.com/PR-$PR_ID/$BUILD_ID/index.html - [ ] To the best of my knowledge, examples are either not affected by this change, or have been fixed to be compatible with this change

[GitHub] roywei commented on issue #13588: Accelerate DGL csr neighbor sampling

roywei commented on issue #13588: Accelerate DGL csr neighbor sampling URL: https://github.com/apache/incubator-mxnet/pull/13588#issuecomment-446418341 @BullDemonKing Thanks for the contribution! Could you take a look at the failed tests? @zheng-da @eric-haibin-lin could you take a look?

[GitHub] roywei commented on issue #13590: fix Makefile for rpkg

roywei commented on issue #13590: fix Makefile for rpkg URL: https://github.com/apache/incubator-mxnet/pull/13590#issuecomment-446418055 @mxnet-label-bot [R, pr-awaiting-review]

[GitHub] roywei commented on issue #13590: fix Makefile for rpkg

roywei commented on issue #13590: fix Makefile for rpkg URL: https://github.com/apache/incubator-mxnet/pull/13590#issuecomment-446417917 @jeremiedb Thanks for the contribution, could you take a look at the failed unit test? ping @anirudhacharya @ankkhedia for review

[GitHub] roywei commented on issue #13591: Add a DGL operator to compute vertex Ids in layers

roywei commented on issue #13591: Add a DGL operator to compute vertex Ids in layers URL: https://github.com/apache/incubator-mxnet/pull/13591#issuecomment-446417396 @mxnet-label-bot add[Operator, pr-awaiting-review]

[GitHub] roywei commented on issue #13591: Add a DGL operator to compute vertex Ids in layers

roywei commented on issue #13591: Add a DGL operator to compute vertex Ids in layers URL: https://github.com/apache/incubator-mxnet/pull/13591#issuecomment-446417351 @BullDemonKing Thanks for the contribution. @zheng-da @apeforest could you take a look?

[GitHub] roywei commented on issue #13596: Fix quantize pass error when excluding a quantization supported op

roywei commented on issue #13596: Fix quantize pass error when excluding a quantization supported op URL: https://github.com/apache/incubator-mxnet/pull/13596#issuecomment-446417029 @mxnet-label-bot add [Operator, pr-awaiting-review] @ciyongch Thanks for the contribution, is there an issue related to this fix?

[GitHub] roywei commented on issue #13597: [MXNET-1255] update hybridize documentation

roywei commented on issue #13597: [MXNET-1255] update hybridize documentation URL: https://github.com/apache/incubator-mxnet/pull/13597#issuecomment-446416327 @mxnet-label-bot add[Doc, pr-awaiting-review]

[GitHub] zachgk commented on a change in pull request #13619: [MXNET-1231] Allow not using Some in the Scala operators

zachgk commented on a change in pull request #13619: [MXNET-1231] Allow not using Some in the Scala operators

URL: https://github.com/apache/incubator-mxnet/pull/13619#discussion_r240843382

##

File path:

scala-package/core/src/test/scala/org/apache/mxnet/NDArraySuite.scala

##

@@ -576,4 +576,12 @@ class NDArraySuite extends FunSuite with BeforeAndAfterAll with Matchers {

assert(arr.internal.toDoubleArray === Array(2d, 2d))

assert(arr.internal.toByteArray === Array(2.toByte, 2.toByte))

}

+

+ test("Generated api") {

Review comment:

We should test both with SomeConversion and without

[GitHub] roywei commented on issue #13602: Fix for import mxnet taking long time if multiple process launched

roywei commented on issue #13602: Fix for import mxnet taking long time if multiple process launched URL: https://github.com/apache/incubator-mxnet/pull/13602#issuecomment-446416195 @mxnet-label-bot add[Environment Variables, Operator]

[GitHub] lanking520 opened a new pull request #13620: [WIP] add examples and fix the dependency problem

lanking520 opened a new pull request #13620: [WIP] add examples and fix the dependency problem URL: https://github.com/apache/incubator-mxnet/pull/13620 ## Description ## Add a use case in the java demo explaining the usage of ParamObject @andrewfayres @zachgk @piyushghai @nswamy I am also getting tired of fixing the issue of the script (TODO): - [] Add CI test for the demo script for Java ## Checklist ## ### Essentials ### Please feel free to remove inapplicable items for your PR. - [ ] The PR title starts with [MXNET-$JIRA_ID], where $JIRA_ID refers to the relevant [JIRA issue](https://issues.apache.org/jira/projects/MXNET/issues) created (except PRs with tiny changes) - [ ] Changes are complete (i.e. I finished coding on this PR) - [ ] All changes have test coverage: - Unit tests are added for small changes to verify correctness (e.g. adding a new operator) - Nightly tests are added for complicated/long-running ones (e.g. changing distributed kvstore) - Build tests will be added for build configuration changes (e.g. adding a new build option with NCCL) - [ ] Code is well-documented: - For user-facing API changes, API doc string has been updated. - For new C++ functions in header files, their functionalities and arguments are documented. - For new examples, README.md is added to explain what the example does, the source of the dataset, expected performance on test set and reference to the original paper if applicable - Check the API doc at http://mxnet-ci-doc.s3-accelerate.dualstack.amazonaws.com/PR-$PR_ID/$BUILD_ID/index.html - [ ] To the best of my knowledge, examples are either not affected by this change, or have been fixed to be compatible with this change

[GitHub] roywei commented on issue #13604: [WIP] onnx broadcast ops fixes

roywei commented on issue #13604: [WIP] onnx broadcast ops fixes URL: https://github.com/apache/incubator-mxnet/pull/13604#issuecomment-446415455 @Roshrini Thanks for the contribution, it seems one of the ONNX unit tests failed. @vandanavk for review

[GitHub] roywei commented on issue #13604: [WIP] onnx broadcast ops fixes

roywei commented on issue #13604: [WIP] onnx broadcast ops fixes URL: https://github.com/apache/incubator-mxnet/pull/13604#issuecomment-446415242 @mxnet-label-bot add[ONNX, pr-awaiting-review]

[GitHub] roywei commented on issue #13606: Complimentary gluon DataLoader improvements

roywei commented on issue #13606: Complimentary gluon DataLoader improvements URL: https://github.com/apache/incubator-mxnet/pull/13606#issuecomment-446415025 @mxnet-label-bot add[Data-loading, pr-awaiting-review]

[GitHub] roywei commented on issue #13609: [MXNET-1258]fix unittest for ROIAlign Operator

roywei commented on issue #13609: [MXNET-1258]fix unittest for ROIAlign Operator URL: https://github.com/apache/incubator-mxnet/pull/13609#issuecomment-446413342 @mxnet-label-bot add[CI, Flaky, pr-awaiting-review]

[GitHub] roywei commented on issue #13611: add image resize operator and unit test

roywei commented on issue #13611: add image resize operator and unit test URL: https://github.com/apache/incubator-mxnet/pull/13611#issuecomment-446412852 @mxnet-label-bot add[Operator, pr-awaiting-review]

[GitHub] roywei commented on issue #13612: add pos_weight for SigmoidBinaryCrossEntropyLoss

roywei commented on issue #13612: add pos_weight for SigmoidBinaryCrossEntropyLoss URL: https://github.com/apache/incubator-mxnet/pull/13612#issuecomment-446412567 @mxnet-label-bot add[Gluon, pr-awaiting-review]

[GitHub] roywei commented on issue #13612: add pos_weight for SigmoidBinaryCrossEntropyLoss

roywei commented on issue #13612: add pos_weight for SigmoidBinaryCrossEntropyLoss URL: https://github.com/apache/incubator-mxnet/pull/13612#issuecomment-446412389 @eureka7mt Thanks for the contribution, could you add a unit test for this case?

[GitHub] roywei commented on issue #13614: Make to_tensor and normalize to accept 3D or 4D tensor inputs

roywei commented on issue #13614: Make to_tensor and normalize to accept 3D or 4D tensor inputs URL: https://github.com/apache/incubator-mxnet/pull/13614#issuecomment-446411763 @mxnet-label-bot add[Gluon, Data-loading, Operator]

[GitHub] azai91 commented on issue #13084: Test/mkldnn batch norm op

azai91 commented on issue #13084: Test/mkldnn batch norm op URL: https://github.com/apache/incubator-mxnet/pull/13084#issuecomment-446411222 @Vikas89 added

[GitHub] roywei commented on issue #13618: Fix warning in waitall doc

roywei commented on issue #13618: Fix warning in waitall doc URL: https://github.com/apache/incubator-mxnet/pull/13618#issuecomment-446409938 @mxnet-label-bot add[Website, pr-awaiting-review]

[GitHub] roywei commented on issue #13619: [MXNET-1231] Allow not using Some in the Scala operators

roywei commented on issue #13619: [MXNET-1231] Allow not using Some in the Scala operators URL: https://github.com/apache/incubator-mxnet/pull/13619#issuecomment-446409294 @lanking520 Thanks for the contribution! Is there any documentation on how to use SomeConversion? @mxnet-label-bot add[Scala, pr-awaiting-review]

[GitHub] vandanavk commented on issue #13356: ONNX export: Add Flatten before Gemm

vandanavk commented on issue #13356: ONNX export: Add Flatten before Gemm URL: https://github.com/apache/incubator-mxnet/pull/13356#issuecomment-446403176 @zhreshold for review

[GitHub] anirudh2290 commented on a change in pull request #13602: Fix for import mxnet taking long time if multiple process launched

anirudh2290 commented on a change in pull request #13602: Fix for import mxnet taking long time if multiple process launched

URL: https://github.com/apache/incubator-mxnet/pull/13602#discussion_r240829402

##

File path: src/operator/operator_tune-inl.h

##

@@ -56,7 +56,7 @@ namespace op {

#endif

#endif // MXNET_NO_INLINE

-#define OUTSIDE_COUNT_SHIFT 9

Review comment:

Does changing this impact the IsOMPFaster selection in operator_tune.h? Do we need to tweak WORKLOAD_COUNT_SHIFT too?

[GitHub] lanking520 closed pull request #13364: [MXNET-1225] Always use config.mk in make install instructions

lanking520 closed pull request #13364: [MXNET-1225] Always use config.mk in make install instructions URL: https://github.com/apache/incubator-mxnet/pull/13364 This is a PR merged from a forked repository. As GitHub hides the original diff on merge, it is displayed below for the sake of provenance: As this is a foreign pull request (from a fork), the diff is supplied below (as it won't show otherwise due to GitHub magic): diff --git a/docs/install/build_from_source.md b/docs/install/build_from_source.md index e41b1d0f180..e807fb44b59 100644 --- a/docs/install/build_from_source.md +++ b/docs/install/build_from_source.md @@ -2,6 +2,7 @@ This document explains how to build MXNet from source code. +**For Java/Scala/Clojure, please follow [this guide instead](./scala_setup.md)** ## Overview @@ -27,7 +28,6 @@ MXNet's newest and most popular API is Gluon. Gluon is built into the Python bin - [Python (includes Gluon)](../api/python/index.html) - [C++](../api/c++/index.html) - [Clojure](../api/clojure/index.html) -- Java (coming soon) - [Julia](../api/julia/index.html) - [Perl](../api/perl/index.html) - [R](../api/r/index.html) @@ -35,6 +35,7 @@ MXNet's newest and most popular API is Gluon. Gluon is built into the Python bin - [Java](../api/java/index.html) + ## Build Instructions by Operating System Detailed instructions are provided per operating system. Each of these guides also covers how to install the specific [Language Bindings](#installing-mxnet-language-bindings) you require. @@ -160,7 +161,7 @@ More information on turning these features on or off are found in the following ## Build Configurations There is a configuration file for make, -[`make/config.mk`](https://github.com/apache/incubator-mxnet/blob/master/make/config.mk), that contains all the compilation options. You can edit it and then run `make` or `cmake`. `cmake` is recommended for building MXNet (and is required to build with MKLDNN), however you may use `make` instead. 
+[`make/config.mk`](https://github.com/apache/incubator-mxnet/blob/master/make/config.mk), that contains all the compilation options. You can edit it and then run `make` or `cmake`. `cmake` is recommended for building MXNet (and is required to build with MKLDNN), however you may use `make` instead. For building with Java/Scala/Clojure, only `make` is supported. @@ -203,18 +204,18 @@ It is recommended to set environment variable NCCL_LAUNCH_MODE to PARALLEL when ### Build MXNet with C++ -* To enable C++ package, just add `USE_CPP_PACKAGE=1` when you run `make` or `cmake`. +* To enable C++ package, just add `USE_CPP_PACKAGE=1` when you run `make` or `cmake` (see examples). ### Usage Examples -* `-j` runs multiple jobs against multi-core CPUs. - For example, you can specify using all cores on Linux as follows: ```bash -cmake -j$(nproc) +mkdir build && cd build +cmake -GNinja . +ninja -v ``` @@ -222,28 +223,36 @@ cmake -j$(nproc) * Build MXNet with `cmake` and install with MKL DNN, GPU, and OpenCV support: ```bash -cmake -j USE_CUDA=1 USE_CUDA_PATH=/usr/local/cuda USE_CUDNN=1 USE_MKLDNN=1 +mkdir build && cd build +cmake -DUSE_CUDA=1 -DUSE_CUDA_PATH=/usr/local/cuda -DUSE_CUDNN=1 -DUSE_MKLDNN=1 -GNinja . +ninja -v ``` Recommended for Systems with NVIDIA GPUs * Build with both OpenBLAS, GPU, and OpenCV support: ```bash -cmake -j BLAS=open USE_CUDA=1 USE_CUDA_PATH=/usr/local/cuda USE_CUDNN=1 +mkdir build && cd build +cmake -DBLAS=open -DUSE_CUDA=1 -DUSE_CUDA_PATH=/usr/local/cuda -DUSE_CUDNN=1 -GNinja . +ninja -v ``` Recommended for Systems with Intel CPUs * Build MXNet with `cmake` and install with MKL DNN, and OpenCV support: ```bash -cmake -j USE_CUDA=0 USE_MKLDNN=1 +mkdir build && cd build +cmake -DUSE_CUDA=0 -DUSE_MKLDNN=1 -GNinja . +ninja -v ``` Recommended for Systems with non-Intel CPUs * Build MXNet with `cmake` and install with OpenBLAS and OpenCV support: ```bash -cmake -j USE_CUDA=0 BLAS=open +mkdir build && cd build +cmake -DUSE_CUDA=0 -DBLAS=open -GNinja . 
+ninja -v ``` Other Examples @@ -251,20 +260,26 @@ cmake -j USE_CUDA=0 BLAS=open * Build without using OpenCV: ```bash -cmake USE_OPENCV=0 +mkdir build && cd build +cmake -DUSE_OPENCV=0 -GNinja . +ninja -v ``` * Build on **macOS** with the default BLAS library (Apple Accelerate) and Clang installed with `xcode` (OPENMP is disabled because it is not supported by the Apple version of Clang): ```bash -cmake -j BLAS=apple USE_OPENCV=0 USE_OPENMP=0 +mkdir build && cd build +cmake -DBLAS=apple -DUSE_OPENCV=0 -DUSE_OPENMP=0 -GNinja . +ninja -v ``` * To use OpenMP on **macOS** you need to install the Clang compiler, `llvm` (the one provided by Apple does not support OpenMP): ```bash brew install llvm -cmake -j BLAS=apple USE_OPENMP=1 +mkdir build && cd build +cmake -DBLAS=apple -DUSE_OPENMP=1 -GNinja . +ninja -v ``` diff --git

[incubator-mxnet] branch master updated: [MXNET-1225] Always use config.mk in make install instructions (#13364)

This is an automated email from the ASF dual-hosted git repository. lanking pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git The following commit(s) were added to refs/heads/master by this push: new 97e0c97 [MXNET-1225] Always use config.mk in make install instructions (#13364) 97e0c97 is described below commit 97e0c972178177011ee928407719b2e002fa116f Author: Zach Kimberg AuthorDate: Tue Dec 11 15:23:13 2018 -0800 [MXNET-1225] Always use config.mk in make install instructions (#13364) * Always use config.mk in make install instructions * Specify Cuda 0 for ubuntu with mkldnn * Scala install doc avoid build_from_source Minor doc fixes * Fix build_from_source CMake usage * CPP Install Instruction with CMake * Use cmake out of source build --- docs/install/build_from_source.md | 41 ++- docs/install/c_plus_plus.md | 3 ++- docs/install/java_setup.md| 4 +++- docs/install/osx_setup.md | 9 - docs/install/scala_setup.md | 4 +++- docs/install/ubuntu_setup.md | 21 6 files changed, 61 insertions(+), 21 deletions(-) diff --git a/docs/install/build_from_source.md b/docs/install/build_from_source.md index e41b1d0..e807fb4 100644 --- a/docs/install/build_from_source.md +++ b/docs/install/build_from_source.md @@ -2,6 +2,7 @@ This document explains how to build MXNet from source code. +**For Java/Scala/Clojure, please follow [this guide instead](./scala_setup.md)** ## Overview @@ -27,7 +28,6 @@ MXNet's newest and most popular API is Gluon. Gluon is built into the Python bin - [Python (includes Gluon)](../api/python/index.html) - [C++](../api/c++/index.html) - [Clojure](../api/clojure/index.html) -- Java (coming soon) - [Julia](../api/julia/index.html) - [Perl](../api/perl/index.html) - [R](../api/r/index.html) @@ -35,6 +35,7 @@ MXNet's newest and most popular API is Gluon. 
Gluon is built into the Python bin - [Java](../api/java/index.html) + ## Build Instructions by Operating System Detailed instructions are provided per operating system. Each of these guides also covers how to install the specific [Language Bindings](#installing-mxnet-language-bindings) you require. @@ -160,7 +161,7 @@ More information on turning these features on or off are found in the following ## Build Configurations There is a configuration file for make, -[`make/config.mk`](https://github.com/apache/incubator-mxnet/blob/master/make/config.mk), that contains all the compilation options. You can edit it and then run `make` or `cmake`. `cmake` is recommended for building MXNet (and is required to build with MKLDNN), however you may use `make` instead. +[`make/config.mk`](https://github.com/apache/incubator-mxnet/blob/master/make/config.mk), that contains all the compilation options. You can edit it and then run `make` or `cmake`. `cmake` is recommended for building MXNet (and is required to build with MKLDNN), however you may use `make` instead. For building with Java/Scala/Clojure, only `make` is supported. @@ -203,18 +204,18 @@ It is recommended to set environment variable NCCL_LAUNCH_MODE to PARALLEL when ### Build MXNet with C++ -* To enable C++ package, just add `USE_CPP_PACKAGE=1` when you run `make` or `cmake`. +* To enable C++ package, just add `USE_CPP_PACKAGE=1` when you run `make` or `cmake` (see examples). ### Usage Examples -* `-j` runs multiple jobs against multi-core CPUs. - For example, you can specify using all cores on Linux as follows: ```bash -cmake -j$(nproc) +mkdir build && cd build +cmake -GNinja . +ninja -v ``` @@ -222,28 +223,36 @@ cmake -j$(nproc) * Build MXNet with `cmake` and install with MKL DNN, GPU, and OpenCV support: ```bash -cmake -j USE_CUDA=1 USE_CUDA_PATH=/usr/local/cuda USE_CUDNN=1 USE_MKLDNN=1 +mkdir build && cd build +cmake -DUSE_CUDA=1 -DUSE_CUDA_PATH=/usr/local/cuda -DUSE_CUDNN=1 -DUSE_MKLDNN=1 -GNinja . 
+ninja -v ``` Recommended for Systems with NVIDIA GPUs * Build with both OpenBLAS, GPU, and OpenCV support: ```bash -cmake -j BLAS=open USE_CUDA=1 USE_CUDA_PATH=/usr/local/cuda USE_CUDNN=1 +mkdir build && cd build +cmake -DBLAS=open -DUSE_CUDA=1 -DUSE_CUDA_PATH=/usr/local/cuda -DUSE_CUDNN=1 -GNinja . +ninja -v ``` Recommended for Systems with Intel CPUs * Build MXNet with `cmake` and install with MKL DNN, and OpenCV support: ```bash -cmake -j USE_CUDA=0 USE_MKLDNN=1 +mkdir build && cd build +cmake -DUSE_CUDA=0 -DUSE_MKLDNN=1 -GNinja . +ninja -v ``` Recommended for Systems with non-Intel CPUs * Build MXNet with `cmake` and install with OpenBLAS and OpenCV support: ```bash -cmake -j USE_CUDA=0 BLAS=open +mkdir build && cd build +cmake -DUSE_CUDA=0 -DBLAS=open -GNinja . +ninja -v ``` Other Examples @@ -251,20 +260,26
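The CMake fixes in the diff above replace make-style `KEY=VALUE` arguments with `-DKEY=VALUE` definitions and a Ninja generator. A minimal sketch of assembling such a configure command follows; `cmake_configure_command` is a hypothetical helper, not part of MXNet or CMake, and the option names are simply the ones quoted in the diff. The source directory is passed explicitly; when configuring from inside a freshly created `build` directory it would typically be `..`.

```python
def cmake_configure_command(options, source_dir, generator="Ninja"):
    """Return the argv list for a CMake configure step.

    options    -- mapping of cache variable name to value, e.g. {"USE_CUDA": 0}
    source_dir -- path to the directory that contains CMakeLists.txt
    generator  -- CMake generator name, passed with -G
    """
    args = ["cmake"]
    for key, value in options.items():
        # Each cache variable must carry the -D prefix; a bare KEY=VALUE
        # would be treated by cmake as a path argument instead.
        args.append(f"-D{key}={value}")
    args.append(f"-G{generator}")
    args.append(source_dir)
    return args


# Example: the non-Intel CPU configuration quoted in the diff.
command = cmake_configure_command({"USE_CUDA": 0, "BLAS": "open"}, "..")
```

The resulting list can be handed to `subprocess.run(command, cwd="build", check=True)`, followed by the `ninja -v` step shown in the diff.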

[GitHub] lanking520 closed pull request #13493: [MXNET-1224]: improve scala maven jni build.

lanking520 closed pull request #13493: [MXNET-1224]: improve scala maven jni build.

URL: https://github.com/apache/incubator-mxnet/pull/13493

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied below (as it won't show otherwise due to GitHub magic):

diff --git a/scala-package/assembly/linux-x86_64-cpu/pom.xml b/scala-package/assembly/linux-x86_64-cpu/pom.xml

index abefead175c..1658f36e6bb 100644

--- a/scala-package/assembly/linux-x86_64-cpu/pom.xml

+++ b/scala-package/assembly/linux-x86_64-cpu/pom.xml

@@ -14,6 +14,10 @@

MXNet Scala Package - Full Linux-x86_64 CPU-only

jar

+

+${project.parent.parent.basedir}/..

+

+

org.apache.mxnet

diff --git a/scala-package/assembly/linux-x86_64-cpu/src/main/assembly/assembly.xml b/scala-package/assembly/linux-x86_64-cpu/src/main/assembly/assembly.xml

index a574f8af25d..f4c2017c824 100644

--- a/scala-package/assembly/linux-x86_64-cpu/src/main/assembly/assembly.xml

+++ b/scala-package/assembly/linux-x86_64-cpu/src/main/assembly/assembly.xml

@@ -25,4 +25,10 @@

+

+

+ ${MXNET_DIR}/lib/libmxnet.so

+ lib/native

+

+

diff --git a/scala-package/assembly/linux-x86_64-gpu/pom.xml b/scala-package/assembly/linux-x86_64-gpu/pom.xml

index 96ffa38c6af..c80515e7b10 100644

--- a/scala-package/assembly/linux-x86_64-gpu/pom.xml

+++ b/scala-package/assembly/linux-x86_64-gpu/pom.xml

@@ -14,6 +14,10 @@

MXNet Scala Package - Full Linux-x86_64 GPU

jar

+

+${project.parent.parent.basedir}/..

+

+

org.apache.mxnet

diff --git a/scala-package/assembly/linux-x86_64-gpu/src/main/assembly/assembly.xml b/scala-package/assembly/linux-x86_64-gpu/src/main/assembly/assembly.xml

index 3a064bf9f2c..2aca64bdf1a 100644

--- a/scala-package/assembly/linux-x86_64-gpu/src/main/assembly/assembly.xml

+++ b/scala-package/assembly/linux-x86_64-gpu/src/main/assembly/assembly.xml

@@ -25,4 +25,10 @@

+

+

+ ${MXNET_DIR}/lib/libmxnet.so

+ lib/native

+

+

diff --git a/scala-package/assembly/osx-x86_64-cpu/main/assembly/assembly.xml b/scala-package/assembly/osx-x86_64-cpu/main/assembly/assembly.xml

deleted file mode 100644

index fecafecad31..000

--- a/scala-package/assembly/osx-x86_64-cpu/main/assembly/assembly.xml

+++ /dev/null

@@ -1,30 +0,0 @@

-

- full

-

-jar

-

- false

-

-

-

-*:*:jar

-

- /

- true

- true

- runtime

-

-

- lib/native

-

${artifact.artifactId}${dashClassifier?}.${artifact.extension}

- false

- false

- false

-

-*:*:dll:*

-*:*:so:*

-*:*:jnilib:*

-

-

-

-

diff --git a/scala-package/assembly/osx-x86_64-cpu/pom.xml b/scala-package/assembly/osx-x86_64-cpu/pom.xml

index 5c5733a9a4c..62979a140fd 100644

--- a/scala-package/assembly/osx-x86_64-cpu/pom.xml

+++ b/scala-package/assembly/osx-x86_64-cpu/pom.xml

@@ -14,6 +14,10 @@

MXNet Scala Package - Full OSX-x86_64 CPU-only

jar

+

+${project.parent.parent.basedir}/..

+

+

org.apache.mxnet

diff --git a/scala-package/assembly/osx-x86_64-cpu/src/main/assembly/assembly.xml b/scala-package/assembly/osx-x86_64-cpu/src/main/assembly/assembly.xml

index bdbd09f170c..e9bc3728fcd 100644

--- a/scala-package/assembly/osx-x86_64-cpu/src/main/assembly/assembly.xml

+++ b/scala-package/assembly/osx-x86_64-cpu/src/main/assembly/assembly.xml

@@ -25,4 +25,10 @@

+

+

+ ${MXNET_DIR}/lib/libmxnet.so

+ lib/native

+

+

diff --git a/scala-package/core/pom.xml b/scala-package/core/pom.xml

index 484fbbd9679..976383f2e7d 100644

--- a/scala-package/core/pom.xml

+++ b/scala-package/core/pom.xml

@@ -12,6 +12,7 @@

true

+${project.parent.basedir}/..

mxnet-core_2.11

@@ -77,6 +78,9 @@

-Djava.library.path=${project.parent.basedir}/native/${platform}/target \

-Dlog4j.configuration=file://${project.basedir}/src/test/resources/log4j.properties

+

+${MXNET_DIR}/lib

+

@@ -88,6 +92,10 @@

-Djava.library.path=${project.parent.basedir}/native/${platform}/target

${skipTests}

+ always

+

+${MXNET_DIR}/lib

+

diff --git a/scala-package/core/src/main/scala/org/apache/mxnet/util/NativeLibraryLoader.scala b/scala-package/core/src/main/scala/org/apache/mxnet/util/NativeLibraryLoader.scala

index e94d320391f..2ce893b478e 100644

--- a/scala-package/core/src/main/scala/org/apache/mxnet/util/NativeLibraryLoader.scala

+++ b/scala-package/core/src/main/scala/org/apache/mxnet/util/NativeLibraryLoader.scala

@@ -85,12 +85,10 @@ private[mxnet] object NativeLibraryLoader {

}

[GitHub] anirudh2290 commented on a change in pull request #13602: Fix for import mxnet taking long time if multiple process launched

anirudh2290 commented on a change in pull request #13602: Fix for import mxnet taking long time if multiple process launched
URL: https://github.com/apache/incubator-mxnet/pull/13602#discussion_r240818689

## File path: docs/faq/env_var.md ##

@@ -226,12 +226,11 @@ Settings for More GPU Parallelism
 Settings for controlling OMP tuning - - Set ```MXNET_USE_OPERATOR_TUNING=0``` to disable Operator tuning code which decides whether to use OMP or not for operator - -
- * Values: String representation of MXNET_ENABLE_OPERATOR_TUNING environment variable
- *0=disable all
- *1=enable all
- *float32, float16, float32=list of types to enable, and disable those not listed
- * refer : https://github.com/apache/incubator-mxnet/blob/master/src/operator/operator_tune-inl.h#L444
+ - Values: String representation of MXNET_ENABLE_OPERATOR_TUNING environment variable
+ -0=disable all
+ -1=enable all
+ -float32, float16, float32=list of types to enable, and disable those not listed

Review comment:
Can we list the valid types here: "float32", "float16", "float64", "int8", "uint8", "int32", "int64"

This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment.
For queries about this service, please contact Infrastructure at: us...@infra.apache.org

With regards,
Apache Git Services
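
The value format described in the diff (`0`, `1`, or a comma-separated list of types) together with the type names from the review comment can be illustrated with a small check. This is only a sketch and not MXNet code: `VALID_TUNING_TYPES` and `parse_tuning_value` are hypothetical names, and the interpretation of each value follows the documentation text in the diff rather than the actual parser in `operator_tune-inl.h`, which may behave differently.

```python
# Hypothetical helper, not part of MXNet. The type names are the ones
# listed in the review comment; the parsing rules are an assumption based
# on the documentation text in the diff.
VALID_TUNING_TYPES = {
    "float32", "float16", "float64", "int8", "uint8", "int32", "int64",
}


def parse_tuning_value(value):
    """Return the set of types enabled by a tuning string such as '1' or 'float32,int8'."""
    value = value.strip()
    if value == "0":
        # 0 = disable all
        return set()
    if value == "1":
        # 1 = enable all
        return set(VALID_TUNING_TYPES)
    # Otherwise treat the value as a comma-separated list of types to enable.
    requested = {item.strip() for item in value.split(",") if item.strip()}
    unknown = requested - VALID_TUNING_TYPES
    if unknown:
        raise ValueError("unknown type(s): " + ", ".join(sorted(unknown)))
    return requested
```

A check like this would flag the duplicated `float32` in the documented example only implicitly (the set collapses it), which is one reason listing the valid types explicitly in the docs is useful.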

[incubator-mxnet] branch master updated: [MXNET-1224]: improve scala maven jni build and packing. (#13493)

This is an automated email from the ASF dual-hosted git repository.

lanking pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new b242b0c [MXNET-1224]: improve scala maven jni build and packing. (#13493)

b242b0c is described below

commit b242b0c1fb71da43fba8d6208ee8ca282e735474

Author: Frank Liu

AuthorDate: Tue Dec 11 15:21:05 2018 -0800

[MXNET-1224]: improve scala maven jni build and packing. (#13493)

Major JNI feature changes. Please find more info here:

https://cwiki.apache.org/confluence/display/MXNET/Scala+maven+build+improvement

---

 scala-package/assembly/linux-x86_64-cpu/pom.xml   |  4 ++
 .../src/main/assembly/assembly.xml                |  6 +++
 scala-package/assembly/linux-x86_64-gpu/pom.xml   |  4 ++
 .../src/main/assembly/assembly.xml                |  6 +++
 .../osx-x86_64-cpu/main/assembly/assembly.xml     | 30 ---
 scala-package/assembly/osx-x86_64-cpu/pom.xml     |  4 ++
 .../osx-x86_64-cpu/src/main/assembly/assembly.xml |  6 +++
 scala-package/core/pom.xml                        |  8 +++
 .../apache/mxnet/util/NativeLibraryLoader.scala   | 55 ---
 scala-package/examples/pom.xml                    |  4 ++
 scala-package/infer/pom.xml                       |  4 ++
 scala-package/init-native/linux-x86_64/pom.xml    | 42 +++
 scala-package/init-native/osx-x86_64/pom.xml      | 49 ++---
 scala-package/native/README.md                    | 63 ++
 scala-package/native/linux-x86_64-cpu/pom.xml     | 25 -
 scala-package/native/linux-x86_64-gpu/pom.xml     | 25 -
 scala-package/native/osx-x86_64-cpu/pom.xml       | 50 ++---
 scala-package/pom.xml                             |  2 +
 18 files changed, 291 insertions(+), 96 deletions(-)

diff --git a/scala-package/assembly/linux-x86_64-cpu/pom.xml b/scala-package/assembly/linux-x86_64-cpu/pom.xml

index abefead..1658f36 100644

--- a/scala-package/assembly/linux-x86_64-cpu/pom.xml

+++ b/scala-package/assembly/linux-x86_64-cpu/pom.xml

@@ -14,6 +14,10 @@

MXNet Scala Package - Full Linux-x86_64 CPU-only

jar

+

+${project.parent.parent.basedir}/..

+

+

org.apache.mxnet

diff --git a/scala-package/assembly/linux-x86_64-cpu/src/main/assembly/assembly.xml b/scala-package/assembly/linux-x86_64-cpu/src/main/assembly/assembly.xml

index a574f8a..f4c2017 100644

--- a/scala-package/assembly/linux-x86_64-cpu/src/main/assembly/assembly.xml

+++ b/scala-package/assembly/linux-x86_64-cpu/src/main/assembly/assembly.xml

@@ -25,4 +25,10 @@

+

+

+ ${MXNET_DIR}/lib/libmxnet.so

+ lib/native

+

+

diff --git a/scala-package/assembly/linux-x86_64-gpu/pom.xml b/scala-package/assembly/linux-x86_64-gpu/pom.xml

index 96ffa38..c80515e 100644

--- a/scala-package/assembly/linux-x86_64-gpu/pom.xml

+++ b/scala-package/assembly/linux-x86_64-gpu/pom.xml

@@ -14,6 +14,10 @@

MXNet Scala Package - Full Linux-x86_64 GPU

jar

+

+${project.parent.parent.basedir}/..

+

+

org.apache.mxnet

diff --git a/scala-package/assembly/linux-x86_64-gpu/src/main/assembly/assembly.xml b/scala-package/assembly/linux-x86_64-gpu/src/main/assembly/assembly.xml

index 3a064bf..2aca64b 100644

--- a/scala-package/assembly/linux-x86_64-gpu/src/main/assembly/assembly.xml

+++ b/scala-package/assembly/linux-x86_64-gpu/src/main/assembly/assembly.xml

@@ -25,4 +25,10 @@

+

+

+ ${MXNET_DIR}/lib/libmxnet.so

+ lib/native

+

+

diff --git a/scala-package/assembly/osx-x86_64-cpu/main/assembly/assembly.xml b/scala-package/assembly/osx-x86_64-cpu/main/assembly/assembly.xml

deleted file mode 100644

index fecafec..000

--- a/scala-package/assembly/osx-x86_64-cpu/main/assembly/assembly.xml

+++ /dev/null

@@ -1,30 +0,0 @@

-

- full

-

-jar

-

- false

-

-

-

-*:*:jar

-

- /

- true

- true

- runtime

-

-

- lib/native

-

${artifact.artifactId}${dashClassifier?}.${artifact.extension}

- false

- false

- false

-

-*:*:dll:*

-*:*:so:*

-*:*:jnilib:*

-

-

-

-

diff --git a/scala-package/assembly/osx-x86_64-cpu/pom.xml b/scala-package/assembly/osx-x86_64-cpu/pom.xml

index 5c5733a..62979a1 100644

--- a/scala-package/assembly/osx-x86_64-cpu/pom.xml