[jira] [Work logged] (HADOOP-18241) Move to Java 11

[ https://issues.apache.org/jira/browse/HADOOP-18241?focusedWorklogId=780210=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-780210 ]

ASF GitHub Bot logged work on HADOOP-18241:
---

Author: ASF GitHub Bot
Created on: 10/Jun/22 05:37
Start Date: 10/Jun/22 05:37
Worklog Time Spent: 10m
Work Description: ayushtkn commented on PR #4319:
URL: https://github.com/apache/hadoop/pull/4319#issuecomment-1151964919

@slfan1989 I have sorted out most of the Javadoc errors; you can try to get hold of this if you have time: [HADOOP-15984](https://issues.apache.org/jira/browse/HADOOP-15984)

Issue Time Tracking
---

Worklog Id: (was: 780210)
Time Spent: 1h 20m (was: 1h 10m)

> Move to Java 11
> ---
>
> Key: HADOOP-18241
> URL: https://issues.apache.org/jira/browse/HADOOP-18241
> Project: Hadoop Common
> Issue Type: Improvement
> Affects Versions: 3.4.0
> Reporter: Ayush Saxena
> Assignee: Ayush Saxena
> Priority: Major
> Labels: pull-request-available
> Time Spent: 1h 20m
> Remaining Estimate: 0h
>
> https://lists.apache.org/thread/h5lmpqo2tz7tc02j44qxpwcnjzpxo0k2

--
This message was sent by Atlassian Jira
(v8.20.7#820007)

-
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] ayushtkn commented on pull request #4319: HADOOP-18241. Move to JAVA 11.

ayushtkn commented on PR #4319:
URL: https://github.com/apache/hadoop/pull/4319#issuecomment-1151964919

@slfan1989 I have sorted out most of the Javadoc errors; you can try to get hold of this if you have time: [HADOOP-15984](https://issues.apache.org/jira/browse/HADOOP-15984)

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

-
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] hadoop-yetus commented on pull request #4424: HDFS-16628 RBF: kerbose user remove Non-default namespace data failed.

hadoop-yetus commented on PR #4424:

URL: https://github.com/apache/hadoop/pull/4424#issuecomment-1151935757

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 41s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 37m 30s | | trunk passed |
| +1 :green_heart: | compile | 1m 1s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | compile | 0m 57s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 0m 53s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 2s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 7s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 1m 19s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 48s | | trunk passed |
| +1 :green_heart: | shadedclient | 21m 5s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 41s | | the patch passed |
| +1 :green_heart: | compile | 0m 44s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 0m 44s | | the patch passed |
| +1 :green_heart: | compile | 0m 40s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 40s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 27s | | the patch passed |
| +1 :green_heart: | mvnsite | 0m 41s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 42s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 57s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 28s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 32s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 21m 41s | | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 54s | | The patch does not generate ASF License warnings. |
| | | 118m 57s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4424/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4424 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux a003555ec08e 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 61dbbdce1617dedabd4e5d9e8b35009ed13c0152 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4424/1/testReport/ |
| Max. process+thread count | 2752 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs-rbf U: hadoop-hdfs-project/hadoop-hdfs-rbf |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4424/1/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0 https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] GauthamBanasandra merged pull request #4370: HDFS-16463. Make dirent cross platform compatible

GauthamBanasandra merged PR #4370:
URL: https://github.com/apache/hadoop/pull/4370

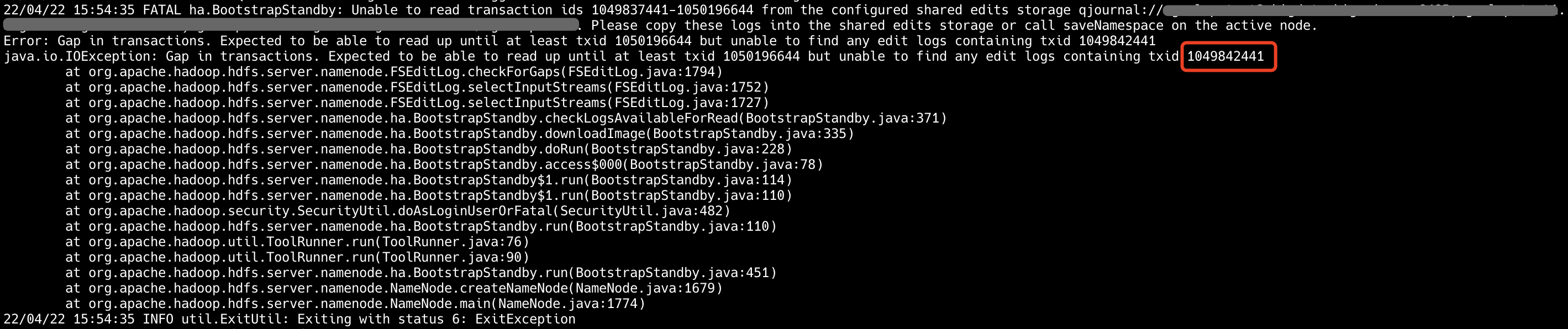

[GitHub] [hadoop] ZanderXu commented on pull request #4219: HDFS-16557. BootstrapStandby failed because of checking gap for inprogress EditLogInputStream

ZanderXu commented on PR #4219:
URL: https://github.com/apache/hadoop/pull/4219#issuecomment-1151901548

> When we set dfs.ha.tail-edits.in-progress=true, the edits can be read by getJournaledEdits (there is actually no gap), but a GAP exception is thrown.

I think there is a gap here, because bootstrap expects to get txid 1050196644 but can't find it in the result. So throwing the GAP exception is OK.

[GitHub] [hadoop] hadoop-yetus commented on pull request #4269: HDFS-16570 RBF: The router using MultipleDestinationMountTableResolve…

hadoop-yetus commented on PR #4269:

URL: https://github.com/apache/hadoop/pull/4269#issuecomment-1151891187

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 40s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 38m 1s | | trunk passed |
| +1 :green_heart: | compile | 1m 1s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | compile | 0m 57s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 0m 44s | | trunk passed |
| +1 :green_heart: | mvnsite | 0m 55s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 8s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 1m 15s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 48s | | trunk passed |
| +1 :green_heart: | shadedclient | 21m 19s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 41s | | the patch passed |
| +1 :green_heart: | compile | 0m 44s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 0m 44s | | the patch passed |
| +1 :green_heart: | compile | 0m 39s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 39s | | the patch passed |
| +1 :green_heart: | blanks | 0m 1s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 27s | | the patch passed |
| +1 :green_heart: | mvnsite | 0m 43s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 39s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 58s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 28s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 24s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 21m 56s | | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 52s | | The patch does not generate ASF License warnings. |
| | | 119m 4s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4269/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4269 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux 5dc8306caacd 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 94273aed12c914e3cd5bec3f27390aa9876e519c |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4269/3/testReport/ |
| Max. process+thread count | 2662 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs-rbf U: hadoop-hdfs-project/hadoop-hdfs-rbf |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4269/3/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0 https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] ZanderXu commented on pull request #4219: HDFS-16557. BootstrapStandby failed because of checking gap for inprogress EditLogInputStream

ZanderXu commented on PR #4219:
URL: https://github.com/apache/hadoop/pull/4219#issuecomment-1151890746

As I explained above, changing the condition to `if (next == HdfsServerConstants.INVALID_TXID || elis.isInProgress())` may change the original semantics of the `checkgap` method. Do you have any questions about my explanation? Let's discuss it together and become more familiar with the relevant logic.

[GitHub] [hadoop] ZanderXu commented on pull request #4219: HDFS-16557. BootstrapStandby failed because of checking gap for inprogress EditLogInputStream

ZanderXu commented on PR #4219:
URL: https://github.com/apache/hadoop/pull/4219#issuecomment-1151887486

So in this case, we should change the bootstrap logic.
Solution one: set DFS_HA_TAILEDITS_INPROGRESS_KEY to false.
Solution two: call getJournaledEdits multiple times until we get the latest txid, and then go to checkgap.
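Solution two can be pictured with a small, self-contained sketch. It does not use Hadoop's API: `fetchUntil` and the `fetch` callback are hypothetical names, and the callback is assumed to return the last txid it delivered for a request starting at a given txid with a per-call cap. The point is only the loop shape: keep fetching capped batches until the target txid is covered or no further progress is possible.

```java
import java.util.function.LongBinaryOperator;

public class FetchUntilCaughtUp {
    /**
     * Repeatedly requests capped batches until targetTxId is covered.
     * fetch.applyAsLong(from, max) is a hypothetical callback that returns
     * the last txid it delivered, or a value below 'from' if it delivered nothing.
     * Returns the last txid that was actually obtained.
     */
    static long fetchUntil(LongBinaryOperator fetch, long fromTxId,
                           long targetTxId, long maxPerCall) {
        long next = fromTxId;
        while (next <= targetTxId) {
            long last = fetch.applyAsLong(next, maxPerCall);
            if (last < next) {
                break; // no progress: the source has nothing newer
            }
            next = last + 1;
        }
        return next - 1;
    }

    public static void main(String[] args) {
        // Hypothetical journal whose newest txid is 12345, capped at 5000 per call.
        LongBinaryOperator journal = (from, max) -> Math.min(from + max - 1, 12345L);
        System.out.println(fetchUntil(journal, 1L, 12000L, 5000L));
    }
}
```

Only after such a loop has reached the expected txid would a gap check over the collected range be meaningful.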

[GitHub] [hadoop] ZanderXu commented on pull request #4219: HDFS-16557. BootstrapStandby failed because of checking gap for inprogress EditLogInputStream

ZanderXu commented on PR #4219:
URL: https://github.com/apache/hadoop/pull/4219#issuecomment-1151885068

Oh, I see: the root cause is that getJournaledEdits returns up to 5000 txids by default, and 1049842441 - 1049837441 = 5000. It can't reach 1050196644, so checkForGaps failed.
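The arithmetic in this comment can be checked with a minimal sketch. This is not Hadoop's `checkForGaps`; `Segment` and `coversRange` are hypothetical stand-ins, and the sketch assumes a single capped batch that starts at txid 1049837441 and holds exactly 5000 txids. Under that assumption the batch ends at 1049842440, well short of 1050196644, so a contiguity check up to the target fails.

```java
import java.util.List;

public class GapCheckSketch {
    /** Hypothetical stand-in for a stream covering txids [firstTxId, lastTxId]. */
    static final class Segment {
        final long firstTxId;
        final long lastTxId;

        Segment(long firstTxId, long lastTxId) {
            this.firstTxId = firstTxId;
            this.lastTxId = lastTxId;
        }
    }

    /**
     * Returns true if the segments (sorted by firstTxId) contain every txid
     * from fromTxId through toAtLeastTxId without a hole.
     */
    static boolean coversRange(List<Segment> segments, long fromTxId, long toAtLeastTxId) {
        long next = fromTxId;
        for (Segment s : segments) {
            if (s.firstTxId > next) {
                return false; // hole before this segment starts
            }
            if (s.lastTxId >= next) {
                next = s.lastTxId + 1;
            }
        }
        return next > toAtLeastTxId;
    }

    public static void main(String[] args) {
        long from = 1049837441L;
        long target = 1050196644L;
        // One batch capped at 5000 txids: 1049837441 .. 1049842440.
        List<Segment> capped = List.of(new Segment(from, from + 5000 - 1));
        System.out.println(coversRange(capped, from, target)); // false
        // A batch that reaches the target passes the same check.
        List<Segment> full = List.of(new Segment(from, target));
        System.out.println(coversRange(full, from, target)); // true
    }
}
```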

[GitHub] [hadoop] tomscut commented on pull request #4219: HDFS-16557. BootstrapStandby failed because of checking gap for inprogress EditLogInputStream

tomscut commented on PR #4219:
URL: https://github.com/apache/hadoop/pull/4219#issuecomment-1151882369

> OK, back to BootstrapStandby GAP. From this stack information, I understand that it tries to get streams from 1049842441 to 1050196644, but cannot get txid 1049842441 from the result streams. So I think we should trace the root cause: why can't we find txid 1049842441 in the returned result of `selectInputStreams(streams, 1049842441, true, true)`?
>
> Please correct me if anything is wrong.

Please refer to the discussion with @xkrogen above. The root cause is that the `if` condition (`if(next == HdfsServerConstants.INVALID_TXID)`) is not entered properly.

[GitHub] [hadoop] ZanderXu commented on pull request #4219: HDFS-16557. BootstrapStandby failed because of checking gap for inprogress EditLogInputStream

ZanderXu commented on PR #4219:
URL: https://github.com/apache/hadoop/pull/4219#issuecomment-1151877997

OK, back to BootstrapStandby GAP. From this stack information, I understand that it tries to get streams from 1049842441 to 1050196644, but cannot get txid 1049842441 from the result streams. So I think we should trace the root cause: why can't we find txid 1049842441 in the returned result of `selectInputStreams(streams, 1049842441, true, true)`? Please correct me if anything is wrong.

[GitHub] [hadoop] zhangxiping1 opened a new pull request, #4424: HDFS-16628 RBF: kerbose user remove Non-default namespace data failed.

zhangxiping1 opened a new pull request, #4424:
URL: https://github.com/apache/hadoop/pull/4424

HDFS-16628 RBF: kerbose user remove Non-default namespace data failed

[jira] [Comment Edited] (HADOOP-15852) Refactor QuotaUsage

[ https://issues.apache.org/jira/browse/HADOOP-15852?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17552503#comment-17552503 ]

Daniel Ma edited comment on HADOOP-15852 at 6/10/22 2:37 AM:

-

/** Return storage type quota. */
private long[] getTypesQuota() {
  return typeQuota;
}

[~belugabehr] Hello, could you please share the reason why this function was removed?

was (Author: daniel ma):

/** Return storage type quota. */
private long[] getTypesQuota() {
  return typeQuota;
}

[~belugabehr] hello, Could you pls share the reason why this function is remove?

> Refactor QuotaUsage

> ---

>

> Key: HADOOP-15852

> URL: https://issues.apache.org/jira/browse/HADOOP-15852

> Project: Hadoop Common

> Issue Type: Improvement

> Components: common

>Affects Versions: 3.2.0

>Reporter: David Mollitor

>Assignee: David Mollitor

>Priority: Minor

> Fix For: 3.3.0

>

> Attachments: HADOOP-15852.1.patch, HADOOP-15852.2.patch,

> HADOOP-15852.3.patch

>

>

> My new mission is to remove instances of {{StringBuffer}} in favor of

> {{StringBuilder}}.

> * Simplify Code

> * Use Eclipse to generate hashcode/equals

> * Use StringBuilder instead of StringBuffer


[jira] [Commented] (HADOOP-15852) Refactor QuotaUsage

[ https://issues.apache.org/jira/browse/HADOOP-15852?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17552503#comment-17552503 ]

Daniel Ma commented on HADOOP-15852:

/** Return storage type quota. */
private long[] getTypesQuota() {
  return typeQuota;
}

[~belugabehr] hello, Could you pls share the reason why this function is remove?

> Refactor QuotaUsage

> ---

>

> Key: HADOOP-15852

> URL: https://issues.apache.org/jira/browse/HADOOP-15852

> Project: Hadoop Common

> Issue Type: Improvement

> Components: common

>Affects Versions: 3.2.0

>Reporter: David Mollitor

>Assignee: David Mollitor

>Priority: Minor

> Fix For: 3.3.0

>

> Attachments: HADOOP-15852.1.patch, HADOOP-15852.2.patch,

> HADOOP-15852.3.patch

>

>

> My new mission is to remove instances of {{StringBuffer}} in favor of

> {{StringBuilder}}.

> * Simplify Code

> * Use Eclipse to generate hashcode/equals

> * Use StringBuilder instead of StringBuffer


[GitHub] [hadoop] tomscut commented on pull request #4219: HDFS-16557. BootstrapStandby failed because of checking gap for inprogress EditLogInputStream

tomscut commented on PR #4219:
URL: https://github.com/apache/hadoop/pull/4219#issuecomment-1151852402

> Thanks @tomscut, after tracing the code, I think we cannot add `elis.isInProgress()`.
>
> I will explain my ideas through questions and answers.
>
> **Question one: Why was INVALID_TXID considered in the original code?**
>
> * The CheckForGaps method is used to check whether the streams contain continuous txids from fromTxId to toAtLeastTxid.
> * LastTxId equal to INVALID_TXID means the stream is in progress.
> * toAtLeastTxid may be an abnormal value, like Long.MAX_VALUE. So the CheckForGaps method only needs to cover the latest in-progress segment.
>
> **Question two: What is the difference between INVALID_TXID and isInProgress()?**
>
> * Before [SBN READ] was introduced, LastTxId equal to INVALID_TXID meant the stream was in progress, and a stream in progress meant its lastTxId was INVALID_TXID.
> * After [SBN READ] was introduced, LastTxId equal to INVALID_TXID still means the stream is in progress, but a stream in progress no longer implies that its lastTxId is INVALID_TXID, because getJournaledEdits was introduced.
> * So if we add `elis.isInProgress()` in CheckForGaps, it cannot cover the last segment being written, which actually contains the latest edit.
>
> Please correct me if anything is wrong.

Thanks @ZanderXu for your comment. Please refer to the stack.

When we set `dfs.ha.tail-edits.in-progress=true`, the txID can be read by getJournaledEdits (there is actually no gap), but a GAP exception is thrown.

[GitHub] [hadoop] hadoop-yetus commented on pull request #4421: YARN-10122. Support signalToContainer API for Federation.

hadoop-yetus commented on PR #4421:

URL: https://github.com/apache/hadoop/pull/4421#issuecomment-1151813201

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 39s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 3 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 37m 50s | | trunk passed |
| +1 :green_heart: | compile | 0m 33s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | compile | 0m 30s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 0m 33s | | trunk passed |
| +1 :green_heart: | mvnsite | 0m 35s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 39s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 23s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 1s | | trunk passed |
| +1 :green_heart: | shadedclient | 19m 52s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 22s | | the patch passed |
| +1 :green_heart: | compile | 0m 25s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 0m 25s | | the patch passed |
| +1 :green_heart: | compile | 0m 22s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 22s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 16s | [/results-checkstyle-hadoop-yarn-project_hadoop-yarn_hadoop-yarn-server_hadoop-yarn-server-router.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/6/artifact/out/results-checkstyle-hadoop-yarn-project_hadoop-yarn_hadoop-yarn-server_hadoop-yarn-server-router.txt) | hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router: The patch generated 2 new + 0 unchanged - 0 fixed = 2 total (was 0) |
| +1 :green_heart: | mvnsite | 0m 25s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 21s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 18s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 0m 49s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 18s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 2m 53s | | hadoop-yarn-server-router in the patch passed. |
| +1 :green_heart: | asflicense | 0m 39s | | The patch does not generate ASF License warnings. |
| | | 91m 5s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4421 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux 2ef1db663bfb 4.15.0-169-generic #177-Ubuntu SMP Thu Feb 3 10:50:38 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 9b5229597df40a06407e4325f5bf024771410629 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/6/testReport/ |
| Max. process+thread count | 1227 (vs. ulimit of 5500) |
| modules | C: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router U: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router |

| Console output |

[GitHub] [hadoop] hadoop-yetus commented on pull request #4421: YARN-10122. Support signalToContainer API for Federation.

hadoop-yetus commented on PR #4421:

URL: https://github.com/apache/hadoop/pull/4421#issuecomment-1151767771

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 40s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available.

|

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 3 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 38m 8s | | trunk passed |

| +1 :green_heart: | compile | 0m 55s | | trunk passed with JDK

Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |

| +1 :green_heart: | compile | 0m 44s | | trunk passed with JDK

Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | checkstyle | 0m 45s | | trunk passed |

| +1 :green_heart: | mvnsite | 0m 48s | | trunk passed |

| +1 :green_heart: | javadoc | 0m 53s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 39s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 16s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 42s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ ||
| +1 :green_heart: | mvninstall | 0m 32s | | the patch passed |
| +1 :green_heart: | compile | 0m 34s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 0m 34s | | the patch passed |
| +1 :green_heart: | compile | 0m 30s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 30s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 23s | [/results-checkstyle-hadoop-yarn-project_hadoop-yarn_hadoop-yarn-server_hadoop-yarn-server-router.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/5/artifact/out/results-checkstyle-hadoop-yarn-project_hadoop-yarn_hadoop-yarn-server_hadoop-yarn-server-router.txt) | hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router: The patch generated 3 new + 0 unchanged - 0 fixed = 3 total (was 0) |
| +1 :green_heart: | mvnsite | 0m 31s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 27s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 28s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 0m 59s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 0s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ ||
| +1 :green_heart: | unit | 3m 12s | | hadoop-yarn-server-router in the patch passed. |
| +1 :green_heart: | asflicense | 0m 50s | | The patch does not generate ASF License warnings. |
| | | 95m 21s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/5/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4421 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux b4ca09636b18 4.15.0-156-generic #163-Ubuntu SMP Thu Aug 19 23:31:58 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 82fb24709a7f6b2fac63bbc1d49f9eebf51ba272 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/5/testReport/ |
| Max. process+thread count | 1295 (vs. ulimit of 5500) |
| modules | C: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router U: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router |

| Console output |

[GitHub] [hadoop] hadoop-yetus commented on pull request #4421: YARN-10122. Support signalToContainer API for Federation.

hadoop-yetus commented on PR #4421:

URL: https://github.com/apache/hadoop/pull/4421#issuecomment-1151765548

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 38s | | Docker mode activated. |
|||| _ Prechecks _ ||
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 1s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 3 new or modified test files. |
|||| _ trunk Compile Tests _ ||
| +1 :green_heart: | mvninstall | 38m 14s | | trunk passed |
| +1 :green_heart: | compile | 0m 56s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | compile | 0m 42s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 0m 43s | | trunk passed |
| +1 :green_heart: | mvnsite | 0m 50s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 51s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 40s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 16s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 29s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ ||
| +1 :green_heart: | mvninstall | 0m 28s | | the patch passed |
| +1 :green_heart: | compile | 0m 30s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 0m 30s | | the patch passed |
| +1 :green_heart: | compile | 0m 25s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 25s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 20s | [/results-checkstyle-hadoop-yarn-project_hadoop-yarn_hadoop-yarn-server_hadoop-yarn-server-router.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/4/artifact/out/results-checkstyle-hadoop-yarn-project_hadoop-yarn_hadoop-yarn-server_hadoop-yarn-server-router.txt) | hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router: The patch generated 3 new + 0 unchanged - 0 fixed = 3 total (was 0) |
| +1 :green_heart: | mvnsite | 0m 32s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 29s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 27s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 3s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 16s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ ||
| +1 :green_heart: | unit | 3m 0s | | hadoop-yarn-server-router in the patch passed. |
| +1 :green_heart: | asflicense | 0m 50s | | The patch does not generate ASF License warnings. |
| | | 95m 1s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4421 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux ebc3ee358ffd 4.15.0-156-generic #163-Ubuntu SMP Thu Aug 19 23:31:58 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 82fb24709a7f6b2fac63bbc1d49f9eebf51ba272 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/4/testReport/ |
| Max. process+thread count | 1266 (vs. ulimit of 5500) |
| modules | C: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router U: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router |

| Console output |

[GitHub] [hadoop] zhengchenyu closed pull request #4408: YARN-11172. Fix TestClientRMTokens#testDelegationToken introduced by HDFS-16563.

zhengchenyu closed pull request #4408: YARN-11172. Fix TestClientRMTokens#testDelegationToken introduced by HDFS-16563. URL: https://github.com/apache/hadoop/pull/4408 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] slfan1989 opened a new pull request, #4423: HDFS-16629. [JDK 11] Fix javadoc warnings in hadoop-hdfs module.

slfan1989 opened a new pull request, #4423: URL: https://github.com/apache/hadoop/pull/4423

JIRA: HDFS-16629. [JDK 11] Fix javadoc warnings in hadoop-hdfs module.

While compiling the most recently committed code, a Javadoc warning appeared; I will fix it.

```
1 error 100 warnings
[INFO]
[INFO] BUILD FAILURE
[INFO]
[INFO] Total time: 37.132 s
[INFO] Finished at: 2022-06-09T17:07:12Z
[INFO]
[ERROR] Failed to execute goal org.apache.maven.plugins:maven-javadoc-plugin:3.0.1:javadoc-no-fork (default-cli) on project hadoop-hdfs: An error has occurred in Javadoc report generation:
[ERROR] Exit code: 1 - javadoc: warning - You have specified the HTML version as HTML 4.01 by using the -html4 option.
[ERROR] The default is currently HTML5 and the support for HTML 4.01 will be removed
[ERROR] in a future release. To suppress this warning, please ensure that any HTML constructs
```

-- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work started] (HADOOP-18284) Fix Repeated Semicolons

[

https://issues.apache.org/jira/browse/HADOOP-18284?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Work on HADOOP-18284 started by fanshilun.

--

> Fix Repeated Semicolons

> ---

>

> Key: HADOOP-18284

> URL: https://issues.apache.org/jira/browse/HADOOP-18284

> Project: Hadoop Common

> Issue Type: Improvement

>Affects Versions: 3.4.0

>Reporter: fanshilun

>Assignee: fanshilun

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> While reading the code, I found a tiny cleanup: some statements end with two

> semicolons. I will fix them. Because the change is simple, I am handling it

> in a single JIRA.

> {code:java}

> private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();;

> {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] slfan1989 commented on a diff in pull request #4421: YARN-10122. Support signalToContainer API for Federation.

slfan1989 commented on code in PR #4421:

URL: https://github.com/apache/hadoop/pull/4421#discussion_r894049538

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router/src/test/java/org/apache/hadoop/yarn/server/router/clientrm/TestableFederationClientInterceptor.java:

##

@@ -75,7 +75,7 @@ protected ApplicationClientProtocol getClientRMProxyForSubCluster(
 mockRM.init(super.getConf());
 mockRM.start();
 try {
- MockNM nm = mockRM.registerNode("127.0.0.1:1234", 8092,4);
+ MockNM nm = mockRM.registerNode("127.0.0.1:1234", 8 * 1024,4);

Review Comment:

I'll fix it.


[GitHub] [hadoop] goiri commented on a diff in pull request #4421: YARN-10122. Support signalToContainer API for Federation.

goiri commented on code in PR #4421:

URL: https://github.com/apache/hadoop/pull/4421#discussion_r894045330

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router/src/test/java/org/apache/hadoop/yarn/server/router/clientrm/TestableFederationClientInterceptor.java:

##

@@ -75,7 +75,7 @@ protected ApplicationClientProtocol getClientRMProxyForSubCluster(
 mockRM.init(super.getConf());
 mockRM.start();
 try {
- MockNM nm = mockRM.registerNode("127.0.0.1:1234", 8092,4);
+ MockNM nm = mockRM.registerNode("127.0.0.1:1234", 8 * 1024,4);

Review Comment:

I was also pointing to the space after the comma.
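The two review points (write the memory size as a product, and put a space after each comma) can be illustrated with a minimal, self-contained sketch. `NodeMemoryDemo` and `describe` are hypothetical names made up for this illustration; the sketch does not use `MockRM` or `MockNM`, and it assumes the original `8092` was meant to be 8 GB expressed in MB.

```java
// Hypothetical demo class; it only illustrates the arithmetic and formatting.
class NodeMemoryDemo {
  // 8 GB in MB. Writing the product makes the intent visible and avoids
  // typing a near-miss literal such as 8092.
  static final int MEMORY_MB = 8 * 1024;
  static final int VCORES = 4;

  // Stand-in for a call that takes an address, a memory size and a vcore count.
  static String describe(String address, int memoryMb, int vCores) {
    return address + " memory=" + memoryMb + "MB vcores=" + vCores;
  }

  public static void main(String[] args) {
    // Note the space after each comma in the argument list.
    System.out.println(describe("127.0.0.1:1234", MEMORY_MB, VCORES));
  }
}
```

The product `8 * 1024` is a compile-time constant, so spelling it out costs nothing at run time.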


[jira] [Work logged] (HADOOP-18241) Move to Java 11

[ https://issues.apache.org/jira/browse/HADOOP-18241?focusedWorklogId=780141=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-780141 ] ASF GitHub Bot logged work on HADOOP-18241: --- Author: ASF GitHub Bot Created on: 09/Jun/22 23:53 Start Date: 09/Jun/22 23:53 Worklog Time Spent: 10m Work Description: slfan1989 commented on PR #4319: URL: https://github.com/apache/hadoop/pull/4319#issuecomment-1151724366 Hi @ayushtkn, I would like to help. While compiling the code I submitted, I saw a Javadoc warning under JDK 11; I will fix it. Issue Time Tracking --- Worklog Id: (was: 780141) Time Spent: 1h 10m (was: 1h) > Move to Java 11 > --- > > Key: HADOOP-18241 > URL: https://issues.apache.org/jira/browse/HADOOP-18241 > Project: Hadoop Common > Issue Type: Improvement >Affects Versions: 3.4.0 >Reporter: Ayush Saxena >Assignee: Ayush Saxena >Priority: Major > Labels: pull-request-available > Time Spent: 1h 10m > Remaining Estimate: 0h > > https://lists.apache.org/thread/h5lmpqo2tz7tc02j44qxpwcnjzpxo0k2 -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] slfan1989 commented on pull request #4319: HADOOP-18241. Move to JAVA 11.

slfan1989 commented on PR #4319: URL: https://github.com/apache/hadoop/pull/4319#issuecomment-1151724366 Hi @ayushtkn, I would like to help. While compiling the code I submitted, I saw a Javadoc warning under JDK 11; I will fix it. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] slfan1989 commented on pull request #4419: HDFS-16627. improve BPServiceActor#register Log Add NN Addr.

slfan1989 commented on PR #4419: URL: https://github.com/apache/hadoop/pull/4419#issuecomment-1151721248 @Hexiaoqiao Please help me review the code again! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] hadoop-yetus commented on pull request #4421: YARN-10122. Support signalToContainer API for Federation.

hadoop-yetus commented on PR #4421:

URL: https://github.com/apache/hadoop/pull/4421#issuecomment-1151720401

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 40s | | Docker mode activated. |
|||| _ Prechecks _ ||
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 3 new or modified test files. |
|||| _ trunk Compile Tests _ ||
| +1 :green_heart: | mvninstall | 37m 53s | | trunk passed |
| +1 :green_heart: | compile | 0m 30s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | compile | 0m 31s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 0m 30s | | trunk passed |
| +1 :green_heart: | mvnsite | 0m 33s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 39s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 25s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 1s | | trunk passed |
| +1 :green_heart: | shadedclient | 19m 33s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ ||
| +1 :green_heart: | mvninstall | 0m 23s | | the patch passed |
| +1 :green_heart: | compile | 0m 24s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 0m 24s | | the patch passed |
| +1 :green_heart: | compile | 0m 21s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 21s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 14s | [/results-checkstyle-hadoop-yarn-project_hadoop-yarn_hadoop-yarn-server_hadoop-yarn-server-router.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/3/artifact/out/results-checkstyle-hadoop-yarn-project_hadoop-yarn_hadoop-yarn-server_hadoop-yarn-server-router.txt) | hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router: The patch generated 2 new + 0 unchanged - 0 fixed = 2 total (was 0) |
| +1 :green_heart: | mvnsite | 0m 25s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 20s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javadoc | 0m 19s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 0m 52s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 19s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ ||
| +1 :green_heart: | unit | 2m 59s | | hadoop-yarn-server-router in the patch passed. |
| +1 :green_heart: | asflicense | 0m 35s | | The patch does not generate ASF License warnings. |
| | | 90m 45s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4421 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux b786df697e64 4.15.0-169-generic #177-Ubuntu SMP Thu Feb 3 10:50:38 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / de4434e15f4c3d22494aba74e1a199c32750eac9 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4421/3/testReport/ |
| Max. process+thread count | 1266 (vs. ulimit of 5500) |
| modules | C: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router U: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router |

| Console output |

[GitHub] [hadoop] slfan1989 commented on pull request #4406: HDFS-16619. improve HttpHeaders.Values And HttpHeaders.Names With recommended Class

slfan1989 commented on PR #4406: URL: https://github.com/apache/hadoop/pull/4406#issuecomment-1151719908 @tomscut Please help review the code. This PR replaces the deprecated import; it should carry no code risk and should not cause any JUnit test to fail. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-18284) Fix Repeated Semicolons

[

https://issues.apache.org/jira/browse/HADOOP-18284?focusedWorklogId=780137=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-780137

]

ASF GitHub Bot logged work on HADOOP-18284:

---

Author: ASF GitHub Bot

Created on: 09/Jun/22 23:47

Start Date: 09/Jun/22 23:47

Worklog Time Spent: 10m

Work Description: slfan1989 opened a new pull request, #4422:

URL: https://github.com/apache/hadoop/pull/4422

JIRA:HADOOP-18284. Fix Repeated Semicolons.

While reading the code, I found a tiny cleanup: some statements end with two

semicolons. I will fix them. Because the change is simple, I am handling it

in a single JIRA.

The code looks like this:

```

private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();;

```
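As a minimal sketch of what the cleanup amounts to (the class `SemicolonCleanupDemo` and its methods are hypothetical and not taken from the Hadoop source): at class level the second semicolon is an empty member declaration, so the original line compiles, and removing it changes no behavior.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical class, used only to show the cleaned-up declaration in context.
class SemicolonCleanupDemo {
  // Before the cleanup this line ended in ";;". The extra semicolon is legal
  // Java (an empty declaration) but redundant, so it is simply dropped.
  private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

  private int value;

  // Updates the value while holding the write lock.
  void set(int newValue) {
    lock.writeLock().lock();
    try {
      value = newValue;
    } finally {
      lock.writeLock().unlock();
    }
  }

  // Reads the value while holding the read lock.
  int get() {
    lock.readLock().lock();
    try {
      return value;
    } finally {
      lock.readLock().unlock();
    }
  }
}
```

Because the change is purely syntactic, no test updates should be needed for it.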

Issue Time Tracking

---

Worklog Id: (was: 780137)

Remaining Estimate: 0h

Time Spent: 10m

> Fix Repeated Semicolons

> ---

>

> Key: HADOOP-18284

> URL: https://issues.apache.org/jira/browse/HADOOP-18284

> Project: Hadoop Common

> Issue Type: Improvement

>Affects Versions: 3.4.0

>Reporter: fanshilun

>Assignee: fanshilun

>Priority: Major

> Fix For: 3.4.0

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> While reading the code, I found a tiny cleanup: some statements end with two

> semicolons. I will fix them. Because the change is simple, I am handling it

> in a single JIRA.

> {code:java}

> private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();;

> {code}


[jira] [Updated] (HADOOP-18284) Fix Repeated Semicolons

[

https://issues.apache.org/jira/browse/HADOOP-18284?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HADOOP-18284:

Labels: pull-request-available (was: )

> Fix Repeated Semicolons

> ---

>

> Key: HADOOP-18284

> URL: https://issues.apache.org/jira/browse/HADOOP-18284

> Project: Hadoop Common

> Issue Type: Improvement

>Affects Versions: 3.4.0

>Reporter: fanshilun

>Assignee: fanshilun

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> While reading the code, I found a tiny cleanup: some statements end with two

> semicolons. I will fix them. Because the change is simple, I am handling it

> in a single JIRA.

> {code:java}

> private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();;

> {code}


[GitHub] [hadoop] slfan1989 opened a new pull request, #4422: HADOOP-18284. Fix Repeated Semicolons.

slfan1989 opened a new pull request, #4422: URL: https://github.com/apache/hadoop/pull/4422 JIRA: HADOOP-18284. Fix Repeated Semicolons. While reading the code, I found a tiny cleanup: some statements end with two semicolons. I will fix them. Because the change is simple, I am handling it in a single JIRA. The code looks like this: ``` private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();; ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] slfan1989 commented on pull request #4382: HDFS-16609. Fix Flakes Junit Tests that often report timeouts.

slfan1989 commented on PR #4382: URL: https://github.com/apache/hadoop/pull/4382#issuecomment-1151717102 @tomscut @Hexiaoqiao Thanks for helping review the code. The JUnit tests have passed, and the Javadoc failure can be ignored for this PR; I will submit a separate PR to fix it. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Created] (HADOOP-18284) Fix Repeated Semicolons

fanshilun created HADOOP-18284:

--

Summary: Fix Repeated Semicolons

Key: HADOOP-18284

URL: https://issues.apache.org/jira/browse/HADOOP-18284

Project: Hadoop Common

Issue Type: Improvement

Affects Versions: 3.4.0

Reporter: fanshilun

Assignee: fanshilun

Fix For: 3.4.0

While reading the code, I found a tiny cleanup: some statements end with two

semicolons. I will fix them. Because the change is simple, I am handling it

in a single JIRA.

{code:java}

private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();;

{code}


[GitHub] [hadoop] hadoop-yetus commented on pull request #4382: HDFS-16609. Fix Flakes Junit Tests that often report timeouts.

hadoop-yetus commented on PR #4382:

URL: https://github.com/apache/hadoop/pull/4382#issuecomment-1151713943

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 56s | | Docker mode activated. |
|||| _ Prechecks _ ||
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 3 new or modified test files. |
|||| _ trunk Compile Tests _ ||
| +1 :green_heart: | mvninstall | 42m 12s | | trunk passed |
| +1 :green_heart: | compile | 1m 43s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | compile | 1m 33s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 21s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 47s | | trunk passed |
| -1 :x: | javadoc | 1m 24s | [/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4382/4/artifact/out/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt) | hadoop-hdfs in trunk failed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1. |
| +1 :green_heart: | javadoc | 1m 39s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 4m 7s | | trunk passed |
| +1 :green_heart: | shadedclient | 26m 51s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ ||
| +1 :green_heart: | mvninstall | 1m 23s | | the patch passed |
| +1 :green_heart: | compile | 1m 31s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 1m 31s | | the patch passed |
| +1 :green_heart: | compile | 1m 24s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 1m 24s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 8s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 35s | | the patch passed |
| -1 :x: | javadoc | 1m 3s | [/patch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4382/4/artifact/out/patch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt) | hadoop-hdfs in the patch failed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1. |
| +1 :green_heart: | javadoc | 1m 35s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 45s | | the patch passed |
| +1 :green_heart: | shadedclient | 26m 8s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ ||
| +1 :green_heart: | unit | 346m 9s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 58s | | The patch does not generate ASF License warnings. |
| | | 467m 30s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4382/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4382 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux d4cd432f2daf 4.15.0-175-generic #184-Ubuntu SMP Thu Mar 24 17:48:36 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 20fe8be4eada6d583a1a3c29272fdf535d7b5365 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4382/4/testReport/ |
| Max. process+thread count | 2359 (vs. ulimit of 5500) |

| modules | C:

[GitHub] [hadoop] slfan1989 commented on a diff in pull request #4421: YARN-10122. Support signalToContainer API for Federation.

slfan1989 commented on code in PR #4421:

URL: https://github.com/apache/hadoop/pull/4421#discussion_r894032927

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router/src/test/java/org/apache/hadoop/yarn/server/router/clientrm/TestableFederationClientInterceptor.java:

##

@@ -71,7 +75,8 @@ protected ApplicationClientProtocol getClientRMProxyForSubCluster(
 mockRM.init(super.getConf());
 mockRM.start();
 try {
- mockRM.registerNode("h1:1234", 1024);
+ MockNM nm = mockRM.registerNode("127.0.0.1:1234", 8092,4);

Review Comment:

Thanks for your help reviewing the code, I will fix it.


[GitHub] [hadoop] goiri commented on a diff in pull request #4421: YARN-10122. Support signalToContainer API for Federation.

goiri commented on code in PR #4421:

URL: https://github.com/apache/hadoop/pull/4421#discussion_r894029331

##
hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-router/src/test/java/org/apache/hadoop/yarn/server/router/clientrm/TestableFederationClientInterceptor.java:
##
@@ -71,7 +75,8 @@ protected ApplicationClientProtocol getClientRMProxyForSubCluster(
  mockRM.init(super.getConf());
  mockRM.start();
  try {
- mockRM.registerNode("h1:1234", 1024);
+ MockNM nm = mockRM.registerNode("127.0.0.1:1234", 8092,4);

Review Comment:

8*1024, 4
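
The suggestion above most likely means writing the memory argument as the expression `8 * 1024` (8192 MB) with 4 vcores, instead of the literal `8092` in the patch. The following is a minimal sketch of that arithmetic only; `NodeResourceSpec` and its members are hypothetical names for illustration and are not part of Hadoop or of the patch under review.

```java
// Hypothetical illustration; NodeResourceSpec is not a Hadoop class.
public final class NodeResourceSpec {
    /** Memory in MB, written as an expression so the GB-to-MB conversion is visible. */
    public static final int MEMORY_MB = 8 * 1024;

    /** Number of virtual cores named in the review comment. */
    public static final int VCORES = 4;

    /** The literal that appears in the patch under review. */
    public static final int PATCH_LITERAL_MB = 8092;

    private NodeResourceSpec() {
    }

    /** Returns how far the patch literal falls short of 8 * 1024. */
    public static int shortfallMb() {
        return MEMORY_MB - PATCH_LITERAL_MB;
    }

    public static void main(String[] args) {
        System.out.println("8 * 1024 = " + MEMORY_MB + " MB, vcores = " + VCORES);
        System.out.println("patch literal " + PATCH_LITERAL_MB
            + " is short by " + shortfallMb() + " MB");
    }
}
```

Writing the value as a product keeps the unit conversion readable and makes a mistyped literal harder to miss in review.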


[GitHub] [hadoop] hadoop-yetus commented on pull request #4406: HDFS-16619. improve HttpHeaders.Values And HttpHeaders.Names With recommended Class

hadoop-yetus commented on PR #4406:

URL: https://github.com/apache/hadoop/pull/4406#issuecomment-1151657257

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:---:|---:|:---|:---:|:---:|
| +0 :ok: | reexec | 0m 50s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 37m 11s | | trunk passed |
| +1 :green_heart: | compile | 1m 44s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | compile | 1m 37s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 24s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 47s | | trunk passed |
| -1 :x: | javadoc | 1m 25s | [/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4406/3/artifact/out/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt) | hadoop-hdfs in trunk failed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1. |
| +1 :green_heart: | javadoc | 1m 49s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 45s | | trunk passed |
| +1 :green_heart: | shadedclient | 23m 43s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 22s | | the patch passed |
| +1 :green_heart: | compile | 1m 28s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 1m 28s | | hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 generated 0 new + 911 unchanged - 26 fixed = 911 total (was 937) |
| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 1m 18s | | hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 generated 0 new + 890 unchanged - 26 fixed = 890 total (was 916) |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 1s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 24s | | the patch passed |
| -1 :x: | javadoc | 0m 55s | [/patch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4406/3/artifact/out/patch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt) | hadoop-hdfs in the patch failed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1. |
| +1 :green_heart: | javadoc | 1m 32s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 18s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 28s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 247m 16s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 14s | | The patch does not generate ASF License warnings. |
| | | 356m 51s | | |

| Subsystem | Report/Notes |
|---:|:---|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4406/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4406 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux fe39bdb378e4 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 44d000361245a4e2cf86089c251598f2d0daacbb |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK

[GitHub] [hadoop] hadoop-yetus commented on pull request #4419: HDFS-16627. improve BPServiceActor#register Log Add NN Addr.

hadoop-yetus commented on PR #4419:

URL: https://github.com/apache/hadoop/pull/4419#issuecomment-1151655696

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:---:|---:|:---|:---:|:---:|
| +0 :ok: | reexec | 0m 37s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 37m 10s | | trunk passed |
| +1 :green_heart: | compile | 1m 41s | | trunk passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | compile | 1m 39s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 23s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 43s | | trunk passed |
| -1 :x: | javadoc | 1m 24s | [/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4419/3/artifact/out/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt) | hadoop-hdfs in trunk failed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1. |
| +1 :green_heart: | javadoc | 1m 49s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 41s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 58s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 25s | | the patch passed |
| +1 :green_heart: | compile | 1m 26s | | the patch passed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1 |
| +1 :green_heart: | javac | 1m 26s | | the patch passed |
| +1 :green_heart: | compile | 1m 21s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 1m 21s | | the patch passed |
| +1 :green_heart: | blanks | 0m 1s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 0s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 27s | | the patch passed |
| -1 :x: | javadoc | 0m 57s | [/patch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4419/3/artifact/out/patch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-11.0.15+10-Ubuntu-0ubuntu0.20.04.1.txt) | hadoop-hdfs in the patch failed with JDK Private Build-11.0.15+10-Ubuntu-0ubuntu0.20.04.1. |
| +1 :green_heart: | javadoc | 1m 29s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 23s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 25s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 251m 3s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 14s | | The patch does not generate ASF License warnings. |
| | | 359m 36s | | |

| Subsystem | Report/Notes |
|---:|:---|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4419/3/artifact/out/Dockerfile