[jira] [Commented] (HADOOP-18146) ABFS: Add changes for expect hundred continue header with append requests

[ https://issues.apache.org/jira/browse/HADOOP-18146?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17644176#comment-17644176 ]

ASF GitHub Bot commented on HADOOP-18146:

-

hadoop-yetus commented on PR #4039:

URL: https://github.com/apache/hadoop/pull/4039#issuecomment-1340508430

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 7s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +0 :ok: | xmllint | 0m 0s | | xmllint was not available. |
| +0 :ok: | markdownlint | 0m 1s | | markdownlint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 3 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 38m 28s | | trunk passed |
| +1 :green_heart: | compile | 0m 51s | | trunk passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | compile | 0m 38s | | trunk passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 0m 35s | | trunk passed |
| +1 :green_heart: | mvnsite | 0m 44s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 45s | | trunk passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javadoc | 0m 32s | | trunk passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 20s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 26s | | branch has no errors when building and testing our client artifacts. |
| -0 :warning: | patch | 20m 44s | | Used diff version of patch file. Binary files and potentially other changes not applied. Please rebase and squash commits if necessary. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 31s | | the patch passed |
| +1 :green_heart: | compile | 0m 32s | | the patch passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javac | 0m 32s | | the patch passed |
| +1 :green_heart: | compile | 0m 29s | | the patch passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 29s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 19s | | the patch passed |
| +1 :green_heart: | mvnsite | 0m 32s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 24s | | the patch passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javadoc | 0m 23s | | the patch passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 1m 3s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 5s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 2m 11s | | hadoop-azure in the patch passed. |
| +1 :green_heart: | asflicense | 0m 37s | | The patch does not generate ASF License warnings. |
| | | 93m 53s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4039/30/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4039 |
| JIRA Issue | HADOOP-18146 |
| Optional Tests | dupname asflicense codespell detsecrets xmllint compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle markdownlint |
| uname | Linux d2a2217c4b6e 4.15.0-200-generic #211-Ubuntu SMP Thu Nov 24 18:16:04 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / f2e6f522fb3d170cbf33abf0d0cdb348f925c43a |
| Default Java | Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4039/30/testReport/ |
| Max. process+thread count | 555 (vs. ulimit of 5500) |

| modules

[GitHub] [hadoop] hadoop-yetus commented on pull request #4039: HADOOP-18146: ABFS: Added changes for expect hundred continue header

hadoop-yetus commented on PR #4039:

URL: https://github.com/apache/hadoop/pull/4039#issuecomment-1340508430


| modules | C: hadoop-tools/hadoop-azure U: hadoop-tools/hadoop-azure |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4039/30/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache

[GitHub] [hadoop] Neilxzn commented on pull request #5184: HDFS-16861. RBF. Truncate API always fails when dirs use AllResolver oder on Router

Neilxzn commented on PR #5184:

URL: https://github.com/apache/hadoop/pull/5184#issuecomment-1340323320

The Jenkins script failed with code 125, which seems unrelated to the patch. @tomscut, could you please help me run the tests again?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] dingshun3016 commented on pull request #5180: HDFS-16858. Dynamically adjust max slow disks to exclude.

dingshun3016 commented on PR #5180:

URL: https://github.com/apache/hadoop/pull/5180#issuecomment-1340309901

> Please fix checkstyle warning and failed unit test.

@tomscut Thanks for your review. I have fixed the checkstyle warning. The failed unit test looks unrelated to the change.

[jira] [Commented] (HADOOP-18183) s3a audit logs to publish range start/end of GET requests in audit header

[ https://issues.apache.org/jira/browse/HADOOP-18183?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17644086#comment-17644086 ]

ASF GitHub Bot commented on HADOOP-18183:

-

dannycjones commented on PR #5110:

URL: https://github.com/apache/hadoop/pull/5110#issuecomment-1340246393

Hey @steveloughran - do you have time to give this another pass over the next week?

> s3a audit logs to publish range start/end of GET requests in audit header

> -

>

> Key: HADOOP-18183

> URL: https://issues.apache.org/jira/browse/HADOOP-18183

> Project: Hadoop Common

> Issue Type: Sub-task

> Components: fs/s3

>Affects Versions: 3.3.2

>Reporter: Steve Loughran

>Assignee: Ankit Saurabh

>Priority: Minor

> Labels: pull-request-available

>

> We don't get the range of ranged GET requests in S3 server logs, because the
> AWS S3 log doesn't record that information. We can see it's a partial GET
> from the 206 response, but the length of data retrieved is lost.
> LoggingAuditor.beforeExecution() would need to recognise a ranged GET and
> determine the extra key-value pairs for range start and end (rs & re?).
> We might need to modify {{HttpReferrerAuditHeader.buildHttpReferrer()}} to
> take a map of so it can dynamically create a header for each
> request; currently that is not in there.
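The description above proposes that LoggingAuditor recognise a ranged GET and add range start/end (rs/re) attributes to the audit header. The following is a minimal Java sketch of that parsing step, assuming the request carries a standard single-range header such as `Range: bytes=100-199` (both ends inclusive) and that the extra attributes are handed over as a `Map<String, String>`. `RangeAuditSketch`, `rangeAttributes` and `appendToReferrer` are hypothetical names, not part of the S3A codebase, and the real change may look quite different.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper (not part of S3A): derives the proposed "rs"/"re"
 * audit attributes from an HTTP Range header and appends them to a
 * referrer-style URL.
 */
final class RangeAuditSketch {

  // Matches a single byte range "bytes=start-end" or an open-ended "bytes=start-".
  private static final Pattern SINGLE_RANGE = Pattern.compile("bytes=(\\d+)-(\\d*)");

  private RangeAuditSketch() {
  }

  /**
   * Returns rs (and re, if present) for a single-range header; returns an
   * empty map for a missing header or anything else, e.g. multiple ranges.
   */
  static Map<String, String> rangeAttributes(String rangeHeader) {
    Map<String, String> attrs = new LinkedHashMap<>();
    if (rangeHeader == null) {
      return attrs;
    }
    Matcher m = SINGLE_RANGE.matcher(rangeHeader.trim());
    if (m.matches()) {
      attrs.put("rs", m.group(1));
      if (!m.group(2).isEmpty()) {
        attrs.put("re", m.group(2));
      }
    }
    return attrs;
  }

  /**
   * Appends each attribute as an extra query parameter in insertion order.
   * Keys are fixed and values are digits only, so no URL encoding is needed here.
   */
  static String appendToReferrer(String referrer, Map<String, String> attrs) {
    StringBuilder sb = new StringBuilder(referrer);
    char sep = referrer.indexOf('?') >= 0 ? '&' : '?';
    for (Map.Entry<String, String> e : attrs.entrySet()) {
      sb.append(sep).append(e.getKey()).append('=').append(e.getValue());
      sep = '&';
    }
    return sb.toString();
  }
}
```

For example, `appendToReferrer("https://audit.example.org/op?id=1", rangeAttributes("bytes=100-199"))` returns `https://audit.example.org/op?id=1&rs=100&re=199` (the host is a placeholder). Multi-range headers such as `bytes=0-1,5-6` are deliberately ignored so that only an unambiguous start/end pair is logged.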

--

This message was sent by Atlassian Jira

(v8.20.10#820010)


[GitHub] [hadoop] dannycjones commented on pull request #5110: HADOOP-18183. s3a audit logs to publish range start/end of GET requests in audit header

dannycjones commented on PR #5110:

URL: https://github.com/apache/hadoop/pull/5110#issuecomment-1340246393

Hey @steveloughran - do you have time to give this another pass over the next week?

[GitHub] [hadoop] slfan1989 commented on a diff in pull request #5169: YARN-11349. [Federation] Router Support DelegationToken With SQL.

slfan1989 commented on code in PR #5169:

URL: https://github.com/apache/hadoop/pull/5169#discussion_r1041594729

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-common/src/main/java/org/apache/hadoop/yarn/server/federation/store/utils/RowCountHandler.java:

##

@@ -0,0 +1,58 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.yarn.server.federation.store.utils;

+

+import org.apache.hadoop.util.StringUtils;

+

+import java.sql.SQLException;

+

+/**

+ * RowCount Handler.

+ * Used to parse out the rowCount information of the output parameter.

+ */

+public class RowCountHandler implements ResultSetHandler {

Review Comment:

I will create a sql folder and move the related classes into it.
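RowCountHandler extracts the rowCount_OUT value that the stored procedures return; the store methods in this PR then treat anything other than exactly one affected row as an error. The Java sketch below isolates that check, assuming simplified unchecked exceptions in place of the YarnException-based helpers (such as `FederationStateStoreUtils.logAndThrowStoreException`) used in the diff. `RowCountCheckSketch` and `requireSingleRow` are hypothetical names.

```java
/**
 * Hypothetical helper: interprets the rowCount_OUT value returned by a
 * single-row insert/delete procedure. An unchecked exception stands in for
 * Hadoop's YarnException-based helpers.
 */
final class RowCountCheckSketch {

  /** Raised when the database reports an unexpected number of affected rows. */
  static final class UnexpectedRowCountException extends RuntimeException {
    UnexpectedRowCountException(String message) {
      super(message);
    }
  }

  private RowCountCheckSketch() {
  }

  /**
   * Verifies that exactly one row was affected.
   *
   * @param rowCount value of the OUT parameter; null if the procedure did not set it
   * @param action   short description for error messages, e.g. "insert"
   * @param keyId    id of the master key being written or deleted
   */
  static void requireSingleRow(Integer rowCount, String action, int keyId) {
    // Check null first: comparing a null Integer with an int literal unboxes and throws NPE.
    if (rowCount == null) {
      throw new UnexpectedRowCountException(String.format(
          "No rowCount returned for %s of masterKey, keyId = %d.", action, keyId));
    }
    if (rowCount == 0) {
      // Nothing was written or deleted, e.g. the key does not exist.
      throw new UnexpectedRowCountException(String.format(
          "No record affected by %s of masterKey, keyId = %d.", action, keyId));
    }
    if (rowCount != 1) {
      // More than one row (or a negative count) points at a misconfigured database.
      throw new UnexpectedRowCountException(String.format(
          "Expected 1 record affected by %s of masterKey, keyId = %d, but got %d.",
          action, keyId, rowCount));
    }
  }
}
```

The explicit `null` check is the main design difference from the diff: there, `Integer rowCount` compared with `!= 1` is auto-unboxed, so if the helper ever returned `null` the failure would surface as a `NullPointerException` rather than a descriptive store error.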


[GitHub] [hadoop] slfan1989 commented on a diff in pull request #5169: YARN-11349. [Federation] Router Support DelegationToken With SQL.

slfan1989 commented on code in PR #5169:

URL: https://github.com/apache/hadoop/pull/5169#discussion_r1041594322

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-common/src/main/java/org/apache/hadoop/yarn/server/federation/store/impl/SQLFederationStateStore.java:

##

@@ -1353,45 +1384,454 @@ public Connection getConn() {
     return conn;
   }
+  /**
+   * SQLFederationStateStore Supports Store New MasterKey.
+   *
+   * @param request The request contains RouterMasterKey, which is an abstraction for DelegationKey.
+   * @return routerMasterKeyResponse, the response contains the RouterMasterKey.
+   * @throws YarnException if the call to the state store is unsuccessful.
+   * @throws IOException An IO Error occurred.
+   */
   @Override
   public RouterMasterKeyResponse storeNewMasterKey(RouterMasterKeyRequest request)
       throws YarnException, IOException {
-    throw new NotImplementedException("Code is not implemented");
+
+    // Step1: Verify parameters to ensure that key fields are not empty.
+    FederationRouterRMTokenInputValidator.validate(request);
+
+    // Step2: Parse the parameters and serialize the DelegationKey as a string.
+    DelegationKey delegationKey = convertMasterKeyToDelegationKey(request);
+    int keyId = delegationKey.getKeyId();
+    String delegationKeyStr = FederationStateStoreUtils.encodeWritable(delegationKey);
+
+    // Step3: Store data in the database.
+    try {
+
+      FederationSQLOutParameter rowCountOUT =
+          new FederationSQLOutParameter<>("rowCount_OUT", java.sql.Types.INTEGER, Integer.class);
+
+      // Execute the query
+      long startTime = clock.getTime();
+      Integer rowCount = getRowCountByProcedureSQL(CALL_SP_ADD_MASTERKEY, keyId,
+          delegationKeyStr, rowCountOUT);
+      long stopTime = clock.getTime();
+
+      // We expect exactly 1 record to be written to the database.
+      // If the number of records is not 1, the data was written incorrectly.
+      if (rowCount != 1) {
+        FederationStateStoreUtils.logAndThrowStoreException(LOG,
+            "Wrong behavior during the insertion of masterKey, keyId = %s. " +
+            "Please check the records of the database.", String.valueOf(keyId));
+      }
+      FederationStateStoreClientMetrics.succeededStateStoreCall(stopTime - startTime);
+    } catch (SQLException e) {
+      FederationStateStoreClientMetrics.failedStateStoreCall();
+      FederationStateStoreUtils.logAndThrowRetriableException(e, LOG,
+          "Unable to insert the new masterKey, keyId = %s.", String.valueOf(keyId));
+    }
+
+    // Step4: Query the data from the database and return the result.
+    return getMasterKeyByDelegationKey(request);
   }
+  /**
+   * SQLFederationStateStore Supports Remove MasterKey.
+   *
+   * The sp_deleteMasterKey procedure is defined as follows.
+   * This procedure requires 1 input parameter and 1 output parameter.
+   * Input parameters
+   * 1. IN keyId_IN int
+   * Output parameters
+   * 2. OUT rowCount_OUT int
+   *
+   * @param request The request contains RouterMasterKey, which is an abstraction for DelegationKey.
+   * @return routerMasterKeyResponse, the response contains the RouterMasterKey.
+   * @throws YarnException if the call to the state store is unsuccessful.
+   * @throws IOException An IO Error occurred.
+   */
   @Override
   public RouterMasterKeyResponse removeStoredMasterKey(RouterMasterKeyRequest request)
       throws YarnException, IOException {
-    throw new NotImplementedException("Code is not implemented");
+
+    // Step1: Verify parameters to ensure that key fields are not empty.
+    FederationRouterRMTokenInputValidator.validate(request);
+
+    // Step2: Parse parameters and get KeyId.
+    RouterMasterKey paramMasterKey = request.getRouterMasterKey();
+    int paramKeyId = paramMasterKey.getKeyId();
+
+    // Step3: Clear the data from the database.
+    try {
+
+      // Execute the query
+      long startTime = clock.getTime();
+      FederationSQLOutParameter rowCountOUT =
+          new FederationSQLOutParameter<>("rowCount_OUT", java.sql.Types.INTEGER, Integer.class);
+      Integer rowCount = getRowCountByProcedureSQL(CALL_SP_DELETE_MASTERKEY,
+          paramKeyId, rowCountOUT);
+      long stopTime = clock.getTime();
+
+      // if it is equal to 0 it means the call
+      // did not delete the masterKey from FederationStateStore
+      if (rowCount == 0) {
+        FederationStateStoreUtils.logAndThrowStoreException(LOG,
+            "masterKeyId = %s does not exist.", String.valueOf(paramKeyId));
+      } else if (rowCount != 1) {
+        // if it is different from 1 it means the call
+        // had a wrong behavior. Maybe the database is not set correctly.
+        FederationStateStoreUtils.logAndThrowStoreException(LOG,
+            "Wrong behavior during deleting the keyId %s. " +
+            "The database is expected to delete 1 record, " +
+            "but the number of

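Step2 of `storeNewMasterKey` in the diff above serializes the DelegationKey to a string with `FederationStateStoreUtils.encodeWritable` so that it can be passed to the stored procedure. The exact encoding is not shown in the diff; the Java sketch below assumes a Writable-style binary layout wrapped in Base64, uses a simplified fixed-width field layout that is not the real DelegationKey wire format, and all names in it (`MasterKeyCodecSketch`, `Key`, `encode`, `decode`) are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.util.Base64;

/**
 * Hypothetical sketch: serialize a master key's fields to a Base64 string for a
 * text column and decode it again. The fixed-width layout is a simplification,
 * NOT the actual DelegationKey serialization.
 */
final class MasterKeyCodecSketch {

  /** Minimal stand-in for the DelegationKey fields (id, expiry, secret bytes). */
  static final class Key {
    final int keyId;
    final long expiryDate;
    final byte[] secret;

    Key(int keyId, long expiryDate, byte[] secret) {
      this.keyId = keyId;
      this.expiryDate = expiryDate;
      this.secret = secret.clone(); // defensive copy
    }
  }

  private MasterKeyCodecSketch() {
  }

  /** Writes the fields in a fixed order, then Base64-encodes the bytes. */
  static String encode(Key key) throws IOException {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    try (DataOutputStream out = new DataOutputStream(bytes)) {
      out.writeInt(key.keyId);
      out.writeLong(key.expiryDate);
      out.writeInt(key.secret.length); // length prefix so decode knows how much to read
      out.write(key.secret);
    } // closing the stream flushes everything into 'bytes'
    return Base64.getEncoder().encodeToString(bytes.toByteArray());
  }

  /** Reverses encode(): Base64-decodes and reads the fields in the same order. */
  static Key decode(String encoded) throws IOException {
    byte[] raw = Base64.getDecoder().decode(encoded);
    try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(raw))) {
      int keyId = in.readInt();
      long expiryDate = in.readLong();
      byte[] secret = new byte[in.readInt()];
      in.readFully(secret);
      return new Key(keyId, expiryDate, secret);
    }
  }
}
```

Storing the key as text keeps the stored-procedure signature simple (a string column) at the cost of the Base64 form being about a third larger than the raw bytes.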
[GitHub] [hadoop] slfan1989 commented on a diff in pull request #5131: YARN-11350. [Federation] Router Support DelegationToken With ZK.

slfan1989 commented on code in PR #5131:

URL: https://github.com/apache/hadoop/pull/5131#discussion_r1041589814

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-common/src/test/java/org/apache/hadoop/yarn/server/federation/store/impl/TestZookeeperFederationStateStore.java:

##

@@ -171,38 +203,117 @@ public void testMetricsInited() throws Exception {

MetricsRecords.assertMetric(record,

"UpdateReservationHomeSubClusterNumOps", expectOps);

}

- @Test(expected = NotImplementedException.class)

+ @Test

public void testStoreNewMasterKey() throws Exception {

super.testStoreNewMasterKey();

}

- @Test(expected = NotImplementedException.class)

+ @Test

Review Comment:

I agree with you; I will remove this part of the code.


[GitHub] [hadoop] slfan1989 commented on a diff in pull request #5131: YARN-11350. [Federation] Router Support DelegationToken With ZK.

slfan1989 commented on code in PR #5131:

URL: https://github.com/apache/hadoop/pull/5131#discussion_r1041589119

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-common/src/test/java/org/apache/hadoop/yarn/server/federation/store/impl/TestSQLFederationStateStore.java:

##

@@ -592,4 +588,14 @@ public void testRemoveStoredToken() throws IOException, YarnException {
   public void testGetTokenByRouterStoreToken() throws IOException, YarnException {
     super.testGetTokenByRouterStoreToken();
   }
+
+  @Override
+  protected void checkRouterMasterKey(DelegationKey delegationKey,
+      RouterMasterKey routerMasterKey) throws YarnException, IOException {

Review Comment:

Thank you for your suggestion! I'll add a comment explaining what the method does.


[GitHub] [hadoop] slfan1989 commented on a diff in pull request #5131: YARN-11350. [Federation] Router Support DelegationToken With ZK.

slfan1989 commented on code in PR #5131:

URL: https://github.com/apache/hadoop/pull/5131#discussion_r1041588318

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-common/src/test/java/org/apache/hadoop/yarn/server/federation/store/impl/TestSQLFederationStateStore.java:

##

@@ -18,21 +18,17 @@
 package org.apache.hadoop.yarn.server.federation.store.impl;
 import org.apache.commons.lang3.NotImplementedException;
+import org.apache.hadoop.security.token.delegation.DelegationKey;
 import org.apache.hadoop.test.LambdaTestUtils;
 import org.apache.hadoop.util.Time;
 import org.apache.hadoop.yarn.api.records.ApplicationId;
 import org.apache.hadoop.yarn.api.records.ReservationId;
 import org.apache.hadoop.yarn.conf.YarnConfiguration;
 import org.apache.hadoop.yarn.exceptions.YarnException;
+import org.apache.hadoop.yarn.security.client.RMDelegationTokenIdentifier;
 import org.apache.hadoop.yarn.server.federation.store.FederationStateStore;
 import org.apache.hadoop.yarn.server.federation.store.metrics.FederationStateStoreClientMetrics;
-import org.apache.hadoop.yarn.server.federation.store.records.SubClusterId;
-import org.apache.hadoop.yarn.server.federation.store.records.SubClusterInfo;
-import org.apache.hadoop.yarn.server.federation.store.records.SubClusterRegisterRequest;
-import org.apache.hadoop.yarn.server.federation.store.records.ReservationHomeSubCluster;
-import org.apache.hadoop.yarn.server.federation.store.records.AddReservationHomeSubClusterRequest;
-import org.apache.hadoop.yarn.server.federation.store.records.UpdateReservationHomeSubClusterRequest;
-import org.apache.hadoop.yarn.server.federation.store.records.DeleteReservationHomeSubClusterRequest;
+import org.apache.hadoop.yarn.server.federation.store.records.*;

Review Comment:

Thank you very much for reviewing the code; I will fix it.

[jira] [Commented] (HADOOP-18329) Add support for IBM Semeru OE JRE 11.0.15.0 and greater

[ https://issues.apache.org/jira/browse/HADOOP-18329?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17644048#comment-17644048 ]

ASF GitHub Bot commented on HADOOP-18329:

-

hadoop-yetus commented on PR #4537:

URL: https://github.com/apache/hadoop/pull/4537#issuecomment-1340106496

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 54s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 2s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 2s | | detect-secrets was not available. |
| +0 :ok: | xmllint | 0m 2s | | xmllint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 5 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 20m 19s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 29m 15s | | trunk passed |
| +1 :green_heart: | compile | 25m 25s | | trunk passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | compile | 21m 41s | | trunk passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 14s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 18s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 46s | | trunk passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javadoc | 2m 12s | | trunk passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 0s | | trunk passed |
| +1 :green_heart: | shadedclient | 23m 18s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 54s | | the patch passed |
| +1 :green_heart: | compile | 24m 29s | | the patch passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javac | 24m 29s | | the patch passed |
| +1 :green_heart: | compile | 21m 41s | | the patch passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 21m 41s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 9s | | the patch passed |
| +1 :green_heart: | mvnsite | 3m 15s | | the patch passed |
| +1 :green_heart: | javadoc | 2m 34s | | the patch passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javadoc | 2m 12s | | the patch passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| -1 :x: | spotbugs | 1m 0s | [/new-spotbugs-hadoop-common-project_hadoop-auth.html](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4537/6/artifact/out/new-spotbugs-hadoop-common-project_hadoop-auth.html) | hadoop-common-project/hadoop-auth generated 1 new + 0 unchanged - 0 fixed = 1 total (was 0) |
| +1 :green_heart: | shadedclient | 24m 9s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 0m 38s | | hadoop-minikdc in the patch passed. |
| +1 :green_heart: | unit | 3m 18s | | hadoop-auth in the patch passed. |
| +1 :green_heart: | unit | 18m 13s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 1m 17s | | hadoop-registry in the patch passed. |
| +1 :green_heart: | asflicense | 0m 52s | | The patch does not generate ASF License warnings. |
| | | 250m 48s | | |

| Reason | Tests |
|-------:|:------|
| SpotBugs | module:hadoop-common-project/hadoop-auth |
| | org.apache.hadoop.util.PlatformName.() creates a org.apache.hadoop.util.PlatformName$SystemClassAccessor classloader, which should be performed within a doPrivileged block At PlatformName.java:[line 61] |
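The SpotBugs finding above flags a ClassLoader subclass being instantiated outside a privileged block. A common way to address this class of warning is to create the loader inside `AccessController.doPrivileged`; the Java sketch below shows that pattern in isolation. `PrivilegedLoaderSketch` and `SystemClassAccessorSketch` are hypothetical names that only mirror the class named in the report; this is not the code from PR #4537. Note that `AccessController` is deprecated for removal since Java 17, so newer JDKs emit a deprecation warning when compiling it.

```java
import java.security.AccessController;
import java.security.PrivilegedAction;

/**
 * Hypothetical sketch: instantiate a ClassLoader subclass inside
 * AccessController.doPrivileged, which is what the SpotBugs warning asks for.
 * Not taken from Hadoop's PlatformName.
 */
final class PrivilegedLoaderSketch {

  /** Stand-in for a ClassLoader subclass such as the one named in the report. */
  static final class SystemClassAccessorSketch extends ClassLoader {
    SystemClassAccessorSketch() {
      // Delegate class loading to the system class loader.
      super(ClassLoader.getSystemClassLoader());
    }
  }

  // Created in the static initializer. Under a SecurityManager, doPrivileged makes the
  // "createClassLoader" check consider this code's permissions, not those of its callers.
  private static final SystemClassAccessorSketch ACCESSOR =
      AccessController.doPrivileged(
          (PrivilegedAction<SystemClassAccessorSketch>) SystemClassAccessorSketch::new);

  private PrivilegedLoaderSketch() {
  }

  static ClassLoader accessor() {
    return ACCESSOR;
  }
}
```

The cast to `PrivilegedAction` is needed because `doPrivileged` is overloaded for both `PrivilegedAction` and `PrivilegedExceptionAction`, and a bare constructor reference would be ambiguous.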

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4537/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4537 |
| Optional Tests | dupname

[GitHub] [hadoop] hadoop-yetus commented on pull request #4537: HADOOP-18329 - Support for IBM Semeru JVM v>11.0.15.0 Vendor Name Changes

hadoop-yetus commented on PR #4537:

URL: https://github.com/apache/hadoop/pull/4537#issuecomment-1340106496

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 54s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 2s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 2s | | detect-secrets was not available. |
| +0 :ok: | xmllint | 0m 2s | | xmllint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 5 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 20m 19s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 29m 15s | | trunk passed |
| +1 :green_heart: | compile | 25m 25s | | trunk passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | compile | 21m 41s | | trunk passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 14s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 18s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 46s | | trunk passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javadoc | 2m 12s | | trunk passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 0s | | trunk passed |
| +1 :green_heart: | shadedclient | 23m 18s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 54s | | the patch passed |
| +1 :green_heart: | compile | 24m 29s | | the patch passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javac | 24m 29s | | the patch passed |
| +1 :green_heart: | compile | 21m 41s | | the patch passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 21m 41s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 9s | | the patch passed |
| +1 :green_heart: | mvnsite | 3m 15s | | the patch passed |
| +1 :green_heart: | javadoc | 2m 34s | | the patch passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javadoc | 2m 12s | | the patch passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| -1 :x: | spotbugs | 1m 0s | [/new-spotbugs-hadoop-common-project_hadoop-auth.html](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4537/6/artifact/out/new-spotbugs-hadoop-common-project_hadoop-auth.html) | hadoop-common-project/hadoop-auth generated 1 new + 0 unchanged - 0 fixed = 1 total (was 0) |
| +1 :green_heart: | shadedclient | 24m 9s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 0m 38s | | hadoop-minikdc in the patch passed. |
| +1 :green_heart: | unit | 3m 18s | | hadoop-auth in the patch passed. |
| +1 :green_heart: | unit | 18m 13s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 1m 17s | | hadoop-registry in the patch passed. |
| +1 :green_heart: | asflicense | 0m 52s | | The patch does not generate ASF License warnings. |
| | | 250m 48s | | |

| Reason | Tests |
|---:|:--|
| SpotBugs | module:hadoop-common-project/hadoop-auth |
| | org.apache.hadoop.util.PlatformName.() creates a org.apache.hadoop.util.PlatformName$SystemClassAccessor classloader, which should be performed within a doPrivileged block At PlatformName.java:a org.apache.hadoop.util.PlatformName$SystemClassAccessor classloader, which should be performed within a doPrivileged block At PlatformName.java:[line 61] |
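The SpotBugs finding above (the `DP_CREATE_CLASSLOADER_INSIDE_DO_PRIVILEGED` pattern) asks for the classloader to be created inside a privileged block. Here is a minimal sketch of that shape. It assumes a `ClassLoader` subclass whose role is similar to `PlatformName$SystemClassAccessor`, and it wraps the constructor call in `AccessController.doPrivileged`. The `PrivilegedLoaderSketch` and `HypotheticalAccessor` names are illustrative only and are not Hadoop code. `AccessController` has been deprecated for removal since Java 17 (JEP 411), but it is still available on the Java 8 and 11 JDKs used in this report.

```java
import java.security.AccessController;
import java.security.PrivilegedAction;

/**
 * Sketch only: shows the construction pattern SpotBugs asks for.
 * HypotheticalAccessor stands in for a class like PlatformName$SystemClassAccessor;
 * it is not the Hadoop implementation.
 */
public class PrivilegedLoaderSketch {

    /** Hypothetical ClassLoader subclass that delegates to the system class loader. */
    static final class HypotheticalAccessor extends ClassLoader {
        HypotheticalAccessor() {
            super(ClassLoader.getSystemClassLoader());
        }

        /** Returns true if the named class can be loaded; the class is not initialized. */
        boolean isPresent(String className) {
            try {
                Class.forName(className, false, this);
                return true;
            } catch (ClassNotFoundException e) {
                return false;
            }
        }
    }

    /** Creates the loader inside a privileged block, as the SpotBugs message recommends. */
    @SuppressWarnings("removal") // AccessController is deprecated for removal since Java 17
    static HypotheticalAccessor newAccessor() {
        return AccessController.doPrivileged(
            (PrivilegedAction<HypotheticalAccessor>) HypotheticalAccessor::new);
    }

    public static void main(String[] args) {
        HypotheticalAccessor accessor = newAccessor();
        System.out.println(accessor.isPresent("java.lang.String"));        // true
        System.out.println(accessor.isPresent("com.example.NoSuchClass")); // false
    }
}
```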

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4537/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4537 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets xmllint |
| uname | Linux 42f9668f9d01 4.15.0-200-generic #211-Ubuntu SMP Thu Nov 24 18:16:04 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |

[jira] [Commented] (HADOOP-18561) CVE-2021-37533 on commons-net is included in hadoop common and hadoop-client-runtime

[ https://issues.apache.org/jira/browse/HADOOP-18561?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17644043#comment-17644043 ] Steve Loughran commented on HADOOP-18561: - Looks legit, though it's only of interest to the ftp filesystem and we don't recommend that (maybe it's time to cut it). HADOOP-18361 moved hadoop branch-3.3 to commons-net 3.8.0, but that is still exposed. Why don't you submit a PR updating the pom? > CVE-2021-37533 on commons-net is included in hadoop common and > hadoop-client-runtime > > > Key: HADOOP-18561 > URL: https://issues.apache.org/jira/browse/HADOOP-18561 > Project: Hadoop Common > Issue Type: Improvement >Reporter: phoebe chen >Priority: Major > > Latest 3.3.4 version of hadoop-common and hadoop-client-runtime includes > commons-net in version 3.6, which has vulnerability CVE-2021-37533. Need to > upgrade it to 3.9 to fix. -- This message was sent by Atlassian Jira (v8.20.10#820010) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Updated] (HADOOP-18561) CVE-2021-37533 on commons-net is included in hadoop common and hadoop-client-runtime

[ https://issues.apache.org/jira/browse/HADOOP-18561?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Steve Loughran updated HADOOP-18561: Component/s: build > CVE-2021-37533 on commons-net is included in hadoop common and > hadoop-client-runtime > > > Key: HADOOP-18561 > URL: https://issues.apache.org/jira/browse/HADOOP-18561 > Project: Hadoop Common > Issue Type: Improvement > Components: build >Affects Versions: 3.3.5, 3.3.4 >Reporter: phoebe chen >Priority: Major > Labels: transitive-cve > > Latest 3.3.4 version of hadoop-common and hadoop-client-runtime includes > commons-net in version 3.6, which has vulnerability CVE-2021-37533. Need to > upgrade it to 3.9 to fix.

[jira] [Updated] (HADOOP-18561) CVE-2021-37533 on commons-net is included in hadoop common and hadoop-client-runtime

[ https://issues.apache.org/jira/browse/HADOOP-18561?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Steve Loughran updated HADOOP-18561: Labels: transitive-cve (was: ) > CVE-2021-37533 on commons-net is included in hadoop common and > hadoop-client-runtime > > > Key: HADOOP-18561 > URL: https://issues.apache.org/jira/browse/HADOOP-18561 > Project: Hadoop Common > Issue Type: Improvement >Affects Versions: 3.3.5, 3.3.4 >Reporter: phoebe chen >Priority: Major > Labels: transitive-cve > > Latest 3.3.4 version of hadoop-common and hadoop-client-runtime includes > commons-net in version 3.6, which has vulnerability CVE-2021-37533. Need to > upgrade it to 3.9 to fix.

[jira] [Updated] (HADOOP-18561) CVE-2021-37533 on commons-net is included in hadoop common and hadoop-client-runtime

[ https://issues.apache.org/jira/browse/HADOOP-18561?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Steve Loughran updated HADOOP-18561: Affects Version/s: 3.3.4 3.3.5 > CVE-2021-37533 on commons-net is included in hadoop common and > hadoop-client-runtime > > > Key: HADOOP-18561 > URL: https://issues.apache.org/jira/browse/HADOOP-18561 > Project: Hadoop Common > Issue Type: Improvement >Affects Versions: 3.3.5, 3.3.4 >Reporter: phoebe chen >Priority: Major > > Latest 3.3.4 version of hadoop-common and hadoop-client-runtime includes > commons-net in version 3.6, which has vulnerability CVE-2021-37533. Need to > upgrade it to 3.9 to fix.

[jira] [Commented] (HADOOP-18526) Leak of S3AInstrumentation instances via hadoop Metrics references

[ https://issues.apache.org/jira/browse/HADOOP-18526?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17644028#comment-17644028 ]

ASF GitHub Bot commented on HADOOP-18526:

-

hadoop-yetus commented on PR #5144:

URL: https://github.com/apache/hadoop/pull/5144#issuecomment-1339996002

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 41s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available.

|

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 3 new or modified test files. |

_ trunk Compile Tests _ |

| +0 :ok: | mvndep | 15m 21s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 25m 41s | | trunk passed |

| +1 :green_heart: | compile | 23m 12s | | trunk passed with JDK

Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04 |

| +1 :green_heart: | compile | 20m 34s | | trunk passed with JDK

Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |

| +1 :green_heart: | checkstyle | 3m 42s | | trunk passed |

| +1 :green_heart: | mvnsite | 2m 36s | | trunk passed |

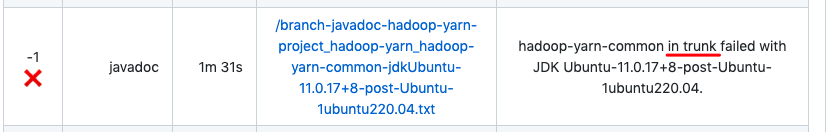

| -1 :x: | javadoc | 1m 14s |

[/branch-javadoc-hadoop-common-project_hadoop-common-jdkUbuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5144/6/artifact/out/branch-javadoc-hadoop-common-project_hadoop-common-jdkUbuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04.txt)

| hadoop-common in trunk failed with JDK

Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04. |

| +1 :green_heart: | javadoc | 1m 35s | | trunk passed with JDK

Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |

| +1 :green_heart: | spotbugs | 3m 57s | | trunk passed |

| +1 :green_heart: | shadedclient | 20m 51s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 28s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 1m 33s | | the patch passed |

| +1 :green_heart: | compile | 22m 20s | | the patch passed with JDK

Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04 |

| +1 :green_heart: | javac | 22m 20s | | the patch passed |

| +1 :green_heart: | compile | 20m 23s | | the patch passed with JDK

Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |

| +1 :green_heart: | javac | 20m 23s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| +1 :green_heart: | checkstyle | 3m 32s | | the patch passed |

| +1 :green_heart: | mvnsite | 2m 36s | | the patch passed |

| -1 :x: | javadoc | 1m 5s |

[/patch-javadoc-hadoop-common-project_hadoop-common-jdkUbuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5144/6/artifact/out/patch-javadoc-hadoop-common-project_hadoop-common-jdkUbuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04.txt)

| hadoop-common in the patch failed with JDK

Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04. |

| +1 :green_heart: | javadoc | 1m 35s | | the patch passed with JDK

Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |

| +1 :green_heart: | spotbugs | 4m 6s | | the patch passed |

| +1 :green_heart: | shadedclient | 21m 4s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| +1 :green_heart: | unit | 18m 18s | | hadoop-common in the patch

passed. |

| +1 :green_heart: | unit | 2m 53s | | hadoop-aws in the patch passed.

|

| +1 :green_heart: | asflicense | 0m 59s | | The patch does not

generate ASF License warnings. |

| | | 225m 42s | | |

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5144/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5144 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux 5c3da2b5ff42 4.15.0-200-generic #211-Ubuntu SMP Thu Nov 24 18:16:04 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 48974549308b1eb63cb832e3a04beef3f593018d |
| Default Java | Private

[GitHub] [hadoop] hadoop-yetus commented on pull request #5144: HADOOP-18526. Leak of S3AInstrumentation instances via hadoop Metrics references

hadoop-yetus commented on PR #5144:

URL: https://github.com/apache/hadoop/pull/5144#issuecomment-1339996002

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 41s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 3 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 15m 21s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 41s | | trunk passed |
| +1 :green_heart: | compile | 23m 12s | | trunk passed with JDK Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04 |
| +1 :green_heart: | compile | 20m 34s | | trunk passed with JDK Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |
| +1 :green_heart: | checkstyle | 3m 42s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 36s | | trunk passed |
| -1 :x: | javadoc | 1m 14s | [/branch-javadoc-hadoop-common-project_hadoop-common-jdkUbuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5144/6/artifact/out/branch-javadoc-hadoop-common-project_hadoop-common-jdkUbuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04.txt) | hadoop-common in trunk failed with JDK Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04. |
| +1 :green_heart: | javadoc | 1m 35s | | trunk passed with JDK Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |
| +1 :green_heart: | spotbugs | 3m 57s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 51s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 28s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 33s | | the patch passed |
| +1 :green_heart: | compile | 22m 20s | | the patch passed with JDK Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04 |
| +1 :green_heart: | javac | 22m 20s | | the patch passed |
| +1 :green_heart: | compile | 20m 23s | | the patch passed with JDK Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |
| +1 :green_heart: | javac | 20m 23s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 3m 32s | | the patch passed |
| +1 :green_heart: | mvnsite | 2m 36s | | the patch passed |
| -1 :x: | javadoc | 1m 5s | [/patch-javadoc-hadoop-common-project_hadoop-common-jdkUbuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5144/6/artifact/out/patch-javadoc-hadoop-common-project_hadoop-common-jdkUbuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04.txt) | hadoop-common in the patch failed with JDK Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04. |
| +1 :green_heart: | javadoc | 1m 35s | | the patch passed with JDK Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |
| +1 :green_heart: | spotbugs | 4m 6s | | the patch passed |
| +1 :green_heart: | shadedclient | 21m 4s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 18m 18s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 2m 53s | | hadoop-aws in the patch passed. |
| +1 :green_heart: | asflicense | 0m 59s | | The patch does not generate ASF License warnings. |
| | | 225m 42s | | |

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5144/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5144 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux 5c3da2b5ff42 4.15.0-200-generic #211-Ubuntu SMP Thu Nov 24 18:16:04 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 48974549308b1eb63cb832e3a04beef3f593018d |
| Default Java | Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.17+8-post-Ubuntu-1ubuntu220.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_352-8u352-ga-1~20.04-b08 |
| Test Results |

[jira] [Updated] (HADOOP-18561) CVE-2021-37533 on commons-net is included in hadoop common and hadoop-client-runtime

[ https://issues.apache.org/jira/browse/HADOOP-18561?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] phoebe chen updated HADOOP-18561: - Description: Latest 3.3.4 version of hadoop-common and hadoop-client-runtime includes commons-net in version 3.6, which has vulnerability CVE-2021-37533. Need to upgrade it to 3.9 to fix. (was: Latest 3.3.4 version of hadoop-common and hadoop-client-runtime includescommons-net in version 3.6, which has vulnerability CVE-2021-37533. Need to upgrade it to 3.9 to fix. ) > CVE-2021-37533 on commons-net is included in hadoop common and > hadoop-client-runtime > > > Key: HADOOP-18561 > URL: https://issues.apache.org/jira/browse/HADOOP-18561 > Project: Hadoop Common > Issue Type: Improvement >Reporter: phoebe chen >Priority: Major > > Latest 3.3.4 version of hadoop-common and hadoop-client-runtime includes > commons-net in version 3.6, which has vulnerability CVE-2021-37533. Need to > upgrade it to 3.9 to fix.

[jira] [Commented] (HADOOP-18329) Add support for IBM Semeru OE JRE 11.0.15.0 and greater

[ https://issues.apache.org/jira/browse/HADOOP-18329?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17644002#comment-17644002 ]

ASF GitHub Bot commented on HADOOP-18329:

-

JackBuggins commented on PR #4537:

URL: https://github.com/apache/hadoop/pull/4537#issuecomment-1339816599

This should be a bit more robust to extension as well as handle the concerns I had about the class loader from before. Is there any consensus/ruling around adding a test against the IBM JREs? I appreciate it would take a bit of time on CI and this is a once in a blue moon activity, but it could be a single suite of integration tests against auth that verifies the latest Semeru is not detected as IBM, and vice versa.

> Add support for IBM Semeru OE JRE 11.0.15.0 and greater
> ---
>
> Key: HADOOP-18329
> URL: https://issues.apache.org/jira/browse/HADOOP-18329
> Project: Hadoop Common
> Issue Type: Bug
> Components: auth, common
>Affects Versions: 3.0.0, 3.1.0, 3.0.1, 3.2.0, 3.0.2, 3.1.1, 3.0.3, 3.3.0, 3.1.2, 3.2.1, 3.1.3, 3.1.4, 3.2.2, 3.3.1, 3.2.3, 3.3.2, 3.3.3
> Environment: Running Hadoop (or Apache Spark 3.2.1 or above) on IBM Semeru runtimes open edition 11.0.15.0 or greater.
>Reporter: Jack
>Priority: Major
> Labels: pull-request-available
> Original Estimate: 1h
> Time Spent: 2.5h
> Remaining Estimate: 0h
>
> There are checks within the PlatformName class that use the Vendor property of the provided runtime JVM specifically looking for `IBM` within the name.
> Whilst this check worked for IBM's [java technology edition|https://www.ibm.com/docs/en/sdk-java-technology] it fails to work on [Semeru|https://developer.ibm.com/languages/java/semeru-runtimes/] since 11.0.15.0 due to the following change:
> h4. java.vendor system property
> In this release, the {{java.vendor}} system property has been changed from "International Business Machines Corporation" to "IBM Corporation".
> Modules such as the below are not provided in these runtimes.
> com.ibm.security.auth.module.JAASLoginModule
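Read together, the points above explain the failure. The old Semeru vendor string "International Business Machines Corporation" does not contain the substring `IBM`, but the new "IBM Corporation" does. A plain substring check therefore starts treating Semeru as IBM Java, and any code path that then relies on IBM-only modules such as `com.ibm.security.auth.module.JAASLoginModule` fails. The sketch below shows a stricter check. It assumes that requiring both the vendor match and the presence of that module is an acceptable signal. `VendorCheckSketch` and its methods are hypothetical names, and this is not the code in PR #4537.

```java
/**
 * Sketch under an assumption: treat the JVM as IBM Java only if java.vendor
 * contains "IBM" AND an IBM-only JAAS module is loadable. Names are hypothetical
 * and not taken from Hadoop's PlatformName.
 */
public class VendorCheckSketch {

    /** The naive style of check described in the issue: a substring match on java.vendor. */
    static boolean vendorSaysIbm(String vendor) {
        return vendor != null && vendor.contains("IBM");
    }

    /** True if the class can be found via the system class loader; it is not initialized. */
    static boolean classPresent(String className) {
        try {
            Class.forName(className, false, ClassLoader.getSystemClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    /** Stricter check: vendor string and an IBM-only module must both be present. */
    static boolean looksLikeIbmJava(String vendor) {
        return vendorSaysIbm(vendor)
            && classPresent("com.ibm.security.auth.module.JAASLoginModule");
    }

    public static void main(String[] args) {
        // Pre-11.0.15.0 Semeru vendor string: no "IBM" substring, so the naive check is false.
        System.out.println(vendorSaysIbm("International Business Machines Corporation")); // false
        // Semeru 11.0.15.0 and later: the naive check now returns true.
        System.out.println(vendorSaysIbm("IBM Corporation")); // true
        // The stricter check also needs the IBM JAAS module to be loadable on this JVM.
        System.out.println(looksLikeIbmJava(System.getProperty("java.vendor")));
    }
}
```

A check shaped like this could also back the kind of test suggested in the PR comment above, asserting that Semeru is not reported as IBM Java and that IBM Java still is.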


[GitHub] [hadoop] JackBuggins commented on pull request #4537: HADOOP-18329 - Support for IBM Semeru JVM v>11.0.15.0 Vendor Name Changes

JackBuggins commented on PR #4537: URL: https://github.com/apache/hadoop/pull/4537#issuecomment-1339816599 This should be a bit more robust to extension as well as handle the concerns I had about the class loader from before. Is there any consensus/ruling around adding a test against the IBM JREs? I appreciate it would take a bit of time on CI and this is a once in a blue moon activity, but it could be a single suite of integration tests against auth that verifies the latest Semeru is not detected as IBM, and vice versa. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hadoop] hadoop-yetus commented on pull request #5190: YARN-11390. TestResourceTrackerService.testNodeRemovalNormally ...

hadoop-yetus commented on PR #5190:

URL: https://github.com/apache/hadoop/pull/5190#issuecomment-1339803193

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 52s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 41m 35s | | trunk passed |
| +1 :green_heart: | compile | 1m 18s | | trunk passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | compile | 1m 8s | | trunk passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 4s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 14s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 9s | | trunk passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javadoc | 0m 54s | | trunk passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 20s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 24s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 58s | | the patch passed |
| +1 :green_heart: | compile | 1m 4s | | the patch passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javac | 1m 4s | | the patch passed |
| +1 :green_heart: | compile | 0m 57s | | the patch passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 57s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 46s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 1s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 47s | | the patch passed with JDK Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 |
| +1 :green_heart: | javadoc | 0m 42s | | the patch passed with JDK Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 7s | | the patch passed |
| +1 :green_heart: | shadedclient | 24m 55s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 102m 59s | | hadoop-yarn-server-resourcemanager in the patch passed. |
| +1 :green_heart: | asflicense | 0m 42s | | The patch does not generate ASF License warnings. |
| | | 213m 20s | | |

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5190/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5190 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux 1cd7b2ef8c62 4.15.0-191-generic #202-Ubuntu SMP Thu Aug 4 01:49:29 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 5fc63f99d382e36adb7d6622c5c1f6b21e24ea14 |
| Default Java | Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.16+8-post-Ubuntu-0ubuntu120.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_342-8u342-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5190/1/testReport/ |
| Max. process+thread count | 912 (vs. ulimit of 5500) |
| modules | C: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-resourcemanager U: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-resourcemanager |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5190/1/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0 https://yetus.apache.org |

This message was automatically generated.


[jira] [Created] (HADOOP-18561) CVE-2021-37533 on commons-net is included in hadoop common and hadoop-client-runtime

phoebe chen created HADOOP-18561: Summary: CVE-2021-37533 on commons-net is included in hadoop common and hadoop-client-runtime Key: HADOOP-18561 URL: https://issues.apache.org/jira/browse/HADOOP-18561 Project: Hadoop Common Issue Type: Improvement Reporter: phoebe chen Latest 3.3.4 version of hadoop-common and hadoop-client-runtime includescommons-net in version 3.6, which has vulnerability CVE-2021-37533. Need to upgrade it to 3.9 to fix.

[GitHub] [hadoop] hadoop-yetus commented on pull request #5191: Bump express from 4.17.1 to 4.18.2 in /hadoop-yarn-project/hadoop-yarn/hadoop-yarn-ui/src/main/webapp

hadoop-yetus commented on PR #5191:

URL: https://github.com/apache/hadoop/pull/5191#issuecomment-1339792843

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 53s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 39m 56s | | trunk passed |
| +1 :green_heart: | shadedclient | 59m 21s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 15s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | shadedclient | 19m 20s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | asflicense | 0m 39s | | The patch does not generate ASF License warnings. |
| | | 82m 23s | | |

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5191/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5191 |
| Optional Tests | dupname asflicense shadedclient codespell detsecrets |
| uname | Linux b1b30ed3feac 4.15.0-200-generic #211-Ubuntu SMP Thu Nov 24 18:16:04 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 8563ac7a008bb3ad1939692e84bed5d8ef065b84 |
| Max. process+thread count | 554 (vs. ulimit of 5500) |
| modules | C: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-ui U: hadoop-yarn-project/hadoop-yarn/hadoop-yarn-ui |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5191/1/console |
| versions | git=2.25.1 maven=3.6.3 |
| Powered by | Apache Yetus 0.14.0 https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] cnauroth commented on a diff in pull request #5190: YARN-11390. TestResourceTrackerService.testNodeRemovalNormally ...

cnauroth commented on code in PR #5190:

URL: https://github.com/apache/hadoop/pull/5190#discussion_r1041276776

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-resourcemanager/src/test/java/org/apache/hadoop/yarn/server/resourcemanager/TestResourceTrackerService.java:

##

@@ -2959,6 +2960,20 @@ protected ResourceTrackerService createResourceTrackerService() {

mockRM.stop();

}

+ private void pollingAssert(Supplier supplier, String message)

Review Comment:

In hadoop-common, there is a similar helper method: `org.apache.hadoop.test.GenericTestUtils#waitFor`. This also has some other nice features, like providing a thread dump for troubleshooting if it times out. Can you please look at reusing that method?
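The helper the review points to polls a condition until it holds or a timeout expires. The self-contained sketch below illustrates only that polling pattern. Its `waitFor(check, checkEveryMillis, waitForMillis)` parameter order is modeled on how `GenericTestUtils#waitFor` is commonly called in Hadoop tests, but it is not the Hadoop implementation: it does not produce the thread dump mentioned above, and `PollingSketch` is a hypothetical name. In a real Hadoop test you would call `GenericTestUtils.waitFor` instead of copying this. Check its exact signature in your tree first.

```java
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/**
 * Hypothetical poll-until-true helper illustrating the pattern behind
 * GenericTestUtils#waitFor. Not the Hadoop implementation.
 */
public class PollingSketch {

    /** Re-evaluates check every checkEveryMillis until it returns true or waitForMillis elapses. */
    static void waitFor(Supplier<Boolean> check, long checkEveryMillis, long waitForMillis)
            throws TimeoutException, InterruptedException {
        long deadline = System.nanoTime() + waitForMillis * 1_000_000L;
        while (true) {
            if (Boolean.TRUE.equals(check.get())) {
                return; // condition met
            }
            if (System.nanoTime() - deadline >= 0) {
                throw new TimeoutException("Condition not met within " + waitForMillis + " ms");
            }
            Thread.sleep(checkEveryMillis);
        }
    }

    public static void main(String[] args) throws Exception {
        AtomicInteger polls = new AtomicInteger();
        // Hypothetical condition that becomes true on the third poll.
        waitFor(() -> polls.incrementAndGet() >= 3, 10, 1_000);
        System.out.println("condition met after " + polls.get() + " polls"); // condition met after 3 polls
    }
}
```

Passing the condition as a `Supplier<Boolean>` keeps the assertion logic at the call site, much as the `pollingAssert(Supplier supplier, String message)` helper in the patch appears to do.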


[jira] [Commented] (HADOOP-18538) Upgrade kafka to 2.8.2

[ https://issues.apache.org/jira/browse/HADOOP-18538?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17643962#comment-17643962 ] Brahma Reddy Battula commented on HADOOP-18538: --- [~dmmkr] thanks for reporting. Committed to trunk. > Upgrade kafka to 2.8.2 > -- > > Key: HADOOP-18538 > URL: https://issues.apache.org/jira/browse/HADOOP-18538 > Project: Hadoop Common > Issue Type: Improvement > Components: build >Affects Versions: 3.4.0 >Reporter: D M Murali Krishna Reddy >Assignee: D M Murali Krishna Reddy >Priority: Major > Labels: pull-request-available > > Upgrade kafka to 2.8.2 to resolve > [CVE-2022-34917|https://nvd.nist.gov/vuln/detail/CVE-2022-34917]

[GitHub] [hadoop] dependabot[bot] opened a new pull request, #5191: Bump express from 4.17.1 to 4.18.2 in /hadoop-yarn-project/hadoop-yarn/hadoop-yarn-ui/src/main/webapp

dependabot[bot] opened a new pull request, #5191:
URL: https://github.com/apache/hadoop/pull/5191

Bumps [express](https://github.com/expressjs/express) from 4.17.1 to 4.18.2.

Release notes
Sourced from express's releases (https://github.com/expressjs/express/releases).

4.18.2
- Fix regression routing a large stack in a single route
- deps: body-parser@1.20.1
  - deps: qs@6.11.0
  - perf: remove unnecessary object clone
- deps: qs@6.11.0

4.18.1
- Fix hanging on large stack of sync routes

4.18.0
- Add root option to res.download
- Allow options without filename in res.download
- Deprecate string and non-integer arguments to res.status
- Fix behavior of null/undefined as maxAge in res.cookie
- Fix handling very large stacks of sync middleware
- Ignore Object.prototype values in settings through app.set/app.get
- Invoke default with same arguments as types in res.format
- Support proper 205 responses using res.send
- Use http-errors for res.format error
- deps: body-parser@1.20.0
  - Fix error message for json parse whitespace in strict
  - Fix internal error when inflated body exceeds limit
  - Prevent loss of async hooks context
  - Prevent hanging when request already read
  - deps: depd@2.0.0
  - deps: http-errors@2.0.0
  - deps: on-finished@2.4.1
  - deps: qs@6.10.3
  - deps: raw-body@2.5.1
- deps: cookie@0.5.0
  - Add priority option
  - Fix expires option to reject invalid dates
- deps: depd@2.0.0
  - Replace internal eval usage with Function constructor
  - Use instance methods on process to check for listeners
- deps: finalhandler@1.2.0
  - Remove set content headers that break response
  - deps: on-finished@2.4.1
  - deps: statuses@2.0.1
- deps: on-finished@2.4.1
  - Prevent loss of async hooks context
- deps: qs@6.10.3
- deps: send@0.18.0
  - Fix emitted 416 error missing headers property
  - Limit the headers removed for 304 response
  - deps: depd@2.0.0
  - deps: destroy@1.2.0
  - deps: http-errors@2.0.0
  - deps: on-finished@2.4.1
  ... (truncated)

Changelog
Sourced from express's changelog (https://github.com/expressjs/express/blob/master/History.md).

4.18.2 / 2022-10-08
- Fix regression routing a large stack in a single route
- deps: body-parser@1.20.1
  - deps: qs@6.11.0
  - perf: remove unnecessary object clone
- deps: qs@6.11.0

4.18.1 / 2022-04-29
- Fix hanging on large stack of sync routes

4.18.0 / 2022-04-25
- Add root option to res.download
- Allow options without filename in res.download
- Deprecate string and non-integer arguments to res.status
- Fix behavior of null/undefined as maxAge in res.cookie
- Fix handling very large stacks of sync middleware
- Ignore Object.prototype values in settings through app.set/app.get
- Invoke default with same arguments as types in res.format
- Support proper 205 responses using res.send
- Use http-errors for res.format error
- deps: body-parser@1.20.0
  - Fix error message for json parse whitespace in strict
  - Fix internal error when inflated body exceeds limit
  - Prevent loss of async hooks context
  - Prevent hanging when request already read
  - deps: depd@2.0.0
  - deps: http-errors@2.0.0
  - deps: on-finished@2.4.1
  - deps: qs@6.10.3
  - deps: raw-body@2.5.1
- deps: cookie@0.5.0
  - Add priority option
  - Fix expires option to reject invalid dates
- deps: depd@2.0.0
  - Replace internal eval usage with Function constructor
  - Use instance methods on process to check for listeners
- deps: finalhandler@1.2.0
  - Remove set content headers that break response
  - deps: on-finished@2.4.1
  - deps: statuses@2.0.1
- deps: on-finished@2.4.1
  - Prevent loss of async hooks context
- deps: qs@6.10.3
- deps: send@0.18.0
  ... (truncated)

Commits
- 8368dc1 4.18.2 (https://github.com/expressjs/express/commit/8368dc178af16b91b576c4c1d135f701a0007e5d)
- 61f4049 docs: replace Freenode with Libera Chat (https://github.com/expressjs/express/commit/61f40491222dbede653b9938e6a4676f187aab44)
- bb7907b build: Node.js@18.10 (https://github.com/expressjs/express/commit/bb7907b932afe3a19236a642f6054b6c8f7349a0)
- f56ce73 build: supertest@6.3.0 (https://github.com/expressjs/express/commit/f56ce73186e885a938bfdb3d3d1005a58e6ae12b)
- 24b3dc5 deps: qs@6.11.0 (https://github.com/expressjs/express/commit/24b3dc551670ac4fb0cd5a2bd5ef643c9525e60f)
- 689d175 deps: body-parser@1.20.1 (https://github.com/expressjs/express/commit/689d175b8b39d8860b81d723233fb83d15201827)
- 340be0f build: eslint@8.24.0 (https://github.com/expressjs/express/commit/340be0f79afb9b3176afb76235aa7f92acbd5050)
- 33e8dc3 docs: use Node.js name style (https://github.com/expressjs/express/commit/33e8dc303af9277f8a7e4f46abfdcb5e72f6797b)

[jira] [Commented] (HADOOP-18538) Upgrade kafka to 2.8.2

[ https://issues.apache.org/jira/browse/HADOOP-18538?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17643961#comment-17643961 ]

ASF GitHub Bot commented on HADOOP-18538:
-

brahmareddybattula merged PR #5164:
URL: https://github.com/apache/hadoop/pull/5164

> Upgrade kafka to 2.8.2
> --
>
> Key: HADOOP-18538
> URL: https://issues.apache.org/jira/browse/HADOOP-18538
> Project: Hadoop Common
> Issue Type: Improvement
> Components: build
> Affects Versions: 3.4.0
> Reporter: D M Murali Krishna Reddy
> Assignee: D M Murali Krishna Reddy
> Priority: Major
> Labels: pull-request-available
>
> Upgrade kafka to 2.8.2 to resolve
> [CVE-2022-34917|https://nvd.nist.gov/vuln/detail/CVE-2022-34917]

--
This message was sent by Atlassian Jira
(v8.20.10#820010)

-
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] brahmareddybattula merged pull request #5164: HADOOP-18538. Upgrade kafka to 2.8.2

brahmareddybattula merged PR #5164:
URL: https://github.com/apache/hadoop/pull/5164

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[jira] [Commented] (HADOOP-18538) Upgrade kafka to 2.8.2

[ https://issues.apache.org/jira/browse/HADOOP-18538?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17643959#comment-17643959 ]

ASF GitHub Bot commented on HADOOP-18538:
-

brahmareddybattula commented on PR #5164:
URL: https://github.com/apache/hadoop/pull/5164#issuecomment-1339675688

+1. Looks like the build failures are unrelated.

[GitHub] [hadoop] brahmareddybattula commented on pull request #5164: HADOOP-18538. Upgrade kafka to 2.8.2

brahmareddybattula commented on PR #5164:
URL: https://github.com/apache/hadoop/pull/5164#issuecomment-1339675688

+1. Looks like the build failures are unrelated.

[jira] [Updated] (HADOOP-18538) Upgrade kafka to 2.8.2

[ https://issues.apache.org/jira/browse/HADOOP-18538?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Brahma Reddy Battula updated HADOOP-18538:
--
Status: Patch Available (was: Open)

[GitHub] [hadoop] goiri commented on a diff in pull request #5131: YARN-11350. [Federation] Router Support DelegationToken With ZK.

goiri commented on code in PR #5131:

URL: https://github.com/apache/hadoop/pull/5131#discussion_r1041207295

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-common/src/test/java/org/apache/hadoop/yarn/server/federation/store/impl/TestSQLFederationStateStore.java:

##

@@ -592,4 +588,14 @@ public void testRemoveStoredToken() throws IOException,
YarnException {
public void testGetTokenByRouterStoreToken() throws IOException,
YarnException {
super.testGetTokenByRouterStoreToken();
}
+
+ @Override
+ protected void checkRouterMasterKey(DelegationKey delegationKey,
+ RouterMasterKey routerMasterKey) throws YarnException, IOException {

Review Comment:

Comment on why we do nothing

##

hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-common/src/test/java/org/apache/hadoop/yarn/server/federation/store/impl/TestSQLFederationStateStore.java:

##

@@ -18,21 +18,17 @@
package org.apache.hadoop.yarn.server.federation.store.impl;
import org.apache.commons.lang3.NotImplementedException;
+import org.apache.hadoop.security.token.delegation.DelegationKey;
import org.apache.hadoop.test.LambdaTestUtils;
import org.apache.hadoop.util.Time;
import org.apache.hadoop.yarn.api.records.ApplicationId;
import org.apache.hadoop.yarn.api.records.ReservationId;
import org.apache.hadoop.yarn.conf.YarnConfiguration;
import org.apache.hadoop.yarn.exceptions.YarnException;
+import org.apache.hadoop.yarn.security.client.RMDelegationTokenIdentifier;
import org.apache.hadoop.yarn.server.federation.store.FederationStateStore;
import
    org.apache.hadoop.yarn.server.federation.store.metrics.FederationStateStoreClientMetrics;
-import org.apache.hadoop.yarn.server.federation.store.records.SubClusterId;
-import org.apache.hadoop.yarn.server.federation.store.records.SubClusterInfo;
-import