[jira] [Work logged] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[ https://issues.apache.org/jira/browse/HDFS-16624?focusedWorklogId=779318=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779318 ] ASF GitHub Bot logged work on HDFS-16624: - Author: ASF GitHub Bot Created on: 08/Jun/22 04:30 Start Date: 08/Jun/22 04:30 Worklog Time Spent: 10m Work Description: virajjasani commented on PR #4412: URL: https://github.com/apache/hadoop/pull/4412#issuecomment-1149447908 Thank you for the nice fix @slfan1989 and for the review @tomscut !! Issue Time Tracking --- Worklog Id: (was: 779318) Time Spent: 2.5h (was: 2h 20m) > Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR > > > Key: HDFS-16624 > URL: https://issues.apache.org/jira/browse/HDFS-16624 > Project: Hadoop HDFS > Issue Type: Bug >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Attachments: testAllDatanodesReconfig.png > > Time Spent: 2.5h > Remaining Estimate: 0h > > HDFS-16619 found an error message during Junit unit testing, as follows: > expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but > was:<[ From: "false"]> > After code debugging, it was found that there was an error in the selection > outs.get(2) of the assertion(1208), index should be equal to 1. > Please refer to the attachment for debugging pictures > !testAllDatanodesReconfig.png! -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Comment Edited] (HDFS-16613) EC: Improve performance of decommissioning dn with many ec blocks

[

https://issues.apache.org/jira/browse/HDFS-16613?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17551381#comment-17551381

]

caozhiqiang edited comment on HDFS-16613 at 6/8/22 4:01 AM:

[~hadachi] , in my cluster,

dfs.namenode.replication.max-streams-hard-limit=512,

dfs.namenode.replication.work.multiplier.per.iteration=20.

The data process is below:

# Choose the blocks to be reconstructed from neededReconstruction. This

process use dfs.namenode.replication.work.multiplier.per.iteration to limit

process number.

# *Choose source datanode. This process use

dfs.namenode.replication.max-streams-hard-limit to limit process number.*

# Choose target datanode.

# Add task to datanode.

# The blocks to be replicated would put to pendingReconstruction. If blocks in

pendingReconstruction timeout, they will be put back to neededReconstruction

and continue process. *This process use

dfs.namenode.reconstruction.pending.timeout-sec to limit time interval.*

# *Send replication cmds to dn in heartbeat response. Use

dfs.namenode.decommission.max-streams to limit task number original.*

Firstly, the process 1 doesn't have performance bottleneck. And its process

interval is 3 seconds.

Performance bottleneck is in process 2, 5 and 6. So we should increase the

value of dfs.namenode.replication.max-streams-hard-limit and decrease the value

of dfs.namenode.reconstruction.pending.timeout-sec{*}.{*} With process 6, we

should change to use dfs.namenode.replication.max-streams-hard-limit to limit

the task number.

{code:java}

// DatanodeManager::handleHeartbeat

if (nodeinfo.isDecommissionInProgress()) {

maxTransfers = blockManager.getReplicationStreamsHardLimit()

- xmitsInProgress;

} else {

maxTransfers = blockManager.getMaxReplicationStreams()

- xmitsInProgress;

} {code}

*In other words, we should get blocks from pendingReconstruction to

neededReconstruction in shorter interval(process 5). And should seed more

replication tasks to datanode(process 2 and 6).*

The below graph with under_replicated_blocks and pending_replicated_blocks

metrics monitor in namenode, which can show the performance bottleneck. A lot

of blocks time out in pendingReconstruction and would be put back to

neededReconstruction repeatedly. The first graph is before optimization and the

second is after optimization.

Please help to check this process, thank you.

!image-2022-06-08-11-41-11-127.png|width=932,height=190!

!image-2022-06-08-11-38-29-664.png|width=931,height=175!

was (Author: caozhiqiang):

[~hadachi] , in my cluster,

dfs.namenode.replication.max-streams-hard-limit=512,

dfs.namenode.replication.work.multiplier.per.iteration=20.

The data process is below:

# Choose the blocks to be reconstructed from neededReconstruction. This

process use dfs.namenode.replication.work.multiplier.per.iteration to limit

process number.

# *Choose source datanode. This process use

dfs.namenode.replication.max-streams-hard-limit to limit process number.*

# Choose target datanode.

# Add task to datanode.

# The blocks to be replicated would put to pendingReconstruction. If blocks in

pendingReconstruction timeout, they will be put back to neededReconstruction

and continue process. *This process use

dfs.namenode.reconstruction.pending.timeout-sec to limit time interval.*

# *Send replication cmds to dn in heartbeat response. Use

dfs.namenode.decommission.max-streams to limit task number original.*

Firstly, the process 1 doesn't have performance bottleneck. And its process

interval is 3 seconds.

Performance bottleneck is in process 2, 5 and 6. So we should increase the

value of dfs.namenode.replication.max-streams-hard-limit and decrease the value

of dfs.namenode.reconstruction.pending.timeout-sec{*}.{*} With process 6, we

should change to use dfs.namenode.replication.max-streams-hard-limit to limit

the task number.

{code:java}

// DatanodeManager::handleHeartbeat

if (nodeinfo.isDecommissionInProgress()) {

maxTransfers = blockManager.getReplicationStreamsHardLimit()

- xmitsInProgress;

} else {

maxTransfers = blockManager.getMaxReplicationStreams()

- xmitsInProgress;

} {code}

*In other words, we should get blocks from pendingReconstruction to

neededReconstruction in shorter interval(process 5). And seed more replication

tasks to datanode(process 2 and 6).*

The below graph with under_replicated_blocks and pending_replicated_blocks

metrics monitor in namenode, which can show the performance bottleneck. A lot

of blocks time out in pendingReconstruction and would be put back to

neededReconstruction repeatedly. The first graph is before optimization and the

second is after optimization.

Please help to check this process, thank you.

!image-2022-06-08-11-41-11-127.png|width=932,height=190!

[jira] [Comment Edited] (HDFS-16613) EC: Improve performance of decommissioning dn with many ec blocks

[

https://issues.apache.org/jira/browse/HDFS-16613?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17551381#comment-17551381

]

caozhiqiang edited comment on HDFS-16613 at 6/8/22 3:58 AM:

[~hadachi] , in my cluster,

dfs.namenode.replication.max-streams-hard-limit=512,

dfs.namenode.replication.work.multiplier.per.iteration=20.

The data process is below:

# Choose the blocks to be reconstructed from neededReconstruction. This

process use dfs.namenode.replication.work.multiplier.per.iteration to limit

process number.

# *Choose source datanode. This process use

dfs.namenode.replication.max-streams-hard-limit to limit process number.*

# Choose target datanode.

# Add task to datanode.

# The blocks to be replicated would put to pendingReconstruction. If blocks in

pendingReconstruction timeout, they will be put back to neededReconstruction

and continue process. *This process use

dfs.namenode.reconstruction.pending.timeout-sec to limit time interval.*

# *Send replication cmds to dn in heartbeat response. Use

dfs.namenode.decommission.max-streams to limit task number original.*

Firstly, the process 1 doesn't have performance bottleneck. And its process

interval is 3 seconds.

Performance bottleneck is in process 2, 5 and 6. So we should increase the

value of dfs.namenode.replication.max-streams-hard-limit and decrease the value

of dfs.namenode.reconstruction.pending.timeout-sec{*}.{*} With process 6, we

should change to use dfs.namenode.replication.max-streams-hard-limit to limit

the task number.

{code:java}

// DatanodeManager::handleHeartbeat

if (nodeinfo.isDecommissionInProgress()) {

maxTransfers = blockManager.getReplicationStreamsHardLimit()

- xmitsInProgress;

} else {

maxTransfers = blockManager.getMaxReplicationStreams()

- xmitsInProgress;

} {code}

*In other words, we should get blocks from pendingReconstruction to

neededReconstruction in shorter interval(process 5). And seed more replication

tasks to datanode(process 2 and 6).*

The below graph with under_replicated_blocks and pending_replicated_blocks

metrics monitor in namenode, which can show the performance bottleneck. A lot

of blocks time out in pendingReconstruction and would be put back to

neededReconstruction repeatedly. The first graph is before optimization and the

second is after optimization.

Please help to check this process, thank you.

!image-2022-06-08-11-41-11-127.png|width=932,height=190!

!image-2022-06-08-11-38-29-664.png|width=931,height=175!

was (Author: caozhiqiang):

[~hadachi] , in my cluster,

dfs.namenode.replication.max-streams-hard-limit=512,

dfs.namenode.replication.work.multiplier.per.iteration=20.

The data process is below:

# Choose the blocks to be reconstructed from neededReconstruction. This

process use dfs.namenode.replication.work.multiplier.per.iteration to limit

process number.

# *Choose source datanode. This process use

dfs.namenode.replication.max-streams-hard-limit to limit process number.*

# Choose target datanode.

# Add task to datanode.

# The blocks to be replicated would put to pendingReconstruction. If blocks in

pendingReconstruction timeout, they will be put back to neededReconstruction

and continue process. *This process use

dfs.namenode.reconstruction.pending.timeout-sec to limit time interval.*

# *Send cmd to dn in heartbeat response. Use

dfs.namenode.decommission.max-streams to limit task number original.*

Firstly, the process 1 doesn't have performance bottleneck. And its process

interval is 3 seconds.

Performance bottleneck is in process 2, 5 and 6. So we should increase the

value of dfs.namenode.replication.max-streams-hard-limit and decrease the value

of dfs.namenode.reconstruction.pending.timeout-sec{*}.{*} With process 6, we

should use dfs.namenode.replication.max-streams-hard-limit to limit the task

number.

That mean we should take blocks from pendingReconstruction to

neededReconstruction in shorten interval(process 5). And seed more replication

tasks to datanode(process 2 and 6).

{code:java}

// DatanodeManager::handleHeartbeat

if (nodeinfo.isDecommissionInProgress()) {

maxTransfers = blockManager.getReplicationStreamsHardLimit()

- xmitsInProgress;

} else {

maxTransfers = blockManager.getMaxReplicationStreams()

- xmitsInProgress;

} {code}

The below graph with under replicated blocks and pending replicated blocks

metrics monitor, which can show the performance bottleneck. A lot of blocks

time out in pendingReconstruction and were put back to neededReconstruction

repeatedly. The first graph is before optimization and the second is after

optimization.

Please help to check this process, thank you.

!image-2022-06-08-11-41-11-127.png|width=932,height=190!

!image-2022-06-08-11-38-29-664.png|width=931,height=175!

> EC: Improve

[jira] [Comment Edited] (HDFS-16613) EC: Improve performance of decommissioning dn with many ec blocks

[

https://issues.apache.org/jira/browse/HDFS-16613?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17551381#comment-17551381

]

caozhiqiang edited comment on HDFS-16613 at 6/8/22 3:52 AM:

[~hadachi] , in my cluster,

dfs.namenode.replication.max-streams-hard-limit=512,

dfs.namenode.replication.work.multiplier.per.iteration=20.

The data process is below:

# Choose the blocks to be reconstructed from neededReconstruction. This

process use dfs.namenode.replication.work.multiplier.per.iteration to limit

process number.

# *Choose source datanode. This process use

dfs.namenode.replication.max-streams-hard-limit to limit process number.*

# Choose target datanode.

# Add task to datanode.

# The blocks to be replicated would put to pendingReconstruction. If blocks in

pendingReconstruction timeout, they will be put back to neededReconstruction

and continue process. *This process use

dfs.namenode.reconstruction.pending.timeout-sec to limit time interval.*

# *Send cmd to dn in heartbeat response. Use

dfs.namenode.decommission.max-streams to limit task number original.*

Firstly, the process 1 doesn't have performance bottleneck. And its process

interval is 3 seconds.

Performance bottleneck is in process 2, 5 and 6. So we should increase the

value of dfs.namenode.replication.max-streams-hard-limit and decrease the value

of dfs.namenode.reconstruction.pending.timeout-sec{*}.{*} With process 6, we

should use dfs.namenode.replication.max-streams-hard-limit to limit the task

number.

That mean we should take blocks from pendingReconstruction to

neededReconstruction in shorten interval(process 5). And seed more replication

tasks to datanode(process 2 and 6).

{code:java}

// DatanodeManager::handleHeartbeat

if (nodeinfo.isDecommissionInProgress()) {

maxTransfers = blockManager.getReplicationStreamsHardLimit()

- xmitsInProgress;

} else {

maxTransfers = blockManager.getMaxReplicationStreams()

- xmitsInProgress;

} {code}

The below graph with under replicated blocks and pending replicated blocks

metrics monitor, which can show the performance bottleneck. A lot of blocks

time out in pendingReconstruction and were put back to neededReconstruction

repeatedly. The first graph is before optimization and the second is after

optimization.

Please help to check this process, thank you.

!image-2022-06-08-11-41-11-127.png|width=932,height=190!

!image-2022-06-08-11-38-29-664.png|width=931,height=175!

was (Author: caozhiqiang):

[~hadachi] , in my cluster,

dfs.namenode.replication.max-streams-hard-limit=512,

dfs.namenode.replication.work.multiplier.per.iteration=20.

The data process is below:

# Choose the blocks to be reconstructed from neededReconstruction. This

process use dfs.namenode.replication.work.multiplier.per.iteration to limit

process number.

# *Choose source datanode. This process use

dfs.namenode.replication.max-streams-hard-limit to limit process number.*

# Choose target datanode.

# Add task to datanode.

# The blocks to be replicated would put to pendingReconstruction. If blocks in

pendingReconstruction timeout, they will be put back to neededReconstruction

and continue process. *This process use

dfs.namenode.reconstruction.pending.timeout-sec to limit time interval.*

# *Send cmd to dn in heartbeat response. Use

dfs.namenode.decommission.max-streams to limit task number original.*

Firstly, the process 1 doesn't have performance bottleneck.

Performance bottleneck is in process 2, 5 and 6. So we should increase the

value of dfs.namenode.replication.max-streams-hard-limit and decrease the value

of dfs.namenode.reconstruction.pending.timeout-sec{*}.{*} With process 6, we

should use dfs.namenode.replication.max-streams-hard-limit to limit the task

number.

{code:java}

// DatanodeManager::handleHeartbeat

if (nodeinfo.isDecommissionInProgress()) {

maxTransfers = blockManager.getReplicationStreamsHardLimit()

- xmitsInProgress;

} else {

maxTransfers = blockManager.getMaxReplicationStreams()

- xmitsInProgress;

} {code}

The below graph with under replicated blocks and pending replicated blocks

metrics monitor, which can show the performance bottleneck. A lot of blocks

time out in pendingReconstruction and were put back to neededReconstruction

repeatedly. The first graph is before optimization and the second is after

optimization.

Please help to check this process, thank you.

!image-2022-06-08-11-41-11-127.png|width=932,height=190!

!image-2022-06-08-11-38-29-664.png|width=931,height=175!

> EC: Improve performance of decommissioning dn with many ec blocks

> -

>

> Key: HDFS-16613

> URL: https://issues.apache.org/jira/browse/HDFS-16613

> Project: Hadoop HDFS

>

[jira] [Commented] (HDFS-16613) EC: Improve performance of decommissioning dn with many ec blocks

[

https://issues.apache.org/jira/browse/HDFS-16613?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17551381#comment-17551381

]

caozhiqiang commented on HDFS-16613:

[~hadachi] , in my cluster,

dfs.namenode.replication.max-streams-hard-limit=512,

dfs.namenode.replication.work.multiplier.per.iteration=20.

The data process is below:

# Choose the blocks to be reconstructed from neededReconstruction. This

process use dfs.namenode.replication.work.multiplier.per.iteration to limit

process number.

# *Choose source datanode. This process use

dfs.namenode.replication.max-streams-hard-limit to limit process number.*

# Choose target datanode.

# Add task to datanode.

# The blocks to be replicated would put to pendingReconstruction. If blocks in

pendingReconstruction timeout, they will be put back to neededReconstruction

and continue process. *This process use

dfs.namenode.reconstruction.pending.timeout-sec to limit time interval.*

# *Send cmd to dn in heartbeat response. Use

dfs.namenode.decommission.max-streams to limit task number original.*

Firstly, the process 1 doesn't have performance bottleneck.

Performance bottleneck is in process 2, 5 and 6. So we should increase the

value of dfs.namenode.replication.max-streams-hard-limit and decrease the value

of dfs.namenode.reconstruction.pending.timeout-sec{*}.{*} With process 6, we

should use dfs.namenode.replication.max-streams-hard-limit to limit the task

number.

{code:java}

// DatanodeManager::handleHeartbeat

if (nodeinfo.isDecommissionInProgress()) {

maxTransfers = blockManager.getReplicationStreamsHardLimit()

- xmitsInProgress;

} else {

maxTransfers = blockManager.getMaxReplicationStreams()

- xmitsInProgress;

} {code}

The below graph with under replicated blocks and pending replicated blocks

metrics monitor, which can show the performance bottleneck. A lot of blocks

time out in pendingReconstruction and were put back to neededReconstruction

repeatedly. The first graph is before optimization and the second is after

optimization.

Please help to check this process, thank you.

!image-2022-06-08-11-41-11-127.png|width=932,height=190!

!image-2022-06-08-11-38-29-664.png|width=931,height=175!

> EC: Improve performance of decommissioning dn with many ec blocks

> -

>

> Key: HDFS-16613

> URL: https://issues.apache.org/jira/browse/HDFS-16613

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: ec, erasure-coding, namenode

>Affects Versions: 3.4.0

>Reporter: caozhiqiang

>Assignee: caozhiqiang

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2022-06-07-11-46-42-389.png,

> image-2022-06-07-17-42-16-075.png, image-2022-06-07-17-45-45-316.png,

> image-2022-06-07-17-51-04-876.png, image-2022-06-07-17-55-40-203.png,

> image-2022-06-08-11-38-29-664.png, image-2022-06-08-11-41-11-127.png

>

> Time Spent: 0.5h

> Remaining Estimate: 0h

>

> In a hdfs cluster with a lot of EC blocks, decommission a dn is very slow.

> The reason is unlike replication blocks can be replicated from any dn which

> has the same block replication, the ec block have to be replicated from the

> decommissioning dn.

> The configurations dfs.namenode.replication.max-streams and

> dfs.namenode.replication.max-streams-hard-limit will limit the replication

> speed, but increase these configurations will create risk to the whole

> cluster's network. So it should add a new configuration to limit the

> decommissioning dn, distinguished from the cluster wide max-streams limit.

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-16613) EC: Improve performance of decommissioning dn with many ec blocks

[ https://issues.apache.org/jira/browse/HDFS-16613?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] caozhiqiang updated HDFS-16613: --- Attachment: image-2022-06-08-11-41-11-127.png > EC: Improve performance of decommissioning dn with many ec blocks > - > > Key: HDFS-16613 > URL: https://issues.apache.org/jira/browse/HDFS-16613 > Project: Hadoop HDFS > Issue Type: Improvement > Components: ec, erasure-coding, namenode >Affects Versions: 3.4.0 >Reporter: caozhiqiang >Assignee: caozhiqiang >Priority: Major > Labels: pull-request-available > Attachments: image-2022-06-07-11-46-42-389.png, > image-2022-06-07-17-42-16-075.png, image-2022-06-07-17-45-45-316.png, > image-2022-06-07-17-51-04-876.png, image-2022-06-07-17-55-40-203.png, > image-2022-06-08-11-38-29-664.png, image-2022-06-08-11-41-11-127.png > > Time Spent: 0.5h > Remaining Estimate: 0h > > In a hdfs cluster with a lot of EC blocks, decommission a dn is very slow. > The reason is unlike replication blocks can be replicated from any dn which > has the same block replication, the ec block have to be replicated from the > decommissioning dn. > The configurations dfs.namenode.replication.max-streams and > dfs.namenode.replication.max-streams-hard-limit will limit the replication > speed, but increase these configurations will create risk to the whole > cluster's network. So it should add a new configuration to limit the > decommissioning dn, distinguished from the cluster wide max-streams limit. -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-16613) EC: Improve performance of decommissioning dn with many ec blocks

[ https://issues.apache.org/jira/browse/HDFS-16613?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] caozhiqiang updated HDFS-16613: --- Attachment: image-2022-06-08-11-38-29-664.png > EC: Improve performance of decommissioning dn with many ec blocks > - > > Key: HDFS-16613 > URL: https://issues.apache.org/jira/browse/HDFS-16613 > Project: Hadoop HDFS > Issue Type: Improvement > Components: ec, erasure-coding, namenode >Affects Versions: 3.4.0 >Reporter: caozhiqiang >Assignee: caozhiqiang >Priority: Major > Labels: pull-request-available > Attachments: image-2022-06-07-11-46-42-389.png, > image-2022-06-07-17-42-16-075.png, image-2022-06-07-17-45-45-316.png, > image-2022-06-07-17-51-04-876.png, image-2022-06-07-17-55-40-203.png, > image-2022-06-08-11-38-29-664.png > > Time Spent: 0.5h > Remaining Estimate: 0h > > In a hdfs cluster with a lot of EC blocks, decommission a dn is very slow. > The reason is unlike replication blocks can be replicated from any dn which > has the same block replication, the ec block have to be replicated from the > decommissioning dn. > The configurations dfs.namenode.replication.max-streams and > dfs.namenode.replication.max-streams-hard-limit will limit the replication > speed, but increase these configurations will create risk to the whole > cluster's network. So it should add a new configuration to limit the > decommissioning dn, distinguished from the cluster wide max-streams limit. -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16557) BootstrapStandby failed because of checking gap for inprogress EditLogInputStream

[

https://issues.apache.org/jira/browse/HDFS-16557?focusedWorklogId=779311=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779311

]

ASF GitHub Bot logged work on HDFS-16557:

-

Author: ASF GitHub Bot

Created on: 08/Jun/22 03:34

Start Date: 08/Jun/22 03:34

Worklog Time Spent: 10m

Work Description: tomscut commented on PR #4219:

URL: https://github.com/apache/hadoop/pull/4219#issuecomment-1149418728

Hi @xkrogen , if you have enough bandwidth, please take a look. Thank you.

Issue Time Tracking

---

Worklog Id: (was: 779311)

Time Spent: 2h 50m (was: 2h 40m)

> BootstrapStandby failed because of checking gap for inprogress

> EditLogInputStream

> -

>

> Key: HDFS-16557

> URL: https://issues.apache.org/jira/browse/HDFS-16557

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: Tao Li

>Assignee: Tao Li

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2022-04-22-17-17-14-577.png,

> image-2022-04-22-17-17-14-618.png, image-2022-04-22-17-17-23-113.png,

> image-2022-04-22-17-17-32-487.png

>

> Time Spent: 2h 50m

> Remaining Estimate: 0h

>

> The lastTxId of an inprogress EditLogInputStream lastTxId isn't necessarily

> HdfsServerConstants.INVALID_TXID. We can determine its status directly by

> EditLogInputStream#isInProgress.

> We introduced [SBN READ], and set

> {color:#ff}{{dfs.ha.tail-edits.in-progress=true}}{color}. Then

> bootstrapStandby, the EditLogInputStream of inProgress is misjudged,

> resulting in a gap check failure, which causes bootstrapStandby to fail.

> hdfs namenode -bootstrapStandby

> !image-2022-04-22-17-17-32-487.png|width=766,height=161!

> !image-2022-04-22-17-17-14-577.png|width=598,height=187!

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[ https://issues.apache.org/jira/browse/HDFS-16624?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17551360#comment-17551360 ] fanshilun commented on HDFS-16624: -- Thanks for the suggestion, I have linked jira. > Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR > > > Key: HDFS-16624 > URL: https://issues.apache.org/jira/browse/HDFS-16624 > Project: Hadoop HDFS > Issue Type: Bug >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Attachments: testAllDatanodesReconfig.png > > Time Spent: 2h 20m > Remaining Estimate: 0h > > HDFS-16619 found an error message during Junit unit testing, as follows: > expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but > was:<[ From: "false"]> > After code debugging, it was found that there was an error in the selection > outs.get(2) of the assertion(1208), index should be equal to 1. > Please refer to the attachment for debugging pictures > !testAllDatanodesReconfig.png! -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work started] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[ https://issues.apache.org/jira/browse/HDFS-16624?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Work on HDFS-16624 started by fanshilun. > Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR > > > Key: HDFS-16624 > URL: https://issues.apache.org/jira/browse/HDFS-16624 > Project: Hadoop HDFS > Issue Type: Bug >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Attachments: testAllDatanodesReconfig.png > > Time Spent: 2h 20m > Remaining Estimate: 0h > > HDFS-16619 found an error message during Junit unit testing, as follows: > expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but > was:<[ From: "false"]> > After code debugging, it was found that there was an error in the selection > outs.get(2) of the assertion(1208), index should be equal to 1. > Please refer to the attachment for debugging pictures > !testAllDatanodesReconfig.png! -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work started] (HDFS-16605) Improve Code With Lambda in hadoop-hdfs-rbf moudle

[ https://issues.apache.org/jira/browse/HDFS-16605?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Work on HDFS-16605 started by fanshilun. > Improve Code With Lambda in hadoop-hdfs-rbf moudle > -- > > Key: HDFS-16605 > URL: https://issues.apache.org/jira/browse/HDFS-16605 > Project: Hadoop HDFS > Issue Type: Improvement > Components: rbf >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Minor > Labels: pull-request-available > Time Spent: 1.5h > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work started] (HDFS-16590) Fix Junit Test Deprecated assertThat

[ https://issues.apache.org/jira/browse/HDFS-16590?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Work on HDFS-16590 started by fanshilun. > Fix Junit Test Deprecated assertThat > > > Key: HDFS-16590 > URL: https://issues.apache.org/jira/browse/HDFS-16590 > Project: Hadoop HDFS > Issue Type: Improvement >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Time Spent: 2h 10m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work started] (HDFS-16619) impove HttpHeaders.Values And HttpHeaders.Names With recommended Class

[ https://issues.apache.org/jira/browse/HDFS-16619?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Work on HDFS-16619 started by fanshilun. > impove HttpHeaders.Values And HttpHeaders.Names With recommended Class > -- > > Key: HDFS-16619 > URL: https://issues.apache.org/jira/browse/HDFS-16619 > Project: Hadoop HDFS > Issue Type: Improvement >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 40m > Remaining Estimate: 0h > > HttpHeaders.Values and HttpHeaders.Names are deprecated, use > HttpHeaderValues and HttpHeaderNames instead. -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-16621) Remove unused JNStorage#getCurrentDir()

[ https://issues.apache.org/jira/browse/HDFS-16621?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] JiangHua Zhu updated HDFS-16621: Description: There is no use of getCurrentDir() anywhere in JNStorage, we should remove it. (was: In JNStorage, sd.getCurrentDir() is used in 5~6 places, It can be replaced with JNStorage#getCurrentDir(), which will be more concise.) > Remove unused JNStorage#getCurrentDir() > --- > > Key: HDFS-16621 > URL: https://issues.apache.org/jira/browse/HDFS-16621 > Project: Hadoop HDFS > Issue Type: Improvement > Components: journal-node, qjm >Affects Versions: 3.3.0 >Reporter: JiangHua Zhu >Assignee: JiangHua Zhu >Priority: Minor > Labels: pull-request-available > Time Spent: 1h > Remaining Estimate: 0h > > There is no use of getCurrentDir() anywhere in JNStorage, we should remove it. -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-16621) Remove unused JNStorage#getCurrentDir()

[ https://issues.apache.org/jira/browse/HDFS-16621?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] JiangHua Zhu updated HDFS-16621: Summary: Remove unused JNStorage#getCurrentDir() (was: Replace sd.getCurrentDir() with JNStorage#getCurrentDir()) > Remove unused JNStorage#getCurrentDir() > --- > > Key: HDFS-16621 > URL: https://issues.apache.org/jira/browse/HDFS-16621 > Project: Hadoop HDFS > Issue Type: Improvement > Components: journal-node, qjm >Affects Versions: 3.3.0 >Reporter: JiangHua Zhu >Assignee: JiangHua Zhu >Priority: Minor > Labels: pull-request-available > Time Spent: 1h > Remaining Estimate: 0h > > In JNStorage, sd.getCurrentDir() is used in 5~6 places, > It can be replaced with JNStorage#getCurrentDir(), which will be more concise. -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16621) Replace sd.getCurrentDir() with JNStorage#getCurrentDir()

[ https://issues.apache.org/jira/browse/HDFS-16621?focusedWorklogId=779307=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779307 ] ASF GitHub Bot logged work on HDFS-16621: - Author: ASF GitHub Bot Created on: 08/Jun/22 02:05 Start Date: 08/Jun/22 02:05 Worklog Time Spent: 10m Work Description: jianghuazhu commented on PR #4404: URL: https://github.com/apache/hadoop/pull/4404#issuecomment-1149370199 Thanks @ayushtkn for the comment and reply. I initially thought that the previous author created JNStorage#getCurrentDir() to replace sd.getCurrentDir(), so I made this suggestion. I will remove JNStorage#getCurrentDir() and resubmit. Issue Time Tracking --- Worklog Id: (was: 779307) Time Spent: 1h (was: 50m) > Replace sd.getCurrentDir() with JNStorage#getCurrentDir() > - > > Key: HDFS-16621 > URL: https://issues.apache.org/jira/browse/HDFS-16621 > Project: Hadoop HDFS > Issue Type: Improvement > Components: journal-node, qjm >Affects Versions: 3.3.0 >Reporter: JiangHua Zhu >Assignee: JiangHua Zhu >Priority: Minor > Labels: pull-request-available > Time Spent: 1h > Remaining Estimate: 0h > > In JNStorage, sd.getCurrentDir() is used in 5~6 places, > It can be replaced with JNStorage#getCurrentDir(), which will be more concise. -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-16563) Namenode WebUI prints sensitive information on Token Expiry

[ https://issues.apache.org/jira/browse/HDFS-16563?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17551345#comment-17551345 ] fanshilun commented on HDFS-16563: -- [~ste...@apache.org] Sorry, I saw this pr was merged in the git log, I thought this was forgotten to close, so I closed it. > Namenode WebUI prints sensitive information on Token Expiry > --- > > Key: HDFS-16563 > URL: https://issues.apache.org/jira/browse/HDFS-16563 > Project: Hadoop HDFS > Issue Type: Bug > Components: namanode, security, webhdfs >Affects Versions: 3.3.3 >Reporter: Renukaprasad C >Assignee: Renukaprasad C >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0, 3.3.4 > > Attachments: image-2022-04-27-23-01-16-033.png, > image-2022-04-27-23-28-40-568.png > > Time Spent: 3h > Remaining Estimate: 0h > > Login to Namenode WebUI. > Wait for token to expire. (Or modify the Token refresh time > dfs.namenode.delegation.token.renew/update-interval to lower value) > Refresh the WebUI after the Token expiry. > Full token information gets printed in WebUI. > > !image-2022-04-27-23-01-16-033.png! -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16625) Unit tests aren't checking for PMDK availability

[

https://issues.apache.org/jira/browse/HDFS-16625?focusedWorklogId=779284=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779284

]

ASF GitHub Bot logged work on HDFS-16625:

-

Author: ASF GitHub Bot

Created on: 08/Jun/22 00:15

Start Date: 08/Jun/22 00:15

Worklog Time Spent: 10m

Work Description: ashutoshcipher commented on PR #4414:

URL: https://github.com/apache/hadoop/pull/4414#issuecomment-1149301940

LGTM

Issue Time Tracking

---

Worklog Id: (was: 779284)

Time Spent: 20m (was: 10m)

> Unit tests aren't checking for PMDK availability

>

>

> Key: HDFS-16625

> URL: https://issues.apache.org/jira/browse/HDFS-16625

> Project: Hadoop HDFS

> Issue Type: Test

> Components: test

>Affects Versions: 3.4.0, 3.3.4

>Reporter: Steve Vaughan

>Priority: Blocker

> Labels: pull-request-available

> Time Spent: 20m

> Remaining Estimate: 0h

>

> There are unit tests that require native PMDK libraries which aren't checking

> if the library is available, resulting in unsuccessful test. Adding the

> following in the test setup addresses the problem.

> {code:java}

> assumeTrue ("Requires PMDK", NativeIO.POSIX.isPmdkAvailable()); {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[ https://issues.apache.org/jira/browse/HDFS-16624?focusedWorklogId=779280=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779280 ] ASF GitHub Bot logged work on HDFS-16624: - Author: ASF GitHub Bot Created on: 08/Jun/22 00:09 Start Date: 08/Jun/22 00:09 Worklog Time Spent: 10m Work Description: slfan1989 commented on PR #4412: URL: https://github.com/apache/hadoop/pull/4412#issuecomment-1149298084 > Good find btw @slfan1989 The code you contributed is very useful, I have learned a lot from you, thank you again! Issue Time Tracking --- Worklog Id: (was: 779280) Time Spent: 2h 20m (was: 2h 10m) > Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR > > > Key: HDFS-16624 > URL: https://issues.apache.org/jira/browse/HDFS-16624 > Project: Hadoop HDFS > Issue Type: Bug >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Attachments: testAllDatanodesReconfig.png > > Time Spent: 2h 20m > Remaining Estimate: 0h > > HDFS-16619 found an error message during Junit unit testing, as follows: > expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but > was:<[ From: "false"]> > After code debugging, it was found that there was an error in the selection > outs.get(2) of the assertion(1208), index should be equal to 1. > Please refer to the attachment for debugging pictures > !testAllDatanodesReconfig.png! -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[ https://issues.apache.org/jira/browse/HDFS-16624?focusedWorklogId=779279=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779279 ] ASF GitHub Bot logged work on HDFS-16624: - Author: ASF GitHub Bot Created on: 08/Jun/22 00:08 Start Date: 08/Jun/22 00:08 Worklog Time Spent: 10m Work Description: slfan1989 commented on PR #4412: URL: https://github.com/apache/hadoop/pull/4412#issuecomment-1149297182 @virajjasani @tomscut please help me review the code again. Issue Time Tracking --- Worklog Id: (was: 779279) Time Spent: 2h 10m (was: 2h) > Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR > > > Key: HDFS-16624 > URL: https://issues.apache.org/jira/browse/HDFS-16624 > Project: Hadoop HDFS > Issue Type: Bug >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Attachments: testAllDatanodesReconfig.png > > Time Spent: 2h 10m > Remaining Estimate: 0h > > HDFS-16619 found an error message during Junit unit testing, as follows: > expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but > was:<[ From: "false"]> > After code debugging, it was found that there was an error in the selection > outs.get(2) of the assertion(1208), index should be equal to 1. > Please refer to the attachment for debugging pictures > !testAllDatanodesReconfig.png! -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16605) Improve Code With Lambda in hadoop-hdfs-rbf moudle

[ https://issues.apache.org/jira/browse/HDFS-16605?focusedWorklogId=779277=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779277 ] ASF GitHub Bot logged work on HDFS-16605: - Author: ASF GitHub Bot Created on: 07/Jun/22 23:48 Start Date: 07/Jun/22 23:48 Worklog Time Spent: 10m Work Description: slfan1989 commented on PR #4375: URL: https://github.com/apache/hadoop/pull/4375#issuecomment-1149281613 @ayushtkn Please help me review the code. Issue Time Tracking --- Worklog Id: (was: 779277) Time Spent: 1.5h (was: 1h 20m) > Improve Code With Lambda in hadoop-hdfs-rbf moudle > -- > > Key: HDFS-16605 > URL: https://issues.apache.org/jira/browse/HDFS-16605 > Project: Hadoop HDFS > Issue Type: Improvement > Components: rbf >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Minor > Labels: pull-request-available > Time Spent: 1.5h > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[

https://issues.apache.org/jira/browse/HDFS-16624?focusedWorklogId=779274=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779274

]

ASF GitHub Bot logged work on HDFS-16624:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 23:18

Start Date: 07/Jun/22 23:18

Worklog Time Spent: 10m

Work Description: slfan1989 commented on code in PR #4412:

URL: https://github.com/apache/hadoop/pull/4412#discussion_r891781997

##

hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/tools/TestDFSAdmin.java:

##

@@ -1205,9 +1205,9 @@ public void testAllDatanodesReconfig()

LOG.info("dfsadmin -status -livenodes output:");

outs.forEach(s -> LOG.info("{}", s));

assertTrue(outs.get(0).startsWith("Reconfiguring status for node"));

-assertEquals("SUCCESS: Changed property dfs.datanode.peer.stats.enabled",

outs.get(2));

-assertEquals("\tFrom: \"false\"", outs.get(3));

-assertEquals("\tTo: \"true\"", outs.get(4));

+assertEquals("SUCCESS: Changed property dfs.datanode.peer.stats.enabled",

outs.get(1));

+assertEquals("\tFrom: \"false\"", outs.get(2));

+assertEquals("\tTo: \"true\"", outs.get(3));

Review Comment:

I will modify the code.

Issue Time Tracking

---

Worklog Id: (was: 779274)

Time Spent: 2h (was: 1h 50m)

> Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

>

>

> Key: HDFS-16624

> URL: https://issues.apache.org/jira/browse/HDFS-16624

> Project: Hadoop HDFS

> Issue Type: Bug

>Affects Versions: 3.4.0

>Reporter: fanshilun

>Assignee: fanshilun

>Priority: Major

> Labels: pull-request-available

> Attachments: testAllDatanodesReconfig.png

>

> Time Spent: 2h

> Remaining Estimate: 0h

>

> HDFS-16619 found an error message during Junit unit testing, as follows:

> expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but

> was:<[ From: "false"]>

> After code debugging, it was found that there was an error in the selection

> outs.get(2) of the assertion(1208), index should be equal to 1.

> Please refer to the attachment for debugging pictures

> !testAllDatanodesReconfig.png!

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[

https://issues.apache.org/jira/browse/HDFS-16624?focusedWorklogId=779273=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779273

]

ASF GitHub Bot logged work on HDFS-16624:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 23:16

Start Date: 07/Jun/22 23:16

Worklog Time Spent: 10m

Work Description: slfan1989 commented on code in PR #4412:

URL: https://github.com/apache/hadoop/pull/4412#discussion_r891780928

##

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/tools/DFSAdmin.java:

##

@@ -2068,51 +2068,51 @@ int getReconfigurationStatus(final String nodeType,

final String address, final

errMsg = String.format("Node [%s] reloading configuration: %s.", address,

e.toString());

}

-

-if (errMsg != null) {

- err.println(errMsg);

- return 1;

-} else {

- out.print(outMsg);

-}

-

-if (status != null) {

- if (!status.hasTask()) {

-out.println("no task was found.");

-return 0;

- }

- out.print("started at " + new Date(status.getStartTime()));

- if (!status.stopped()) {

-out.println(" and is still running.");

-return 0;

+synchronized (this) {

Review Comment:

thanks for your suggestion, I will modify the junit test.

Issue Time Tracking

---

Worklog Id: (was: 779273)

Time Spent: 1h 50m (was: 1h 40m)

> Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

>

>

> Key: HDFS-16624

> URL: https://issues.apache.org/jira/browse/HDFS-16624

> Project: Hadoop HDFS

> Issue Type: Bug

>Affects Versions: 3.4.0

>Reporter: fanshilun

>Assignee: fanshilun

>Priority: Major

> Labels: pull-request-available

> Attachments: testAllDatanodesReconfig.png

>

> Time Spent: 1h 50m

> Remaining Estimate: 0h

>

> HDFS-16619 found an error message during Junit unit testing, as follows:

> expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but

> was:<[ From: "false"]>

> After code debugging, it was found that there was an error in the selection

> outs.get(2) of the assertion(1208), index should be equal to 1.

> Please refer to the attachment for debugging pictures

> !testAllDatanodesReconfig.png!

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[ https://issues.apache.org/jira/browse/HDFS-16624?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17551305#comment-17551305 ] Ayush Saxena commented on HDFS-16624: - Can you link the Jira which broke this, add the root cause and loop in the folks involved in that Jira. > Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR > > > Key: HDFS-16624 > URL: https://issues.apache.org/jira/browse/HDFS-16624 > Project: Hadoop HDFS > Issue Type: Bug >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Attachments: testAllDatanodesReconfig.png > > Time Spent: 1h 40m > Remaining Estimate: 0h > > HDFS-16619 found an error message during Junit unit testing, as follows: > expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but > was:<[ From: "false"]> > After code debugging, it was found that there was an error in the selection > outs.get(2) of the assertion(1208), index should be equal to 1. > Please refer to the attachment for debugging pictures > !testAllDatanodesReconfig.png! -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[ https://issues.apache.org/jira/browse/HDFS-16624?focusedWorklogId=779266=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779266 ] ASF GitHub Bot logged work on HDFS-16624: - Author: ASF GitHub Bot Created on: 07/Jun/22 22:05 Start Date: 07/Jun/22 22:05 Worklog Time Spent: 10m Work Description: virajjasani commented on PR #4412: URL: https://github.com/apache/hadoop/pull/4412#issuecomment-1149219846 Good find btw @slfan1989 Issue Time Tracking --- Worklog Id: (was: 779266) Time Spent: 1h 40m (was: 1.5h) > Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR > > > Key: HDFS-16624 > URL: https://issues.apache.org/jira/browse/HDFS-16624 > Project: Hadoop HDFS > Issue Type: Bug >Affects Versions: 3.4.0 >Reporter: fanshilun >Assignee: fanshilun >Priority: Major > Labels: pull-request-available > Attachments: testAllDatanodesReconfig.png > > Time Spent: 1h 40m > Remaining Estimate: 0h > > HDFS-16619 found an error message during Junit unit testing, as follows: > expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but > was:<[ From: "false"]> > After code debugging, it was found that there was an error in the selection > outs.get(2) of the assertion(1208), index should be equal to 1. > Please refer to the attachment for debugging pictures > !testAllDatanodesReconfig.png! -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16624) Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

[

https://issues.apache.org/jira/browse/HDFS-16624?focusedWorklogId=779265=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779265

]

ASF GitHub Bot logged work on HDFS-16624:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 22:04

Start Date: 07/Jun/22 22:04

Worklog Time Spent: 10m

Work Description: virajjasani commented on code in PR #4412:

URL: https://github.com/apache/hadoop/pull/4412#discussion_r891747569

##

hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/tools/TestDFSAdmin.java:

##

@@ -1205,9 +1205,9 @@ public void testAllDatanodesReconfig()

LOG.info("dfsadmin -status -livenodes output:");

outs.forEach(s -> LOG.info("{}", s));

assertTrue(outs.get(0).startsWith("Reconfiguring status for node"));

-assertEquals("SUCCESS: Changed property dfs.datanode.peer.stats.enabled",

outs.get(2));

-assertEquals("\tFrom: \"false\"", outs.get(3));

-assertEquals("\tTo: \"true\"", outs.get(4));

+assertEquals("SUCCESS: Changed property dfs.datanode.peer.stats.enabled",

outs.get(1));

+assertEquals("\tFrom: \"false\"", outs.get(2));

+assertEquals("\tTo: \"true\"", outs.get(3));

Review Comment:

Given that concurrency is at play here, we can do something like this:

```

assertTrue("SUCCESS: Changed property

dfs.datanode.peer.stats.enabled".equals(outs.get(2))

|| "SUCCESS: Changed property

dfs.datanode.peer.stats.enabled".equals(outs.get(1)));

```

##

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/tools/DFSAdmin.java:

##

@@ -2068,51 +2068,51 @@ int getReconfigurationStatus(final String nodeType,

final String address, final

errMsg = String.format("Node [%s] reloading configuration: %s.", address,

e.toString());

}

-

-if (errMsg != null) {

- err.println(errMsg);

- return 1;

-} else {

- out.print(outMsg);

-}

-

-if (status != null) {

- if (!status.hasTask()) {

-out.println("no task was found.");

-return 0;

- }

- out.print("started at " + new Date(status.getStartTime()));

- if (!status.stopped()) {

-out.println(" and is still running.");

-return 0;

+synchronized (this) {

Review Comment:

@slfan1989 This is good for concurrency control but we should avoid this for

performance issues. Rather, we can update test.

Issue Time Tracking

---

Worklog Id: (was: 779265)

Time Spent: 1.5h (was: 1h 20m)

> Fix org.apache.hadoop.hdfs.tools.TestDFSAdmin#testAllDatanodesReconfig ERROR

>

>

> Key: HDFS-16624

> URL: https://issues.apache.org/jira/browse/HDFS-16624

> Project: Hadoop HDFS

> Issue Type: Bug

>Affects Versions: 3.4.0

>Reporter: fanshilun

>Assignee: fanshilun

>Priority: Major

> Labels: pull-request-available

> Attachments: testAllDatanodesReconfig.png

>

> Time Spent: 1.5h

> Remaining Estimate: 0h

>

> HDFS-16619 found an error message during Junit unit testing, as follows:

> expected:<[SUCCESS: Changed property dfs.datanode.peer.stats.enabled]> but

> was:<[ From: "false"]>

> After code debugging, it was found that there was an error in the selection

> outs.get(2) of the assertion(1208), index should be equal to 1.

> Please refer to the attachment for debugging pictures

> !testAllDatanodesReconfig.png!

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16623) IllegalArgumentException in LifelineSender

[

https://issues.apache.org/jira/browse/HDFS-16623?focusedWorklogId=779260=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779260

]

ASF GitHub Bot logged work on HDFS-16623:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 20:58

Start Date: 07/Jun/22 20:58

Worklog Time Spent: 10m

Work Description: cnauroth commented on PR #4409:

URL: https://github.com/apache/hadoop/pull/4409#issuecomment-1149161121

@ZanderXu , yes, I was thinking of just testing that `getLifelineWaitTime()`

only returns non-negative numbers. There is a similar kind of test in

`TestBpServiceActorScheduler#testScheduleLifeline`, but it doesn't yet cover

the case that would lead to a negative value.

I think testing for LifelineSender thread exit would be more complete, but

also a lot more complex. Testing directly against the `getLifelineWaitTime()`

return values is a good compromise.

Thanks!

Issue Time Tracking

---

Worklog Id: (was: 779260)

Time Spent: 40m (was: 0.5h)

> IllegalArgumentException in LifelineSender

> --

>

> Key: HDFS-16623

> URL: https://issues.apache.org/jira/browse/HDFS-16623

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: ZanderXu

>Assignee: ZanderXu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 40m

> Remaining Estimate: 0h

>

> In our production environment, an IllegalArgumentException occurred in the

> LifelineSender at one DataNode which was undergoing GC at that time.

> And the bug code is at line 1060 in BPServiceActor.java, because the sleep

> time is negative.

> {code:java}

> while (shouldRun()) {

> try {

> if (lifelineNamenode == null) {

> lifelineNamenode = dn.connectToLifelineNN(lifelineNnAddr);

> }

> sendLifelineIfDue();

> Thread.sleep(scheduler.getLifelineWaitTime());

> } catch (InterruptedException e) {

> Thread.currentThread().interrupt();

> } catch (IOException e) {

> LOG.warn("IOException in LifelineSender for " + BPServiceActor.this,

> e);

> }

> }

> {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

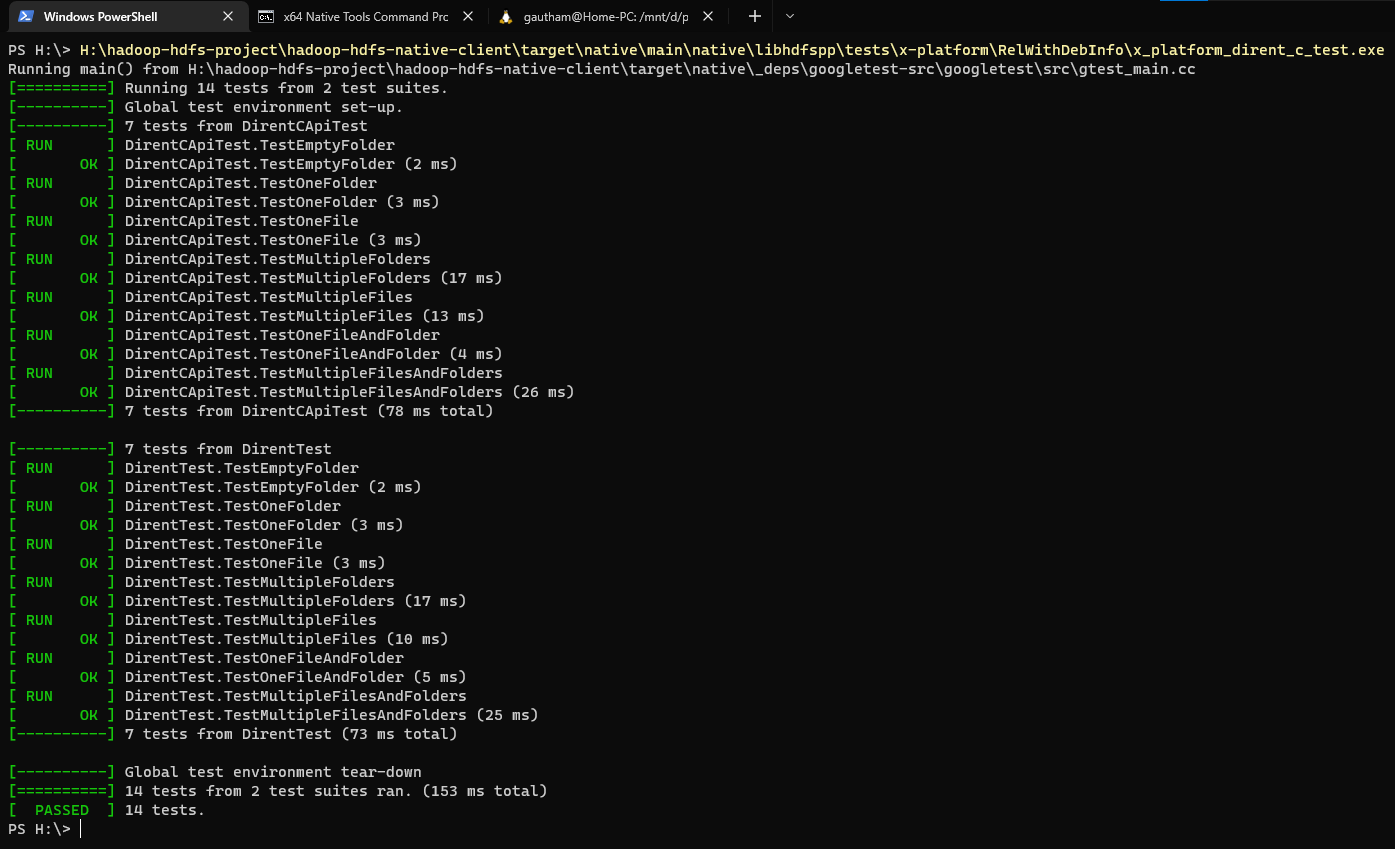

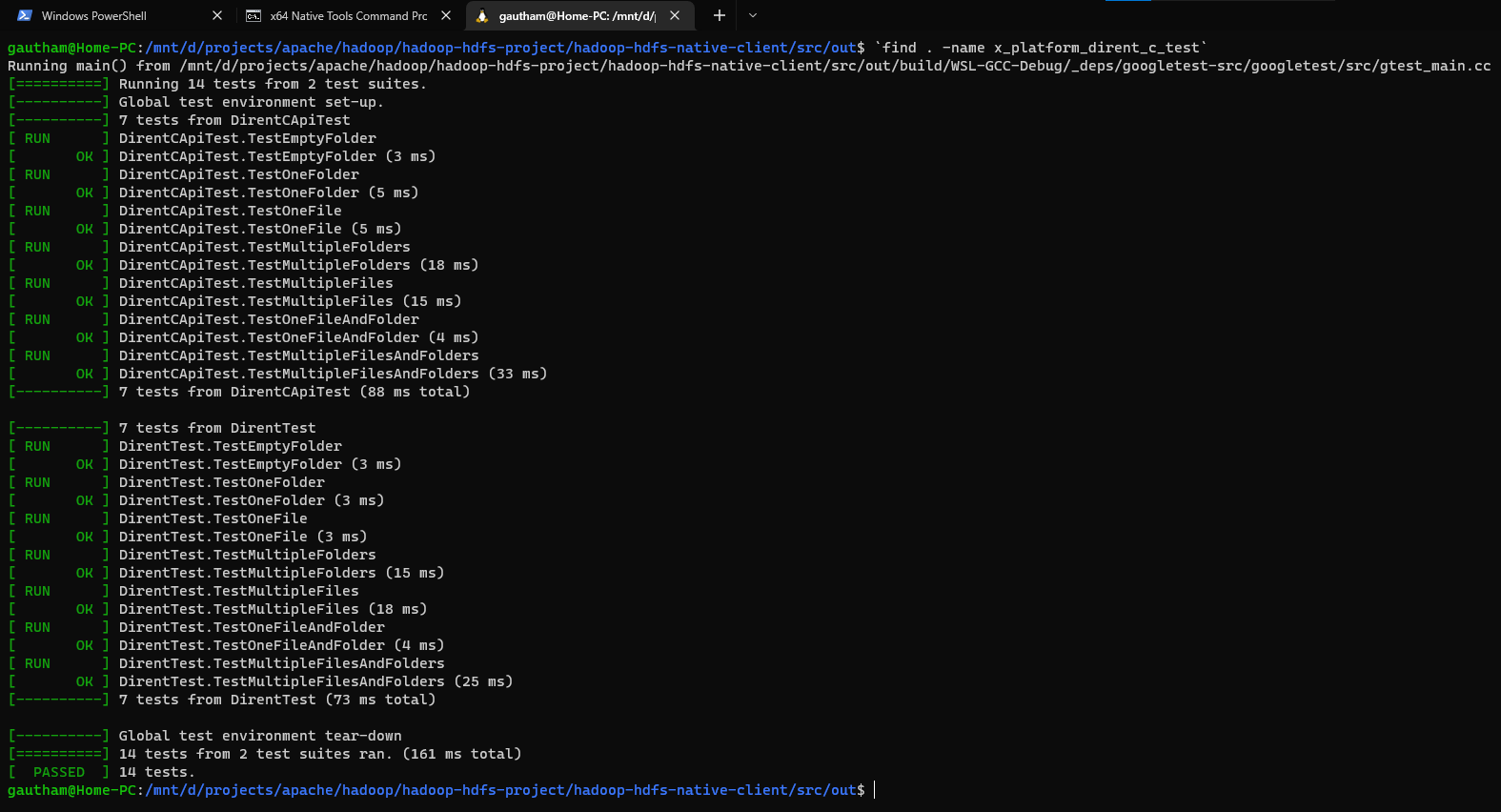

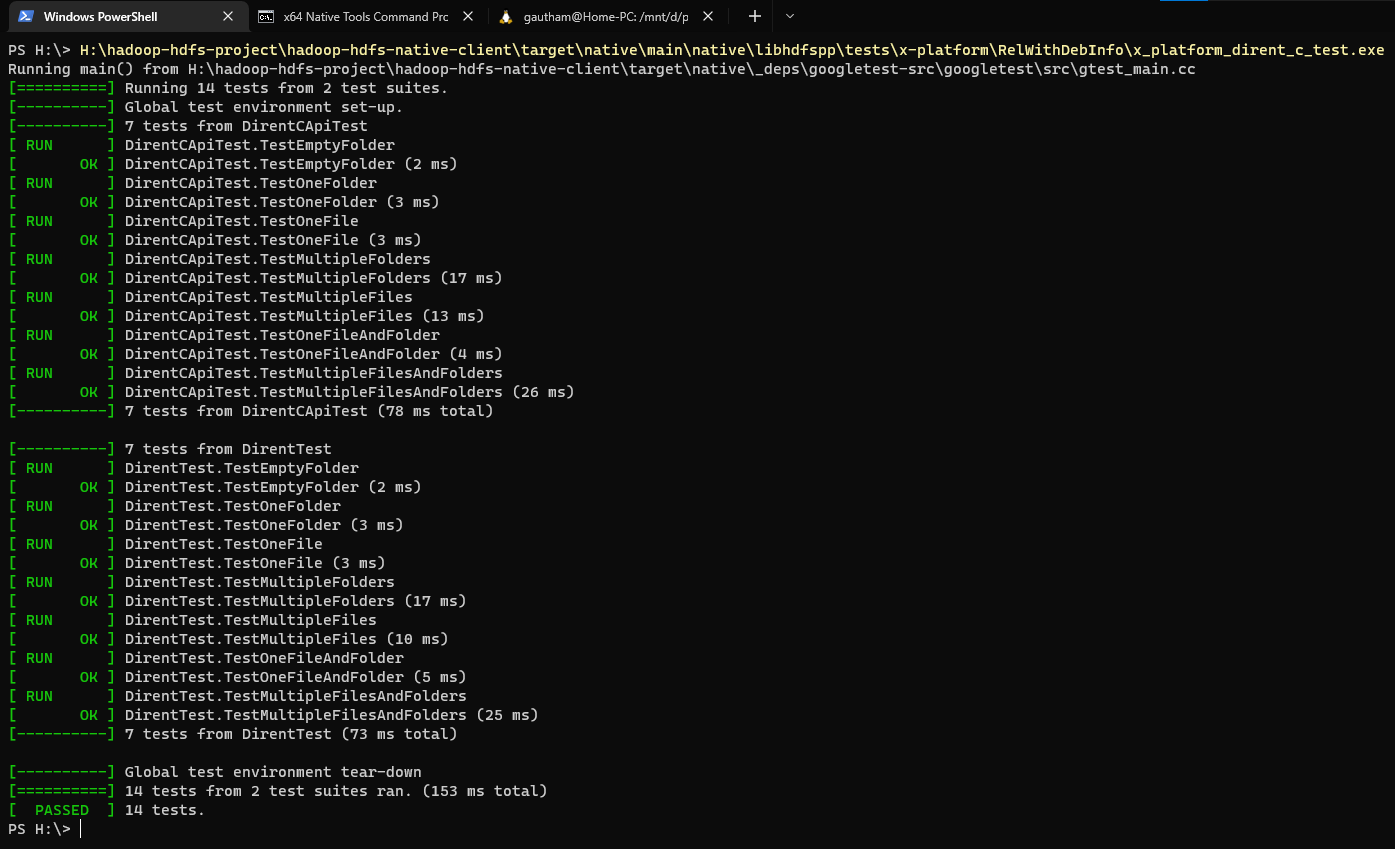

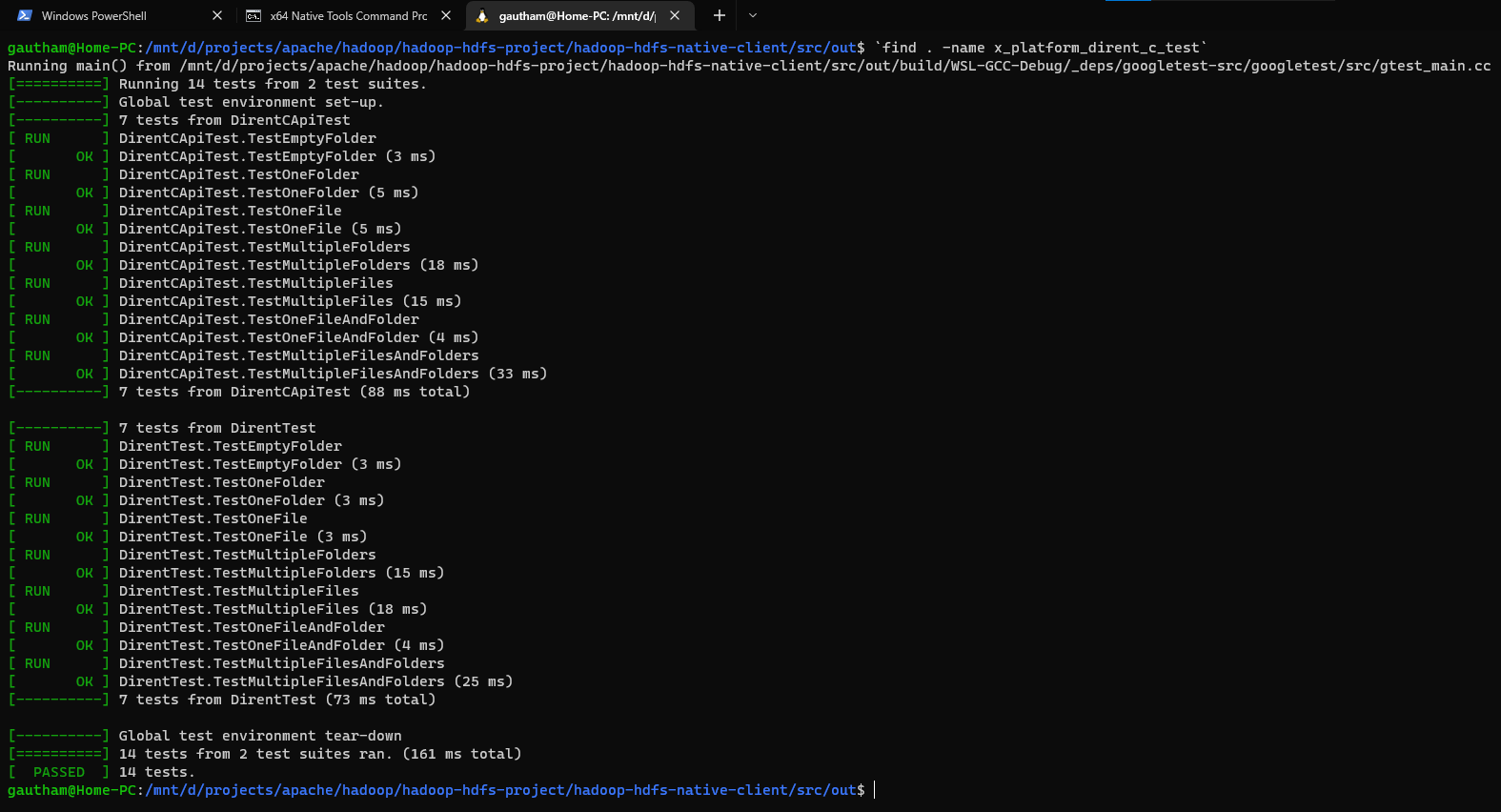

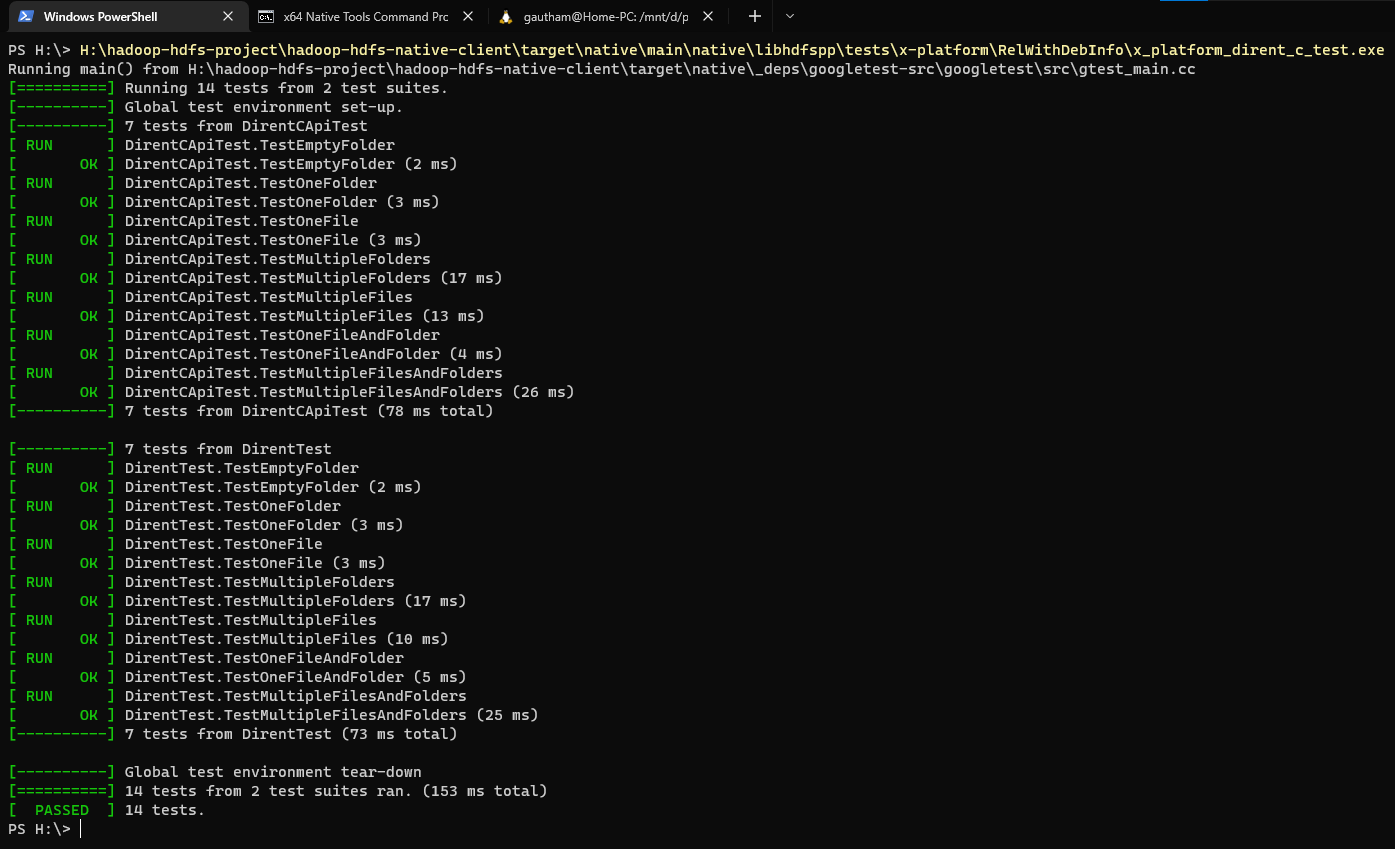

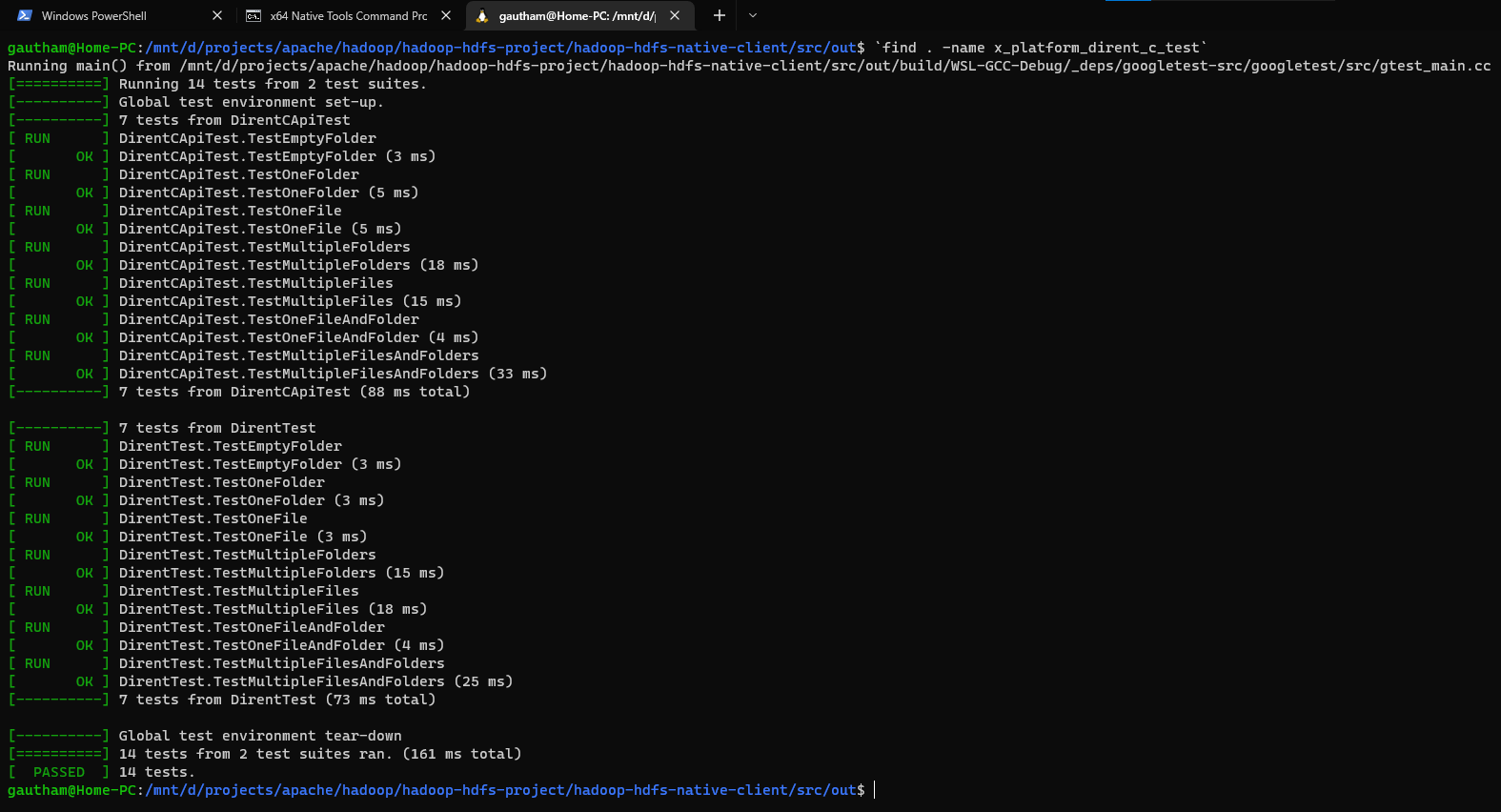

[jira] [Work logged] (HDFS-16463) Make dirent cross platform compatible

[

https://issues.apache.org/jira/browse/HDFS-16463?focusedWorklogId=779185=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779185

]

ASF GitHub Bot logged work on HDFS-16463:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 16:39

Start Date: 07/Jun/22 16:39

Worklog Time Spent: 10m

Work Description: goiri commented on code in PR #4370:

URL: https://github.com/apache/hadoop/pull/4370#discussion_r891470689

##

hadoop-hdfs-project/hadoop-hdfs-native-client/src/main/native/libhdfspp/lib/x-platform/c-api/dirent.h:

##

@@ -0,0 +1,92 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#ifndef NATIVE_LIBHDFSPP_LIB_CROSS_PLATFORM_C_API_DIRENT_H

+#define NATIVE_LIBHDFSPP_LIB_CROSS_PLATFORM_C_API_DIRENT_H

+

+#if !(defined(WIN32) || defined(USE_X_PLATFORM_DIRENT))

+/*

+ * For non-Windows environments, we use the dirent.h header itself.

+ */

+#include

+#else

+/*

+ * If it's a Windows environment or if the macro USE_X_PLATFORM_DIRENT is

+ * defined, we switch to using dirent from the XPlatform library.

+ */

+

+/*

+ * We will use extern "C" only on Windows.

+ */

+#if defined(WIN32) && defined(__cplusplus)

+extern "C" {

+#endif

+

+/**

Review Comment:

Line 40 to 84 could be in its own .h file.

Then you can have another .h which does the adding of the extern.

Finally, you would have this one that includes accordingly.

The issue I try to avoid is having nested preprocessor ifs which are not

easy to read.

Issue Time Tracking

---

Worklog Id: (was: 779185)

Time Spent: 5h 10m (was: 5h)

> Make dirent cross platform compatible

> -

>

> Key: HDFS-16463

> URL: https://issues.apache.org/jira/browse/HDFS-16463

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: libhdfs++

>Affects Versions: 3.4.0

> Environment: Windows 10

>Reporter: Gautham Banasandra

>Assignee: Gautham Banasandra

>Priority: Major

> Labels: libhdfscpp, pull-request-available

> Time Spent: 5h 10m

> Remaining Estimate: 0h

>

> [jnihelper.c|https://github.com/apache/hadoop/blob/1fed18bb2d8ac3dbaecc3feddded30bed918d556/hadoop-hdfs-project/hadoop-hdfs-native-client/src/main/native/libhdfs/jni_helper.c#L28]

> in HDFS native client uses *dirent.h*. This header file isn't available on

> Windows. Thus, we need to replace this with a cross platform compatible

> implementation for dirent.

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-16625) Unit tests aren't checking for PMDK availability

[

https://issues.apache.org/jira/browse/HDFS-16625?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HDFS-16625:

--

Labels: pull-request-available (was: )

> Unit tests aren't checking for PMDK availability

>

>

> Key: HDFS-16625

> URL: https://issues.apache.org/jira/browse/HDFS-16625

> Project: Hadoop HDFS

> Issue Type: Test

> Components: test

>Affects Versions: 3.4.0, 3.3.4

>Reporter: Steve Vaughan

>Priority: Blocker

> Labels: pull-request-available

> Time Spent: 10m

> Remaining Estimate: 0h

>

> There are unit tests that require native PMDK libraries which aren't checking

> if the library is available, resulting in unsuccessful test. Adding the

> following in the test setup addresses the problem.

> {code:java}

> assumeTrue ("Requires PMDK", NativeIO.POSIX.isPmdkAvailable()); {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16625) Unit tests aren't checking for PMDK availability

[

https://issues.apache.org/jira/browse/HDFS-16625?focusedWorklogId=779145=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779145

]

ASF GitHub Bot logged work on HDFS-16625:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 15:11

Start Date: 07/Jun/22 15:11

Worklog Time Spent: 10m

Work Description: snmvaughan opened a new pull request, #4414:

URL: https://github.com/apache/hadoop/pull/4414

### Description of PR

There are unit tests that require native PMDK libraries which aren't

checking if the library is available, resulting in unsuccessful test. This

patch checks the assumption about PMDK availability. The same changes have

been applied and tested against trunk (3.4.0-SNAPSHOT), branch-3.3

(3.3.4-SNAPSHOT), and branch-3.3.3.

### How was this patch tested?

This patch has been applied to a local build that runs in the Hadoop

development environment, which doesn't include the PMDK shared libraries.

### For code changes:

- [X] Does the title or this PR starts with the corresponding JIRA issue id

(e.g. 'HADOOP-17799. Your PR title ...')?

- [ ] Object storage: have the integration tests been executed and the

endpoint declared according to the connector-specific documentation?

- [ ] If adding new dependencies to the code, are these dependencies

licensed in a way that is compatible for inclusion under [ASF

2.0](http://www.apache.org/legal/resolved.html#category-a)?

- [ ] If applicable, have you updated the `LICENSE`, `LICENSE-binary`,

`NOTICE-binary` files?

Issue Time Tracking

---

Worklog Id: (was: 779145)

Remaining Estimate: 0h

Time Spent: 10m

> Unit tests aren't checking for PMDK availability

>

>

> Key: HDFS-16625

> URL: https://issues.apache.org/jira/browse/HDFS-16625

> Project: Hadoop HDFS

> Issue Type: Test

> Components: test

>Affects Versions: 3.4.0, 3.3.4

>Reporter: Steve Vaughan

>Priority: Blocker

> Time Spent: 10m

> Remaining Estimate: 0h

>

> There are unit tests that require native PMDK libraries which aren't checking

> if the library is available, resulting in unsuccessful test. Adding the

> following in the test setup addresses the problem.

> {code:java}

> assumeTrue ("Requires PMDK", NativeIO.POSIX.isPmdkAvailable()); {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16596) Improve the processing capability of FsDatasetAsyncDiskService

[ https://issues.apache.org/jira/browse/HDFS-16596?focusedWorklogId=779141=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779141 ] ASF GitHub Bot logged work on HDFS-16596: - Author: ASF GitHub Bot Created on: 07/Jun/22 15:00 Start Date: 07/Jun/22 15:00 Worklog Time Spent: 10m Work Description: ZanderXu commented on PR #4360: URL: https://github.com/apache/hadoop/pull/4360#issuecomment-1148793254 Thanks @saintstack for you suggestion. I have updated the patch according your suggestion, and help me review it again, thanks~ Issue Time Tracking --- Worklog Id: (was: 779141) Time Spent: 50m (was: 40m) > Improve the processing capability of FsDatasetAsyncDiskService > -- > > Key: HDFS-16596 > URL: https://issues.apache.org/jira/browse/HDFS-16596 > Project: Hadoop HDFS > Issue Type: Improvement >Reporter: ZanderXu >Assignee: ZanderXu >Priority: Major > Labels: pull-request-available > Time Spent: 50m > Remaining Estimate: 0h > > In our production environment, when DN needs to delete a large number blocks, > we find that many deletion tasks are backlogged in the queue of > threadPoolExecutor in FsDatasetAsyncDiskService. We can't improve its > throughput because the number of core threads is hard coded. > So DN needs to support the number of core threads of > FsDatasetAsyncDiskService can be configured. -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16600) Deadlock on DataNode

[

https://issues.apache.org/jira/browse/HDFS-16600?focusedWorklogId=779134=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779134

]

ASF GitHub Bot logged work on HDFS-16600:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 14:39

Start Date: 07/Jun/22 14:39

Worklog Time Spent: 10m

Work Description: ZanderXu commented on PR #4367:

URL: https://github.com/apache/hadoop/pull/4367#issuecomment-1148766297

> Another suggestion, can you write the junit test?

you can see the UT

org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.testSynchronousEviction.

Issue Time Tracking

---

Worklog Id: (was: 779134)

Time Spent: 2h 40m (was: 2.5h)

> Deadlock on DataNode

>

>

> Key: HDFS-16600

> URL: https://issues.apache.org/jira/browse/HDFS-16600

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: ZanderXu

>Assignee: ZanderXu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 2h 40m

> Remaining Estimate: 0h

>

> The UT

> org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.testSynchronousEviction

> failed, because happened deadlock, which is introduced by

> [HDFS-16534|https://issues.apache.org/jira/browse/HDFS-16534].

> DeadLock:

> {code:java}

> // org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.createRbw line 1588

> need a read lock

> try (AutoCloseableLock lock = lockManager.readLock(LockLevel.BLOCK_POOl,

> b.getBlockPoolId()))

> // org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.evictBlocks line

> 3526 need a write lock

> try (AutoCloseableLock lock = lockManager.writeLock(LockLevel.BLOCK_POOl,

> bpid))

> {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16533) COMPOSITE_CRC failed between replicated file and striped file.

[

https://issues.apache.org/jira/browse/HDFS-16533?focusedWorklogId=779133=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779133

]

ASF GitHub Bot logged work on HDFS-16533:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 14:36

Start Date: 07/Jun/22 14:36

Worklog Time Spent: 10m

Work Description: ZanderXu commented on PR #4155:

URL: https://github.com/apache/hadoop/pull/4155#issuecomment-1148763022

@Hexiaoqiao @jojochuang Could you help me review this patch? The failed UTs

not caused by this modification, and has been solved in other jira.

Issue Time Tracking

---

Worklog Id: (was: 779133)

Time Spent: 3h 20m (was: 3h 10m)

> COMPOSITE_CRC failed between replicated file and striped file.

> --

>

> Key: HDFS-16533

> URL: https://issues.apache.org/jira/browse/HDFS-16533

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: hdfs, hdfs-client

>Reporter: ZanderXu

>Assignee: ZanderXu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 3h 20m

> Remaining Estimate: 0h

>

> After testing the COMPOSITE_CRC with some random length between replicated

> file and striped file which has same data with replicated file, it failed.

> Reproduce step like this:

> {code:java}

> @Test(timeout = 9)

> public void testStripedAndReplicatedFileChecksum2() throws Exception {

> int abnormalSize = (dataBlocks * 2 - 2) * blockSize +

> (int) (blockSize * 0.5);

> prepareTestFiles(abnormalSize, new String[] {stripedFile1, replicatedFile});

> int loopNumber = 100;

> while (loopNumber-- > 0) {

> int verifyLength = ThreadLocalRandom.current()

> .nextInt(10, abnormalSize);

> FileChecksum stripedFileChecksum1 = getFileChecksum(stripedFile1,

> verifyLength, false);

> FileChecksum replicatedFileChecksum = getFileChecksum(replicatedFile,

> verifyLength, false);

> if (checksumCombineMode.equals(ChecksumCombineMode.COMPOSITE_CRC.name()))

> {

> Assert.assertEquals(stripedFileChecksum1, replicatedFileChecksum);

> } else {

> Assert.assertNotEquals(stripedFileChecksum1, replicatedFileChecksum);

> }

> }

> } {code}

> And after tracing the root cause, `FileChecksumHelper#makeCompositeCrcResult`

> maybe compute an error `consumedLastBlockLength` when updating checksum for

> the last block of the fixed length which maybe not the last block in the file.

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16593) Correct inaccurate BlocksRemoved metric on DataNode side

[

https://issues.apache.org/jira/browse/HDFS-16593?focusedWorklogId=779132=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779132

]

ASF GitHub Bot logged work on HDFS-16593:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 14:35

Start Date: 07/Jun/22 14:35

Worklog Time Spent: 10m

Work Description: ZanderXu commented on PR #4353:

URL: https://github.com/apache/hadoop/pull/4353#issuecomment-1148761554

@Hexiaoqiao Could you help me review this patch? The failed UTs not caused

by this modification, and has been solved in other jira.

Issue Time Tracking

---

Worklog Id: (was: 779132)

Time Spent: 0.5h (was: 20m)

> Correct inaccurate BlocksRemoved metric on DataNode side

>

>

> Key: HDFS-16593

> URL: https://issues.apache.org/jira/browse/HDFS-16593

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: ZanderXu

>Assignee: ZanderXu

>Priority: Minor

> Labels: pull-request-available

> Time Spent: 0.5h

> Remaining Estimate: 0h

>

> When tracing the root cause of production issue, I found that the

> BlocksRemoved metric on Datanode size was inaccurate.

> {code:java}

> case DatanodeProtocol.DNA_INVALIDATE:

> //

> // Some local block(s) are obsolete and can be

> // safely garbage-collected.

> //

> Block toDelete[] = bcmd.getBlocks();

> try {

> // using global fsdataset

> dn.getFSDataset().invalidate(bcmd.getBlockPoolId(), toDelete);

> } catch(IOException e) {

> // Exceptions caught here are not expected to be disk-related.

> throw e;

> }

> dn.metrics.incrBlocksRemoved(toDelete.length);

> break;

> {code}

> Because even if the invalidate method throws an exception, some blocks may

> have been successfully deleted internally.

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16600) Deadlock on DataNode

[

https://issues.apache.org/jira/browse/HDFS-16600?focusedWorklogId=779130=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779130

]

ASF GitHub Bot logged work on HDFS-16600:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 14:31

Start Date: 07/Jun/22 14:31

Worklog Time Spent: 10m

Work Description: slfan1989 commented on PR #4367:

URL: https://github.com/apache/hadoop/pull/4367#issuecomment-1148756359

@ZanderXu @Hexiaoqiao Thank you very much everyone, I learned a lot from the

discussion, I didn't pay attention to this pr, because the description

information is too short, especially for me who just started reading hdfs code.

I will summarize the calling process in the comment area of HDFS-16600 Leave

a message (ASAP), and I hope @ZanderXu @Hexiaoqiao you can help me check it too.

Issue Time Tracking

---

Worklog Id: (was: 779130)

Time Spent: 2.5h (was: 2h 20m)

> Deadlock on DataNode

>

>

> Key: HDFS-16600

> URL: https://issues.apache.org/jira/browse/HDFS-16600

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: ZanderXu

>Assignee: ZanderXu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 2.5h

> Remaining Estimate: 0h

>

> The UT

> org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.testSynchronousEviction

> failed, because happened deadlock, which is introduced by

> [HDFS-16534|https://issues.apache.org/jira/browse/HDFS-16534].

> DeadLock:

> {code:java}

> // org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.createRbw line 1588

> need a read lock

> try (AutoCloseableLock lock = lockManager.readLock(LockLevel.BLOCK_POOl,

> b.getBlockPoolId()))

> // org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.evictBlocks line

> 3526 need a write lock

> try (AutoCloseableLock lock = lockManager.writeLock(LockLevel.BLOCK_POOl,

> bpid))

> {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16622) addRDBI in IncrementalBlockReportManager may remove the block with bigger GS.

[

https://issues.apache.org/jira/browse/HDFS-16622?focusedWorklogId=779121=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-779121

]

ASF GitHub Bot logged work on HDFS-16622:

-

Author: ASF GitHub Bot

Created on: 07/Jun/22 14:24

Start Date: 07/Jun/22 14:24

Worklog Time Spent: 10m

Work Description: ZanderXu commented on code in PR #4407: