[jira] [Commented] (FLINK-9325) generate the _meta file for checkpoint only when the writing is truly successful

[
https://issues.apache.org/jira/browse/FLINK-9325?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517821#comment-16517821
]

ASF GitHub Bot commented on FLINK-9325:
---

Github user sihuazhou commented on the issue:

https://github.com/apache/flink/pull/5982

Could anybody have a look at this?

> generate the _meta file for checkpoint only when the writing is truly successful
> ---
>
> Key: FLINK-9325
> URL: https://issues.apache.org/jira/browse/FLINK-9325
> Project: Flink
> Issue Type: Improvement
> Components: State Backends, Checkpointing
> Affects Versions: 1.5.0
> Reporter: Sihua Zhou
> Assignee: Sihua Zhou
> Priority: Major
>
> We should generate the _meta file for checkpoint only when the writing is
> totally successful. We should write the metadata file first to a temp file
> and then atomically rename it (with an equivalent workaround for S3).

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)
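
The approach described in FLINK-9325 (write to a temporary file first, then atomically rename it) could look roughly like the following on a local file system. This is only a minimal sketch using `java.nio.file`, not Flink's actual implementation; the class `AtomicMetadataWriter`, the method `writeAtomically`, and the `.inprogress` suffix are hypothetical names chosen for illustration. Object stores such as S3 have no atomic rename, which is presumably why the issue mentions a separate workaround for them.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

final class AtomicMetadataWriter {

    /** Hypothetical helper: writes the bytes to a temp file next to the target, then renames it into place. */
    static void writeAtomically(Path target, byte[] content) throws IOException {
        Path dir = target.toAbsolutePath().getParent();
        // Create the temp file in the same directory so the rename stays on one file system.
        Path tmp = Files.createTempFile(dir, target.getFileName().toString(), ".inprogress");
        try {
            Files.write(tmp, content);
            // ATOMIC_MOVE throws AtomicMoveNotSupportedException if the file system cannot do it.
            Files.move(tmp, target, StandardCopyOption.ATOMIC_MOVE);
        } finally {
            // No-op after a successful move; removes the partial temp file after a failure.
            Files.deleteIfExists(tmp);
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("chk-demo");
        Path meta = dir.resolve("_meta");
        writeAtomically(meta, "checkpoint metadata".getBytes(StandardCharsets.UTF_8));
        System.out.println(Files.exists(meta));
    }
}
```

With this shape, a reader that sees the `_meta` file can assume it was written completely, because the file only appears under its final name after the write has finished.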

[GitHub] flink issue #5982: [FLINK-9325][checkpoint]generate the meta file for checkp...

Github user sihuazhou commented on the issue: https://github.com/apache/flink/pull/5982 Could anybody have a look at this? ---

[GitHub] flink issue #6185: [FLINK-9619][YARN] Eagerly close the connection with task...

Github user sihuazhou commented on the issue: https://github.com/apache/flink/pull/6185 CC @tillrohrmann ---

[jira] [Commented] (FLINK-9619) Always close the task manager connection when the container is completed in YarnResourceManager

[ https://issues.apache.org/jira/browse/FLINK-9619?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517794#comment-16517794 ] ASF GitHub Bot commented on FLINK-9619: --- GitHub user sihuazhou opened a pull request: https://github.com/apache/flink/pull/6185 [FLINK-9619][YARN] Eagerly close the connection with task manager when the container is completed ## What is the purpose of the change *We should always eagerly close the connection with task manager when the container is completed.* ## Brief change log - *Eagerly close the connection with task manager when the container is completed* ## Verifying this change This change is a trivial rework / code cleanup without any test coverage. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (no) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (yes) - The S3 file system connector: (no) ## Documentation - No You can merge this pull request into a Git repository by running: $ git pull https://github.com/sihuazhou/flink FLINK-9619 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/flink/pull/6185.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #6185 commit a667ea120b0c2519ce45e5919c1377e897897d17 Author: sihuazhou Date: 2018-06-20T04:41:05Z Eagerly close the connection with task manager when the container is completed. 
> Always close the task manager connection when the container is completed in > YarnResourceManager > --- > > Key: FLINK-9619 > URL: https://issues.apache.org/jira/browse/FLINK-9619 > Project: Flink > Issue Type: Bug > Components: YARN >Affects Versions: 1.6.0, 1.5.1 >Reporter: Sihua Zhou >Assignee: Sihua Zhou >Priority: Critical > Fix For: 1.6.0, 1.5.1 > > > We should always eagerly close the connection with task manager when the > container is completed. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (FLINK-9619) Always close the task manager connection when the container is completed in YarnResourceManager

[ https://issues.apache.org/jira/browse/FLINK-9619?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517795#comment-16517795 ] ASF GitHub Bot commented on FLINK-9619: --- Github user sihuazhou commented on the issue: https://github.com/apache/flink/pull/6185 CC @tillrohrmann > Always close the task manager connection when the container is completed in > YarnResourceManager > --- > > Key: FLINK-9619 > URL: https://issues.apache.org/jira/browse/FLINK-9619 > Project: Flink > Issue Type: Bug > Components: YARN >Affects Versions: 1.6.0, 1.5.1 >Reporter: Sihua Zhou >Assignee: Sihua Zhou >Priority: Critical > Fix For: 1.6.0, 1.5.1 > > > We should always eagerly close the connection with task manager when the > container is completed. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] flink pull request #6185: [FLINK-9619][YARN] Eagerly close the connection wi...

GitHub user sihuazhou opened a pull request: https://github.com/apache/flink/pull/6185 [FLINK-9619][YARN] Eagerly close the connection with task manager when the container is completed ## What is the purpose of the change *We should always eagerly close the connection with task manager when the container is completed.* ## Brief change log - *Eagerly close the connection with task manager when the container is completed* ## Verifying this change This change is a trivial rework / code cleanup without any test coverage. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (no) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (yes) - The S3 file system connector: (no) ## Documentation - No You can merge this pull request into a Git repository by running: $ git pull https://github.com/sihuazhou/flink FLINK-9619 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/flink/pull/6185.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #6185 commit a667ea120b0c2519ce45e5919c1377e897897d17 Author: sihuazhou Date: 2018-06-20T04:41:05Z Eagerly close the connection with task manager when the container is completed. ---

[jira] [Created] (FLINK-9619) Always close the task manager connection when the container is completed in YarnResourceManager

Sihua Zhou created FLINK-9619:
-

Summary: Always close the task manager connection when the container is completed in YarnResourceManager
Key: FLINK-9619
URL: https://issues.apache.org/jira/browse/FLINK-9619
Project: Flink
Issue Type: Bug
Components: YARN
Affects Versions: 1.6.0, 1.5.1
Reporter: Sihua Zhou
Assignee: Sihua Zhou
Fix For: 1.6.0, 1.5.1

We should always eagerly close the connection with task manager when the
container is completed.

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Commented] (FLINK-9417) Send heartbeat requests from RPC endpoint's main thread

[

https://issues.apache.org/jira/browse/FLINK-9417?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517790#comment-16517790

]

Sihua Zhou commented on FLINK-9417:

---

Hi [~till.rohrmann], one thing comes to mind: if we send heartbeat requests
from the RPC endpoint's main thread, should we also check the
HEARTBEAT_INTERVAL against a sane minimum value (currently it only needs to be
greater than 0)? If the user configures a very small value, e.g. 10, then the
resource manager and the job master will be kept busy just sending heartbeats.
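
A sanity check of the kind proposed in this comment might look like the sketch below. It is an illustration under assumptions, not Flink code: the class name `HeartbeatIntervalCheck`, the method `checkInterval`, and the lower bound of 1000 ms are all hypothetical, and the right minimum would have to be decided in the issue.

```java
final class HeartbeatIntervalCheck {

    /** Hypothetical lower bound in milliseconds, chosen only for illustration. */
    static final long MIN_HEARTBEAT_INTERVAL_MS = 1_000L;

    /** Returns the interval unchanged if it is acceptable, otherwise rejects it. */
    static long checkInterval(long intervalMs) {
        if (intervalMs < MIN_HEARTBEAT_INTERVAL_MS) {
            throw new IllegalArgumentException(
                "Heartbeat interval must be at least " + MIN_HEARTBEAT_INTERVAL_MS
                    + " ms, but was " + intervalMs + " ms.");
        }
        return intervalMs;
    }

    public static void main(String[] args) {
        System.out.println(checkInterval(10_000L));
        try {
            checkInterval(10L);
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Rejecting the value at configuration time, rather than silently clamping it, makes the misconfiguration visible to the user before the main thread is flooded with heartbeat work.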

> Send heartbeat requests from RPC endpoint's main thread

> ---

>

> Key: FLINK-9417

> URL: https://issues.apache.org/jira/browse/FLINK-9417

> Project: Flink

> Issue Type: Improvement

> Components: Distributed Coordination

>Affects Versions: 1.5.0, 1.6.0

>Reporter: Till Rohrmann

>Assignee: Sihua Zhou

>Priority: Major

>

> Currently, we use the {{RpcService#scheduledExecutor}} to send heartbeat
> requests to remote targets. This has the problem that heartbeats are still
> sent from this endpoint even if its main thread is currently blocked. Because
> of this, the heartbeat response cannot be processed and the remote target
> times out. On the remote side, this won't be noticed because it still
> receives the heartbeat requests.
> A solution to this problem would be to send the heartbeat requests to the
> remote target through the RPC endpoint's main thread. That way, the
> heartbeats would also be blocked if the main thread is blocked/busy.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
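
The idea in the FLINK-9417 description, that the timer only hands the heartbeat to the endpoint's main thread instead of sending it itself, can be sketched as follows. This is a simplified, single-threaded model and not Flink's RPC code: `ManualMainThread` and `HeartbeatSender` are hypothetical classes, and the "main thread" is modelled as a queue that only runs tasks when it is drained.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.Executor;

final class MainThreadHeartbeatSketch {

    /** Hypothetical stand-in for an endpoint's single main thread: tasks run only when drained. */
    static final class ManualMainThread implements Executor {
        private final Queue<Runnable> mailbox = new ArrayDeque<>();

        @Override
        public void execute(Runnable task) {
            mailbox.add(task);
        }

        /** Runs everything queued so far, as an unblocked main thread would. */
        void drain() {
            Runnable task;
            while ((task = mailbox.poll()) != null) {
                task.run();
            }
        }
    }

    /** Sends heartbeats only from the main thread; the timer merely enqueues the request. */
    static final class HeartbeatSender {
        private final Executor mainThread;
        private int sent;

        HeartbeatSender(Executor mainThread) {
            this.mainThread = mainThread;
        }

        /** Called by the scheduler on every heartbeat interval. */
        void onTimerTick() {
            mainThread.execute(() -> sent++);
        }

        int sentCount() {
            return sent;
        }
    }

    public static void main(String[] args) {
        ManualMainThread mainThread = new ManualMainThread();
        HeartbeatSender sender = new HeartbeatSender(mainThread);
        sender.onTimerTick();
        sender.onTimerTick();
        System.out.println(sender.sentCount()); // 0: the main thread has not run yet
        mainThread.drain();
        System.out.println(sender.sentCount()); // 2: heartbeats go out once it runs
    }
}
```

While the main thread is busy, no heartbeat leaves the endpoint, so the remote side can notice the stall through its own timeout.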

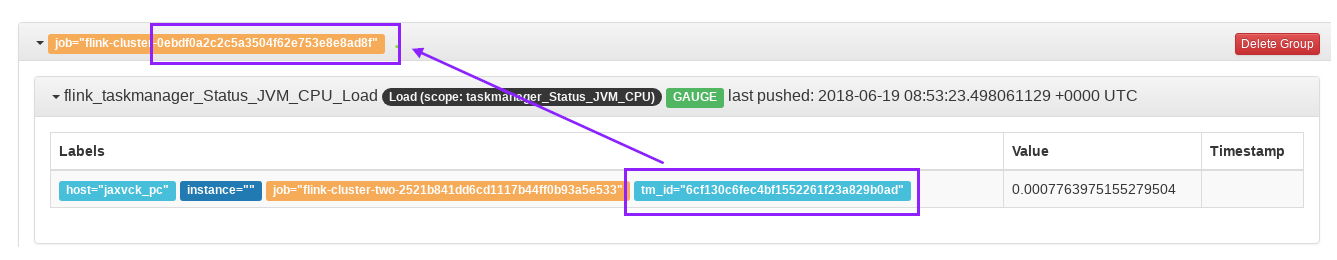

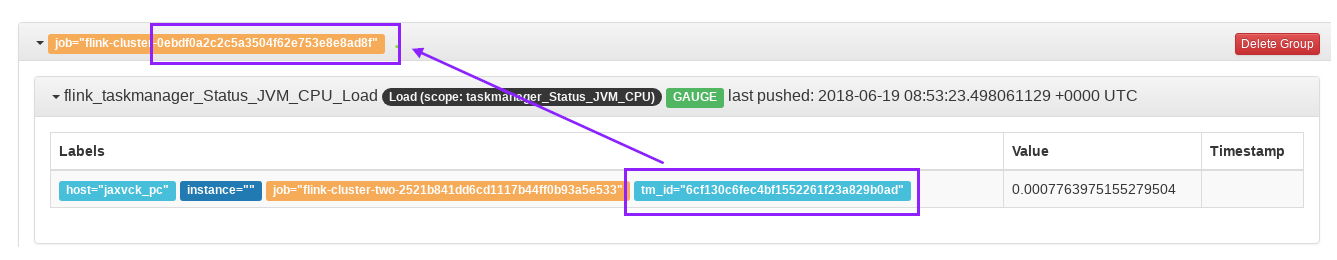

[jira] [Commented] (FLINK-9187) add prometheus pushgateway reporter

[ https://issues.apache.org/jira/browse/FLINK-9187?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517789#comment-16517789 ] ASF GitHub Bot commented on FLINK-9187: --- Github user lamber-ken commented on the issue: https://github.com/apache/flink/pull/6184 Please click here for details [old-flink-9187](https://github.com/apache/flink/pull/5857) > add prometheus pushgateway reporter > --- > > Key: FLINK-9187 > URL: https://issues.apache.org/jira/browse/FLINK-9187 > Project: Flink > Issue Type: New Feature > Components: Metrics >Affects Versions: 1.4.2 >Reporter: lamber-ken >Priority: Minor > Labels: features > Fix For: 1.6.0 > > > make flink system can send metrics to prometheus via pushgateway. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] flink issue #6184: [FLINK-9187][METRICS] add prometheus pushgateway reporter

Github user lamber-ken commented on the issue: https://github.com/apache/flink/pull/6184 Please click here for details [old-flink-9187](https://github.com/apache/flink/pull/5857) ---

[jira] [Commented] (FLINK-9187) add prometheus pushgateway reporter

[ https://issues.apache.org/jira/browse/FLINK-9187?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517788#comment-16517788 ] ASF GitHub Bot commented on FLINK-9187: --- Github user lamber-ken closed the pull request at: https://github.com/apache/flink/pull/5857 > add prometheus pushgateway reporter > --- > > Key: FLINK-9187 > URL: https://issues.apache.org/jira/browse/FLINK-9187 > Project: Flink > Issue Type: New Feature > Components: Metrics >Affects Versions: 1.4.2 >Reporter: lamber-ken >Priority: Minor > Labels: features > Fix For: 1.6.0 > > > make flink system can send metrics to prometheus via pushgateway. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

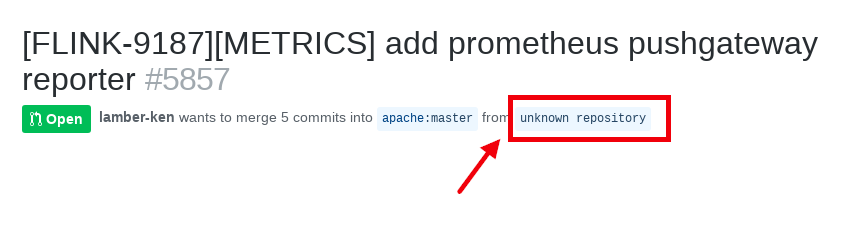

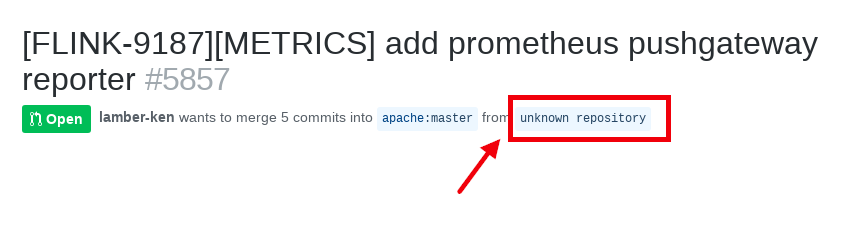

[GitHub] flink pull request #5857: [FLINK-9187][METRICS] add prometheus pushgateway r...

Github user lamber-ken closed the pull request at: https://github.com/apache/flink/pull/5857 ---

[GitHub] flink pull request #6184: add prometheus pushgateway reporter

GitHub user lamber-ken opened a pull request:

https://github.com/apache/flink/pull/6184

add prometheus pushgateway reporter

## What is the purpose of the change

This pull request allows Flink to send metrics to Prometheus via the Pushgateway. It may be useful.

## Brief change log

- Add prometheus pushgateway reporter
- Restructure the code of the prometheus reporter part

## Verifying this change

This change is already covered by existing tests. [prometheus test](https://github.com/apache/flink/tree/master/flink-metrics/flink-metrics-prometheus/src/test/java/org/apache/flink/metrics/prometheus)

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): (yes)
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no)
- The serializers: (no)
- The runtime per-record code paths (performance sensitive): (no)
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (no)
- The S3 file system connector: (no)

## Documentation

- Does this pull request introduce a new feature? (yes)
- If yes, how is the feature documented? (JavaDocs)

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/lamber-ken/flink master

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/flink/pull/6184.patch

To close this pull request, make a commit to your master/trunk branch
with (at least) the following in the commit message:

This closes #6184

commit 1a8e1f6193823e70b1dc6abc1146299042c25c7d
Author: lamber-ken
Date: 2018-06-20T04:26:10Z

add prometheus pushgateway reporter

---

[jira] [Commented] (FLINK-9187) add prometheus pushgateway reporter

[ https://issues.apache.org/jira/browse/FLINK-9187?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517760#comment-16517760 ] ASF GitHub Bot commented on FLINK-9187: --- Github user lamber-ken commented on the issue: https://github.com/apache/flink/pull/5857 sorry, I reforked `flink` project, do I need to start a new PR?  > add prometheus pushgateway reporter > --- > > Key: FLINK-9187 > URL: https://issues.apache.org/jira/browse/FLINK-9187 > Project: Flink > Issue Type: New Feature > Components: Metrics >Affects Versions: 1.4.2 >Reporter: lamber-ken >Priority: Minor > Labels: features > Fix For: 1.6.0 > > > make flink system can send metrics to prometheus via pushgateway. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] flink issue #5857: [FLINK-9187][METRICS] add prometheus pushgateway reporter

Github user lamber-ken commented on the issue: https://github.com/apache/flink/pull/5857 sorry, I reforked `flink` project, do I need to start a new PR?  ---

[jira] [Commented] (FLINK-9588) Reuse the same conditionContext with in a same computationState

[ https://issues.apache.org/jira/browse/FLINK-9588?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517732#comment-16517732 ] ASF GitHub Bot commented on FLINK-9588: --- Github user Aitozi commented on the issue: https://github.com/apache/flink/pull/6168 Is it ok now ? @dawidwys > Reuse the same conditionContext with in a same computationState > --- > > Key: FLINK-9588 > URL: https://issues.apache.org/jira/browse/FLINK-9588 > Project: Flink > Issue Type: Improvement > Components: CEP >Reporter: aitozi >Assignee: aitozi >Priority: Major > > Now cep checkFilterCondition with a newly created Conditioncontext for each > edge, which will result in the repeatable getEventsForPattern because of the > different Conditioncontext Object. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] flink issue #6168: [FLINK-9588][CEP]Reused context with same computation sta...

Github user Aitozi commented on the issue: https://github.com/apache/flink/pull/6168 Is it ok now ? @dawidwys ---

[jira] [Commented] (FLINK-9380) Failing end-to-end tests should not clean up logs

[ https://issues.apache.org/jira/browse/FLINK-9380?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517718#comment-16517718 ] Deepak Sharma commented on FLINK-9380: -- Hi [~till.rohrmann], just a friendly reminder of this Jira :) > Failing end-to-end tests should not clean up logs > - > > Key: FLINK-9380 > URL: https://issues.apache.org/jira/browse/FLINK-9380 > Project: Flink > Issue Type: Bug > Components: Tests >Affects Versions: 1.5.0, 1.6.0 >Reporter: Till Rohrmann >Assignee: Deepak Sharma >Priority: Critical > Labels: test-stability > Fix For: 1.6.0, 1.5.1 > > > Some of the end-to-end tests clean up their logs also in the failure case. > This makes debugging and understanding the problem extremely difficult. > Ideally, the scripts says where it stored the respective logs. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (FLINK-9563) Migrate integration tests for CEP

[ https://issues.apache.org/jira/browse/FLINK-9563?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517717#comment-16517717 ] ASF GitHub Bot commented on FLINK-9563: --- Github user deepaks4077 commented on the issue: https://github.com/apache/flink/pull/6170 Hi @zentol, just a friendly reminder about this pull request :) > Migrate integration tests for CEP > - > > Key: FLINK-9563 > URL: https://issues.apache.org/jira/browse/FLINK-9563 > Project: Flink > Issue Type: Sub-task >Reporter: Deepak Sharma >Assignee: Deepak Sharma >Priority: Minor > > Covers all integration tests under > apache-flink/flink-libraries/flink-cep/src/test/java/org/apache/flink/cep -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] flink issue #6170: [FLINK-9563]: Using a custom sink function for tests in C...

Github user deepaks4077 commented on the issue: https://github.com/apache/flink/pull/6170 Hi @zentol, just a friendly reminder about this pull request :) ---

[jira] [Commented] (FLINK-9614) Improve the error message for Compiler#compile

[

https://issues.apache.org/jira/browse/FLINK-9614?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517679#comment-16517679

]

mingleizhang commented on FLINK-9614:

-

I am closing this since we catch a Throwable, so the StackOverflowError is acceptable.

> Improve the error message for Compiler#compile

> --

>

> Key: FLINK-9614

> URL: https://issues.apache.org/jira/browse/FLINK-9614

> Project: Flink

> Issue Type: Improvement

> Components: Table API SQL

>Reporter: mingleizhang

>Assignee: mingleizhang

>Priority: Major

>

> When the SQL below is too long, for example:

> case when case when .

> when host in

> ('114.67.56.94','114.67.56.102','114.67.56.103','114.67.56.106','114.67.56.107','183.60.220.231','183.60.220.232','183.60.219.247','114.67.56.94','114.67.56.102','114.67.56.103','114.67.56.106','114.67.56.107','183.60.220.231','183.60.220.232','183.60.219.247','114.67.56.94','114.67.56.102','114.67.56.103','114.67.56.106','114.67.56.107','183.60.220.231','183.60.220.232','183.60.219.247')

> then 'condition'

> This causes a {{StackOverflowError}}. The current code is shown below, but I
> would suggest prompting users to add {{-Xss20m}} to solve this, instead of
> reporting {{This is a bug..}}

> {code:java}
> trait Compiler[T] {
>   @throws(classOf[CompileException])
>   def compile(cl: ClassLoader, name: String, code: String): Class[T] = {
>     require(cl != null, "Classloader must not be null.")
>     val compiler = new SimpleCompiler()
>     compiler.setParentClassLoader(cl)
>     try {
>       compiler.cook(code)
>     } catch {
>       case t: Throwable =>
>         throw new InvalidProgramException("Table program cannot be compiled. " +
>           "This is a bug. Please file an issue.", t)
>     }
>     compiler.getClassLoader.loadClass(name).asInstanceOf[Class[T]]
>   }
> }
> {code}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
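
For reference, the suggestion made in the issue description (give a stack-size hint instead of the generic "This is a bug" message) could be sketched like this. It is written in plain Java so that it is self-contained; `CompileErrorHint`, `CompileStep`, and the use of `IllegalStateException` are hypothetical stand-ins for the Flink classes quoted above, and the issue itself was closed without such a change.

```java
final class CompileErrorHint {

    /** Hypothetical stand-in for the code that invokes the compiler. */
    interface CompileStep {
        void run() throws Exception;
    }

    static void compile(CompileStep step) {
        try {
            step.run();
        } catch (StackOverflowError e) {
            // Deeply nested generated code can exhaust the thread stack; point at the JVM option.
            throw new IllegalStateException(
                "Table program cannot be compiled because the thread stack overflowed. "
                    + "Consider increasing the stack size, for example with -Xss20m.", e);
        } catch (Throwable t) {
            throw new IllegalStateException(
                "Table program cannot be compiled. This is a bug. Please file an issue.", t);
        }
    }

    public static void main(String[] args) {
        try {
            compile(() -> { throw new StackOverflowError(); });
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

The more specific `catch` clause has to come before `catch (Throwable t)`, otherwise the generic branch would handle the `StackOverflowError` first.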

[jira] [Closed] (FLINK-9614) Improve the error message for Compiler#compile

[

https://issues.apache.org/jira/browse/FLINK-9614?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

mingleizhang closed FLINK-9614.

---

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Resolved] (FLINK-9614) Improve the error message for Compiler#compile

[

https://issues.apache.org/jira/browse/FLINK-9614?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

mingleizhang resolved FLINK-9614.

-

Resolution: Not A Problem

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (FLINK-9618) NullPointerException in FlinkKinesisProducer when aws.region is not set and aws.endpoint is set

[

https://issues.apache.org/jira/browse/FLINK-9618?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517645#comment-16517645

]

Aaron Langford commented on FLINK-9618:

---

I would be happy to take the work to fix this.

> NullPointerException in FlinkKinesisProducer when aws.region is not set and

> aws.endpoint is set

> ---

>

> Key: FLINK-9618

> URL: https://issues.apache.org/jira/browse/FLINK-9618

> Project: Flink

> Issue Type: Bug

> Components: Kinesis Connector

>Affects Versions: 1.5.0

> Environment: N/A

>Reporter: Aaron Langford

>Priority: Minor

> Original Estimate: 3h

> Remaining Estimate: 3h

>

> This problem arose while trying to write to a local kinesalite instance.
> Specifying both aws.region and aws.endpoint is not allowed. However, when
> aws.region is not present, a NullPointerException is thrown.

> Here is some example Scala code:

> {code:java}
> /**
>  *
>  * @param region the AWS region the stream lives in
>  * @param streamName the stream to write records to
>  * @param endpoint if in local dev, this points to a kinesalite instance
>  * @return
>  */
> def getSink(region: String,
>             streamName: String,
>             endpoint: Option[String]): FlinkKinesisProducer[ProcessedMobilePageView] = {
>   val props = new Properties()
>   props.put(AWSConfigConstants.AWS_CREDENTIALS_PROVIDER, "AUTO")
>   endpoint match {
>     case Some(uri) => props.put(AWSConfigConstants.AWS_ENDPOINT, uri)
>     case None => props.put(AWSConfigConstants.AWS_REGION, region)
>   }
>   val producer = new FlinkKinesisProducer[ProcessedMobilePageView](
>     new JsonSerializer[ProcessedMobilePageView](DefaultSerializationBuilder),
>     props
>   )
>   producer.setDefaultStream(streamName)
>   producer
> }
> {code}

> To produce the NullPointerException, pass in `Some("localhost:4567")` for

> endpoint.

> The source of the error is found at

> org.apache.flink.streaming.connectors.kinesis.util.KinesisConfigUtil.java, on

> line 194. This line should perform some kind of check if aws.endpoint is

> present before grabbing it from the Properties object.

>

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Created] (FLINK-9618) NullPointerException in FlinkKinesisProducer when aws.region is not set and aws.endpoint is set

Aaron Langford created FLINK-9618:

-

Summary: NullPointerException in FlinkKinesisProducer when

aws.region is not set and aws.endpoint is set

Key: FLINK-9618

URL: https://issues.apache.org/jira/browse/FLINK-9618

Project: Flink

Issue Type: Bug

Components: Kinesis Connector

Affects Versions: 1.5.0

Environment: N/A

Reporter: Aaron Langford

This problem arose while trying to write to a local kinesalite instance.
Specifying both aws.region and aws.endpoint is not allowed. However, when
aws.region is not present, a NullPointerException is thrown.

Here is some example Scala code:

{code:java}
/**
 *
 * @param region the AWS region the stream lives in
 * @param streamName the stream to write records to
 * @param endpoint if in local dev, this points to a kinesalite instance
 * @return
 */
def getSink(region: String,
            streamName: String,
            endpoint: Option[String]): FlinkKinesisProducer[ProcessedMobilePageView] = {
  val props = new Properties()
  props.put(AWSConfigConstants.AWS_CREDENTIALS_PROVIDER, "AUTO")
  endpoint match {
    case Some(uri) => props.put(AWSConfigConstants.AWS_ENDPOINT, uri)
    case None => props.put(AWSConfigConstants.AWS_REGION, region)
  }
  val producer = new FlinkKinesisProducer[ProcessedMobilePageView](
    new JsonSerializer[ProcessedMobilePageView](DefaultSerializationBuilder),
    props
  )
  producer.setDefaultStream(streamName)
  producer
}
{code}

To produce the NullPointerException, pass in `Some("localhost:4567")` for

endpoint.

The source of the error is found at

org.apache.flink.streaming.connectors.kinesis.util.KinesisConfigUtil.java, on

line 194. This line should perform some kind of check if aws.endpoint is

present before grabbing it from the Properties object.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
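
A null-safe version of the check requested in FLINK-9618 might look like the following. This is a sketch under assumptions rather than the actual `KinesisConfigUtil` code: the class `AwsConfigCheck` and the method `validate` are hypothetical, only the property keys `aws.region` and `aws.endpoint` come from the report, and treating "neither is set" as an error is an assumption made here for illustration.

```java
import java.util.Properties;

final class AwsConfigCheck {

    static final String AWS_REGION = "aws.region";
    static final String AWS_ENDPOINT = "aws.endpoint";

    /** Hypothetical validation: exactly one of aws.region and aws.endpoint must be present. */
    static void validate(Properties config) {
        // containsKey never dereferences a missing value, so an absent region cannot cause an NPE.
        boolean hasRegion = config.containsKey(AWS_REGION);
        boolean hasEndpoint = config.containsKey(AWS_ENDPOINT);
        if (hasRegion && hasEndpoint) {
            throw new IllegalArgumentException(
                "Only one of " + AWS_REGION + " and " + AWS_ENDPOINT + " may be set.");
        }
        if (!hasRegion && !hasEndpoint) {
            throw new IllegalArgumentException(
                "Either " + AWS_REGION + " or " + AWS_ENDPOINT + " must be set.");
        }
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.put(AWS_ENDPOINT, "localhost:4567");
        validate(props); // passes: endpoint only, as in the kinesalite setup from the report
        System.out.println("ok");
    }
}
```

Checking for the presence of each key first turns the reported `NullPointerException` into an explicit configuration error with a readable message.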

[jira] [Commented] (FLINK-9611) Allow for user-defined artifacts to be specified as part of a mesos overlay

[
https://issues.apache.org/jira/browse/FLINK-9611?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517528#comment-16517528
]

Eron Wright commented on FLINK-9611:
-

I really like this idea, except for the proposed name because it has a
connotation of overlaying the 'user' (which is sort of what happens with the
Kerberos overlay). Maybe 'MesosCustomOverlay'.

> Allow for user-defined artifacts to be specified as part of a mesos overlay
> ---
>
> Key: FLINK-9611
> URL: https://issues.apache.org/jira/browse/FLINK-9611
> Project: Flink
> Issue Type: Improvement
> Components: Configuration, Docker, Mesos
> Affects Versions: 1.5.0
> Reporter: Addison Higham
> Priority: Major
>
> NOTE: this assumes mesos, but this improvement could also be useful for
> future container deployments.
> Currently, when deploying to mesos, the "Overlay" functionality is used to
> determine which artifacts are to be downloaded into the container. However,
> there isn't a way to plug in your own artifacts to be downloaded into the
> container. This can cause problems with certain deployment models.
> For example, if you are running flink in docker on mesos, you cannot easily
> use a private docker image. Typically with mesos and private docker images,
> you specify credentials as a URI to be downloaded into the container that
> give permissions to download the private image. Typically, these credentials
> expire after a few days, so baking them into a docker host isn't a solution.
> It would make sense to add a `MesosUserOverlay` that would simply take some
> new configuration parameters and add any custom artifacts (or possibly also
> environment variables?)
> Another solution (or longer term solution) might be to allow for dynamically
> loading an overlay class for even further customization of the container
> specification.

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Commented] (FLINK-9612) Add option for minimal artifacts being pulled in Mesos

[ https://issues.apache.org/jira/browse/FLINK-9612?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517525#comment-16517525 ] Eron Wright commented on FLINK-9612: - Yes it makes sense that the overlays would be selective and configurable, and especially true that the Flink binaries aren't needed in most scenarios involving a docker image. Specifically on that, I wonder if the Flink conf directory should be treated differently from the bin/libs (perhaps as a different overlay), since the image might be 'stock'. > Add option for minimal artifacts being pulled in Mesos > -- > > Key: FLINK-9612 > URL: https://issues.apache.org/jira/browse/FLINK-9612 > Project: Flink > Issue Type: Improvement > Components: Configuration, Docker, Mesos >Reporter: Addison Higham >Priority: Major > > NOTE: this assumes mesos, but this improvement could also be useful for > future container deployments. > Currently, in mesos, the FlinkDistributionOverlay copies the entire `conf`, > `bin`, and `lib` folders from the running JobManager/ResourceManager. When > using docker with a pre-installed flink distribution, this is relatively > inefficient as it pulls jars that are already baked into the container image. > A new option that disables pulling most (if not all?) of the > FlinkDistributionOverlay could allow for much faster and more scalable > provisions of TaskManagers. As it currently stands, trying to run a few > hundred TaskManagers is likely to result in poor performance in pulling all > the artifacts from the MesosArtifactServer -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (FLINK-9599) Implement generic mechanism to receive files via rest

[

https://issues.apache.org/jira/browse/FLINK-9599?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517490#comment-16517490

]

ASF GitHub Bot commented on FLINK-9599:

---

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196559093

--- Diff:

flink-runtime/src/test/java/org/apache/flink/runtime/rest/FileUploadHandlerTest.java

---

@@ -0,0 +1,471 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.rest;

+

+import org.apache.flink.api.common.time.Time;

+import org.apache.flink.api.java.tuple.Tuple2;

+import org.apache.flink.configuration.ConfigConstants;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.RestOptions;

+import org.apache.flink.configuration.WebOptions;

+import org.apache.flink.runtime.rest.handler.AbstractRestHandler;

+import org.apache.flink.runtime.rest.handler.FileUploads;

+import org.apache.flink.runtime.rest.handler.HandlerRequest;

+import org.apache.flink.runtime.rest.handler.RestHandlerException;

+import org.apache.flink.runtime.rest.handler.RestHandlerSpecification;

+import org.apache.flink.runtime.rest.messages.EmptyMessageParameters;

+import org.apache.flink.runtime.rest.messages.EmptyRequestBody;

+import org.apache.flink.runtime.rest.messages.EmptyResponseBody;

+import org.apache.flink.runtime.rest.messages.MessageHeaders;

+import org.apache.flink.runtime.rest.messages.RequestBody;

+import org.apache.flink.runtime.rest.util.RestMapperUtils;

+import org.apache.flink.runtime.rpc.RpcUtils;

+import org.apache.flink.runtime.webmonitor.RestfulGateway;

+import org.apache.flink.runtime.webmonitor.retriever.GatewayRetriever;

+import org.apache.flink.util.Preconditions;

+

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.annotation.JsonCreator;

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.annotation.JsonProperty;

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.ObjectMapper;

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelInboundHandler;

+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpResponseStatus;

+

+import okhttp3.MediaType;

+import okhttp3.MultipartBody;

+import okhttp3.OkHttpClient;

+import okhttp3.Request;

+import okhttp3.Response;

+import org.junit.AfterClass;

+import org.junit.BeforeClass;

+import org.junit.ClassRule;

+import org.junit.Test;

+import org.junit.rules.TemporaryFolder;

+

+import javax.annotation.Nonnull;

+

+import java.io.File;

+import java.io.IOException;

+import java.io.StringWriter;

+import java.nio.file.Files;

+import java.nio.file.Path;

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.List;

+import java.util.Random;

+import java.util.concurrent.CompletableFuture;

+

+import static java.util.Objects.requireNonNull;

+import static org.junit.Assert.assertArrayEquals;

+import static org.junit.Assert.assertEquals;

+import static org.mockito.Matchers.any;

+import static org.mockito.Mockito.mock;

+import static org.mockito.Mockito.when;

+

+/**

+ * Tests for the {@link FileUploadHandler}. Ensures that multipart http messages containing files and/or json are properly

+ * handled.

+ */

+public class FileUploadHandlerTest {

--- End diff --

Missing `extends TestLogger`

> Implement generic mechanism to receive files via rest

> -

>

> Key: FLINK-9599

> URL: https://issues.apache.org/jira/browse/FLINK-9599

> Project: Flink

> Issue Type: New Feature

> Components: REST

>Reporter: Chesnay Schepler

>Assignee: Chesnay Schepler

>Priority: Major

>

[GitHub] flink pull request #6178: [FLINK-9599][rest] Implement generic mechanism to ...

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196556101

--- Diff:

flink-runtime/src/test/java/org/apache/flink/runtime/rest/handler/FileUploadsTest.java

---

@@ -0,0 +1,124 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.rest.handler;

+

+import org.junit.Assert;

+import org.junit.Rule;

+import org.junit.Test;

+import org.junit.rules.TemporaryFolder;

+

+import java.io.IOException;

+import java.nio.file.Files;

+import java.nio.file.Path;

+import java.nio.file.Paths;

+import java.util.Collection;

+import java.util.Collections;

+

+/**

+ * Tests for {@link FileUploads}.

+ */

+public class FileUploadsTest {

--- End diff --

`extends TestLogger` is missing

---

[GitHub] flink pull request #6178: [FLINK-9599][rest] Implement generic mechanism to ...

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196453980

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/HandlerRequest.java

---

@@ -129,4 +137,9 @@ public R getRequestBody() {

return queryParameter.getValue();

}

}

+

+ @Nonnull

+ public FileUploads getFileUploads() {

+ return uploadedFiles;

+ }

--- End diff --

I would not expose `FileUploads` to the user but rather return a

`Collection`.
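
A minimal sketch of what this suggestion could look like, assuming the element type is `java.nio.file.Path` (the type parameter of the `Collection` in the comment did not survive in this archive). `UploadAwareRequest` is a hypothetical stand-in, not Flink's actual `HandlerRequest`:

```java
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collection;
import java.util.Collections;

/** Hypothetical stand-in for a request object; not Flink's actual HandlerRequest. */
final class UploadAwareRequest {

    private final Collection<Path> uploadedFiles;

    UploadAwareRequest(Collection<Path> uploadedFiles) {
        // Defensive copy, wrapped so that callers only get a read-only view.
        this.uploadedFiles = Collections.unmodifiableList(new ArrayList<>(uploadedFiles));
    }

    /** Exposes plain paths rather than the internal holder type. */
    Collection<Path> getUploadedFiles() {
        return uploadedFiles;
    }
}
```

With this shape, handler code depends only on `java.util.Collection` and `java.nio.file.Path`, so the holder class can remain an internal detail of the upload machinery.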

---


[GitHub] flink pull request #6178: [FLINK-9599][rest] Implement generic mechanism to ...

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196559335

--- Diff:

flink-runtime/src/test/java/org/apache/flink/runtime/rest/FileUploadHandlerTest.java

---

@@ -0,0 +1,471 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.rest;

+

+import org.apache.flink.api.common.time.Time;

+import org.apache.flink.api.java.tuple.Tuple2;

+import org.apache.flink.configuration.ConfigConstants;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.RestOptions;

+import org.apache.flink.configuration.WebOptions;

+import org.apache.flink.runtime.rest.handler.AbstractRestHandler;

+import org.apache.flink.runtime.rest.handler.FileUploads;

+import org.apache.flink.runtime.rest.handler.HandlerRequest;

+import org.apache.flink.runtime.rest.handler.RestHandlerException;

+import org.apache.flink.runtime.rest.handler.RestHandlerSpecification;

+import org.apache.flink.runtime.rest.messages.EmptyMessageParameters;

+import org.apache.flink.runtime.rest.messages.EmptyRequestBody;

+import org.apache.flink.runtime.rest.messages.EmptyResponseBody;

+import org.apache.flink.runtime.rest.messages.MessageHeaders;

+import org.apache.flink.runtime.rest.messages.RequestBody;

+import org.apache.flink.runtime.rest.util.RestMapperUtils;

+import org.apache.flink.runtime.rpc.RpcUtils;

+import org.apache.flink.runtime.webmonitor.RestfulGateway;

+import org.apache.flink.runtime.webmonitor.retriever.GatewayRetriever;

+import org.apache.flink.util.Preconditions;

+

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.annotation.JsonCreator;

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.annotation.JsonProperty;

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.ObjectMapper;

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelInboundHandler;

+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpResponseStatus;

+

+import okhttp3.MediaType;

+import okhttp3.MultipartBody;

+import okhttp3.OkHttpClient;

+import okhttp3.Request;

+import okhttp3.Response;

+import org.junit.AfterClass;

+import org.junit.BeforeClass;

+import org.junit.ClassRule;

+import org.junit.Test;

+import org.junit.rules.TemporaryFolder;

+

+import javax.annotation.Nonnull;

+

+import java.io.File;

+import java.io.IOException;

+import java.io.StringWriter;

+import java.nio.file.Files;

+import java.nio.file.Path;

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.List;

+import java.util.Random;

+import java.util.concurrent.CompletableFuture;

+

+import static java.util.Objects.requireNonNull;

+import static org.junit.Assert.assertArrayEquals;

+import static org.junit.Assert.assertEquals;

+import static org.mockito.Matchers.any;

+import static org.mockito.Mockito.mock;

+import static org.mockito.Mockito.when;

+

+/**

+ * Tests for the {@link FileUploadHandler}. Ensures that multipart http messages containing files and/or json are properly

+ * handled.

+ */

+public class FileUploadHandlerTest {

+

+ private static final ObjectMapper OBJECT_MAPPER = RestMapperUtils.getStrictObjectMapper();

+ private static final Random RANDOM = new Random();

+

+ @ClassRule

+ public static final TemporaryFolder TEMPORARY_FOLDER = new TemporaryFolder();

+

+ private static RestServerEndpoint serverEndpoint;

+ private static String serverAddress;

+

+ private static MultipartMixedHandler mixedHandler;

+ private static MultipartJsonHandler jsonHandler;

+ private static MultipartFileHandler fileHandler;

+ private static File file1;

+ private static File file2;

+

+ @BeforeClass

+ public static void setup() throws Exception {

+

[GitHub] flink pull request #6178: [FLINK-9599][rest] Implement generic mechanism to ...

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196558949

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/messages/MessageHeaders.java

---

@@ -63,4 +63,13 @@

* @return description for the header

*/

String getDescription();

+

+ /**

+* Returns whether this header allows file uploads.

+*

+* @return whether this header allows file uploads

+*/

+ default boolean acceptsFileUploads() {

+ return false;

+ }

--- End diff --

Should this maybe go into `UntypedResponseMessageHeaders`? At the moment

one can upload files to an `AbstractHandler` implementation (e.g.

`AbstractTaskManagerFileHandler`) and access them via the `HandlerRequest`,

without being able to specify whether file upload is allowed or not.
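
A rough sketch of the opt-in pattern under discussion, assuming the flag is checked before uploaded files reach a handler. `HeadersSketch` and `UploadGate` are hypothetical names for illustration; only the `acceptsFileUploads()` default method mirrors the diff above:

```java
import java.util.Collection;

/** Hypothetical, simplified header contract; only acceptsFileUploads() mirrors the diff. */
interface HeadersSketch {

    String getDescription();

    /** Opt-in flag: headers that do not override this method reject uploads. */
    default boolean acceptsFileUploads() {
        return false;
    }
}

/** Hypothetical guard that enforces the flag before a handler sees any files. */
final class UploadGate {

    static void check(HeadersSketch headers, Collection<String> uploadedFileNames) {
        if (!uploadedFileNames.isEmpty() && !headers.acceptsFileUploads()) {
            throw new IllegalArgumentException(
                "File uploads are not allowed for: " + headers.getDescription());
        }
    }
}
```

Whichever interface ends up hosting the method, the point of the comment is that every handler type able to receive uploads should also be able to declare the flag.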

---

[jira] [Commented] (FLINK-9599) Implement generic mechanism to receive files via rest

[

https://issues.apache.org/jira/browse/FLINK-9599?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517479#comment-16517479

]

ASF GitHub Bot commented on FLINK-9599:

---

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196452418

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/FileUploadHandler.java

---

@@ -116,5 +136,16 @@ private void reset() {

currentHttpPostRequestDecoder.destroy();

currentHttpPostRequestDecoder = null;

currentHttpRequest = null;

+ currentUploadDir = null;

+ currentJsonPayload = null;

+ }

+

+ public static Optional<byte[]> getMultipartJsonPayload(ChannelHandlerContext ctx) {

+ return Optional.ofNullable(ctx.channel().attr(UPLOADED_JSON).get());

+ }

--- End diff --

By sending the JSON payload downstream, we could avoid having this method.

> Implement generic mechanism to receive files via rest

> -

>

> Key: FLINK-9599

> URL: https://issues.apache.org/jira/browse/FLINK-9599

> Project: Flink

> Issue Type: New Feature

> Components: REST

>Reporter: Chesnay Schepler

>Assignee: Chesnay Schepler

>Priority: Major

> Fix For: 1.6.0

>

>

> As a prerequisite for a cleaner implementation of FLINK-9280 we should

> * extend the RestClient to allow the upload of Files

> * extend FileUploadHandler to accept mixed multi-part requests (json + files)

> * generalize mechanism for accessing uploaded files in {{AbstractHandler}}

> Uploaded files can be forwarded to subsequent handlers as an attribute,

> similar to the existing special case for the {{JarUploadHandler}}. The JSON

> body can be forwarded by replacing the incoming http requests with a simple

> {{DefaultFullHttpRequest}}.

> Uploaded files will be retrievable through the {{HandlerRequest}}.

> I'm not certain if/how we can document that a handler accepts files.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (FLINK-9599) Implement generic mechanism to receive files via rest

[

https://issues.apache.org/jira/browse/FLINK-9599?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517492#comment-16517492

]

ASF GitHub Bot commented on FLINK-9599:

---

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196557260

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/HandlerRequest.java

---

@@ -39,15 +41,21 @@

public class HandlerRequest {

private final R requestBody;

+ private final FileUploads uploadedFiles;

--- End diff --

This could also be a `Collection`



[GitHub] flink pull request #6178: [FLINK-9599][rest] Implement generic mechanism to ...

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196554025

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/AbstractHandler.java

---

@@ -103,77 +104,74 @@ protected void respondAsLeader(ChannelHandlerContext ctx, RoutedRequest routedRe

return;

}

- ByteBuf msgContent = ((FullHttpRequest) httpRequest).content();

+ final ByteBuf msgContent;

+ Optional<byte[]> multipartJsonPayload = FileUploadHandler.getMultipartJsonPayload(ctx);

+ if (multipartJsonPayload.isPresent()) {

+ msgContent = Unpooled.wrappedBuffer(multipartJsonPayload.get());

--- End diff --

Let's send the JSON payload as a proper `HttpRequest`; then we don't need

this special casing here.
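
The idea can be sketched without Netty, under the assumption that the upload stage sits in front of the regular handler in a pipeline. `JsonRequest` and `UploadStage` are hypothetical stand-ins for the HTTP request object and the `FileUploadHandler`; the real code would forward a Netty request instead:

```java
import java.util.function.Consumer;

/** Hypothetical plain request carrying only a body; stands in for a full HTTP request. */
final class JsonRequest {

    final byte[] body;

    JsonRequest(byte[] body) {
        this.body = body;
    }
}

/** Hypothetical upload stage that unwraps the multipart message before forwarding. */
final class UploadStage {

    private final Consumer<JsonRequest> downstream;

    UploadStage(Consumer<JsonRequest> downstream) {
        this.downstream = downstream;
    }

    void onMultipart(byte[] jsonPart) {
        // The downstream handler receives an ordinary request and reads its body
        // as usual, so it needs no multipart-specific branch or side channel.
        downstream.accept(new JsonRequest(jsonPart));
    }
}
```

In this arrangement the downstream handler always reads the body from the request it is given, whether or not the request originally arrived as multipart.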

---

[jira] [Commented] (FLINK-9599) Implement generic mechanism to receive files via rest

[

https://issues.apache.org/jira/browse/FLINK-9599?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517481#comment-16517481

]

ASF GitHub Bot commented on FLINK-9599:

---

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196452583

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/FileUploadHandler.java

---

@@ -116,5 +136,16 @@ private void reset() {

currentHttpPostRequestDecoder.destroy();

currentHttpPostRequestDecoder = null;

currentHttpRequest = null;

+ currentUploadDir = null;

+ currentJsonPayload = null;

+ }

+

+ public static Optional<byte[]> getMultipartJsonPayload(ChannelHandlerContext ctx) {

+ return Optional.ofNullable(ctx.channel().attr(UPLOADED_JSON).get());

+ }

+

+ public static FileUploads getMultipartFileUploads(ChannelHandlerContext ctx) {

+ return Optional.ofNullable(ctx.channel().attr(UPLOADED_FILES).get())

+ .orElse(FileUploads.EMPTY);

--- End diff --

I would suggest simply returning the upload directory.
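
If only the upload directory were handed on, a consumer could enumerate the files itself. A small sketch under that assumption, using only `java.nio.file`; `UploadDirectorySketch` and `listUploadedFiles` are hypothetical names, not part of the PR:

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

final class UploadDirectorySketch {

    /** Lists the regular files found under the given upload directory, recursively. */
    static List<Path> listUploadedFiles(Path uploadDir) throws IOException {
        // Files.walk returns a lazily populated stream that must be closed.
        try (Stream<Path> paths = Files.walk(uploadDir)) {
            return paths
                .filter(Files::isRegularFile)
                .sorted()
                .collect(Collectors.toList());
        }
    }
}
```

The trade-off is that each consumer then has to walk the directory, whereas a wrapper type can do that once and also own the cleanup.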


[jira] [Commented] (FLINK-9599) Implement generic mechanism to receive files via rest

[

https://issues.apache.org/jira/browse/FLINK-9599?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517477#comment-16517477

]

ASF GitHub Bot commented on FLINK-9599:

---

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196452755

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/FileUploadHandler.java

---

@@ -95,14 +107,22 @@ protected void channelRead0(final ChannelHandlerContext ctx, final HttpObject ms

final DiskFileUpload fileUpload = (DiskFileUpload) data;

checkState(fileUpload.isCompleted());

- final Path dest = uploadDir.resolve(Paths.get(UUID.randomUUID() +

- "_" + fileUpload.getFilename()));

+ final Path dest = currentUploadDir.resolve(fileUpload.getFilename());

fileUpload.renameTo(dest.toFile());

- ctx.channel().attr(UPLOADED_FILE).set(dest);

+ } else if (data.getHttpDataType() == InterfaceHttpData.HttpDataType.Attribute) {

+ final Attribute request = (Attribute) data;

+ // this could also be implemented by using the first found Attribute as the payload

+ if (data.getName().equals(HTTP_ATTRIBUTE_REQUEST)) {

+ currentJsonPayload = request.get();

+ } else {

+ LOG.warn("Received unknown attribute {}, will be ignored.", data.getName());

--- End diff --

Should we rather fail?
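
For comparison, a fail-fast variant might look roughly like the sketch below. It models the multipart attributes as a plain map rather than Netty's `Attribute` objects, and the attribute name `"request"` is an assumption made for illustration:

```java
import java.util.Map;

final class MultipartAttributesSketch {

    // Assumed attribute name; the real constant is HTTP_ATTRIBUTE_REQUEST in the diff.
    static final String REQUEST_ATTRIBUTE = "request";

    /** Returns the JSON payload, or null if absent; rejects any other attribute. */
    static byte[] extractJsonPayload(Map<String, byte[]> attributes) {
        byte[] payload = null;
        for (Map.Entry<String, byte[]> entry : attributes.entrySet()) {
            if (REQUEST_ATTRIBUTE.equals(entry.getKey())) {
                payload = entry.getValue();
            } else {
                // Fail instead of logging a warning and silently dropping the data.
                throw new IllegalArgumentException(
                    "Received unknown attribute " + entry.getKey() + ".");
            }
        }
        return payload;
    }
}
```

Failing here would surface a malformed client request as an error response immediately, instead of as a payload that is silently missing later on.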



[GitHub] flink pull request #6178: [FLINK-9599][rest] Implement generic mechanism to ...

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196560163

--- Diff:

flink-runtime/src/test/java/org/apache/flink/runtime/rest/FileUploadHandlerTest.java

---

@@ -0,0 +1,471 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.rest;

+

+import org.apache.flink.api.common.time.Time;

+import org.apache.flink.api.java.tuple.Tuple2;

+import org.apache.flink.configuration.ConfigConstants;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.RestOptions;

+import org.apache.flink.configuration.WebOptions;

+import org.apache.flink.runtime.rest.handler.AbstractRestHandler;

+import org.apache.flink.runtime.rest.handler.FileUploads;

+import org.apache.flink.runtime.rest.handler.HandlerRequest;

+import org.apache.flink.runtime.rest.handler.RestHandlerException;

+import org.apache.flink.runtime.rest.handler.RestHandlerSpecification;

+import org.apache.flink.runtime.rest.messages.EmptyMessageParameters;

+import org.apache.flink.runtime.rest.messages.EmptyRequestBody;

+import org.apache.flink.runtime.rest.messages.EmptyResponseBody;

+import org.apache.flink.runtime.rest.messages.MessageHeaders;

+import org.apache.flink.runtime.rest.messages.RequestBody;

+import org.apache.flink.runtime.rest.util.RestMapperUtils;

+import org.apache.flink.runtime.rpc.RpcUtils;

+import org.apache.flink.runtime.webmonitor.RestfulGateway;

+import org.apache.flink.runtime.webmonitor.retriever.GatewayRetriever;

+import org.apache.flink.util.Preconditions;

+

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.annotation.JsonCreator;

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.annotation.JsonProperty;

+import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.ObjectMapper;

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelInboundHandler;

+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpResponseStatus;

+

+import okhttp3.MediaType;

+import okhttp3.MultipartBody;

+import okhttp3.OkHttpClient;

+import okhttp3.Request;

+import okhttp3.Response;

+import org.junit.AfterClass;

+import org.junit.BeforeClass;

+import org.junit.ClassRule;

+import org.junit.Test;

+import org.junit.rules.TemporaryFolder;

+

+import javax.annotation.Nonnull;

+

+import java.io.File;

+import java.io.IOException;

+import java.io.StringWriter;

+import java.nio.file.Files;

+import java.nio.file.Path;

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.List;

+import java.util.Random;

+import java.util.concurrent.CompletableFuture;

+

+import static java.util.Objects.requireNonNull;

+import static org.junit.Assert.assertArrayEquals;

+import static org.junit.Assert.assertEquals;

+import static org.mockito.Matchers.any;

+import static org.mockito.Mockito.mock;

+import static org.mockito.Mockito.when;

+

+/**

+ * Tests for the {@link FileUploadHandler}. Ensures that multipart http messages containing files and/or json are properly

+ * handled.

+ */

+public class FileUploadHandlerTest {

+

+ private static final ObjectMapper OBJECT_MAPPER = RestMapperUtils.getStrictObjectMapper();

+ private static final Random RANDOM = new Random();

+

+ @ClassRule

+ public static final TemporaryFolder TEMPORARY_FOLDER = new TemporaryFolder();

+

+ private static RestServerEndpoint serverEndpoint;

+ private static String serverAddress;

+

+ private static MultipartMixedHandler mixedHandler;

+ private static MultipartJsonHandler jsonHandler;

+ private static MultipartFileHandler fileHandler;

+ private static File file1;

+ private static File file2;

+

+ @BeforeClass

+ public static void setup() throws Exception {

+

[GitHub] flink pull request #6178: [FLINK-9599][rest] Implement generic mechanism to ...

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196555487

--- Diff: flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/FileUploads.java ---

@@ -0,0 +1,136 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.rest.handler;

+

+import org.apache.flink.util.Preconditions;

+

+import java.io.FileNotFoundException;

+import java.io.IOException;

+import java.nio.file.FileVisitResult;

+import java.nio.file.Files;

+import java.nio.file.Path;

+import java.nio.file.SimpleFileVisitor;

+import java.nio.file.attribute.BasicFileAttributes;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.Collections;

+

+/**

+ * A container for uploaded files.

+ *

+ * Implementation note: The constructor also accepts directories to ensure that the upload directories are cleaned up.

+ * For convenience during testing it also accepts files directly.

+ */

+public final class FileUploads implements AutoCloseable {

+ private final Collection<Path> directoriesToClean;

+ private final Collection<Path> uploadedFiles;

+

+ @SuppressWarnings("resource")

+ public static final FileUploads EMPTY = new FileUploads();

+

+ private FileUploads() {

+ this.directoriesToClean = Collections.emptyList();

+ this.uploadedFiles = Collections.emptyList();

+ }

+

+ public FileUploads(Collection<Path> uploadedFilesOrDirectory) throws IOException {

+ final Collection<Path> files = new ArrayList<>(4);

+ final Collection<Path> directories = new ArrayList<>(1);

+ for (Path fileOrDirectory : uploadedFilesOrDirectory) {

+ Preconditions.checkArgument(fileOrDirectory.isAbsolute(), "Path must be absolute.");

+ if (Files.isDirectory(fileOrDirectory)) {

+ directories.add(fileOrDirectory);

+ FileAdderVisitor visitor = new FileAdderVisitor();

+ Files.walkFileTree(fileOrDirectory, visitor);

+ files.addAll(visitor.get());

+ } else {

+ files.add(fileOrDirectory);

+ }

+ }

+ directoriesToClean = Collections.unmodifiableCollection(directories);

+ uploadedFiles = Collections.unmodifiableCollection(files);

+ }

+

+ public Collection<Path> getUploadedFiles() {

+ return uploadedFiles;

+ }

+

+ @Override

+ public void close() throws IOException {

+ for (Path file : uploadedFiles) {

+ try {

+ Files.delete(file);

+ } catch (FileNotFoundException ignored) {

+ // file may have been moved by a handler

+ }

+ }

+ for (Path directory : directoriesToClean) {

+ Files.walkFileTree(directory, CleanupFileVisitor.get());

+ }

+ }

+

+ private static final class FileAdderVisitor extends SimpleFileVisitor<Path> {

+

+ private final Collection<Path> files = new ArrayList<>(4);

+

+ Collection<Path> get() {

+ return files;

+ }

+

+ FileAdderVisitor() {

+ }

+

+ @Override

+ public FileVisitResult visitFile(Path file, BasicFileAttributes attrs) throws IOException {

+ FileVisitResult result = super.visitFile(file, attrs);

+ files.add(file);

+ return result;

+ }

+ }

+

+ private static final class CleanupFileVisitor extends SimpleFileVisitor<Path> {

--- End diff --

I think it would be better to make this an enum. Then we get all singleton

properties for free.
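
A minimal sketch of what the enum variant could look like, not the code from the PR. Since an enum cannot extend `SimpleFileVisitor`, this sketch implements `FileVisitor<Path>` directly; the wrapper class `EnumVisitorSketch`, the constant name `INSTANCE`, and the use of `Files.deleteIfExists` are assumptions made for illustration.

```java
import java.io.IOException;
import java.nio.file.FileVisitResult;
import java.nio.file.FileVisitor;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.BasicFileAttributes;

public class EnumVisitorSketch {

    /** Hypothetical enum-based cleanup visitor; the JVM guarantees a single instance. */
    enum CleanupFileVisitor implements FileVisitor<Path> {
        INSTANCE;

        @Override
        public FileVisitResult preVisitDirectory(Path dir, BasicFileAttributes attrs) {
            // Nothing to do before descending into a directory.
            return FileVisitResult.CONTINUE;
        }

        @Override
        public FileVisitResult visitFile(Path file, BasicFileAttributes attrs) throws IOException {
            // A handler may already have moved the file, so a missing file is not an error.
            Files.deleteIfExists(file);
            return FileVisitResult.CONTINUE;
        }

        @Override
        public FileVisitResult visitFileFailed(Path file, IOException exc) throws IOException {
            throw exc;
        }

        @Override
        public FileVisitResult postVisitDirectory(Path dir, IOException exc) throws IOException {
            if (exc != null) {
                throw exc;
            }
            // All entries have been visited and removed, so the directory is empty now.
            Files.deleteIfExists(dir);
            return FileVisitResult.CONTINUE;
        }
    }
}
```

With this shape the `get()` accessor is no longer needed; a caller would write `Files.walkFileTree(directory, CleanupFileVisitor.INSTANCE)`.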

---

[GitHub] flink pull request #6178: [FLINK-9599][rest] Implement generic mechanism to ...

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196455211

--- Diff: flink-runtime/src/main/java/org/apache/flink/runtime/rest/AbstractHandler.java ---

@@ -103,77 +104,74 @@ protected void respondAsLeader(ChannelHandlerContext ctx, RoutedRequest routedRe

return;

}

- ByteBuf msgContent = ((FullHttpRequest) httpRequest).content();

+ final ByteBuf msgContent;

+ Optional multipartJsonPayload = FileUploadHandler.getMultipartJsonPayload(ctx);

+ if (multipartJsonPayload.isPresent()) {

+ msgContent = Unpooled.wrappedBuffer(multipartJsonPayload.get());

+ } else {

+ msgContent = ((FullHttpRequest) httpRequest).content();

+ }

- R request;

- if (isFileUpload()) {

- final Path path = ctx.channel().attr(FileUploadHandler.UPLOADED_FILE).get();

- if (path == null) {

- HandlerUtils.sendErrorResponse(

- ctx,

- httpRequest,

- new ErrorResponseBody("Client did not upload a file."),

- HttpResponseStatus.BAD_REQUEST,

- responseHeaders);

- return;

- }

- //noinspection unchecked

- request = (R) new FileUpload(path);

- } else if (msgContent.capacity() == 0) {

- try {

- request = MAPPER.readValue("{}", untypedResponseMessageHeaders.getRequestClass());

- } catch (JsonParseException | JsonMappingException je) {

- log.error("Request did not conform to expected format.", je);

- HandlerUtils.sendErrorResponse(

- ctx,

- httpRequest,

- new ErrorResponseBody("Bad request received."),

- HttpResponseStatus.BAD_REQUEST,

- responseHeaders);

- return;

+ try (FileUploads uploadedFiles = FileUploadHandler.getMultipartFileUploads(ctx)) {

--- End diff --

I would obtain the upload directory from `FileUploadHandler` and simply

delete this directory after the call has been processed. We could then also

create `FileUploads` outside of the `FileUploadHandler` to instantiate a

`HandlerRequest` with it. This would also simplify the `FileUploads` class

significantly, because it would no longer be responsible for deleting the files.
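
The suggestion above can be sketched roughly as follows. This is an illustration under stated assumptions, not the PR's code: `UploadDirectorySketch`, `RequestProcessor`, `handle` and `deleteRecursively` are hypothetical names, and the `FileUploads` here is a simplified stand-in that only lists files and has no cleanup logic.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Collection;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class UploadDirectorySketch {

    /** Simplified, hypothetical FileUploads: a read-only view without any cleanup duties. */
    static final class FileUploads {
        private final Collection<Path> uploadedFiles;

        FileUploads(Path uploadDirectory) throws IOException {
            try (Stream<Path> paths = Files.walk(uploadDirectory)) {
                uploadedFiles = Collections.unmodifiableList(
                    paths.filter(Files::isRegularFile).collect(Collectors.toList()));
            }
        }

        Collection<Path> getUploadedFiles() {
            return uploadedFiles;
        }
    }

    /** Hypothetical stand-in for the handler logic that consumes the uploads. */
    interface RequestProcessor {
        void process(FileUploads uploads) throws IOException;
    }

    /** The caller owns the upload directory and removes it once the call is processed. */
    static void handle(Path uploadDirectory, RequestProcessor processor) throws IOException {
        try {
            processor.process(new FileUploads(uploadDirectory));
        } finally {
            deleteRecursively(uploadDirectory);
        }
    }

    static void deleteRecursively(Path directory) throws IOException {
        if (!Files.exists(directory)) {
            return;
        }
        try (Stream<Path> paths = Files.walk(directory)) {
            // Reverse order puts children before their parent directories.
            List<Path> deepestFirst = paths.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
            for (Path path : deepestFirst) {
                Files.deleteIfExists(path);
            }
        }
    }
}
```

The design point is that cleanup lives in one place, the code that owns the directory, so `FileUploads` no longer needs to implement `AutoCloseable` or track directories separately from files.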

---

[jira] [Commented] (FLINK-9599) Implement generic mechanism to receive files via rest

[

https://issues.apache.org/jira/browse/FLINK-9599?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16517478#comment-16517478

]

ASF GitHub Bot commented on FLINK-9599:

---

Github user tillrohrmann commented on a diff in the pull request:

https://github.com/apache/flink/pull/6178#discussion_r196453235

--- Diff: flink-runtime/src/main/java/org/apache/flink/runtime/rest/FileUploadHandler.java ---

@@ -95,14 +107,22 @@ protected void channelRead0(final ChannelHandlerContext ctx, final HttpObject ms

final DiskFileUpload fileUpload = (DiskFileUpload) data;

checkState(fileUpload.isCompleted());

- final Path dest =