[jira] [Updated] (FLINK-13962) Task state handles leak if the task fails before deploying

[ https://issues.apache.org/jira/browse/FLINK-13962?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Zhu Zhu updated FLINK-13962:

Description:
Currently the taskRestore field of an _Execution_ is reset to null in task deployment stage.
The purpose of it is "allows the JobManagerTaskRestore instance to be garbage collected. Furthermore, it won't be archived along with the Execution in the ExecutionVertex in case of a restart. This is especially important when setting state.backend.fs.memory-threshold to larger values because every state below this threshold will be stored in the meta state files and, thus, also the JobManagerTaskRestore instances." (From FLINK-9693)

However, if a task fails before it comes to the deployment stage (e.g. fails due to slot allocation timeout), the _taskRestore_ field will remain non-null and will be archived in prior executions.
This may result in large JM heap cost in certain cases and lead to continuous JM full GCs.

I’d propose to set the _taskRestore_ field to be null before moving an _Execution_ to prior executions.
We may keep the logic which sets the _taskRestore_ field to be null after task deployment which allows it to be GC'ed earlier in normal cases.

was:
Currently the taskRestore field of an _Execution_ is reset to null in task deployment stage.
The purpose of it is "allows the JobManagerTaskRestore instance to be garbage collected. Furthermore, it won't be archived along with the Execution in the ExecutionVertex in case of a restart. This is especially important when setting state.backend.fs.memory-threshold to larger values because every state below this threshold will be stored in the meta state files and, thus, also the JobManagerTaskRestore instances." (From FLINK-9693)

However, if a task fails before it comes to the deployment stage (e.g. fails due to slot allocation timeout), the _taskRestore_ field will remain non-null and will be archived in prior executions.
This may result in large JM heap cost in certain cases and lead to continuous JM full GCs.

I’d propose to set the _taskRestore_ field to be null before moving an _Execution_ to prior executions.
We may keep the logic which sets the _taskRestore_ field to be null after task deployment to allow GC of it in normal cases.

> Task state handles leak if the task fails before deploying
> --
>
> Key: FLINK-13962
> URL: https://issues.apache.org/jira/browse/FLINK-13962
> Project: Flink
> Issue Type: Bug
> Components: Runtime / Coordination
> Affects Versions: 1.9.0, 1.10.0
> Reporter: Zhu Zhu
> Priority: Major
>
> Currently the taskRestore field of an _Execution_ is reset to null in task
> deployment stage.
> The purpose of it is "allows the JobManagerTaskRestore instance to be garbage
> collected. Furthermore, it won't be archived along with the Execution in the
> ExecutionVertex in case of a restart. This is especially important when
> setting state.backend.fs.memory-threshold to larger values because every
> state below this threshold will be stored in the meta state files and, thus,
> also the JobManagerTaskRestore instances." (From FLINK-9693)
>
> However, if a task fails before it comes to the deployment stage (e.g. fails
> due to slot allocation timeout), the _taskRestore_ field will remain non-null
> and will be archived in prior executions.
> This may result in large JM heap cost in certain cases and lead to continuous
> JM full GCs.
>
> I’d propose to set the _taskRestore_ field to be null before moving an
> _Execution_ to prior executions.
> We may keep the logic which sets the _taskRestore_ field to be null after
> task deployment which allows it to be GC'ed earlier in normal cases.

--
This message was sent by Atlassian Jira
(v8.3.2#803003)
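The proposal in the description above can be illustrated with a small, self-contained sketch. It is not Flink's code: the classes `TaskRestore`, `Execution`, and `ExecutionVertex` below are simplified, hypothetical stand-ins, and the method names `clearTaskRestore`, `resetForNewExecution`, and `leakedRestoreCount` are assumptions made for illustration. The sketch only shows the idea of dropping the restore reference before an execution is archived, while keeping the existing reset on deployment.

```java
import java.util.ArrayList;
import java.util.List;

public class TaskRestoreLeakSketch {

    /** Hypothetical stand-in for JobManagerTaskRestore: carries (possibly large) state handles. */
    static final class TaskRestore {
        final byte[] inlinedState;

        TaskRestore(byte[] inlinedState) {
            this.inlinedState = inlinedState;
        }
    }

    /** Hypothetical stand-in for Execution. */
    static final class Execution {
        private TaskRestore taskRestore;

        Execution(TaskRestore taskRestore) {
            this.taskRestore = taskRestore;
        }

        /** Existing behavior described in the issue: the field is reset once the task is deployed. */
        void deploy() {
            taskRestore = null;
        }

        /** Proposed addition (assumed name): release the restore data explicitly. */
        void clearTaskRestore() {
            taskRestore = null;
        }

        boolean holdsTaskRestore() {
            return taskRestore != null;
        }
    }

    /** Hypothetical stand-in for ExecutionVertex, which archives prior executions on restart. */
    static final class ExecutionVertex {
        private final List<Execution> priorExecutions = new ArrayList<>();
        private Execution currentExecution;

        ExecutionVertex(Execution first) {
            this.currentExecution = first;
        }

        /** Archives the current attempt; the restore data is dropped first (the proposed fix). */
        void resetForNewExecution(TaskRestore restoreForNextAttempt) {
            currentExecution.clearTaskRestore();
            priorExecutions.add(currentExecution);
            currentExecution = new Execution(restoreForNextAttempt);
        }

        /** Counts archived executions that still reference restore data. */
        long leakedRestoreCount() {
            return priorExecutions.stream().filter(Execution::holdsTaskRestore).count();
        }
    }

    public static void main(String[] args) {
        // The first attempt fails before deploy() is ever called, e.g. on a slot allocation timeout.
        ExecutionVertex vertex = new ExecutionVertex(new Execution(new TaskRestore(new byte[1024])));
        vertex.resetForNewExecution(new TaskRestore(new byte[1024]));
        System.out.println("archived executions still holding restore data: " + vertex.leakedRestoreCount());
    }
}
```

Without the `clearTaskRestore()` call in `resetForNewExecution`, the archived first attempt in this sketch would keep its `TaskRestore` reachable, which mirrors the heap growth the issue describes.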

[GitHub] [flink] flinkbot edited a comment on issue #9608: [FLINK-13776][table] Introduce new interfaces to BuiltInFunctionDefinition

flinkbot edited a comment on issue #9608: [FLINK-13776][table] Introduce new interfaces to BuiltInFunctionDefinition
URL: https://github.com/apache/flink/pull/9608#issuecomment-527810896

## CI report:

* 44ce459cb0c516fd5fb0d0b7aa775231d0f572a9 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125858277)
* a79f328a66d7903ce7fe9c12af57380ca4e1cbdd : SUCCESS [Build](https://travis-ci.com/flink-ci/flink/builds/125860413)
* 3688d1b32dea330de02065b4a3698855d29dec2f : SUCCESS [Build](https://travis-ci.com/flink-ci/flink/builds/125994583)

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

With regards,
Apache Git Services

[GitHub] [flink] flinkbot edited a comment on issue #9607: [FLINK-13946] Remove job session related code from ExecutionEnvironment

flinkbot edited a comment on issue #9607: [FLINK-13946] Remove job session related code from ExecutionEnvironment
URL: https://github.com/apache/flink/pull/9607#issuecomment-527790327

## CI report:

* 0c2c5cdcc1267a49ef8df775e0e2ee46a5249487 : SUCCESS [Build](https://travis-ci.com/flink-ci/flink/builds/125850583)
* 9c152ea5c40ef923f9f9cf8436d88b1db9af668a : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125884207)
* 833e15cfdd2c32ed626bbd35370b69d1f4f8aad7 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125943944)
* 5d0397bed767e8f4e0de48e97295670cb5e3 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125948178)
* d256fa7db82ff098fe03568b6ae6608812bfbfb9 : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125956643)
* 3814699a2cc3ce37d034988794bfdbf1d9f5aec7 : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125997236)

[jira] [Comment Edited] (FLINK-13516) YARNSessionFIFOSecuredITCase fails on Java 11

[ https://issues.apache.org/jira/browse/FLINK-13516?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16923044#comment-16923044 ]

Haibo Sun edited comment on FLINK-13516 at 9/5/19 4:58 AM:

---

The test fails because authentication fails when the YARN client requests
access authorization from the resource manager, and the subsequent retries
lead to a test timeout. Java 11 added the new Kerberos 5 encryption types
aes128-cts-hmac-sha256-128 and aes256-cts-hmac-sha384-192 and enabled them by
default, while the current version of MiniKdc used by Flink does not support
these encryption types and does not work well when they are enabled, which
results in the authentication failure.

Error Log:
{{DEBUG org.apache.hadoop.security.UserGroupInformation -
PrivilegedActionException as:hadoop/localh...@example.com (auth:KERBEROS)
cause:javax.security.sasl.SaslException: GSS initiate failed [Caused by
GSSException: No valid credentials provided (Mechanism level: Message stream
modified (41) - Message stream modified)]}}

There are two ways to fix this issue. One is to add a configuration template
named "minikdc-krb5.conf" to the test resource directory and explicitly set
default_tkt_enctypes and default_tgs_enctypes to aes128-cts-hmac-sha1-96 in
the template file. The other is to bump MiniKdc to the latest version, 3.2.0
(I tested that this version has solved this problem).

I've tested both solutions on my local machine, and all tests that depend on
MiniKdc work well on Java 8 and Java 11. Given that the version of MiniKdc will
be updated sooner or later, I suggest using the second solution if it runs
successfully on Travis. [~Zentol], what do you think?

was (Author: sunhaibotb):

The failure of the case is due to the failure of authentication when the yarn

client requests access authorization of resource manager, and subsequent

retries lead to test timeout. New encryption types of

aes128-cts-hmac-sha256-128 and aes256-cts-hmac-sha384-192 (for Kerberos 5)

enabled by default were added in Java 11, while the current version of MiniKdc

used by Flink does not support these encryption types and does not work well

when these encryption types are enabled, which results in the authentication

failure.

{{DEBUG org.apache.hadoop.security.UserGroupInformation -

PrivilegedActionException as:hadoop/localh...@example.com (auth:KERBEROS)

cause:javax.security.sasl.SaslException: GSS initiate failed [Caused by

GSSException: No valid credentials provided (Mechanism level: Message stream

modified (41) - Message stream modified)]}}

There are two solutions to fix this issue, one is to add a configuration

template named "minikdc-krb5.conf" in the test resource directory, and

explicitly set default_tkt_enctypes and default_tgs_enctypes to use

aes128-cts-hmac-sha1-96 in the template file, the other is to bump MiniKdc to

the latest version 3.2.0 (I tested that this version has solved this problem).

I've tested both solutions on my local machine, and all tests that depend on

MiniKdc work well on Java 8 and Java 11. Given that the version of minikdc will

be updated sooner or later, if it runs successfully on Travis, I suggest to

use the second solution. [~Zentol], what do you think?

> YARNSessionFIFOSecuredITCase fails on Java 11
> -
>
> Key: FLINK-13516
> URL: https://issues.apache.org/jira/browse/FLINK-13516
> Project: Flink
> Issue Type: Sub-task
> Components: Deployment / YARN, Tests
> Reporter: Chesnay Schepler
> Assignee: Haibo Sun
> Priority: Major
> Fix For: 1.10.0
>
>
> {{YARNSessionFIFOSecuredITCase#testDetachedMode}} times out when run on Java
> 11. This may be related to security changes in Java 11.
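The first option from the comment above (pinning the encryption types in a krb5 configuration template) can be sketched as follows. This is only an illustration under stated assumptions: the helper `libdefaults`, the class name, and the realm `EXAMPLE.COM` are hypothetical, and the real layout of the "minikdc-krb5.conf" template is not shown in this thread. Only the keys `default_tkt_enctypes` and `default_tgs_enctypes` and the value `aes128-cts-hmac-sha1-96` come from the comment.

```java
import java.util.Arrays;
import java.util.List;

public class Krb5EnctypesSketch {

    /**
     * Renders a [libdefaults] fragment that restricts ticket encryption types.
     * Hypothetical helper; it does not reproduce MiniKdc's actual template.
     */
    static String libdefaults(String realm, List<String> enctypes) {
        String joined = String.join(" ", enctypes);
        return String.join("\n",
                "[libdefaults]",
                "    default_realm = " + realm,
                "    default_tkt_enctypes = " + joined,
                "    default_tgs_enctypes = " + joined,
                "");
    }

    public static void main(String[] args) {
        // Pin the enctype named in the comment so the Java 11 defaults are not used.
        System.out.print(libdefaults("EXAMPLE.COM", Arrays.asList("aes128-cts-hmac-sha1-96")));
    }
}
```

The second option, bumping MiniKdc to 3.2.0, needs no such template change, which is part of why the comment prefers it.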


[jira] [Comment Edited] (FLINK-13516) YARNSessionFIFOSecuredITCase fails on Java 11

[ https://issues.apache.org/jira/browse/FLINK-13516?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16923044#comment-16923044 ]

Haibo Sun edited comment on FLINK-13516 at 9/5/19 4:58 AM:

---

The test fails because authentication fails when the YARN client requests
access authorization from the resource manager, and the subsequent retries
lead to a test timeout. Java 11 added the new Kerberos 5 encryption types
aes128-cts-hmac-sha256-128 and aes256-cts-hmac-sha384-192 and enabled them by
default, while the current version of MiniKdc used by Flink does not support
these encryption types and does not work well when they are enabled, which
results in the authentication failure.

{{DEBUG org.apache.hadoop.security.UserGroupInformation -
PrivilegedActionException as:hadoop/localh...@example.com (auth:KERBEROS)
cause:javax.security.sasl.SaslException: GSS initiate failed [Caused by
GSSException: No valid credentials provided (Mechanism level: Message stream
modified (41) - Message stream modified)]}}

There are two ways to fix this issue. One is to add a configuration template
named "minikdc-krb5.conf" to the test resource directory and explicitly set
default_tkt_enctypes and default_tgs_enctypes to aes128-cts-hmac-sha1-96 in
the template file. The other is to bump MiniKdc to the latest version, 3.2.0
(I tested that this version has solved this problem).

I've tested both solutions on my local machine, and all tests that depend on
MiniKdc work well on Java 8 and Java 11. Given that the version of MiniKdc will
be updated sooner or later, I suggest using the second solution if it runs
successfully on Travis. [~Zentol], what do you think?

was (Author: sunhaibotb):

The failure of the case is due to the failure of authentication when the yarn

client requests access authorization of resource manager, and subsequent

retries lead to test timeout. New encryption types of

aes128-cts-hmac-sha256-128 and aes256-cts-hmac-sha384-192 (for Kerberos 5)

enabled by default were added in Java 11, while the current version of MiniKdc

used by Flink does not support these encryption types and does not work well

when these encryption types are enabled, which results in the authentication

failure.

There are two solutions to fix this issue, one is to add a configuration

template named "minikdc-krb5.conf" in the test resource directory, and

explicitly set default_tkt_enctypes and default_tgs_enctypes to use

aes128-cts-hmac-sha1-96 in the template file, the other is to bump MiniKdc to

the latest version 3.2.0 (I tested that this version has solved this problem).

I've tested both solutions on my local machine, and all tests that depend on

MiniKdc work well on Java 8 and Java 11. Considering that the version of

MiniKdc will be updated sooner or later, I suggest to use the second solution.

[~Zentol], what do you think?

> YARNSessionFIFOSecuredITCase fails on Java 11
> -
>
> Key: FLINK-13516
> URL: https://issues.apache.org/jira/browse/FLINK-13516
> Project: Flink
> Issue Type: Sub-task
> Components: Deployment / YARN, Tests
> Reporter: Chesnay Schepler
> Assignee: Haibo Sun
> Priority: Major
> Fix For: 1.10.0
>
>
> {{YARNSessionFIFOSecuredITCase#testDetachedMode}} times out when run on Java
> 11. This may be related to security changes in Java 11.


[GitHub] [flink] flinkbot edited a comment on issue #9607: [FLINK-13946] Remove job session related code from ExecutionEnvironment

flinkbot edited a comment on issue #9607: [FLINK-13946] Remove job session related code from ExecutionEnvironment
URL: https://github.com/apache/flink/pull/9607#issuecomment-527790327

## CI report:

* 0c2c5cdcc1267a49ef8df775e0e2ee46a5249487 : SUCCESS [Build](https://travis-ci.com/flink-ci/flink/builds/125850583)
* 9c152ea5c40ef923f9f9cf8436d88b1db9af668a : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125884207)
* 833e15cfdd2c32ed626bbd35370b69d1f4f8aad7 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125943944)
* 5d0397bed767e8f4e0de48e97295670cb5e3 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125948178)
* d256fa7db82ff098fe03568b6ae6608812bfbfb9 : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125956643)
* 3814699a2cc3ce37d034988794bfdbf1d9f5aec7 : UNKNOWN

[jira] [Comment Edited] (FLINK-13516) YARNSessionFIFOSecuredITCase fails on Java 11

[ https://issues.apache.org/jira/browse/FLINK-13516?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16923044#comment-16923044 ]

Haibo Sun edited comment on FLINK-13516 at 9/5/19 4:43 AM:

---

The test fails because authentication fails when the YARN client requests
access authorization from the resource manager, and the subsequent retries
lead to a test timeout. Java 11 added the new Kerberos 5 encryption types
aes128-cts-hmac-sha256-128 and aes256-cts-hmac-sha384-192 and enabled them by
default, while the current version of MiniKdc used by Flink does not support
these encryption types and does not work well when they are enabled, which
results in the authentication failure.

There are two ways to fix this issue. One is to add a configuration template
named "minikdc-krb5.conf" to the test resource directory and explicitly set
default_tkt_enctypes and default_tgs_enctypes to aes128-cts-hmac-sha1-96 in
the template file. The other is to bump MiniKdc to the latest version, 3.2.0
(I tested that this version has solved this problem).

I've tested both solutions on my local machine, and all tests that depend on
MiniKdc work well on Java 8 and Java 11. Considering that the version of
MiniKdc will be updated sooner or later, I suggest using the second solution.
[~Zentol], what do you think?

was (Author: sunhaibotb):

The failure of the case is due to the failure of authentication when the yarn

client requests access authorization of resource manager, and subsequent

retries lead to test timeout. New encryption types of

aes128-cts-hmac-sha256-128 and aes256-cts-hmac-sha384-192 (for Kerberos 5)

enabled by default were added in Java 11, while the current version of MiniKdc

used by Flink does not support these encryption types and does not work well

when these encryption types are enabled, which results in the authentication

failure. There are two solutions to fix this issue, one is to add a

configuration template named "minikdc-krb5.conf" in the test resource

directory, and explicitly set default_tkt_enctypes and default_tgs_enctypes to

use aes128-cts-hmac-sha1-96 in the template file, the other is to bump MiniKdc

to the latest version 3.2.0 (I tested that this version has solved this

problem). I've tested both solutions on my local machine, and all tests that

depend on MiniKdc work well on Java 8 and Java 11. Considering that the version

of MiniKdc will be updated sooner or later, I suggest to use the second

solution. [~Zentol], what do you think?

> YARNSessionFIFOSecuredITCase fails on Java 11
> -
>
> Key: FLINK-13516
> URL: https://issues.apache.org/jira/browse/FLINK-13516
> Project: Flink
> Issue Type: Sub-task
> Components: Deployment / YARN, Tests
> Reporter: Chesnay Schepler
> Assignee: Haibo Sun
> Priority: Major
> Fix For: 1.10.0
>
>
> {{YARNSessionFIFOSecuredITCase#testDetachedMode}} times out when run on Java
> 11. This may be related to security changes in Java 11.


[jira] [Commented] (FLINK-13516) YARNSessionFIFOSecuredITCase fails on Java 11

[ https://issues.apache.org/jira/browse/FLINK-13516?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16923044#comment-16923044 ]

Haibo Sun commented on FLINK-13516:

---

The test fails because authentication fails when the YARN client requests
access authorization from the resource manager, and the subsequent retries
lead to a test timeout. Java 11 added the new Kerberos 5 encryption types
aes128-cts-hmac-sha256-128 and aes256-cts-hmac-sha384-192 and enabled them by
default, while the current version of MiniKdc used by Flink does not support
these encryption types and does not work well when they are enabled, which
results in the authentication failure. There are two ways to fix this issue.
One is to add a configuration template named "minikdc-krb5.conf" to the test
resource directory and explicitly set default_tkt_enctypes and
default_tgs_enctypes to aes128-cts-hmac-sha1-96 in the template file. The
other is to bump MiniKdc to the latest version, 3.2.0 (I tested that this
version has solved this problem). I've tested both solutions on my local
machine, and all tests that depend on MiniKdc work well on Java 8 and Java 11.
Considering that the version of MiniKdc will be updated sooner or later, I
suggest using the second solution. [~Zentol], what do you think?

> YARNSessionFIFOSecuredITCase fails on Java 11
> -
>
> Key: FLINK-13516
> URL: https://issues.apache.org/jira/browse/FLINK-13516
> Project: Flink
> Issue Type: Sub-task
> Components: Deployment / YARN, Tests
> Reporter: Chesnay Schepler
> Assignee: Haibo Sun
> Priority: Major
> Fix For: 1.10.0
>
>
> {{YARNSessionFIFOSecuredITCase#testDetachedMode}} times out when run on Java
> 11. This may be related to security changes in Java 11.


[GitHub] [flink] flinkbot edited a comment on issue #9608: [FLINK-13776][table] Introduce new interfaces to BuiltInFunctionDefinition

flinkbot edited a comment on issue #9608: [FLINK-13776][table] Introduce new interfaces to BuiltInFunctionDefinition
URL: https://github.com/apache/flink/pull/9608#issuecomment-527810896

## CI report:

* 44ce459cb0c516fd5fb0d0b7aa775231d0f572a9 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125858277)
* a79f328a66d7903ce7fe9c12af57380ca4e1cbdd : SUCCESS [Build](https://travis-ci.com/flink-ci/flink/builds/125860413)
* 3688d1b32dea330de02065b4a3698855d29dec2f : PENDING [Build](https://travis-ci.com/flink-ci/flink/builds/125994583)

[GitHub] [flink] flinkbot edited a comment on issue #9608: [FLINK-13776][table] Introduce new interfaces to BuiltInFunctionDefinition

flinkbot edited a comment on issue #9608: [FLINK-13776][table] Introduce new interfaces to BuiltInFunctionDefinition
URL: https://github.com/apache/flink/pull/9608#issuecomment-527810896

## CI report:

* 44ce459cb0c516fd5fb0d0b7aa775231d0f572a9 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125858277)
* a79f328a66d7903ce7fe9c12af57380ca4e1cbdd : SUCCESS [Build](https://travis-ci.com/flink-ci/flink/builds/125860413)
* 3688d1b32dea330de02065b4a3698855d29dec2f : UNKNOWN

[jira] [Commented] (FLINK-13965) Keep hasDeprecatedKeys in ConfigOption and mark it with @Deprecated annotation

[ https://issues.apache.org/jira/browse/FLINK-13965?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16923029#comment-16923029 ]

vinoyang commented on FLINK-13965:

--

[~till.rohrmann] and [~Zentol] WDYT?

> Keep hasDeprecatedKeys in ConfigOption and mark it with @Deprecated annotation
> --
>
> Key: FLINK-13965
> URL: https://issues.apache.org/jira/browse/FLINK-13965
> Project: Flink
> Issue Type: Test
> Affects Versions: 1.9.0
> Reporter: vinoyang
> Priority: Major
>
> In our program based on Flink 1.7.2, we used the method
> {{ConfigOption#hasDeprecatedKeys}}. However, this method was renamed to
> {{hasFallbackKeys}} in FLINK-10436, so after we bumped our Flink version to
> 1.9.0, we got a compile error.
>
> It seems the deprecated key was replaced with an entity {{FallbackKey}}.
> However, I still see the method {{withDeprecatedKeys}}. Since we keep the
> method {{withDeprecatedKeys}}, why not also keep the method
> {{hasDeprecatedKeys}}? Although this API is not marked with the {{Public}}
> annotation, IMHO, since {{ConfigOption}} is hosted in the flink-core module
> and many users use it, we should maintain compatibility as far as possible.


[jira] [Created] (FLINK-13965) Keep hasDeprecatedKeys in ConfigOption and mark it with @Deprecated annotation

vinoyang created FLINK-13965:

Summary: Keep hasDeprecatedKeys in ConfigOption and mark it with @Deprecated annotation
Key: FLINK-13965
URL: https://issues.apache.org/jira/browse/FLINK-13965
Project: Flink
Issue Type: Test
Affects Versions: 1.9.0
Reporter: vinoyang

In our program based on Flink 1.7.2, we used the method
{{ConfigOption#hasDeprecatedKeys}}. However, this method was renamed to
{{hasFallbackKeys}} in FLINK-10436, so after we bumped our Flink version to
1.9.0, we got a compile error.

It seems the deprecated key was replaced with an entity {{FallbackKey}}.
However, I still see the method {{withDeprecatedKeys}}. Since we keep the
method {{withDeprecatedKeys}}, why not also keep the method
{{hasDeprecatedKeys}}? Although this API is not marked with the {{Public}}
annotation, IMHO, since {{ConfigOption}} is hosted in the flink-core module
and many users use it, we should maintain compatibility as far as possible.
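The request above amounts to keeping the old method name as a deprecated alias. A minimal sketch of that pattern follows. The class `Option` is a simplified, hypothetical stand-in rather than Flink's `ConfigOption`, the keys `new.key` and `old.key` are made up, and having `hasDeprecatedKeys` delegate directly to `hasFallbackKeys` is an assumption for illustration; the actual semantics would be for the Flink maintainers to decide.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class ConfigOptionCompatSketch {

    /** Simplified, hypothetical stand-in for ConfigOption. */
    static final class Option {
        private final String key;
        private final List<String> fallbackKeys;

        Option(String key) {
            this(key, Collections.<String>emptyList());
        }

        private Option(String key, List<String> fallbackKeys) {
            this.key = key;
            this.fallbackKeys = fallbackKeys;
        }

        /** Returns a copy of this option that also accepts the given older keys. */
        Option withDeprecatedKeys(String... deprecatedKeys) {
            return new Option(key, Arrays.asList(deprecatedKeys));
        }

        /** The new method name mentioned in the issue. */
        boolean hasFallbackKeys() {
            return !fallbackKeys.isEmpty();
        }

        /**
         * Old method name kept so existing callers still compile.
         *
         * @deprecated use {@link #hasFallbackKeys()} instead.
         */
        @Deprecated
        boolean hasDeprecatedKeys() {
            return hasFallbackKeys();
        }
    }

    public static void main(String[] args) {
        Option option = new Option("new.key").withDeprecatedKeys("old.key");
        // Code written against the old name keeps compiling; the compiler only warns.
        System.out.println(option.hasDeprecatedKeys());
    }
}
```

Callers outside the class get a deprecation warning instead of a compile error, which gives them a release cycle to migrate.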


[jira] [Updated] (FLINK-13965) Keep hasDeprecatedKeys in ConfigOption and mark it with @Deprecated annotation

[ https://issues.apache.org/jira/browse/FLINK-13965?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

vinoyang updated FLINK-13965:

-

Description:
In our program based on Flink 1.7.2, we used the method
{{ConfigOption#hasDeprecatedKeys}}. However, this method was renamed to
{{hasFallbackKeys}} in FLINK-10436, so after we bumped our Flink version to
1.9.0, we got a compile error.

It seems the deprecated key was replaced with an entity {{FallbackKey}}.
However, I still see the method {{withDeprecatedKeys}}. Since we keep the
method {{withDeprecatedKeys}}, why not also keep the method
{{hasDeprecatedKeys}}? Although this API is not marked with the {{Public}}
annotation, IMHO, since {{ConfigOption}} is hosted in the flink-core module
and many users use it, we should maintain compatibility as far as possible.

was:

In our program based on Flink 1.7.2, we used method

{{ConfigOption#hasDeprecatedKeys}}. But, this method was renamed to

{{hasFallbackKeys }} in FLINK-10436. So after we bump our flink version to

1.9.0, we meet compile error.

It seems we replaced the deprecated key with an entity {{FallbackKey}}.

However, I still see the method {{withDeprecatedKeys}}. Since we keep the

method {{withDeprecatedKeys}}, why not keep the method {{hasDeprecatedKeys}}.

Although, this public API did not marked as {{Public}} annotation. IMHO,

{{ConfigOption}} is hosted in flink-core module, many users also use it, we

should maintain the compatibility as far as possible.

> Keep hasDeprecatedKeys in ConfigOption and mark it with @Deprecated annotation
> --
>
> Key: FLINK-13965
> URL: https://issues.apache.org/jira/browse/FLINK-13965
> Project: Flink
> Issue Type: Test
> Affects Versions: 1.9.0
> Reporter: vinoyang
> Priority: Major
>
> In our program based on Flink 1.7.2, we used the method
> {{ConfigOption#hasDeprecatedKeys}}. However, this method was renamed to
> {{hasFallbackKeys}} in FLINK-10436, so after we bumped our Flink version to
> 1.9.0, we got a compile error.
>
> It seems the deprecated key was replaced with an entity {{FallbackKey}}.
> However, I still see the method {{withDeprecatedKeys}}. Since we keep the
> method {{withDeprecatedKeys}}, why not also keep the method
> {{hasDeprecatedKeys}}? Although this API is not marked with the {{Public}}
> annotation, IMHO, since {{ConfigOption}} is hosted in the flink-core module
> and many users use it, we should maintain compatibility as far as possible.


[jira] [Updated] (FLINK-13962) Task state handles leak if the task fails before deploying

[ https://issues.apache.org/jira/browse/FLINK-13962?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Zhu Zhu updated FLINK-13962:

Description:
Currently the taskRestore field of an _Execution_ is reset to null in task deployment stage.
The purpose of it is "allows the JobManagerTaskRestore instance to be garbage collected. Furthermore, it won't be archived along with the Execution in the ExecutionVertex in case of a restart. This is especially important when setting state.backend.fs.memory-threshold to larger values because every state below this threshold will be stored in the meta state files and, thus, also the JobManagerTaskRestore instances." (From FLINK-9693)

However, if a task fails before it comes to the deployment stage (e.g. fails due to slot allocation timeout), the _taskRestore_ field will remain non-null and will be archived in prior executions.
This may result in large JM heap cost in certain cases and lead to continuous JM full GCs.

I’d propose to set the _taskRestore_ field to be null before moving an _Execution_ to prior executions.
We may keep the logic which sets the _taskRestore_ field to be null after task deployment to allow GC of it in normal cases.

was:
Currently Execution#taskRestore is reset to null in task deployment stage.
The purpose of it is "allows the JobManagerTaskRestore instance to be garbage collected. Furthermore, it won't be archived along with the Execution in the ExecutionVertex in case of a restart. This is especially important when setting state.backend.fs.memory-threshold to larger values because every state below this threshold will be stored in the meta state files and, thus, also the JobManagerTaskRestore instances." (From FLINK-9693)

However, if a task fails before it comes to the deployment stage (e.g. fails due to slot allocation timeout), the Execution#taskRestore will remain non-null and will be archived in prior executions.
This may result in large JM heap cost in certain cases and lead to continuous JM full GCs.

I’d propose to set the taskRestore field to be null before moving an execution to prior executions.
We can keep the logic which set the taskRestore field to be null after task deployment to allow the

> Task state handles leak if the task fails before deploying
> --
>
> Key: FLINK-13962
> URL: https://issues.apache.org/jira/browse/FLINK-13962
> Project: Flink
> Issue Type: Bug
> Components: Runtime / Coordination
> Affects Versions: 1.9.0, 1.10.0
> Reporter: Zhu Zhu
> Priority: Major
>
> Currently the taskRestore field of an _Execution_ is reset to null in task
> deployment stage.
> The purpose of it is "allows the JobManagerTaskRestore instance to be garbage
> collected. Furthermore, it won't be archived along with the Execution in the
> ExecutionVertex in case of a restart. This is especially important when
> setting state.backend.fs.memory-threshold to larger values because every
> state below this threshold will be stored in the meta state files and, thus,
> also the JobManagerTaskRestore instances." (From FLINK-9693)
>
> However, if a task fails before it comes to the deployment stage (e.g. fails
> due to slot allocation timeout), the _taskRestore_ field will remain non-null
> and will be archived in prior executions.
> This may result in large JM heap cost in certain cases and lead to continuous
> JM full GCs.
>
> I’d propose to set the _taskRestore_ field to be null before moving an
> _Execution_ to prior executions.
> We may keep the logic which sets the _taskRestore_ field to be null after
> task deployment to allow GC of it in normal cases.

[jira] [Updated] (FLINK-13962) Task state handles leak if the task fails before deploying

[ https://issues.apache.org/jira/browse/FLINK-13962?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Zhu Zhu updated FLINK-13962:

Summary: Task state handles leak if the task fails before deploying (was: Execution#taskRestore leaks if task fails before deploying)

> Task state handles leak if the task fails before deploying
> --
>
> Key: FLINK-13962
> URL: https://issues.apache.org/jira/browse/FLINK-13962
> Project: Flink
> Issue Type: Bug
> Components: Runtime / Coordination
> Affects Versions: 1.9.0, 1.10.0
> Reporter: Zhu Zhu
> Priority: Major
>
> Currently Execution#taskRestore is reset to null in task deployment stage.
> The purpose of it is "allows the JobManagerTaskRestore instance to be garbage
> collected. Furthermore, it won't be archived along with the Execution in the
> ExecutionVertex in case of a restart. This is especially important when
> setting state.backend.fs.memory-threshold to larger values because every
> state below this threshold will be stored in the meta state files and, thus,
> also the JobManagerTaskRestore instances." (From FLINK-9693)
>
> However, if a task fails before it comes to the deployment stage (e.g. fails
> due to slot allocation timeout), the Execution#taskRestore will remain
> non-null and will be archived in prior executions.
> This may result in large JM heap cost in certain cases and lead to continuous
> JM full GCs.
>
> I’d propose to set the taskRestore field to be null before moving an
> execution to prior executions.
> We can keep the logic which set the taskRestore field to be null after task
> deployment to allow the

[jira] [Updated] (FLINK-13962) Execution#taskRestore leaks if task fails before deploying

[ https://issues.apache.org/jira/browse/FLINK-13962?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Zhu Zhu updated FLINK-13962: Description: Currently Execution#taskRestore is reset to null in task deployment stage. The purpose of it is "allows the JobManagerTaskRestore instance to be garbage collected. Furthermore, it won't be archived along with the Execution in the ExecutionVertex in case of a restart. This is especially important when setting state.backend.fs.memory-threshold to larger values because every state below this threshold will be stored in the meta state files and, thus, also the JobManagerTaskRestore instances." (From FLINK-9693) However, if a task fails before it comes to the deployment stage(e.g. fails due to slot allocation timeout), the Execution#taskRestore will remain non-null and will be archived in prior executions. This may result in large JM heap cost in certain cases and lead to continuous JM full GCs. I’d propose to set the taskRestore field to be null before moving an execution to prior executions. We can keep the logic which set the taskRestore field to be null after task deployment to allow the was: Currently Execution#taskRestore is reset to null in task deployment stage. The purpose of it is "allows the JobManagerTaskRestore instance to be garbage collected. Furthermore, it won't be archived along with the Execution in the ExecutionVertex in case of a restart. This is especially important when setting state.backend.fs.memory-threshold to larger values because every state below this threshold will be stored in the meta state files and, thus, also the JobManagerTaskRestore instances." (From FLINK-9693) However, if a task fails before it comes to the deployment stage(e.g. fails due to slot allocation timeout), the Execution#taskRestore will remain non-null and will be archived in prior executions. This may result in large JM heap cost in certain cases. 
I think we should check the Execution#taskRestore and make sure it is null when moving a execution to prior executions. > Execution#taskRestore leaks if task fails before deploying > -- > > Key: FLINK-13962 > URL: https://issues.apache.org/jira/browse/FLINK-13962 > Project: Flink > Issue Type: Bug > Components: Runtime / Coordination >Affects Versions: 1.9.0, 1.10.0 >Reporter: Zhu Zhu >Priority: Major > > Currently Execution#taskRestore is reset to null in task deployment stage. > The purpose of it is "allows the JobManagerTaskRestore instance to be garbage > collected. Furthermore, it won't be archived along with the Execution in the > ExecutionVertex in case of a restart. This is especially important when > setting state.backend.fs.memory-threshold to larger values because every > state below this threshold will be stored in the meta state files and, thus, > also the JobManagerTaskRestore instances." (From FLINK-9693) > > However, if a task fails before it comes to the deployment stage(e.g. fails > due to slot allocation timeout), the Execution#taskRestore will remain > non-null and will be archived in prior executions. > This may result in large JM heap cost in certain cases and lead to continuous > JM full GCs. > > I’d propose to set the taskRestore field to be null before moving an > execution to prior executions. > We can keep the logic which set the taskRestore field to be null after task > deployment to allow the -- This message was sent by Atlassian Jira (v8.3.2#803003)
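The proposal in FLINK-13962 (clear the restore state before an attempt is archived, while still clearing it early on deployment) can be sketched as follows. This is a minimal illustration under stated assumptions, not Flink's actual code: `RestoreState`, `ExecutionSketch`, `VertexSketch` and all of their methods are hypothetical stand-ins for `JobManagerTaskRestore`, `Execution` and `ExecutionVertex`.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for the (potentially large) restore state of a task. */
final class RestoreState {
    final byte[] inlinedState;

    RestoreState(int bytes) {
        this.inlinedState = new byte[bytes];
    }
}

/** Simplified, hypothetical model of one execution attempt; not Flink's Execution class. */
final class ExecutionSketch {
    private RestoreState taskRestore;

    ExecutionSketch(RestoreState taskRestore) {
        this.taskRestore = taskRestore;
    }

    /** Normal path: deployment hands the state over and drops the reference early. */
    RestoreState deploy() {
        RestoreState restore = taskRestore;
        taskRestore = null;
        return restore;
    }

    /** Safety net for attempts that failed before they were ever deployed. */
    void clearTaskRestore() {
        taskRestore = null;
    }

    boolean holdsTaskRestore() {
        return taskRestore != null;
    }
}

/** Simplified, hypothetical model of a vertex that archives prior attempts. */
final class VertexSketch {
    private final List<ExecutionSketch> priorExecutions = new ArrayList<>();
    private ExecutionSketch current;

    VertexSketch(ExecutionSketch first) {
        this.current = first;
    }

    /** Archives the current attempt, clearing its restore state first. */
    void resetForNewExecution(ExecutionSketch next) {
        current.clearTaskRestore();
        priorExecutions.add(current);
        current = next;
    }

    List<ExecutionSketch> getPriorExecutions() {
        return priorExecutions;
    }
}
```

In this sketch an attempt that never reaches `deploy()` (for example after a slot allocation timeout) no longer keeps its restore state reachable from the list of prior executions, while the early clearing in `deploy()` is kept for the normal case.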

[jira] [Commented] (FLINK-13500) RestClusterClient requires S3 access when HA is configured

[ https://issues.apache.org/jira/browse/FLINK-13500?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16923010#comment-16923010 ] TisonKun commented on FLINK-13500: -- Hi [~till.rohrmann] & [~Zentol], with FLINK-13750 resolved I think we can mark this issue as resolved also > RestClusterClient requires S3 access when HA is configured > -- > > Key: FLINK-13500 > URL: https://issues.apache.org/jira/browse/FLINK-13500 > Project: Flink > Issue Type: Bug > Components: Runtime / Coordination, Runtime / REST >Affects Versions: 1.8.1 >Reporter: David Judd >Priority: Major > > RestClusterClient initialization calls ClusterClient initialization, which > calls > org.apache.flink.runtime.highavailability.HighAvailabilityServicesUtils.createHighAvailabilityServices > In turn, createHighAvailabilityServices calls > BlobUtils.createBlobStoreFromConfig, which in our case tries to talk to S3. > It seems very surprising to me that (a) RestClusterClient needs any form of > access other than to the REST API, and (b) that client initialization would > attempt a write as a side effect. I do not see either of these surprising > facts described in the documentation–are they intentional? -- This message was sent by Atlassian Jira (v8.3.2#803003)

[GitHub] [flink] zhijiangW commented on a change in pull request #9483: [FLINK-13767][task] Migrate isFinished method from AvailabilityListener to AsyncDataInput

zhijiangW commented on a change in pull request #9483: [FLINK-13767][task]

Migrate isFinished method from AvailabilityListener to AsyncDataInput

URL: https://github.com/apache/flink/pull/9483#discussion_r321054294

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/io/AvailabilityListener.java

##

@@ -55,7 +50,7 @@
 * @return a future that is completed if there are more records available. If there are more
 * records available immediately, {@link #AVAILABLE} should be returned. Previously returned
 * not completed futures should become completed once there is more input available or if
-* the input {@link #isFinished()}.
+* the input is finished.

Review comment:

I am not quite sure about this. `isFinished()` is more properly provided by the
input interface, to indicate whether this input has ended or not. As for
`AvailabilityListener`, we just explain which cases would cause the future to
complete. Or did I miss your key point?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services
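The split discussed in this review (the availability interface only documents when its future completes, while the input interface owns `isFinished()`) can be sketched as below. This is a simplified illustration under stated assumptions: `AvailabilitySketch`, `AsyncInputSketch` and `QueueInput` are hypothetical names, not the interfaces from the pull request.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.CompletableFuture;

/** Simplified sketch: only answers "when is there something to do?". */
interface AvailabilitySketch {
    CompletableFuture<?> AVAILABLE = CompletableFuture.completedFuture(null);

    /** Completed when more input is available or when the input is finished. */
    CompletableFuture<?> isAvailable();
}

/** Simplified sketch: the input itself reports whether it has ended. */
interface AsyncInputSketch<T> extends AvailabilitySketch {
    T pollNext();

    boolean isFinished();
}

/** Hypothetical in-memory input used only to exercise the two interfaces. */
final class QueueInput<T> implements AsyncInputSketch<T> {
    private final Queue<T> records = new ArrayDeque<>();
    private CompletableFuture<Void> pending = new CompletableFuture<>();
    private boolean endOfInput;

    void add(T record) {
        records.add(record);
        pending.complete(null); // wakes up anyone holding a previously returned future
    }

    void finish() {
        endOfInput = true;
        pending.complete(null); // finishing also completes outstanding futures
    }

    @Override
    public CompletableFuture<?> isAvailable() {
        if (!records.isEmpty() || endOfInput) {
            return AVAILABLE;
        }
        if (pending.isDone()) {
            pending = new CompletableFuture<>();
        }
        return pending;
    }

    @Override
    public T pollNext() {
        return records.poll();
    }

    @Override
    public boolean isFinished() {
        return endOfInput && records.isEmpty();
    }
}
```

The sketch is single-threaded for brevity; a real input would need synchronization around the queue and the pending future.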

[jira] [Commented] (FLINK-13961) Remove obsolete abstraction JobExecutor(Service)

[

https://issues.apache.org/jira/browse/FLINK-13961?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16923003#comment-16923003

]

TisonKun commented on FLINK-13961:

--

Hi [~kkl0u], I'd like to work on this issue. Please assign it to me if there
are no further concerns. I'm going to start once FLINK-13946 is resolved, since
there would otherwise be several conflicts and FLINK-13946 is almost done.

> Remove obsolete abstraction JobExecutor(Service)

> -

>

> Key: FLINK-13961

> URL: https://issues.apache.org/jira/browse/FLINK-13961

> Project: Flink

> Issue Type: Sub-task

> Components: Client / Job Submission

>Affects Versions: 1.10.0

>Reporter: TisonKun

>Priority: Major

> Fix For: 1.10.0

>

>

> Refer to Till's comment

> The JobExecutor and the JobExecutorService have been introduced to bridge

> between the Flip-6 MiniCluster and the legacy FlinkMiniCluster. They should

> be obsolete now and could be removed if needed.

> Ideally we should make use of {{MiniClusterClient}} for submission, but we
> have some tests based on MiniCluster in flink-runtime or in other places that
> do not have a dependency on flink-client, and it is unclear whether moving
> {{MiniClusterClient}} to flink-runtime is reasonable.
> Thus I'd prefer to keep {{executeJobBlocking}} for now and defer the possible
> refactoring.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)

[GitHub] [flink] flinkbot edited a comment on issue #9621: [FLINK-13591][web]: fix job list display when job name is too long

flinkbot edited a comment on issue #9621: [FLINK-13591][web]: fix job list display when job name is too long URL: https://github.com/apache/flink/pull/9621#issuecomment-528167913 ## CI report: * 5d249470fb0fdd04681afc5d63e426083107537a : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125989099) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (FLINK-10707) Improve Cluster Overview in Flink Dashboard

[ https://issues.apache.org/jira/browse/FLINK-10707?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16922995#comment-16922995 ] Yadong Xie commented on FLINK-10707: Hi [~fwollert], there is only one issue left in your PR. Do you have time to fix it? https://github.com/apache/flink/pull/8542#discussion_r301436447 > Improve Cluster Overview in Flink Dashboard > --- > > Key: FLINK-10707 > URL: https://issues.apache.org/jira/browse/FLINK-10707 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Web Frontend >Reporter: Fabian Wollert >Assignee: Fabian Wollert >Priority: Major > Labels: pull-request-available > Attachments: flink-dashboard.png, image-2019-05-28-20-38-00-761.png > > Time Spent: 10m > Remaining Estimate: 0h > > The flink Dashboard is currently very simple. The following screenshot is a > mock of an improvement proposal: > !flink-dashboard.png|width=806,height=401! -- This message was sent by Atlassian Jira (v8.3.2#803003)

[GitHub] [flink] zhijiangW commented on a change in pull request #9483: [FLINK-13767][task] Migrate isFinished method from AvailabilityListener to AsyncDataInput

zhijiangW commented on a change in pull request #9483: [FLINK-13767][task] Migrate isFinished method from AvailabilityListener to AsyncDataInput URL: https://github.com/apache/flink/pull/9483#discussion_r321050894 ## File path: flink-runtime/src/main/java/org/apache/flink/runtime/io/AvailabilityListener.java ## @@ -33,11 +33,6 @@ */ CompletableFuture AVAILABLE = CompletableFuture.completedFuture(null); - /** Review comment: Yes, I agree that the current class name seems a bit of a misnomer. It could be refactored in a separate hotfix commit. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] flinkbot commented on issue #9621: [FLINK-13591][web]: fix job list display when job name is too long

flinkbot commented on issue #9621: [FLINK-13591][web]: fix job list display when job name is too long URL: https://github.com/apache/flink/pull/9621#issuecomment-528167913 ## CI report: * 5d249470fb0fdd04681afc5d63e426083107537a : UNKNOWN This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] zhijiangW commented on a change in pull request #9483: [FLINK-13767][task] Migrate isFinished method from AvailabilityListener to AsyncDataInput

zhijiangW commented on a change in pull request #9483: [FLINK-13767][task]

Migrate isFinished method from AvailabilityListener to AsyncDataInput

URL: https://github.com/apache/flink/pull/9483#discussion_r321050088

##

File path:

flink-streaming-java/src/main/java/org/apache/flink/streaming/runtime/io/StreamTwoInputProcessor.java

##

@@ -121,18 +115,46 @@ public StreamTwoInputProcessor(
 taskManagerConfig,
 taskName);
 checkState(checkpointedInputGates.length == 2);
- this.input1 = new StreamTaskNetworkInput(checkpointedInputGates[0], inputSerializer1, ioManager, 0);
- this.input2 = new StreamTaskNetworkInput(checkpointedInputGates[1], inputSerializer2, ioManager, 1);
- this.statusWatermarkValve1 = new StatusWatermarkValve(
- unionedInputGate1.getNumberOfInputChannels(),
- new ForwardingValveOutputHandler(streamOperator, lock, streamStatusMaintainer, input1WatermarkGauge, 0));
- this.statusWatermarkValve2 = new StatusWatermarkValve(
- unionedInputGate2.getNumberOfInputChannels(),
- new ForwardingValveOutputHandler(streamOperator, lock, streamStatusMaintainer, input2WatermarkGauge, 1));
+ this.output1 = new StreamTaskNetworkOutput<>(
+ streamOperator,
+ (StreamRecord record) -> {

Review comment:

Agreed, the constructor seems too long now. To avoid this, my previous version
constructed the relevant arguments, including `Output`, in a separate method in
`StreamTwoInputTask` and then passed these final arguments directly to the
constructor of `StreamTwoInputProcessor`. I will consider refactoring this part
later.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [flink] flinkbot commented on issue #9621: [FLINK-13591][web]: fix job list display when job name is too long

flinkbot commented on issue #9621: [FLINK-13591][web]: fix job list display when job name is too long URL: https://github.com/apache/flink/pull/9621#issuecomment-528166827 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 5d249470fb0fdd04681afc5d63e426083107537a (Thu Sep 05 02:18:19 UTC 2019) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. 
For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

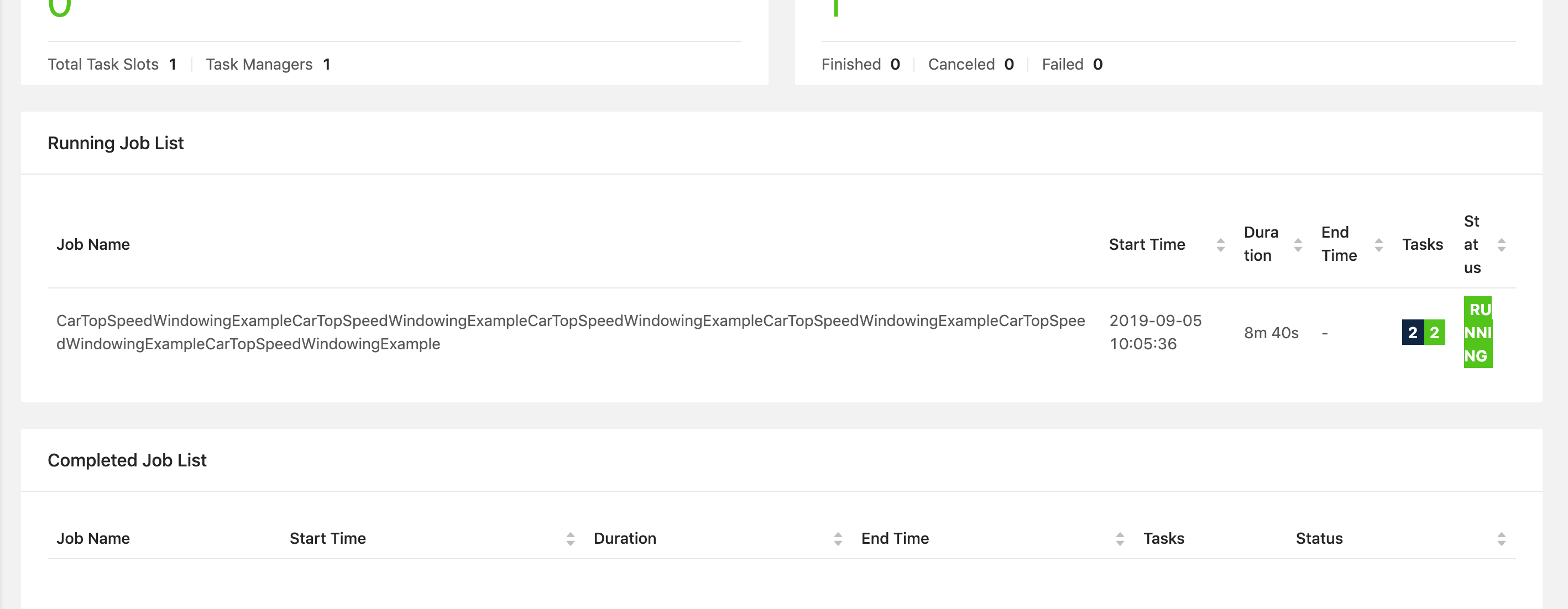

[GitHub] [flink] vthinkxie opened a new pull request #9621: [FLINK-13591][web]: fix job list display when job name is too long

vthinkxie opened a new pull request #9621: [FLINK-13591][web]: fix job list display when job name is too long URL: https://github.com/apache/flink/pull/9621 ## What is the purpose of the change fix Job List in Flink web doesn't display right when the job name is very long ## Brief change log add max-width to the td of job name column table to 40% ## Verifying this change - *Submit a job with long name (more than 200 char)* - *Visit the overview page* The job name column is not more than 40% width of table before  after fix  ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (no) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (no) - The S3 file system connector: (no) ## Documentation - Does this pull request introduce a new feature? (no) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Updated] (FLINK-13591) 'Completed Job List' in Flink web doesn't display right when job name is very long

[ https://issues.apache.org/jira/browse/FLINK-13591?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-13591: --- Labels: pull-request-available (was: ) > 'Completed Job List' in Flink web doesn't display right when job name is very > long > -- > > Key: FLINK-13591 > URL: https://issues.apache.org/jira/browse/FLINK-13591 > Project: Flink > Issue Type: Bug > Components: Runtime / Web Frontend >Affects Versions: 1.9.0 >Reporter: Kurt Young >Assignee: Yadong Xie >Priority: Minor > Labels: pull-request-available > Attachments: 10_57_07__08_06_2019.jpg > > > !10_57_07__08_06_2019.jpg! -- This message was sent by Atlassian Jira (v8.3.2#803003)

[GitHub] [flink] 1996fanrui commented on issue #9606: [FLINK-13677][docs-zh] Translate "Monitoring Back Pressure" page into Chinese

1996fanrui commented on issue #9606: [FLINK-13677][docs-zh] Translate "Monitoring Back Pressure" page into Chinese URL: https://github.com/apache/flink/pull/9606#issuecomment-528165894 @wuchong Can you take a look when you are free? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (FLINK-13816) Long job names result in a very ugly table listing the completed jobs in the web UI

[ https://issues.apache.org/jira/browse/FLINK-13816?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16922984#comment-16922984 ] Yadong Xie commented on FLINK-13816: I think this issue is duplicated with https://issues.apache.org/jira/browse/FLINK-13591 > Long job names result in a very ugly table listing the completed jobs in the > web UI > --- > > Key: FLINK-13816 > URL: https://issues.apache.org/jira/browse/FLINK-13816 > Project: Flink > Issue Type: Bug > Components: Runtime / Web Frontend >Affects Versions: 1.9.0 >Reporter: David Anderson >Priority: Major > Attachments: Screen Shot 2019-08-21 at 1.20.45 PM.png > > > Although this is a UI flaw, it's bad enough I've classified it as a bug. > The horizontal space used for the list of jobs in the new, angular-based web > frontend needs to be distributed more fairly (see the attached image). Some > min-width for each of the columns would be one solution. -- This message was sent by Atlassian Jira (v8.3.2#803003)

[GitHub] [flink] flinkbot edited a comment on issue #8542: [FLINK-10707][web-dashboard] flink cluster overview dashboard improvements

flinkbot edited a comment on issue #8542: [FLINK-10707][web-dashboard] flink cluster overview dashboard improvements URL: https://github.com/apache/flink/pull/8542#issuecomment-517730662 ## CI report: * ea5b9fc9b374fa895cefe921a3d4e99f8e12d3f2 : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/121767898) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] vthinkxie commented on a change in pull request #8542: [FLINK-10707][web-dashboard] flink cluster overview dashboard improvements

vthinkxie commented on a change in pull request #8542:

[FLINK-10707][web-dashboard] flink cluster overview dashboard improvements

URL: https://github.com/apache/flink/pull/8542#discussion_r321044046

##

File path:

flink-runtime-web/web-dashboard/src/app/share/customize/task-manager-item/task-manager-item.component.ts

##

@@ -0,0 +1,37 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+import { ChangeDetectionStrategy, Component, Input } from '@angular/core';

+import { TaskmanagersItemCalInterface } from 'interfaces';

+

+@Component({

+ selector: 'flink-task-manager-item',

+ templateUrl: './task-manager-item.component.html',

+ styleUrls: ['./task-manager-item.component.less'],

+ changeDetection: ChangeDetectionStrategy.OnPush

+})

+export class TaskManagerItemComponent {

+ @Input() state: TaskmanagersItemCalInterface;

+ @Input() totalCount: number;

+ @Input() timeoutThresholdSeconds: number;

+ totalCountStyleThreshold: number;

Review comment:

Hi, @drummerwolli any update on this?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [flink] flinkbot edited a comment on issue #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve…

flinkbot edited a comment on issue #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve… URL: https://github.com/apache/flink/pull/9591#issuecomment-527020451 ## CI report: * 9f75a4f6da30d5e22fa8594a628ce1e937b5eb10 : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125389706) * 6e3b8004605f8a9deb6adf5b7c5444bc73f3102e : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125858224) * 15b02613b5468e75651c68589699c41beb82436d : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125979715) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (FLINK-13417) Bump Zookeeper to 3.5.5

[

https://issues.apache.org/jira/browse/FLINK-13417?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16922970#comment-16922970

]

TisonKun commented on FLINK-13417:

--

Yes [~till.rohrmann]. I built locally with zk 3.5 and no compile errors were
reported. When I ran CI, almost all builds passed; see also
https://travis-ci.org/TisonKun/flink/builds/580757901
which reported failures in {{HBaseConnectorITCase}} when starting the HBase
MiniCluster, specifically while starting the MiniZooKeeperCluster. It seems to
be a problem in the testing class implementation.
Maybe [~carp84] can provide some input here.

{code:java}
java.io.IOException: Waiting for startup of standalone server
    at org.apache.hadoop.hbase.zookeeper.MiniZooKeeperCluster.startup(MiniZooKeeperCluster.java:261)
    at org.apache.hadoop.hbase.HBaseTestingUtility.startMiniZKCluster(HBaseTestingUtility.java:814)
    at org.apache.hadoop.hbase.HBaseTestingUtility.startMiniZKCluster(HBaseTestingUtility.java:784)
    at org.apache.hadoop.hbase.HBaseTestingUtility.startMiniCluster(HBaseTestingUtility.java:1041)
    at org.apache.hadoop.hbase.HBaseTestingUtility.startMiniCluster(HBaseTestingUtility.java:917)
    at org.apache.hadoop.hbase.HBaseTestingUtility.startMiniCluster(HBaseTestingUtility.java:899)
    at org.apache.hadoop.hbase.HBaseTestingUtility.startMiniCluster(HBaseTestingUtility.java:881)
    at org.apache.flink.addons.hbase.util.HBaseTestingClusterAutoStarter.setUp(HBaseTestingClusterAutoStarter.java:147)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)
    at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
    at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)
    at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:24)
    at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
    at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)
    at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)
    at org.junit.rules.RunRules.evaluate(RunRules.java:20)
    at org.junit.runners.ParentRunner.run(ParentRunner.java:363)
    at org.junit.runner.JUnitCore.run(JUnitCore.java:137)
    at com.intellij.junit4.JUnit4IdeaTestRunner.startRunnerWithArgs(JUnit4IdeaTestRunner.java:68)
    at com.intellij.rt.execution.junit.IdeaTestRunner$Repeater.startRunnerWithArgs(IdeaTestRunner.java:47)
    at com.intellij.rt.execution.junit.JUnitStarter.prepareStreamsAndStart(JUnitStarter.java:242)
    at com.intellij.rt.execution.junit.JUnitStarter.main(JUnitStarter.java:70)
{code}

> Bump Zookeeper to 3.5.5

> ---

>

> Key: FLINK-13417

> URL: https://issues.apache.org/jira/browse/FLINK-13417

> Project: Flink

> Issue Type: Improvement

> Components: Runtime / Coordination

>Affects Versions: 1.9.0

>Reporter: Konstantin Knauf

>Priority: Blocker

> Fix For: 1.10.0

>

>

> User might want to secure their Zookeeper connection via SSL.

> This requires a Zookeeper version >= 3.5.1. We might as well try to bump it

> to 3.5.5, which is the latest version.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)

[jira] [Commented] (FLINK-12847) Update Kinesis Connectors to latest Apache licensed libraries

[ https://issues.apache.org/jira/browse/FLINK-12847?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16922958#comment-16922958 ] Bowen Li commented on FLINK-12847: -- [~carp84] tried but seems Flink's jira doesn't have that field. I'll remember to put a release note for 1.10 :) > Update Kinesis Connectors to latest Apache licensed libraries > - > > Key: FLINK-12847 > URL: https://issues.apache.org/jira/browse/FLINK-12847 > Project: Flink > Issue Type: Improvement > Components: Connectors / Kinesis >Reporter: Dyana Rose >Assignee: Dyana Rose >Priority: Major > Labels: pull-request-available > Fix For: 1.10.0 > > Time Spent: 20m > Remaining Estimate: 0h > > Currently the referenced Kinesis Client Library and Kinesis Producer Library > code in the flink-connector-kinesis is licensed under the Amazon Software > License which is not compatible with the Apache License. This then requires a > fair amount of work in the CI pipeline and for users who want to use the > flink-connector-kinesis. > The Kinesis Client Library v2.x and the AWS Java SDK v2.x both are now on the > Apache 2.0 license. > [https://github.com/awslabs/amazon-kinesis-client/blob/master/LICENSE.txt] > [https://github.com/aws/aws-sdk-java-v2/blob/master/LICENSE.txt] > There is a PR for the Kinesis Producer Library to update it to the Apache 2.0 > license ([https://github.com/awslabs/amazon-kinesis-producer/pull/256]) > The task should include, but not limited to, upgrading KCL/KPL to new > versions of Apache 2.0 license, changing licenses and NOTICE files in > flink-connector-kinesis, and adding flink-connector-kinesis to build, CI and > artifact publishing pipeline, updating the build profiles, updating > documentation that references the license incompatibility > The expected outcome of this issue is that the flink-connector-kinesis will > be included with the standard build artifacts and will no longer need to be > built separately by users. 
-- This message was sent by Atlassian Jira (v8.3.2#803003)

[jira] [Commented] (FLINK-12847) Update Kinesis Connectors to latest Apache licensed libraries

[ https://issues.apache.org/jira/browse/FLINK-12847?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16922956#comment-16922956 ] Bowen Li commented on FLINK-12847: -- [~praveeng] no problem > Update Kinesis Connectors to latest Apache licensed libraries > - > > Key: FLINK-12847 > URL: https://issues.apache.org/jira/browse/FLINK-12847 > Project: Flink > Issue Type: Improvement > Components: Connectors / Kinesis >Reporter: Dyana Rose >Assignee: Dyana Rose >Priority: Major > Labels: pull-request-available > Fix For: 1.10.0 > > Time Spent: 20m > Remaining Estimate: 0h > > Currently the referenced Kinesis Client Library and Kinesis Producer Library > code in the flink-connector-kinesis is licensed under the Amazon Software > License which is not compatible with the Apache License. This then requires a > fair amount of work in the CI pipeline and for users who want to use the > flink-connector-kinesis. > The Kinesis Client Library v2.x and the AWS Java SDK v2.x both are now on the > Apache 2.0 license. > [https://github.com/awslabs/amazon-kinesis-client/blob/master/LICENSE.txt] > [https://github.com/aws/aws-sdk-java-v2/blob/master/LICENSE.txt] > There is a PR for the Kinesis Producer Library to update it to the Apache 2.0 > license ([https://github.com/awslabs/amazon-kinesis-producer/pull/256]) > The task should include, but not limited to, upgrading KCL/KPL to new > versions of Apache 2.0 license, changing licenses and NOTICE files in > flink-connector-kinesis, and adding flink-connector-kinesis to build, CI and > artifact publishing pipeline, updating the build profiles, updating > documentation that references the license incompatibility > The expected outcome of this issue is that the flink-connector-kinesis will > be included with the standard build artifacts and will no longer need to be > built separately by users. -- This message was sent by Atlassian Jira (v8.3.2#803003)

[GitHub] [flink] flinkbot edited a comment on issue #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve…

flinkbot edited a comment on issue #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve… URL: https://github.com/apache/flink/pull/9591#issuecomment-527020451 ## CI report: * 9f75a4f6da30d5e22fa8594a628ce1e937b5eb10 : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125389706) * 6e3b8004605f8a9deb6adf5b7c5444bc73f3102e : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125858224) * 15b02613b5468e75651c68589699c41beb82436d : PENDING [Build](https://travis-ci.com/flink-ci/flink/builds/125979715) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] flinkbot edited a comment on issue #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve…

flinkbot edited a comment on issue #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve… URL: https://github.com/apache/flink/pull/9591#issuecomment-527020451 ## CI report: * 9f75a4f6da30d5e22fa8594a628ce1e937b5eb10 : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125389706) * 6e3b8004605f8a9deb6adf5b7c5444bc73f3102e : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125858224) * 15b02613b5468e75651c68589699c41beb82436d : UNKNOWN This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (FLINK-13771) Support kqueue Netty transports (MacOS)

[

https://issues.apache.org/jira/browse/FLINK-13771?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16922939#comment-16922939

]

Aitozi commented on FLINK-13771:

Hi [~NicoK], is this work in progress? Can I give this issue a try?

> Support kqueue Netty transports (MacOS)

> ---

>

> Key: FLINK-13771

> URL: https://issues.apache.org/jira/browse/FLINK-13771

> Project: Flink

> Issue Type: Improvement

> Components: Runtime / Network

>Reporter: Nico Kruber

>Priority: Major

>

> It seems like Netty is now also supporting MacOS's native transport

> {{kqueue}}:

> https://netty.io/wiki/native-transports.html#using-the-macosbsd-native-transport

> We should allow this via {{taskmanager.network.netty.transport}}.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)
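
The proposal in FLINK-13771 amounts to accepting one more value for {{taskmanager.network.netty.transport}}. Below is a minimal sketch of how such a setting might be resolved per platform. It is not Flink's implementation: the function name `resolve_transport`, the `kqueue` value, and the mapping of "auto" to a native transport are assumptions made for illustration only.

```python
import sys

# "nio", "epoll" and "auto" are the values assumed to exist today;
# "kqueue" is the hypothetical addition proposed in the issue.
SUPPORTED = {"auto", "nio", "epoll", "kqueue"}


def resolve_transport(configured: str, platform: str = sys.platform) -> str:
    """Map a configured transport name to the transport to use on `platform`."""
    value = configured.strip().lower()
    if value not in SUPPORTED:
        raise ValueError(f"unsupported transport: {configured!r}")

    is_linux = platform.startswith("linux")
    # kqueue is the native event mechanism on macOS and the BSDs.
    is_bsd_like = platform == "darwin" or "bsd" in platform

    if value == "auto":
        # Prefer the native transport where one exists, else fall back to NIO.
        if is_linux:
            return "epoll"
        if is_bsd_like:
            return "kqueue"
        return "nio"
    if value == "epoll" and not is_linux:
        raise ValueError("epoll is only available on Linux")
    if value == "kqueue" and not is_bsd_like:
        raise ValueError("kqueue is only available on macOS/BSD")
    return value
```

Failing fast on an explicitly requested but unavailable native transport is one possible design choice; silently falling back to NIO would be another.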

[GitHub] [flink] Aitozi commented on issue #8479: [FLINK-11193][State Backends]Use user passed configuration overriding default configuration loading from file

Aitozi commented on issue #8479: [FLINK-11193][State Backends]Use user passed configuration overriding default configuration loading from file URL: https://github.com/apache/flink/pull/8479#issuecomment-528130638 Do you know why this push did not trigger Travis CI? How can I trigger it manually, @azagrebin? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] yangjf2019 commented on a change in pull request #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve…

yangjf2019 commented on a change in pull request #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve… URL: https://github.com/apache/flink/pull/9591#discussion_r321015149 ## File path: docs/_config.yml ## @@ -39,6 +39,10 @@ scala_version: "2.11" # This suffix is appended to the Scala-dependent Maven artifact names scala_version_suffix: "_2.11" +# Plain flink-shaded version is needed for e.g. the hive connector. +# Please update the shaded_version once new flink-shaded is released. +shaded_version: "7.0" Review comment: You are right. I found that the Flink master's pom.xml also uses 8.0, and I have updated it accordingly. PTAL. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] flinkbot edited a comment on issue #9620: [FLINK-13959] Consolidate DetachedEnvironment and ContextEnvironment

flinkbot edited a comment on issue #9620: [FLINK-13959] Consolidate DetachedEnvironment and ContextEnvironment URL: https://github.com/apache/flink/pull/9620#issuecomment-528072659 ## CI report: * 873a4970f35c7b321f46bdc669f1a9bc2f08fd4f : SUCCESS [Build](https://travis-ci.com/flink-ci/flink/builds/125959606) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Updated] (FLINK-13956) Add sequence file format with repeated sync blocks

[

https://issues.apache.org/jira/browse/FLINK-13956?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Ken Krugler updated FLINK-13956:

Description:

The current {{SerializedOutputFormat}} produces files that are tightly bound to

the block size of the filesystem. While this was a somewhat plausible

assumption in the old HDFS days, it can lead to [hard to debug issues in other

file

systems|https://lists.apache.org/thread.html/bdd87cbb5eb7b19ab4be6501940ec5659e8f6ce6c27ccefa2680732c@%3Cdev.flink.apache.org%3E].

We could implement a file format similar to the current version of Hadoop's

SequenceFileFormat: add a sync block in-between records whenever X bytes were

written. Hadoop uses 2k, but I'd propose to use 1M.

was:

The current {{SequenceFileFormat}} produces files that are tightly bound to the

block size of the filesystem. While this was a somewhat plausible assumption in

the old HDFS days, it can lead to [hard to debug issues in other file

systems|https://lists.apache.org/thread.html/bdd87cbb5eb7b19ab4be6501940ec5659e8f6ce6c27ccefa2680732c@%3Cdev.flink.apache.org%3E].

We could implement a file format similar to the current version of Hadoop's

SequenceFileFormat: add a sync block inbetween records whenever X bytes were

written. Hadoop uses 2k, but I'd propose to use 1M.

> Add sequence file format with repeated sync blocks

> --

>

> Key: FLINK-13956

> URL: https://issues.apache.org/jira/browse/FLINK-13956

> Project: Flink

> Issue Type: Improvement

>Reporter: Arvid Heise

>Priority: Minor

>

> The current {{SerializedOutputFormat}} produces files that are tightly bound

> to the block size of the filesystem. While this was a somewhat plausible

> assumption in the old HDFS days, it can lead to [hard to debug issues in

> other file

> systems|https://lists.apache.org/thread.html/bdd87cbb5eb7b19ab4be6501940ec5659e8f6ce6c27ccefa2680732c@%3Cdev.flink.apache.org%3E].

> We could implement a file format similar to the current version of Hadoop's

> SequenceFileFormat: add a sync block in-between records whenever X bytes were

> written. Hadoop uses 2k, but I'd propose to use 1M.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)
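
The idea described in FLINK-13956 (insert a sync block between records whenever X bytes have been written) can be sketched as follows. This is only an illustration under stated assumptions, not the proposed Flink format: the class and function names, the 4-byte length prefix, the `-1` escape value, and the 16-byte marker are hypothetical choices loosely modeled on how Hadoop's sequence files mark sync points.

```python
import io
import os
import struct

SYNC_ESCAPE = -1   # a length no real record can have; announces a sync marker
MARKER_SIZE = 16   # assumed marker width


class SyncBlockWriter:
    """Writes length-prefixed records with a sync marker every `sync_interval` bytes."""

    def __init__(self, stream, sync_interval=1 << 20, sync_marker=None):
        self.stream = stream
        self.sync_interval = sync_interval  # 1M, as proposed in the issue
        self.sync_marker = sync_marker or os.urandom(MARKER_SIZE)
        self.bytes_since_sync = 0
        # File header: store the marker once so readers know what to look for.
        self.stream.write(self.sync_marker)

    def write(self, record: bytes) -> None:
        # Sync points are only emitted between records, never inside one.
        if self.bytes_since_sync >= self.sync_interval:
            self.stream.write(struct.pack(">i", SYNC_ESCAPE))
            self.stream.write(self.sync_marker)
            self.bytes_since_sync = 0
        self.stream.write(struct.pack(">i", len(record)))
        self.stream.write(record)
        self.bytes_since_sync += 4 + len(record)


def read_header(stream) -> bytes:
    """Reads the sync marker stored at the start of the file."""
    return stream.read(MARKER_SIZE)


def read_records(stream, marker: bytes):
    """Yields records from a position that is aligned to a record boundary."""
    while True:
        prefix = stream.read(4)
        if len(prefix) < 4:
            return
        (length,) = struct.unpack(">i", prefix)
        if length == SYNC_ESCAPE:
            if stream.read(MARKER_SIZE) != marker:
                raise ValueError("corrupt sync marker")
            continue
        yield stream.read(length)


def next_record_after_sync(data: bytes, offset: int, marker: bytes) -> int:
    """Returns the offset of the first record after the next sync point, or -1."""
    idx = data.find(struct.pack(">i", SYNC_ESCAPE) + marker, offset)
    return -1 if idx < 0 else idx + 4 + MARKER_SIZE
```

Because a reader can start at an arbitrary byte offset and scan forward to the next marker, input splits no longer need to line up with the filesystem block size. A real format would also have to handle the (unlikely) case of the marker bytes appearing inside a record payload.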

[GitHub] [flink] bowenli86 commented on issue #9580: [FLINK-13930][hive] Support Hive version 3.1.x

bowenli86 commented on issue #9580: [FLINK-13930][hive] Support Hive version 3.1.x URL: https://github.com/apache/flink/pull/9580#issuecomment-528085435 LGTM, merging This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] bowenli86 commented on a change in pull request #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve…

bowenli86 commented on a change in pull request #9591: [FLINK-13937][docs] Fix the error of the hive connector dependency ve… URL: https://github.com/apache/flink/pull/9591#discussion_r320973642 ## File path: docs/_config.yml ## @@ -39,6 +39,10 @@ scala_version: "2.11" # This suffix is appended to the Scala-dependent Maven artifact names scala_version_suffix: "_2.11" +# Plain flink-shaded version is needed for e.g. the hive connector. +# Please update the shaded_version once new flink-shaded is released. +shaded_version: "7.0" Review comment: isn't the latest one 8.0? https://mvnrepository.com/artifact/org.apache.flink/flink-shaded This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] flinkbot edited a comment on issue #9620: [FLINK-13959] Consolidate DetachedEnvironment and ContextEnvironment

flinkbot edited a comment on issue #9620: [FLINK-13959] Consolidate DetachedEnvironment and ContextEnvironment URL: https://github.com/apache/flink/pull/9620#issuecomment-528072659 ## CI report: * 873a4970f35c7b321f46bdc669f1a9bc2f08fd4f : PENDING [Build](https://travis-ci.com/flink-ci/flink/builds/125959606) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] flinkbot edited a comment on issue #9607: [FLINK-13946] Remove job session related code from ExecutionEnvironment

flinkbot edited a comment on issue #9607: [FLINK-13946] Remove job session related code from ExecutionEnvironment URL: https://github.com/apache/flink/pull/9607#issuecomment-527790327 ## CI report: * 0c2c5cdcc1267a49ef8df775e0e2ee46a5249487 : SUCCESS [Build](https://travis-ci.com/flink-ci/flink/builds/125850583) * 9c152ea5c40ef923f9f9cf8436d88b1db9af668a : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125884207) * 833e15cfdd2c32ed626bbd35370b69d1f4f8aad7 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125943944) * 5d0397bed767e8f4e0de48e97295670cb5e3 : CANCELED [Build](https://travis-ci.com/flink-ci/flink/builds/125948178) * d256fa7db82ff098fe03568b6ae6608812bfbfb9 : FAILURE [Build](https://travis-ci.com/flink-ci/flink/builds/125956643) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] flinkbot commented on issue #9620: [FLINK-13959] Consolidate DetachedEnvironment and ContextEnvironment

flinkbot commented on issue #9620: [FLINK-13959] Consolidate DetachedEnvironment and ContextEnvironment URL: https://github.com/apache/flink/pull/9620#issuecomment-528072659 ## CI report: * 873a4970f35c7b321f46bdc669f1a9bc2f08fd4f : UNKNOWN This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (FLINK-13025) Elasticsearch 7.x support

[ https://issues.apache.org/jira/browse/FLINK-13025?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16922836#comment-16922836 ]

Leonid Ilyevsky commented on FLINK-13025:
-
I just confirmed that using the elasticsearch6 connector against an Elasticsearch 7.x server has a huge impact on performance, making it pretty much useless in our environment (we are processing hundreds of millions of documents every day). All of this is caused by that warning, which is issued for every document.

> Elasticsearch 7.x support
> -
>
> Key: FLINK-13025
> URL: https://issues.apache.org/jira/browse/FLINK-13025
> Project: Flink
> Issue Type: New Feature
> Components: Connectors / ElasticSearch
>Affects Versions: 1.8.0
>Reporter: Keegan Standifer
>Priority: Major
>
> Elasticsearch 7.0.0 was released in April of 2019:
> [https://www.elastic.co/blog/elasticsearch-7-0-0-released]
> The latest elasticsearch connector is
> [flink-connector-elasticsearch6|https://github.com/apache/flink/tree/master/flink-connectors/flink-connector-elasticsearch6]

--
This message was sent by Atlassian Jira
(v8.3.2#803003)

[GitHub] [flink] flinkbot edited a comment on issue #9607: [FLINK-13946] Remove job session related code from ExecutionEnvironment