[GitHub] metron issue #1293: METRON-1928: Bump Metron version to 0.7.0 for release

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1293 +1 Thanks! ---

[GitHub] metron issue #1293: METRON-1928: Bump Metron version to 0.7.0 for release

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1293

@justinleet The only issue I noticed is that `Upgrading.md` has a section header that needs to be updated.

```
## 0.6.0 to 0.6.1
```

```
## 0.6.0 to 0.7.0
```

---

[GitHub] metron-bro-plugin-kafka pull request #21: METRON-1911 Docker setup for testi...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron-bro-plugin-kafka/pull/21#discussion_r240209807

--- Diff: docker/scripts/download_sample_pcaps.sh ---
@@ -0,0 +1,105 @@
+#!/usr/bin/env bash
+
+#
+# Licensed to the Apache Software Foundation (ASF) under one or more
+# contributor license agreements. See the NOTICE file distributed with
+# this work for additional information regarding copyright ownership.
+# The ASF licenses this file to You under the Apache License, Version 2.0
+# (the "License"); you may not use this file except in compliance with
+# the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+#
+
+shopt -s nocasematch
+
+#
+# Downloads sample pcap files to the data directory
+#
+
+function help {
+  echo " "
+  echo "usage: ${0}"
+  echo "--data-path[REQUIRED] The pcap data path"
+  echo "-h/--help Usage information."
+  echo " "
+  echo " "
+}
+
+DATA_PATH=
+
+# handle command line options
+for i in "$@"; do
+  case $i in
+  #
+  # DATA_PATH
+  #
+  #
+  --data-path=*)
+    DATA_PATH="${i#*=}"
+    shift # past argument=value
+    ;;
+
+  #
+  # -h/--help
+  #
+  -h | --help)
+    help
+    exit 0
+    shift # past argument with no value
+    ;;
+
+  #
+  # Unknown option
+  #
+  *)
+    UNKNOWN_OPTION="${i#*=}"
+    echo "Error: unknown option: $UNKNOWN_OPTION"
+    help
+    ;;
+  esac
+done
+
+if [[ -z "$DATA_PATH" ]]; then
+  echo "DATA_PATH must be passed"
+  exit 1
+fi
+
+echo "Running download_sample_pcaps with "
+echo "DATA_PATH = $DATA_PATH"
+echo "==="
+
+for folder in nitroba example-traffic ssh ftp radius rfb; do
+  if [[ ! -d "${DATA_PATH}"/${folder} ]]; then
+    mkdir -p "${DATA_PATH}"/${folder}
+  fi
+done
+
+if [[ ! -f "${DATA_PATH}"/example-traffic/exercise-traffic.pcap ]]; then
+  wget https://www.bro.org/static/traces/exercise-traffic.pcap -O "${DATA_PATH}"/example-traffic/exercise-traffic.pcap
--- End diff --

Why not include this in the Dockerfile? That way it can be cached in the

image.

---

[GitHub] metron-bro-plugin-kafka pull request #21: METRON-1911 Docker setup for testi...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron-bro-plugin-kafka/pull/21#discussion_r240208847

--- Diff: docker/in_docker_scripts/build_bro_plugin.sh ---
@@ -0,0 +1,48 @@
+#!/usr/bin/env bash
+
+#
+# Licensed to the Apache Software Foundation (ASF) under one or more
+# contributor license agreements. See the NOTICE file distributed with
+# this work for additional information regarding copyright ownership.
+# The ASF licenses this file to You under the Apache License, Version 2.0
+# (the "License"); you may not use this file except in compliance with
+# the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+#
+
+shopt -s nocasematch
+
+#
+# Runs bro-package to build and install the plugin
+#
+
+cd /root/code || exit 1
+
+
+make clean
+
+rc=$?; if [[ ${rc} != 0 ]]; then
+  echo "ERROR cleaning project ${rc}" >>"${RUN_LOG_PATH}"
+  exit ${rc}
+fi
+
+cd /root || exit 1
+
+echo "" >>"${RUN_LOG_PATH}" 2>&1
+bro-pkg install code --force | tee "${RUN_LOG_PATH}"
--- End diff --

Why not install the plugin in the Dockerfile? That way an image with the

plugin installed and ready to go can be cached.

---

[GitHub] metron pull request #1292: METRON-1925 Provide Verbose View of Profile Resul...

GitHub user nickwallen reopened a pull request:

https://github.com/apache/metron/pull/1292

METRON-1925 Provide Verbose View of Profile Results in REPL

## Motivation

When viewing profile measurements in the REPL using `PROFILE_GET`, you simply get back a list of values. It is not easy to determine from which time period the measurements were taken.

For example, are the following values all sequential? Are there any gaps in the measurements taken over the past 30 minutes? When was the first measurement taken?

```
[Stellar]>>> PROFILE_GET("hello-world","global",PROFILE_FIXED(30, "MINUTES"))
[2655, 1170, 1185, 1170, 1185, 1215, 1200, 1170]
```

The `PROFILE_GET` function was designed to return values that serve as input to other functions. It was not designed to return values in a human-readable form that can be easily understood. We need another way to query for profile measurements in the REPL that provides a user with a better understanding of the profile measurements.

## Solution

This PR provides a new function called `PROFILE_VIEW`. It is effectively a "verbose mode" for `PROFILE_GET`.

For lack of a better name, I just called it `PROFILE_VIEW`. I would be open to alternatives. I did not want to add additional options to the already complex `PROFILE_GET`.

* Description: Retrieves a series of measurements from a stored profile. Provides a more verbose view of each measurement than `PROFILE_GET`. Returns a map containing the profile name, entity, period id, period start, and period end for each profile measurement.
* Arguments:
    * profile - The name of the profile.
    * entity - The name of the entity.
    * periods - The list of profile periods to fetch. Use `PROFILE_WINDOW` or `PROFILE_FIXED`.
    * groups - Optional. The groups to retrieve. Must correspond to the 'groupBy' list used during profile creation. Defaults to an empty list, meaning no groups.
* Returns: A map for each profile measurement containing the profile name, entity, period, and value.
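
To illustrate why the per-measurement maps help with the "are there any gaps?" question, here is a minimal sketch in plain Java rather than Stellar. It assumes each result carries a numeric `period` key, as in the sample output in this PR, and that results arrive sorted by period. The class and method names (`ProfileGapSketch`, `measurement`, `findGaps`) are hypothetical and are not part of Metron.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ProfileGapSketch {

    /** Builds a map shaped like one PROFILE_VIEW result (only the keys used here). */
    public static Map<String, Object> measurement(long period, Object value) {
        Map<String, Object> m = new LinkedHashMap<>();
        m.put("period", period);
        m.put("value", value);
        return m;
    }

    /**
     * Returns one entry per gap as {lastPeriodBeforeGap, firstPeriodAfterGap}.
     * Assumes the measurements are sorted by ascending period id.
     */
    public static List<long[]> findGaps(List<Map<String, Object>> measurements) {
        List<long[]> gaps = new ArrayList<>();
        for (int i = 1; i < measurements.size(); i++) {
            long previous = ((Number) measurements.get(i - 1).get("period")).longValue();
            long current = ((Number) measurements.get(i).get("period")).longValue();
            // Consecutive periods differ by exactly 1; anything larger is a gap.
            if (current - previous > 1) {
                gaps.add(new long[] {previous, current});
            }
        }
        return gaps;
    }

    public static void main(String[] args) {
        // Period ids copied from the sample PROFILE_VIEW output in this PR.
        List<Map<String, Object>> results = new ArrayList<>();
        results.add(measurement(12867667L, 1200));
        results.add(measurement(12867668L, 1170));
        results.add(measurement(12867674L, 5425));
        results.add(measurement(12867675L, 1155));

        for (long[] gap : findGaps(results)) {
            System.out.println("gap between period " + gap[0] + " and " + gap[1]
                + " (" + (gap[1] - gap[0] - 1) + " missing periods)");
        }
    }
}
```

With the period ids above, the sketch reports a single gap between periods 12867668 and 12867674, which is not something the bare list returned by `PROFILE_GET` can reveal.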

## Test Drive

1. Spin-up Full Dev and create a profile. Follow the Profiler README. Reduce the profile period if you are impatient.

1. Open up the REPL and retrieve the values using `PROFILE_GET`. Notice that I have no idea when the first measurement was taken, whether the values are sequential, or whether there are gaps in the values and how big they are.

```
[Stellar]>>> PROFILE_GET("hello-world","global",PROFILE_FIXED(30, "MINUTES"))
[1185, 1170, 1185, 1215, 1200, 1170, 5425, 1155, 1215, 1200]
```

1. Now use `PROFILE_VIEW` to retrieve the same results.

```
[Stellar]>>> results := PROFILE_VIEW("hello-world","global",PROFILE_FIXED(30, "MINUTES"))
[{period.start=154411956, period=12867663, profile=hello-world, period.end=154411968, groups=[], value=1185, entity=global},
{period.start=154411968, period=12867664, profile=hello-world, period.end=154411980, groups=[], value=1170, entity=global},
{period.start=154411980, period=12867665, profile=hello-world, period.end=154411992, groups=[], value=1185, entity=global},
{period.start=154411992, period=12867666, profile=hello-world, period.end=154412004, groups=[], value=1215, entity=global},
{period.start=154412004, period=12867667, profile=hello-world, period.end=154412016, groups=[], value=1200, entity=global},
{period.start=154412016, period=12867668, profile=hello-world, period.end=154412028, groups=[], value=1170, entity=global},
{period.start=154412088, period=12867674, profile=hello-world, period.end=154412100, groups=[], value=5425, entity=global},
{period.start=154412100, period=12867675, profile=hello-world, period.end=154412112, groups=[], value=1155, entity=global},
{period.start=154412112, period=12867676, profile=hello-world, period.end=154412124, groups=[], value=1215, entity=global},
{period.start=154412124, period=12867677, profile=hello-world, period.end=154412136, groups=[], value=1200, entity=global}]
```

1. For each measurement, I have a map containing the period, period start, period end, profile name, entity, groups, and value. With this I can better answer some of the questions above.

```
{
  profile=hello-world,
  entity=global,
  period=12867663,
  period.start=154411956,
  period.end=154411968,
  groups=[],
  value=1185
}
```

1. When was the first measurement taken?

```

[Stellar]>>> GET(results, 0)

{period.start=154411956, period=12867663, profile=hello-world,

period.end=1544119

[GitHub] metron issue #1292: METRON-1925 Provide Verbose View of Profile Results in R...

Github user nickwallen commented on the issue:

https://github.com/apache/metron/pull/1292

Not sure where this is coming from:

```

Failed tests:

RestFunctionsTest.restGetShouldTimeout:516 expected null, but

was:<{get=success}>

```

---

[GitHub] metron pull request #1292: METRON-1925 Provide Verbose View of Profile Resul...

Github user nickwallen closed the pull request at: https://github.com/apache/metron/pull/1292 ---

[GitHub] metron pull request #1278: METRON-1892 Parser Debugger Should Load Config Fr...

GitHub user nickwallen reopened a pull request:

https://github.com/apache/metron/pull/1278

METRON-1892 Parser Debugger Should Load Config From Zookeeper

When using the parser debugger functions created in #1265, the user has to

manually specify the parser configuration. This is useful when testing out a

new parser before it goes live.

In other cases, it would be simpler to use the sensor configuration values that are 'live' and already loaded in Zookeeper. For example, I might want to test why a particular message fails to parse in my environment.

## Try It Out

Try out the following examples in the Stellar REPL.

1. Launch a development environment.

1. Launch the REPL.

```

source /etc/default/metron

cd $METRON_HOME

bin/stellar -z $ZOOKEEPER

```

### Parse a Message

1. Grab a message from the input topic to parse. You could also just

mock-up a message that you would like to test.

```
[Stellar]>>> input := KAFKA_GET('bro')
[{"http": {"ts":1542313125.807068,"uid":"CUrRne3iLIxXavQtci","id.orig_h"...
```

1. Initialize the parser. The parser configuration for 'bro' will be loaded

automatically from Zookeeper.

```

[Stellar]>>> parser := PARSER_INIT("bro")

Parser{0 successful, 0 error(s)}

```

1. Parse the message.

```

[Stellar]>>> msgs := PARSER_PARSE(parser, input)

[{"bro_timestamp":"1542313125.807068","method":"GET","ip_dst_port":8080,...

```

1. The parser will tally the success.

```

[Stellar]>>> parser

Parser{1 successful, 0 error(s)}

```

1. Review the successfully parsed message.

```

[Stellar]>>> LENGTH(msgs)

1

```

```

[Stellar]>>> msg := GET(msgs, 0)

[Stellar]>>> MAP_GET("guid", msg)

7f2e0c77-c58c-488e-b1ad-fbec10fb8182

```

```

[Stellar]>>> MAP_GET("timestamp", msg)

1542313125807

```

```

[Stellar]>>> MAP_GET("source.type", msg)

bro

```

### Missing Configuration

1. If the configuration does not exist in Zookeeper, you should see something like this.

I have not configured a parser named 'tuna' in my environment (but I could go for a tuna sandwich right about now).

```
[Stellar]>>> bad := PARSER_INIT('tuna')
[!] Unable to parse: PARSER_INIT('tuna') due to: Unable to read configuration from Zookeeper; sensorType = tuna
org.apache.metron.stellar.dsl.ParseException: Unable to parse: PARSER_INIT('tuna') due to: Unable to read configuration from Zookeeper; sensorType = tuna
	at org.apache.metron.stellar.common.BaseStellarProcessor.createException(BaseStellarProcessor.java:166)
	at org.apache.metron.stellar.common.BaseStellarProcessor.parse(BaseStellarProcessor.java:154)
	at org.apache.metron.stellar.common.shell.DefaultStellarShellExecutor.executeStellar(DefaultStellarShellExecutor.java:405)
	at org.apache.metron.stellar.common.shell.DefaultStellarShellExecutor.execute(DefaultStellarShellExecutor.java:257)
	at org.apache.metron.stellar.common.shell.specials.AssignmentCommand.execute(AssignmentCommand.java:66)
	at org.apache.metron.stellar.common.shell.DefaultStellarShellExecutor.execute(DefaultStellarShellExecutor.java:252)
	at org.apache.metron.stellar.common.shell.cli.StellarShell.execute(StellarShell.java:359)
	at org.jboss.aesh.console.AeshProcess.run(AeshProcess.java:53)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.IllegalArgumentException: Unable to read configuration from Zookeeper; sensorType = tuna
	at org.apache.metron.management.ParserFunctions$InitializeFunction.readFromZookeeper(ParserFunctions.java:103)
	at org.apache.metron.management.ParserFunctions$InitializeFunction.apply(ParserFunctions.java:66)
	at org.apache.metron.stellar.common.StellarCompiler.lambda$exitTransformationFunc$13(StellarCompiler.java:661)
	at org.apache.metron.stellar.common.StellarCompiler$Expression.apply(StellarCompiler.java:259)
	at org.apache.metron.stellar.common.BaseStellarProcessor.parse(BaseStellarProcessor.java:151)
	... 9 more

[GitHub] metron pull request #1278: METRON-1892 Parser Debugger Should Load Config Fr...

Github user nickwallen closed the pull request at: https://github.com/apache/metron/pull/1278 ---

[GitHub] metron-bro-plugin-kafka issue #21: METRON-1911 Docker setup for testing bro ...

Github user nickwallen commented on the issue: https://github.com/apache/metron-bro-plugin-kafka/pull/21

I ran this up, but seemed to get a couple of errors.

```
./scripts/docker_run_bro_container.sh: line 152: DOCKER_CMD_BASE: command not found
```

```
./scripts/docker_run_bro_container.sh: line 162: metron-bro-docker-container:latest: command not found
```

This is the command that I ran along with what I saw when the errors popped up.

```
$ ./scripts/download_sample_pcaps.sh --data-path=~/tmp/data && ./example_script.sh --leave-running --data-path=/Users/me/tmp/pcap_data && ./scripts/docker_execute_process_data_dir.sh && ./scripts/docker_run_consume_bro_kafka.sh
...
make[1]: Leaving directory `/root/bro-2.5.5/librdkafka-0.11.5/src-cpp'
Removing intermediate container c4b01dee8e27
 ---> 4301bf29af13
Step 23/23 : WORKDIR /root
 ---> Running in 3fac0790cfdc
Removing intermediate container 3fac0790cfdc
 ---> 02b3e630af02
Successfully built 02b3e630af02
Successfully tagged metron-bro-docker-container:latest
Running docker_run_bro_container with
CONTAINER_NAME = bro
NETWORK_NAME = bro-network
SCRIPT_PATH =
LOG_PATH = /Users/nallen/tmp/metron-bro-plugin-kafka-pr21/docker/logs
DATA_PATH = /Users/me/tmp/pcap_data
DOCKER_PARAMETERS =
===
Log will be found on host at /Users/nallen/tmp/metron-bro-plugin-kafka-pr21/docker/logs/bro-test-Fri_Dec__7_16:51:06_EST_2018.log
./scripts/docker_run_bro_container.sh: line 143: DOCKER_CMD_BASE: command not found
./scripts/docker_run_bro_container.sh: line 144: DOCKER_CMD_BASE: command not found
./scripts/docker_run_bro_container.sh: line 145: DOCKER_CMD_BASE: command not found
./scripts/docker_run_bro_container.sh: line 146: DOCKER_CMD_BASE: command not found
./scripts/docker_run_bro_container.sh: line 147: DOCKER_CMD_BASE: command not found
./scripts/docker_run_bro_container.sh: line 152: DOCKER_CMD_BASE: command not found
===Running Docker===
eval command is: bro bash
./scripts/docker_run_bro_container.sh: line 162: metron-bro-docker-container:latest: command not found
Running stop_container with
CONTAINER_NAME= kafka
===
kafka
kafka
Running stop_container with
CONTAINER_NAME= zookeeper
===
zookeeper
zookeeper
Running destroy_docker_network with
NETWORK_NAME = bro-network
===
bro-network
```

And I see no containers running.

```
$ docker ps
CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES
```

Docker Engine 18.09.0

---

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r239855809

--- Diff: metron-platform/metron-parsers/src/test/java/org/apache/metron/parsers/regex/RegularExpressionsParserTest.java ---
@@ -0,0 +1,152 @@
+/**
+ * Licensed to the Apache Software Foundation (ASF) under one or more contributor license
+ * agreements. See the NOTICE file distributed with this work for additional information regarding
+ * copyright ownership. The ASF licenses this file to you under the Apache License, Version 2.0 (the
+ * "License"); you may not use this file except in compliance with the License. You may obtain a
+ * copy of the License at
+ *
+ * http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software distributed under the License
+ * is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express
+ * or implied. See the License for the specific language governing permissions and limitations under
+ * the License.
+ */
+package org.apache.metron.parsers.regex;
+
+import org.json.simple.JSONObject;
+import org.json.simple.parser.JSONParser;
+import org.junit.Before;
+import org.junit.Test;
+
+import java.nio.file.Files;
+import java.nio.file.Paths;
+import java.util.HashMap;
+import java.util.List;
+import java.util.Map;
+
+import static org.junit.Assert.assertTrue;
+
+public class RegularExpressionsParserTest {
+
+  private RegularExpressionsParser regularExpressionsParser;
+  private JSONObject parserConfig;
+
+  @Before
+  public void setUp() throws Exception {
+    regularExpressionsParser = new RegularExpressionsParser();
+  }
+
+  @Test
+  public void testSSHDParse() throws Exception {
+    String message =
+        "<38>Jun 20 15:01:17 deviceName sshd[11672]: Accepted publickey for prod from 22.22.22.22 port 5 ssh2";
+
+    parserConfig = getJsonConfig(
+        Paths.get("src/test/resources/config/RegularExpressionsParserConfig.json").toString());
--- End diff --

Yes, good point @ottobackwards.

@jagdeepsingh2 - He is referring specifically to the class `Syslog3164ParserIntegrationTest` in that PR. It should be fairly simple to put together with what you already have.

---

[GitHub] metron-bro-plugin-kafka issue #21: METRON-1911 Docker setup for testing bro ...

Github user nickwallen commented on the issue: https://github.com/apache/metron-bro-plugin-kafka/pull/21 Thanks @ottobackwards . I'll give it a run through. ---

[GitHub] metron issue #1289: METRON-1810 Storm Profiler Intermittent Test Failure

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1289 I have run it up in Full Dev successfully. I ran all the tests in `ProfilerIntegrationTest` in a continuous loop on my desktop for maybe 3 or 4 hours yesterday. Doing the same on master fails within 20 minutes or so. I have manually triggered it in Travis 10 times. ---

[GitHub] metron issue #1292: METRON-1925 Provide Verbose View of Profile Results in R...

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1292

> The underlying logic seems like it should be nearly identical. Is there any common functionality that could be pulled out and shared between the two?

All of the heavy lifting was already done by the HBaseProfilerClient. So they already share a common implementation through that. And that is why you don't see a ton of change needed in `PROFILE_GET`.

---

[GitHub] metron issue #1292: METRON-1925 Provide Verbose View of Profile Results in R...

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1292

> Could the return be a full json document, that includes the query parameters?

What do you mean by query parameters?

---

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r239611104

--- Diff:

metron-platform/metron-parsers/src/test/java/org/apache/metron/parsers/regex/RegularExpressionsParserTest.java

---


--- End diff --

I [opened this JIRA](https://issues.apache.org/jira/browse/METRON-1926) to fix the parsing infrastructure. The error message produced should have made it clear that the message failed because it was missing a timestamp, but it does not.

---

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r239608145

--- Diff:

metron-platform/metron-parsers/src/test/java/org/apache/metron/parsers/regex/RegularExpressionsParserTest.java

---


--- End diff --

Hi @jagdeepsingh2 - I was able to get this up and running in a debugger.

Your parser will not parse messages successfully after the changes made in

#1213. You are likely using this on an older version of Metron.

The parser must produce a JSONObject that contains both a `timestamp` and

`original_string` field based on the [validation performed

here.](https://github.com/apache/metron/blob/2ee6cc7e0b448d8d27f56f873e2c15a603c53917/metron-platform/metron-parsers/src/main/java/org/apache/metron/parsers/BasicParser.java#L34-L46)

If you add the timestamp like you mentioned it should work.
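
As a rough illustration of the requirement above, the following sketch checks a parsed message for the two required fields. It is not the actual `BasicParser` code: it uses a plain `java.util.Map` in place of `JSONObject`, and the class and method names (`RequiredFieldsSketch`, `missingFields`) are hypothetical. The timestamp value is an arbitrary placeholder.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class RequiredFieldsSketch {

    /** Returns the names of required fields that are absent from the parsed message. */
    public static List<String> missingFields(Map<String, Object> message) {
        List<String> missing = new ArrayList<>();
        // Field names taken from the review comment: both must be present.
        for (String field : new String[] {"original_string", "timestamp"}) {
            if (!message.containsKey(field)) {
                missing.add(field);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        Map<String, Object> message = new HashMap<>();
        message.put("original_string", "<38>Jun 20 15:01:17 deviceName sshd[11672]: ...");

        // Without a timestamp the message would be rejected.
        System.out.println("missing before: " + missingFields(message));

        // Arbitrary epoch-millis placeholder, only to show the check passing.
        message.put("timestamp", 1529506877000L);
        System.out.println("missing after: " + missingFields(message));
    }
}
```

Reporting which field is missing, rather than only returning a boolean, is the kind of feedback that would have made this failure easier to diagnose.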

---

[GitHub] metron pull request #1289: METRON-1810 Storm Profiler Intermittent Test Fail...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1289#discussion_r239577883

--- Diff: metron-analytics/metron-profiler-common/src/main/java/org/apache/metron/profiler/ProfilePeriod.java ---
@@ -151,6 +152,8 @@ public String toString() {
     return "ProfilePeriod{" +
             "period=" + period +
             ", durationMillis=" + durationMillis +
+            ", startTime=" + Instant.ofEpochMilli(getStartTimeMillis()).toString() +
+            ", endTime=" + Instant.ofEpochMilli(getEndTimeMillis()).toString() +
--- End diff --

I will remove this as it is not necessary.

---

[GitHub] metron pull request #1292: METRON-1925 Provide Verbose View of Profile Resul...

GitHub user nickwallen opened a pull request:

https://github.com/apache/metron/pull/1292

METRON-1925 Provide Verbose View of Profile Results in REPL


[GitHub] metron issue #1288: METRON-1916 Stellar Classpath Function Resolver Should H...

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1288

It was when I was working on #1289. After changing the POM, I found that multiple versions of httpclient were getting pulled in, which broke all the integration tests because a Stellar executor could not load. It would throw the exception that I put in the JIRA.

This is one specific scenario, but I can imagine that classpath issues like this are going to crop up in different environments. It seems like we should have a way to handle this.

---

[GitHub] metron pull request #1289: METRON-1810 Storm Profiler Intermittent Test Fail...

GitHub user nickwallen opened a pull request: https://github.com/apache/metron/pull/1289

METRON-1810 Storm Profiler Intermittent Test Failure

This PR hopefully resolves some of the more frequent, intermittent `ProfilerIntegrationTest` failures.

### Testing

Run the integration tests and they should not fail. I have repeatedly run the integration tests on my laptop and have yet to see a failure. I am also repeatedly triggering Travis CI builds on this branch to see if any failures occur there. I can't prove the problem is solved, but I am hoping this helps.

### Changes

* This change uses Caffeine's CacheWriter in place of the RemovalListener for profile expiration. This is explained more in the next section.
* Removed the ability to define the cache maintenance executor for the `DefaultMessageDistributor`. This was only needed for testing RemovalListeners, which no longer exist.
* I re-jiggered some of the integration tests to provide more information when they do fail. I removed the use of `waitOrTimeout` and replaced it with `assertEventually`. I am also using a different style of assertion; Hamcrest-y. These changes should provide additional information and also be more consistent with the rest of the code base.
* After removing the dependency that provided `waitOrTimeout`, I had to rework some of the project dependencies because multiple versions of the `httpclient` lib were being pulled in. This caused the new REST function in `stellar-common` to blow up when a Stellar execution environment is loaded during the integration test.
* Added additional debug logging for the caches including an estimate of the cache size.

### RemovalListener to CacheWriter

Profiles are designed to expire and flush after a fixed period of time; the TTL. The integration tests rely on some of the profile values being flushed by this TTL mechanism.

The TTL mechanism is driven by cache expiration. There is a cache of "active" profiles. This cache is configured to have values time out after they have not been accessed for a fixed period of time. Once they time out from the active cache, they are placed on an "expired" cache. Messages are not applied to expired profiles, but these expired profiles hang around for a fixed period of time to allow them to be flushed.

Previously, a Caffeine [RemovalListener](https://github.com/ben-manes/caffeine/wiki/Removal#removal-listeners) was used so that when a profile expires from the active cache, it is placed into the expired cache. When the tests fail, it seems that the `RemovalListener` is not notified in a timely fashion, so the profile doesn't make it to the expired cache and so never flushes to be read by the integration test.

In Caffeine, these listeners are notified asynchronously, on a separate thread (via ForkJoinPool.commonPool()), not inline with cache reads or writes. For running tests that depend on RemovalListeners, it is recommended to set the cache maintenance executor to something like `MoreExecutors.sameThreadExecutor()` so that cache maintenance is executed on the main execution thread when `cleanUp` is called. This was done for the unit tests, but was not done for the integration tests.

The Caffeine Wiki recommends using a [CacheWriter](https://github.com/ben-manes/caffeine/wiki/Writer) instead when notification should be performed synchronously, which logically works for this use case.

> Removal listener operations are executed asynchronously using an Executor. The default executor is ForkJoinPool.commonPool() and can be overridden via Caffeine.executor(Executor). When the operation must be performed synchronously with the removal, use [CacheWriter](https://github.com/ben-manes/caffeine/wiki/Writer) instead.

This does not negatively impact production performance because the `ActiveCacheWriter` only does work on a delete, which occurs only rarely when a profile stops receiving messages, not on a write.
In addition, these caches are very read-heavy as in most cases the cache is only written to when a new profile is defined or on topology start-up. ## Pull Request Checklist - [ ] Is there a JIRA ticket associated with this PR? If not one needs to be created at [Metron Jira](https://issues.apache.org/jira/browse/METRON/?selectedTab=com.atlassian.jira.jira-projects-plugin:summary-panel). - [ ] Does your PR title start with METRON- where is the JIRA number you are trying to resolve? Pay particular attention to the hyphen "-" character. - [ ] Has your PR been rebased against the latest commit within the target branch (typically master)? - [ ] Have you included steps to reproduce the behavior or problem that is being changed or addressed? - [ ] Have you included steps or a guide
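The timing problem described in the METRON-1810 pull request above (a removal notification that runs later on another thread versus one that runs inline with the removal) can be illustrated with a small plain-Java sketch. This is not Caffeine's API and not the Metron code; `RemovalNotificationSketch` and its methods are hypothetical stand-ins, and the "deferred" executor merely queues the notification to model asynchronous delivery.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Executor;
import java.util.function.BiConsumer;

// Hypothetical stand-in for a cache with removal notifications; not Caffeine's API.
final class RemovalNotificationSketch {
  private final Map<String, String> active = new HashMap<>();
  private final Executor notifier;
  private final BiConsumer<String, String> onRemoval;

  RemovalNotificationSketch(Executor notifier, BiConsumer<String, String> onRemoval) {
    this.notifier = notifier;
    this.onRemoval = onRemoval;
  }

  void put(String key, String value) {
    active.put(key, value);
  }

  // Removes the entry, then hands the notification to the executor.
  void expire(String key) {
    String value = active.remove(key);
    if (value != null) {
      notifier.execute(() -> onRemoval.accept(key, value));
    }
  }

  // Counts the profiles that reached the "expired" map immediately after expiration.
  static int expiredRightAfterExpiry(Executor notifier) {
    Map<String, String> expired = new HashMap<>();
    RemovalNotificationSketch cache = new RemovalNotificationSketch(notifier, expired::put);
    cache.put("profile-1", "state");
    cache.expire("profile-1");
    return expired.size();
  }

  public static void main(String[] args) {
    List<Runnable> pending = new ArrayList<>();
    Executor deferred = pending::add;     // models asynchronous notification
    Executor sameThread = Runnable::run;  // models synchronous notification
    System.out.println("deferred: " + expiredRightAfterExpiry(deferred));
    System.out.println("same thread: " + expiredRightAfterExpiry(sameThread));
  }
}
```

With the deferred executor the profile has not yet reached the expired map when the caller looks, which mirrors the intermittent failure; with the same-thread executor it is already there.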

[GitHub] metron issue #1269: METRON-1879 Allow Elasticsearch to Auto-Generate the Doc...

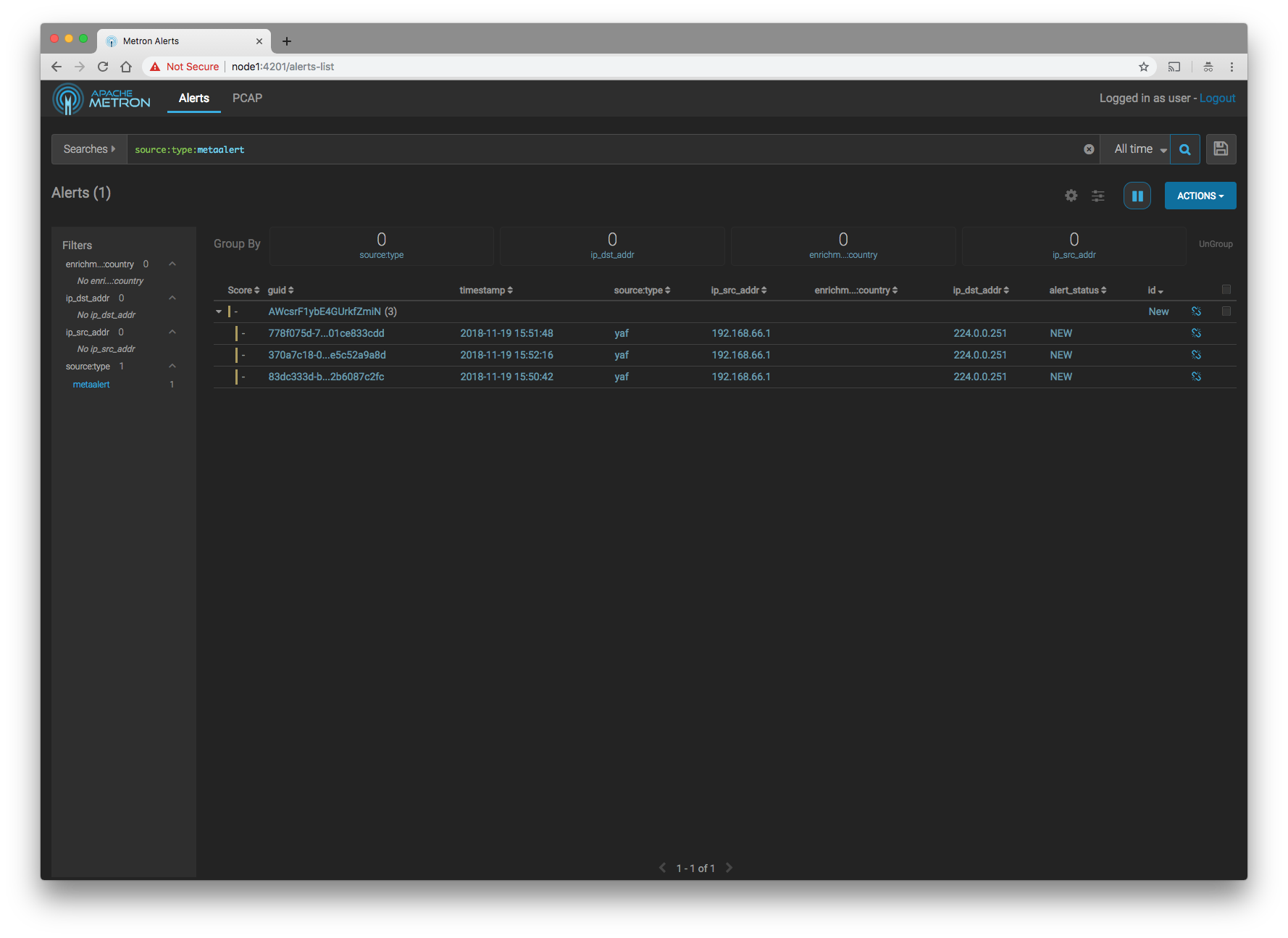

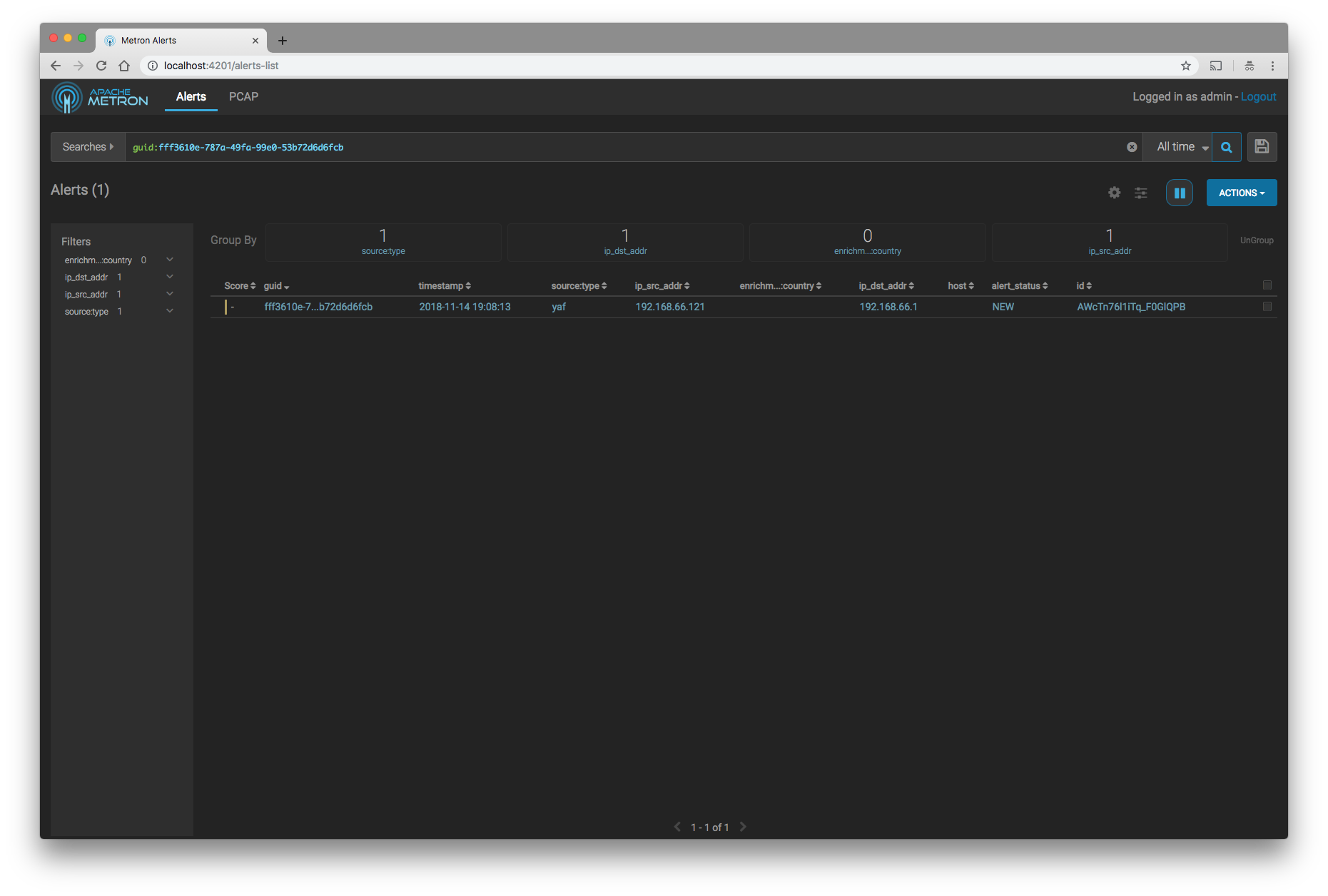

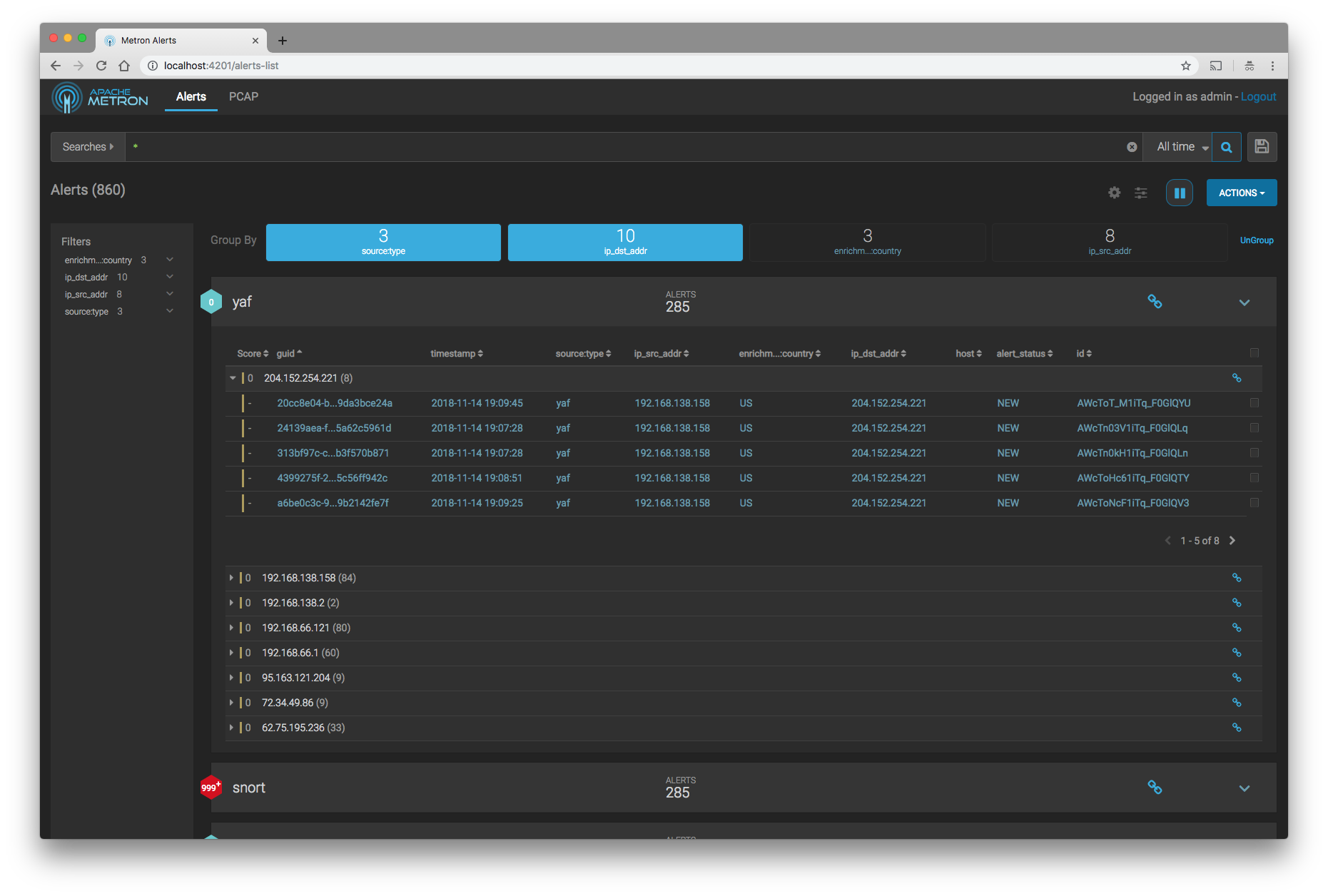

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1269

Prior to this change we used the Metron-generated GUID as the document ID, so the two were always the same. There were various places in the code where the names `guid`, `docID`, or `id` were used that implicitly meant the Metron GUID.

With this change, the Metron-generated GUID will never be equal to the Elasticsearch-generated doc ID. You can view and use either the GUID or the doc ID in the UI. Both are available as separate fields in the index. This PR has pulled on those strings and tried to make clear what is a Metron GUID and what is a document ID.

In the Alerts UI you can see and/or use either the GUID or the document ID. It is up to the user which one they care to see, although most users will not care what the document ID is.

From my PR description:

> This change is backwards compatible. The Alerts UI should continue to work no matter if some of the underlying indices were written with the Metron GUID as the document ID and some are written with the auto-generated document ID.

There is no option to continue to use the GUID as the document ID. I can't think of a use case worthy of the additional effort and testing needed to support that.

It is backwards compatible in that the Alerts UI will work when searching over both "legacy" indices where guid = docID and "new" indices where guid != docID. In all places in the code where a docID is needed, that docID is first retrieved from Elasticsearch, rather than making an assumption about what it is.

---

[GitHub] metron pull request #1254: METRON-1849 Elasticsearch Index Write Functionali...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1254#discussion_r239114286

--- Diff:

metron-platform/metron-elasticsearch/src/main/java/org/apache/metron/elasticsearch/bulk/BulkDocumentWriter.java

---

@@ -0,0 +1,79 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.metron.elasticsearch.bulk;

+

+import org.apache.metron.indexing.dao.update.Document;

+

+import java.util.List;

+

+/**

+ * Writes documents to an index in bulk.

+ *

+ * Partial failures within a batch can be handled individually by registering

+ * a {@link FailureListener}.

+ *

+ * @param The type of document to write.

+ */

+public interface BulkDocumentWriter {

+

+/**

+ * A listener that is notified when a set of documents have been

+ * written successfully.

+ * @param The type of document to write.

+ */

+interface SuccessListener {

--- End diff --

Done. See latest commit.

---

[GitHub] metron pull request #1254: METRON-1849 Elasticsearch Index Write Functionali...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1254#discussion_r239065568

--- Diff:

metron-platform/metron-elasticsearch/src/main/java/org/apache/metron/elasticsearch/dao/ElasticsearchDao.java

---

@@ -196,7 +196,7 @@ public ElasticsearchDao

withRefreshPolicy(WriteRequest.RefreshPolicy refreshPoli

}

protected Optional getIndexName(String guid, String sensorType)

throws IOException {

-return updateDao.getIndexName(guid, sensorType);

+return updateDao.findIndexNameByGUID(guid, sensorType);

--- End diff --

> Also, would we want any parity between the updateDao's find method name vs the ElasticsearchDao's getIndexName method name?

I found the [code here confusing](https://github.com/apache/metron/blob/89a2beda4f07911c8b3cd7dee8a2c3426838d161/metron-platform/metron-elasticsearch/src/main/java/org/apache/metron/elasticsearch/dao/ElasticsearchUpdateDao.java#L195-L197), and it had me stuck on an issue for quite some time. When both use `getIndexName` I have no idea what the logic is doing. It tries one approach, then falls back to another, but since the methods are named the same, it doesn't tell me how they attempt to find the index name in a different way.

With the rename, I feel it improves understanding at a glance of [what this is doing now](https://github.com/apache/metron/blob/260ccc366b79ef53595dbfd097066040444b4eda/metron-platform/metron-elasticsearch/src/main/java/org/apache/metron/elasticsearch/dao/ElasticsearchUpdateDao.java#L179) and of the differences between the primary approach and the fallback.

> Is sensorType not a component to retrieving the index name?

So you prefer the original function name? Or do you prefer `lookupIndexName` or `findIndexNameByGUIDAndSensor`?

---

[GitHub] metron pull request #1254: METRON-1849 Elasticsearch Index Write Functionali...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1254#discussion_r239054925

--- Diff:

metron-platform/metron-elasticsearch/src/test/java/org/apache/metron/elasticsearch/writer/ElasticsearchWriterTest.java

---

@@ -18,170 +18,241 @@

package org.apache.metron.elasticsearch.writer;

-import static org.junit.Assert.assertEquals;

-import static org.mockito.Mockito.mock;

-import static org.mockito.Mockito.when;

+import org.apache.metron.common.Constants;

+import org.apache.metron.common.configuration.writer.WriterConfiguration;

+import org.apache.metron.common.writer.BulkWriterResponse;

+import org.apache.storm.task.TopologyContext;

+import org.apache.storm.tuple.Tuple;

+import org.json.simple.JSONObject;

+import org.junit.Before;

+import org.junit.Test;

-import com.google.common.collect.ImmutableList;

+import java.util.ArrayList;

import java.util.Collection;

import java.util.HashMap;

+import java.util.List;

import java.util.Map;

-import org.apache.metron.common.writer.BulkWriterResponse;

-import org.apache.storm.tuple.Tuple;

-import org.elasticsearch.action.bulk.BulkItemResponse;

-import org.elasticsearch.action.bulk.BulkResponse;

-import org.junit.Test;

+import java.util.UUID;

+

+import static org.junit.Assert.assertEquals;

+import static org.junit.Assert.assertFalse;

+import static org.junit.Assert.assertNotNull;

+import static org.junit.Assert.assertTrue;

+import static org.junit.Assert.fail;

+import static org.mockito.Mockito.mock;

+import static org.mockito.Mockito.when;

public class ElasticsearchWriterTest {

-@Test

-public void testSingleSuccesses() throws Exception {

-Tuple tuple1 = mock(Tuple.class);

-BulkResponse response = mock(BulkResponse.class);

-when(response.hasFailures()).thenReturn(false);

+Map stormConf;

+TopologyContext topologyContext;

+WriterConfiguration writerConfiguration;

-BulkWriterResponse expected = new BulkWriterResponse();

-expected.addSuccess(tuple1);

+@Before

+public void setup() {

+topologyContext = mock(TopologyContext.class);

-ElasticsearchWriter esWriter = new ElasticsearchWriter();

-BulkWriterResponse actual =

esWriter.buildWriteReponse(ImmutableList.of(tuple1), response);

+writerConfiguration = mock(WriterConfiguration.class);

+when(writerConfiguration.getGlobalConfig()).thenReturn(globals());

-assertEquals("Response should have no errors and single success",

expected, actual);

+stormConf = new HashMap();

}

@Test

-public void testMultipleSuccesses() throws Exception {

-Tuple tuple1 = mock(Tuple.class);

-Tuple tuple2 = mock(Tuple.class);

-

-BulkResponse response = mock(BulkResponse.class);

-when(response.hasFailures()).thenReturn(false);

+public void shouldWriteSuccessfully() {

+// create a writer where all writes will be successful

+float probabilityOfSuccess = 1.0F;

+ElasticsearchWriter esWriter = new ElasticsearchWriter();

+esWriter.setDocumentWriter( new

BulkDocumentWriterStub<>(probabilityOfSuccess));

+esWriter.init(stormConf, topologyContext, writerConfiguration);

-BulkWriterResponse expected = new BulkWriterResponse();

-expected.addSuccess(tuple1);

-expected.addSuccess(tuple2);

+// create a tuple and a message associated with that tuple

+List tuples = createTuples(1);

+List messages = createMessages(1);

-ElasticsearchWriter esWriter = new ElasticsearchWriter();

-BulkWriterResponse actual =

esWriter.buildWriteReponse(ImmutableList.of(tuple1, tuple2), response);

+BulkWriterResponse response = esWriter.write("bro",

writerConfiguration, tuples, messages);

-assertEquals("Response should have no errors and two successes",

expected, actual);

+// response should only contain successes

+assertFalse(response.hasErrors());

+assertTrue(response.getSuccesses().contains(tuples.get(0)));

}

@Test

-public void testSingleFailure() throws Exception {

-Tuple tuple1 = mock(Tuple.class);

-

-BulkResponse response = mock(BulkResponse.class);

-when(response.hasFailures()).thenReturn(true);

-

-Exception e = new IllegalStateException();

-BulkItemResponse itemResponse = buildBulkItemFailure(e);

-

when(response.iterator()).thenReturn(ImmutableList.of(itemRespons

[GitHub] metron pull request #1254: METRON-1849 Elasticsearch Index Write Functionali...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1254#discussion_r239054070

--- Diff:

metron-platform/metron-indexing/src/main/java/org/apache/metron/indexing/dao/update/Document.java

---

@@ -89,46 +91,29 @@ public void setGuid(String guid) {

this.guid = guid;

}

- @Override

- public String toString() {

-return "Document{" +

-"timestamp=" + timestamp +

-", document=" + document +

-", guid='" + guid + '\'' +

-", sensorType='" + sensorType + '\'' +

-'}';

- }

-

@Override

public boolean equals(Object o) {

-if (this == o) {

- return true;

-}

-if (o == null || getClass() != o.getClass()) {

- return false;

-}

-

+if (this == o) return true;

+if (!(o instanceof Document)) return false;

Document document1 = (Document) o;

-

-if (timestamp != null ? !timestamp.equals(document1.timestamp) :

document1.timestamp != null) {

- return false;

-}

-if (document != null ? !document.equals(document1.document) :

document1.document != null) {

- return false;

-}

-if (guid != null ? !guid.equals(document1.guid) : document1.guid !=

null) {

- return false;

-}

-return sensorType != null ? sensorType.equals(document1.sensorType)

-: document1.sensorType == null;

+return Objects.equals(timestamp, document1.timestamp) &&

--- End diff --

It is auto-generated by IntelliJ. It changed when, on a previous iteration, I added a field to the Document class. I have since backed that out and went with another approach. So there really is no need for this to change now. I will back this out.
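For reference, the two `equals` styles in the diff above differ mainly in verbosity. A minimal sketch of the `Objects.equals` style follows; `SimpleDocument` is a hypothetical, cut-down class used only for illustration and is not Metron's `Document`.

```java
import java.util.Objects;

// Hypothetical, simplified class; not Metron's Document.
final class SimpleDocument {
  private final Long timestamp;
  private final String guid;
  private final String sensorType;

  SimpleDocument(Long timestamp, String guid, String sensorType) {
    this.timestamp = timestamp;
    this.guid = guid;
    this.sensorType = sensorType;
  }

  @Override
  public boolean equals(Object o) {
    if (this == o) return true;
    if (!(o instanceof SimpleDocument)) return false;
    SimpleDocument other = (SimpleDocument) o;
    // Objects.equals is null-safe, replacing the hand-written ternary null checks.
    return Objects.equals(timestamp, other.timestamp)
        && Objects.equals(guid, other.guid)
        && Objects.equals(sensorType, other.sensorType);
  }

  @Override
  public int hashCode() {
    // Uses the same fields as equals, keeping the two methods consistent.
    return Objects.hash(timestamp, guid, sensorType);
  }

  public static void main(String[] args) {
    SimpleDocument a = new SimpleDocument(1L, "guid-1", null);
    SimpleDocument b = new SimpleDocument(1L, "guid-1", null);
    System.out.println(a.equals(b) && a.hashCode() == b.hashCode());
  }
}
```

One behavioral difference worth noting: an `instanceof` check accepts subclasses, whereas the `getClass()` comparison in the older style does not.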

---

[GitHub] metron issue #1284: METRON-1867 Remove `/api/v1/update/replace` endpoint

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1284

> Is this endpoint superseded by another implementation, or just removed altogether?

The endpoint has been removed completely. It is not being used by anything in Metron currently.

> Any users of the REST API using this directly for any reason? Not sure if we have to deprecate this - i.e. I'm unclear of whether or not we consider the REST API client facing or not, or if it's just middleware to us for the UI.

I just sent out a notice to the dev list to see if anyone has a strong opinion.

---

[GitHub] metron-bro-plugin-kafka issue #20: METRON-1910: bro plugin segfaults on src/...

Github user nickwallen commented on the issue: https://github.com/apache/metron-bro-plugin-kafka/pull/20 No problem @JonZeolla. I can help track it down too when I get some free time. ---

[GitHub] metron pull request #1280: METRON-1869 Unable to Sort an Escalated Meta Aler...

Github user nickwallen closed the pull request at: https://github.com/apache/metron/pull/1280 ---

[GitHub] metron issue #1280: METRON-1869 Unable to Sort an Escalated Meta Alert

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1280 ``` Tests in error: ProfilerIntegrationTest.testEventTime:281 » Timeout ``` Unrelated test failure, which is tracked as METRON-1810 (and I am trying to fix on the side.) Kicking Travis. ---

[GitHub] metron issue #1288: METRON-1916 Stellar Classpath Function Resolver Should H...

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1288

> In my mind we don't have a current state where Stellar is running but not all the functions in the class path are loaded.

The same thing would happen today if an `Exception` is thrown in any of the functions being loaded. If an Exception is thrown, instead of N functions ready-to-rock, we would have N-1 functions. This just expands that behavior to `ClassNotFoundException`, which gets thrown when there is a missing dependency.

> Before we would have crashed starting up. Now we will run and crash get an error later.

I don't see it that way. Let's look at both scenarios.

* If I am NOT using the REST function, then things just work as they should. Yay! I don't want to worry about the missing dependency for a function that I have no knowledge about.
* If I am using the REST function, an exception would still be thrown when the function definition could not be found. So the user still gets an exception when there is a problem.

(Q) Any thoughts on alternative approaches?

I prefer not to have to worry about classpath dependencies (like those required by the REST function) that I am not using. In this example, I was only trying to use the `STATS_*` functions, but it was the REST function that blew things up for me. It is difficult for a user to track this down, because they have no knowledge of the REST function and its dependencies.

(Alternative 1) An alternative approach would be to be more selective about what functions we add to `stellar-common`. Anything added to `stellar-common` forces a required dependency on all Stellar users. If the REST function had been a separate project, then a user could choose to use or not use that project and not be burdened by the additional dependency.

(Alternative 2) Could the `stellar.function.resolver.includes` and `stellar.function.resolver.excludes` be enhanced to allow users to exclude functions they don't want to load?

---
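The "N-1 functions" behavior discussed in the METRON-1916 comment above can be sketched in plain Java. This is only an illustration of the idea, not the Stellar classpath resolver itself; `TolerantResolverSketch` and the missing class name are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch; not the Stellar function resolver.
final class TolerantResolverSketch {

  // Loads every class that can be resolved and skips the ones that cannot.
  static List<Class<?>> loadAvailable(List<String> classNames) {
    List<Class<?>> loaded = new ArrayList<>();
    for (String name : classNames) {
      try {
        loaded.add(Class.forName(name));
      } catch (ClassNotFoundException | LinkageError e) {
        // LinkageError covers NoClassDefFoundError, raised for a missing transitive dependency.
        System.err.println("Skipping " + name + ": " + e);
      }
    }
    return loaded;
  }

  public static void main(String[] args) {
    List<String> candidates = Arrays.asList(
        "java.lang.String",
        "com.example.missing.RestFunction"); // assumed to be absent from the classpath
    System.out.println(loadAvailable(candidates).size() + " of " + candidates.size() + " loaded");
  }
}
```

A caller that later asks for a skipped function still gets an error at lookup time, which matches the second scenario in the comment.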

[GitHub] metron issue #1288: METRON-1916 Stellar Classpath Function Resolver Should H...

Github user nickwallen commented on the issue: https://github.com/apache/metron/pull/1288 There is just one less function available on the close when this happens. This is not some new, unknown state. ---

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r237875330

--- Diff:

metron-platform/metron-parsers/src/test/java/org/apache/metron/parsers/regex/RegularExpressionsParserTest.java

---

@@ -0,0 +1,152 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

contributor license

+ * agreements. See the NOTICE file distributed with this work for

additional information regarding

+ * copyright ownership. The ASF licenses this file to you under the Apache

License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance with the

License. You may obtain a

+ * copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

distributed under the License

+ * is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF

ANY KIND, either express

+ * or implied. See the License for the specific language governing

permissions and limitations under

+ * the License.

+ */

+package org.apache.metron.parsers.regex;

+

+import org.json.simple.JSONObject;

+import org.json.simple.parser.JSONParser;

+import org.junit.Before;

+import org.junit.Test;

+

+import java.nio.file.Files;

+import java.nio.file.Paths;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+

+import static org.junit.Assert.assertTrue;

+

+public class RegularExpressionsParserTest {

+

+ private RegularExpressionsParser regularExpressionsParser;

+ private JSONObject parserConfig;

+

+ @Before

+ public void setUp() throws Exception {

+regularExpressionsParser = new RegularExpressionsParser();

+ }

+

+ @Test

+ public void testSSHDParse() throws Exception {

+String message =

+"<38>Jun 20 15:01:17 deviceName sshd[11672]: Accepted publickey

for prod from 22.22.22.22 port 5 ssh2";

+

+parserConfig = getJsonConfig(

+

Paths.get("src/test/resources/config/RegularExpressionsParserConfig.json").toString());

--- End diff --

If the parser does fail to parse a given message, we need to make sure that the error message kicked out to the error topic has a helpful message, stack trace, etc. Otherwise, it will be impossible for a user to determine why the parser failed to parse the message.

While adding the timestamp is probably a good addition, I don't know that it really solves the problem here. Right now, I don't really know if the problem is in your parser or in the parser infrastructure, but it is something that I want to make sure we track down.

---

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r237870087

--- Diff:

metron-platform/metron-parsers/src/test/resources/config/RegularExpressionsInvalidParserConfig.json

---

@@ -0,0 +1,208 @@

+{

+ "convertCamelCaseToUnderScore": true,

+ "messageHeaderRegex":

"(?(?<=^<)\\d{1,4}(?=>)).*?(?(?<=>)[A-Za-z]{3}\\s{1,2}\\d{1,2}\\s\\d{1,2}:\\d{1,2}:\\d{1,2}(?=\\s)).*?(?(?<=\\s).*?(?=\\s))",

+ "recordTypeRegex":

"(?(?<=\\s)\\b(tch-replicant|audispd|syslog|ntpd|sendmail|pure-ftpd|usermod|useradd|anacron|unix_chkpwd|sudo|dovecot|postfix\\/smtpd|postfix\\/smtp|postfix\\/qmgr|klnagent|systemd|(?i)crond(?-i)|clamd|kesl|sshd|run-parts|automount|suexec|freshclam|kernel|vsftpd|ftpd|su)\\b(?=\\[|:))",

+ "fields": [

+{

+ "recordType": "syslog",

+ "regex":

".*(?(?<=PID\\s=\\s).*?(?=\\sLine)).*(?(?<=64\\s)\/([A-Za-z0-9_-]+\/)+(?=\\w))(?.*?(?=\")).*(?(?<=\").*?(?=$))"

+},

+{

+ "recordType": "pure-ftpd",

+ "regex":

".*(?(?<=\\:\\s\\().*?(?=\\)\\s)).*?(?(?<=\\s\\[).*?(?=\\]\\s)).*?(?(?<=\\]\\s).*?(?=$))"

+},

+{

+ "recordType": "systemd",

+ "regex": [

+

".*(?(?<=\\ssystemd\\:\\s).*?(?=\\d+)).*?(?(?<=\\sSession\\s).*?(?=\\sof)).*?(?(?<=\\suser\\s).*?(?=\\.)).*$",

+

".*(?(?<=\\ssystemd\\:\\s).*?(?=\\sof)).*?(?(?<=\\sof\\s).*?(?=\\.)).*$"

+ ]

+},

+{

+ "recordType": "kesl",

+ "regex": ".*(?(?<=\\:).*?(?=$))"

+},

+{

+ "recordType": "dovecot",

+ "regex": [

+

".*(?(?<=\\sdovecot:\\s).*?(?=\\:)).*?(?(?<=\\:).*?(?=\\:\\suser)).*?(?(?<=user\\=\\<).*?(?=\\>)).*?(?(?<=rip\\=).*?(?=,)).*?(?(?<=lip\\=).*?(?=,)).*?(?(?<=,\\s).*?(?=,)).*?(?(?<=session\\=\\<).*?(?=\\>)).*$",

+

".*(?(?<=\\sdovecot:\\s).*?(?=\\:)).*?(?(?<=\\:).*?(?=\\:\\srip)).*?(?(?<=rip\\=).*?(?=,)).*?(?(?<=lip\\=).*?(?=,)).*?(?(?<=,\\s).*?(?=$))",

+

".*(?(?<=\\sdovecot:\\s).*?(?=\\:)).*?(?(?<=\\:).*?(?=$))"

+ ]

+},

+{

+ "recordType": "postfix/smtpd",

+ "regex": [

+

".*(?(?<=\\[).*?(?=\\])).*?(?(?<=\\:).*?(?=$))",

+

".*(?(?<=\\[).*?(?=\\]:)).*?(?(?<=\\:\\s)disconnect(?=\\sfrom)).*?(?(?<=from).*(?=\\[)).*?(?(?<=\\[).*(?=\\])).*$"

+ ]

+},

+{

+ "recordType": "postfix/smtp",

+ "regex": [

+

".*(?(?<=smtp\\[).*?(?=\\]:)).*(?(?<=to=#\\<).*?(?=>,)).*(?(?<=relay=).*?(?=,)).*(?(?<=delay=).*?(?=,)).*(?(?<=delays=).*?(?=,)).*(?(?<=dsn=).*?(?=,)).*(?(?<=status=).*?(?=\\()).*?(?(?<=connect

to).*?(?=\\[)).*?(?(?<=\\[).*?(?=\\])).*?(?(?<=\\]:).*?(?=:\\s)).*?(?(?<=:\\s).*?(?=$))",

+

".*(?(?<=smtp\\[).*?(?=\\]:)).*?(?(?<=connect

to).*?(?=\\[)).*?(?(?<=\\[).*?(?=\\])).*(?(?<=:).*?(?=\\s)).*(?(?<=\\s).*?(?=$))",

+

".*(?(?<=\\[).*?(?=\\])).*?(?(?<=\\:).*?(?=$))"

+ ]

+},

+{

+ "recordType": "crond",

+ "regex": [

+

".*(?(?<=\\[).*?(?=\\])).*?(?(?<=\\]:\\s\\().*?(?=\\)\\s)).*?(?(?<=CMD\\s\\().*?(?=\\))).*$",

+

".*(?(?<=\\[).*?(?=\\])).*?(?(?<=\\]:\\s\\().*?(?=\\)\\s)).*?(?(?<=\\().*?(?=\\))).*$",

+

".*(?(?<=\\[).*?(?=\\])).*?(?(?<=\\]:\\s\\().*?(?=\\)\\s)).*?(?(?<=CMD\\s\\().*?(?=\\))).*$",

+

".*(?(?<=\\[).*?(?=\\])).*?(?(?<=\\:).*?(?=$))"

+ ]

+},

+{

+ "recordType": "clamd",

+ "regex": [

+

".*(?(?<=\\[).*?(?=\\])).*?(?(?<=\\:\\s).*?(?=\\:)).*?(?(?<=\\:).*?(?=$))",

+

".*(?(?<=\\:\\s).*?(?=\\:)).*?(?(?<=\\:).*?(?=$))"

+ ]

+},

+{

+ "recordType": "run-parts",

+ "regex": ".*(?(?<=\\sparts).*?(?=$))"

+},

+{

+ "recordType": "sshd",

+ "regex": [

+

".*(?(?<=\\[).*?(?=\\])).*?(?(?<=\\]:\\s).*?(?=\\sfor)).*?(?(?<=\\sfor\\s).*?(?=\\sfrom)).*?(?(?<=\\sfrom\\s).*?(?=\\sport)).*?(?(?<=\\sport\\s).*?(?=\\s)).*?(?(?<=port\\s\\d{1,5}\\s).*(?=:\\s)).

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r237869538

--- Diff: metron-platform/metron-parsers/README.md ---

@@ -52,6 +52,62 @@ There are two general types types of parsers:

This is using the default value for `wrapEntityName` if that

property is not set.

* `wrapEntityName` : Sets the name to use when wrapping JSON using

`wrapInEntityArray`. The `jsonpQuery` should reference this name.

* A field called `timestamp` is expected to exist and, if it does not,

then current time is inserted.

+ * Regular Expressions Parser

+ * `recordTypeRegex` : A regular expression to uniquely identify a

record type.

+ * `messageHeaderRegex` : A regular expression used to extract fields

from a message part which is common across all the messages.

+ * `convertCamelCaseToUnderScore` : If this property is set to true,

this parser will automatically convert all the camel case property names to

underscore seperated.

+ For example, following convertions will automatically happen:

+

+ ```

+ ipSrcAddr -> ip_src_addr

+ ipDstAddr -> ip_dst_addr

+ ipSrcPort -> ip_src_port

+ ```

+ Note this property may be necessary, because java does not

support underscores in the named group names. So in case your property naming

conventions requires underscores in property names, use this property.

+

+ * `fields` : A json list of maps contaning a record type to regular

expression mapping.

+

+ A complete configuration example would look like:

+

+ ```json

+ "convertCamelCaseToUnderScore": true,

+ "recordTypeRegex": "kernel|syslog",

+ "messageHeaderRegex":

"((<=^)\\d{1,4}(?=>)).*?((<=>)[A-Za-z]

{3}\\s{1,2}\\d{1,2}\\s\\d{1,2}:\\d{1,2}:\\d{1,2}(?=\\s)).*?((<=\\s).*?(?=\\s))",

--- End diff --

Thanks for the explanation. Can you add these details to the README?
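For readers following the `convertCamelCaseToUnderScore` discussion in the README diff above: Java named capturing groups accept only letters and digits in the group name, so a name such as `ip_src_addr` cannot be used directly. The sketch below shows one way the conversion could work; it is an illustrative assumption, not the parser's actual implementation, and `NamedGroupSketch` and its pattern are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch; not the RegularExpressionsParser implementation.
final class NamedGroupSketch {
  // Group names must be alphanumeric in Java, hence camelCase here.
  private static final Pattern SSHD =
      Pattern.compile("from (?<ipSrcAddr>\\S+) port (?<ipSrcPort>\\d+)");
  private static final String[] GROUPS = {"ipSrcAddr", "ipSrcPort"};

  // Converts ipSrcAddr to ip_src_addr.
  static String camelToUnderscore(String name) {
    return name.replaceAll("([a-z0-9])([A-Z])", "$1_$2").toLowerCase(Locale.ROOT);
  }

  // Extracts the named groups and stores them under underscore-separated keys.
  static Map<String, String> extract(String message) {
    Map<String, String> fields = new LinkedHashMap<>();
    Matcher matcher = SSHD.matcher(message);
    if (matcher.find()) {
      for (String group : GROUPS) {
        fields.put(camelToUnderscore(group), matcher.group(group));
      }
    }
    return fields;
  }

  public static void main(String[] args) {
    System.out.println(extract("Accepted publickey for prod from 22.22.22.22 port 5 ssh2"));
  }
}
```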

---

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r237869285

--- Diff: metron-platform/metron-parsers/README.md ---

@@ -52,6 +52,62 @@ There are two general types types of parsers:

This is using the default value for `wrapEntityName` if that

property is not set.

* `wrapEntityName` : Sets the name to use when wrapping JSON using

`wrapInEntityArray`. The `jsonpQuery` should reference this name.

* A field called `timestamp` is expected to exist and, if it does not,

then current time is inserted.

+ * Regular Expressions Parser

+ * `recordTypeRegex` : A regular expression to uniquely identify a

record type.

+ * `messageHeaderRegex` : A regular expression used to extract fields

from a message part which is common across all the messages.

+ * `convertCamelCaseToUnderScore` : If this property is set to true,

this parser will automatically convert all the camel case property names to

underscore seperated.

+ For example, following convertions will automatically happen:

+

+ ```

+ ipSrcAddr -> ip_src_addr

+ ipDstAddr -> ip_dst_addr

+ ipSrcPort -> ip_src_port

+ ```

+ Note this property may be necessary, because java does not

support underscores in the named group names. So in case your property naming

conventions requires underscores in property names, use this property.

+

+ * `fields` : A json list of maps contaning a record type to regular

expression mapping.

+

+ A complete configuration example would look like:

+

+ ```json

+ "convertCamelCaseToUnderScore": true,

+ "recordTypeRegex": "kernel|syslog",

+ "messageHeaderRegex":

"((<=^)\\d{1,4}(?=>)).*?((<=>)[A-Za-z]

{3}\\s{1,2}\\d{1,2}\\s\\d{1,2}:\\d{1,2}:\\d{1,2}(?=\\s)).*?((<=\\s).*?(?=\\s))",

+ "fields": [

+{

+ "recordType": "kernel",

+ "regex": ".*((<=\\]|\\w\\:).*?(?=$))"

+},

+{

+ "recordType": "syslog",

+ "regex":

".*((<=PID\\s=\\s).*?(?=\\sLine)).*((<=64\\s)\/([A-Za-z0-9_-]+\/)+(?=\\w))

(.*?(?=\")).*((<=\").*?(?=$))"

+}

+ ]

+ ```

+ **Note**: messageHeaderRegex and regex (withing fields) could be

specified as lists also e.g.

+ ```json

+ "messageHeaderRegex": [

+ "regular expression 1",

+ "regular expression 2"

+ ]

+ ```

+ Where **regular expression 1** are valid regular expressions and may

have named

+ groups, which would be extracted into fields. This list will be

evaluated in order until a

+ matching regular expression is found.

+

+ **recordTypeRegex** can be a more advanced regular expression

containing named goups. For example

--- End diff --

Good description. Can you add this advice to the documentation?

---

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r237868794

--- Diff:

metron-platform/metron-parsers/src/test/java/org/apache/metron/parsers/regex/RegularExpressionsParserTest.java

---

@@ -0,0 +1,152 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

contributor license

+ * agreements. See the NOTICE file distributed with this work for

additional information regarding

+ * copyright ownership. The ASF licenses this file to you under the Apache

License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance with the

License. You may obtain a

+ * copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

distributed under the License

+ * is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF

ANY KIND, either express

+ * or implied. See the License for the specific language governing

permissions and limitations under

+ * the License.

+ */

+package org.apache.metron.parsers.regex;

+

+import org.json.simple.JSONObject;

+import org.json.simple.parser.JSONParser;

+import org.junit.Before;

+import org.junit.Test;

+

+import java.nio.file.Files;

+import java.nio.file.Paths;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+

+import static org.junit.Assert.assertTrue;

+

+public class RegularExpressionsParserTest {

+

+ private RegularExpressionsParser regularExpressionsParser;

+ private JSONObject parserConfig;

+

+ @Before

+ public void setUp() throws Exception {

+regularExpressionsParser = new RegularExpressionsParser();

+ }

+

+ @Test

+ public void testSSHDParse() throws Exception {

+String message =

+"<38>Jun 20 15:01:17 deviceName sshd[11672]: Accepted publickey

for prod from 22.22.22.22 port 5 ssh2";

+

+parserConfig = getJsonConfig(

+

Paths.get("src/test/resources/config/RegularExpressionsParserConfig.json").toString());

+regularExpressionsParser.configure(parserConfig);

+JSONObject parsed = parse(message);

+// Expected

+Map expectedJson = new HashMap<>();

+expectedJson.put("device_name", "deviceName");

+expectedJson.put("dst_process_name", "sshd");

+expectedJson.put("dst_process_id", "11672");

+expectedJson.put("dst_user_id", "prod");

+expectedJson.put("ip_src_addr", "22.22.22.22");

+expectedJson.put("ip_src_port", "5");

+expectedJson.put("app_protocol", "ssh2");

+assertTrue(validate(expectedJson, parsed));

+

+ }

+

+ @Test

+ public void testNoMessageHeaderRegex() throws Exception {

+String message =

+"<38>Jun 20 15:01:17 deviceName sshd[11672]: Accepted publickey

for prod from 22.22.22.22 port 5 ssh2";

+parserConfig = getJsonConfig(

+

Paths.get("src/test/resources/config/RegularExpressionsNoMessageHeaderParserConfig.json")

+.toString());

+regularExpressionsParser.configure(parserConfig);

+JSONObject parsed = parse(message);

+// Expected

+Map expectedJson = new HashMap<>();

+expectedJson.put("dst_process_name", "sshd");

+expectedJson.put("dst_process_id", "11672");

+expectedJson.put("dst_user_id", "prod");

+expectedJson.put("ip_src_addr", "22.22.22.22");

+expectedJson.put("ip_src_port", "5");

+expectedJson.put("app_protocol", "ssh2");

+assertTrue(validate(expectedJson, parsed));

--- End diff --

> Junit best practices state that maximum one assertion per test case.

I have never heard that, nor ever, ever followed that. :) I think every test in Metron has multiple assertions, which are necessary.

I think best practice may be to test one "thing" at a time, but you may require multiple assertions when testing that one "thing".

I think it is much simpler the way I suggested, but we could probably spend the time on other more important things.
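To illustrate the point about one "thing" per test, here is a minimal sketch of a single test that checks one behavior (parsing an sshd login line) with several assertions, one per extracted field. The `SshdParseCheck` class, its `parse` method, and the regular expression are hypothetical stand-ins written for this example, not Metron's `RegularExpressionsParser`. The local `assertEquals` helper only mimics JUnit's method of the same name so that the sketch runs without JUnit on the classpath.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class SshdParseCheck {

    // Hypothetical pattern for this sketch only; not taken from the Metron parser config.
    private static final Pattern SSHD = Pattern.compile(
        "(?<process>\\w+)\\[(?<pid>\\d+)\\]: Accepted publickey for (?<user>\\S+) "
            + "from (?<ip>\\S+) port (?<port>\\d+) (?<proto>\\S+)");

    // Hypothetical stand-in for the parser under test.
    public static Map<String, String> parse(String message) {
        Map<String, String> out = new LinkedHashMap<>();
        Matcher m = SSHD.matcher(message);
        if (m.find()) {
            out.put("dst_process_name", m.group("process"));
            out.put("dst_process_id", m.group("pid"));
            out.put("dst_user_id", m.group("user"));
            out.put("ip_src_addr", m.group("ip"));
            out.put("ip_src_port", m.group("port"));
            out.put("app_protocol", m.group("proto"));
        }
        return out;
    }

    // Plain-Java substitute for JUnit's assertEquals(expected, actual).
    public static void assertEquals(String expected, String actual) {
        if (!expected.equals(actual)) {
            throw new AssertionError("expected <" + expected + "> but was <" + actual + ">");
        }
    }

    // One test, one "thing", several assertions. A failure names the exact field.
    public static void testSshdParse() {
        Map<String, String> parsed = parse(
            "<38>Jun 20 15:01:17 deviceName sshd[11672]: Accepted publickey "
                + "for prod from 22.22.22.22 port 5 ssh2");
        assertEquals("sshd", parsed.get("dst_process_name"));
        assertEquals("11672", parsed.get("dst_process_id"));
        assertEquals("prod", parsed.get("dst_user_id"));
        assertEquals("22.22.22.22", parsed.get("ip_src_addr"));
        assertEquals("5", parsed.get("ip_src_port"));
        assertEquals("ssh2", parsed.get("app_protocol"));
    }

    public static void main(String[] args) {
        testSshdParse();
    }
}
```

Compared with a single `assertTrue(validate(expected, parsed))`, per-field assertions report which field differed and what value was found, which is arguably the practical benefit of allowing several assertions in one test.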

---

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r237866676

--- Diff:

metron-platform/metron-parsers/src/test/java/org/apache/metron/parsers/regex/RegularExpressionsParserTest.java

---

@@ -0,0 +1,152 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more contributor license

+ * agreements. See the NOTICE file distributed with this work for additional information regarding

+ * copyright ownership. The ASF licenses this file to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance with the License. You may obtain a

+ * copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software distributed under the License

+ * is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express

+ * or implied. See the License for the specific language governing permissions and limitations under

+ * the License.

+ */

+package org.apache.metron.parsers.regex;

+

+import org.json.simple.JSONObject;

+import org.json.simple.parser.JSONParser;

+import org.junit.Before;

+import org.junit.Test;

+

+import java.nio.file.Files;

+import java.nio.file.Paths;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+

+import static org.junit.Assert.assertTrue;

+

+public class RegularExpressionsParserTest {

+

+ private RegularExpressionsParser regularExpressionsParser;

+ private JSONObject parserConfig;

+

+ @Before

+ public void setUp() throws Exception {

+regularExpressionsParser = new RegularExpressionsParser();

+ }

+

+ @Test

+ public void testSSHDParse() throws Exception {

+String message =

+"<38>Jun 20 15:01:17 deviceName sshd[11672]: Accepted publickey for prod from 22.22.22.22 port 5 ssh2";

+

+parserConfig = getJsonConfig(

+Paths.get("src/test/resources/config/RegularExpressionsParserConfig.json").toString());

--- End diff --

Thanks @jagdeepsingh2. I will try to debug a little further myself too. I want to make sure there are no incompatibilities between your parser and the newer changes introduced by the `ParserRunner`. Glad there isn't something obviously stupid that I am doing. :)

---

[GitHub] metron pull request #1269: METRON-1879 Allow Elasticsearch to Auto-Generate ...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1269#discussion_r237852978

--- Diff:

metron-platform/metron-elasticsearch/src/main/java/org/apache/metron/elasticsearch/writer/ElasticsearchWriter.java

---

@@ -56,90 +58,107 @@

*/

private transient ElasticsearchClient client;

+ /**

+ * Responsible for writing documents.

+ *

+ * Uses a {@link TupleBasedDocument} to maintain the relationship between

+ * a {@link Tuple} and the document created from the contents of that tuple. If

+ * a document cannot be written, the associated tuple needs to be failed.

+ */

+ private transient BulkDocumentWriter documentWriter;

+

/**

* A simple data formatter used to build the appropriate Elasticsearch index name.

*/

private SimpleDateFormat dateFormat;

-

@Override

public void init(Map stormConf, TopologyContext topologyContext, WriterConfiguration configurations) {

-

Map globalConfiguration = configurations.getGlobalConfig();

-client = ElasticsearchClientFactory.create(globalConfiguration);

dateFormat = ElasticsearchUtils.getIndexFormat(globalConfiguration);

+

+// only create the document writer, if one does not already exist. useful for testing.

+if(documentWriter == null) {

+ client = ElasticsearchClientFactory.create(globalConfiguration);

+ documentWriter = new ElasticsearchBulkDocumentWriter<>(client);

+}

}

@Override

- public BulkWriterResponse write(String sensorType, WriterConfiguration configurations, Iterable tuples, List messages) throws Exception {

+ public BulkWriterResponse write(String sensorType,

+ WriterConfiguration configurations,

+ Iterable tuplesIter,

+ List messages) {

// fetch the field name converter for this sensor type

FieldNameConverter fieldNameConverter = FieldNameConverters.create(sensorType, configurations);

+String indexPostfix = dateFormat.format(new Date());

+String indexName = ElasticsearchUtils.getIndexName(sensorType, indexPostfix, configurations);

+

+// the number of tuples must match the number of messages

+List tuples = Lists.newArrayList(tuplesIter);

+int batchSize = tuples.size();

+if(messages.size() != batchSize) {

+ throw new IllegalStateException(format("Expect same number of tuples and messages; |tuples|=%d, |messages|=%d",

+ tuples.size(), messages.size()));

+}

-final String indexPostfix = dateFormat.format(new Date());

-BulkRequest bulkRequest = new BulkRequest();

-for(JSONObject message: messages) {

+// create a document from each message

+List documents = new ArrayList<>();

+for(int i=0; i

[GitHub] metron pull request #1245: METRON-1795: Initial Commit for Regular Expressio...

Github user nickwallen commented on a diff in the pull request:

https://github.com/apache/metron/pull/1245#discussion_r237651771

--- Diff:

metron-platform/metron-parsers/src/test/resources/config/RegularExpressionsInvalidParserConfig.json

---

@@ -0,0 +1,208 @@

+{

+ "convertCamelCaseToUnderScore": true,

+ "messageHeaderRegex":

"(?(?<=^<)\\d{1,4}(?=>)).*?(?(?<=>)[A-Za-z]{3}\\s{1,2}\\d{1,2}\\s\\d{1,2}:\\d{1,2}:\\d{1,2}(?=\\s)).*?(?(?<=\\s).*?(?=\\s))",

+ "recordTypeRegex":