[jira] [Updated] (KAFKA-12889) log clean group consider empty log segment to avoid empty log left

[ https://issues.apache.org/jira/browse/KAFKA-12889?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

qiang Liu updated KAFKA-12889:
--
Affects Version/s: 3.1.0 2.8.0

> log clean group consider empty log segment to avoid empty log left
> --
>
> Key: KAFKA-12889
> URL: https://issues.apache.org/jira/browse/KAFKA-12889
> Project: Kafka
> Issue Type: Bug
> Components: log cleaner
> Affects Versions: 0.10.1.1, 2.8.0, 3.1.0
> Reporter: qiang Liu
> Priority: Trivial
>
> To avoid overflowing the 4-byte relative offsets in the log index, the log cleaner checks segment offsets when it groups log segments, to make sure a group's offset range does not exceed Int.MaxValue.
> This offset check currently does not consider whether the next log segment is empty, so an empty log segment is left behind roughly every 2^31 messages.
> The leftover empty logs are reprocessed in every cleaning cycle, which rewrites them with the same empty content and causes a small amount of unnecessary I/O.
> For the __consumer_offsets topic, we can normally set cleanup.policy to compact,delete to get rid of this.
> My cluster runs 0.10.1.1, but from analyzing the trunk code, trunk should have the same problem too.
>
> Some of my leftover empty logs (output of ls -l):
> -rw-r- 1 u g 0 Dec 16 2017 .index
> -rw-r- 1 u g 0 Dec 16 2017 .log
> -rw-r- 1 u g 0 Dec 16 2017 .timeindex
> -rw-r- 1 u g 0 Jan 15 2018 002148249632.index
> -rw-r- 1 u g 0 Jan 15 2018 002148249632.log
> -rw-r- 1 u g 0 Jan 15 2018 002148249632.timeindex
> -rw-r- 1 u g 0 Jan 27 2018 004295766494.index
> -rw-r- 1 u g 0 Jan 27 2018 004295766494.log
> -rw-r- 1 u g 0 Jan 27 2018 004295766494.timeindex

--
This message was sent by Atlassian Jira
(v8.3.4#803005)
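A minimal sketch of the grouping rule the ticket describes, assuming a heavily simplified segment model. `CleanerSegmentGrouping`, `Segment` and `group` are hypothetical names, not the LogCleaner API. The real cleaner also bounds groups by byte size and index size, which this sketch leaves out. The only point illustrated is that an empty segment holds no records, so it cannot overflow a 4-byte relative offset and can safely join the current group instead of ending up in a group of its own.

```java
import java.util.ArrayList;
import java.util.List;

public class CleanerSegmentGrouping {

    /** Hypothetical, simplified view of a log segment: only what the offset check needs. */
    static final class Segment {
        final long baseOffset;  // first offset the segment may contain
        final long nextOffset;  // base offset of the following segment (exclusive upper bound)
        final long sizeInBytes; // 0 for an empty segment

        Segment(long baseOffset, long nextOffset, long sizeInBytes) {
            this.baseOffset = baseOffset;
            this.nextOffset = nextOffset;
            this.sizeInBytes = sizeInBytes;
        }
    }

    /**
     * Groups consecutive segments so that every offset in a group, taken relative to the
     * group's first base offset, fits in an int. An empty segment is exempt from the
     * range check because it contributes no relative offsets at all.
     */
    static List<List<Segment>> group(List<Segment> segments) {
        List<List<Segment>> groups = new ArrayList<>();
        int i = 0;
        while (i < segments.size()) {
            Segment first = segments.get(i++);
            List<Segment> current = new ArrayList<>();
            current.add(first);
            while (i < segments.size()) {
                Segment next = segments.get(i);
                boolean isEmpty = next.sizeInBytes == 0;
                boolean rangeFits = (next.nextOffset - 1) - first.baseOffset <= Integer.MAX_VALUE;
                if (!isEmpty && !rangeFits) {
                    break; // this segment starts a new group
                }
                current.add(next);
                i++;
            }
            groups.add(current);
        }
        return groups;
    }

    public static void main(String[] args) {
        List<Segment> segments = List.of(
            new Segment(0L, 1_000L, 4_096L),
            new Segment(1_000L, 3_000_000_000L, 0L),               // empty segment
            new Segment(3_000_000_000L, 3_000_000_100L, 4_096L));
        List<List<Segment>> groups = group(segments);
        System.out.println(groups.size() + " groups, first has " + groups.get(0).size() + " segments");
    }
}
```

If the `isEmpty` exemption is removed, the empty middle segment fails the range check against the first group and then blocks the third segment as well, so it ends up alone in its own group. That is the leftover-empty-segment situation the ticket reports.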

[jira] [Commented] (KAFKA-12935) Flaky Test RestoreIntegrationTest.shouldRecycleStateFromStandbyTaskPromotedToActiveTaskAndNotRestore

[ https://issues.apache.org/jira/browse/KAFKA-12935?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361374#comment-17361374 ]

A. Sophie Blee-Goldman commented on KAFKA-12935:

Filed https://issues.apache.org/jira/browse/KAFKA-12936

> Flaky Test RestoreIntegrationTest.shouldRecycleStateFromStandbyTaskPromotedToActiveTaskAndNotRestore
>
> Key: KAFKA-12935
> URL: https://issues.apache.org/jira/browse/KAFKA-12935
> Project: Kafka
> Issue Type: Test
> Components: streams, unit tests
> Reporter: Matthias J. Sax
> Priority: Critical
> Labels: flaky-test
>
> {quote}java.lang.AssertionError: Expected: <0L> but: was <5005L>
> at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:20)
> at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:6)
> at org.apache.kafka.streams.integration.RestoreIntegrationTest.shouldRecycleStateFromStandbyTaskPromotedToActiveTaskAndNotRestore(RestoreIntegrationTest.java:374)
> {quote}


[jira] [Created] (KAFKA-12936) In-memory stores are always restored from scratch after dropping out of the group

A. Sophie Blee-Goldman created KAFKA-12936:
--
Summary: In-memory stores are always restored from scratch after dropping out of the group
Key: KAFKA-12936
URL: https://issues.apache.org/jira/browse/KAFKA-12936
Project: Kafka
Issue Type: Bug
Components: streams
Reporter: A. Sophie Blee-Goldman

Whenever an in-memory store is closed, the actual store contents are garbage collected, and the state will need to be restored from scratch if the task is reassigned and re-initialized. We introduced the recycling feature to prevent this from occurring when a task is transitioned from standby to active (or vice versa), but it's still possible for the in-memory state to be unnecessarily wiped out when the member has dropped out of the group. In this case, the onPartitionsLost callback is invoked, which will close all active tasks as dirty before the member rejoins the group. This means that all these tasks will need to be restored from scratch if they are reassigned back to this consumer.
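To make the difference concrete, here is a toy model, not Kafka Streams code, in which an in-memory task's state lives only on the heap. `InMemoryRestoreModel`, `InMemoryTask` and all of its methods are hypothetical. Recycling is modeled as handing the same object to the new task. Closing, as happens when onPartitionsLost closes the task dirty, discards the contents, so the whole changelog would have to be replayed. A persistent store would keep its data on disk across the close, which is the asymmetry the ticket points out.

```java
import java.util.HashMap;
import java.util.Map;

public class InMemoryRestoreModel {

    /** Hypothetical stand-in for a task whose state store lives only on the heap. */
    static final class InMemoryTask {
        private final Map<String, String> store = new HashMap<>();
        private long restoredUpTo = 0L; // changelog offset the heap contents reflect (exclusive)

        /** Applies one changelog record to the in-memory store. */
        void apply(long changelogOffset, String key, String value) {
            store.put(key, value);
            restoredUpTo = changelogOffset + 1;
        }

        /** Models recycling (standby to active): the same heap contents are reused. */
        InMemoryTask recycle() {
            return this;
        }

        /** Models closing the store: the heap contents are dropped. */
        void close() {
            store.clear();
            restoredUpTo = 0L;
        }

        /** Number of changelog records to replay before the task is caught up. */
        long recordsToRestore(long changelogEndOffset) {
            return changelogEndOffset - restoredUpTo;
        }
    }

    public static void main(String[] args) {
        long changelogEnd = 1_000L;
        InMemoryTask standby = new InMemoryTask();
        for (long offset = 0; offset < changelogEnd; offset++) {
            standby.apply(offset, "key-" + (offset % 10), "value-" + offset);
        }

        InMemoryTask active = standby.recycle();
        System.out.println("after recycle: " + active.recordsToRestore(changelogEnd)); // 0

        active.close(); // e.g. the member dropped out of the group
        System.out.println("after close: " + active.recordsToRestore(changelogEnd));   // 1000
    }
}
```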

[jira] [Commented] (KAFKA-12935) Flaky Test RestoreIntegrationTest.shouldRecycleStateFromStandbyTaskPromotedToActiveTaskAndNotRestore

[ https://issues.apache.org/jira/browse/KAFKA-12935?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361367#comment-17361367 ]

A. Sophie Blee-Goldman commented on KAFKA-12935:

Hm, actually, I guess that could be considered a bug in itself, or at least a flaw in the recycling feature: for persistent stores with ALOS, dropping out of the group only causes tasks to be closed dirty; it doesn't force them to be wiped out and restored from the changelog from scratch. But with in-memory stores, simply closing them is akin to physically wiping out the state directory for that task. Avoiding that was the basis for this recycling feature in the first place.

This does kind of suck, but at least it should be a relatively rare event. I'm a bit worried about how much complexity it would introduce to the code to fix this "bug", but I'll at least file a ticket for it now and we can go from there.


[jira] [Commented] (KAFKA-12935) Flaky Test RestoreIntegrationTest.shouldRecycleStateFromStandbyTaskPromotedToActiveTaskAndNotRestore

[ https://issues.apache.org/jira/browse/KAFKA-12935?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361359#comment-17361359 ]

A. Sophie Blee-Goldman commented on KAFKA-12935:

Hm, yeah I think I've seen this fail once or twice before. I did look into it a bit a while back, and just could not figure out whether it was a possible bug or an issue with the test itself. My money's definitely on the latter, but it might be worth taking another look sometime if we have the chance.

If there is a bug that this is uncovering, at least it would not be a correctness bug, only an annoyance in restoring when it's not necessary. I think it's more likely that the test is flaky because, for example, the consumer dropped out of the group and invoked onPartitionsLost, which would close the task as dirty and require restoring from the changelog.


[jira] [Commented] (KAFKA-12851) Flaky Test RaftEventSimulationTest.canMakeProgressIfMajorityIsReachable

[ https://issues.apache.org/jira/browse/KAFKA-12851?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361312#comment-17361312 ]

Matthias J. Sax commented on KAFKA-12851:
-

Failed again.

> Flaky Test RaftEventSimulationTest.canMakeProgressIfMajorityIsReachable
>
> Key: KAFKA-12851
> URL: https://issues.apache.org/jira/browse/KAFKA-12851
> Project: Kafka
> Issue Type: Bug
> Components: core
> Reporter: A. Sophie Blee-Goldman
> Priority: Blocker
> Fix For: 3.0.0
>
> Failed twice on a [PR build|https://ci-builds.apache.org/job/Kafka/job/kafka-pr/job/PR-10755/6/testReport/]
> h3. Stacktrace
> org.opentest4j.AssertionFailedError: expected: but was:
> at org.junit.jupiter.api.AssertionUtils.fail(AssertionUtils.java:55)
> at org.junit.jupiter.api.AssertTrue.assertTrue(AssertTrue.java:40)
> at org.junit.jupiter.api.AssertTrue.assertTrue(AssertTrue.java:35)
> at org.junit.jupiter.api.Assertions.assertTrue(Assertions.java:162)
> at org.apache.kafka.raft.RaftEventSimulationTest.canMakeProgressIfMajorityIsReachable(RaftEventSimulationTest.java:263)

[jira] [Resolved] (KAFKA-12934) Move some controller classes to the metadata package

[ https://issues.apache.org/jira/browse/KAFKA-12934?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Colin McCabe resolved KAFKA-12934.
--
Fix Version/s: 3.0.0
Reviewer: Jason Gustafson
Resolution: Fixed

> Move some controller classes to the metadata package
>
> Key: KAFKA-12934
> URL: https://issues.apache.org/jira/browse/KAFKA-12934
> Project: Kafka
> Issue Type: Improvement
> Components: controller
> Reporter: Colin McCabe
> Assignee: Colin McCabe
> Priority: Minor
> Labels: kip-500
> Fix For: 3.0.0
>
> Move some controller classes to the metadata package so that they can be used with broker snapshots.

[jira] [Created] (KAFKA-12935) Flaky Test RestoreIntegrationTest.shouldRecycleStateFromStandbyTaskPromotedToActiveTaskAndNotRestore

Matthias J. Sax created KAFKA-12935:
---
Summary: Flaky Test RestoreIntegrationTest.shouldRecycleStateFromStandbyTaskPromotedToActiveTaskAndNotRestore
Key: KAFKA-12935
URL: https://issues.apache.org/jira/browse/KAFKA-12935
Project: Kafka
Issue Type: Test
Components: streams, unit tests
Reporter: Matthias J. Sax

{quote}java.lang.AssertionError: Expected: <0L> but: was <5005L>
at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:20)
at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:6)
at org.apache.kafka.streams.integration.RestoreIntegrationTest.shouldRecycleStateFromStandbyTaskPromotedToActiveTaskAndNotRestore(RestoreIntegrationTest.java:374)
{quote}


[GitHub] [kafka] cmccabe opened a new pull request #10865: KAFKA-12934: Move some controller classes to the metadata package

cmccabe opened a new pull request #10865: URL: https://github.com/apache/kafka/pull/10865 Move some controller classes to the metadata package so that they can be used with broker snapshots. Rename ControllerTestUtils to RecordTestUtils. Move PartitionInfo to PartitionRegistration. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (KAFKA-12934) Move some controller classes to the metadata package

Colin McCabe created KAFKA-12934:
Summary: Move some controller classes to the metadata package
Key: KAFKA-12934
URL: https://issues.apache.org/jira/browse/KAFKA-12934
Project: Kafka
Issue Type: Improvement
Reporter: Colin McCabe
Assignee: Colin McCabe

Move some controller classes to the metadata package so that they can be used with broker snapshots.

[jira] [Updated] (KAFKA-12934) Move some controller classes to the metadata package

[ https://issues.apache.org/jira/browse/KAFKA-12934?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Colin McCabe updated KAFKA-12934:
-
Component/s: controller
Labels: kip-500 (was: )

> Move some controller classes to the metadata package
>
> Key: KAFKA-12934
> URL: https://issues.apache.org/jira/browse/KAFKA-12934
> Project: Kafka
> Issue Type: Improvement
> Components: controller
> Reporter: Colin McCabe
> Assignee: Colin McCabe
> Priority: Minor
> Labels: kip-500
>
> Move some controller classes to the metadata package so that they can be used with broker snapshots.

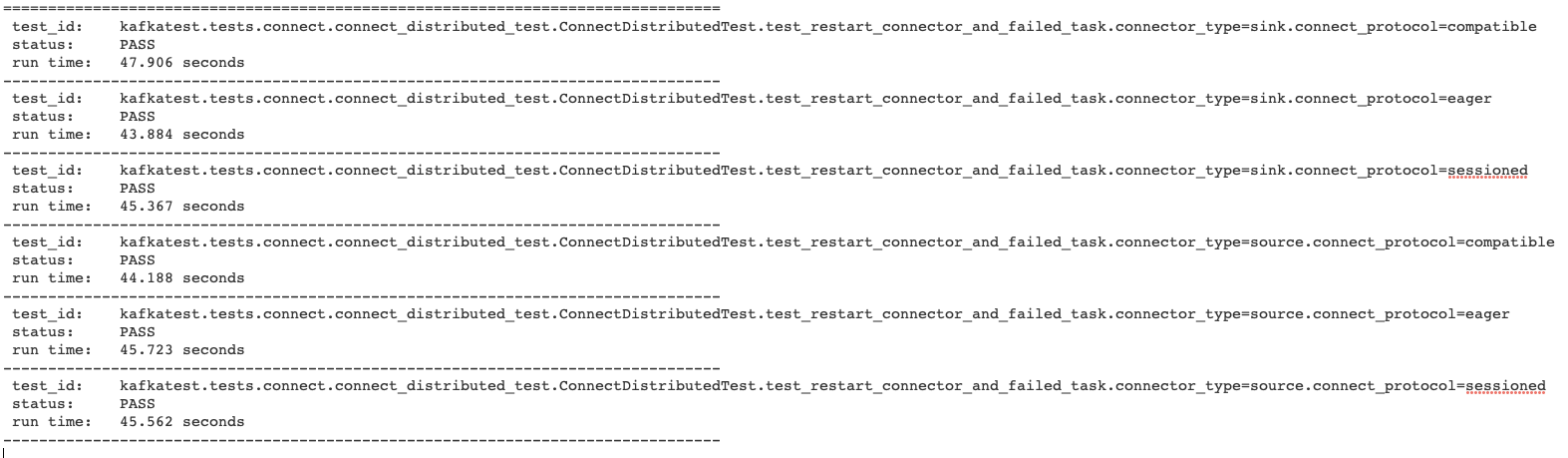

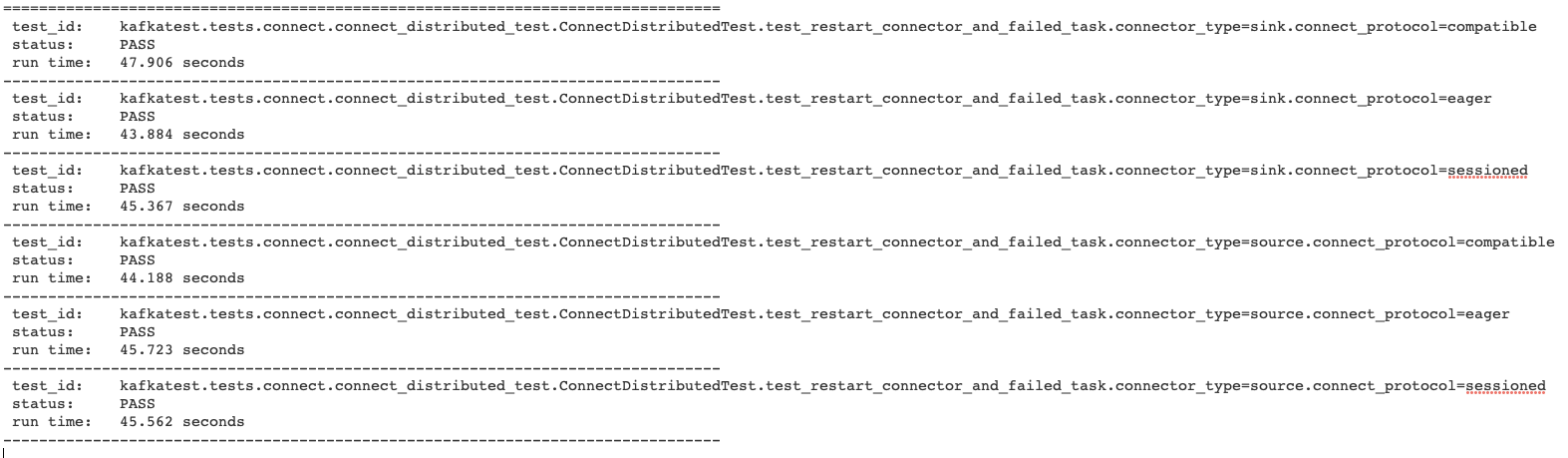

[GitHub] [kafka] kpatelatwork edited a comment on pull request #10822: KAFKA-4793: Connect API to restart connector and tasks (KIP-745)

kpatelatwork edited a comment on pull request #10822: URL: https://github.com/apache/kafka/pull/10822#issuecomment-859064545 Passing system tests results

[GitHub] [kafka] kpatelatwork commented on pull request #10822: KAFKA-4793: Connect API to restart connector and tasks (KIP-745)

kpatelatwork commented on pull request #10822: URL: https://github.com/apache/kafka/pull/10822#issuecomment-859064545 Passing system tests

[GitHub] [kafka] ableegoldman merged pull request #10736: KAFKA-9295: revert session timeout to default value

ableegoldman merged pull request #10736: URL: https://github.com/apache/kafka/pull/10736

[GitHub] [kafka] ableegoldman commented on a change in pull request #10862: KAFKA-12928: Add a check whether the Task's statestore is actually a directory

ableegoldman commented on a change in pull request #10862:

URL: https://github.com/apache/kafka/pull/10862#discussion_r649497973

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StateDirectory.java

##

@@ -312,7 +319,7 @@ private boolean taskDirIsEmpty(final File taskDir) {
*/
File globalStateDir() {
final File dir = new File(stateDir, "global");
-if (hasPersistentStores && !dir.exists() && !dir.mkdir()) {
+if (hasPersistentStores && ((dir.exists() && !dir.isDirectory()) || (!dir.exists() && !dir.mkdir()))) {
throw new ProcessorStateException(
String.format("global state directory [%s] doesn't exist and couldn't be created", dir.getPath()));

Review comment:

Ditto here: please add a separate check and exception.

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StateDirectory.java

##

@@ -126,7 +126,7 @@ public StateDirectory(final StreamsConfig config, final Time time, final boolean
throw new ProcessorStateException(
String.format("base state directory [%s] doesn't exist and couldn't be created", stateDirName));
}
-if (!stateDir.exists() && !stateDir.mkdir()) {
+if ((stateDir.exists() && !stateDir.isDirectory()) || (!stateDir.exists() && !stateDir.mkdir())) {

Review comment:

Please split this up into a separate check for `if (stateDir.exists() && !stateDir.isDirectory())` and then throw an accurate exception, e.g. `state directory could not be created as there is an existing file with the same name`.
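A sketch of the split the review asks for, assuming plain `java.io.File` checks as in the diff. `StateDirChecks` and `ensureDirectory` are hypothetical names, and `IllegalStateException` stands in for Kafka's `ProcessorStateException` so the example runs without Kafka on the classpath.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;

public class StateDirChecks {

    /**
     * Ensures dir exists as a directory. The "a regular file is in the way" case gets
     * its own check and message instead of sharing the "couldn't be created" message.
     */
    static void ensureDirectory(File dir) {
        if (dir.exists() && !dir.isDirectory()) {
            throw new IllegalStateException(String.format(
                "state directory [%s] could not be created as there is an existing file with the same name",
                dir.getPath()));
        }
        if (!dir.exists() && !dir.mkdir()) {
            throw new IllegalStateException(String.format(
                "state directory [%s] doesn't exist and couldn't be created", dir.getPath()));
        }
    }

    public static void main(String[] args) throws IOException {
        File base = Files.createTempDirectory("state-dir-demo").toFile();

        File fresh = new File(base, "fresh");
        ensureDirectory(fresh); // creates the directory
        System.out.println("created: " + fresh.isDirectory());

        File blocked = new File(base, "blocked");
        Files.createFile(blocked.toPath()); // a plain file with the directory's name
        try {
            ensureDirectory(blocked);
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```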

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StateDirectory.java

##

@@ -230,18 +230,25 @@ public UUID initializeProcessId() {
public File getOrCreateDirectoryForTask(final TaskId taskId) {
final File taskParentDir = getTaskDirectoryParentName(taskId);
final File taskDir = new File(taskParentDir, StateManagerUtil.toTaskDirString(taskId));
-if (hasPersistentStores && !taskDir.exists()) {
-synchronized (taskDirCreationLock) {
-// to avoid a race condition, we need to check again if the directory does not exist:
-// otherwise, two threads might pass the outer `if` (and enter the `then` block),
-// one blocks on `synchronized` while the other creates the directory,
-// and the blocking one fails when trying to create it after it's unblocked
-if (!taskParentDir.exists() && !taskParentDir.mkdir()) {
-throw new ProcessorStateException(
+if (hasPersistentStores) {
+if (!taskDir.exists()) {
+synchronized (taskDirCreationLock) {
+// to avoid a race condition, we need to check again if the directory does not exist:
+// otherwise, two threads might pass the outer `if` (and enter the `then` block),
+// one blocks on `synchronized` while the other creates the directory,
+// and the blocking one fails when trying to create it after it's unblocked
+if (!taskParentDir.exists() && !taskParentDir.mkdir()) {
+throw new ProcessorStateException(
String.format("Parent [%s] of task directory [%s] doesn't exist and couldn't be created",
-taskParentDir.getPath(), taskDir.getPath()));
+taskParentDir.getPath(), taskDir.getPath()));
+}
+if (!taskDir.exists() && !taskDir.mkdir()) {
+throw new ProcessorStateException(
+String.format("task directory [%s] doesn't exist and couldn't be created", taskDir.getPath()));
+}
}
-if (!taskDir.exists() && !taskDir.mkdir()) {
+} else {
+if (!taskDir.isDirectory()) {

Review comment:

Same here: this exception message does not apply to the case this is trying to catch.
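The comments in this hunk describe a double-checked pattern. A cheap `exists()` check runs outside the lock. A second check runs inside it, so a thread that lost the race does not fail on `mkdir()`. A sketch of that pattern might look like the following. It also covers the existing-path case with its own message, in line with the review comment. `TaskDirCreation`, `getOrCreateTaskDir` and the lock field are hypothetical names, and `IllegalStateException` again stands in for `ProcessorStateException`.

```java
import java.io.File;
import java.nio.file.Files;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class TaskDirCreation {

    // Hypothetical lock object, playing the role of taskDirCreationLock in the diff.
    private static final Object TASK_DIR_CREATION_LOCK = new Object();

    static File getOrCreateTaskDir(File parent, String taskDirName) {
        File taskDir = new File(parent, taskDirName);
        if (!taskDir.exists()) {
            synchronized (TASK_DIR_CREATION_LOCK) {
                // Re-check under the lock: another thread may have created the
                // directories while this one was waiting to enter.
                if (!parent.exists() && !parent.mkdir()) {
                    throw new IllegalStateException(String.format(
                        "Parent [%s] of task directory [%s] doesn't exist and couldn't be created",
                        parent.getPath(), taskDir.getPath()));
                }
                if (!taskDir.exists() && !taskDir.mkdir()) {
                    throw new IllegalStateException(String.format(
                        "task directory [%s] doesn't exist and couldn't be created", taskDir.getPath()));
                }
            }
        } else if (!taskDir.isDirectory()) {
            // The path exists but is a regular file, so report that case accurately.
            throw new IllegalStateException(String.format(
                "task directory [%s] can't be created as there is an existing file with the same name",
                taskDir.getPath()));
        }
        return taskDir;
    }

    public static void main(String[] args) throws Exception {
        File parent = new File(Files.createTempDirectory("task-dir-demo").toFile(), "tasks");
        List<Throwable> failures = Collections.synchronizedList(new ArrayList<>());
        List<Thread> threads = new ArrayList<>();
        for (int t = 0; t < 8; t++) {
            Thread thread = new Thread(() -> {
                try {
                    getOrCreateTaskDir(parent, "0_0");
                } catch (Throwable e) {
                    failures.add(e);
                }
            });
            threads.add(thread);
            thread.start();
        }
        for (Thread thread : threads) {
            thread.join();
        }
        System.out.println("failures: " + failures.size());
    }
}
```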


[GitHub] [kafka] ableegoldman commented on pull request #9671: KAFKA-10793: move handling of FindCoordinatorFuture to fix race condition

ableegoldman commented on pull request #9671: URL: https://github.com/apache/kafka/pull/9671#issuecomment-859044497 It should be sufficient to upgrade just the consumers; this is a client-side fix only.

[GitHub] [kafka] vvcephei commented on a change in pull request #10856: MINOR: Small optimizations and removal of unused code in Streams

vvcephei commented on a change in pull request #10856:

URL: https://github.com/apache/kafka/pull/10856#discussion_r649509926

##

File path:

streams/src/main/java/org/apache/kafka/streams/StoreQueryParameters.java

##

@@ -25,8 +25,8 @@

*/

public class StoreQueryParameters {

-private Integer partition;

-private boolean staleStores;

+private final Integer partition;

+private final boolean staleStores;

Review comment:

Yeah, it's a limitation of checkstyle, which makes me a bit sad.
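Once `partition` and `staleStores` are `final`, the object cannot change them after construction, so any fluent `with...` method has to return a new instance. A minimal sketch of that immutable style follows. `QueryParams` is a hypothetical class whose method names only imitate the fluent style of `StoreQueryParameters`; they are not its actual signatures.

```java
public final class QueryParams {
    private final String storeName;
    private final Integer partition;   // null means "all partitions"
    private final boolean staleStores; // whether stale (e.g. standby) stores may be queried

    private QueryParams(String storeName, Integer partition, boolean staleStores) {
        this.storeName = storeName;
        this.partition = partition;
        this.staleStores = staleStores;
    }

    public static QueryParams fromName(String storeName) {
        return new QueryParams(storeName, null, false);
    }

    /** Returns a copy with the partition set; the receiver is left unchanged. */
    public QueryParams withPartition(Integer partition) {
        return new QueryParams(storeName, partition, staleStores);
    }

    /** Returns a copy that also allows querying stale stores. */
    public QueryParams enableStaleStores() {
        return new QueryParams(storeName, partition, true);
    }

    public String storeName() { return storeName; }
    public Integer partition() { return partition; }
    public boolean staleStoresEnabled() { return staleStores; }
}
```

For example, `QueryParams.fromName("counts").withPartition(0).enableStaleStores()` builds a new object at each step, and the base `fromName("counts")` instance still reports a `null` partition afterwards.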


[GitHub] [kafka] jlprat commented on pull request #10855: MINOR: clean up unneeded `@SuppressWarnings` on Streams module

jlprat commented on pull request #10855: URL: https://github.com/apache/kafka/pull/10855#issuecomment-859028893 Unless the CI is still broken, of course

[GitHub] [kafka] vvcephei commented on pull request #10850: KAFKA-12924 Replace EasyMock and PowerMock with Mockito in streams (metrics)

vvcephei commented on pull request #10850: URL: https://github.com/apache/kafka/pull/10850#issuecomment-859023848 Oh, right, I meant to say that the core integration tests are broken right now. I've just run the Streams tests on my laptop, and we also have passing tests for the ARM build.

[GitHub] [kafka] vvcephei commented on pull request #10850: KAFKA-12924 Replace EasyMock and PowerMock with Mockito in streams (metrics)

vvcephei commented on pull request #10850: URL: https://github.com/apache/kafka/pull/10850#issuecomment-859022980 Thanks for the updates, @wycc ! Merged.

[GitHub] [kafka] vvcephei merged pull request #10850: KAFKA-12924 Replace EasyMock and PowerMock with Mockito in streams (metrics)

vvcephei merged pull request #10850: URL: https://github.com/apache/kafka/pull/10850

[GitHub] [kafka] vvcephei commented on a change in pull request #10850: KAFKA-12924 Replace EasyMock and PowerMock with Mockito in streams (metrics)

vvcephei commented on a change in pull request #10850:

URL: https://github.com/apache/kafka/pull/10850#discussion_r649499312

##

File path:

streams/src/test/java/org/apache/kafka/streams/internals/metrics/ClientMetricsTest.java

##

@@ -135,45 +124,38 @@ public void shouldGetFailedStreamThreadsSensor() {

false,

description

);

-replay(StreamsMetricsImpl.class, streamsMetrics);

final Sensor sensor =

ClientMetrics.failedStreamThreadSensor(streamsMetrics);

-

-verify(StreamsMetricsImpl.class, streamsMetrics);

Review comment:

I see. I thought it also verified that the mocked methods actually got used.

Looking at the test closer, though, I think that additional verification isn't really needed here.


[GitHub] [kafka] vvcephei merged pull request #9414: KAFKA-10585: Kafka Streams should clean up the state store directory from cleanup

vvcephei merged pull request #9414: URL: https://github.com/apache/kafka/pull/9414

[GitHub] [kafka] jlprat commented on pull request #10855: MINOR: clean up unneeded `@SuppressWarnings` on Streams module

jlprat commented on pull request #10855: URL: https://github.com/apache/kafka/pull/10855#issuecomment-858998167 @mjsax Could you restart the build? Last time it failed with the `1` exit code

[jira] [Commented] (KAFKA-8940) Flaky Test SmokeTestDriverIntegrationTest.shouldWorkWithRebalance

[ https://issues.apache.org/jira/browse/KAFKA-8940?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361198#comment-17361198 ]

Josep Prat commented on KAFKA-8940:
---

Hi [~ableegoldman], I read your comment about a month ago, but as I didn't see the test failing again, I thought it might have been fixed (maybe by some refactoring). But I agree with your judgement. I vote for disabling this test and creating a new ticket to code it properly.

> Flaky Test SmokeTestDriverIntegrationTest.shouldWorkWithRebalance
>
> Key: KAFKA-8940
> URL: https://issues.apache.org/jira/browse/KAFKA-8940
> Project: Kafka
> Issue Type: Bug
> Components: streams, unit tests
> Reporter: Guozhang Wang
> Priority: Major
> Labels: flaky-test, newbie++
>
> The test does not properly account for windowing. See this comment for full details.
> We can patch this test by fixing the timestamps of the input data to avoid crossing over a window boundary, or account for this when verifying the output. Since we have access to the input data, it should be possible to compute whether/when we do cross a window boundary, and adjust the expected output accordingly.
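The ticket suggests computing, from the input data, whether the records cross a window boundary. Assuming tumbling windows aligned to timestamp 0, that check reduces to comparing each record's window start time. `WindowBoundaryCheck` and its methods are hypothetical helpers, not part of the smoke test, and the window size in `main` is arbitrary.

```java
public class WindowBoundaryCheck {

    /** Start of the tumbling window (aligned to timestamp 0) that contains timestampMs. */
    static long windowStart(long timestampMs, long windowSizeMs) {
        return Math.floorDiv(timestampMs, windowSizeMs) * windowSizeMs;
    }

    /** True if the given timestamps fall into more than one tumbling window. */
    static boolean crossesWindowBoundary(long[] timestampsMs, long windowSizeMs) {
        if (timestampsMs.length == 0) {
            return false;
        }
        long firstWindow = windowStart(timestampsMs[0], windowSizeMs);
        for (long ts : timestampsMs) {
            if (windowStart(ts, windowSizeMs) != firstWindow) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        long windowSizeMs = 10_000L;
        System.out.println(crossesWindowBoundary(new long[] {1_000L, 5_000L, 9_999L}, windowSizeMs)); // false
        System.out.println(crossesWindowBoundary(new long[] {9_000L, 10_500L}, windowSizeMs));        // true
    }
}
```

A test could use this helper in either of the ways the ticket mentions. It could shift the generated timestamps until the check returns false, or it could split the expected aggregates at each boundary the check finds.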

[GitHub] [kafka] vvcephei commented on pull request #9414: KAFKA-10585: Kafka Streams should clean up the state store directory from cleanup

vvcephei commented on pull request #9414: URL: https://github.com/apache/kafka/pull/9414#issuecomment-858990902 It looks like the core integration tests have gotten into bad shape. They've been failing on trunk as well. I just ran the Streams integration tests on my machine, and they passed, so I'll go ahead and merge.

[GitHub] [kafka] mumrah commented on a change in pull request #10864: KAFKA-12155 Metadata log and snapshot cleaning

mumrah commented on a change in pull request #10864:

URL: https://github.com/apache/kafka/pull/10864#discussion_r649482956

##

File path: core/src/main/scala/kafka/raft/KafkaMetadataLog.scala

##

@@ -294,19 +298,14 @@ final class KafkaMetadataLog private (

}

}

- override def deleteBeforeSnapshot(logStartSnapshotId: OffsetAndEpoch): Boolean = {
+ override def deleteBeforeSnapshot(deleteBeforeSnapshotId: OffsetAndEpoch): Boolean = {

Review comment:

Since this is no longer used to increase the Log Start Offset, I think this method can go away.


[GitHub] [kafka] mumrah opened a new pull request #10864: KAFKA-12155 Metadata log and snapshot cleaning

mumrah opened a new pull request #10864: URL: https://github.com/apache/kafka/pull/10864 This PR includes changes to KafkaRaftClient and KafkaMetadataLog to support periodic cleaning of old log segments and snapshots. TODO the rest of the description

[jira] [Commented] (KAFKA-8940) Flaky Test SmokeTestDriverIntegrationTest.shouldWorkWithRebalance

[ https://issues.apache.org/jira/browse/KAFKA-8940?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361196#comment-17361196 ]

A. Sophie Blee-Goldman commented on KAFKA-8940:
---

Hey [~mjsax] [~josep.prat] (and others), if you read my last comment on this ticket, it explains exactly why this test is failing. Luckily it has to do only with the test itself, as it's a bug in the assumptions for the generated input. It's just not necessarily a quick fix. Maybe we should @Ignore it for now, and then file a separate ticket to circle back and correct the assumptions in this test.

[jira] [Comment Edited] (KAFKA-12468) Initial offsets are copied from source to target cluster

[ https://issues.apache.org/jira/browse/KAFKA-12468?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361084#comment-17361084 ]

Amber Liu edited comment on KAFKA-12468 at 6/10/21, 7:41 PM:

-

I was using standalone mode with an active-passive setup and saw negative offsets in the past as well. One of the causes I found was a consumer request timeout, e.g. org.apache.kafka.common.errors.DisconnectException. I increased the request timeout and tasks.max, and the offsets are synced correctly now.
{code:java}
# consumer, need to set higher timeout
source.admin.request.timeout.ms = 18
source.consumer.request.timeout.ms = 18{code}

was (Author: aaamber):

I was using standalone mode with an active-passive setup and saw negative offsets in the past as well. One of the causes I found was a consumer request timeout, e.g. org.apache.kafka.common.errors.DisconnectException. I increased the request timeout and tasks.max, and the offsets are synced correctly now.
{code:java}
# consumer, need to set higher timeout
source.admin.request.timeout.ms = 18
source.consumer.session.timeout.ms = 18
source.consumer.request.timeout.ms = 18{code}

> Initial offsets are copied from source to target cluster
>
> Key: KAFKA-12468
> URL: https://issues.apache.org/jira/browse/KAFKA-12468
> Project: Kafka
> Issue Type: Bug
> Components: mirrormaker
> Affects Versions: 2.7.0
> Reporter: Bart De Neuter
> Priority: Major
>
> We have an active-passive setup where the 3 connectors from MirrorMaker 2 (heartbeat, checkpoint and source) are running on a dedicated Kafka Connect cluster on the target cluster.
> Offset syncing is enabled as specified by KIP-545. But when activated, it seems the offsets from the source cluster are initially copied to the target cluster without translation. This causes a negative lag for all synced consumer groups. Only when we reset the offsets for each topic/partition on the target cluster and produce a record on the topic/partition in the source does the sync start working correctly.
> I would expect that the consumer groups are synced but that the current offsets of the source cluster are not copied to the target cluster.

> This is the configuration we are currently using:

> Heartbeat connector

>

> {code:xml}

> {

> "name": "mm2-mirror-heartbeat",

> "config": {

> "name": "mm2-mirror-heartbeat",

> "connector.class":

> "org.apache.kafka.connect.mirror.MirrorHeartbeatConnector",

> "source.cluster.alias": "eventador",

> "target.cluster.alias": "msk",

> "source.cluster.bootstrap.servers": "",

> "target.cluster.bootstrap.servers": "",

> "topics": ".*",

> "groups": ".*",

> "tasks.max": "1",

> "replication.policy.class": "CustomReplicationPolicy",

> "sync.group.offsets.enabled": "true",

> "sync.group.offsets.interval.seconds": "5",

> "emit.checkpoints.enabled": "true",

> "emit.checkpoints.interval.seconds": "30",

> "emit.heartbeats.interval.seconds": "30",

> "key.converter": "org.apache.kafka.connect.converters.ByteArrayConverter",

> "value.converter": "org.apache.kafka.connect.converters.ByteArrayConverter"

> }

> }

> {code}

> Checkpoint connector:

> {code:json}

> {

> "name": "mm2-mirror-checkpoint",

> "config": {

> "name": "mm2-mirror-checkpoint",

> "connector.class": "org.apache.kafka.connect.mirror.MirrorCheckpointConnector",

> "source.cluster.alias": "eventador",

> "target.cluster.alias": "msk",

> "source.cluster.bootstrap.servers": "",

> "target.cluster.bootstrap.servers": "",

> "topics": ".*",

> "groups": ".*",

> "tasks.max": "40",

> "replication.policy.class": "CustomReplicationPolicy",

> "sync.group.offsets.enabled": "true",

> "sync.group.offsets.interval.seconds": "5",

> "emit.checkpoints.enabled": "true",

> "emit.checkpoints.interval.seconds": "30",

> "emit.heartbeats.interval.seconds": "30",

> "key.converter": "org.apache.kafka.connect.converters.ByteArrayConverter",

> "value.converter": "org.apache.kafka.connect.converters.ByteArrayConverter"

> }

> }

> {code}

> Source connector:

> {code:json}

> {

> "name": "mm2-mirror-source",

> "config": {

> "name": "mm2-mirror-source",

> "connector.class": "org.apache.kafka.connect.mirror.MirrorSourceConnector",

> "source.cluster.alias": "eventador",

> "target.cluster.alias": "msk",

> "source.cluster.bootstrap.servers": "",

> "target.cluster.bootstrap.servers": "",

> "topics": ".*",

> "groups": ".*",

> "tasks.max": "40",

> "replication.policy.class": "CustomReplicationPolicy",

> "sync.group.offsets.enabled": "true",

> "sync.group.offsets.interval.seconds": "5",

>
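The negative lag described in KAFKA-12468 follows from committing a raw source-cluster offset on a target partition whose log is shorter. A minimal sketch of the translation idea, assuming a single (upstream, downstream) offset-sync pair; `OffsetTranslationSketch`, `OffsetSync`, `translate` and `lag` are hypothetical names, and MirrorMaker 2's real checkpoint and offset-sync logic may differ in detail:

```java
import java.util.OptionalLong;

public final class OffsetTranslationSketch {

    /** Hypothetical offset sync: an upstream offset and the downstream offset it maps to. */
    record OffsetSync(long upstreamOffset, long downstreamOffset) {}

    /**
     * Translates an upstream consumer-group offset into the target cluster's offset space.
     * Returns empty when no usable sync exists, in which case nothing should be committed.
     */
    static OptionalLong translate(OffsetSync sync, long upstreamGroupOffset) {
        if (sync == null || upstreamGroupOffset < sync.upstreamOffset()) {
            return OptionalLong.empty();
        }
        long step = upstreamGroupOffset - sync.upstreamOffset();
        return OptionalLong.of(sync.downstreamOffset() + step);
    }

    /** Consumer lag on the target cluster: log end offset minus committed offset. */
    static long lag(long logEndOffset, long committedOffset) {
        return logEndOffset - committedOffset;
    }

    public static void main(String[] args) {
        // Source partition is at offset 10_000; the replica on the target holds only 100 records.
        long sourceGroupOffset = 10_000L;
        long targetLogEnd = 100L;

        // Copying the raw source offset yields a negative lag, as in the report.
        System.out.println("raw copy lag = " + lag(targetLogEnd, sourceGroupOffset));

        // With a sync pairing upstream 9_900 with downstream 0, the translated offset is in range.
        OffsetSync sync = new OffsetSync(9_900L, 0L);
        long translated = translate(sync, sourceGroupOffset).orElseThrow();
        System.out.println("translated lag = " + lag(targetLogEnd, translated));
    }
}
```

Running `main` prints a lag of -9900 for the raw copy and 0 after translation.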

[GitHub] [kafka] jolshan commented on a change in pull request #10584: KAFKA-12701: NPE in MetadataRequest when using topic IDs

jolshan commented on a change in pull request #10584:

URL: https://github.com/apache/kafka/pull/10584#discussion_r649472712

##

File path:

clients/src/main/java/org/apache/kafka/common/requests/MetadataRequest.java

##

@@ -92,6 +93,13 @@ public MetadataRequest build(short version) {

if (!data.allowAutoTopicCreation() && version < 4)

throw new UnsupportedVersionException("MetadataRequest versions older than 4 don't support the " +

"allowAutoTopicCreation field");

+if (data.topics() != null) {

Review comment:

I think this one is needed, as we can't remove the parentheses in Java files. I tried to remove all of the ones in the Scala files.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] jolshan commented on a change in pull request #10584: KAFKA-12701: NPE in MetadataRequest when using topic IDs

jolshan commented on a change in pull request #10584:

URL: https://github.com/apache/kafka/pull/10584#discussion_r649460759

##

File path:

clients/src/main/java/org/apache/kafka/common/requests/MetadataRequest.java

##

@@ -92,6 +93,13 @@ public MetadataRequest build(short version) {

if (!data.allowAutoTopicCreation() && version < 4)

throw new UnsupportedVersionException("MetadataRequest versions older than 4 don't support the " +

"allowAutoTopicCreation field");

+if (data.topics() != null) {

Review comment:

will do

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Assigned] (KAFKA-12870) RecordAccumulator stuck in a flushing state

[ https://issues.apache.org/jira/browse/KAFKA-12870?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jason Gustafson reassigned KAFKA-12870: --- Assignee: Jason Gustafson > RecordAccumulator stuck in a flushing state > --- > > Key: KAFKA-12870 > URL: https://issues.apache.org/jira/browse/KAFKA-12870 > Project: Kafka > Issue Type: Bug > Components: producer, streams >Affects Versions: 2.5.1, 2.8.0, 2.7.1, 2.6.2 >Reporter: Niclas Lockner >Assignee: Jason Gustafson >Priority: Major > Fix For: 3.0.0 > > Attachments: RecordAccumulator.log, full.log > > > After a Kafka Streams application with exactly-once enabled has performed its first > commit, the RecordAccumulator within the stream's internal producer gets > stuck in a state where all subsequent ProducerBatches that get allocated are > immediately flushed instead of being held in memory until they expire, > regardless of the stream's linger or batch size config. > This is reproduced in the example code found at > [https://github.com/niclaslockner/kafka-12870] which can be run with > ./gradlew run --args= > The example has a producer that sends 1 record/sec to one topic, and a Kafka > stream with EOS enabled that forwards the records from that topic to another > topic with the configuration linger = 5 sec, commit interval = 10 sec. > > The expected behavior when running the example is that the stream's > ProducerBatches will expire (or get flushed because of the commit) every 5th > second, and that the stream's producer will send a ProduceRequest every 5th > second with an expired ProducerBatch that contains 5 records. > The actual behavior is that the ProducerBatch is made immediately available > for the Sender, and the Sender sends one ProduceRequest for each record. 
> > The example code contains a copy of the RecordAccumulator class (copied from > kafka-clients 2.8.0) with some additional logging added to > * RecordAccumulator#ready(Cluster, long) > * RecordAccumulator#beginFlush() > * RecordAccumulator#awaitFlushCompletion() > These log entries show (see the attached RecordAccumulator.log) > * that the batches are considered sendable because a flush is in progress > * that Sender.maybeSendAndPollTransactionalRequest() calls > RecordAccumulator's beginFlush() without also calling awaitFlushCompletion(), > and that this makes RecordAccumulator's flushesInProgress jump between 1-2 > instead of the expected 0-1. > > This issue is not reproducible in versions 2.3.1 or 2.4.1. > > -- This message was sent by Atlassian Jira (v8.3.4#803005)
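The report attributes the problem to unbalanced `beginFlush()` / `awaitFlushCompletion()` calls. A simplified model of that counter, assuming only that a positive `flushesInProgress` makes batches immediately sendable; `FlushCounterSketch` and its helpers are hypothetical, not the real `RecordAccumulator`:

```java
import java.util.concurrent.atomic.AtomicInteger;

public final class FlushCounterSketch {
    // Counts flushes that have begun but not yet been awaited.
    private final AtomicInteger flushesInProgress = new AtomicInteger(0);

    void beginFlush() {
        flushesInProgress.incrementAndGet();
    }

    void awaitFlushCompletion() {
        // The real method also waits for outstanding batches; this model only decrements.
        flushesInProgress.decrementAndGet();
    }

    /** In this model, any in-progress flush makes every batch sendable right away. */
    boolean batchesSendableImmediately() {
        return flushesInProgress.get() > 0;
    }

    int inProgress() {
        return flushesInProgress.get();
    }

    public static void main(String[] args) {
        FlushCounterSketch balanced = new FlushCounterSketch();
        balanced.beginFlush();
        balanced.awaitFlushCompletion();
        System.out.println("balanced: " + balanced.inProgress()
                + ", sendable now = " + balanced.batchesSendableImmediately());

        FlushCounterSketch leaked = new FlushCounterSketch();
        leaked.beginFlush(); // a flush that is later awaited
        leaked.beginFlush(); // an extra beginFlush() with no matching await
        leaked.awaitFlushCompletion();
        System.out.println("leaked: " + leaked.inProgress()
                + ", sendable now = " + leaked.batchesSendableImmediately());
    }
}
```

The balanced sequence returns to 0, while the unmatched `beginFlush()` leaves the counter at 1, so in this model every later batch is treated as sendable immediately, consistent with the 1-2 instead of 0-1 pattern in the log.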

[GitHub] [kafka] jsancio commented on pull request #10593: KAFKA-10800 Validate the snapshot id when the state machine creates a snapshot

jsancio commented on pull request #10593: URL: https://github.com/apache/kafka/pull/10593#issuecomment-858925958 > @jsancio Can you share more details about the possible concurrency scenario with createSnapshot ? BTW, will moving the validation to onSnapshotFrozen imply that before creating the snapshot, there's no validation? I think maybe we can keep the validation here and add some additional check before freeze() which makes the snapshot visible? @feyman2016, I think it is reasonable to do both. Validate when `createSnapshot` is called and validate again in `onSnapshotFrozen`. In both cases this validation should be optional. Validate if it is created through `RaftClient.createSnapshot`. Don't validate if `KafkaRaftClient` creates the snapshot internally because of a `FetchResponse` from the leader. I have been working on a PR related to this if you want to take a look: https://github.com/apache/kafka/pull/10786. It would be nice to get your PR merged before my PR. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org
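The comment proposes running the same optional check in both `createSnapshot` and `onSnapshotFrozen`, and skipping it when `KafkaRaftClient` builds the snapshot from a leader's `FetchResponse`. A minimal sketch of that shape; the `SnapshotId` record, the `Origin` enum and the "end offset must not exceed the high watermark" rule are assumptions for illustration, not the PR's actual code:

```java
public final class SnapshotValidationSketch {

    /** Hypothetical snapshot id: the offset the snapshot ends at plus an epoch. */
    record SnapshotId(long endOffset, int epoch) {}

    /** Who is creating the snapshot. */
    enum Origin { STATE_MACHINE, FETCH_FROM_LEADER }

    /**
     * Validates only snapshots the state machine creates; snapshots built from a leader's
     * FetchResponse are accepted as-is. The high-watermark rule is an assumed example check.
     * The same method could be called from both createSnapshot and onSnapshotFrozen.
     */
    static void maybeValidate(SnapshotId id, Origin origin, long highWatermark) {
        if (origin == Origin.FETCH_FROM_LEADER) {
            return;
        }
        if (id.endOffset() > highWatermark) {
            throw new IllegalArgumentException("Snapshot end offset " + id.endOffset()
                    + " is past the high watermark " + highWatermark);
        }
    }

    public static void main(String[] args) {
        maybeValidate(new SnapshotId(90L, 3), Origin.STATE_MACHINE, 100L);      // accepted
        maybeValidate(new SnapshotId(150L, 3), Origin.FETCH_FROM_LEADER, 100L); // check skipped
        try {
            maybeValidate(new SnapshotId(150L, 3), Origin.STATE_MACHINE, 100L);
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```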

[jira] [Commented] (KAFKA-9897) Flaky Test StoreQueryIntegrationTest#shouldQuerySpecificActivePartitionStores

[

https://issues.apache.org/jira/browse/KAFKA-9897?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361180#comment-17361180

]

Matthias J. Sax commented on KAFKA-9897:

Different test, but same symptom:

{quote}java.lang.AssertionError: Expected: a string containing "Cannot get

state store source-table because the stream thread is PARTITIONS_ASSIGNED, not

RUNNING" but: was "The state store, source-table, may have migrated to another

instance." at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:20) at

org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:6) at

org.apache.kafka.streams.integration.StoreQueryIntegrationTest.lambda$shouldQueryOnlyActivePartitionStoresByDefault$2(StoreQueryIntegrationTest.java:151)

at

org.apache.kafka.streams.integration.StoreQueryIntegrationTest.until(StoreQueryIntegrationTest.java:420)

at

org.apache.kafka.streams.integration.StoreQueryIntegrationTest.shouldQueryOnlyActivePartitionStoresByDefault(StoreQueryIntegrationTest.java:131){quote}

> Flaky Test StoreQueryIntegrationTest#shouldQuerySpecificActivePartitionStores

> -

>

> Key: KAFKA-9897

> URL: https://issues.apache.org/jira/browse/KAFKA-9897

> Project: Kafka

> Issue Type: Bug

> Components: streams, unit tests

>Affects Versions: 2.6.0

>Reporter: Matthias J. Sax

>Priority: Critical

> Labels: flaky-test

>

> [https://builds.apache.org/job/kafka-pr-jdk14-scala2.13/22/testReport/junit/org.apache.kafka.streams.integration/StoreQueryIntegrationTest/shouldQuerySpecificActivePartitionStores/]

> {quote}org.apache.kafka.streams.errors.InvalidStateStoreException: Cannot get

> state store source-table because the stream thread is PARTITIONS_ASSIGNED,

> not RUNNING at

> org.apache.kafka.streams.state.internals.StreamThreadStateStoreProvider.stores(StreamThreadStateStoreProvider.java:85)

> at

> org.apache.kafka.streams.state.internals.QueryableStoreProvider.getStore(QueryableStoreProvider.java:61)

> at org.apache.kafka.streams.KafkaStreams.store(KafkaStreams.java:1183) at

> org.apache.kafka.streams.integration.StoreQueryIntegrationTest.shouldQuerySpecificActivePartitionStores(StoreQueryIntegrationTest.java:178){quote}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (KAFKA-12377) Flaky Test SaslAuthenticatorTest#testSslClientAuthRequiredForSaslSslListener

[

https://issues.apache.org/jira/browse/KAFKA-12377?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361177#comment-17361177

]

Matthias J. Sax commented on KAFKA-12377:

-

Failed again.

> Flaky Test SaslAuthenticatorTest#testSslClientAuthRequiredForSaslSslListener

>

>

> Key: KAFKA-12377

> URL: https://issues.apache.org/jira/browse/KAFKA-12377

> Project: Kafka

> Issue Type: Test

> Components: core, security, unit tests

>Reporter: Matthias J. Sax

>Priority: Critical

> Labels: flaky-test

>

> {quote}org.opentest4j.AssertionFailedError: expected:

> but was: at

> org.junit.jupiter.api.AssertionUtils.fail(AssertionUtils.java:55) at

> org.junit.jupiter.api.AssertionUtils.failNotEqual(AssertionUtils.java:62) at

> org.junit.jupiter.api.AssertEquals.assertEquals(AssertEquals.java:182) at

> org.junit.jupiter.api.AssertEquals.assertEquals(AssertEquals.java:177) at

> org.junit.jupiter.api.Assertions.assertEquals(Assertions.java:1124) at

> org.apache.kafka.common.network.NetworkTestUtils.waitForChannelClose(NetworkTestUtils.java:111)

> at

> org.apache.kafka.common.security.authenticator.SaslAuthenticatorTest.createAndCheckClientConnectionFailure(SaslAuthenticatorTest.java:2187)

> at

> org.apache.kafka.common.security.authenticator.SaslAuthenticatorTest.createAndCheckSslAuthenticationFailure(SaslAuthenticatorTest.java:2210)

> at

> org.apache.kafka.common.security.authenticator.SaslAuthenticatorTest.verifySslClientAuthForSaslSslListener(SaslAuthenticatorTest.java:1846)

> at

> org.apache.kafka.common.security.authenticator.SaslAuthenticatorTest.testSslClientAuthRequiredForSaslSslListener(SaslAuthenticatorTest.java:1800){quote}

> STDOUT

> {quote}[2021-02-26 07:18:57,220] ERROR Extensions provided in login context

> without a token

> (org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule:318)

> java.io.IOException: Extensions provided in login context without a token at

> org.apache.kafka.common.security.oauthbearer.internals.unsecured.OAuthBearerUnsecuredLoginCallbackHandler.handle(OAuthBearerUnsecuredLoginCallbackHandler.java:165)

> at

> org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule.identifyToken(OAuthBearerLoginModule.java:316)

> at

> org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule.login(OAuthBearerLoginModule.java:301)

> [...]

> Caused by:

> org.apache.kafka.common.security.oauthbearer.internals.unsecured.OAuthBearerConfigException:

> Extensions provided in login context without a token at

> org.apache.kafka.common.security.oauthbearer.internals.unsecured.OAuthBearerUnsecuredLoginCallbackHandler.handleTokenCallback(OAuthBearerUnsecuredLoginCallbackHandler.java:192)

> at

> org.apache.kafka.common.security.oauthbearer.internals.unsecured.OAuthBearerUnsecuredLoginCallbackHandler.handle(OAuthBearerUnsecuredLoginCallbackHandler.java:163)

> ... 116 more{quote}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (KAFKA-8940) Flaky Test SmokeTestDriverIntegrationTest.shouldWorkWithRebalance

[ https://issues.apache.org/jira/browse/KAFKA-8940?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361176#comment-17361176 ] Matthias J. Sax commented on KAFKA-8940: Failed again. > Flaky Test SmokeTestDriverIntegrationTest.shouldWorkWithRebalance > - > > Key: KAFKA-8940 > URL: https://issues.apache.org/jira/browse/KAFKA-8940 > Project: Kafka > Issue Type: Bug > Components: streams, unit tests >Reporter: Guozhang Wang >Priority: Major > Labels: flaky-test, newbie++ > > The test does not properly account for windowing. See this comment for full > details. > We can patch this test by fixing the timestamps of the input data to avoid > crossing over a window boundary, or account for this when verifying the > output. Since we have access to the input data, it should be possible to > compute whether/when we do cross a window boundary, and adjust the expected > output accordingly. -- This message was sent by Atlassian Jira (v8.3.4#803005)
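The issue suggests computing from the input data whether the test crosses a window boundary. A minimal sketch, assuming epoch-aligned tumbling windows of a fixed size; `WindowBoundarySketch` and its methods are hypothetical, and the smoke test's real window configuration may differ:

```java
public final class WindowBoundarySketch {

    /** Start of the epoch-aligned tumbling window that contains the timestamp. */
    static long windowStart(long timestampMs, long windowSizeMs) {
        return timestampMs - Math.floorMod(timestampMs, windowSizeMs);
    }

    /** True if the timestamps do not all fall into the same tumbling window. */
    static boolean crossesBoundary(long[] timestampsMs, long windowSizeMs) {
        if (timestampsMs.length == 0) {
            return false;
        }
        long first = windowStart(timestampsMs[0], windowSizeMs);
        for (long ts : timestampsMs) {
            if (windowStart(ts, windowSizeMs) != first) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        long size = 1_000L;
        System.out.println(crossesBoundary(new long[] {1_000L, 1_500L, 1_999L}, size));
        System.out.println(crossesBoundary(new long[] {1_900L, 2_100L}, size));
    }
}
```

A test could use such a check either to shift its input timestamps or to adjust the expected output when a boundary is crossed.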

[jira] [Commented] (KAFKA-12933) Flaky test ReassignPartitionsIntegrationTest.testReassignmentWithAlterIsrDisabled

[

https://issues.apache.org/jira/browse/KAFKA-12933?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361174#comment-17361174

]

Matthias J. Sax commented on KAFKA-12933:

-

Failed a second time.

> Flaky test

> ReassignPartitionsIntegrationTest.testReassignmentWithAlterIsrDisabled

> -

>

> Key: KAFKA-12933

> URL: https://issues.apache.org/jira/browse/KAFKA-12933

> Project: Kafka

> Issue Type: Test

> Components: admin

>Reporter: Matthias J. Sax

>Priority: Critical

> Labels: flaky-test

>

> {quote}org.opentest4j.AssertionFailedError: expected: but was:

> at org.junit.jupiter.api.AssertionUtils.fail(AssertionUtils.java:55) at

> org.junit.jupiter.api.AssertTrue.assertTrue(AssertTrue.java:40) at

> org.junit.jupiter.api.AssertTrue.assertTrue(AssertTrue.java:35) at

> org.junit.jupiter.api.Assertions.assertTrue(Assertions.java:162) at

> kafka.admin.ReassignPartitionsIntegrationTest.executeAndVerifyReassignment(ReassignPartitionsIntegrationTest.scala:130)

> at

> kafka.admin.ReassignPartitionsIntegrationTest.testReassignmentWithAlterIsrDisabled(ReassignPartitionsIntegrationTest.scala:74){quote}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Created] (KAFKA-12933) Flaky test ReassignPartitionsIntegrationTest.testReassignmentWithAlterIsrDisabled

Matthias J. Sax created KAFKA-12933:

---

Summary: Flaky test

ReassignPartitionsIntegrationTest.testReassignmentWithAlterIsrDisabled

Key: KAFKA-12933

URL: https://issues.apache.org/jira/browse/KAFKA-12933

Project: Kafka

Issue Type: Test

Components: admin

Reporter: Matthias J. Sax

{quote}org.opentest4j.AssertionFailedError: expected: but was:

at org.junit.jupiter.api.AssertionUtils.fail(AssertionUtils.java:55) at

org.junit.jupiter.api.AssertTrue.assertTrue(AssertTrue.java:40) at

org.junit.jupiter.api.AssertTrue.assertTrue(AssertTrue.java:35) at

org.junit.jupiter.api.Assertions.assertTrue(Assertions.java:162) at

kafka.admin.ReassignPartitionsIntegrationTest.executeAndVerifyReassignment(ReassignPartitionsIntegrationTest.scala:130)

at

kafka.admin.ReassignPartitionsIntegrationTest.testReassignmentWithAlterIsrDisabled(ReassignPartitionsIntegrationTest.scala:74){quote}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] ijuma commented on a change in pull request #10584: KAFKA-12701: NPE in MetadataRequest when using topic IDs

ijuma commented on a change in pull request #10584:

URL: https://github.com/apache/kafka/pull/10584#discussion_r649435657

##

File path:

clients/src/main/java/org/apache/kafka/common/requests/MetadataRequest.java

##

@@ -92,6 +93,13 @@ public MetadataRequest build(short version) {

if (!data.allowAutoTopicCreation() && version < 4)

throw new UnsupportedVersionException("MetadataRequest versions older than 4 don't support the " +

"allowAutoTopicCreation field");

+if (data.topics() != null) {

Review comment:

Can we remove the unnecessary `()` from the various files?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] jsancio commented on a change in pull request #10749: KAFKA-12773: Use UncheckedIOException when wrapping IOException

jsancio commented on a change in pull request #10749:

URL: https://github.com/apache/kafka/pull/10749#discussion_r649429301

##

File path: raft/src/main/java/org/apache/kafka/raft/KafkaRaftClient.java

##

@@ -372,27 +371,23 @@ private void maybeFireLeaderChange() {

@Override

public void initialize() {

-try {

-quorum.initialize(new OffsetAndEpoch(log.endOffset().offset, log.lastFetchedEpoch()));

+quorum.initialize(new OffsetAndEpoch(log.endOffset().offset, log.lastFetchedEpoch()));

-long currentTimeMs = time.milliseconds();

-if (quorum.isLeader()) {

-throw new IllegalStateException("Voter cannot initialize as a Leader");

-} else if (quorum.isCandidate()) {

-onBecomeCandidate(currentTimeMs);

-} else if (quorum.isFollower()) {

-onBecomeFollower(currentTimeMs);

-}

+long currentTimeMs = time.milliseconds();

+if (quorum.isLeader()) {

+throw new IllegalStateException("Voter cannot initialize as a Leader");

+} else if (quorum.isCandidate()) {

+onBecomeCandidate(currentTimeMs);

+} else if (quorum.isFollower()) {

+onBecomeFollower(currentTimeMs);

+}

-// When there is only a single voter, become candidate immediately

-if (quorum.isVoter()

-&& quorum.remoteVoters().isEmpty()

-&& !quorum.isCandidate()) {

+// When there is only a single voter, become candidate immediately

+if (quorum.isVoter()

+&& quorum.remoteVoters().isEmpty()

+&& !quorum.isCandidate()) {

-transitionToCandidate(currentTimeMs);

-}

-} catch (IOException e) {

-throw new RuntimeException(e);

+transitionToCandidate(currentTimeMs);

Review comment:

Leaving this note for future readers. My comments above are not accurate; I misread the diff generated by GitHub. When I wrote them, I was under the impression that the old code was handling the `IOException`, in addition to wrapping and re-throwing it.

In fact, the old code only wrapped and re-threw the `IOException`; it did not handle the exception.
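For context on the PR title, a minimal sketch of wrapping a checked `IOException` in `java.io.UncheckedIOException` instead of a bare `RuntimeException`. The failure still propagates (it is wrapped, not handled), and the original exception stays available through `getCause()`. `readEndOffset` is a hypothetical stand-in for a log call:

```java
import java.io.IOException;
import java.io.UncheckedIOException;

public final class UncheckedWrapSketch {

    /** Hypothetical stand-in for a log operation that declares IOException. */
    static long readEndOffset(boolean fail) throws IOException {
        if (fail) {
            throw new IOException("disk error");
        }
        return 42L;
    }

    /** Wraps without handling: callers still see the failure, now as an unchecked exception. */
    static long endOffsetUnchecked(boolean fail) {
        try {
            return readEndOffset(fail);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(endOffsetUnchecked(false));
        try {
            endOffsetUnchecked(true);
        } catch (UncheckedIOException e) {
            System.out.println("cause: " + e.getCause().getMessage());
        }
    }
}
```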

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] jolshan commented on a change in pull request #10584: KAFKA-12701: NPE in MetadataRequest when using topic IDs

jolshan commented on a change in pull request #10584:

URL: https://github.com/apache/kafka/pull/10584#discussion_r649426864

##

File path: core/src/test/scala/unit/kafka/server/MetadataRequestTest.scala

##

@@ -234,6 +235,32 @@ class MetadataRequestTest extends AbstractMetadataRequestTest {

}

}

+ @Test

+ def testInvalidMetadataRequestReturnsError(): Unit = {

Review comment:

Ah wait. I think I figured out a possible way to do this after all. :)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] jolshan commented on a change in pull request #10584: KAFKA-12701: NPE in MetadataRequest when using topic IDs

jolshan commented on a change in pull request #10584:

URL: https://github.com/apache/kafka/pull/10584#discussion_r649425805

##

File path: core/src/test/scala/unit/kafka/server/MetadataRequestTest.scala

##

@@ -234,6 +235,32 @@ class MetadataRequestTest extends AbstractMetadataRequestTest {

}

}

+ @Test

+ def testInvalidMetadataRequestReturnsError(): Unit = {

Review comment:

I must have missed this comment earlier. I moved the test to KafkaApisTest. There we don't check whether the response is built, since that is part of the request/response handling code. From what I saw, the other invalid-request tests were very focused on one thing.

I think the biggest question is whether we care to test that the null name is correctly set to the empty string. I agree it is good not to start and stop another Kafka cluster.
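The hunk under review adds an `if (data.topics() != null)` guard. A minimal, dependency-free sketch of that kind of null-guarded validation, plus the null-name-to-empty-string normalization discussed above; `TopicEntry`, `validate`, `responseName` and the version threshold of 12 are assumptions for illustration, and `UnsupportedOperationException` stands in for Kafka's `UnsupportedVersionException`:

```java
import java.util.List;
import java.util.UUID;

public final class MetadataTopicValidationSketch {
    // Assumption: the first request version that can carry topic IDs instead of names.
    static final short FIRST_VERSION_WITH_TOPIC_IDS = 12;

    /** Hypothetical requested topic; the name is null when only an ID is sent. */
    record TopicEntry(String name, UUID topicId) {}

    /** Null-guarded validation: a null list is skipped instead of causing an NPE. */
    static void validate(List<TopicEntry> topics, short version) {
        if (topics == null) {
            return;
        }
        for (TopicEntry topic : topics) {
            if (topic.name() == null && version < FIRST_VERSION_WITH_TOPIC_IDS) {
                throw new UnsupportedOperationException(
                        "Version " + version + " does not support null topic names");
            }
        }
    }

    /** Normalizes a null name to "" when building a response entry. */
    static String responseName(TopicEntry topic) {
        return topic.name() == null ? "" : topic.name();
    }

    public static void main(String[] args) {
        TopicEntry byId = new TopicEntry(null, UUID.randomUUID());
        validate(null, (short) 11);          // no NPE on a null list
        validate(List.of(byId), (short) 12); // allowed at the assumed version
        System.out.println("[" + responseName(byId) + "]");
        try {
            validate(List.of(byId), (short) 11);
        } catch (UnsupportedOperationException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```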

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] lct45 commented on pull request #10813: KAFKA-9559: Change default serde to be `null`

lct45 commented on pull request #10813: URL: https://github.com/apache/kafka/pull/10813#issuecomment-858849165 Okay, I did some digging: `defaultKeySerde` and `defaultValueSerde` are only called from the `init` of `AbstractProcessorContext`. I checked all the places where we call `AbstractProcessorContext#keySerde()` and `AbstractProcessorContext#valueSerde()` to make sure we're catching all the potential NPEs, and I am fairly confident that we're ok. I did some streamlining, so now we throw the `ConfigException` right after we access `AbstractProcessorContext#keySerde()` / `valueSerde()`. That way we aren't passing nulls around, and there's some traceability between throwing errors and calling a certain method. The one place this wasn't possible was with creating state stores. Right now, we pass around `context.keySerde()` and `context.valueSerde()` rather than just the `context` in `MeteredKeyValueStore`, `MeteredSessionStore`, and `MeteredWindowStore`. The tricky part with moving to passing around the context is that we need to accept two types of context, a `ProcessorContext` and a `StateStoreContext`. I'm open to either leaving these calls less streamlined than everything else, or duplicating code in `WrappingNullableUtils` to accept both types of context. Thoughts @mjsax @ableegoldman? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org
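A minimal sketch of the fail-fast pattern described above: check the serde at the point of access and throw immediately instead of passing a null onward. `Context`, `requireSerde` and `MissingSerdeException` (a stand-in for Kafka's `ConfigException`, so the sketch needs no Kafka dependency) are hypothetical names:

```java
public final class DefaultSerdeCheckSketch {

    /** Stand-in for Kafka's ConfigException so the sketch compiles without Kafka. */
    static final class MissingSerdeException extends RuntimeException {
        MissingSerdeException(String message) {
            super(message);
        }
    }

    /** Hypothetical context whose default serdes may be null once the default becomes null. */
    record Context(Object keySerde, Object valueSerde) {}

    /** Fails fast at the point of access instead of passing a null serde onward. */
    static Object requireSerde(Object serde, String which) {
        if (serde == null) {
            throw new MissingSerdeException("No " + which + " serde was provided and no default "
                    + which + " serde is configured");
        }
        return serde;
    }

    public static void main(String[] args) {
        Context context = new Context("byteArraySerde", null);
        System.out.println(requireSerde(context.keySerde(), "key"));
        try {
            requireSerde(context.valueSerde(), "value");
        } catch (MissingSerdeException e) {
            System.out.println(e.getMessage());
        }
    }
}
```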

[GitHub] [kafka] jsancio commented on pull request #10786: KAFKA-12787: Integrate controller snapshoting with raft client

jsancio commented on pull request #10786: URL: https://github.com/apache/kafka/pull/10786#issuecomment-858848250 Created a Jira for renaming the types SnapshotWriter and SnapshotReader, and to instead add interface with the same name. https://issues.apache.org/jira/browse/KAFKA-12932 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (KAFKA-12932) Interfaces for SnapshotReader and SnapshotWriter

Jose Armando Garcia Sancio created KAFKA-12932: -- Summary: Interfaces for SnapshotReader and SnapshotWriter Key: KAFKA-12932 URL: https://issues.apache.org/jira/browse/KAFKA-12932 Project: Kafka Issue Type: Sub-task Reporter: Jose Armando Garcia Sancio Change the snapshot API so that SnapshotWriter and SnapshotReader are interfaces. Change the existing types SnapshotWriter and SnapshotReader to use a different name and to implement the interfaces introduced by this issue. -- This message was sent by Atlassian Jira (v8.3.4#803005)
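A minimal sketch of the split the issue proposes: callers depend on an interface, and the existing concrete type is renamed and implements it. The interface methods and the `InMemorySnapshotWriter` name are hypothetical, not Kafka's actual snapshot API, and only the writer side is shown:

```java
import java.util.ArrayList;
import java.util.List;

public final class SnapshotInterfacesSketch {

    /** Hypothetical writer interface; method names are illustrative only. */
    interface SnapshotWriter<T> extends AutoCloseable {
        void append(List<T> records);

        void freeze();

        boolean isFrozen();

        @Override
        void close(); // narrowed: no checked exception
    }

    /** The concrete type gets a different name and implements the interface. */
    static final class InMemorySnapshotWriter<T> implements SnapshotWriter<T> {
        private final List<T> records = new ArrayList<>();
        private boolean frozen = false;

        @Override
        public void append(List<T> batch) {
            if (frozen) {
                throw new IllegalStateException("Cannot append to a frozen snapshot");
            }
            records.addAll(batch);
        }

        @Override
        public void freeze() {
            frozen = true;
        }

        @Override
        public boolean isFrozen() {
            return frozen;
        }

        @Override
        public void close() {
            // Nothing to release for an in-memory buffer.
        }

        List<T> records() {
            return List.copyOf(records);
        }
    }

    public static void main(String[] args) {
        // Callers only see the interface, so an in-memory implementation can be swapped in.
        InMemorySnapshotWriter<String> impl = new InMemorySnapshotWriter<>();
        try (SnapshotWriter<String> writer = impl) {
            writer.append(List.of("a", "b"));
            writer.freeze();
        }
        System.out.println(impl.records() + " frozen=" + impl.isFrozen());
    }
}
```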

[jira] [Updated] (KAFKA-12931) KIP-746: Revise KRaft Metadata Records

[ https://issues.apache.org/jira/browse/KAFKA-12931?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Konstantine Karantasis updated KAFKA-12931: --- Fix Version/s: 3.0.0 > KIP-746: Revise KRaft Metadata Records > -- > > Key: KAFKA-12931 > URL: https://issues.apache.org/jira/browse/KAFKA-12931 > Project: Kafka > Issue Type: Improvement > Components: controller, kraft >Reporter: Colin McCabe >Assignee: Colin McCabe >Priority: Major > Fix For: 3.0.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] dchristle edited a comment on pull request #10847: KAFKA-12921: Upgrade ZSTD JNI from 1.4.9-1 to 1.5.0-1

dchristle edited a comment on pull request #10847: URL: https://github.com/apache/kafka/pull/10847#issuecomment-858843145 > The current version is 1.4.9, so I'm a bit confused why we're mentioning anything besides 1.5.0. Woops - I'm getting my wires crossed on a different zstd 1.5.0 related PR I have with a larger upgrade. You are right -- this is just from `1.4.9-1` to `1.5.0-1`. Sorry for my confusion. I updated the PR description to reflect this. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] dchristle commented on pull request #10847: KAFKA-12921: Upgrade ZSTD JNI from 1.4.9-1 to 1.5.0-1

dchristle commented on pull request #10847: URL: https://github.com/apache/kafka/pull/10847#issuecomment-858843145 > The current version is 1.4.9, so I'm a bit confused why we're mentioning anything besides 1.5.0. Woops - I'm getting my wires crossed on a different zstd 1.5.0 related PR I have with a larger upgrade. You are right -- this is just from `1.4.9-1` to `1.5.0-1`. Sorry for my confusion. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (KAFKA-12931) KIP-746: Revise KRaft Metadata Records

Colin McCabe created KAFKA-12931: Summary: KIP-746: Revise KRaft Metadata Records Key: KAFKA-12931 URL: https://issues.apache.org/jira/browse/KAFKA-12931 Project: Kafka Issue Type: Improvement Components: kraft, controller Reporter: Colin McCabe Assignee: Colin McCabe -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] dchristle edited a comment on pull request #10847: KAFKA-12921: Upgrade ZSTD JNI from 1.4.9-1 to 1.5.0-1

dchristle edited a comment on pull request #10847: URL: https://github.com/apache/kafka/pull/10847#issuecomment-858804912 @ijuma > This is a good change, but can we please qualify the perf improvements claim? My understanding is that it only applies to certain compression levels and Kafka currently always picks a specific one. @dongjinleekr is working on making that configurable via a separate KIP. It is true that the most recent performance improvements I quoted (for `1.5.0`) appear only in mid-range compression levels. > Also, why are we listing versions in the PR description that are not relevant to this upgrade? I tried to follow a previous `zstd-jni` PR's convention here: https://github.com/apache/kafka/pull/10285. I think it gives context on the magnitude of the upgrade, but I can change the commit message/PR title to remove the existing version reference if you like. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ijuma edited a comment on pull request #10847: KAFKA-12921: Upgrade ZSTD JNI from 1.4.9-1 to 1.5.0-1

ijuma edited a comment on pull request #10847: URL: https://github.com/apache/kafka/pull/10847#issuecomment-858829828 The current version is 1.4.9, so I'm a bit confused why we're mentioning anything besides 1.5.0. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ijuma commented on pull request #10847: KAFKA-12921: Upgrade ZSTD JNI from 1.4.9-1 to 1.5.0-1

ijuma commented on pull request #10847: URL: https://github.com/apache/kafka/pull/10847#issuecomment-858829828 The current version is 1.4.9, so I'm a bit confused why we're mentioning anything besides 1.5.0. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] satishd commented on a change in pull request #10579: KAFKA-9555 Added default RLMM implementation based on internal topic storage.

satishd commented on a change in pull request #10579:

URL: https://github.com/apache/kafka/pull/10579#discussion_r649390677

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/ConsumerTask.java

##

@@ -0,0 +1,229 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.metadata.storage;

+

+import org.apache.kafka.clients.consumer.ConsumerRecord;

+import org.apache.kafka.clients.consumer.ConsumerRecords;

+import org.apache.kafka.clients.consumer.KafkaConsumer;

+import org.apache.kafka.common.KafkaException;

+import org.apache.kafka.common.TopicIdPartition;

+import org.apache.kafka.common.TopicPartition;

+import org.apache.kafka.server.log.remote.metadata.storage.serialization.RemoteLogMetadataSerde;

+import org.apache.kafka.server.log.remote.storage.RemoteLogMetadata;

+import org.apache.kafka.server.log.remote.storage.RemoteLogSegmentMetadata;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.Closeable;

+import java.time.Duration;

+import java.util.Collections;

+import java.util.HashSet;

+import java.util.Map;

+import java.util.Objects;

+import java.util.Optional;

+import java.util.Set;

+import java.util.concurrent.ConcurrentHashMap;

+import java.util.stream.Collectors;

+

+import static

org.apache.kafka.server.log.remote.metadata.storage.TopicBasedRemoteLogMetadataManagerConfig.REMOTE_LOG_METADATA_TOPIC_NAME;

+
+/**
+ * This class is responsible for consuming messages from the remote log metadata topic
+ * ({@link TopicBasedRemoteLogMetadataManagerConfig#REMOTE_LOG_METADATA_TOPIC_NAME}) partitions and maintaining the state
+ * of the remote log segment metadata. It provides an API to add or remove the topic partitions whose metadata should be
+ * consumed by this instance, using {@link #addAssignmentsForPartitions(Set)} and
+ * {@link #removeAssignmentsForPartitions(Set)} respectively.
+ *
+ * When a broker is started, the controller sends the topic partitions for which this broker is the leader or a follower,
+ * and the partitions to be deleted. This class receives those notifications through
+ * {@link #addAssignmentsForPartitions(Set)} and {@link #removeAssignmentsForPartitions(Set)}, and assigns the consumer
+ * to the respective remote log metadata partitions by using
+ * {@link RemoteLogMetadataTopicPartitioner#metadataPartition(TopicIdPartition)}.
+ * Any later leadership changes are delivered through the same API. Partitions that are deleted from this broker,
+ * received through {@link #removeAssignmentsForPartitions(Set)}, are removed.
+ *
+ * After receiving these events, it invokes
+ * {@link RemotePartitionMetadataEventHandler#handleRemoteLogSegmentMetadata(RemoteLogSegmentMetadata)},
+ * which maintains an in-memory representation of the state of {@link RemoteLogSegmentMetadata}.
+ */
+class ConsumerTask implements Runnable, Closeable {
+    private static final Logger log = LoggerFactory.getLogger(ConsumerTask.class);
+
+    private static final long POLL_INTERVAL_MS = 30L;
+
+    private final RemoteLogMetadataSerde serde = new RemoteLogMetadataSerde();
+    private final KafkaConsumer<byte[], byte[]> consumer;
+    private final RemotePartitionMetadataEventHandler remotePartitionMetadataEventHandler;
+    private final RemoteLogMetadataTopicPartitioner topicPartitioner;
+
+    private volatile boolean close = false;

Review comment:

Done
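The class Javadoc above describes how each user topic partition is routed to a metadata-topic partition. It also says the consumer's metadata partitions are re-derived whenever assignments are added or removed. Below is a minimal sketch of that bookkeeping, under these assumptions:

- User partitions are identified by plain strings such as `orders-0`.
- `MetadataAssignmentSketch` and its methods are hypothetical.
- `Math.floorMod(hashCode, count)` is a stand-in. It is not the hashing that `RemoteLogMetadataTopicPartitioner#metadataPartition` actually uses.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical sketch (not the PR's code): tracks which user-topic partitions are
// assigned and derives the metadata-topic partitions a consumer would need to read.
// Math.floorMod over String#hashCode is an assumed stand-in for the real partitioner.
final class MetadataAssignmentSketch {
    private final int metadataPartitionCount;
    private final Set<String> assignedUserPartitions = new HashSet<>();

    MetadataAssignmentSketch(int metadataPartitionCount) {
        if (metadataPartitionCount <= 0) {
            throw new IllegalArgumentException("metadataPartitionCount must be positive");
        }
        this.metadataPartitionCount = metadataPartitionCount;
    }

    // Deterministic mapping; floorMod keeps the result in [0, count) even for negative hash codes.
    int metadataPartition(String userPartition) {
        return Math.floorMod(userPartition.hashCode(), metadataPartitionCount);
    }

    synchronized void addAssignments(Set<String> userPartitions) {
        assignedUserPartitions.addAll(userPartitions);
    }

    synchronized void removeAssignments(Set<String> userPartitions) {
        assignedUserPartitions.removeAll(userPartitions);
    }

    // Recomputed from the current assignments; several user partitions may share one metadata partition.
    synchronized Set<Integer> metadataPartitionsToConsume() {
        return Collections.unmodifiableSet(assignedUserPartitions.stream()
                .map(this::metadataPartition)
                .collect(Collectors.toSet()));
    }
}
```

A real task would presumably hand the returned set to the consumer after each change, for example through `KafkaConsumer#assign`, and then replay the newly assigned metadata partitions.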

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] satishd commented on a change in pull request #10579: KAFKA-9555 Added default RLMM implementation based on internal topic storage.

satishd commented on a change in pull request #10579:

URL: https://github.com/apache/kafka/pull/10579#discussion_r649390516

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/ProducerManager.java

##

@@ -0,0 +1,125 @@

+package org.apache.kafka.server.log.remote.metadata.storage;
+
+import org.apache.kafka.clients.producer.Callback;
+import org.apache.kafka.clients.producer.KafkaProducer;
+import org.apache.kafka.clients.producer.ProducerRecord;
+import org.apache.kafka.clients.producer.RecordMetadata;
+import org.apache.kafka.common.KafkaException;
+import org.apache.kafka.common.TopicIdPartition;
+import org.apache.kafka.server.log.remote.metadata.storage.serialization.RemoteLogMetadataSerde;
+import org.apache.kafka.server.log.remote.storage.RemoteLogMetadata;
+import org.slf4j.Logger;
+import org.slf4j.LoggerFactory;
+
+import java.io.Closeable;
+import java.time.Duration;
+
+/**
+ * This class is responsible for publishing messages into the remote log metadata topic partitions.
+ */
+public class ProducerManager implements Closeable {
+    private static final Logger log = LoggerFactory.getLogger(ProducerManager.class);
+
+    private final RemoteLogMetadataSerde serde = new RemoteLogMetadataSerde();
+    private final KafkaProducer<byte[], byte[]> producer;
+    private final RemoteLogMetadataTopicPartitioner topicPartitioner;
+    private final TopicBasedRemoteLogMetadataManagerConfig rlmmConfig;
+
+    private volatile boolean close = false;

Review comment:

Done.
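Both `ConsumerTask` and `ProducerManager` declare their close flag as `volatile`. This guarantees that a write made by the thread calling `close()` becomes visible to the thread that is looping or publishing.

Below is a minimal sketch of the stop-flag pattern, under these assumptions:

- A `ConcurrentLinkedQueue` stands in for `KafkaConsumer#poll`.
- `StoppableLoop` is hypothetical and is not the PR's code.

```java
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.LockSupport;

// Hypothetical sketch (not the PR's code): a worker loop that exits once another
// thread sets a volatile flag. The queue stands in for KafkaConsumer#poll.
final class StoppableLoop implements Runnable {
    private final ConcurrentLinkedQueue<String> source;
    private final AtomicInteger handled = new AtomicInteger();
    // volatile: the write in close() is guaranteed to become visible to the loop thread.
    private volatile boolean closing = false;

    StoppableLoop(ConcurrentLinkedQueue<String> source) {
        this.source = source;
    }

    @Override
    public void run() {
        while (!closing) {
            String record = source.poll(); // non-blocking; null when the queue is empty
            if (record != null) {
                handled.incrementAndGet(); // a real task would deserialize and dispatch here
            } else {
                LockSupport.parkNanos(TimeUnit.MILLISECONDS.toNanos(1)); // brief back-off
            }
        }
    }

    void close() {
        closing = true;
    }

    int handledCount() {
        return handled.get();
    }
}
```

Without `volatile`, the Java memory model would allow the JIT to hoist the read of `closing` out of the loop. A call to `close()` might then never stop the worker.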

[GitHub] [kafka] satishd commented on a change in pull request #10579: KAFKA-9555 Added default RLMM implementation based on internal topic storage.

satishd commented on a change in pull request #10579:

URL: https://github.com/apache/kafka/pull/10579#discussion_r649390143

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/ProducerManager.java

##

@@ -0,0 +1,125 @@

+package org.apache.kafka.server.log.remote.metadata.storage;
+
+import org.apache.kafka.clients.producer.Callback;
+import org.apache.kafka.clients.producer.KafkaProducer;
+import org.apache.kafka.clients.producer.ProducerRecord;
+import org.apache.kafka.clients.producer.RecordMetadata;
+import org.apache.kafka.common.KafkaException;
+import org.apache.kafka.common.TopicIdPartition;
+import org.apache.kafka.server.log.remote.metadata.storage.serialization.RemoteLogMetadataSerde;
+import org.apache.kafka.server.log.remote.storage.RemoteLogMetadata;
+import org.slf4j.Logger;
+import org.slf4j.LoggerFactory;
+
+import java.io.Closeable;
+import java.time.Duration;
+
+/**
+ * This class is responsible for publishing messages into the remote log metadata topic partitions.
+ */
+public class ProducerManager implements Closeable {
+    private static final Logger log = LoggerFactory.getLogger(ProducerManager.class);
+
+    private final RemoteLogMetadataSerde serde = new RemoteLogMetadataSerde();
+    private final KafkaProducer<byte[], byte[]> producer;
+    private final RemoteLogMetadataTopicPartitioner topicPartitioner;
+    private final TopicBasedRemoteLogMetadataManagerConfig rlmmConfig;
+
+    private volatile boolean close = false;
+
+    public ProducerManager(TopicBasedRemoteLogMetadataManagerConfig rlmmConfig,
+                           RemoteLogMetadataTopicPartitioner rlmmTopicPartitioner) {
+        this.rlmmConfig = rlmmConfig;
+        this.producer = new KafkaProducer<>(rlmmConfig.producerProperties());
+        topicPartitioner = rlmmTopicPartitioner;
+    }
+
+    public RecordMetadata publishMessage(TopicIdPartition topicIdPartition,
+                                         RemoteLogMetadata remoteLogMetadata) throws KafkaException {
+        ensureNotClosed();
+
+        int metadataPartitionNo = topicPartitioner.metadataPartition(topicIdPartition);
+        log.debug("Publishing metadata message of partition:[{}] into metadata topic partition:[{}] with payload: [{}]",
+                topicIdPartition, metadataPartitionNo, remoteLogMetadata);
+
+        ProducerCallback callback = new ProducerCallback();
+        try {
+            if (metadataPartitionNo >= rlmmConfig.metadataTopicPartitionsCount()) {
+                // This should never occur as long as metadata partitions always remain the same.
+                throw new KafkaException("Chosen partition no " + metadataPartitionNo +
+                                         " is more than the partition count: " + rlmmConfig.metadataTopicPartitionsCount());
+            }
+            producer.send(new ProducerRecord<>(rlmmConfig.remoteLogMetadataTopicName(), metadataPartitionNo, null,
+                    serde.serialize(remoteLogMetadata)), callback).get();
+        } catch (KafkaException e) {
+            throw e;
+        } catch (Exception e) {
+            throw new KafkaException("Exception occurred while publishing message for topicIdPartition: " + topicIdPartition, e);
+        }
+
+        if (callback.exception() != null) {
+            Exception ex = callback.exception();
+            if (ex instanceof KafkaException) {
+                throw (KafkaException) ex;
+            } else {
+                throw new KafkaException(ex);
+            }
+        } else {
+            return callback.recordMetadata();
+        }
+    }
+
+    private void ensureNotClosed() {
+        if (close) {
+            throw new IllegalStateException("This instance is already set in close state.");

Review comment:

Done
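The `publishMessage` method above does four things:

1. Calls `ensureNotClosed()`.
2. Checks the chosen metadata partition against the configured partition count.
3. Blocks on the send future.
4. Surfaces failures as `KafkaException`.

Below is a minimal sketch of that control flow, under these assumptions:

- `Sink` stands in for `KafkaProducer#send`.
- `PublishException` stands in for `KafkaException`.
- Both types, and `PublishSketch` itself, are hypothetical.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

// Hypothetical sketch of the publish flow; Sink and PublishException are stand-ins,
// not Kafka APIs.
final class PublishSketch {
    interface Sink {
        Future<Long> send(int partition, byte[] payload); // completes with an offset on success
    }

    static final class PublishException extends RuntimeException {
        PublishException(String message) { super(message); }
        PublishException(String message, Throwable cause) { super(message, cause); }
    }

    private final Sink sink;
    private final int metadataPartitionCount;
    private volatile boolean closing = false;

    PublishSketch(Sink sink, int metadataPartitionCount) {
        this.sink = sink;
        this.metadataPartitionCount = metadataPartitionCount;
    }

    long publish(int metadataPartition, byte[] payload) {
        if (closing) {
            throw new IllegalStateException("This instance is already closed.");
        }
        // Reject partitions outside [0, count) before sending anything.
        if (metadataPartition < 0 || metadataPartition >= metadataPartitionCount) {
            throw new PublishException("Chosen partition no " + metadataPartition
                    + " is not within the partition count: " + metadataPartitionCount);
        }
        try {
            return sink.send(metadataPartition, payload).get(); // block until acknowledged
        } catch (ExecutionException e) {
            // Surface the sink's original failure as the cause.
            Throwable cause = e.getCause();
            if (cause instanceof PublishException) {
                throw (PublishException) cause;
            }
            throw new PublishException("Exception occurred while publishing to partition " + metadataPartition, cause);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            throw new PublishException("Interrupted while publishing", e);
        }
    }

    void close() {
        closing = true;
    }
}
```

The sketch differs from the PR in two deliberate ways:

- It unwraps the `ExecutionException`, so callers see the sink's original failure as the cause. `publishMessage` instead wraps the `ExecutionException` itself in a `KafkaException`.
- It also rejects negative partition numbers.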

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/ProducerManager.java

##

@@ -0,0 +1,125 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding

[GitHub] [kafka] satishd commented on a change in pull request #10579: KAFKA-9555 Added default RLMM implementation based on internal topic storage.

satishd commented on a change in pull request #10579:

URL: https://github.com/apache/kafka/pull/10579#discussion_r649388017

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/ConsumerTask.java

##

@@ -0,0 +1,229 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.