[jira] [Updated] (HDDS-3683) Ozone fuse support

[ https://issues.apache.org/jira/browse/HDDS-3683?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] maobaolong updated HDDS-3683: - Description: https://github.com/opendataio/hcfsfuse design doc will be updated here. https://docs.google.com/document/d/1IY9xhRTeo42Sfzw6U-NngHOTLO_B7_0BiUsonvPKvh8/edit?usp=sharing was:https://github.com/opendataio/hcfsfuse > Ozone fuse support > --- > > Key: HDDS-3683 > URL: https://issues.apache.org/jira/browse/HDDS-3683 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Affects Versions: 0.6.0 >Reporter: maobaolong >Assignee: maobaolong >Priority: Major > Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png > > > https://github.com/opendataio/hcfsfuse > design doc will be updated here. > https://docs.google.com/document/d/1IY9xhRTeo42Sfzw6U-NngHOTLO_B7_0BiUsonvPKvh8/edit?usp=sharing -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] codecov-commenter commented on pull request #1054: Hdds 3772. Add LOG to S3ErrorTable for easier problem locating.

codecov-commenter commented on pull request #1054: URL: https://github.com/apache/hadoop-ozone/pull/1054#issuecomment-642411148 # [Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/1054?src=pr=h1) Report > :exclamation: No coverage uploaded for pull request base (`master@67244e5`). [Click here to learn what that means](https://docs.codecov.io/docs/error-reference#section-missing-base-commit). > The diff coverage is `n/a`. [](https://codecov.io/gh/apache/hadoop-ozone/pull/1054?src=pr=tree) ```diff @@Coverage Diff@@ ## master#1054 +/- ## = Coverage ? 69.43% Complexity? 9113 = Files ? 961 Lines ?48150 Branches ? 4679 = Hits ?33435 Misses?12499 Partials ? 2216 ``` -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/1054?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/1054?src=pr=footer). Last update [67244e5...06c521d](https://codecov.io/gh/apache/hadoop-ozone/pull/1054?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3481) SCM ask too many datanodes to replicate the same container

[ https://issues.apache.org/jira/browse/HDDS-3481?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Nanda kumar updated HDDS-3481: -- Status: Patch Available (was: Open) > SCM ask too many datanodes to replicate the same container > -- > > Key: HDDS-3481 > URL: https://issues.apache.org/jira/browse/HDDS-3481 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: SCM >Reporter: runzhiwang >Assignee: runzhiwang >Priority: Blocker > Labels: Triaged, pull-request-available > Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png, > screenshot-4.png > > > *What's the problem ?* > As the image shows, scm ask 31 datanodes to replicate container 2037 every > 10 minutes from 2020-04-17 23:38:51. And at 2020-04-18 08:58:52 scm find the > replicate num of container 2037 is 12, then it ask 11 datanodes to delete > container 2037. > !screenshot-1.png! > !screenshot-2.png! > *What's the reason ?* > scm check whether (container replicates num + > inflightReplication.get(containerId).size() - > inflightDeletion.get(containerId).size()) is less than 3. If less than 3, it > will ask some datanode to replicate the container, and add the action into > inflightReplication.get(containerId). The replicate action time out is 10 > minutes, if action timeout, scm will delete the action from > inflightReplication.get(containerId) as the image shows. Then (container > replicates num + inflightReplication.get(containerId).size() - > inflightDeletion.get(containerId).size()) is less than 3 again, and scm ask > another datanode to replicate the container. > Because replicate container cost a long time, sometimes it cannot finish in > 10 minutes, thus 31 datanodes has to replicate the container every 10 > minutes. 19 of 31 datanodes replicate container from the same source > datanode, it will also cause big pressure on the source datanode and > replicate container become slower. Actually it cost 4 hours to finish the > first replicate. > !screenshot-4.png! -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] leosunli commented on a change in pull request #1033: HDDS-3667. If we gracefully stop datanode it would be better to notify scm and r…

leosunli commented on a change in pull request #1033:

URL: https://github.com/apache/hadoop-ozone/pull/1033#discussion_r438540923

##

File path:

hadoop-hdds/container-service/src/main/java/org/apache/hadoop/ozone/container/common/states/endpoint/UnRegisterEndpointTask.java

##

@@ -0,0 +1,262 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with this

+ * work for additional information regarding copyright ownership. The ASF

+ * licenses this file to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance with the License.

+ * You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

+ * WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the

+ * License for the specific language governing permissions and limitations

under

+ * the License.

+ */

+package org.apache.hadoop.ozone.container.common.states.endpoint;

+

+import java.io.IOException;

+import java.util.UUID;

+import java.util.concurrent.Callable;

+import java.util.concurrent.Future;

+

+import org.apache.commons.lang3.StringUtils;

+import org.apache.hadoop.hdds.conf.ConfigurationSource;

+import org.apache.hadoop.hdds.protocol.DatanodeDetails;

+import

org.apache.hadoop.hdds.protocol.proto.StorageContainerDatanodeProtocolProtos.ContainerReportsProto;

+import

org.apache.hadoop.hdds.protocol.proto.StorageContainerDatanodeProtocolProtos.NodeReportProto;

+import

org.apache.hadoop.hdds.protocol.proto.StorageContainerDatanodeProtocolProtos.PipelineReportsProto;

+import

org.apache.hadoop.hdds.protocol.proto.StorageContainerDatanodeProtocolProtos.SCMUNRegisteredResponseProto;

+import

org.apache.hadoop.ozone.container.common.statemachine.EndpointStateMachine;

+import

org.apache.hadoop.ozone.container.common.statemachine.EndpointStateMachine.EndPointStates;

+import org.apache.hadoop.ozone.container.common.statemachine.StateContext;

+import org.apache.hadoop.ozone.container.ozoneimpl.OzoneContainer;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import com.google.common.annotations.VisibleForTesting;

+import com.google.common.base.Preconditions;

+

+/**

+ * UnRegister a datanode with SCM.

+ */

+public final class UnRegisterEndpointTask implements

+Callable {

+ static final Logger LOG =

+ LoggerFactory.getLogger(UnRegisterEndpointTask.class);

+

+ private final EndpointStateMachine rpcEndPoint;

+ private final ConfigurationSource conf;

+ private Future result;

+ private DatanodeDetails datanodeDetails;

+ private final OzoneContainer datanodeContainerManager;

+ private StateContext stateContext;

+

+ /**

+ * Creates a register endpoint task.

+ *

+ * @param rpcEndPoint - endpoint

+ * @param conf - conf

+ * @param ozoneContainer - container

+ */

+ @VisibleForTesting

+ public UnRegisterEndpointTask(EndpointStateMachine rpcEndPoint,

+ ConfigurationSource conf, OzoneContainer ozoneContainer,

+ StateContext context) {

+this.rpcEndPoint = rpcEndPoint;

+this.conf = conf;

+this.datanodeContainerManager = ozoneContainer;

+this.stateContext = context;

+

+ }

+

+ /**

+ * Get the DatanodeDetails.

+ *

+ * @return DatanodeDetailsProto

+ */

+ public DatanodeDetails getDatanodeDetails() {

+return datanodeDetails;

+ }

+

+ /**

+ * Set the contiainerNodeID Proto.

+ *

+ * @param datanodeDetails - Container Node ID.

+ */

+ public void setDatanodeDetails(

+ DatanodeDetails datanodeDetails) {

+this.datanodeDetails = datanodeDetails;

+ }

+

+ /**

+ * Computes a result, or throws an exception if unable to do so.

+ *

+ * @return computed result

+ * @throws Exception if unable to compute a result

+ */

+ @Override

+ public EndpointStateMachine.EndPointStates call() throws Exception {

+

+if (getDatanodeDetails() == null) {

+ LOG.error("DatanodeDetails cannot be null in RegisterEndpoint task, "

+ + "shutting down the endpoint.");

+ return

rpcEndPoint.setState(EndpointStateMachine.EndPointStates.SHUTDOWN);

+}

+

+rpcEndPoint.lock();

+try {

+

+ if (rpcEndPoint.getState()

+ .equals(EndPointStates.SHUTDOWN)) {

+ContainerReportsProto containerReport =

+datanodeContainerManager.getController().getContainerReport();

+NodeReportProto nodeReport = datanodeContainerManager.getNodeReport();

+PipelineReportsProto pipelineReportsProto =

+datanodeContainerManager.getPipelineReport();

+// TODO : Add responses to the command Queue.

+SCMUNRegisteredResponseProto response = rpcEndPoint.getEndPoint()

+.unregister(datanodeDetails.getProtoBufMessage(), nodeReport,

+containerReport,

[jira] [Updated] (HDDS-3481) SCM ask too many datanodes to replicate the same container

[ https://issues.apache.org/jira/browse/HDDS-3481?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Nanda kumar updated HDDS-3481: -- Labels: Triaged pull-request-available (was: TriagePending pull-request-available) > SCM ask too many datanodes to replicate the same container > -- > > Key: HDDS-3481 > URL: https://issues.apache.org/jira/browse/HDDS-3481 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: SCM >Reporter: runzhiwang >Assignee: runzhiwang >Priority: Blocker > Labels: Triaged, pull-request-available > Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png, > screenshot-4.png > > > *What's the problem ?* > As the image shows, scm ask 31 datanodes to replicate container 2037 every > 10 minutes from 2020-04-17 23:38:51. And at 2020-04-18 08:58:52 scm find the > replicate num of container 2037 is 12, then it ask 11 datanodes to delete > container 2037. > !screenshot-1.png! > !screenshot-2.png! > *What's the reason ?* > scm check whether (container replicates num + > inflightReplication.get(containerId).size() - > inflightDeletion.get(containerId).size()) is less than 3. If less than 3, it > will ask some datanode to replicate the container, and add the action into > inflightReplication.get(containerId). The replicate action time out is 10 > minutes, if action timeout, scm will delete the action from > inflightReplication.get(containerId) as the image shows. Then (container > replicates num + inflightReplication.get(containerId).size() - > inflightDeletion.get(containerId).size()) is less than 3 again, and scm ask > another datanode to replicate the container. > Because replicate container cost a long time, sometimes it cannot finish in > 10 minutes, thus 31 datanodes has to replicate the container every 10 > minutes. 19 of 31 datanodes replicate container from the same source > datanode, it will also cause big pressure on the source datanode and > replicate container become slower. Actually it cost 4 hours to finish the > first replicate. > !screenshot-4.png! -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] ChenSammi opened a new pull request #1054: Hdds 3772. Add LOG to S3ErrorTable for easier problem locating.

ChenSammi opened a new pull request #1054: URL: https://github.com/apache/hadoop-ozone/pull/1054 https://issues.apache.org/jira/browse/HDDS-3772 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3772) Add LOG to S3ErrorTable for easier problem locating

[ https://issues.apache.org/jira/browse/HDDS-3772?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Sammi Chen updated HDDS-3772: - Description: Currently it's hard to directly tell the failure reason when something unexpected happened. Here is an example when downloading a file through aws java sdk. com.amazonaws.services.s3.model.AmazonS3Exception: Not Found (Service: Amazon S3; Status Code: 404; Error Code: 404 Not Found; Request ID: 0a5f2404-71bb-4edf-b488-2a5de9f6b753), S3 Extended Request ID: rv8deQRJyX3zCEk at com.amazonaws.http.AmazonHttpClient.handleErrorResponse(AmazonHttpClient.java:1389) at com.amazonaws.http.AmazonHttpClient.executeOneRequest(AmazonHttpClient.java:902) at com.amazonaws.http.AmazonHttpClient.executeHelper(AmazonHttpClient.java:607) at com.amazonaws.http.AmazonHttpClient.doExecute(AmazonHttpClient.java:376) at com.amazonaws.http.AmazonHttpClient.executeWithTimer(AmazonHttpClient.java:338) at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:287) at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:3826) at com.amazonaws.services.s3.AmazonS3Client.getObjectMetadata(AmazonS3Client.java:1015) at com.amazonaws.services.s3.transfer.TransferManager.doDownload(TransferManager.java:939) at com.amazonaws.services.s3.transfer.TransferManager.download(TransferManager.java:795) at com.amazonaws.services.s3.transfer.TransferManager.download(TransferManager.java:713) at com.amazonaws.services.s3.transfer.TransferManager.download(TransferManager.java:667) at AWSS3UtilTest$AWSS3Util.download(AWSS3UtilTest.java:213) at AWSS3UtilTest.test08_downloadAsyn(AWSS3UtilTest.java:107) at AWSS3UtilTest.main(AWSS3UtilTest.java:47) > Add LOG to S3ErrorTable for easier problem locating > --- > > Key: HDDS-3772 > URL: https://issues.apache.org/jira/browse/HDDS-3772 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Reporter: Sammi Chen >Assignee: Sammi Chen >Priority: Major > > Currently it's hard to directly tell the failure reason when something > unexpected happened. Here is an example when downloading a file through aws > java sdk. > com.amazonaws.services.s3.model.AmazonS3Exception: Not Found (Service: Amazon > S3; Status Code: 404; Error Code: 404 Not Found; Request ID: > 0a5f2404-71bb-4edf-b488-2a5de9f6b753), S3 Extended Request ID: rv8deQRJyX3zCEk > at > com.amazonaws.http.AmazonHttpClient.handleErrorResponse(AmazonHttpClient.java:1389) > at > com.amazonaws.http.AmazonHttpClient.executeOneRequest(AmazonHttpClient.java:902) > at > com.amazonaws.http.AmazonHttpClient.executeHelper(AmazonHttpClient.java:607) > at > com.amazonaws.http.AmazonHttpClient.doExecute(AmazonHttpClient.java:376) > at > com.amazonaws.http.AmazonHttpClient.executeWithTimer(AmazonHttpClient.java:338) > at > com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:287) > at > com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:3826) > at > com.amazonaws.services.s3.AmazonS3Client.getObjectMetadata(AmazonS3Client.java:1015) > at > com.amazonaws.services.s3.transfer.TransferManager.doDownload(TransferManager.java:939) > at > com.amazonaws.services.s3.transfer.TransferManager.download(TransferManager.java:795) > at > com.amazonaws.services.s3.transfer.TransferManager.download(TransferManager.java:713) > at > com.amazonaws.services.s3.transfer.TransferManager.download(TransferManager.java:667) > at AWSS3UtilTest$AWSS3Util.download(AWSS3UtilTest.java:213) > at AWSS3UtilTest.test08_downloadAsyn(AWSS3UtilTest.java:107) > at AWSS3UtilTest.main(AWSS3UtilTest.java:47) -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-3776) Upgrading RocksDB version to avoid java heap issue

Li Cheng created HDDS-3776:

--

Summary: Upgrading RocksDB version to avoid java heap issue

Key: HDDS-3776

URL: https://issues.apache.org/jira/browse/HDDS-3776

Project: Hadoop Distributed Data Store

Issue Type: Bug

Components: upgrade

Affects Versions: 0.5.0

Reporter: Li Cheng

Currently we have rocksdb 6.6.4 as major version and there are some jvm issues

in tests (happened in [https://github.com/apache/hadoop-ozone/pull/1019])

related to rocksdb core dump. We may upgrade to 6.8.1 to avoid this issue.

{{JRE version: Java(TM) SE Runtime Environment (8.0_211-b12) (build

1.8.0_211-b12)

# Java VM: Java HotSpot(TM) 64-Bit Server VM (25.211-b12 mixed mode bsd-amd64

compressed oops)

# Problematic frame:

# C [librocksdbjni2954960755376440018.jnilib+0x602b8]

rocksdb::GetColumnFamilyID(rocksdb::ColumnFamilyHandle*)+0x8

See full dump at

[https://the-asf.slack.com/files/U0159PV5Z6U/F0152UAJF0S/hs_err_pid90655.log?origin_team=T4S1WH2J3_channel=D014L2URB6E](url)}}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] timmylicheng commented on pull request #1019: HDDS-3679. Add unit tests for PipelineManagerV2.

timmylicheng commented on pull request #1019: URL: https://github.com/apache/hadoop-ozone/pull/1019#issuecomment-642370476 @elek Tests seem passed here. I created https://issues.apache.org/jira/browse/HDDS-3776 to track rocksdb upgrade. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Commented] (HDDS-3499) Address compatibility issue by SCM DB instances change

[ https://issues.apache.org/jira/browse/HDDS-3499?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17132853#comment-17132853 ] Li Cheng commented on HDDS-3499: [~arp] Our internal production deployment is still on schedule. But we have done internal tests to verify the step works for me. Resolving this now... > Address compatibility issue by SCM DB instances change > -- > > Key: HDDS-3499 > URL: https://issues.apache.org/jira/browse/HDDS-3499 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: SCM >Reporter: Li Cheng >Assignee: Marton Elek >Priority: Blocker > Labels: Triaged > > After https://issues.apache.org/jira/browse/HDDS-3172, SCM now has one single > rocksdb instance instead of multiple db instances. > For running Ozone cluster, we need to address compatibility issues. One > possible way is to have a side-way tool to migrate old metadata from multiple > dbs to current single db. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Resolved] (HDDS-3499) Address compatibility issue by SCM DB instances change

[ https://issues.apache.org/jira/browse/HDDS-3499?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Li Cheng resolved HDDS-3499. Fix Version/s: 0.6.0 Resolution: Fixed > Address compatibility issue by SCM DB instances change > -- > > Key: HDDS-3499 > URL: https://issues.apache.org/jira/browse/HDDS-3499 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: SCM >Reporter: Li Cheng >Assignee: Marton Elek >Priority: Blocker > Labels: Triaged > Fix For: 0.6.0 > > > After https://issues.apache.org/jira/browse/HDDS-3172, SCM now has one single > rocksdb instance instead of multiple db instances. > For running Ozone cluster, we need to address compatibility issues. One > possible way is to have a side-way tool to migrate old metadata from multiple > dbs to current single db. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] codecov-commenter edited a comment on pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

codecov-commenter edited a comment on pull request #986: URL: https://github.com/apache/hadoop-ozone/pull/986#issuecomment-642271726 # [Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=h1) Report > Merging [#986](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=desc) into [master](https://codecov.io/gh/apache/hadoop-ozone/commit/f7e95d9b015e764ca93cfe2ccfc96d95160931bc=desc) will **decrease** coverage by `0.10%`. > The diff coverage is `65.28%`. [](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=tree) ```diff @@ Coverage Diff @@ ## master #986 +/- ## - Coverage 69.48% 69.38% -0.11% + Complexity 9110 9102 -8 Files 961 961 Lines 4813248123 -9 Branches 4672 4676 +4 - Hits 3344633388 -58 - Misses1246812519 +51 + Partials 2218 2216 -2 ``` | [Impacted Files](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=tree) | Coverage Δ | Complexity Δ | | |---|---|---|---| | [...main/java/org/apache/hadoop/ozone/OzoneConsts.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29tbW9uL3NyYy9tYWluL2phdmEvb3JnL2FwYWNoZS9oYWRvb3Avb3pvbmUvT3pvbmVDb25zdHMuamF2YQ==) | `84.21% <ø> (ø)` | `1.00 <0.00> (ø)` | | | [...apache/hadoop/hdds/utils/db/RocksDBCheckpoint.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvZnJhbWV3b3JrL3NyYy9tYWluL2phdmEvb3JnL2FwYWNoZS9oYWRvb3AvaGRkcy91dGlscy9kYi9Sb2Nrc0RCQ2hlY2twb2ludC5qYXZh) | `90.90% <ø> (+0.90%)` | `5.00 <0.00> (-3.00)` | :arrow_up: | | [.../java/org/apache/hadoop/ozone/om/OMConfigKeys.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLW96b25lL2NvbW1vbi9zcmMvbWFpbi9qYXZhL29yZy9hcGFjaGUvaGFkb29wL296b25lL29tL09NQ29uZmlnS2V5cy5qYXZh) | `100.00% <ø> (ø)` | `1.00 <0.00> (ø)` | | | [.../apache/hadoop/ozone/om/OMDBCheckpointServlet.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLW96b25lL296b25lLW1hbmFnZXIvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9vbS9PTURCQ2hlY2twb2ludFNlcnZsZXQuamF2YQ==) | `66.26% <ø> (-4.27%)` | `8.00 <0.00> (-2.00)` | | | [...a/org/apache/hadoop/ozone/om/ha/OMNodeDetails.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLW96b25lL296b25lLW1hbmFnZXIvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9vbS9oYS9PTU5vZGVEZXRhaWxzLmphdmE=) | `86.66% <ø> (ø)` | `12.00 <0.00> (ø)` | | | [...p/ozone/om/ratis/utils/OzoneManagerRatisUtils.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLW96b25lL296b25lLW1hbmFnZXIvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9vbS9yYXRpcy91dGlscy9Pem9uZU1hbmFnZXJSYXRpc1V0aWxzLmphdmE=) | `67.44% <0.00%> (-19.13%)` | `39.00 <0.00> (ø)` | | | [.../java/org/apache/hadoop/ozone/om/OzoneManager.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLW96b25lL296b25lLW1hbmFnZXIvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9vbS9Pem9uZU1hbmFnZXIuamF2YQ==) | `64.22% <12.50%> (-0.37%)` | `185.00 <1.00> (-1.00)` | | | [...adoop/ozone/om/ratis/OzoneManagerStateMachine.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLW96b25lL296b25lLW1hbmFnZXIvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9vbS9yYXRpcy9Pem9uZU1hbmFnZXJTdGF0ZU1hY2hpbmUuamF2YQ==) | `58.03% <90.00%> (+2.29%)` | `27.00 <4.00> (+1.00)` | | | [.../org/apache/hadoop/hdds/scm/pipeline/Pipeline.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29tbW9uL3NyYy9tYWluL2phdmEvb3JnL2FwYWNoZS9oYWRvb3AvaGRkcy9zY20vcGlwZWxpbmUvUGlwZWxpbmUuamF2YQ==) | `85.71% <100.00%> (+0.20%)` | `44.00 <0.00> (+1.00)` | | | [.../org/apache/hadoop/ozone/om/helpers/OmKeyInfo.java](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree#diff-aGFkb29wLW96b25lL2NvbW1vbi9zcmMvbWFpbi9qYXZhL29yZy9hcGFjaGUvaGFkb29wL296b25lL29tL2hlbHBlcnMvT21LZXlJbmZvLmphdmE=) | `86.25% <100.00%> (+0.33%)` | `42.00 <0.00> (+2.00)` | | | ... and [28 more](https://codecov.io/gh/apache/hadoop-ozone/pull/986/diff?src=pr=tree-more) | | -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`

[jira] [Updated] (HDDS-3737) Improve OM performance

[ https://issues.apache.org/jira/browse/HDDS-3737?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDDS-3737: - Labels: pull-request-available (was: ) > Improve OM performance > -- > > Key: HDDS-3737 > URL: https://issues.apache.org/jira/browse/HDDS-3737 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Reporter: runzhiwang >Assignee: runzhiwang >Priority: Major > Labels: pull-request-available > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

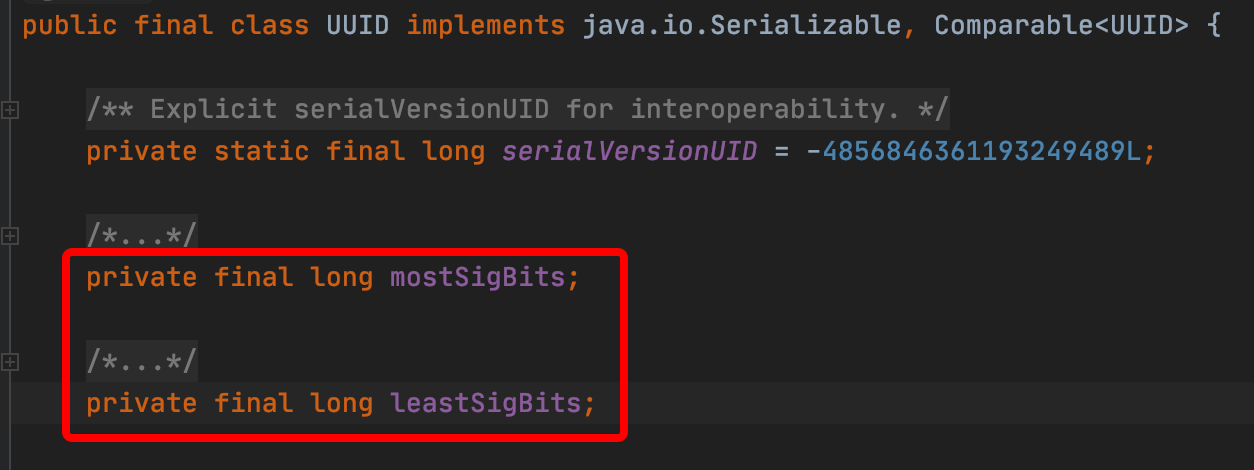

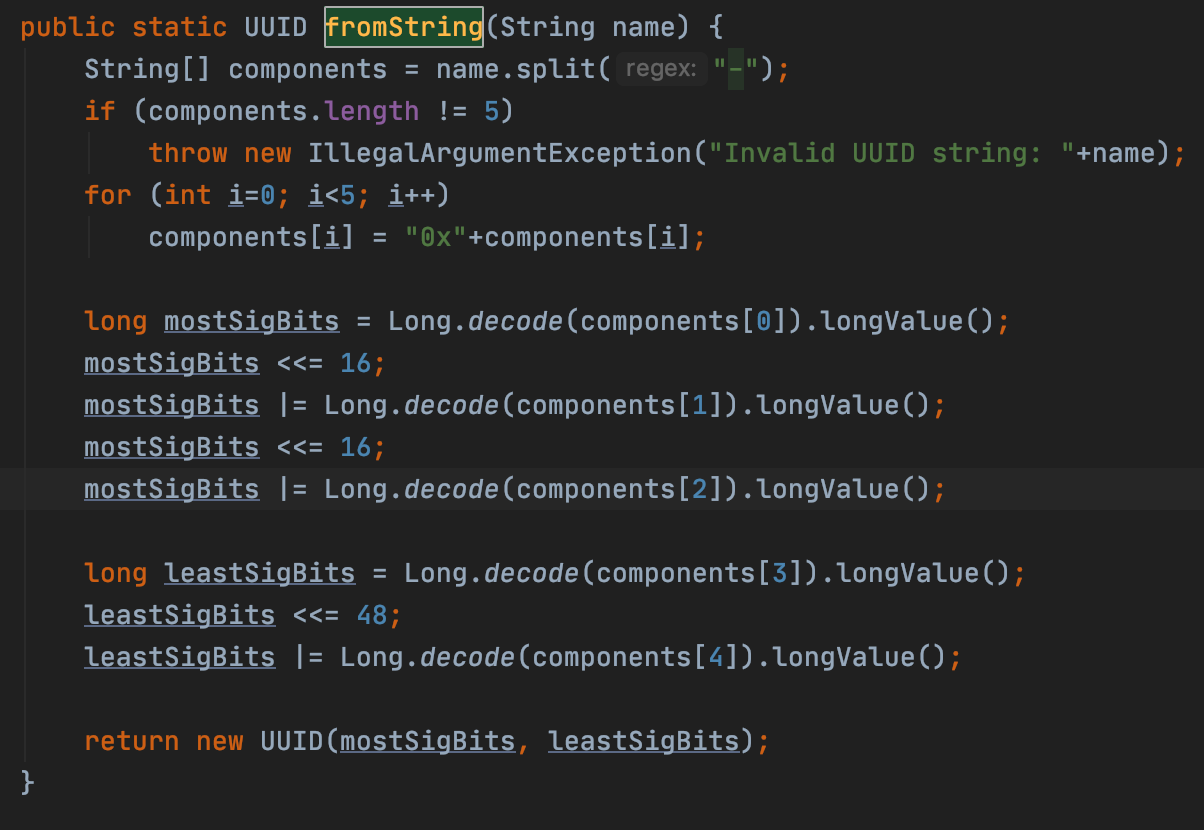

[GitHub] [hadoop-ozone] runzhiwang opened a new pull request #1053: HDDS-3737. Avoid serialization between UUID and String

runzhiwang opened a new pull request #1053: URL: https://github.com/apache/hadoop-ozone/pull/1053 ## What changes were proposed in this pull request? **What's the problem ?** Serialization between UUID and String: UUID.toString (I have improved this) and UUID.fromString, not only cost cpu, because encode and decode String and UUID.fromString both cost cpu, but also make the proto bigger, because uuid is just a number which is 16Byte, covet it to string will need 32Byte. **How to fix ?** Actually, JDK implement UUID with two long number: `mostSigBits` and `leastSigBits`. When `UUID.fromString`, JDK get `mostSigBits` and `leastSigBits` from String, and new a object of UUID. So we can convert UUID to 2 long number in proto, which make serialization and de serialization UUID more faster, and make proto smaller.   ## What is the link to the Apache JIRA https://issues.apache.org/jira/browse/HDDS-3763 ## How was this patch tested? Existed tests. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] codecov-commenter commented on pull request #1002: HDDS-3642. Stop/Pause Background services while replacing OM DB with checkpoint from Leader

codecov-commenter commented on pull request #1002: URL: https://github.com/apache/hadoop-ozone/pull/1002#issuecomment-642325738 # [Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/1002?src=pr=h1) Report > Merging [#1002](https://codecov.io/gh/apache/hadoop-ozone/pull/1002?src=pr=desc) into [master](https://codecov.io/gh/apache/hadoop-ozone/commit/f7e95d9b015e764ca93cfe2ccfc96d95160931bc=desc) will **decrease** coverage by `0.03%`. > The diff coverage is `0.00%`. [](https://codecov.io/gh/apache/hadoop-ozone/pull/1002?src=pr=tree) ```diff @@ Coverage Diff @@ ## master#1002 +/- ## - Coverage 69.48% 69.45% -0.04% - Complexity 9110 9114 +4 Files 961 961 Lines 4813248155 +23 Branches 4672 4679 +7 Hits 3344633446 - Misses1246812494 +26 + Partials 2218 2215 -3 ``` | [Impacted Files](https://codecov.io/gh/apache/hadoop-ozone/pull/1002?src=pr=tree) | Coverage Δ | Complexity Δ | | |---|---|---|---| | [.../java/org/apache/hadoop/ozone/om/OzoneManager.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLW96b25lL296b25lLW1hbmFnZXIvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9vbS9Pem9uZU1hbmFnZXIuamF2YQ==) | `64.27% <0.00%> (-0.32%)` | `186.00 <0.00> (ø)` | | | [...er/common/transport/server/GrpcXceiverService.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29udGFpbmVyLXNlcnZpY2Uvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9jb250YWluZXIvY29tbW9uL3RyYW5zcG9ydC9zZXJ2ZXIvR3JwY1hjZWl2ZXJTZXJ2aWNlLmphdmE=) | `70.00% <0.00%> (-10.00%)` | `3.00% <0.00%> (ø%)` | | | [...ache/hadoop/ozone/om/codec/S3SecretValueCodec.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLW96b25lL296b25lLW1hbmFnZXIvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9vbS9jb2RlYy9TM1NlY3JldFZhbHVlQ29kZWMuamF2YQ==) | `90.90% <0.00%> (-9.10%)` | `3.00% <0.00%> (-1.00%)` | | | [.../transport/server/ratis/ContainerStateMachine.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29udGFpbmVyLXNlcnZpY2Uvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9jb250YWluZXIvY29tbW9uL3RyYW5zcG9ydC9zZXJ2ZXIvcmF0aXMvQ29udGFpbmVyU3RhdGVNYWNoaW5lLmphdmE=) | `69.36% <0.00%> (-6.76%)` | `59.00% <0.00%> (-5.00%)` | | | [...ozone/container/ozoneimpl/ContainerController.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29udGFpbmVyLXNlcnZpY2Uvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9jb250YWluZXIvb3pvbmVpbXBsL0NvbnRhaW5lckNvbnRyb2xsZXIuamF2YQ==) | `63.15% <0.00%> (-5.27%)` | `11.00% <0.00%> (-1.00%)` | | | [...iner/common/transport/server/ratis/CSMMetrics.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29udGFpbmVyLXNlcnZpY2Uvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9jb250YWluZXIvY29tbW9uL3RyYW5zcG9ydC9zZXJ2ZXIvcmF0aXMvQ1NNTWV0cmljcy5qYXZh) | `67.69% <0.00%> (-3.08%)` | `19.00% <0.00%> (-1.00%)` | | | [.../ozone/container/common/volume/AbstractFuture.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29udGFpbmVyLXNlcnZpY2Uvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9jb250YWluZXIvY29tbW9uL3ZvbHVtZS9BYnN0cmFjdEZ1dHVyZS5qYXZh) | `29.87% <0.00%> (-0.52%)` | `19.00% <0.00%> (-1.00%)` | | | [...doop/ozone/container/keyvalue/KeyValueHandler.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29udGFpbmVyLXNlcnZpY2Uvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9jb250YWluZXIva2V5dmFsdWUvS2V5VmFsdWVIYW5kbGVyLmphdmE=) | `61.55% <0.00%> (-0.45%)` | `63.00% <0.00%> (-1.00%)` | | | [...adoop/ozone/om/request/key/OMKeyCommitRequest.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLW96b25lL296b25lLW1hbmFnZXIvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2hhZG9vcC9vem9uZS9vbS9yZXF1ZXN0L2tleS9PTUtleUNvbW1pdFJlcXVlc3QuamF2YQ==) | `97.00% <0.00%> (ø)` | `18.00% <0.00%> (+1.00%)` | | | [.../org/apache/hadoop/hdds/scm/pipeline/Pipeline.java](https://codecov.io/gh/apache/hadoop-ozone/pull/1002/diff?src=pr=tree#diff-aGFkb29wLWhkZHMvY29tbW9uL3NyYy9tYWluL2phdmEvb3JnL2FwYWNoZS9oYWRvb3AvaGRkcy9zY20vcGlwZWxpbmUvUGlwZWxpbmUuamF2YQ==) | `85.71% <0.00%> (+0.20%)` | `44.00% <0.00%> (+1.00%)` | | | ... and [15

[GitHub] [hadoop-ozone] codecov-commenter edited a comment on pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

codecov-commenter edited a comment on pull request #986: URL: https://github.com/apache/hadoop-ozone/pull/986#issuecomment-642271726 # [Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=h1) Report > :exclamation: No coverage uploaded for pull request base (`master@3328d7d`). [Click here to learn what that means](https://docs.codecov.io/docs/error-reference#section-missing-base-commit). > The diff coverage is `n/a`. [](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=tree) ```diff @@Coverage Diff@@ ## master #986 +/- ## = Coverage ? 69.48% Complexity? 9112 = Files ? 961 Lines ?48107 Branches ? 4669 = Hits ?33428 Misses?12468 Partials ? 2211 ``` -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=footer). Last update [3328d7d...b2bda39](https://codecov.io/gh/apache/hadoop-ozone/pull/986?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 merged pull request #1052: HDDS-3749. Addendum: Fix checkstyle issue.

bharatviswa504 merged pull request #1052: URL: https://github.com/apache/hadoop-ozone/pull/1052 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on pull request #1034: HDDS-3749. Improve OM performance with 3.7% by avoid stream.collect

bharatviswa504 commented on pull request #1034: URL: https://github.com/apache/hadoop-ozone/pull/1034#issuecomment-642305014 Hi @xiaoyuyao This has caused checkstyle issues and PR's are failing with CI run. Posted a PR to fix this https://github.com/apache/hadoop-ozone/pull/1052 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 opened a new pull request #1052: HDDS-3479. Addendum: Fix checkstyle issue.

bharatviswa504 opened a new pull request #1052: URL: https://github.com/apache/hadoop-ozone/pull/1052 ## What changes were proposed in this pull request? (Please fill in changes proposed in this fix) ## What is the link to the Apache JIRA (Please create an issue in ASF JIRA before opening a pull request, and you need to set the title of the pull request which starts with the corresponding JIRA issue number. (e.g. HDDS-. Fix a typo in YYY.) Please replace this section with the link to the Apache JIRA) ## How was this patch tested? (Please explain how this patch was tested. Ex: unit tests, manual tests) (If this patch involves UI changes, please attach a screen-shot; otherwise, remove this) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Resolved] (HDDS-3749) Improve OM performance with 3.7% by avoid stream.collect

[ https://issues.apache.org/jira/browse/HDDS-3749?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xiaoyu Yao resolved HDDS-3749. -- Fix Version/s: 0.6.0 Resolution: Fixed > Improve OM performance with 3.7% by avoid stream.collect > > > Key: HDDS-3749 > URL: https://issues.apache.org/jira/browse/HDDS-3749 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: runzhiwang >Assignee: runzhiwang >Priority: Major > Labels: pull-request-available > Fix For: 0.6.0 > > Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png, > screenshot-4.png, screenshot-5.png > > > I start a ozone cluster with 1000 datanodes and 10 s3gateway, and run two > weeks with heavy workload, and perf om. > !screenshot-1.png! > !screenshot-2.png! > !screenshot-3.png! > !screenshot-4.png! > !screenshot-5.png! -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] xiaoyuyao merged pull request #1034: HDDS-3749. Improve OM performance with 3.7% by avoid stream.collect

xiaoyuyao merged pull request #1034: URL: https://github.com/apache/hadoop-ozone/pull/1034 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on a change in pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

bharatviswa504 commented on a change in pull request #986:

URL: https://github.com/apache/hadoop-ozone/pull/986#discussion_r438435882

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/ratis/OzoneManagerStateMachine.java

##

@@ -338,20 +352,19 @@ public void unpause(long newLastAppliedSnaphsotIndex,

}

/**

- * Take OM Ratis snapshot. Write the snapshot index to file. Snapshot index

- * is the log index corresponding to the last applied transaction on the OM

- * State Machine.

+ * Take OM Ratis snapshot is a dummy operation as when double buffer

+ * flushes the lastAppliedIndex is flushed to DB and that is used as

+ * snapshot index.

*

* @return the last applied index on the state machine which has been

* stored in the snapshot file.

*/

@Override

public long takeSnapshot() throws IOException {

-LOG.info("Saving Ratis snapshot on the OM.");

-if (ozoneManager != null) {

- return ozoneManager.saveRatisSnapshot().getIndex();

-}

-return 0;

+LOG.info("Current Snapshot Index {}", getLastAppliedTermIndex());

+long lastAppliedIndex = getLastAppliedTermIndex().getIndex();

+ozoneManager.getMetadataManager().getStore().flush();

+return lastAppliedIndex;

Review comment:

So, that is the reason get lastAppliedIndex first, then flush. If we

change the order, it will lead to data loss.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on a change in pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

bharatviswa504 commented on a change in pull request #986:

URL: https://github.com/apache/hadoop-ozone/pull/986#discussion_r438435468

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/ratis/OzoneManagerStateMachine.java

##

@@ -338,20 +352,19 @@ public void unpause(long newLastAppliedSnaphsotIndex,

}

/**

- * Take OM Ratis snapshot. Write the snapshot index to file. Snapshot index

- * is the log index corresponding to the last applied transaction on the OM

- * State Machine.

+ * Take OM Ratis snapshot is a dummy operation as when double buffer

+ * flushes the lastAppliedIndex is flushed to DB and that is used as

+ * snapshot index.

*

* @return the last applied index on the state machine which has been

* stored in the snapshot file.

*/

@Override

public long takeSnapshot() throws IOException {

-LOG.info("Saving Ratis snapshot on the OM.");

-if (ozoneManager != null) {

- return ozoneManager.saveRatisSnapshot().getIndex();

-}

-return 0;

+LOG.info("Current Snapshot Index {}", getLastAppliedTermIndex());

+long lastAppliedIndex = getLastAppliedTermIndex().getIndex();

+ozoneManager.getMetadataManager().getStore().flush();

+return lastAppliedIndex;

Review comment:

Why it will lead to data loss. I am returning already flushed index, and

ratis only does log purge which have been flushed to DB.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on a change in pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

bharatviswa504 commented on a change in pull request #986:

URL: https://github.com/apache/hadoop-ozone/pull/986#discussion_r438435468

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/ratis/OzoneManagerStateMachine.java

##

@@ -338,20 +352,19 @@ public void unpause(long newLastAppliedSnaphsotIndex,

}

/**

- * Take OM Ratis snapshot. Write the snapshot index to file. Snapshot index

- * is the log index corresponding to the last applied transaction on the OM

- * State Machine.

+ * Take OM Ratis snapshot is a dummy operation as when double buffer

+ * flushes the lastAppliedIndex is flushed to DB and that is used as

+ * snapshot index.

*

* @return the last applied index on the state machine which has been

* stored in the snapshot file.

*/

@Override

public long takeSnapshot() throws IOException {

-LOG.info("Saving Ratis snapshot on the OM.");

-if (ozoneManager != null) {

- return ozoneManager.saveRatisSnapshot().getIndex();

-}

-return 0;

+LOG.info("Current Snapshot Index {}", getLastAppliedTermIndex());

+long lastAppliedIndex = getLastAppliedTermIndex().getIndex();

+ozoneManager.getMetadataManager().getStore().flush();

+return lastAppliedIndex;

Review comment:

Why it will lead to data loss. We are returning already flushed index,

and ratis only does log purge which have been flushed to DB.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on a change in pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

bharatviswa504 commented on a change in pull request #986:

URL: https://github.com/apache/hadoop-ozone/pull/986#discussion_r438434496

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/ratis/OzoneManagerStateMachine.java

##

@@ -338,20 +352,19 @@ public void unpause(long newLastAppliedSnaphsotIndex,

}

/**

- * Take OM Ratis snapshot. Write the snapshot index to file. Snapshot index

- * is the log index corresponding to the last applied transaction on the OM

- * State Machine.

+ * Take OM Ratis snapshot is a dummy operation as when double buffer

+ * flushes the lastAppliedIndex is flushed to DB and that is used as

+ * snapshot index.

*

* @return the last applied index on the state machine which has been

* stored in the snapshot file.

*/

@Override

public long takeSnapshot() throws IOException {

-LOG.info("Saving Ratis snapshot on the OM.");

-if (ozoneManager != null) {

- return ozoneManager.saveRatisSnapshot().getIndex();

-}

-return 0;

+LOG.info("Current Snapshot Index {}", getLastAppliedTermIndex());

+long lastAppliedIndex = getLastAppliedTermIndex().getIndex();

+ozoneManager.getMetadataManager().getStore().flush();

Review comment:

Done

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/ratis/OzoneManagerStateMachine.java

##

@@ -515,13 +528,12 @@ private synchronized void

computeAndUpdateLastAppliedIndex(

}

}

- public void updateLastAppliedIndexWithSnaphsotIndex() {

+ public void updateLastAppliedIndexWithSnaphsotIndex() throws IOException {

Review comment:

Done

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/OzoneManager.java

##

@@ -3168,8 +3172,8 @@ File replaceOMDBWithCheckpoint(long lastAppliedIndex,

Path checkpointPath)

* All the classes which use/ store MetadataManager should also be updated

* with the new MetadataManager instance.

*/

- void reloadOMState(long newSnapshotIndex,

- long newSnapShotTermIndex) throws IOException {

+ void reloadOMState(long newSnapshotIndex, long newSnapShotTermIndex)

+ throws IOException {

Review comment:

Done

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/OzoneManager.java

##

@@ -3033,32 +3024,47 @@ public TermIndex installSnapshot(String leaderId) {

DBCheckpoint omDBcheckpoint = getDBCheckpointFromLeader(leaderId);

Path newDBlocation = omDBcheckpoint.getCheckpointLocation();

-// Check if current ratis log index is smaller than the downloaded

-// snapshot index. If yes, proceed by stopping the ratis server so that

-// the OM state can be re-initialized. If no, then do not proceed with

-// installSnapshot.

+LOG.info("Downloaded checkpoint from Leader {}, in to the location {}",

+leaderId, newDBlocation);

+

long lastAppliedIndex = omRatisServer.getLastAppliedTermIndex().getIndex();

-long checkpointSnapshotIndex = omDBcheckpoint.getRatisSnapshotIndex();

-long checkpointSnapshotTermIndex =

-omDBcheckpoint.getRatisSnapshotTerm();

-if (checkpointSnapshotIndex <= lastAppliedIndex) {

- LOG.error("Failed to install checkpoint from OM leader: {}. The last " +

- "applied index: {} is greater than or equal to the checkpoint's"

- + " " +

- "snapshot index: {}. Deleting the downloaded checkpoint {}",

- leaderId,

- lastAppliedIndex, checkpointSnapshotIndex,

+

+// Check if current ratis log index is smaller than the downloaded

+// checkpoint transaction index. If yes, proceed by stopping the ratis

+// server so that the OM state can be re-initialized. If no, then do not

+// proceed with installSnapshot.

+

+OMTransactionInfo omTransactionInfo = null;

+

+Path dbDir = newDBlocation.getParent();

+if (dbDir == null) {

+ LOG.error("Incorrect DB location path {} received from checkpoint.",

newDBlocation);

- try {

-FileUtils.deleteFully(newDBlocation);

- } catch (IOException e) {

-LOG.error("Failed to fully delete the downloaded DB checkpoint {} " +

-"from OM leader {}.", newDBlocation,

-leaderId, e);

- }

return null;

}

+try {

+ omTransactionInfo =

+ OzoneManagerRatisUtils.getTransactionInfoFromDownloadedSnapshot(

+ configuration, dbDir);

+} catch (Exception ex) {

+ LOG.error("Failed during opening downloaded snapshot from " +

+ "{} to obtain transaction index", newDBlocation, ex);

+ return null;

+}

+

+boolean canProceed =

+OzoneManagerRatisUtils.verifyTransactionInfo(omTransactionInfo,

+lastAppliedIndex, leaderId, newDBlocation);

+

Review comment:

Done.

##

File path:

[GitHub] [hadoop-ozone] bharatviswa504 commented on pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

bharatviswa504 commented on pull request #986: URL: https://github.com/apache/hadoop-ozone/pull/986#issuecomment-642292921 Thank You @hanishakoneru for the review. I have addressed review comments. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] hanishakoneru commented on a change in pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

hanishakoneru commented on a change in pull request #986:

URL: https://github.com/apache/hadoop-ozone/pull/986#discussion_r438432283

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/ratis/OzoneManagerStateMachine.java

##

@@ -338,20 +352,19 @@ public void unpause(long newLastAppliedSnaphsotIndex,

}

/**

- * Take OM Ratis snapshot. Write the snapshot index to file. Snapshot index

- * is the log index corresponding to the last applied transaction on the OM

- * State Machine.

+ * Take OM Ratis snapshot is a dummy operation as when double buffer

+ * flushes the lastAppliedIndex is flushed to DB and that is used as

+ * snapshot index.

*

* @return the last applied index on the state machine which has been

* stored in the snapshot file.

*/

@Override

public long takeSnapshot() throws IOException {

-LOG.info("Saving Ratis snapshot on the OM.");

-if (ozoneManager != null) {

- return ozoneManager.saveRatisSnapshot().getIndex();

-}

-return 0;

+LOG.info("Current Snapshot Index {}", getLastAppliedTermIndex());

+long lastAppliedIndex = getLastAppliedTermIndex().getIndex();

+ozoneManager.getMetadataManager().getStore().flush();

+return lastAppliedIndex;

Review comment:

So this needs to be fixed then. Or could lead to data loss.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on a change in pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

bharatviswa504 commented on a change in pull request #986:

URL: https://github.com/apache/hadoop-ozone/pull/986#discussion_r438431531

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/ratis/OzoneManagerStateMachine.java

##

@@ -338,20 +352,19 @@ public void unpause(long newLastAppliedSnaphsotIndex,

}

/**

- * Take OM Ratis snapshot. Write the snapshot index to file. Snapshot index

- * is the log index corresponding to the last applied transaction on the OM

- * State Machine.

+ * Take OM Ratis snapshot is a dummy operation as when double buffer

+ * flushes the lastAppliedIndex is flushed to DB and that is used as

+ * snapshot index.

*

* @return the last applied index on the state machine which has been

* stored in the snapshot file.

*/

@Override

public long takeSnapshot() throws IOException {

-LOG.info("Saving Ratis snapshot on the OM.");

-if (ozoneManager != null) {

- return ozoneManager.saveRatisSnapshot().getIndex();

-}

-return 0;

+LOG.info("Current Snapshot Index {}", getLastAppliedTermIndex());

+long lastAppliedIndex = getLastAppliedTermIndex().getIndex();

+ozoneManager.getMetadataManager().getStore().flush();

+return lastAppliedIndex;

Review comment:

Yes. It would not.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on a change in pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

bharatviswa504 commented on a change in pull request #986:

URL: https://github.com/apache/hadoop-ozone/pull/986#discussion_r438407648

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/snapshot/OzoneManagerSnapshotProvider.java

##

@@ -112,16 +112,16 @@ public OzoneManagerSnapshotProvider(ConfigurationSource

conf,

*/

public DBCheckpoint getOzoneManagerDBSnapshot(String leaderOMNodeID)

throws IOException {

-String snapshotFileName = OM_SNAPSHOT_DB + "_" +

System.currentTimeMillis();

-File targetFile = new File(omSnapshotDir, snapshotFileName + ".tar.gz");

+String snapshotTime = Long.toString(System.currentTimeMillis());

+String snapshotFileName = Paths.get(omSnapshotDir.getAbsolutePath(),

+snapshotTime, OM_DB_NAME).toFile().getAbsolutePath();

+File targetFile = new File(snapshotFileName + ".tar.gz");

Review comment:

Still we need this. As We use DBStore.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] hanishakoneru commented on a change in pull request #986: HDDS-3476. Use persisted transaction info during OM startup in OM StateMachine.

hanishakoneru commented on a change in pull request #986:

URL: https://github.com/apache/hadoop-ozone/pull/986#discussion_r438353082

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/OmMetadataManagerImpl.java

##

@@ -259,16 +261,25 @@ public void start(OzoneConfiguration configuration)

throws IOException {

rocksDBConfiguration.setSyncOption(true);

}

- DBStoreBuilder dbStoreBuilder = DBStoreBuilder.newBuilder(configuration,

- rocksDBConfiguration).setName(OM_DB_NAME)

- .setPath(Paths.get(metaDir.getPath()));

+ this.store = loadDB(configuration, metaDir);

- this.store = addOMTablesAndCodecs(dbStoreBuilder).build();

+ // This value will be used internally, not to be exposed to end users.

Review comment:

We can remove this comment now.

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/OzoneManager.java

##

@@ -3033,32 +3024,47 @@ public TermIndex installSnapshot(String leaderId) {

DBCheckpoint omDBcheckpoint = getDBCheckpointFromLeader(leaderId);

Path newDBlocation = omDBcheckpoint.getCheckpointLocation();

-// Check if current ratis log index is smaller than the downloaded

-// snapshot index. If yes, proceed by stopping the ratis server so that

-// the OM state can be re-initialized. If no, then do not proceed with

-// installSnapshot.

+LOG.info("Downloaded checkpoint from Leader {}, in to the location {}",

+leaderId, newDBlocation);

+

long lastAppliedIndex = omRatisServer.getLastAppliedTermIndex().getIndex();

-long checkpointSnapshotIndex = omDBcheckpoint.getRatisSnapshotIndex();

-long checkpointSnapshotTermIndex =

-omDBcheckpoint.getRatisSnapshotTerm();

-if (checkpointSnapshotIndex <= lastAppliedIndex) {

- LOG.error("Failed to install checkpoint from OM leader: {}. The last " +

- "applied index: {} is greater than or equal to the checkpoint's"

- + " " +

- "snapshot index: {}. Deleting the downloaded checkpoint {}",

- leaderId,

- lastAppliedIndex, checkpointSnapshotIndex,

+

+// Check if current ratis log index is smaller than the downloaded

+// checkpoint transaction index. If yes, proceed by stopping the ratis

+// server so that the OM state can be re-initialized. If no, then do not

+// proceed with installSnapshot.

+

+OMTransactionInfo omTransactionInfo = null;

+

+Path dbDir = newDBlocation.getParent();

+if (dbDir == null) {

+ LOG.error("Incorrect DB location path {} received from checkpoint.",

newDBlocation);

- try {

-FileUtils.deleteFully(newDBlocation);

- } catch (IOException e) {

-LOG.error("Failed to fully delete the downloaded DB checkpoint {} " +

-"from OM leader {}.", newDBlocation,

-leaderId, e);

- }

return null;

}

+try {

+ omTransactionInfo =

+ OzoneManagerRatisUtils.getTransactionInfoFromDownloadedSnapshot(

+ configuration, dbDir);

+} catch (Exception ex) {

+ LOG.error("Failed during opening downloaded snapshot from " +

+ "{} to obtain transaction index", newDBlocation, ex);

+ return null;

+}

+

+boolean canProceed =

+OzoneManagerRatisUtils.verifyTransactionInfo(omTransactionInfo,

+lastAppliedIndex, leaderId, newDBlocation);

+

Review comment:

The lastAppliedIndex could have been updated between its assignment and

the canProceed check. This check should be synchronous. Or at least the

assignment should happen after reading the transactionInfo from DB.

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/OzoneManager.java

##

@@ -3168,8 +3172,8 @@ File replaceOMDBWithCheckpoint(long lastAppliedIndex,

Path checkpointPath)

* All the classes which use/ store MetadataManager should also be updated

* with the new MetadataManager instance.

*/

- void reloadOMState(long newSnapshotIndex,

- long newSnapShotTermIndex) throws IOException {

+ void reloadOMState(long newSnapshotIndex, long newSnapShotTermIndex)

+ throws IOException {

Review comment:

NIT: SnapShot -> Snapshot

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/snapshot/OzoneManagerSnapshotProvider.java

##

@@ -112,16 +112,16 @@ public OzoneManagerSnapshotProvider(ConfigurationSource

conf,

*/

public DBCheckpoint getOzoneManagerDBSnapshot(String leaderOMNodeID)

throws IOException {

-String snapshotFileName = OM_SNAPSHOT_DB + "_" +

System.currentTimeMillis();

-File targetFile = new File(omSnapshotDir, snapshotFileName + ".tar.gz");

+String snapshotTime = Long.toString(System.currentTimeMillis());

+String snapshotFileName = Paths.get(omSnapshotDir.getAbsolutePath(),

+snapshotTime,

[jira] [Commented] (HDDS-1134) OzoneFileSystem#create should allocate alteast one block for future writes.

[ https://issues.apache.org/jira/browse/HDDS-1134?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17132701#comment-17132701 ] Bharat Viswanadham commented on HDDS-1134: -- Hi [~msingh] I see this is being handled in OzoneManager, if the length passed is zero, we allocate at least one block. Code link. [#codelink|https://github.com/apache/hadoop-ozone/blob/master/hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/request/key/OMKeyCreateRequest.java#L104] > OzoneFileSystem#create should allocate alteast one block for future writes. > --- > > Key: HDDS-1134 > URL: https://issues.apache.org/jira/browse/HDDS-1134 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: Ozone Manager >Affects Versions: 0.4.0 >Reporter: Mukul Kumar Singh >Assignee: Mukul Kumar Singh >Priority: Major > Labels: TriagePending > Fix For: 0.6.0 > > Attachments: HDDS-1134.001.patch > > > While opening a new key, OM should at least allocate one block for the key, > this should be done in case the client is not sure about the number of block. > However for users of OzoneFS, if the key is being created for a directory, > then no blocks should be allocated. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Resolved] (HDDS-1134) OzoneFileSystem#create should allocate alteast one block for future writes.

[ https://issues.apache.org/jira/browse/HDDS-1134?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Bharat Viswanadham resolved HDDS-1134. -- Fix Version/s: 0.6.0 Resolution: Fixed This has been already fixed. Right now, we allocate at least one block in createKey call. This has been taken care of during the OM HA refactor. > OzoneFileSystem#create should allocate alteast one block for future writes. > --- > > Key: HDDS-1134 > URL: https://issues.apache.org/jira/browse/HDDS-1134 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: Ozone Manager >Affects Versions: 0.4.0 >Reporter: Mukul Kumar Singh >Assignee: Mukul Kumar Singh >Priority: Major > Labels: TriagePending > Fix For: 0.6.0 > > Attachments: HDDS-1134.001.patch > > > While opening a new key, OM should at least allocate one block for the key, > this should be done in case the client is not sure about the number of block. > However for users of OzoneFS, if the key is being created for a directory, > then no blocks should be allocated. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] smengcl edited a comment on pull request #1046: HDDS-3767. [OFS] Address merge conflicts after HDDS-3627

smengcl edited a comment on pull request #1046: URL: https://github.com/apache/hadoop-ozone/pull/1046#issuecomment-642120503 > Thanks this patch @smengcl (And sorry for the master changes, we worked paralell.) > > Understanding this PR is really challenging. Finally, I fetched the PR branch and compared with the master and everything seems to be the right place. Thanks for the review @elek . Actually I put a [compare link](https://github.com/smengcl/hadoop-ozone/compare/HDDS-2665-ofs...HDDS-3767) in a previous comment which should have make the review easier in theory. > > One question: Why did you deleted `TestRootedOzoneFileSystemWithMocks.java`? I removed `TestRootedOzoneFileSystemWithMocks.java` because HDDS-3627 removed `TestOzoneFileSystemWithMocks.java`. I have just restored `TestRootedOzoneFileSystemWithMocks.java` under `ozonefs`. > > And one comment: `META-INF/services/...FileSystem` entries can be created for ofs, too. (In the future) I believe we could only put one implementation [here](https://github.com/apache/hadoop-ozone/blob/072370b947416d89fae11d00a84a1d9a6b31beaa/hadoop-ozone/ozonefs/src/main/resources/META-INF/services/org.apache.hadoop.fs.FileSystem#L16)? Maybe later we can replace `org.apache.hadoop.fs.ozone.OzoneFileSystem` with `org.apache.hadoop.fs.ozone.RootedOzoneFileSystem`. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3685) Remove replay logic from actual request logic

[ https://issues.apache.org/jira/browse/HDDS-3685?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Bharat Viswanadham updated HDDS-3685: - Priority: Critical (was: Major) > Remove replay logic from actual request logic > - > > Key: HDDS-3685 > URL: https://issues.apache.org/jira/browse/HDDS-3685 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: Bharat Viswanadham >Assignee: Bharat Viswanadham >Priority: Critical > > HDDS-3476 used the transaction info persisted in OM DB during double buffer > flush when OM is restarted. This transaction info log index and the term are > used as a snapshot index. So, we can remove the replay logic from actual > request logic. (As now we shall never have the transaction which is applied > to OM DB will never be again replayed to DB) -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Comment Edited] (HDDS-3707) UUID can be non unique for a huge samples

[ https://issues.apache.org/jira/browse/HDDS-3707?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17132675#comment-17132675 ] Arpit Agarwal edited comment on HDDS-3707 at 6/10/20, 7:52 PM: --- Hi [~maobaolong] the probability is so infinitesimal I don't think it is worth trying to change it. :) was (Author: arpitagarwal): Hi [~maobaolong] the probability is so infinitesimal I don't think it is worth trying to fix it. :) > UUID can be non unique for a huge samples > - > > Key: HDDS-3707 > URL: https://issues.apache.org/jira/browse/HDDS-3707 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: Ozone Datanode, Ozone Manager, SCM >Affects Versions: 0.7.0 >Reporter: maobaolong >Priority: Minor > Labels: Triaged > > Now, we have used UUID as id for many places, for example, DataNodeId, > pipelineId. I believe that it should be pretty less chance to met collision, > but, if met the collision, we are in trouble. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Commented] (HDDS-3707) UUID can be non unique for a huge samples

[ https://issues.apache.org/jira/browse/HDDS-3707?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17132675#comment-17132675 ] Arpit Agarwal commented on HDDS-3707: - Hi [~maobaolong] the probability is so infinitesimal I don't think it is worth trying to fix it. :) > UUID can be non unique for a huge samples > - > > Key: HDDS-3707 > URL: https://issues.apache.org/jira/browse/HDDS-3707 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: Ozone Datanode, Ozone Manager, SCM >Affects Versions: 0.7.0 >Reporter: maobaolong >Priority: Minor > Labels: Triaged > > Now, we have used UUID as id for many places, for example, DataNodeId, > pipelineId. I believe that it should be pretty less chance to met collision, > but, if met the collision, we are in trouble. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Resolved] (HDDS-3639) Maintain FileHandle Information in OMMetadataManager

[ https://issues.apache.org/jira/browse/HDDS-3639?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Hanisha Koneru resolved HDDS-3639. -- Resolution: Fixed > Maintain FileHandle Information in OMMetadataManager > > > Key: HDDS-3639 > URL: https://issues.apache.org/jira/browse/HDDS-3639 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Filesystem >Reporter: Prashant Pogde >Assignee: Prashant Pogde >Priority: Major > Labels: pull-request-available > > Maintain FileHandle Information in OMMetadataManager. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-3775) Add documentation for flame graph

Wei-Chiu Chuang created HDDS-3775: - Summary: Add documentation for flame graph Key: HDDS-3775 URL: https://issues.apache.org/jira/browse/HDDS-3775 Project: Hadoop Distributed Data Store Issue Type: Task Reporter: Wei-Chiu Chuang HDDS-1116 added flame graph but looks like there's no documentation to enable it. To enable it, add configuration hdds.profiler.endpoint.enabled = true to ozone-site.xml download the profiler from https://github.com/jvm-profiling-tools/async-profiler to a local directory, say /tmp and start the DataNode with java system property -Dasync.profiler.home=/tmp or environment variable $ASYNC_PROFILER_HOME and then go to the datanode servlet, say dn1:9883/prof to see the graph. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] vivekratnavel commented on pull request #1047: HDDS-3726. Upload code coverage data to Codecov and enable checks in …

vivekratnavel commented on pull request #1047: URL: https://github.com/apache/hadoop-ozone/pull/1047#issuecomment-642162869 @elek Thanks for the review and merge! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] maobaolong commented on pull request #1051: Redundancy if condition code in ListPipelinesSubcommand

maobaolong commented on pull request #1051: URL: https://github.com/apache/hadoop-ozone/pull/1051#issuecomment-642148224 @bhemanthkumar Thanks for working on this, please fix the style problem. Also, please update the description from the given template. Reference this PR. https://github.com/apache/hadoop-ozone/pull/920 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] maobaolong commented on a change in pull request #1051: Redundancy if condition code in ListPipelinesSubcommand

maobaolong commented on a change in pull request #1051:

URL: https://github.com/apache/hadoop-ozone/pull/1051#discussion_r438285600

##

File path:

hadoop-hdds/tools/src/main/java/org/apache/hadoop/hdds/scm/cli/pipeline/ListPipelinesSubcommand.java

##

@@ -54,17 +54,13 @@

@Override

public Void call() throws Exception {

try (ScmClient scmClient = parent.getParent().createScmClient()) {

- if (Strings.isNullOrEmpty(factor) && Strings.isNullOrEmpty(state)) {

-scmClient.listPipelines().forEach(System.out::println);

- } else {

-scmClient.listPipelines().stream()

-.filter(p -> ((Strings.isNullOrEmpty(factor) ||

-(p.getFactor().toString().compareToIgnoreCase(factor) == 0))

-&& (Strings.isNullOrEmpty(state) ||

-(p.getPipelineState().toString().compareToIgnoreCase(state)

-== 0

+ scmClient.listPipelines().stream()

Review comment:

Please reduce the indent to fix the checkstyle failure.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3726) Upload code coverage to Codecov and enable checks in PR workflow of Github Actions

[ https://issues.apache.org/jira/browse/HDDS-3726?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Marton Elek updated HDDS-3726: -- Fix Version/s: 0.6.0 > Upload code coverage to Codecov and enable checks in PR workflow of Github > Actions > -- > > Key: HDDS-3726 > URL: https://issues.apache.org/jira/browse/HDDS-3726 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: build >Affects Versions: 0.6.0 >Reporter: Vivek Ratnavel Subramanian >Assignee: Vivek Ratnavel Subramanian >Priority: Major > Labels: pull-request-available > Fix For: 0.6.0 > > > HDDS-3170 aggregates code coverage across all components. We need to upload > the reports to codecov to be able to keep track of coverage and coverage > diffs to be able to tell if a PR does not do a good job on writing unit tests. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] smengcl commented on pull request #1046: HDDS-3767. [OFS] Address merge conflicts after HDDS-3627

smengcl commented on pull request #1046: URL: https://github.com/apache/hadoop-ozone/pull/1046#issuecomment-642120503 > Thanks this patch @smengcl (And sorry for the master changes, we worked paralell.) > > Understanding this PR is really challenging. Finally, I fetched the PR branch and compared with the master and everything seems to be the right place. Thanks for the review @elek . Actually I put a [compare link](https://github.com/smengcl/hadoop-ozone/compare/HDDS-2665-ofs...HDDS-3767) in a previous comment which should have make the review easier in theory. > > One question: Why did you deleted `TestRootedOzoneFileSystemWithMocks.java`? I removed `TestRootedOzoneFileSystemWithMocks.java` because HDDS-3627 removed `TestOzoneFileSystemWithMocks.java`. Shall we put it back? > > And one comment: `META-INF/services/...FileSystem` entries can be created for ofs, too. (In the future) I believe we could only put one implementation [here](https://github.com/apache/hadoop-ozone/blob/072370b947416d89fae11d00a84a1d9a6b31beaa/hadoop-ozone/ozonefs/src/main/resources/META-INF/services/org.apache.hadoop.fs.FileSystem#L16)? Maybe later we can replace `org.apache.hadoop.fs.ozone.OzoneFileSystem` with `org.apache.hadoop.fs.ozone.RootedOzoneFileSystem`. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Comment Edited] (HDDS-3747) Remove the redundancy if condition code in ListPipelinesSubcommand