[GitHub] [spark] dilipbiswal commented on a change in pull request #26042: [SPARK-29092][SQL] Report additional information about DataSourceScanExec in EXPLAIN FORMATTED

dilipbiswal commented on a change in pull request #26042: [SPARK-29092][SQL]

Report additional information about DataSourceScanExec in EXPLAIN FORMATTED

URL: https://github.com/apache/spark/pull/26042#discussion_r333671047

##

File path:

sql/hive-thriftserver/src/test/scala/org/apache/spark/sql/hive/thriftserver/ThriftServerQueryTestSuite.scala

##

@@ -100,7 +100,8 @@ class ThriftServerQueryTestSuite extends SQLQueryTestSuite

{

"subquery/in-subquery/in-group-by.sql",

"subquery/in-subquery/simple-in.sql",

"subquery/in-subquery/in-order-by.sql",

-"subquery/in-subquery/in-set-operations.sql"

+"subquery/in-subquery/in-set-operations.sql",

+"explain.sql"

Review comment:

@cloud-fan Let me enable it and see.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dilipbiswal commented on a change in pull request #26042: [SPARK-29092][SQL] Report additional information about DataSourceScanExec in EXPLAIN FORMATTED

dilipbiswal commented on a change in pull request #26042: [SPARK-29092][SQL] Report additional information about DataSourceScanExec in EXPLAIN FORMATTED URL: https://github.com/apache/spark/pull/26042#discussion_r333670345 ## File path: sql/core/src/test/resources/sql-tests/results/explain.sql.out ## @@ -58,6 +58,11 @@ struct (1) Scan parquet default.explain_temp1 Output: [key#x, val#x] +Batched: true +Format: Parquet Review comment: @cloud-fan I can filter it. But wanted to double check first. The one we print in the node name is `relation.toString` and the one printed here is `relation.format.toString`. Are they going to be same always ? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] brkyvz commented on issue #26071: [SPARK-29412][SQL] refine the document of v2 session catalog config

brkyvz commented on issue #26071: [SPARK-29412][SQL] refine the document of v2 session catalog config URL: https://github.com/apache/spark/pull/26071#issuecomment-540707191 LGTM as well This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] rdblue commented on issue #26071: [SPARK-29412][SQL] refine the document of v2 session catalog config

rdblue commented on issue #26071: [SPARK-29412][SQL] refine the document of v2 session catalog config URL: https://github.com/apache/spark/pull/26071#issuecomment-540704376 +1 Unless there are other comments, I'll merge this later today. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen commented on issue #25994: [SPARK-29323][WEBUI] Add tooltip for The Executors Tab's column names in the Spark history server Page

srowen commented on issue #25994: [SPARK-29323][WEBUI] Add tooltip for The Executors Tab's column names in the Spark history server Page URL: https://github.com/apache/spark/pull/25994#issuecomment-540700641 We'll have to wait for Jenkins to come back, to evaluate this with tests, but I'm sure it will be OK. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen closed pull request #25994: [SPARK-29323][WEBUI] Add tooltip for The Executors Tab's column names in the Spark history server Page

srowen closed pull request #25994: [SPARK-29323][WEBUI] Add tooltip for The Executors Tab's column names in the Spark history server Page URL: https://github.com/apache/spark/pull/25994 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] liucht-inspur opened a new pull request #25994: [SPARK-29323][WEBUI] Add tooltip for The Executors Tab's column names in the Spark history server Page

liucht-inspur opened a new pull request #25994: [SPARK-29323][WEBUI] Add tooltip for The Executors Tab's column names in the Spark history server Page URL: https://github.com/apache/spark/pull/25994 ### What changes were proposed in this pull request? This PR is Adding tooltip for The Executors Tab's column names include RDD Blocks, Disk Used,Cores, Activity Tasks, Failed Tasks , Complete Tasks, Total Tasks in the history server Page. https://issues.apache.org/jira/browse/SPARK-29323  I have modify the following code in executorspage-template.html Before: RDD Blocks Disk Used Cores Active Tasks Failed Tasks Complete Tasks Total Tasks  After: RDD Blocks Disk Used Cores Active Tasks Failed Tasks Complete Tasks Total Tasks  ### Why are the changes needed? the spark Executors of history Tab page, the Summary part shows the line in the list of title, but format is irregular. Some column names have tooltip, such as Storage Memory, Task Time(GC Time), Input, Shuffle Read, Shuffle Write and Blacklisted, but there are still some list names that have not tooltip. They are RDD Blocks, Disk Used,Cores, Activity Tasks, Failed Tasks , Complete Tasks and Total Tasks. oddly, Executors section below,All the column names Contains the column names above have tooltip . It's important for open source projects to have consistent style and user-friendly UI, and I'm working on keeping it consistent And more user-friendly. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Manual tests for Chrome, Firefox and Safari Authored-by: liucht-inspur Signed-off-by: liucht-inspur This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] rafaelkyrdan commented on issue #19424: [SPARK-22197][SQL] push down operators to data source before planning

rafaelkyrdan commented on issue #19424: [SPARK-22197][SQL] push down operators

to data source before planning

URL: https://github.com/apache/spark/pull/19424#issuecomment-540687736

I think I found what I have to implement:

`

trait TableScan {

def buildScan(): RDD[Row]

}

`

am I right?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on issue #26083: [SPARK-29417][CORE] Resource Scheduling - add TaskContext.resource java api

tgravescs commented on issue #26083: [SPARK-29417][CORE] Resource Scheduling - add TaskContext.resource java api URL: https://github.com/apache/spark/pull/26083#issuecomment-540684613 @mengxr @jiangxb1987 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs opened a new pull request #26083: [SPARK-29417][CORE] Resource Scheduling - add TaskContext.resource java api

tgravescs opened a new pull request #26083: [SPARK-29417][CORE] Resource Scheduling - add TaskContext.resource java api URL: https://github.com/apache/spark/pull/26083 ### What changes were proposed in this pull request? We added a TaskContext.resources() api, but I realized this is returning a scala Map which is not ideal for access from Java. Here I add a resourcesJMap function which returns a java.util.Map to make it easily accessible from Java. ### Why are the changes needed? Java API access ### Does this PR introduce any user-facing change? Yes, new TaskContext function to access from Java ### How was this patch tested? new unit test This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on issue #24851: [SPARK-27303][GRAPH] Add Spark Graph API

dongjoon-hyun commented on issue #24851: [SPARK-27303][GRAPH] Add Spark Graph API URL: https://github.com/apache/spark/pull/24851#issuecomment-540683239 Thank you for the swift updates. I'll review this afternoon again. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a change in pull request #24851: [SPARK-27303][GRAPH] Add Spark Graph API

dongjoon-hyun commented on a change in pull request #24851:

[SPARK-27303][GRAPH] Add Spark Graph API

URL: https://github.com/apache/spark/pull/24851#discussion_r42983

##

File path:

graph/api/src/main/scala/org/apache/spark/graph/api/PropertyGraphWriter.scala

##

@@ -0,0 +1,92 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.graph.api

+

+import java.util.Locale

+

+import org.apache.spark.sql.SaveMode

+

+abstract class PropertyGraphWriter(val graph: PropertyGraph) {

+

+ protected var saveMode: SaveMode = SaveMode.ErrorIfExists

+ protected var format: String =

+graph.cypherSession.sparkSession.sessionState.conf.defaultDataSourceName

+

+ /**

+ * Specifies the behavior when the graph already exists. Options include:

+ *

+ * `SaveMode.Overwrite`: overwrite the existing data.

+ * `SaveMode.Ignore`: ignore the operation (i.e. no-op).

+ * `SaveMode.ErrorIfExists`: throw an exception at runtime.

+ *

+ *

+ * When writing the default option is `ErrorIfExists`.

+ *

+ * @since 3.0.0

+ */

+ def mode(mode: SaveMode): PropertyGraphWriter = {

+mode match {

+ case SaveMode.Append =>

+throw new IllegalArgumentException(s"Unsupported save mode: $mode. " +

+ "Accepted save modes are 'overwrite', 'ignore', 'error',

'errorifexists'.")

+ case _ =>

+this.saveMode = mode

+}

+this

+ }

+

+ /**

+ * Specifies the behavior when the graph already exists. Options include:

+ *

+ * `overwrite`: overwrite the existing graph.

+ * `ignore`: ignore the operation (i.e. no-op).

+ * `error` or `errorifexists`: default option, throw an exception at

runtime.

+ *

+ *

+ * @since 3.0.0

+ */

+ def mode(saveMode: String): PropertyGraphWriter = {

+saveMode.toLowerCase(Locale.ROOT) match {

+ case "overwrite" => mode(SaveMode.Overwrite)

+ case "ignore" => mode(SaveMode.Ignore)

+ case "error" | "errorifexists" => mode(SaveMode.ErrorIfExists)

+ case "default" => this

Review comment:

Ur, this can be wrong in case of `mode("overwrite").mode("default")`. Please

merge `default` to the line 67. Please add a test case for this, too.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 commented on a change in pull request #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener

Ngone51 commented on a change in pull request #25943:

[WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for

(SQL)AppStatusListener

URL: https://github.com/apache/spark/pull/25943#discussion_r333634637

##

File path: core/src/main/scala/org/apache/spark/status/storeTypes.scala

##

@@ -76,6 +109,29 @@ private[spark] class JobDataWrapper(

@JsonIgnore @KVIndex("completionTime")

private def completionTime: Long =

info.completionTime.map(_.getTime).getOrElse(-1L)

+

+ def toLiveJob: LiveJob = {

Review comment:

While converting `LiveJob` to `JobDataWrapper`, only

`completedIndices.size`/`completedStages.size` are written into KVStore. So,

it's impossible to recover the detail `completedIndices`/`completedStages`. So,

as a compromises, we only recover the number in this case.

And this brings a problem that the recovered live job wouldn't be 100% the

same as the previous one. But, this can be acceptable when you look at

their(`completedIndices`, `completedStages`) usages in `AppStatusListener`, as

it does not mistake the final result(that is number).

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on issue #26028: [SPARK-29359][SQL][TESTS] Better exception handling in (SQL|ThriftServer)QueryTestSuite

dongjoon-hyun commented on issue #26028: [SPARK-29359][SQL][TESTS] Better exception handling in (SQL|ThriftServer)QueryTestSuite URL: https://github.com/apache/spark/pull/26028#issuecomment-540667842 Hi, @wangyum . You can merge this after manual testing. Or, we can wait until our Jenkins is back again. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

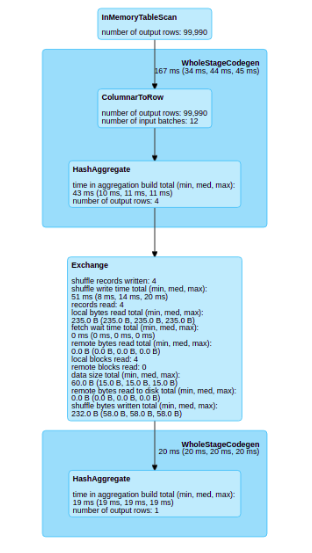

[GitHub] [spark] planga82 opened a new pull request #26082: [SPARK-29431][WebUI] Improve Web UI / Sql tab visualization with cached dataframes.

planga82 opened a new pull request #26082: [SPARK-29431][WebUI] Improve Web UI / Sql tab visualization with cached dataframes. URL: https://github.com/apache/spark/pull/26082 ### What changes were proposed in this pull request? With this pull request I want to improve the Web UI / SQL tab visualization. The principal problem that I find is when you have a cache in your plan, the SQL visualization don’t show any information about the part of the plan that has been cached. Before the change  After the change  ### Why are the changes needed? When we have a SQL plan with cached dataframes we lose the graphical information of this dataframe in the sql tab ### Does this PR introduce any user-facing change? Yes, in the sql tab ### How was this patch tested? Unit testing and manual tests throught spark shell This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] rafaelkyrdan commented on issue #19424: [SPARK-22197][SQL] push down operators to data source before planning

rafaelkyrdan commented on issue #19424: [SPARK-22197][SQL] push down operators to data source before planning URL: https://github.com/apache/spark/pull/19424#issuecomment-540666492 @cloud-fan I'm wondering whether it is not a priority or it wasn't easy to implement? If I want to implement it what class/interface should I extend? Is it possible to do it in a custom `JDBC` source? Do you have any suggestions? The use case looks like this: there are 50M records in the DB I wanna select only 1M why I should read all of them? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen commented on a change in pull request #26065: [SPARK-29404][DOCS] Add an explanation about the executor color changed in WebUI documentation

srowen commented on a change in pull request #26065: [SPARK-29404][DOCS] Add an explanation about the executor color changed in WebUI documentation URL: https://github.com/apache/spark/pull/26065#discussion_r333611603 ## File path: docs/web-ui.md ## @@ -51,12 +51,17 @@ The information that is displayed in this section is -* Details of jobs grouped by status: Displays detailed information of the jobs including Job ID, description (with a link to detailed job page), submitted time, duration, stages summary and tasks progress bar +When you click on a executor, you can make the color darker to point it at. Review comment: No, I just don't think it's meaningful enough to try to explain, and the new text above is incorrect too. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 commented on a change in pull request #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener

Ngone51 commented on a change in pull request #25943:

[WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for

(SQL)AppStatusListener

URL: https://github.com/apache/spark/pull/25943#discussion_r333611704

##

File path: core/src/main/scala/org/apache/spark/status/AppStatusListener.scala

##

@@ -103,6 +104,81 @@ private[spark] class AppStatusListener(

}

}

+ // visible for tests

+ private[spark] def recoverLiveEntities(): Unit = {

+if (!live) {

+ kvstore.view(classOf[JobDataWrapper])

+.asScala.filter(_.info.status == JobExecutionStatus.RUNNING)

Review comment:

Yeah. I didn't see any place we set Job status to `UNKNOWN`.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 commented on a change in pull request #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener

Ngone51 commented on a change in pull request #25943:

[WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for

(SQL)AppStatusListener

URL: https://github.com/apache/spark/pull/25943#discussion_r333610531

##

File path: core/src/main/scala/org/apache/spark/status/AppStatusListener.scala

##

@@ -103,6 +104,81 @@ private[spark] class AppStatusListener(

}

}

+ // visible for tests

+ private[spark] def recoverLiveEntities(): Unit = {

+if (!live) {

+ kvstore.view(classOf[JobDataWrapper])

+.asScala.filter(_.info.status == JobExecutionStatus.RUNNING)

+.map(_.toLiveJob).foreach(job => liveJobs.put(job.jobId, job))

+

+ kvstore.view(classOf[StageDataWrapper]).asScala

+.filter { stageData =>

+ stageData.info.status == v1.StageStatus.PENDING ||

+stageData.info.status == v1.StageStatus.ACTIVE

+}

+.map { stageData =>

+ val stageId = stageData.info.stageId

+ val jobs = liveJobs.values.filter(_.stageIds.contains(stageId)).toSeq

+ stageData.toLiveStage(jobs)

+}.foreach { stage =>

+val stageId = stage.info.stageId

+val stageAttempt = stage.info.attemptNumber()

+liveStages.put((stageId, stageAttempt), stage)

+

+kvstore.view(classOf[ExecutorStageSummaryWrapper])

+ .index("stage")

+ .first(Array(stageId, stageAttempt))

+ .last(Array(stageId, stageAttempt))

+ .asScala

+ .map(_.toLiveExecutorStageSummary)

+ .foreach { esummary =>

+stage.executorSummaries.put(esummary.executorId, esummary)

+if (esummary.isBlacklisted) {

+ stage.blackListedExecutors += esummary.executorId

+ liveExecutors(esummary.executorId).isBlacklisted = true

+ liveExecutors(esummary.executorId).blacklistedInStages += stageId

+}

+ }

+

+

+kvstore.view(classOf[TaskDataWrapper])

+ .parent(Array(stageId, stageAttempt))

+ .index(TaskIndexNames.STATUS)

+ .first(TaskState.RUNNING.toString)

Review comment:

Ignore `LAUNCHING` is safe, because `status` in `TaskDataWrapper` is

actually from `LiveTask.TaskInfo.status`:

https://github.com/apache/spark/blob/e1ea806b3075d279b5f08a29fe4c1ad6d3c4191a/core/src/main/scala/org/apache/spark/scheduler/TaskInfo.scala#L96-L112

And there's no `LAUNCHING`.

Another available running status is `GET RESULT`. But this also seems

impossible. `TaskInfo` in `LiveTask` is only updated when

`SparkListenerTaskStart` and `SparkListenerTaskEnd` events comes. And these two

events don't change task's status to `GET RESULT`.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun closed pull request #26081: [SPARK-29032][FOLLOWUP][DOCS] Add PrometheusServlet in the monitoring documentation

dongjoon-hyun closed pull request #26081: [SPARK-29032][FOLLOWUP][DOCS] Add PrometheusServlet in the monitoring documentation URL: https://github.com/apache/spark/pull/26081 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on issue #26081: [SPARK-29032][FOLLOWUP][DOC] Document PrometheusServlet in the monitoring documentation

dongjoon-hyun commented on issue #26081: [SPARK-29032][FOLLOWUP][DOC] Document PrometheusServlet in the monitoring documentation URL: https://github.com/apache/spark/pull/26081#issuecomment-540653620 +1, LGTM. Thanks for the interest. Merged to master. Actually, I'm working on the full document, too. Please wait for one day~ :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun closed pull request #26060: [SPARK-29400][CORE] Improve PrometheusResource to use labels

dongjoon-hyun closed pull request #26060: [SPARK-29400][CORE] Improve PrometheusResource to use labels URL: https://github.com/apache/spark/pull/26060 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 commented on a change in pull request #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener

Ngone51 commented on a change in pull request #25943:

[WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for

(SQL)AppStatusListener

URL: https://github.com/apache/spark/pull/25943#discussion_r333598202

##

File path: core/src/main/scala/org/apache/spark/status/AppStatusListener.scala

##

@@ -103,6 +104,81 @@ private[spark] class AppStatusListener(

}

}

+ // visible for tests

+ private[spark] def recoverLiveEntities(): Unit = {

+if (!live) {

+ kvstore.view(classOf[JobDataWrapper])

+.asScala.filter(_.info.status == JobExecutionStatus.RUNNING)

+.map(_.toLiveJob).foreach(job => liveJobs.put(job.jobId, job))

+

+ kvstore.view(classOf[StageDataWrapper]).asScala

+.filter { stageData =>

+ stageData.info.status == v1.StageStatus.PENDING ||

+stageData.info.status == v1.StageStatus.ACTIVE

+}

+.map { stageData =>

+ val stageId = stageData.info.stageId

+ val jobs = liveJobs.values.filter(_.stageIds.contains(stageId)).toSeq

+ stageData.toLiveStage(jobs)

+}.foreach { stage =>

+val stageId = stage.info.stageId

+val stageAttempt = stage.info.attemptNumber()

+liveStages.put((stageId, stageAttempt), stage)

Review comment:

Yeah. But for now, I'd prefer to use Tuple2 (stageId, stageAttempt) as

`AppStatusListener` already do. And we could leave such optimization in a

separate PR later.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on issue #26060: [SPARK-29400][CORE] Improve PrometheusResource to use labels

dongjoon-hyun commented on issue #26060: [SPARK-29400][CORE] Improve PrometheusResource to use labels URL: https://github.com/apache/spark/pull/26060#issuecomment-540649306 Thank you all, @yuecong , @viirya , @srowen , @HyukjinKwon . Merged to master. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 commented on a change in pull request #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener

Ngone51 commented on a change in pull request #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener URL: https://github.com/apache/spark/pull/25943#discussion_r333596849 ## File path: core/src/main/scala/org/apache/spark/status/api/v1/api.scala ## @@ -181,6 +182,7 @@ class RDDStorageInfo private[spark]( val partitions: Option[Seq[RDDPartitionInfo]]) class RDDDataDistribution private[spark]( +val executorId: String, Review comment: Hi @squito, thanks for providing the api versioning requirements. That's really helpful. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 commented on a change in pull request #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener

Ngone51 commented on a change in pull request #25943:

[WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for

(SQL)AppStatusListener

URL: https://github.com/apache/spark/pull/25943#discussion_r333596371

##

File path: core/src/main/scala/org/apache/spark/storage/StorageLevel.scala

##

@@ -163,6 +163,20 @@ object StorageLevel {

val MEMORY_AND_DISK_SER_2 = new StorageLevel(true, true, false, false, 2)

val OFF_HEAP = new StorageLevel(true, true, true, false, 1)

+ /**

+ * :: DeveloperApi ::

+ * Return the StorageLevel object with the specified description.

+ */

+ @DeveloperApi

+ def fromDescription(desc: String): StorageLevel = {

Review comment:

Good suggestion, I'll add a unit test for it.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 commented on issue #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener

Ngone51 commented on issue #25943: [WIP][SPARK-29261][SQL][CORE] Support recover live entities from KVStore for (SQL)AppStatusListener URL: https://github.com/apache/spark/pull/25943#issuecomment-540647353 @squito > Sorry I am getting caught up on a lot of stuff here -- how is this related to #25577 ? This PR just extracts recovering live entities functionality from #25577. > Just for storing live entities, this approach seems more promising to me -- using Java serialization is really bad for compatibility, any changes to classes will break deserialization. If we want the snapshot / checkpoint files to be able to entirely replace the event log files, we'll need the compatibility. Hmm...This PR doesn't store live entities, instead, it recovers (or say, restore) live entities from a KVStore. For snapshot / checkpoint files, I think @HeartSaVioR has already posted a good solution in #25811, which has good compatibility for serialization/de-serialization. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] nonsleepr commented on a change in pull request #26000: [SPARK-29330][CORE][YARN] Allow users to chose the name of Spark Shuffle service

nonsleepr commented on a change in pull request #26000: [SPARK-29330][CORE][YARN] Allow users to chose the name of Spark Shuffle service URL: https://github.com/apache/spark/pull/26000#discussion_r333594674 ## File path: docs/running-on-yarn.md ## @@ -492,6 +492,13 @@ To use a custom metrics.properties for the application master and executors, upd If it is not set then the YARN application ID is used. + + spark.yarn.shuffle.service.name + spark_shuffle + +Name of the external shuffle service. Review comment: That's what "external" word is for, isn't it? Should I reword it as follows? > Name of the external shuffle service used by executors. *The external service is configured and started by YARN (see [Configuring the External Shuffle Service](#configuring-the-external-shuffle-service) for details). Apache Spark distribution uses name `spark_shuffle`, but other distributions (e.g. HDP) might use other names.* This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE]

Support fractional resources for task resource scheduling

URL: https://github.com/apache/spark/pull/26078#discussion_r333581119

##

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##

@@ -2775,7 +2775,10 @@ object SparkContext extends Logging {

s" = ${taskReq.amount}")

}

// Compare and update the max slots each executor can provide.

-val resourceNumSlots = execAmount / taskReq.amount

+// If the configured amount per task was < 1.0, a task is subdividing

+// executor resources. If the amount per task was > 1.0, the task wants

+// multiple executor resources.

+val resourceNumSlots = Math.floor(execAmount *

taskReq.numParts/taskReq.amount).toInt

Review comment:

add spaces around the "/"

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE]

Support fractional resources for task resource scheduling

URL: https://github.com/apache/spark/pull/26078#discussion_r333539938

##

File path: docs/configuration.md

##

@@ -1982,9 +1982,13 @@ Apart from these, the following properties are also

available, and may be useful

spark.task.resource.{resourceName}.amount

1

-Amount of a particular resource type to allocate for each task. If this is

specified

-you must also provide the executor config

spark.executor.resource.{resourceName}.amount

-and any corresponding discovery configs so that your executors are created

with that resource type.

+Amount of a particular resource type to allocate for each task, note that

this can be a double.

+If this is specified you must also provide the executor config

+spark.executor.resource.{resourceName}.amount and any

corresponding discovery configs

+so that your executors are created with that resource type. In addition to

whole amounts,

+a fractional amount (for example, 0.25, or 1/4th of a resource) may be

specified.

Review comment:

change "or 1/4th" to something like "which means 1/4th". They way I read

the or makes me question is I can pass in "1/4" to the config, just want it to

be clear.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE]

Support fractional resources for task resource scheduling

URL: https://github.com/apache/spark/pull/26078#discussion_r333571682

##

File path:

core/src/main/scala/org/apache/spark/scheduler/ExecutorResourceInfo.scala

##

@@ -25,10 +25,13 @@ import org.apache.spark.resource.{ResourceAllocator,

ResourceInformation}

* information.

* @param name Resource name

* @param addresses Resource addresses provided by the executor

+ * @param numParts Number of ways each resource is subdivided when scheduling

tasks

*/

-private[spark] class ExecutorResourceInfo(name: String, addresses: Seq[String])

+private[spark] class ExecutorResourceInfo(name: String, addresses: Seq[String],

+ numParts: Int)

Review comment:

fix formatting, should be 4 spaces idented from left

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE]

Support fractional resources for task resource scheduling

URL: https://github.com/apache/spark/pull/26078#discussion_r333586973

##

File path:

core/src/main/scala/org/apache/spark/scheduler/cluster/CoarseGrainedSchedulerBackend.scala

##

@@ -215,7 +217,14 @@ class CoarseGrainedSchedulerBackend(scheduler:

TaskSchedulerImpl, val rpcEnv: Rp

totalCoreCount.addAndGet(cores)

totalRegisteredExecutors.addAndGet(1)

val resourcesInfo = resources.map{ case (k, v) =>

-(v.name, new ExecutorResourceInfo(v.name, v.addresses))}

+(v.name,

+ new ExecutorResourceInfo(v.name, v.addresses,

+ taskResources

Review comment:

instead of doing this filter each time how about we convert taskResources

into a Map (and rename it) one time at the top when its declared and then just

lookup in the map here.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling URL: https://github.com/apache/spark/pull/26078#discussion_r333538698 ## File path: core/src/main/scala/org/apache/spark/resource/ResourceUtils.scala ## @@ -47,7 +48,9 @@ private[spark] case class ResourceRequest( discoveryScript: Option[String], vendor: Option[String]) -private[spark] case class ResourceRequirement(resourceName: String, amount: Int) +private[spark] case class ResourceRequirement(resourceName: String, amount: Int, Review comment: lets add description, especially explaining numParts and amount. Mention fractional use case where amount is user specified get changed to 1 and numParts is the number per resource equivalent. Also need to fix formatting. Please use formatting like ResourceRequest above This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE]

Support fractional resources for task resource scheduling

URL: https://github.com/apache/spark/pull/26078#discussion_r333564756

##

File path: docs/configuration.md

##

@@ -1982,9 +1982,13 @@ Apart from these, the following properties are also

available, and may be useful

spark.task.resource.{resourceName}.amount

1

-Amount of a particular resource type to allocate for each task. If this is

specified

-you must also provide the executor config

spark.executor.resource.{resourceName}.amount

-and any corresponding discovery configs so that your executors are created

with that resource type.

+Amount of a particular resource type to allocate for each task, note that

this can be a double.

+If this is specified you must also provide the executor config

+spark.executor.resource.{resourceName}.amount and any

corresponding discovery configs

+so that your executors are created with that resource type. In addition to

whole amounts,

+a fractional amount (for example, 0.25, or 1/4th of a resource) may be

specified.

+Fractional amounts must be less than or equal to 0.5, or in other words,

the minimum amount of

Review comment:

we should also add that any fractional value specified gets rounded to have

an even number of tasks per resource. ie 0. end up with 4 tasks per

resource

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE]

Support fractional resources for task resource scheduling

URL: https://github.com/apache/spark/pull/26078#discussion_r333582986

##

File path:

core/src/main/scala/org/apache/spark/resource/ResourceAllocator.scala

##

@@ -30,27 +30,35 @@ trait ResourceAllocator {

protected def resourceName: String

protected def resourceAddresses: Seq[String]

+ protected def resourcesPerAddress: Int

/**

- * Map from an address to its availability, the value `true` means the

address is available,

- * while value `false` means the address is assigned.

+ * Map from an address to its availability, a value > 0 means the address is

available,

+ * while value of 0 means the address is fully assigned.

+ *

+ * For task resources ([[org.apache.spark.scheduler.ExecutorResourceInfo]]),

this value

+ * can be fractional.

Review comment:

I think we should clarify, here its actually not fractional its a multiple.

I think if its called tasksPerAddress that will help

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on a change in pull request #26078: [SPARK-29151][CORE]

Support fractional resources for task resource scheduling

URL: https://github.com/apache/spark/pull/26078#discussion_r333574367

##

File path:

core/src/main/scala/org/apache/spark/scheduler/ExecutorResourceInfo.scala

##

@@ -25,10 +25,13 @@ import org.apache.spark.resource.{ResourceAllocator,

ResourceInformation}

* information.

* @param name Resource name

* @param addresses Resource addresses provided by the executor

+ * @param numParts Number of ways each resource is subdivided when scheduling

tasks

*/

-private[spark] class ExecutorResourceInfo(name: String, addresses: Seq[String])

+private[spark] class ExecutorResourceInfo(name: String, addresses: Seq[String],

+ numParts: Int)

extends ResourceInformation(name, addresses.toArray) with ResourceAllocator {

override protected def resourceName = this.name

override protected def resourceAddresses = this.addresses

+ override protected def resourcesPerAddress = numParts

Review comment:

I think we should rename. resourcesPerAddress could be confusing since an

address is a single resource.

I'm thinking we just call this tasksPerAddress and perhaps update this to be

consistent everywhere - ie rename numParts as well.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] viirya commented on issue #25979: [SPARK-29295][SQL] Insert overwrite to Hive external table partition should delete old data

viirya commented on issue #25979: [SPARK-29295][SQL] Insert overwrite to Hive external table partition should delete old data URL: https://github.com/apache/spark/pull/25979#issuecomment-540638149 @cloud-fan @felixcheung Do you have more comments on this? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] MaxGekk commented on a change in pull request #26079: [SPARK-29369][SQL] Support string intervals without the `interval` prefix

MaxGekk commented on a change in pull request #26079: [SPARK-29369][SQL]

Support string intervals without the `interval` prefix

URL: https://github.com/apache/spark/pull/26079#discussion_r333579599

##

File path:

common/unsafe/src/main/java/org/apache/spark/unsafe/types/CalendarInterval.java

##

@@ -73,45 +72,53 @@ private static long toLong(String s) {

* This method is case-insensitive.

*/

public static CalendarInterval fromString(String s) {

-if (s == null) {

- return null;

-}

-s = s.trim();

-Matcher m = p.matcher(s);

-if (!m.matches() || s.compareToIgnoreCase("interval") == 0) {

+try {

+ return fromCaseInsensitiveString(s);

+} catch (IllegalArgumentException e) {

return null;

-} else {

- long months = toLong(m.group(1)) * 12 + toLong(m.group(2));

- long microseconds = toLong(m.group(3)) * MICROS_PER_WEEK;

- microseconds += toLong(m.group(4)) * MICROS_PER_DAY;

- microseconds += toLong(m.group(5)) * MICROS_PER_HOUR;

- microseconds += toLong(m.group(6)) * MICROS_PER_MINUTE;

- microseconds += toLong(m.group(7)) * MICROS_PER_SECOND;

- microseconds += toLong(m.group(8)) * MICROS_PER_MILLI;

- microseconds += toLong(m.group(9));

- return new CalendarInterval((int) months, microseconds);

}

}

/**

- * Convert a string to CalendarInterval. Unlike fromString, this method can

handle

+ * Convert a string to CalendarInterval. This method can handle

* strings without the `interval` prefix and throws IllegalArgumentException

* when the input string is not a valid interval.

*

* @throws IllegalArgumentException if the string is not a valid internal.

*/

public static CalendarInterval fromCaseInsensitiveString(String s) {

-if (s == null || s.trim().isEmpty()) {

- throw new IllegalArgumentException("Interval cannot be null or blank.");

+if (s == null) {

+ throw new IllegalArgumentException("Interval cannot be null");

}

-String sInLowerCase = s.trim().toLowerCase(Locale.ROOT);

-String interval =

- sInLowerCase.startsWith("interval ") ? sInLowerCase : "interval " +

sInLowerCase;

-CalendarInterval cal = fromString(interval);

-if (cal == null) {

+String trimmed = s.trim();

+if (trimmed.isEmpty()) {

+ throw new IllegalArgumentException("Interval cannot be blank");

+}

+String prefix = "interval";

+String intervalStr = trimmed;

+// Checks the given interval string does not start with the `interval`

prefix

+if (!intervalStr.regionMatches(true, 0, prefix, 0, prefix.length())) {

+ // Prepend `interval` if it does not present because

+ // the regular expression strictly require it.

Review comment:

> String intervalStr = trimmed.toLowerCase();

Your code is more expensive because you lower case whole input string.

> // parse the interval string assuming there is no leading "interval"

Here there is a problem with current regexp when you delete the anchor

`"interval"`. Without this anchor, it cannot match to valid inputs:

```scala

scala> import java.util.regex._

import java.util.regex._

scala> def unitRegex(unit: String) = "(?:\\s+(-?\\d+)\\s+" + unit + "s?)?"

unitRegex: (unit: String)String

scala> val p = Pattern.compile(unitRegex("year") + unitRegex("month") +

| unitRegex("week") + unitRegex("day") + unitRegex("hour") +

unitRegex("minute") +

| unitRegex("second") + unitRegex("millisecond") +

unitRegex("microsecond"),

| Pattern.CASE_INSENSITIVE)

p: java.util.regex.Pattern =

(?:\s+(-?\d+)\s+years?)?(?:\s+(-?\d+)\s+months?)?(?:\s+(-?\d+)\s+weeks?)?(?:\s+(-?\d+)\s+days?)?(?:\s+(-?\d+)\s+hours?)?(?:\s+(-?\d+)\s+minutes?)?(?:\s+(-?\d+)\s+seconds?)?(?:\s+(-?\d+)\s+milliseconds?)?(?:\s+(-?\d+)\s+microseconds?)?

scala> val m = p.matcher("1 month 1 second")

m: java.util.regex.Matcher =

java.util.regex.Matcher[pattern=(?:\s+(-?\d+)\s+years?)?(?:\s+(-?\d+)\s+months?)?(?:\s+(-?\d+)\s+weeks?)?(?:\s+(-?\d+)\s+days?)?(?:\s+(-?\d+)\s+hours?)?(?:\s+(-?\d+)\s+minutes?)?(?:\s+(-?\d+)\s+seconds?)?(?:\s+(-?\d+)\s+milliseconds?)?(?:\s+(-?\d+)\s+microseconds?)?

region=0,16 lastmatch=]

scala> m.matches()

res7: Boolean = false

```

If we added it back:

```scala

scala> val p = Pattern.compile("interval" + unitRegex("year") +

unitRegex("month") +

| unitRegex("week") + unitRegex("day") + unitRegex("hour") +

unitRegex("minute") +

| unitRegex("second") + unitRegex("millisecond") +

unitRegex("microsecond"),

| Pattern.CASE_INSENSITIVE)

p: java.util.regex.Pattern =

interval(?:\s+(-?\d+)\s+years?)?(?:\s+(-?\d+)\s+months?)?(?:\s+(-?\d+)\s+weeks?)?(?:\s+(-?\d+)\s+days?)?(?:\s+(-?\d+)\s+hours?)?(?:\s+(-?\d+)\s+minutes?)?(?:\s+(-?\d+)\s+seconds?)?(?:\s+(-?\d+)\s+milliseconds?)?(?:\s+(-?\d+)\s+microseconds?)?

scala> val m = p.matcher("interval 1

[GitHub] [spark] cloud-fan commented on a change in pull request #26079: [SPARK-29369][SQL] Support string intervals without the `interval` prefix

cloud-fan commented on a change in pull request #26079: [SPARK-29369][SQL]

Support string intervals without the `interval` prefix

URL: https://github.com/apache/spark/pull/26079#discussion_r333562923

##

File path:

common/unsafe/src/main/java/org/apache/spark/unsafe/types/CalendarInterval.java

##

@@ -73,45 +72,53 @@ private static long toLong(String s) {

* This method is case-insensitive.

*/

public static CalendarInterval fromString(String s) {

-if (s == null) {

- return null;

-}

-s = s.trim();

-Matcher m = p.matcher(s);

-if (!m.matches() || s.compareToIgnoreCase("interval") == 0) {

+try {

+ return fromCaseInsensitiveString(s);

+} catch (IllegalArgumentException e) {

return null;

-} else {

- long months = toLong(m.group(1)) * 12 + toLong(m.group(2));

- long microseconds = toLong(m.group(3)) * MICROS_PER_WEEK;

- microseconds += toLong(m.group(4)) * MICROS_PER_DAY;

- microseconds += toLong(m.group(5)) * MICROS_PER_HOUR;

- microseconds += toLong(m.group(6)) * MICROS_PER_MINUTE;

- microseconds += toLong(m.group(7)) * MICROS_PER_SECOND;

- microseconds += toLong(m.group(8)) * MICROS_PER_MILLI;

- microseconds += toLong(m.group(9));

- return new CalendarInterval((int) months, microseconds);

}

}

/**

- * Convert a string to CalendarInterval. Unlike fromString, this method can

handle

+ * Convert a string to CalendarInterval. This method can handle

* strings without the `interval` prefix and throws IllegalArgumentException

* when the input string is not a valid interval.

*

* @throws IllegalArgumentException if the string is not a valid internal.

*/

public static CalendarInterval fromCaseInsensitiveString(String s) {

-if (s == null || s.trim().isEmpty()) {

- throw new IllegalArgumentException("Interval cannot be null or blank.");

+if (s == null) {

+ throw new IllegalArgumentException("Interval cannot be null");

}

-String sInLowerCase = s.trim().toLowerCase(Locale.ROOT);

-String interval =

- sInLowerCase.startsWith("interval ") ? sInLowerCase : "interval " +

sInLowerCase;

-CalendarInterval cal = fromString(interval);

-if (cal == null) {

+String trimmed = s.trim();

+if (trimmed.isEmpty()) {

+ throw new IllegalArgumentException("Interval cannot be blank");

+}

+String prefix = "interval";

+String intervalStr = trimmed;

+// Checks the given interval string does not start with the `interval`

prefix

+if (!intervalStr.regionMatches(true, 0, prefix, 0, prefix.length())) {

+ // Prepend `interval` if it does not present because

+ // the regular expression strictly require it.

Review comment:

How about something like

```

String intervalStr = trimmed.toLowerCase();

if (intervalStr.startsWith("interval")) {

intervalStr = intervalStr.drop(8)

}

// parse the interval string assuming there is no leading "interval"

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #26079: [SPARK-29369][SQL] Support string intervals without the `interval` prefix

cloud-fan commented on a change in pull request #26079: [SPARK-29369][SQL]

Support string intervals without the `interval` prefix

URL: https://github.com/apache/spark/pull/26079#discussion_r333562923

##

File path:

common/unsafe/src/main/java/org/apache/spark/unsafe/types/CalendarInterval.java

##

@@ -73,45 +72,53 @@ private static long toLong(String s) {

* This method is case-insensitive.

*/

public static CalendarInterval fromString(String s) {

-if (s == null) {

- return null;

-}

-s = s.trim();

-Matcher m = p.matcher(s);

-if (!m.matches() || s.compareToIgnoreCase("interval") == 0) {

+try {

+ return fromCaseInsensitiveString(s);

+} catch (IllegalArgumentException e) {

return null;

-} else {

- long months = toLong(m.group(1)) * 12 + toLong(m.group(2));

- long microseconds = toLong(m.group(3)) * MICROS_PER_WEEK;

- microseconds += toLong(m.group(4)) * MICROS_PER_DAY;

- microseconds += toLong(m.group(5)) * MICROS_PER_HOUR;

- microseconds += toLong(m.group(6)) * MICROS_PER_MINUTE;

- microseconds += toLong(m.group(7)) * MICROS_PER_SECOND;

- microseconds += toLong(m.group(8)) * MICROS_PER_MILLI;

- microseconds += toLong(m.group(9));

- return new CalendarInterval((int) months, microseconds);

}

}

/**

- * Convert a string to CalendarInterval. Unlike fromString, this method can

handle

+ * Convert a string to CalendarInterval. This method can handle

* strings without the `interval` prefix and throws IllegalArgumentException

* when the input string is not a valid interval.

*

* @throws IllegalArgumentException if the string is not a valid internal.

*/

public static CalendarInterval fromCaseInsensitiveString(String s) {

-if (s == null || s.trim().isEmpty()) {

- throw new IllegalArgumentException("Interval cannot be null or blank.");

+if (s == null) {

+ throw new IllegalArgumentException("Interval cannot be null");

}

-String sInLowerCase = s.trim().toLowerCase(Locale.ROOT);

-String interval =

- sInLowerCase.startsWith("interval ") ? sInLowerCase : "interval " +

sInLowerCase;

-CalendarInterval cal = fromString(interval);

-if (cal == null) {

+String trimmed = s.trim();

+if (trimmed.isEmpty()) {

+ throw new IllegalArgumentException("Interval cannot be blank");

+}

+String prefix = "interval";

+String intervalStr = trimmed;

+// Checks the given interval string does not start with the `interval`

prefix

+if (!intervalStr.regionMatches(true, 0, prefix, 0, prefix.length())) {

+ // Prepend `interval` if it does not present because

+ // the regular expression strictly require it.

Review comment:

How about something like

```

String intervalStr = trimmed.toLowerCase();

if (intervalStr.startsWith("interval")) {

intervalStr = intervalStr.drop(8)

}

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] jkremser edited a comment on issue #26075: [WIP][K8S] Spark operator

jkremser edited a comment on issue #26075: [WIP][K8S] Spark operator URL: https://github.com/apache/spark/pull/26075#issuecomment-540605666 Thanks for looking into this. Ok, I will open the discussion on the dev list. I wanted to have the PR open before the summit and put the link on the slides. And yes, it's basically dump of that repo (radanalyticsio/spark-operator) with couple of modifications. I for instance aligned the versions of the libraries to be the same as those that are used by Spark. > Is this the following? Please give a clear explanation if this is your own contribution. https://github.com/GoogleCloudPlatform/spark-on-k8s-operator No, it's different operator written in Java (GCP's one is in Go) and it supports three different custom resources, `SparkCluster` (spark running in standalone mode in the pods), `SparkApplication` (this has the same API as the operator from GCP, so it's using spark-on-k8s scheduling) and `SparkHistoryServer`. So there is some overlap, but it's a different thing. This operator is also available on [operatorhub.io](operatorhub.io) ([link](https://operatorhub.io/operator/radanalytics-spark)) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] jkremser commented on issue #26075: [WIP][K8S] Spark operator

jkremser commented on issue #26075: [WIP][K8S] Spark operator URL: https://github.com/apache/spark/pull/26075#issuecomment-540605666 Thanks for looking into this. Ok, I will open the discussion on the dev list. I wanted to have the PR open before the summit and put the link on the slides. And yes, it's basically dump of that repo (radanalyticsio/spark-operator) with couple of modifications. I for instance aligned the versions of the libraries to be the same as those that are used by Spark. > Is this the following? Please give a clear explanation if this is your own contribution. https://github.com/GoogleCloudPlatform/spark-on-k8s-operator No, it's different operator written in Java and it supports three different custom resources, `SparkCluster` (spark running in standalone mode in the pods), `SparkApplication` (this has the same API as the operator from GCP, so it's using spark-on-k8s scheduling) and `SparkHistoryServer`. So there is some overlap, but it's a different thing. This operator is also available on [operatorhub.io](operatorhub.io) ([link](https://operatorhub.io/operator/radanalytics-spark)) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] tgravescs commented on issue #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling

tgravescs commented on issue #26078: [SPARK-29151][CORE] Support fractional resources for task resource scheduling URL: https://github.com/apache/spark/pull/26078#issuecomment-540591922 ok to test This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] wangyum commented on a change in pull request #26014: [SPARK-29349][SQL] Support FETCH_PRIOR in Thriftserver fetch request

wangyum commented on a change in pull request #26014: [SPARK-29349][SQL]

Support FETCH_PRIOR in Thriftserver fetch request

URL: https://github.com/apache/spark/pull/26014#discussion_r333516458

##

File path:

sql/hive-thriftserver/src/test/scala/org/apache/spark/sql/hive/thriftserver/HiveThriftServer2Suites.scala

##

@@ -684,6 +685,92 @@ class HiveThriftBinaryServerSuite extends

HiveThriftJdbcTest {

assert(e.getMessage.contains("org.apache.spark.sql.catalyst.parser.ParseException"))

}

}

+

+ test("ThriftCLIService FetchResults FETCH_FIRST, FETCH_NEXT, FETCH_PRIOR") {

+def checkResult(rows: RowSet, start: Long, end: Long): Unit = {

+ assert(rows.getStartOffset() == start)

+ assert(rows.numRows() == end - start)

+ rows.iterator.asScala.zip((start until end).iterator).foreach { case

(row, v) =>

+assert(row(0).asInstanceOf[Long] === v)

+ }

+}

+

+withCLIServiceClient { client =>

+ val user = System.getProperty("user.name")

+ val sessionHandle = client.openSession(user, "")

+

+ val confOverlay = new java.util.HashMap[java.lang.String,

java.lang.String]

+ val operationHandle = client.executeStatement(

+sessionHandle,

+"SELECT * FROM range(10)",

+confOverlay) // 10 rows result with sequence 0, 1, 2, ..., 9

+ var rows: RowSet = null

+

+ // Fetch 5 rows with FETCH_NEXT

+ rows = client.fetchResults(

+operationHandle, FetchOrientation.FETCH_NEXT, 5,

FetchType.QUERY_OUTPUT)

+ checkResult(rows, 0, 5) // fetched [0, 5)

+

+ // Fetch another 2 rows with FETCH_NEXT

+ rows = client.fetchResults(

+operationHandle, FetchOrientation.FETCH_NEXT, 2,

FetchType.QUERY_OUTPUT)

+ checkResult(rows, 5, 7) // fetched [5, 7)

+

+ // FETCH_PRIOR 3 rows

Review comment:

Thank you @juliuszsompolski

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] wangyum commented on a change in pull request #26014: [SPARK-29349][SQL] Support FETCH_PRIOR in Thriftserver fetch request

wangyum commented on a change in pull request #26014: [SPARK-29349][SQL]

Support FETCH_PRIOR in Thriftserver fetch request

URL: https://github.com/apache/spark/pull/26014#discussion_r333516458

##

File path:

sql/hive-thriftserver/src/test/scala/org/apache/spark/sql/hive/thriftserver/HiveThriftServer2Suites.scala

##

@@ -684,6 +685,92 @@ class HiveThriftBinaryServerSuite extends

HiveThriftJdbcTest {

assert(e.getMessage.contains("org.apache.spark.sql.catalyst.parser.ParseException"))

}

}

+

+ test("ThriftCLIService FetchResults FETCH_FIRST, FETCH_NEXT, FETCH_PRIOR") {

+def checkResult(rows: RowSet, start: Long, end: Long): Unit = {

+ assert(rows.getStartOffset() == start)

+ assert(rows.numRows() == end - start)

+ rows.iterator.asScala.zip((start until end).iterator).foreach { case

(row, v) =>

+assert(row(0).asInstanceOf[Long] === v)

+ }

+}

+

+withCLIServiceClient { client =>

+ val user = System.getProperty("user.name")

+ val sessionHandle = client.openSession(user, "")

+

+ val confOverlay = new java.util.HashMap[java.lang.String,

java.lang.String]

+ val operationHandle = client.executeStatement(

+sessionHandle,

+"SELECT * FROM range(10)",

+confOverlay) // 10 rows result with sequence 0, 1, 2, ..., 9

+ var rows: RowSet = null

+

+ // Fetch 5 rows with FETCH_NEXT

+ rows = client.fetchResults(

+operationHandle, FetchOrientation.FETCH_NEXT, 5,

FetchType.QUERY_OUTPUT)

+ checkResult(rows, 0, 5) // fetched [0, 5)

+

+ // Fetch another 2 rows with FETCH_NEXT

+ rows = client.fetchResults(

+operationHandle, FetchOrientation.FETCH_NEXT, 2,

FetchType.QUERY_OUTPUT)

+ checkResult(rows, 5, 7) // fetched [5, 7)

+

+ // FETCH_PRIOR 3 rows

Review comment:

Thank you @juliuszsompolski?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] MaxGekk commented on a change in pull request #26079: [SPARK-29369][SQL] Support string intervals without the `interval` prefix

MaxGekk commented on a change in pull request #26079: [SPARK-29369][SQL]

Support string intervals without the `interval` prefix

URL: https://github.com/apache/spark/pull/26079#discussion_r333516377

##

File path:

common/unsafe/src/main/java/org/apache/spark/unsafe/types/CalendarInterval.java

##

@@ -73,45 +72,53 @@ private static long toLong(String s) {

* This method is case-insensitive.

*/

public static CalendarInterval fromString(String s) {

-if (s == null) {

- return null;

-}

-s = s.trim();

-Matcher m = p.matcher(s);

-if (!m.matches() || s.compareToIgnoreCase("interval") == 0) {

+try {

+ return fromCaseInsensitiveString(s);

+} catch (IllegalArgumentException e) {

return null;

-} else {

- long months = toLong(m.group(1)) * 12 + toLong(m.group(2));

- long microseconds = toLong(m.group(3)) * MICROS_PER_WEEK;

- microseconds += toLong(m.group(4)) * MICROS_PER_DAY;

- microseconds += toLong(m.group(5)) * MICROS_PER_HOUR;

- microseconds += toLong(m.group(6)) * MICROS_PER_MINUTE;

- microseconds += toLong(m.group(7)) * MICROS_PER_SECOND;

- microseconds += toLong(m.group(8)) * MICROS_PER_MILLI;

- microseconds += toLong(m.group(9));

- return new CalendarInterval((int) months, microseconds);

}

}

/**

- * Convert a string to CalendarInterval. Unlike fromString, this method can

handle

+ * Convert a string to CalendarInterval. This method can handle

* strings without the `interval` prefix and throws IllegalArgumentException

* when the input string is not a valid interval.

*

* @throws IllegalArgumentException if the string is not a valid internal.

*/

public static CalendarInterval fromCaseInsensitiveString(String s) {

-if (s == null || s.trim().isEmpty()) {

- throw new IllegalArgumentException("Interval cannot be null or blank.");

+if (s == null) {

+ throw new IllegalArgumentException("Interval cannot be null");

}

-String sInLowerCase = s.trim().toLowerCase(Locale.ROOT);

-String interval =

- sInLowerCase.startsWith("interval ") ? sInLowerCase : "interval " +

sInLowerCase;

-CalendarInterval cal = fromString(interval);

-if (cal == null) {

+String trimmed = s.trim();

+if (trimmed.isEmpty()) {

+ throw new IllegalArgumentException("Interval cannot be blank");

+}

+String prefix = "interval";

+String intervalStr = trimmed;

+// Checks the given interval string does not start with the `interval`

prefix

+if (!intervalStr.regionMatches(true, 0, prefix, 0, prefix.length())) {

+ // Prepend `interval` if it does not present because

+ // the regular expression strictly require it.

Review comment:

Probably, this needs this feature

https://www.regular-expressions.info/branchreset.html which Java's regexps

doesn't have.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] MaxGekk commented on a change in pull request #26079: [SPARK-29369][SQL] Support string intervals without the `interval` prefix

MaxGekk commented on a change in pull request #26079: [SPARK-29369][SQL]

Support string intervals without the `interval` prefix

URL: https://github.com/apache/spark/pull/26079#discussion_r333514414

##

File path:

common/unsafe/src/main/java/org/apache/spark/unsafe/types/CalendarInterval.java

##

@@ -73,45 +72,53 @@ private static long toLong(String s) {

* This method is case-insensitive.

*/

public static CalendarInterval fromString(String s) {

-if (s == null) {

- return null;

-}

-s = s.trim();

-Matcher m = p.matcher(s);

-if (!m.matches() || s.compareToIgnoreCase("interval") == 0) {

+try {

+ return fromCaseInsensitiveString(s);

+} catch (IllegalArgumentException e) {

return null;

-} else {

- long months = toLong(m.group(1)) * 12 + toLong(m.group(2));

- long microseconds = toLong(m.group(3)) * MICROS_PER_WEEK;

- microseconds += toLong(m.group(4)) * MICROS_PER_DAY;

- microseconds += toLong(m.group(5)) * MICROS_PER_HOUR;

- microseconds += toLong(m.group(6)) * MICROS_PER_MINUTE;