[GitHub] [spark] gaborgsomogyi commented on a change in pull request #29729: [SPARK-32032][SS] Avoid infinite wait in driver because of KafkaConsumer.poll(long) API

gaborgsomogyi commented on a change in pull request #29729:

URL: https://github.com/apache/spark/pull/29729#discussion_r533134611

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

##

@@ -1415,6 +1415,15 @@ object SQLConf {

.booleanConf

.createWithDefault(true)

+ val USE_DEPRECATED_KAFKA_OFFSET_FETCHING =

+buildConf("spark.sql.streaming.kafka.useDeprecatedOffsetFetching")

+ .internal()

+ .doc("When true, the deprecated Consumer based offset fetching used

which could cause " +

Review comment:

Fixed. Updating the description because I've double checked the

generated HTML files too.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 opened a new pull request #30488: [SPARK-33071][SPARK-33536][SQL] Avoid changing dataset_id of LogicalPlan in join() to not break DetectAmbiguousSelfJoin

Ngone51 opened a new pull request #30488:

URL: https://github.com/apache/spark/pull/30488

### What changes were proposed in this pull request?

Currently, `join()` uses `withPlan(logicalPlan)` for convenient to call some

Dataset functions. But it leads to the `dataset_id` inconsistent between the

`logicalPlan` and the original `Dataset`(because `withPlan(logicalPlan)` will

create a new Dataset with the new id and reset the `dataset_id` with the new id

of the `logicalPlan`). As a result, it breaks the rule

`DetectAmbiguousSelfJoin`.

In this PR, we propose to drop the usage of `withPlan` but use the

`logicalPlan` directly so its `dataset_id` doesn't change.

Besides, this PR also removes related metadata (`DATASET_ID_KEY`,

`COL_POS_KEY`) when an `Alias` tries to construct its own metadata. Because the

`Alias` is no longer a reference column after converting to an `Attribute`. To

achieve that, we add a new field, `deniedMetadataKeys`, to indicate the

metadata that needs to be removed.

### Why are the changes needed?

For the query below, it returns the wrong result while it should throws

ambiguous self join exception instead:

```scala

val emp1 = Seq[TestData](

TestData(1, "sales"),

TestData(2, "personnel"),

TestData(3, "develop"),

TestData(4, "IT")).toDS()

val emp2 = Seq[TestData](

TestData(1, "sales"),

TestData(2, "personnel"),

TestData(3, "develop")).toDS()

val emp3 = emp1.join(emp2, emp1("key") === emp2("key")).select(emp1("*"))

emp1.join(emp3, emp1.col("key") === emp3.col("key"), "left_outer")

.select(emp1.col("*"), emp3.col("key").as("e2")).show()

// wrong result

+---+-+---+

|key|value| e2|

+---+-+---+

| 1|sales| 1|

| 2|personnel| 2|

| 3| develop| 3|

| 4| IT| 4|

+---+-+---+

```

This PR fixes the wrong behaviour.

### Does this PR introduce _any_ user-facing change?

Yes, users hit the exception instead of the wrong result after this PR.

### How was this patch tested?

Added a new unit test.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Ngone51 closed pull request #30488: [SPARK-33071][SPARK-33536][SQL] Avoid changing dataset_id of LogicalPlan in join() to not break DetectAmbiguousSelfJoin

Ngone51 closed pull request #30488: URL: https://github.com/apache/spark/pull/30488 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on pull request #30395: [SPARK-32863][SS] Full outer stream-stream join

HeartSaVioR commented on pull request #30395: URL: https://github.com/apache/spark/pull/30395#issuecomment-736282615 All current reviewers are OK with this, so I'm kicking the Github Action build again. Once the test passes I'll merge this. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533123647

##

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##

@@ -1919,18 +1919,24 @@ class SparkContext(config: SparkConf) extends Logging {

// A JAR file which exists only on the driver node

case "file" => addLocalJarFile(new File(uri.getPath))

// A JAR file which exists locally on every worker node

- case "local" => "file:" + uri.getPath

+ case "local" => Array("file:" + uri.getPath)

+ case "ivy" =>

+// Since `new Path(path).toUri` will lose query information,

+// so here we use `URI.create(path)`

+

DependencyUtils.resolveMavenDependencies(URI.create(path)).split(",")

case _ => checkRemoteJarFile(path)

}

}

- if (key != null) {

+ if (keys.nonEmpty) {

val timestamp = if (addedOnSubmit) startTime else

System.currentTimeMillis

-if (addedJars.putIfAbsent(key, timestamp).isEmpty) {

- logInfo(s"Added JAR $path at $key with timestamp $timestamp")

- postEnvironmentUpdate()

-} else {

- logWarning(s"The jar $path has been added already. Overwriting of

added jars " +

-"is not supported in the current version.")

+keys.foreach { key =>

+ if (addedJars.putIfAbsent(key, timestamp).isEmpty) {

+logInfo(s"Added JAR $path at $key with timestamp $timestamp")

+postEnvironmentUpdate()

+ } else {

+logWarning(s"The jar $path has been added already. Overwriting of

added jars " +

Review comment:

> If `transitive=true` and there are too many dependencies, users

possibly see a lot of warning messages?

Also `logInfo(s"Added JAR $path at $key with timestamp $timestamp")`

How about like

```

val (added, existed) = keys.partition(addedJars.putIfAbsent(_,

timestamp).isEmpty)

if (added.nonEmpty) {

logInfo(s"Added JAR $path at ${added.mkString(",")} with timestamp

$timestamp")

postEnvironmentUpdate()

}

if(existed.nonEmpty) {

logWarning(s"The dependencies jars ${existed.mkString(",")} of

$path has been added" +

s" already. Overwriting of added jars is not supported in the

current version.")

}

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] aokolnychyi commented on a change in pull request #30558: [SPARK-33612][SQL] Add v2SourceRewriteRules batch to Optimizer

aokolnychyi commented on a change in pull request #30558:

URL: https://github.com/apache/spark/pull/30558#discussion_r533123744

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

##

@@ -189,6 +189,9 @@ abstract class Optimizer(catalogManager: CatalogManager)

// plan may contain nodes that do not report stats. Anything that uses

stats must run after

// this batch.

Batch("Early Filter and Projection Push-Down", Once,

earlyScanPushDownRules: _*) :+

+// This batch rewrites plans for v2 tables. It should be run after the

operator

+// optimization batch and before any batches that depend on stats.

+Batch("V2 Source Rewrite Rules", Once, v2SourceRewriteRules: _*) :+

Review comment:

Right now, scan construction and pushdown happens in the same method. We

could split that later so I agree with moving this batch before early filter

and projection pushdown.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533123647

##

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##

@@ -1919,18 +1919,24 @@ class SparkContext(config: SparkConf) extends Logging {

// A JAR file which exists only on the driver node

case "file" => addLocalJarFile(new File(uri.getPath))

// A JAR file which exists locally on every worker node

- case "local" => "file:" + uri.getPath

+ case "local" => Array("file:" + uri.getPath)

+ case "ivy" =>

+// Since `new Path(path).toUri` will lose query information,

+// so here we use `URI.create(path)`

+

DependencyUtils.resolveMavenDependencies(URI.create(path)).split(",")

case _ => checkRemoteJarFile(path)

}

}

- if (key != null) {

+ if (keys.nonEmpty) {

val timestamp = if (addedOnSubmit) startTime else

System.currentTimeMillis

-if (addedJars.putIfAbsent(key, timestamp).isEmpty) {

- logInfo(s"Added JAR $path at $key with timestamp $timestamp")

- postEnvironmentUpdate()

-} else {

- logWarning(s"The jar $path has been added already. Overwriting of

added jars " +

-"is not supported in the current version.")

+keys.foreach { key =>

+ if (addedJars.putIfAbsent(key, timestamp).isEmpty) {

+logInfo(s"Added JAR $path at $key with timestamp $timestamp")

+postEnvironmentUpdate()

+ } else {

+logWarning(s"The jar $path has been added already. Overwriting of

added jars " +

Review comment:

> If `transitive=true` and there are too many dependencies, users

possibly see a lot of warning messages?

Also `logInfo(s"Added JAR $path at $key with timestamp $timestamp")`

How about like

```

val (added, existed) = keys.partition(addedJars.putIfAbsent(_,

timestamp).isEmpty)

if (added.nonEmpty) {

logInfo(s"Added JAR $path at ${added.mkString(",")} with timestamp

$timestamp")

postEnvironmentUpdate()

}

if(existed.nonEmpty) {

logWarning(s"The dependencies jars ${existed.mkString(",")} of

$path has been added" +

s" already. Overwriting of added jars is not supported in the

current version.")

}

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533123647

##

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##

@@ -1919,18 +1919,24 @@ class SparkContext(config: SparkConf) extends Logging {

// A JAR file which exists only on the driver node

case "file" => addLocalJarFile(new File(uri.getPath))

// A JAR file which exists locally on every worker node

- case "local" => "file:" + uri.getPath

+ case "local" => Array("file:" + uri.getPath)

+ case "ivy" =>

+// Since `new Path(path).toUri` will lose query information,

+// so here we use `URI.create(path)`

+

DependencyUtils.resolveMavenDependencies(URI.create(path)).split(",")

case _ => checkRemoteJarFile(path)

}

}

- if (key != null) {

+ if (keys.nonEmpty) {

val timestamp = if (addedOnSubmit) startTime else

System.currentTimeMillis

-if (addedJars.putIfAbsent(key, timestamp).isEmpty) {

- logInfo(s"Added JAR $path at $key with timestamp $timestamp")

- postEnvironmentUpdate()

-} else {

- logWarning(s"The jar $path has been added already. Overwriting of

added jars " +

-"is not supported in the current version.")

+keys.foreach { key =>

+ if (addedJars.putIfAbsent(key, timestamp).isEmpty) {

+logInfo(s"Added JAR $path at $key with timestamp $timestamp")

+postEnvironmentUpdate()

+ } else {

+logWarning(s"The jar $path has been added already. Overwriting of

added jars " +

Review comment:

> If `transitive=true` and there are too many dependencies, users

possibly see a lot of warning messages?

Also `logInfo(s"Added JAR $path at $key with timestamp $timestamp")`

How about like

```

val (added, existed) = keys.partition(addedJars.putIfAbsent(_,

timestamp).isEmpty)

if (added.nonEmpty) {

logInfo(s"Added JAR $path at ${added.mkString(",")} with timestamp

$timestamp")

postEnvironmentUpdate()

}

if(existed.nonEmpty) {

logWarning(s"The dependencies jars ${existed.mkString(",")} of

$path has been added" +

s" already. Overwriting of added jars is not supported in the

current version.")

}

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #30504: [SPARK-33544][SQL] Optimizer should not insert filter when explode with CreateArray/CreateMap

HyukjinKwon commented on a change in pull request #30504:

URL: https://github.com/apache/spark/pull/30504#discussion_r533123592

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/expressions.scala

##

@@ -48,6 +48,9 @@ object ConstantFolding extends Rule[LogicalPlan] {

// object and running eval unnecessarily.

case l: Literal => l

+ case Size(c: CreateArray, _) => Literal(c.children.length)

+ case Size(c: CreateMap, _) => Literal(c.children.length / 2)

Review comment:

Hm .. one case I can imagine is the case when the children of

`CreateArray` or `CreateMap` throw an exception e.g.) from a UDF or when ANSI

mode is enabled. After this change, looks it's going to suppress such

exceptions. For example:

```scala

spark.range(1).selectExpr("size(array(assert_true(false)))").show()

```

As an alternative, we could maybe only allow this when the children of

`CreateArray` or `CreateMap` are safe and don't throw an exception? e.g.) only

when they are `AttributeReference`s?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] otterc commented on a change in pull request #30312: [SPARK-32917][SHUFFLE][CORE] Adds support for executors to push shuffle blocks after successful map task completion

otterc commented on a change in pull request #30312:

URL: https://github.com/apache/spark/pull/30312#discussion_r533121614

##

File path:

core/src/test/scala/org/apache/spark/shuffle/ShuffleBlockPusherSuite.scala

##

@@ -0,0 +1,248 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.shuffle

+

+import java.io.File

+import java.net.ConnectException

+import java.nio.ByteBuffer

+

+import scala.collection.mutable.ArrayBuffer

+

+import org.mockito.{Mock, MockitoAnnotations}

+import org.mockito.Answers.RETURNS_SMART_NULLS

+import org.mockito.ArgumentMatchers.any

+import org.mockito.Mockito._

+import org.mockito.invocation.InvocationOnMock

+import org.scalatest.BeforeAndAfterEach

+

+import org.apache.spark._

+import org.apache.spark.network.buffer.ManagedBuffer

+import org.apache.spark.network.shuffle.{BlockFetchingListener,

BlockStoreClient}

+import org.apache.spark.network.shuffle.ErrorHandler.BlockPushErrorHandler

+import org.apache.spark.network.util.TransportConf

+import org.apache.spark.serializer.JavaSerializer

+import org.apache.spark.storage._

+

+class ShuffleBlockPusherSuite extends SparkFunSuite with BeforeAndAfterEach {

+

+ @Mock(answer = RETURNS_SMART_NULLS) private var blockManager: BlockManager =

_

+ @Mock(answer = RETURNS_SMART_NULLS) private var dependency:

ShuffleDependency[Int, Int, Int] = _

+ @Mock(answer = RETURNS_SMART_NULLS) private var shuffleClient:

BlockStoreClient = _

+

+ private var conf: SparkConf = _

+ private var pushedBlocks = new ArrayBuffer[String]

+

+ override def beforeEach(): Unit = {

+super.beforeEach()

+conf = new SparkConf(loadDefaults = false)

+MockitoAnnotations.initMocks(this)

+when(dependency.partitioner).thenReturn(new HashPartitioner(8))

+when(dependency.serializer).thenReturn(new JavaSerializer(conf))

+

when(dependency.getMergerLocs).thenReturn(Seq(BlockManagerId("test-client",

"test-client", 1)))

+conf.set("spark.shuffle.push.based.enabled", "true")

+conf.set("spark.shuffle.service.enabled", "true")

+// Set the env because the shuffler writer gets the shuffle client

instance from the env.

+val mockEnv = mock(classOf[SparkEnv])

+when(mockEnv.conf).thenReturn(conf)

+when(mockEnv.blockManager).thenReturn(blockManager)

+SparkEnv.set(mockEnv)

+when(blockManager.blockStoreClient).thenReturn(shuffleClient)

+ }

+

+ override def afterEach(): Unit = {

+pushedBlocks.clear()

+super.afterEach()

+ }

+

+ private def interceptPushedBlocksForSuccess(): Unit = {

+when(shuffleClient.pushBlocks(any(), any(), any(), any(), any()))

+ .thenAnswer((invocation: InvocationOnMock) => {

+val blocks = invocation.getArguments()(2).asInstanceOf[Array[String]]

+pushedBlocks ++= blocks

+val managedBuffers =

invocation.getArguments()(3).asInstanceOf[Array[ManagedBuffer]]

+val blockFetchListener =

invocation.getArguments()(4).asInstanceOf[BlockFetchingListener]

+(blocks, managedBuffers).zipped.foreach((blockId, buffer) => {

+ blockFetchListener.onBlockFetchSuccess(blockId, buffer)

+})

+ })

+ }

+

+ test("Basic block push") {

+interceptPushedBlocksForSuccess()

+new TestShuffleBlockPusher(mock(classOf[File]),

+ Array.fill(dependency.partitioner.numPartitions) { 2 }, dependency, 0,

conf)

+.initiateBlockPush()

+verify(shuffleClient, times(1))

+ .pushBlocks(any(), any(), any(), any(), any())

+assert(pushedBlocks.length == dependency.partitioner.numPartitions)

+ShuffleBlockPusher.stop()

+ }

+

+ test("Large blocks are skipped for push") {

+conf.set("spark.shuffle.push.maxBlockSizeToPush", "1k")

+interceptPushedBlocksForSuccess()

+new TestShuffleBlockPusher(mock(classOf[File]), Array(2, 2, 2, 2, 2, 2, 2,

1100),

+ dependency, 0, conf).initiateBlockPush()

+verify(shuffleClient, times(1))

+ .pushBlocks(any(), any(), any(), any(), any())

+assert(pushedBlocks.length == dependency.partitioner.numPartitions - 1)

+ShuffleBlockPusher.stop()

+ }

+

+ test("Number of blocks in flight per address are limited by

maxBlocksInFlightPerAddress") {

+

[GitHub] [spark] otterc commented on a change in pull request #30312: [SPARK-32917][SHUFFLE][CORE] Adds support for executors to push shuffle blocks after successful map task completion

otterc commented on a change in pull request #30312:

URL: https://github.com/apache/spark/pull/30312#discussion_r533120860

##

File path:

core/src/test/scala/org/apache/spark/shuffle/ShuffleBlockPusherSuite.scala

##

@@ -0,0 +1,248 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.shuffle

+

+import java.io.File

+import java.net.ConnectException

+import java.nio.ByteBuffer

+

+import scala.collection.mutable.ArrayBuffer

+

+import org.mockito.{Mock, MockitoAnnotations}

+import org.mockito.Answers.RETURNS_SMART_NULLS

+import org.mockito.ArgumentMatchers.any

+import org.mockito.Mockito._

+import org.mockito.invocation.InvocationOnMock

+import org.scalatest.BeforeAndAfterEach

+

+import org.apache.spark._

+import org.apache.spark.network.buffer.ManagedBuffer

+import org.apache.spark.network.shuffle.{BlockFetchingListener,

BlockStoreClient}

+import org.apache.spark.network.shuffle.ErrorHandler.BlockPushErrorHandler

+import org.apache.spark.network.util.TransportConf

+import org.apache.spark.serializer.JavaSerializer

+import org.apache.spark.storage._

+

+class ShuffleBlockPusherSuite extends SparkFunSuite with BeforeAndAfterEach {

+

+ @Mock(answer = RETURNS_SMART_NULLS) private var blockManager: BlockManager =

_

+ @Mock(answer = RETURNS_SMART_NULLS) private var dependency:

ShuffleDependency[Int, Int, Int] = _

+ @Mock(answer = RETURNS_SMART_NULLS) private var shuffleClient:

BlockStoreClient = _

+

+ private var conf: SparkConf = _

+ private var pushedBlocks = new ArrayBuffer[String]

+

+ override def beforeEach(): Unit = {

+super.beforeEach()

+conf = new SparkConf(loadDefaults = false)

+MockitoAnnotations.initMocks(this)

+when(dependency.partitioner).thenReturn(new HashPartitioner(8))

+when(dependency.serializer).thenReturn(new JavaSerializer(conf))

+

when(dependency.getMergerLocs).thenReturn(Seq(BlockManagerId("test-client",

"test-client", 1)))

+conf.set("spark.shuffle.push.based.enabled", "true")

+conf.set("spark.shuffle.service.enabled", "true")

+// Set the env because the shuffler writer gets the shuffle client

instance from the env.

+val mockEnv = mock(classOf[SparkEnv])

+when(mockEnv.conf).thenReturn(conf)

+when(mockEnv.blockManager).thenReturn(blockManager)

+SparkEnv.set(mockEnv)

+when(blockManager.blockStoreClient).thenReturn(shuffleClient)

+ }

+

+ override def afterEach(): Unit = {

+pushedBlocks.clear()

+super.afterEach()

+ }

+

+ private def interceptPushedBlocksForSuccess(): Unit = {

+when(shuffleClient.pushBlocks(any(), any(), any(), any(), any()))

+ .thenAnswer((invocation: InvocationOnMock) => {

+val blocks = invocation.getArguments()(2).asInstanceOf[Array[String]]

+pushedBlocks ++= blocks

+val managedBuffers =

invocation.getArguments()(3).asInstanceOf[Array[ManagedBuffer]]

+val blockFetchListener =

invocation.getArguments()(4).asInstanceOf[BlockFetchingListener]

+(blocks, managedBuffers).zipped.foreach((blockId, buffer) => {

+ blockFetchListener.onBlockFetchSuccess(blockId, buffer)

+})

+ })

+ }

+

+ test("Basic block push") {

+interceptPushedBlocksForSuccess()

+new TestShuffleBlockPusher(mock(classOf[File]),

+ Array.fill(dependency.partitioner.numPartitions) { 2 }, dependency, 0,

conf)

+.initiateBlockPush()

+verify(shuffleClient, times(1))

+ .pushBlocks(any(), any(), any(), any(), any())

Review comment:

done

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] otterc commented on a change in pull request #30312: [SPARK-32917][SHUFFLE][CORE] Adds support for executors to push shuffle blocks after successful map task completion

otterc commented on a change in pull request #30312:

URL: https://github.com/apache/spark/pull/30312#discussion_r533120443

##

File path: core/src/main/scala/org/apache/spark/shuffle/ShuffleBlockPusher.scala

##

@@ -0,0 +1,462 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.shuffle

+

+import java.io.File

+import java.net.ConnectException

+import java.nio.ByteBuffer

+import java.util.concurrent.ExecutorService

+

+import scala.collection.mutable.{ArrayBuffer, HashMap, HashSet, Queue}

+

+import com.google.common.base.Throwables

+

+import org.apache.spark.{ShuffleDependency, SparkConf, SparkEnv}

+import org.apache.spark.annotation.Since

+import org.apache.spark.internal.Logging

+import org.apache.spark.internal.config._

+import org.apache.spark.launcher.SparkLauncher

+import org.apache.spark.network.buffer.{FileSegmentManagedBuffer,

ManagedBuffer, NioManagedBuffer}

+import org.apache.spark.network.netty.SparkTransportConf

+import org.apache.spark.network.shuffle.BlockFetchingListener

+import org.apache.spark.network.shuffle.ErrorHandler.BlockPushErrorHandler

+import org.apache.spark.network.util.TransportConf

+import org.apache.spark.shuffle.ShuffleBlockPusher._

+import org.apache.spark.storage.{BlockId, BlockManagerId, ShufflePushBlockId}

+import org.apache.spark.util.{ThreadUtils, Utils}

+

+/**

+ * Used for pushing shuffle blocks to remote shuffle services when push

shuffle is enabled.

+ * When push shuffle is enabled, it is created after the shuffle writer

finishes writing the shuffle

+ * file and initiates the block push process.

+ *

+ * @param dataFile mapper generated shuffle data file

+ * @param partitionLengths array of shuffle block size so we can tell shuffle

block

+ * boundaries within the shuffle file

+ * @param dep shuffle dependency to get shuffle ID and the

location of remote shuffle

+ * services to push local shuffle blocks

+ * @param partitionId map index of the shuffle map task

+ * @param conf spark configuration

+ */

+@Since("3.1.0")

+private[spark] class ShuffleBlockPusher(

+dataFile: File,

+partitionLengths: Array[Long],

+dep: ShuffleDependency[_, _, _],

+partitionId: Int,

+conf: SparkConf) extends Logging {

Review comment:

I have made the change. Please take a look.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533117100

##

File path: core/src/main/scala/org/apache/spark/util/DependencyUtils.scala

##

@@ -15,22 +15,122 @@

* limitations under the License.

*/

-package org.apache.spark.deploy

+package org.apache.spark.util

import java.io.File

-import java.net.URI

+import java.net.{URI, URISyntaxException}

import org.apache.commons.lang3.StringUtils

import org.apache.hadoop.conf.Configuration

import org.apache.hadoop.fs.{FileSystem, Path}

import org.apache.spark.{SecurityManager, SparkConf, SparkException}

+import org.apache.spark.deploy.SparkSubmitUtils

import org.apache.spark.internal.Logging

-import org.apache.spark.util.{MutableURLClassLoader, Utils}

-private[deploy] object DependencyUtils extends Logging {

+private[spark] object DependencyUtils extends Logging {

+

+ def getIvyProperties(): Seq[String] = {

+Seq(

+ "spark.jars.excludes",

+ "spark.jars.packages",

+ "spark.jars.repositories",

+ "spark.jars.ivy",

+ "spark.jars.ivySettings"

+).map(sys.props.get(_).orNull)

+ }

+

+ /**

+ * Parse excluded list in ivy URL. When download ivy URL jar, Spark won't

download transitive jar

+ * in excluded list.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of exclude.

+ * Example: Input:

exclude=org.mortbay.jetty:jetty,org.eclipse.jetty:jetty-http

+ * Output: [org.mortbay.jetty:jetty, org.eclipse.jetty:jetty-http]

+ */

+ private def parseExcludeList(excludes: Array[String]): String = {

+excludes.flatMap { excludeString =>

+ val excludes: Array[String] = excludeString.split(",")

+ if (excludes.exists(_.split(":").length != 2)) {

+throw new URISyntaxException(excludeString,

+ "Invalid exclude string: expected 'org:module,org:module,..', found

" + excludeString)

+ }

+ excludes

+}.mkString(":")

+ }

+

+ /**

+ * Parse transitive parameter in ivy URL, default value is false.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of transitive.

+ * Example: Input: exclude=org.mortbay.jetty:jetty=true

+ * Output: true

+ */

+ private def parseTransitive(transitives: Array[String]): Boolean = {

+if (transitives.isEmpty) {

+ false

+} else {

+ if (transitives.length > 1) {

+logWarning("It's best to specify `transitive` parameter in ivy URL

query only once." +

+ " If there are multiple `transitive` parameter, we will select the

last one")

+ }

+ transitives.last.toBoolean

Review comment:

> What if `transitive=invalidStr` in hive? Could you add tests?

I change the logical, make it like hive. avoid convert error in `toBoolean`

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533116569

##

File path: core/src/main/scala/org/apache/spark/util/DependencyUtils.scala

##

@@ -15,22 +15,122 @@

* limitations under the License.

*/

-package org.apache.spark.deploy

+package org.apache.spark.util

import java.io.File

-import java.net.URI

+import java.net.{URI, URISyntaxException}

import org.apache.commons.lang3.StringUtils

import org.apache.hadoop.conf.Configuration

import org.apache.hadoop.fs.{FileSystem, Path}

import org.apache.spark.{SecurityManager, SparkConf, SparkException}

+import org.apache.spark.deploy.SparkSubmitUtils

import org.apache.spark.internal.Logging

-import org.apache.spark.util.{MutableURLClassLoader, Utils}

-private[deploy] object DependencyUtils extends Logging {

+private[spark] object DependencyUtils extends Logging {

+

+ def getIvyProperties(): Seq[String] = {

+Seq(

+ "spark.jars.excludes",

+ "spark.jars.packages",

+ "spark.jars.repositories",

+ "spark.jars.ivy",

+ "spark.jars.ivySettings"

+).map(sys.props.get(_).orNull)

+ }

+

+ /**

+ * Parse excluded list in ivy URL. When download ivy URL jar, Spark won't

download transitive jar

+ * in excluded list.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of exclude.

+ * Example: Input:

exclude=org.mortbay.jetty:jetty,org.eclipse.jetty:jetty-http

+ * Output: [org.mortbay.jetty:jetty, org.eclipse.jetty:jetty-http]

+ */

+ private def parseExcludeList(excludes: Array[String]): String = {

+excludes.flatMap { excludeString =>

+ val excludes: Array[String] = excludeString.split(",")

+ if (excludes.exists(_.split(":").length != 2)) {

+throw new URISyntaxException(excludeString,

+ "Invalid exclude string: expected 'org:module,org:module,..', found

" + excludeString)

+ }

+ excludes

+}.mkString(":")

+ }

+

+ /**

+ * Parse transitive parameter in ivy URL, default value is false.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of transitive.

+ * Example: Input: exclude=org.mortbay.jetty:jetty=true

+ * Output: true

+ */

+ private def parseTransitive(transitives: Array[String]): Boolean = {

+if (transitives.isEmpty) {

+ false

+} else {

+ if (transitives.length > 1) {

+logWarning("It's best to specify `transitive` parameter in ivy URL

query only once." +

+ " If there are multiple `transitive` parameter, we will select the

last one")

+ }

+ transitives.last.toBoolean

Review comment:

Added in `test("SPARK-33084: Add jar support ivy url")`

```

// Invalid transitive value, will use default value `false`

sc.addJar("ivy://org.scala-js:scalajs-test-interface_2.12:1.2.0?transitive=foo")

assert(!sc.listJars().exists(_.contains("scalajs-library_2.12")))

assert(sc.listJars().exists(_.contains("scalajs-test-interface_2.12")))

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533115969

##

File path: core/src/test/scala/org/apache/spark/SparkContextSuite.scala

##

@@ -366,6 +366,12 @@ class SparkContextSuite extends SparkFunSuite with

LocalSparkContext with Eventu

}

}

+ test("add jar local path with comma") {

+sc = new SparkContext(new

SparkConf().setAppName("test").setMaster("local"))

+sc.addJar("file://Test,UDTF.jar")

+assert(!sc.listJars().exists(_.contains("UDTF.jar")))

Review comment:

> What does this test mean? Why didn't you write it like `

assert(sc.listJars().exists(_.contains("Test,UDTF.jar")))`?

Mess in brain...

##

File path: core/src/test/scala/org/apache/spark/SparkContextSuite.scala

##

@@ -366,6 +366,12 @@ class SparkContextSuite extends SparkFunSuite with

LocalSparkContext with Eventu

}

}

+ test("add jar local path with comma") {

+sc = new SparkContext(new

SparkConf().setAppName("test").setMaster("local"))

Review comment:

> Cold you add tests for other other schemas?

Updated

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #30544: [SPARK-32405][SQL][FOLLOWUP] Throw Exception if provider is specified in JDBCTableCatalog create table

cloud-fan commented on a change in pull request #30544:

URL: https://github.com/apache/spark/pull/30544#discussion_r533110907

##

File path:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/jdbc/JDBCTableCatalog.scala

##

@@ -128,6 +128,8 @@ class JDBCTableCatalog extends TableCatalog with Logging {

case "comment" => tableComment = v

// ToDo: have a follow up to fail provider once unify create table

syntax PR is merged

Review comment:

nit: remove TODO

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] waitinfuture commented on pull request #30516: [SPARK-33572][SQL] Datetime building should fail if the year, month, ..., second combination is invalid

waitinfuture commented on pull request #30516: URL: https://github.com/apache/spark/pull/30516#issuecomment-736253437 @cloud-fan test passed, ready for merge This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533099833

##

File path: core/src/main/scala/org/apache/spark/util/DependencyUtils.scala

##

@@ -15,22 +15,122 @@

* limitations under the License.

*/

-package org.apache.spark.deploy

+package org.apache.spark.util

import java.io.File

-import java.net.URI

+import java.net.{URI, URISyntaxException}

import org.apache.commons.lang3.StringUtils

import org.apache.hadoop.conf.Configuration

import org.apache.hadoop.fs.{FileSystem, Path}

import org.apache.spark.{SecurityManager, SparkConf, SparkException}

+import org.apache.spark.deploy.SparkSubmitUtils

import org.apache.spark.internal.Logging

-import org.apache.spark.util.{MutableURLClassLoader, Utils}

-private[deploy] object DependencyUtils extends Logging {

+private[spark] object DependencyUtils extends Logging {

+

+ def getIvyProperties(): Seq[String] = {

+Seq(

+ "spark.jars.excludes",

+ "spark.jars.packages",

+ "spark.jars.repositories",

+ "spark.jars.ivy",

+ "spark.jars.ivySettings"

+).map(sys.props.get(_).orNull)

+ }

+

+ /**

+ * Parse excluded list in ivy URL. When download ivy URL jar, Spark won't

download transitive jar

+ * in excluded list.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of exclude.

+ * Example: Input:

exclude=org.mortbay.jetty:jetty,org.eclipse.jetty:jetty-http

+ * Output: [org.mortbay.jetty:jetty, org.eclipse.jetty:jetty-http]

+ */

+ private def parseExcludeList(excludes: Array[String]): String = {

+excludes.flatMap { excludeString =>

+ val excludes: Array[String] = excludeString.split(",")

+ if (excludes.exists(_.split(":").length != 2)) {

+throw new URISyntaxException(excludeString,

+ "Invalid exclude string: expected 'org:module,org:module,..', found

" + excludeString)

+ }

+ excludes

+}.mkString(":")

+ }

+

+ /**

+ * Parse transitive parameter in ivy URL, default value is false.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of transitive.

+ * Example: Input: exclude=org.mortbay.jetty:jetty=true

+ * Output: true

+ */

+ private def parseTransitive(transitives: Array[String]): Boolean = {

+if (transitives.isEmpty) {

+ false

+} else {

+ if (transitives.length > 1) {

+logWarning("It's best to specify `transitive` parameter in ivy URL

query only once." +

+ " If there are multiple `transitive` parameter, we will select the

last one")

+ }

+ transitives.last.toBoolean

+}

+ }

+

+ /**

+ * Download Ivy URIs dependency jars.

+ *

+ * @param uri Ivy uri need to be downloaded. The URI format should be:

+ * `ivy://group:module:version[?query]`

+ *Ivy URI query part format should be:

+ * `parameter=value=value...`

+ *Note that currently ivy URI query part support two parameters:

+ * 1. transitive: whether to download dependent jars related to

your ivy URL.

+ *transitive=false or `transitive=true`, if not set, the

default value is false.

+ * 2. exclude: exclusion list when download ivy URL jar and

dependency jars.

+ *The `exclude` parameter content is a ',' separated

`group:module` pair string :

+ *`exclude=group:module,group:module...`

+ * @return Comma separated string list of URIs of downloaded jars

+ */

+ def resolveMavenDependencies(uri: URI): String = {

+val Seq(_, _, repositories, ivyRepoPath, ivySettingsPath) =

+ DependencyUtils.getIvyProperties()

+val authority = uri.getAuthority

+if (authority == null) {

+ throw new URISyntaxException(

+authority, "Invalid url: Expected 'org:module:version', found null")

+}

+if (authority.split(":").length != 3) {

+ throw new URISyntaxException(

+authority, "Invalid url: Expected 'org:module:version', found " +

authority)

+}

+

+val uriQuery = uri.getQuery

+val queryParams: Array[(String, String)] = if (uriQuery == null) {

+ Array.empty[(String, String)]

+} else {

+ val mapTokens = uriQuery.split("&").map(_.split("="))

+ if (mapTokens.exists(_.length != 2)) {

+throw new URISyntaxException(uriQuery, s"Invalid query string:

$uriQuery")

+ }

+ mapTokens.map(kv => (kv(0), kv(1)))

+}

+

+resolveMavenDependencies(

+ parseTransitive(queryParams.filter(_._1.equals("transitive")).map(_._2)),

+ parseExcludeList(queryParams.filter(_._1.equals("exclude")).map(_._2)),

Review comment:

Yea.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533099768

##

File path: core/src/main/scala/org/apache/spark/util/DependencyUtils.scala

##

@@ -15,22 +15,122 @@

* limitations under the License.

*/

-package org.apache.spark.deploy

+package org.apache.spark.util

import java.io.File

-import java.net.URI

+import java.net.{URI, URISyntaxException}

import org.apache.commons.lang3.StringUtils

import org.apache.hadoop.conf.Configuration

import org.apache.hadoop.fs.{FileSystem, Path}

import org.apache.spark.{SecurityManager, SparkConf, SparkException}

+import org.apache.spark.deploy.SparkSubmitUtils

import org.apache.spark.internal.Logging

-import org.apache.spark.util.{MutableURLClassLoader, Utils}

-private[deploy] object DependencyUtils extends Logging {

+private[spark] object DependencyUtils extends Logging {

+

+ def getIvyProperties(): Seq[String] = {

+Seq(

+ "spark.jars.excludes",

+ "spark.jars.packages",

+ "spark.jars.repositories",

+ "spark.jars.ivy",

+ "spark.jars.ivySettings"

+).map(sys.props.get(_).orNull)

+ }

+

+ /**

+ * Parse excluded list in ivy URL. When download ivy URL jar, Spark won't

download transitive jar

+ * in excluded list.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of exclude.

+ * Example: Input:

exclude=org.mortbay.jetty:jetty,org.eclipse.jetty:jetty-http

+ * Output: [org.mortbay.jetty:jetty, org.eclipse.jetty:jetty-http]

+ */

+ private def parseExcludeList(excludes: Array[String]): String = {

Review comment:

Yea, thank s for your advise

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] maropu commented on pull request #30532: [MINOR] Spelling sql not core

maropu commented on pull request #30532: URL: https://github.com/apache/spark/pull/30532#issuecomment-736249327 Jenkins is down now, so could you re-invoke the GA tests? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

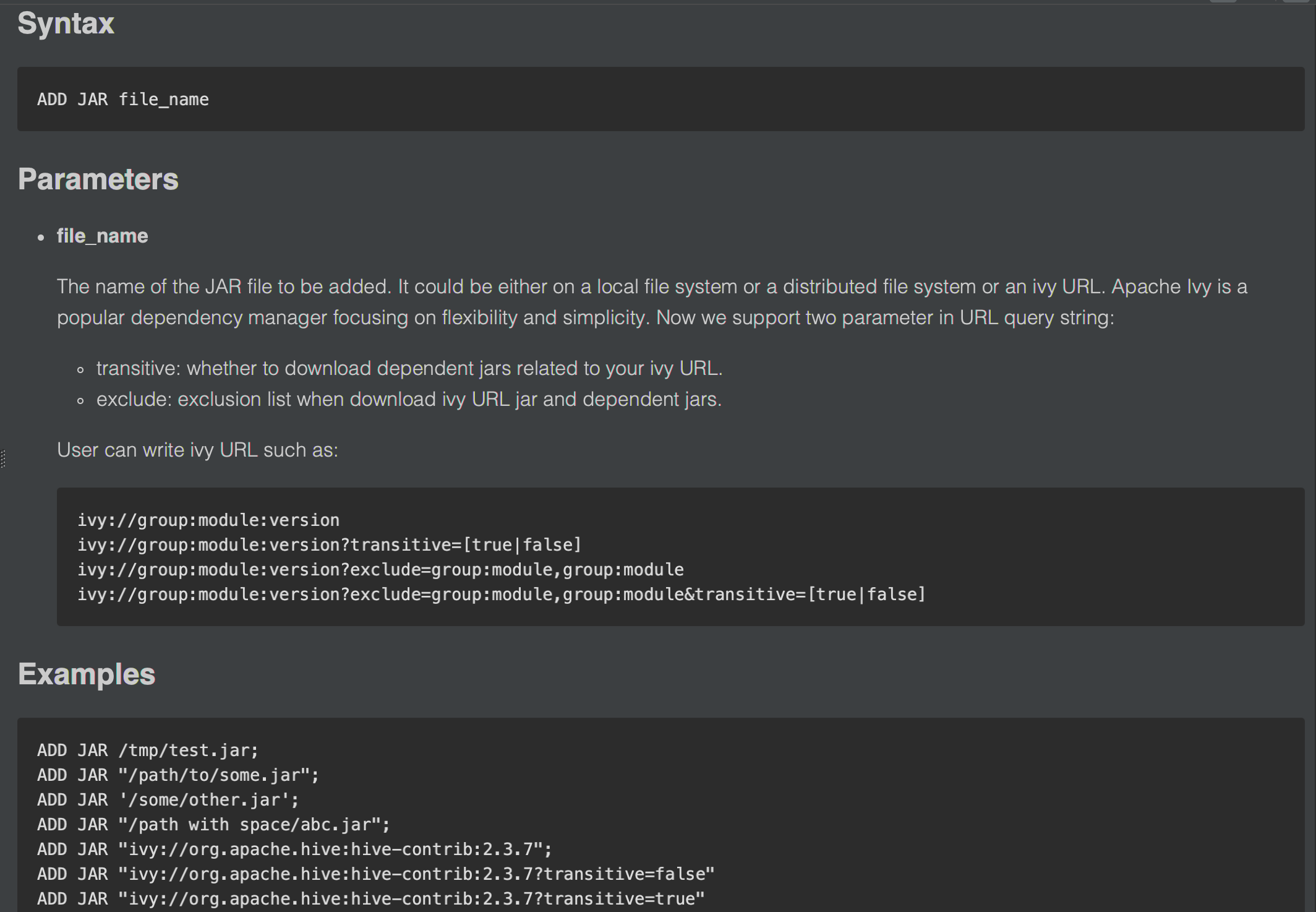

AngersZh commented on a change in pull request #29966: URL: https://github.com/apache/spark/pull/29966#discussion_r533095376 ## File path: docs/sql-ref-syntax-aux-resource-mgmt-add-jar.md ## @@ -33,15 +33,30 @@ ADD JAR file_name * **file_name** -The name of the JAR file to be added. It could be either on a local file system or a distributed file system. +The name of the JAR file to be added. It could be either on a local file system or a distributed file system or an ivy URL. +Apache Ivy is a popular dependency manager focusing on flexibility and simplicity. Now we support two parameter in URL query string: + * transitive: whether to download dependent jars related to your ivy URL. + * exclude: exclusion list when download ivy URL jar and dependent jars. + +User can write ivy URL such as: + + ivy://group:module:version + ivy://group:module:version?transitive=true Review comment: Done This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533095212

##

File path: core/src/main/scala/org/apache/spark/util/DependencyUtils.scala

##

@@ -15,22 +15,122 @@

* limitations under the License.

*/

-package org.apache.spark.deploy

+package org.apache.spark.util

import java.io.File

-import java.net.URI

+import java.net.{URI, URISyntaxException}

import org.apache.commons.lang3.StringUtils

import org.apache.hadoop.conf.Configuration

import org.apache.hadoop.fs.{FileSystem, Path}

import org.apache.spark.{SecurityManager, SparkConf, SparkException}

+import org.apache.spark.deploy.SparkSubmitUtils

import org.apache.spark.internal.Logging

-import org.apache.spark.util.{MutableURLClassLoader, Utils}

-private[deploy] object DependencyUtils extends Logging {

+private[spark] object DependencyUtils extends Logging {

+

+ def getIvyProperties(): Seq[String] = {

+Seq(

+ "spark.jars.excludes",

+ "spark.jars.packages",

+ "spark.jars.repositories",

+ "spark.jars.ivy",

+ "spark.jars.ivySettings"

+).map(sys.props.get(_).orNull)

+ }

+

+ /**

+ * Parse excluded list in ivy URL. When download ivy URL jar, Spark won't

download transitive jar

+ * in excluded list.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of exclude.

+ * Example: Input:

exclude=org.mortbay.jetty:jetty,org.eclipse.jetty:jetty-http

+ * Output: [org.mortbay.jetty:jetty, org.eclipse.jetty:jetty-http]

+ */

+ private def parseExcludeList(excludes: Array[String]): String = {

+excludes.flatMap { excludeString =>

+ val excludes: Array[String] = excludeString.split(",")

+ if (excludes.exists(_.split(":").length != 2)) {

+throw new URISyntaxException(excludeString,

+ "Invalid exclude string: expected 'org:module,org:module,..', found

" + excludeString)

+ }

+ excludes

+}.mkString(":")

+ }

+

+ /**

+ * Parse transitive parameter in ivy URL, default value is false.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of transitive.

+ * Example: Input: exclude=org.mortbay.jetty:jetty=true

+ * Output: true

+ */

+ private def parseTransitive(transitives: Array[String]): Boolean = {

+if (transitives.isEmpty) {

+ false

+} else {

+ if (transitives.length > 1) {

+logWarning("It's best to specify `transitive` parameter in ivy URL

query only once." +

+ " If there are multiple `transitive` parameter, we will select the

last one")

+ }

+ transitives.last.toBoolean

+}

+ }

+

+ /**

+ * Download Ivy URIs dependency jars.

+ *

+ * @param uri Ivy uri need to be downloaded. The URI format should be:

+ * `ivy://group:module:version[?query]`

+ *Ivy URI query part format should be:

+ * `parameter=value=value...`

+ *Note that currently ivy URI query part support two parameters:

+ * 1. transitive: whether to download dependent jars related to

your ivy URL.

+ *transitive=false or `transitive=true`, if not set, the

default value is false.

+ * 2. exclude: exclusion list when download ivy URL jar and

dependency jars.

+ *The `exclude` parameter content is a ',' separated

`group:module` pair string :

+ *`exclude=group:module,group:module...`

+ * @return Comma separated string list of URIs of downloaded jars

+ */

+ def resolveMavenDependencies(uri: URI): String = {

Review comment:

Change this

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a change in pull request #30547: [SPARK-33557][CORE][MESOS][TEST] Ensure the relationship between STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT and NETWORK_TIMEOUT

LuciferYang commented on a change in pull request #30547: URL: https://github.com/apache/spark/pull/30547#discussion_r533094765 ## File path: core/src/main/scala/org/apache/spark/HeartbeatReceiver.scala ## @@ -80,7 +80,7 @@ private[spark] class HeartbeatReceiver(sc: SparkContext, clock: Clock) // executor ID -> timestamp of when the last heartbeat from this executor was received private val executorLastSeen = new HashMap[String, Long] - private val executorTimeoutMs = sc.conf.get(config.STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT) + private val executorTimeoutMs = Utils.blockManagerHeartbeatTimeoutAsMs(sc.conf) Review comment: So if use `fallbackConf `, we need to change the name of `spark.storage.blockManagerHeartbeatTimeoutMs` to remove the suffix `Ms`. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a change in pull request #30547: [SPARK-33557][CORE][MESOS][TEST] Ensure the relationship between STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT and NETWORK_TIMEOUT

LuciferYang commented on a change in pull request #30547: URL: https://github.com/apache/spark/pull/30547#discussion_r533094765 ## File path: core/src/main/scala/org/apache/spark/HeartbeatReceiver.scala ## @@ -80,7 +80,7 @@ private[spark] class HeartbeatReceiver(sc: SparkContext, clock: Clock) // executor ID -> timestamp of when the last heartbeat from this executor was received private val executorLastSeen = new HashMap[String, Long] - private val executorTimeoutMs = sc.conf.get(config.STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT) + private val executorTimeoutMs = Utils.blockManagerHeartbeatTimeoutAsMs(sc.conf) Review comment: So if use `fallbackConf `, we need to change the name of `spark.storage.blockManagerHeartbeatTimeoutMs` to remove the suffix "Ms". This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a change in pull request #30547: [SPARK-33557][CORE][MESOS][TEST] Ensure the relationship between STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT and NETWORK_TIMEOUT

LuciferYang commented on a change in pull request #30547:

URL: https://github.com/apache/spark/pull/30547#discussion_r533093278

##

File path: core/src/main/scala/org/apache/spark/HeartbeatReceiver.scala

##

@@ -80,7 +80,7 @@ private[spark] class HeartbeatReceiver(sc: SparkContext,

clock: Clock)

// executor ID -> timestamp of when the last heartbeat from this executor

was received

private val executorLastSeen = new HashMap[String, Long]

- private val executorTimeoutMs =

sc.conf.get(config.STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT)

+ private val executorTimeoutMs =

Utils.blockManagerHeartbeatTimeoutAsMs(sc.conf)

Review comment:

definition `STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT` from

```

private[spark] val STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT =

ConfigBuilder("spark.storage.blockManagerHeartbeatTimeoutMs")

.version("0.7.0")

.withAlternative("spark.storage.blockManagerSlaveTimeoutMs")

.timeConf(TimeUnit.MILLISECONDS)

.createWithDefaultString(Network.NETWORK_TIMEOUT.defaultValueString)

```

to

```

private[spark] val STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT =

ConfigBuilder("spark.storage.blockManagerHeartbeatTimeoutMs")

.version("0.7.0")

.withAlternative("spark.storage.blockManagerSlaveTimeoutMs")

.fallbackConf(Network.NETWORK_TIMEOUT)

```

?

If so, the TimeUnit of `STORAGE_BLOCKMANAGER_HEARTBEAT_TIMEOUT` will change

from `TimeUnit.MILLISECONDS` to `TimeUnit.SECONDS`, and the default value will

change from `12` to `120`,

This does not match the "Ms" unit described in

`spark.storage.blockManagerHeartbeatTimeoutMs`.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533091418

##

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##

@@ -1869,15 +1869,15 @@ class SparkContext(config: SparkConf) extends Logging {

throw new IllegalArgumentException(

s"Directory ${file.getAbsoluteFile} is not allowed for addJar")

}

-env.rpcEnv.fileServer.addJar(file)

+Array(env.rpcEnv.fileServer.addJar(file))

} catch {

case NonFatal(e) =>

logError(s"Failed to add $path to Spark environment", e)

- null

+ Array.empty

Review comment:

Yea

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] maropu commented on a change in pull request #30212: [SPARK-33308][SQL] Refactor current grouping analytics

maropu commented on a change in pull request #30212:

URL: https://github.com/apache/spark/pull/30212#discussion_r533090836

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

##

@@ -2027,65 +1952,76 @@ class Analyzer(override val catalogManager:

CatalogManager)

*/

object ResolveFunctions extends Rule[LogicalPlan] {

val trimWarningEnabled = new AtomicBoolean(true)

+

+def resolveFunction(): PartialFunction[Expression, Expression] = {

+ case u if !u.childrenResolved => u // Skip until children are resolved.

+ case u: UnresolvedAttribute if resolver(u.name,

VirtualColumn.hiveGroupingIdName) =>

+withPosition(u) {

+ Alias(GroupingID(Nil), VirtualColumn.hiveGroupingIdName)()

+}

+ case u @ UnresolvedGenerator(name, children) =>

+withPosition(u) {

+ v1SessionCatalog.lookupFunction(name, children) match {

+case generator: Generator => generator

+case other =>

+ failAnalysis(s"$name is expected to be a generator. However, " +

+s"its class is ${other.getClass.getCanonicalName}, which is

not a generator.")

+ }

+}

+ case u @ UnresolvedFunction(funcId, arguments, isDistinct, filter) =>

+withPosition(u) {

+ v1SessionCatalog.lookupFunction(funcId, arguments) match {

+// AggregateWindowFunctions are AggregateFunctions that can only

be evaluated within

+// the context of a Window clause. They do not need to be wrapped

in an

+// AggregateExpression.

+case wf: AggregateWindowFunction =>

+ if (isDistinct || filter.isDefined) {

+failAnalysis("DISTINCT or FILTER specified, " +

+ s"but ${wf.prettyName} is not an aggregate function")

+ } else {

+wf

+ }

+// We get an aggregate function, we need to wrap it in an

AggregateExpression.

+case agg: AggregateFunction =>

+ if (filter.isDefined && !filter.get.deterministic) {

+failAnalysis("FILTER expression is non-deterministic, " +

+ "it cannot be used in aggregate functions")

+ }

+ AggregateExpression(agg, Complete, isDistinct, filter)

+// This function is not an aggregate function, just return the

resolved one.

+case other if (isDistinct || filter.isDefined) =>

+ failAnalysis("DISTINCT or FILTER specified, " +

+s"but ${other.prettyName} is not an aggregate function")

+case e: String2TrimExpression if arguments.size == 2 =>

+ if (trimWarningEnabled.get) {

+logWarning("Two-parameter TRIM/LTRIM/RTRIM function signatures

are deprecated." +

+ " Use SQL syntax `TRIM((BOTH | LEADING | TRAILING)? trimStr

FROM str)`" +

+ " instead.")

+trimWarningEnabled.set(false)

+ }

+ e

+case other =>

+ other

+ }

+}

+}

+

def apply(plan: LogicalPlan): LogicalPlan = plan.resolveOperatorsUp {

// Resolve functions with concrete relations from v2 catalog.

case UnresolvedFunc(multipartIdent) =>

val funcIdent = parseSessionCatalogFunctionIdentifier(multipartIdent)

ResolvedFunc(Identifier.of(funcIdent.database.toArray,

funcIdent.funcName))

- case q: LogicalPlan =>

-q transformExpressions {

- case u if !u.childrenResolved => u // Skip until children are

resolved.

- case u: UnresolvedAttribute if resolver(u.name,

VirtualColumn.hiveGroupingIdName) =>

-withPosition(u) {

- Alias(GroupingID(Nil), VirtualColumn.hiveGroupingIdName)()

-}

- case u @ UnresolvedGenerator(name, children) =>

-withPosition(u) {

- v1SessionCatalog.lookupFunction(name, children) match {

-case generator: Generator => generator

-case other =>

- failAnalysis(s"$name is expected to be a generator. However,

" +

-s"its class is ${other.getClass.getCanonicalName}, which

is not a generator.")

- }

-}

- case u @ UnresolvedFunction(funcId, arguments, isDistinct, filter) =>

-withPosition(u) {

- v1SessionCatalog.lookupFunction(funcId, arguments) match {

-// AggregateWindowFunctions are AggregateFunctions that can

only be evaluated within

-// the context of a Window clause. They do not need to be

wrapped in an

-// AggregateExpression.

-case wf: AggregateWindowFunction =>

- if (isDistinct || filter.isDefined) {

-failAnalysis("DISTINCT or FILTER specified, " +

- s"but ${wf.prettyName} is not an

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533090454

##

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##

@@ -1860,7 +1860,7 @@ class SparkContext(config: SparkConf) extends Logging {

}

private def addJar(path: String, addedOnSubmit: Boolean): Unit = {

-def addLocalJarFile(file: File): String = {

+def addLocalJarFile(file: File): Array[String] = {

Review comment:

Yea

##

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##

@@ -1869,15 +1869,15 @@ class SparkContext(config: SparkConf) extends Logging {

throw new IllegalArgumentException(

s"Directory ${file.getAbsoluteFile} is not allowed for addJar")

}

-env.rpcEnv.fileServer.addJar(file)

+Array(env.rpcEnv.fileServer.addJar(file))

} catch {

case NonFatal(e) =>

logError(s"Failed to add $path to Spark environment", e)

- null

+ Array.empty

}

}

-def checkRemoteJarFile(path: String): String = {

+def checkRemoteJarFile(path: String): Array[String] = {

Review comment:

Yea

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on pull request #29966: URL: https://github.com/apache/spark/pull/29966#issuecomment-736240432 > For other reviewers, could you put the screenshot of the updated doc in the PR description?  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29966: [SPARK-33084][CORE][SQL] Add jar support ivy path

AngersZh commented on a change in pull request #29966:

URL: https://github.com/apache/spark/pull/29966#discussion_r533088945

##

File path: core/src/main/scala/org/apache/spark/util/DependencyUtils.scala

##

@@ -15,22 +15,122 @@

* limitations under the License.

*/

-package org.apache.spark.deploy

+package org.apache.spark.util

import java.io.File

-import java.net.URI

+import java.net.{URI, URISyntaxException}

import org.apache.commons.lang3.StringUtils

import org.apache.hadoop.conf.Configuration

import org.apache.hadoop.fs.{FileSystem, Path}

import org.apache.spark.{SecurityManager, SparkConf, SparkException}

+import org.apache.spark.deploy.SparkSubmitUtils

import org.apache.spark.internal.Logging

-import org.apache.spark.util.{MutableURLClassLoader, Utils}

-private[deploy] object DependencyUtils extends Logging {

+private[spark] object DependencyUtils extends Logging {

+

+ def getIvyProperties(): Seq[String] = {

+Seq(

+ "spark.jars.excludes",

+ "spark.jars.packages",

+ "spark.jars.repositories",

+ "spark.jars.ivy",

+ "spark.jars.ivySettings"

+).map(sys.props.get(_).orNull)

+ }

+

+ /**

+ * Parse excluded list in ivy URL. When download ivy URL jar, Spark won't

download transitive jar

+ * in excluded list.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of exclude.

+ * Example: Input:

exclude=org.mortbay.jetty:jetty,org.eclipse.jetty:jetty-http

+ * Output: [org.mortbay.jetty:jetty, org.eclipse.jetty:jetty-http]

+ */

+ private def parseExcludeList(excludes: Array[String]): String = {

+excludes.flatMap { excludeString =>

+ val excludes: Array[String] = excludeString.split(",")

+ if (excludes.exists(_.split(":").length != 2)) {

+throw new URISyntaxException(excludeString,

+ "Invalid exclude string: expected 'org:module,org:module,..', found

" + excludeString)

+ }

+ excludes

+}.mkString(":")

+ }

+

+ /**

+ * Parse transitive parameter in ivy URL, default value is false.

+ *

+ * @param queryString Ivy URI query part string.

+ * @return Exclude list which contains grape parameters of transitive.

+ * Example: Input: exclude=org.mortbay.jetty:jetty=true

+ * Output: true

+ */

+ private def parseTransitive(transitives: Array[String]): Boolean = {

+if (transitives.isEmpty) {

+ false

+} else {

+ if (transitives.length > 1) {

+logWarning("It's best to specify `transitive` parameter in ivy URL

query only once." +

+ " If there are multiple `transitive` parameter, we will select the

last one")

+ }

+ transitives.last.toBoolean

+}

+ }

+

+ /**

+ * Download Ivy URIs dependency jars.

+ *

+ * @param uri Ivy uri need to be downloaded. The URI format should be:

+ * `ivy://group:module:version[?query]`

+ *Ivy URI query part format should be:

+ * `parameter=value=value...`

+ *Note that currently ivy URI query part support two parameters:

+ * 1. transitive: whether to download dependent jars related to

your ivy URL.

+ *transitive=false or `transitive=true`, if not set, the

default value is false.

+ * 2. exclude: exclusion list when download ivy URL jar and

dependency jars.

+ *The `exclude` parameter content is a ',' separated

`group:module` pair string :

+ *`exclude=group:module,group:module...`

+ * @return Comma separated string list of URIs of downloaded jars

+ */

+ def resolveMavenDependencies(uri: URI): String = {

+val Seq(_, _, repositories, ivyRepoPath, ivySettingsPath) =

+ DependencyUtils.getIvyProperties()

+val authority = uri.getAuthority

+if (authority == null) {

+ throw new URISyntaxException(

+authority, "Invalid url: Expected 'org:module:version', found null")

+}

+if (authority.split(":").length != 3) {

+ throw new URISyntaxException(

+authority, "Invalid url: Expected 'org:module:version', found " +

authority)

+}

+

+val uriQuery = uri.getQuery

+val queryParams: Array[(String, String)] = if (uriQuery == null) {

+ Array.empty[(String, String)]

+} else {

+ val mapTokens = uriQuery.split("&").map(_.split("="))

+ if (mapTokens.exists(_.length != 2)) {

+throw new URISyntaxException(uriQuery, s"Invalid query string:

$uriQuery")

+ }

+ mapTokens.map(kv => (kv(0), kv(1)))

+}

+

+resolveMavenDependencies(

+ parseTransitive(queryParams.filter(_._1.equals("transitive")).map(_._2)),

Review comment:

> We need to respect case-sensitivity for params?

Normally we use lowercase, respect may be good, and hive respect too.

This is an automated message from the Apache Git Service.

To

[GitHub] [spark] otterc commented on a change in pull request #30312: [SPARK-32917][SHUFFLE][CORE] Adds support for executors to push shuffle blocks after successful map task completion

otterc commented on a change in pull request #30312:

URL: https://github.com/apache/spark/pull/30312#discussion_r533088124

##

File path:

core/src/test/scala/org/apache/spark/shuffle/ShuffleBlockPusherSuite.scala

##

@@ -0,0 +1,248 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.shuffle

+

+import java.io.File

+import java.net.ConnectException

+import java.nio.ByteBuffer

+

+import scala.collection.mutable.ArrayBuffer

+

+import org.mockito.{Mock, MockitoAnnotations}

+import org.mockito.Answers.RETURNS_SMART_NULLS

+import org.mockito.ArgumentMatchers.any

+import org.mockito.Mockito._

+import org.mockito.invocation.InvocationOnMock

+import org.scalatest.BeforeAndAfterEach

+

+import org.apache.spark._

+import org.apache.spark.network.buffer.ManagedBuffer

+import org.apache.spark.network.shuffle.{BlockFetchingListener,

BlockStoreClient}

+import org.apache.spark.network.shuffle.ErrorHandler.BlockPushErrorHandler

+import org.apache.spark.network.util.TransportConf

+import org.apache.spark.serializer.JavaSerializer