[GitHub] [spark] zhengruifeng commented on pull request #28458: [SPARK-30659][ML][PYSPARK] LogisticRegression blockify input vectors

zhengruifeng commented on pull request #28458: URL: https://github.com/apache/spark/pull/28458#issuecomment-624987233 Merged to master This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

zhengruifeng commented on pull request #28458: URL: https://github.com/apache/spark/pull/28458#issuecomment-624985569 This PR is an update of https://github.com/apache/spark/pull/27374. By default (blockSize=1) it avoids a performance regression on sparse datasets. On dense datasets like `epsilon`, it is much faster than the existing implementation.
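For readers unfamiliar with the term, the following is a rough sketch of what "blockifying" input vectors could look like: up to `blockSize` dense feature vectors are stacked into one row-major matrix, and the margins for a whole block are computed over that contiguous storage. This is an illustration under stated assumptions, not the code in this PR; `Block`, `blockify`, and `margins` are hypothetical names, and sparse vectors are not handled.

```scala
// Illustrative sketch only: NOT Spark's implementation. All names are hypothetical.

// A block stacks up to `blockSize` feature vectors as rows of one row-major matrix.
final case class Block(numRows: Int, numCols: Int, values: Array[Double])

// Group consecutive rows into blocks; the last block may hold fewer rows.
def blockify(rows: Seq[Array[Double]], blockSize: Int): Vector[Block] = {
  require(blockSize >= 1, "blockSize must be positive")
  rows.grouped(blockSize).map { group =>
    Block(group.length, group.head.length, group.flatMap(_.toSeq).toArray)
  }.toVector
}

// Margin (dot product with the coefficients) for every row of one block.
def margins(block: Block, coef: Array[Double]): Array[Double] =
  Array.tabulate(block.numRows) { i =>
    var sum = 0.0
    var j = 0
    while (j < block.numCols) {
      sum += block.values(i * block.numCols + j) * coef(j)
      j += 1
    }
    sum
  }
```

With `blockSize` set to 1 every block holds a single row, so the computation reduces to the usual per-instance dot product.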

zhengruifeng commented on pull request #28458: URL: https://github.com/apache/spark/pull/28458#issuecomment-624487752 retest this please

zhengruifeng commented on pull request #28458: URL: https://github.com/apache/spark/pull/28458#issuecomment-624470597 retest this please

zhengruifeng commented on pull request #28458:

URL: https://github.com/apache/spark/pull/28458#issuecomment-624427337

Performance test on the first 10,000 instances of `webspam_wc_normalized_trigram`.

Code:

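```scala
import org.apache.spark.ml.classification._
import org.apache.spark.sql.functions.col
import org.apache.spark.storage.StorageLevel

// Load the 10k-instance sample; (label + 1) / 2 maps labels -1/+1 to 0/1.
val df = spark.read.option("numFeatures", "8289919").format("libsvm").
  load("/data1/Datasets/webspam/webspam_wc_normalized_trigram.svm.10k").
  withColumn("label", (col("label") + 1) / 2)
df.persist(StorageLevel.MEMORY_AND_DISK)
df.count

// Untimed initial fit before the timed runs.
val lr = new LogisticRegression().setBlockSize(1).setMaxIter(10)
lr.fit(df)

// One timed fit per block size; each result is (blockSize, coefficients, elapsed ms).
val results = Seq(1, 4, 16, 64, 256, 1024, 4096).map { size =>
  val start = System.currentTimeMillis
  val model = lr.setBlockSize(size).fit(df)
  val end = System.currentTimeMillis
  (size, model.coefficients, end - start)
}
```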

Results:

```
scala> results.map(_._3)
res17: Seq[Long] = List(33948, 425923, 129811, 56288, 47587, 42816, 39809)

scala> results.map(_._2).foreach(coef => println(coef.toString.take(100)))
(8289919,[549219,551719,592137,592138,592141,592154,592160,592162,592163,592164,592166,592167,592168
(8289919,[549219,551719,592137,592138,592141,592154,592160,592162,592163,592164,592166,592167,592168
(8289919,[549219,551719,592137,592138,592141,592154,592160,592162,592163,592164,592166,592167,592168
(8289919,[549219,551719,592137,592138,592141,592154,592160,592162,592163,592164,592166,592167,592168
(8289919,[549219,551719,592137,592138,592141,592154,592160,592162,592163,592164,592166,592167,592168
(8289919,[549219,551719,592137,592138,592141,592154,592160,592162,592163,592164,592166,592167,592168
(8289919,[549219,551719,592137,592138,592141,592154,592160,592162,592163,592164,592166,592167,592168

scala> results.map(_._2).foreach(coef => println(coef.toString.takeRight(100)))
87,-1188.1053920127556,335.5565308836645,-135.79302172669907,849.0515530033497,-27.040836637047736])
91,-1188.105392012755,335.55653088366444,-135.79302172669907,849.0515530033497,-27.040836637047736])
9,-1188.1053920127551,335.55653088366444,-135.79302172669904,849.0515530033495,-27.040836637047725])
94,-1188.1053920127556,335.55653088366444,-135.79302172669904,849.0515530033495,-27.04083663704773])
1,-1188.1053920127551,335.55653088366444,-135.79302172669904,849.0515530033493,-27.040836637047722])
5,-1188.1053920127556,335.55653088366444,-135.79302172669904,849.0515530033495,-27.040836637047736])
29,-1188.105392012756,335.55653088366444,-135.79302172669904,849.0515530033495,-27.040836637047736])
```

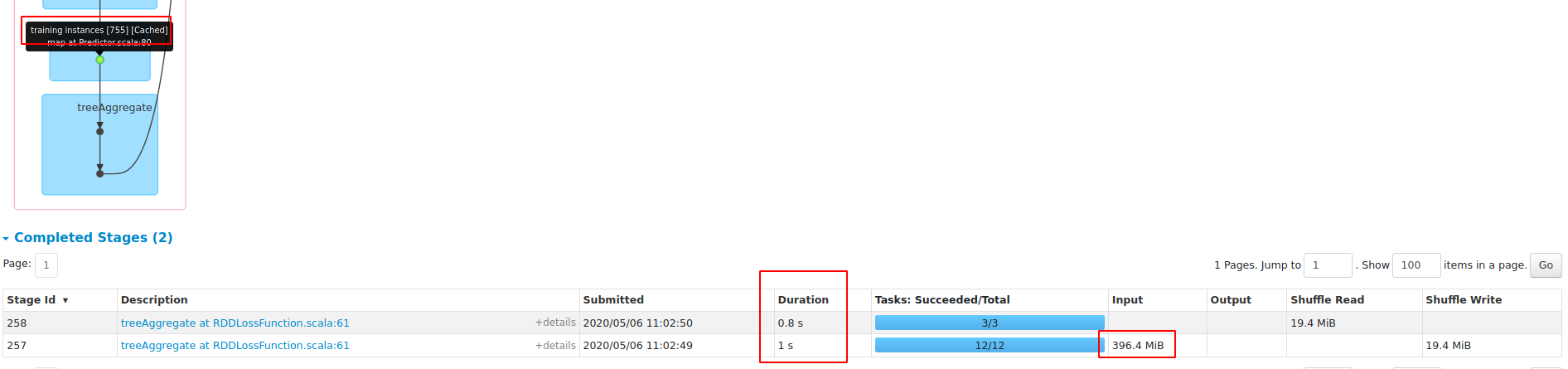

**blockSize==1**

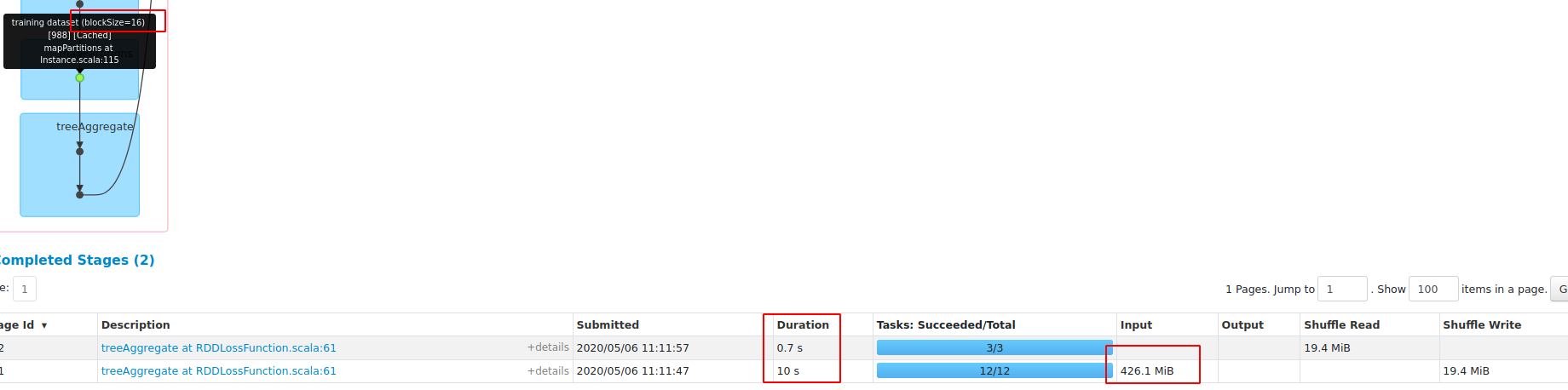

**blockSize==16**

test with **Master**:

```
import org.apache.spark.ml.classification._
import org.apache.spark.storage.StorageLevel

val df = spark.read.option("numFeatures", "8289919").format("libsvm").
  load("/data1/Datasets/webspam/webspam_wc_normalized_trigram.svm.10k").
  withColumn("label", (col("label")+1)/2)
df.persist(StorageLevel.MEMORY_AND_DISK)
df.count

val lr = new LogisticRegression().setMaxIter(10)
lr.fit(df)

scala> val start = System.currentTimeMillis; val model = lr.setMaxIter(10).fit(df); val end = System.currentTimeMillis; end - start
start: Long = 1588735447883
model: org.apache.spark.ml.classification.LogisticRegressionModel = LogisticRegressionModel: uid=logreg_99d29a0ecc13, numClasses=2, numFeatures=8289919
end: Long = 1588735483170
res3: Long = 35287
```

With this PR and blockSize==1, the fit takes 33948 ms, compared with 35287 ms on master, so the default setting causes no performance regression on sparse datasets.
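To put the webspam timings side by side, here is a minimal sketch in plain Scala (no Spark needed) that divides each duration measured with this PR by the duration measured on master. The durations are copied from the outputs above; the names `blockSizes`, `prMillis`, `masterMillis`, and `relativeTo` are illustrative and not part of the PR.

```scala
// Durations in milliseconds, copied from the REPL output in this comment.
val blockSizes = Seq(1, 4, 16, 64, 256, 1024, 4096)
val prMillis = Seq(33948L, 425923L, 129811L, 56288L, 47587L, 42816L, 39809L)
val masterMillis = 35287L

// Ratio of each duration to a baseline; values above 1.0 are slower than the baseline.
def relativeTo(baseline: Long, durations: Seq[Long]): Seq[Double] =
  durations.map(_.toDouble / baseline)

// Pairs of (blockSize, duration relative to master).
val vsMaster = blockSizes.zip(relativeTo(masterMillis, prMillis))
```

On this sparse dataset only blockSize==1 comes in below 1.0; blockSize==4 is more than 12 times slower than master, and the ratio shrinks again as the block size grows.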


zhengruifeng commented on pull request #28458:

URL: https://github.com/apache/spark/pull/28458#issuecomment-624426340

Performance test on [`epsilon_normalized.t`](https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary.html).

Code:

```scala
import org.apache.spark.ml.classification._
import org.apache.spark.sql.functions.col
import org.apache.spark.storage.StorageLevel

// Load the dense epsilon test split; (label + 1) / 2 maps labels -1/+1 to 0/1.
val df = spark.read.option("numFeatures", "2000").format("libsvm").
  load("/data1/Datasets/epsilon/epsilon_normalized.t").
  withColumn("label", (col("label") + 1) / 2)
df.persist(StorageLevel.MEMORY_AND_DISK)
df.count

// Untimed initial fit before the timed runs.
val lr = new LogisticRegression().setBlockSize(1).setMaxIter(10)
lr.fit(df)

// One timed fit per block size; each result is (blockSize, coefficients, elapsed ms).
val results = Seq(1, 4, 16, 64, 256, 1024, 4096).map { size =>
  val start = System.currentTimeMillis
  val model = lr.setBlockSize(size).fit(df)
  val end = System.currentTimeMillis
  (size, model.coefficients, end - start)
}
```

Results:

```
scala> results.map(_._3)
res3: Seq[Long] = List(31076, 6771, 6732, 7590, 7186, 7094, 7276)

scala> results.map(_._2).foreach(coef => println(coef.toString.take(100)))
[2.1557250220880024,-0.22767392418436572,4.569220246330072,0.04739667339597046,0.14605181933865558,-
[2.1557250220880064,-0.22767392418436597,4.56922024633007,0.04739667339596951,0.14605181933865646,-0
[2.1557250220880064,-0.2276739241843657,4.569220246330077,0.04739667339597028,0.14605181933865646,-0
[2.155725022088007,-0.2276739241843664,4.569220246330073,0.047396673395969764,0.14605181933865688,-0
[2.1557250220880038,-0.22767392418436605,4.569220246330073,0.04739667339597022,0.14605181933865458,-
[2.1557250220880033,-0.22767392418436683,4.569220246330072,0.047396673395970035,0.14605181933865727,
[2.1557250220880033,-0.22767392418436613,4.56922024633007,0.0473966733959703,0.1460518193386559,-0.0
```
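The coefficient prefixes printed above look identical up to the last few digits. One way to quantify that is the largest element-wise absolute difference between two coefficient arrays, as in the minimal sketch below. The helper name `maxAbsDiff` is hypothetical, and the two sample arrays hold only the first five coefficients printed above for blockSize 1 and 4, not the full vectors.

```scala
// Largest element-wise absolute difference between two equally long arrays.
def maxAbsDiff(a: Array[Double], b: Array[Double]): Double = {
  require(a.length == b.length, "arrays must have the same length")
  a.zip(b).map { case (x, y) => math.abs(x - y) }
    .foldLeft(0.0)((m, d) => math.max(m, d))
}

// First five coefficients for blockSize 1 and 4, copied from the output above.
val coefBlock1 = Array(2.1557250220880024, -0.22767392418436572, 4.569220246330072,
  0.04739667339597046, 0.14605181933865558)
val coefBlock4 = Array(2.1557250220880064, -0.22767392418436597, 4.56922024633007,
  0.04739667339596951, 0.14605181933865646)

// Difference between the two runs on these five coefficients.
val prefixDiff = maxAbsDiff(coefBlock1, coefBlock4)
```

In a Spark session that still holds `results` from the code above, the same helper could presumably be applied to the full vectors via `results(0)._2.toArray` and `results(1)._2.toArray`.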

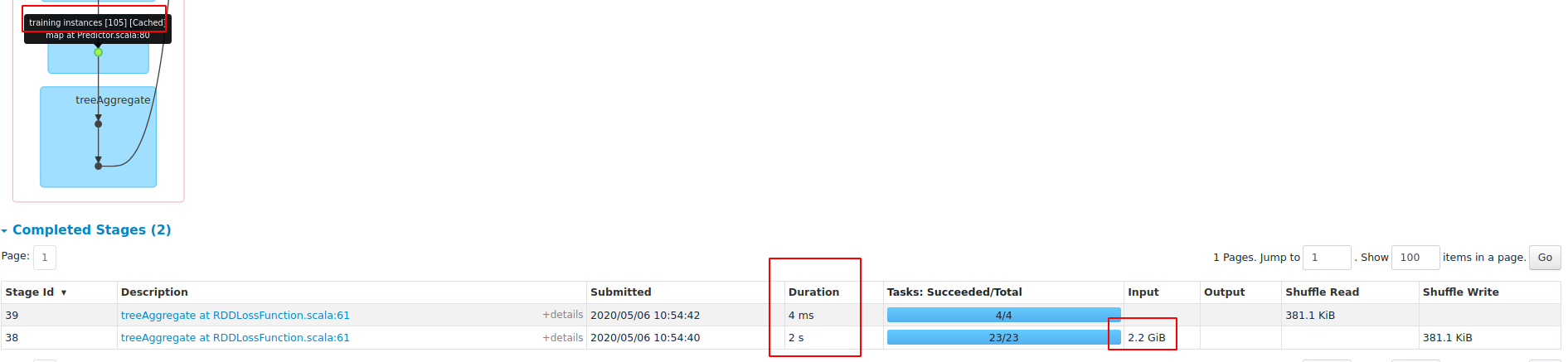

**blockSize==1**

**blockSize==256**
