[GitHub] codecov-io edited a comment on issue #3718: [AIRFLOW-2872] Implement 'Ad Hoc Query' for RBAC and Refine QueryView()

codecov-io edited a comment on issue #3718: [AIRFLOW-2872] Implement 'Ad Hoc Query' for RBAC and Refine QueryView()

URL: https://github.com/apache/incubator-airflow/pull/3718#issuecomment-411331261

# [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3718?src=pr=h1) Report

> Merging [#3718](https://codecov.io/gh/apache/incubator-airflow/pull/3718?src=pr=desc) into [master](https://codecov.io/gh/apache/incubator-airflow/commit/8b04e20709ebeb41aeefc0c5e3f12d35108ea504?src=pr=desc) will **decrease** coverage by `0.2%`.
> The diff coverage is `25%`.

[](https://codecov.io/gh/apache/incubator-airflow/pull/3718?src=pr=tree)

```diff
@@            Coverage Diff             @@
##           master    #3718      +/-   ##
==========================================
- Coverage   77.64%   77.43%   -0.21%
==========================================
  Files         204      204
  Lines       15801    15859      +58
==========================================
+ Hits        12268    12281      +13
- Misses       3533     3578      +45
```

| [Impacted Files](https://codecov.io/gh/apache/incubator-airflow/pull/3718?src=pr=tree) | Coverage Δ | |
|---|---|---|
| [airflow/www/views.py](https://codecov.io/gh/apache/incubator-airflow/pull/3718/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdmlld3MucHk=) | `68.88% <100%> (ø)` | :arrow_up: |
| [airflow/www\_rbac/app.py](https://codecov.io/gh/apache/incubator-airflow/pull/3718/diff?src=pr=tree#diff-YWlyZmxvdy93d3dfcmJhYy9hcHAucHk=) | `97.82% <100%> (+0.04%)` | :arrow_up: |
| [airflow/www\_rbac/views.py](https://codecov.io/gh/apache/incubator-airflow/pull/3718/diff?src=pr=tree#diff-YWlyZmxvdy93d3dfcmJhYy92aWV3cy5weQ==) | `71.2% <17.5%> (-1.66%)` | :arrow_down: |
| [airflow/www\_rbac/utils.py](https://codecov.io/gh/apache/incubator-airflow/pull/3718/diff?src=pr=tree#diff-YWlyZmxvdy93d3dfcmJhYy91dGlscy5weQ==) | `62.42% <29.41%> (-3.8%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3718?src=pr=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3718?src=pr=footer). Last update [8b04e20...6b13086](https://codecov.io/gh/apache/incubator-airflow/pull/3718?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

With regards, Apache Git Services

[jira] [Resolved] (AIRFLOW-2861) Need index on log table

[ https://issues.apache.org/jira/browse/AIRFLOW-2861?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Vardan Gupta resolved AIRFLOW-2861.
---
Resolution: Fixed
Fix Version/s: 2.0.0

The change has been merged to master.

> Need index on log table
> ---
>
> Key: AIRFLOW-2861
> URL: https://issues.apache.org/jira/browse/AIRFLOW-2861
> Project: Apache Airflow
> Issue Type: Improvement
> Components: database
> Affects Versions: 1.10.0
> Reporter: Vardan Gupta
> Assignee: Vardan Gupta
> Priority: Major
> Fix For: 2.0.0
>
> Delete-DAG functionality was added in v1-10-stable. During the metadata cleanup
> [part|https://github.com/apache/incubator-airflow/blob/dc78b9196723ca6724185231ccd6f5bbe8edcaf3/airflow/api/common/experimental/delete_dag.py#L48],
> its implementation looks for model classes that have an attribute named dag_id, builds a
> query on each matching model, and deletes the matching rows from the metadata database.
> We have a few measurements showing slowness, especially in the log table, because it has
> no single-column or multi-column index. Creating an index would boost performance, though
> insertion would be a bit slower. Since deletion is a synchronous call, it would be a good
> idea to create an index.

--
This message was sent by Atlassian JIRA (v7.6.3#76005)
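The trade-off described in this issue can be illustrated with a small, self-contained sketch. It uses Python's built-in `sqlite3` module rather than Airflow's metadata database, it inspects a `SELECT` with the same `WHERE dag_id = ?` filter that a delete would use, and the simplified table layout and the index name `idx_log_dag_id` are assumptions made for illustration. None of it is taken from the merged change.

```python
import sqlite3


def plan_for_dag_lookup(with_index):
    """Return SQLite's query-plan text for a lookup by dag_id on a toy log table."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical, simplified stand-in for the real log table.
    conn.execute("CREATE TABLE log (id INTEGER PRIMARY KEY, dag_id TEXT, event TEXT)")
    conn.executemany(
        "INSERT INTO log (dag_id, event) VALUES (?, ?)",
        [("dag_%d" % (i % 50), "running") for i in range(1000)],
    )
    if with_index:
        # Hypothetical index name; lets the engine find rows by dag_id
        # without scanning the whole table.
        conn.execute("CREATE INDEX idx_log_dag_id ON log (dag_id)")
    rows = conn.execute(
        "EXPLAIN QUERY PLAN SELECT * FROM log WHERE dag_id = ?", ("dag_7",)
    ).fetchall()
    conn.close()
    # The last column of each plan row is the human-readable detail.
    return " ".join(row[-1] for row in rows)


print(plan_for_dag_lookup(with_index=False))
print(plan_for_dag_lookup(with_index=True))
```

Without the index the plan reports a full table scan; with it, the plan names the index. The cost mentioned in the issue still applies: every insert into the table also has to update the index.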

[GitHub] XD-DENG commented on issue #3718: [AIRFLOW-2872] Implement 'Ad Hoc Query' for RBAC and Refine QueryView()

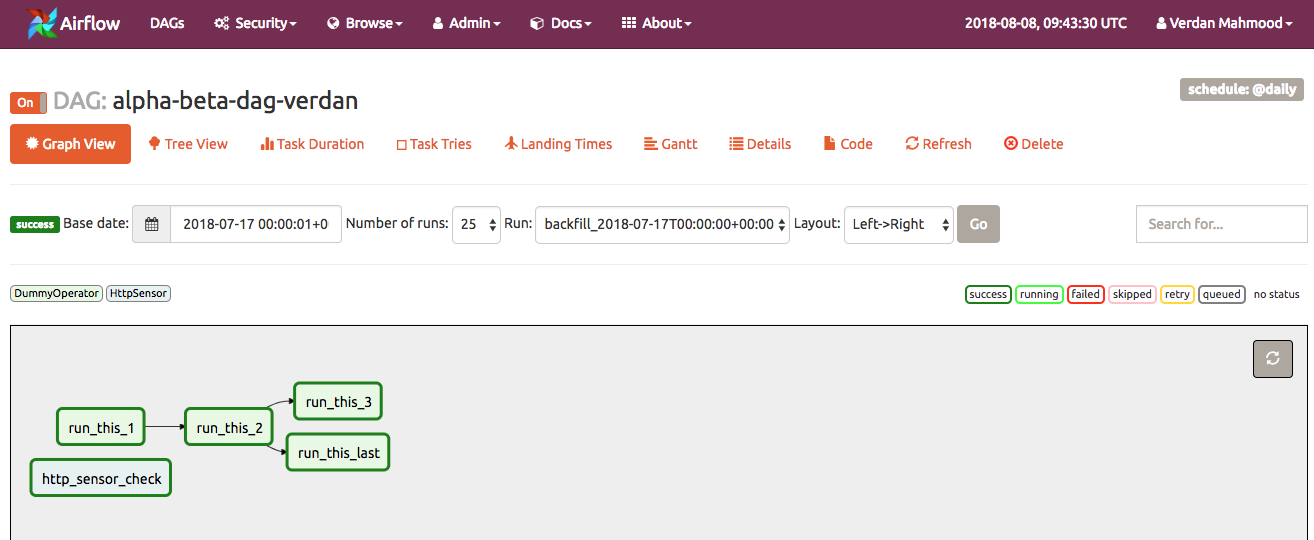

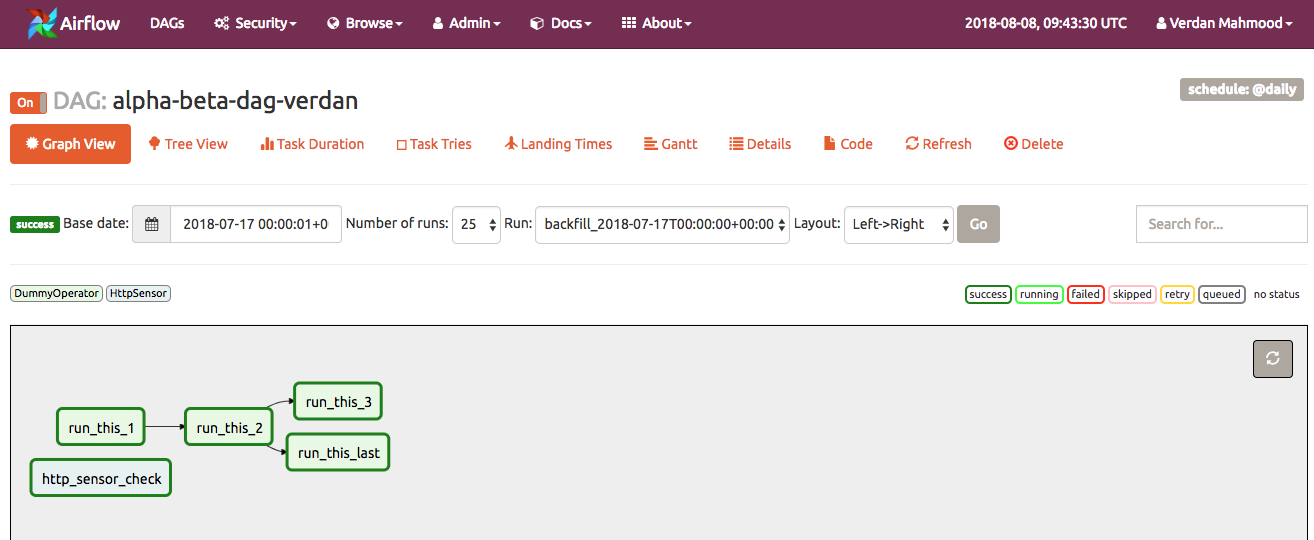

XD-DENG commented on issue #3718: [AIRFLOW-2872] Implement 'Ad Hoc Query' for RBAC and Refine QueryView()

URL: https://github.com/apache/incubator-airflow/pull/3718#issuecomment-411634875

Hi @bolkedebruin, as suggested, I have implemented the **Ad Hoc Query** view for RBAC under `/www_rbac`. It's mainly based on the current implementation in `/www`. I have updated the subject and contents of this PR accordingly as well.

**Screenshot - Nav Bar**

https://user-images.githubusercontent.com/11539188/43878430-ac307542-9bd1-11e8-988e-114daaff4b10.png

**Screenshot - *Ad Hoc Query* view**

https://user-images.githubusercontent.com/11539188/43878434-b2321a0e-9bd1-11e8-911d-9a841fc1ed7a.png

[jira] [Updated] (AIRFLOW-2872) Implement "Ad Hoc Query" in /www_rbac, and refine existing QueryView()

[ https://issues.apache.org/jira/browse/AIRFLOW-2872?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Xiaodong DENG updated AIRFLOW-2872:
---
Description:

To implement "Ad Hoc Query" for RBAC in /www_rbac, based on the existing implementation in /www.

In addition, refine the existing QueryView():
# The ".csv" button in the *Ad Hoc Query* view responds with a plain text file rather than a CSV file (even though users can manually change the extension).
# The argument 'has_data' passed to the template is not used by the template 'airflow/query.html'.
# The view sometimes raises 'UnboundLocalError: local variable 'df' referenced before assignment'.
# 'result = df.to_html()' should only be invoked when the user does NOT choose '.csv'. Otherwise it is a waste of resources to invoke 'df.to_html()', since the result it returns will not be used if the user asks for a CSV download instead of an HTML page.

was:

# The ".csv" button in the *Ad Hoc Query* view responds with a plain text file rather than a CSV file (even though users can manually change the extension).
# The argument 'has_data' passed to the template is not used by the template 'airflow/query.html'.
# The view sometimes raises 'UnboundLocalError: local variable 'df' referenced before assignment'.
# 'result = df.to_html()' should only be invoked when the user does NOT choose '.csv'. Otherwise it is a waste of resources to invoke 'df.to_html()', since the result it returns will not be used if the user asks for a CSV download instead of an HTML page.

Summary: Implement "Ad Hoc Query" in /www_rbac, and refine existing QueryView() (was: Minor bugs in "Ad Hoc Query" view, and refinement)

> Implement "Ad Hoc Query" in /www_rbac, and refine existing QueryView()
> --
>
> Key: AIRFLOW-2872
> URL: https://issues.apache.org/jira/browse/AIRFLOW-2872
> Project: Apache Airflow
> Issue Type: Improvement
> Components: ui
> Reporter: Xiaodong DENG
> Assignee: Xiaodong DENG
> Priority: Critical
>
> To implement "Ad Hoc Query" for RBAC in /www_rbac, based on the existing
> implementation in /www.
> In addition, refine the existing QueryView():
> # The ".csv" button in the *Ad Hoc Query* view responds with a plain text
> file rather than a CSV file (even though users can manually change the
> extension).
> # The argument 'has_data' passed to the template is not used by the template
> 'airflow/query.html'.
> # The view sometimes raises 'UnboundLocalError: local variable 'df' referenced
> before assignment'.
> # 'result = df.to_html()' should only be invoked when the user does NOT choose
> '.csv'. Otherwise it is a waste of resources to invoke 'df.to_html()', since the
> result it returns will not be used if the user asks for a CSV download instead
> of an HTML page.
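The control flow these refinements ask for can be sketched roughly as follows. This is not the code from the PR: it avoids pandas and Flask so that it runs with the standard library alone, and `run_and_render` and its `run_query` callable are hypothetical names introduced for illustration. It shows three of the listed points: the result variables are bound before the `try` block (so no `UnboundLocalError`), the CSV branch returns a `text/csv` payload, and the HTML rendering is only built when CSV was not requested.

```python
import csv
import html
import io


def run_and_render(run_query, sql, want_csv):
    """Return (mimetype, body) for a query, building only the requested format.

    run_query is a hypothetical callable that returns (header, rows).
    """
    header, rows, error = None, None, None  # bound before the try block
    try:
        header, rows = run_query(sql)
    except Exception as exc:  # report the failure instead of crashing later
        error = str(exc)

    if rows is None:
        return "text/html", "<p>{}</p>".format(html.escape(error or "No data"))

    if want_csv:
        buf = io.StringIO()
        writer = csv.writer(buf)
        writer.writerow(header)
        writer.writerows(rows)
        return "text/csv", buf.getvalue()

    # The HTML table is only generated when the user did not ask for CSV.
    head = "".join("<th>{}</th>".format(html.escape(str(h))) for h in header)
    body = "".join(
        "<tr>{}</tr>".format(
            "".join("<td>{}</td>".format(html.escape(str(c))) for c in row)
        )
        for row in rows
    )
    return "text/html", "<table><tr>{}</tr>{}</table>".format(head, body)
```

In a web view, the returned mimetype would be passed to the response object so that the browser treats the download as CSV rather than plain text.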

[GitHub] feng-tao commented on a change in pull request #3648: [AIRFLOW-2786] Fix editing Variable with empty key crashing

feng-tao commented on a change in pull request #3648: [AIRFLOW-2786] Fix

editing Variable with empty key crashing

URL: https://github.com/apache/incubator-airflow/pull/3648#discussion_r208799225

##

File path: tests/www_rbac/test_views.py

##

@@ -172,6 +172,26 @@ def test_xss_prevention(self):

self.assertNotIn("",

resp.data.decode("utf-8"))

+def test_import_variables(self):

+import mock

Review comment:

nit: put the `import mock` with the other library imports.


[jira] [Closed] (AIRFLOW-2686) Default Variables not base on default_timezone

[ https://issues.apache.org/jira/browse/AIRFLOW-2686?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Eric Luo closed AIRFLOW-2686.
-
Resolution: Fixed

> Default Variables not base on default_timezone
> --
>
> Key: AIRFLOW-2686
> URL: https://issues.apache.org/jira/browse/AIRFLOW-2686
> Project: Apache Airflow
> Issue Type: Bug
> Reporter: Eric Luo
> Priority: Major

[jira] [Commented] (AIRFLOW-2686) Default Variables not base on default_timezone

[

https://issues.apache.org/jira/browse/AIRFLOW-2686?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16574189#comment-16574189

]

ASF GitHub Bot commented on AIRFLOW-2686:

-

lxneng closed pull request #3554: [AIRFLOW-2686] Fix Default Variables not base

on default_timezone

URL: https://github.com/apache/incubator-airflow/pull/3554

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/airflow/models.py b/airflow/models.py

index 260c0ba5a2..2fe229a685 100755

--- a/airflow/models.py

+++ b/airflow/models.py

@@ -1809,14 +1809,15 @@ def get_template_context(self, session=None):

tables = None

if 'tables' in task.params:

tables = task.params['tables']

+# convert to default timezone

+execution_date_tz = settings.TIMEZONE.convert(self.execution_date)

+ds = execution_date_tz.strftime('%Y-%m-%d')

+ts = execution_date_tz.isoformat()

+yesterday_ds = (execution_date_tz - timedelta(1)).strftime('%Y-%m-%d')

+tomorrow_ds = (execution_date_tz + timedelta(1)).strftime('%Y-%m-%d')

-ds = self.execution_date.strftime('%Y-%m-%d')

-ts = self.execution_date.isoformat()

-yesterday_ds = (self.execution_date - timedelta(1)).strftime('%Y-%m-%d')

-tomorrow_ds = (self.execution_date + timedelta(1)).strftime('%Y-%m-%d')

-

-prev_execution_date = task.dag.previous_schedule(self.execution_date)

-next_execution_date = task.dag.following_schedule(self.execution_date)

+prev_execution_date = task.dag.previous_schedule(execution_date_tz)

+next_execution_date = task.dag.following_schedule(execution_date_tz)

next_ds = None

if next_execution_date:

@@ -1903,7 +1904,7 @@ def __repr__(self):

'end_date': ds,

'dag_run': dag_run,

'run_id': run_id,

-'execution_date': self.execution_date,

+'execution_date': execution_date_tz,

'prev_execution_date': prev_execution_date,

'next_execution_date': next_execution_date,

'latest_date': ds,
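The effect of the diff above can be shown with a standalone sketch. It is only an approximation: Airflow's `settings.TIMEZONE.convert` is replaced here by the standard library's `datetime.astimezone`, and the fixed UTC+8 offset, the sample date and the helper name `template_dates` are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def template_dates(execution_date, default_tz):
    """Derive the date strings after converting to the default timezone."""
    local = execution_date.astimezone(default_tz)  # stand-in for TIMEZONE.convert
    return {
        "ds": local.strftime("%Y-%m-%d"),
        "ts": local.isoformat(),
        "yesterday_ds": (local - timedelta(1)).strftime("%Y-%m-%d"),
        "tomorrow_ds": (local + timedelta(1)).strftime("%Y-%m-%d"),
    }


# An execution date stored in UTC, late in the evening.
execution_date = datetime(2018, 8, 8, 20, 0, tzinfo=timezone.utc)
utc_plus_8 = timezone(timedelta(hours=8))  # hypothetical default timezone

print(template_dates(execution_date, timezone.utc)["ds"])
print(template_dates(execution_date, utc_plus_8)["ds"])
```

For a run at 20:00 UTC, the UTC-based `ds` falls on 8 August while the UTC+8 `ds` falls on 9 August, which is the kind of off-by-one-day discrepancy the change is meant to remove.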


> Default Variables not base on default_timezone

> --

>

> Key: AIRFLOW-2686

> URL: https://issues.apache.org/jira/browse/AIRFLOW-2686

> Project: Apache Airflow

> Issue Type: Bug

>Reporter: Eric Luo

>Priority: Major

>


[GitHub] lxneng commented on issue #3554: [AIRFLOW-2686] Fix Default Variables not base on default_timezone

lxneng commented on issue #3554: [AIRFLOW-2686] Fix Default Variables not base on default_timezone

URL: https://github.com/apache/incubator-airflow/pull/3554#issuecomment-411614674

Agree with @Fokko, that would be much better.

[GitHub] lxneng closed pull request #3554: [AIRFLOW-2686] Fix Default Variables not base on default_timezone

lxneng closed pull request #3554: [AIRFLOW-2686] Fix Default Variables not base

on default_timezone

URL: https://github.com/apache/incubator-airflow/pull/3554

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/airflow/models.py b/airflow/models.py

index 260c0ba5a2..2fe229a685 100755

--- a/airflow/models.py

+++ b/airflow/models.py

@@ -1809,14 +1809,15 @@ def get_template_context(self, session=None):

tables = None

if 'tables' in task.params:

tables = task.params['tables']

+# convert to default timezone

+execution_date_tz = settings.TIMEZONE.convert(self.execution_date)

+ds = execution_date_tz.strftime('%Y-%m-%d')

+ts = execution_date_tz.isoformat()

+yesterday_ds = (execution_date_tz - timedelta(1)).strftime('%Y-%m-%d')

+tomorrow_ds = (execution_date_tz + timedelta(1)).strftime('%Y-%m-%d')

-ds = self.execution_date.strftime('%Y-%m-%d')

-ts = self.execution_date.isoformat()

-yesterday_ds = (self.execution_date - timedelta(1)).strftime('%Y-%m-%d')

-tomorrow_ds = (self.execution_date + timedelta(1)).strftime('%Y-%m-%d')

-

-prev_execution_date = task.dag.previous_schedule(self.execution_date)

-next_execution_date = task.dag.following_schedule(self.execution_date)

+prev_execution_date = task.dag.previous_schedule(execution_date_tz)

+next_execution_date = task.dag.following_schedule(execution_date_tz)

next_ds = None

if next_execution_date:

@@ -1903,7 +1904,7 @@ def __repr__(self):

'end_date': ds,

'dag_run': dag_run,

'run_id': run_id,

-'execution_date': self.execution_date,

+'execution_date': execution_date_tz,

'prev_execution_date': prev_execution_date,

'next_execution_date': next_execution_date,

'latest_date': ds,


[GitHub] codecov-io edited a comment on issue #3722: [AIRFLOW-2759] Add changes to extract proxy details at the base hook …

codecov-io edited a comment on issue #3722: [AIRFLOW-2759] Add changes to extract proxy details at the base hook …

URL: https://github.com/apache/incubator-airflow/pull/3722#issuecomment-411556404

# [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=h1) Report

> Merging [#3722](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=desc) into [master](https://codecov.io/gh/apache/incubator-airflow/commit/8b04e20709ebeb41aeefc0c5e3f12d35108ea504?src=pr=desc) will **decrease** coverage by `59.92%`.
> The diff coverage is `15.38%`.

[](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=tree)

```diff
@@             Coverage Diff             @@
##           master    #3722       +/-   ##
===========================================
- Coverage   77.64%   17.71%   -59.93%
===========================================
  Files         204      204
  Lines       15801    15826       +25
===========================================
- Hits        12268     2803     -9465
- Misses       3533    13023     +9490
```

| [Impacted Files](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=tree) | Coverage Δ | |
|---|---|---|
| [airflow/utils/helpers.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9oZWxwZXJzLnB5) | `26.13% <15.38%> (-45.21%)` | :arrow_down: |
| [airflow/hooks/base\_hook.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9iYXNlX2hvb2sucHk=) | `40.62% <15.38%> (-51.54%)` | :arrow_down: |
| [airflow/hooks/slack\_hook.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9zbGFja19ob29rLnB5) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/api/common/experimental/get\_dag\_runs.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9hcGkvY29tbW9uL2V4cGVyaW1lbnRhbC9nZXRfZGFnX3J1bnMucHk=) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/example\_dags/test\_utils.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9leGFtcGxlX2RhZ3MvdGVzdF91dGlscy5weQ==) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/www/forms.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvZm9ybXMucHk=) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/sensors/time\_delta\_sensor.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9zZW5zb3JzL3RpbWVfZGVsdGFfc2Vuc29yLnB5) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/operators/email\_operator.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvZW1haWxfb3BlcmF0b3IucHk=) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/sensors/time\_sensor.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9zZW5zb3JzL3RpbWVfc2Vuc29yLnB5) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/utils/json.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9qc29uLnB5) | `0% <0%> (-100%)` | :arrow_down: |
| ... and [163 more](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree-more) | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=footer). Last update [8b04e20...9742956](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

[GitHub] r39132 edited a comment on issue #3723: [AIRFLOW-2876] Update Tenacity to 4.12

r39132 edited a comment on issue #3723: [AIRFLOW-2876] Update Tenacity to 4.12

URL:

https://github.com/apache/incubator-airflow/pull/3723#issuecomment-411582062

@Fokko

I ran setup.py install on a branch based on this PR and I am getting

exceptions when starting the scheduler.

Here's the version of tenacity that was installed:

```

sianand@LM-SJN-21002367:~/Projects/airflow_incubator $ pip freeze | grep tenacity
tenacity==4.12.0

```

When I run the scheduler, I see:

```

[2018-08-08 16:06:35,130] {models.py:368} ERROR - Failed to import: /usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/example_dags/example_http_operator.py
Traceback (most recent call last):
  File "/usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/models.py", line 365, in process_file
    m = imp.load_source(mod_name, filepath)
  File "/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/imp.py", line 172, in load_source
    module = _load(spec)
  File "<frozen importlib._bootstrap>", line 684, in _load
  File "<frozen importlib._bootstrap>", line 665, in _load_unlocked
  File "<frozen importlib._bootstrap_external>", line 678, in exec_module
  File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
  File "/usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/example_dags/example_http_operator.py", line 27, in <module>
    from airflow.operators.http_operator import SimpleHttpOperator
  File "/usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/operators/http_operator.py", line 21, in <module>
    from airflow.hooks.http_hook import HttpHook
  File "/usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/hooks/http_hook.py", line 23, in <module>
    import tenacity
  File "/usr/local/lib/python3.6/site-packages/tenacity-4.12.0-py3.6.egg/tenacity/__init__.py", line 21, in <module>
    import asyncio
  File "/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/asyncio/__init__.py", line 21, in <module>
    from .base_events import *
  File "/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/asyncio/base_events.py", line 17, in <module>
    import concurrent.futures
  File "/usr/local/lib/python3.6/site-packages/concurrent/futures/__init__.py", line 8, in <module>
    from concurrent.futures._base import (FIRST_COMPLETED,
  File "/usr/local/lib/python3.6/site-packages/concurrent/futures/_base.py", line 381
    raise exception_type, self._exception, self._traceback
                        ^
SyntaxError: invalid syntax

```
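One possible reading of the traceback above, offered as an interpretation rather than something the commenter states: the failing file lives under `site-packages/concurrent/futures` instead of the standard library, and the three-argument `raise` on its line 381 is Python 2-only syntax. That would point to a Python 2 backport of `concurrent.futures` shadowing the built-in package, rather than to Tenacity itself. The sketch below only demonstrates the syntax difference; the helper name `parses` is made up for the example.

```python
def parses(source):
    """Return True if the running interpreter can compile the given source."""
    try:
        compile(source, "<example>", "exec")
        return True
    except SyntaxError:
        return False


# Python 2 style three-argument raise, as seen in the traceback.
py2_style = "raise exception_type, value, tb"
# Python 3 equivalent for re-raising with an explicit traceback.
py3_style = "raise value.with_traceback(tb)"

print(parses(py2_style))
print(parses(py3_style))
```

On a Python 3 interpreter the first form is rejected at compile time, so importing any module that contains it fails before a single line runs, which matches the `SyntaxError` shown.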


[GitHub] r39132 commented on issue #3723: [AIRFLOW-2876] Update Tenacity to 4.12

r39132 commented on issue #3723: [AIRFLOW-2876] Update Tenacity to 4.12

URL:

https://github.com/apache/incubator-airflow/pull/3723#issuecomment-411582062

@Fokko

I ran setup.py install on a branch based on this PR and I am getting

exceptions when starting the scheduler:

```

sianand@LM-SJN-21002367:~/Projects/airflow_incubator $ pip freeze | grep tenacity
tenacity==4.12.0
[2018-08-08 16:06:35,130] {models.py:368} ERROR - Failed to import: /usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/example_dags/example_http_operator.py
Traceback (most recent call last):
  File "/usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/models.py", line 365, in process_file
    m = imp.load_source(mod_name, filepath)
  File "/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/imp.py", line 172, in load_source
    module = _load(spec)
  File "<frozen importlib._bootstrap>", line 684, in _load
  File "<frozen importlib._bootstrap>", line 665, in _load_unlocked
  File "<frozen importlib._bootstrap_external>", line 678, in exec_module
  File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
  File "/usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/example_dags/example_http_operator.py", line 27, in <module>
    from airflow.operators.http_operator import SimpleHttpOperator
  File "/usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/operators/http_operator.py", line 21, in <module>
    from airflow.hooks.http_hook import HttpHook
  File "/usr/local/lib/python3.6/site-packages/apache_airflow-2.0.0.dev0+incubating-py3.6.egg/airflow/hooks/http_hook.py", line 23, in <module>
    import tenacity
  File "/usr/local/lib/python3.6/site-packages/tenacity-4.12.0-py3.6.egg/tenacity/__init__.py", line 21, in <module>
    import asyncio
  File "/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/asyncio/__init__.py", line 21, in <module>
    from .base_events import *
  File "/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6/lib/python3.6/asyncio/base_events.py", line 17, in <module>
    import concurrent.futures
  File "/usr/local/lib/python3.6/site-packages/concurrent/futures/__init__.py", line 8, in <module>
    from concurrent.futures._base import (FIRST_COMPLETED,
  File "/usr/local/lib/python3.6/site-packages/concurrent/futures/_base.py", line 381
    raise exception_type, self._exception, self._traceback
                        ^
SyntaxError: invalid syntax

```


[GitHub] codecov-io commented on issue #3723: [AIRFLOW-2876] Update Tenacity to 4.12

codecov-io commented on issue #3723: [AIRFLOW-2876] Update Tenacity to 4.12

URL: https://github.com/apache/incubator-airflow/pull/3723#issuecomment-411565604

# [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3723?src=pr=h1) Report

> Merging [#3723](https://codecov.io/gh/apache/incubator-airflow/pull/3723?src=pr=desc) into [master](https://codecov.io/gh/apache/incubator-airflow/commit/8b04e20709ebeb41aeefc0c5e3f12d35108ea504?src=pr=desc) will **not change** coverage.
> The diff coverage is `n/a`.

[](https://codecov.io/gh/apache/incubator-airflow/pull/3723?src=pr=tree)

```diff
@@           Coverage Diff           @@
##           master    #3723   +/-   ##
=======================================
  Coverage   77.64%   77.64%
=======================================
  Files         204      204
  Lines       15801    15801
=======================================
  Hits        12268    12268
  Misses       3533     3533
```

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3723?src=pr=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3723?src=pr=footer). Last update [8b04e20...271ea66](https://codecov.io/gh/apache/incubator-airflow/pull/3723?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

[GitHub] codecov-io edited a comment on issue #3658: [AIRFLOW-2524] Add Amazon SageMaker Training

codecov-io edited a comment on issue #3658: [AIRFLOW-2524] Add Amazon SageMaker Training

URL: https://github.com/apache/incubator-airflow/pull/3658#issuecomment-408564225

# [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3658?src=pr=h1) Report

> Merging [#3658](https://codecov.io/gh/apache/incubator-airflow/pull/3658?src=pr=desc) into [master](https://codecov.io/gh/apache/incubator-airflow/commit/096ba9ecd961cdaebd062599f408571ffb21165a?src=pr=desc) will **increase** coverage by `0.52%`.
> The diff coverage is `n/a`.

[](https://codecov.io/gh/apache/incubator-airflow/pull/3658?src=pr=tree)

```diff
@@            Coverage Diff             @@
##           master    #3658      +/-   ##
==========================================
+ Coverage   77.11%   77.63%   +0.52%
==========================================
  Files         206      204       -2
  Lines       15772    15801      +29
==========================================
+ Hits        12162    12267     +105
+ Misses       3610     3534      -76
```

| [Impacted Files](https://codecov.io/gh/apache/incubator-airflow/pull/3658?src=pr=tree) | Coverage Δ | |
|---|---|---|
| [airflow/api/common/experimental/mark\_tasks.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy9hcGkvY29tbW9uL2V4cGVyaW1lbnRhbC9tYXJrX3Rhc2tzLnB5) | `66.92% <0%> (-1.08%)` | :arrow_down: |
| [airflow/hooks/druid\_hook.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9kcnVpZF9ob29rLnB5) | `87.67% <0%> (-1.07%)` | :arrow_down: |
| [airflow/www/app.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvYXBwLnB5) | `99.01% <0%> (-0.99%)` | :arrow_down: |
| [airflow/www/validators.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdmFsaWRhdG9ycy5weQ==) | `100% <0%> (ø)` | :arrow_up: |
| [airflow/operators/hive\_stats\_operator.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvaGl2ZV9zdGF0c19vcGVyYXRvci5weQ==) | `0% <0%> (ø)` | :arrow_up: |
| [airflow/sensors/hdfs\_sensor.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy9zZW5zb3JzL2hkZnNfc2Vuc29yLnB5) | `100% <0%> (ø)` | :arrow_up: |
| [airflow/www/views.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdmlld3MucHk=) | `68.88% <0%> (ø)` | :arrow_up: |
| [airflow/hooks/presto\_hook.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9wcmVzdG9faG9vay5weQ==) | `39.13% <0%> (ø)` | :arrow_up: |
| [airflow/\_\_init\_\_.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy9fX2luaXRfXy5weQ==) | `80.43% <0%> (ø)` | :arrow_up: |
| [airflow/bin/cli.py](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree#diff-YWlyZmxvdy9iaW4vY2xpLnB5) | `64.35% <0%> (ø)` | :arrow_up: |
| ... and [15 more](https://codecov.io/gh/apache/incubator-airflow/pull/3658/diff?src=pr=tree-more) | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3658?src=pr=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3658?src=pr=footer). Last update [096ba9e...2ef4f6f](https://codecov.io/gh/apache/incubator-airflow/pull/3658?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

[jira] [Commented] (AIRFLOW-2876) Bump version of Tenacity

[

https://issues.apache.org/jira/browse/AIRFLOW-2876?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16573904#comment-16573904

]

ASF GitHub Bot commented on AIRFLOW-2876:

-

Fokko opened a new pull request #3723: [AIRFLOW-2876] Update Tenacity to 4.12

URL: https://github.com/apache/incubator-airflow/pull/3723

Tenacity 4.8 is not Python 3.7 compatible because its code uses `async`, a reserved keyword as of Python 3.7, as a module name:

```

[2018-08-08 21:21:22,016] {models.py:366} ERROR - Failed to import: /usr/local/lib/python3.7/site-packages/airflow/example_dags/example_http_operator.py
Traceback (most recent call last):
  File "/usr/local/lib/python3.7/site-packages/airflow/models.py", line 363, in process_file
    m = imp.load_source(mod_name, filepath)
  File "/usr/local/lib/python3.7/imp.py", line 172, in load_source
    module = _load(spec)
  File "<frozen importlib._bootstrap>", line 696, in _load
  File "<frozen importlib._bootstrap>", line 677, in _load_unlocked
  File "<frozen importlib._bootstrap_external>", line 728, in exec_module
  File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
  File "/usr/local/lib/python3.7/site-packages/airflow/example_dags/example_http_operator.py", line 27, in <module>
    from airflow.operators.http_operator import SimpleHttpOperator
  File "/usr/local/lib/python3.7/site-packages/airflow/operators/http_operator.py", line 21, in <module>
    from airflow.hooks.http_hook import HttpHook
  File "/usr/local/lib/python3.7/site-packages/airflow/hooks/http_hook.py", line 23, in <module>
    import tenacity
  File "/usr/local/lib/python3.7/site-packages/tenacity/__init__.py", line 352
    from tenacity.async import AsyncRetrying

```
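The failure in the traceback above can be reproduced without installing Tenacity. Since Python 3.7, `async` is a reserved keyword, so it can no longer appear as a component of a dotted module path in an import statement. The sketch below assumes a Python 3.7 or newer interpreter; the helper names `importable_name` and `parses` are made up for the example.

```python
import keyword


def importable_name(dotted):
    """True if every dotted component is an identifier and not a reserved keyword."""
    return all(
        part.isidentifier() and not keyword.iskeyword(part)
        for part in dotted.split(".")
    )


def parses(source):
    """Return True if the running interpreter can compile the given source."""
    try:
        compile(source, "<example>", "exec")
        return True
    except SyntaxError:
        return False


print(importable_name("tenacity.async"))
print(parses("from tenacity.async import AsyncRetrying"))
```

Because the error is raised while compiling `tenacity/__init__.py`, it cannot be worked around from Airflow's side with a `try`/`except` around a single call; the dependency itself has to ship a module name that is not a keyword, which is why the PR bumps the pinned version.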

Make sure you have checked _all_ steps below.

### Jira

- [x] My PR addresses the following [Airflow Jira](https://issues.apache.org/jira/browse/AIRFLOW/) issues and references them in the PR title. For example, "\[AIRFLOW-XXX\] My Airflow PR"
  - https://issues.apache.org/jira/browse/AIRFLOW-2876
  - In case you are fixing a typo in the documentation you can prepend your commit with \[AIRFLOW-2876\], code changes always need a Jira issue.

### Description

- [x] Here are some details about my PR, including screenshots of any UI changes:

### Tests

- [x] My PR adds the following unit tests __OR__ does not need testing for this extremely good reason:

### Commits

- [x] My commits all reference Jira issues in their subject lines, and I have squashed multiple commits if they address the same issue. In addition, my commits follow the guidelines from "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)":
  1. Subject is separated from body by a blank line
  1. Subject is limited to 50 characters (not including Jira issue reference)
  1. Subject does not end with a period
  1. Subject uses the imperative mood ("add", not "adding")
  1. Body wraps at 72 characters
  1. Body explains "what" and "why", not "how"

### Documentation

- [x] In case of new functionality, my PR adds documentation that describes how to use it.
  - When adding new operators/hooks/sensors, the autoclass documentation generation needs to be added.

### Code Quality

- [x] Passes `git diff upstream/master -u -- "*.py" | flake8 --diff`

> Bump version of Tenacity
>
> Key: AIRFLOW-2876
> URL: https://issues.apache.org/jira/browse/AIRFLOW-2876
> Project: Apache Airflow
> Issue Type: Bug
> Reporter: Fokko Driesprong
> Priority: Major
>
> Since 4.8.0 is not Python 3.7 compatible, we want to bump the version to 4.12.0

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] Noremac201 commented on issue #3648: [AIRFLOW-2786] Fix editing Variable with empty key crashing

Noremac201 commented on issue #3648: [AIRFLOW-2786] Fix editing Variable with empty key crashing

URL: https://github.com/apache/incubator-airflow/pull/3648#issuecomment-411556954

The tests are passing now. However, instead of checking the POST response webpage, the test checks the variables in the database.

[GitHub] codecov-io edited a comment on issue #3722: [AIRFLOW-2759] Add changes to extract proxy details at the base hook …

codecov-io edited a comment on issue #3722: [AIRFLOW-2759] Add changes to extract proxy details at the base hook …

URL: https://github.com/apache/incubator-airflow/pull/3722#issuecomment-411556404

# [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=h1) Report

> Merging [#3722](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=desc) into [master](https://codecov.io/gh/apache/incubator-airflow/commit/8b04e20709ebeb41aeefc0c5e3f12d35108ea504?src=pr=desc) will **decrease** coverage by `0.14%`.
> The diff coverage is `46.15%`.

```diff
@@            Coverage Diff             @@
##           master    #3722      +/-   ##
==========================================
- Coverage   77.64%   77.49%   -0.15%
==========================================
  Files         204      204
  Lines       15801    15826      +25
==========================================
- Hits        12268    12264       -4
- Misses       3533     3562      +29
```

| [Impacted Files](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=tree) | Coverage Δ | |
|---|---|---|
| [airflow/hooks/base\_hook.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9iYXNlX2hvb2sucHk=) | `76.56% <15.38%> (-15.6%)` | :arrow_down: |
| [airflow/utils/helpers.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9oZWxwZXJzLnB5) | `71.59% <76.92%> (+0.24%)` | :arrow_up: |
| [airflow/utils/sqlalchemy.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9zcWxhbGNoZW15LnB5) | `71.42% <0%> (-10%)` | :arrow_down: |
| [airflow/utils/dag\_processing.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9kYWdfcHJvY2Vzc2luZy5weQ==) | `88.6% <0%> (-0.85%)` | :arrow_down: |
| [airflow/jobs.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9qb2JzLnB5) | `82.49% <0%> (-0.27%)` | :arrow_down: |
| [airflow/www/views.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdmlld3MucHk=) | `68.76% <0%> (-0.13%)` | :arrow_down: |
| [airflow/models.py](https://codecov.io/gh/apache/incubator-airflow/pull/3722/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMucHk=) | `88.78% <0%> (-0.05%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=footer). Last update [8b04e20...5aaf8ea](https://codecov.io/gh/apache/incubator-airflow/pull/3722?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

[jira] [Created] (AIRFLOW-2876) Bump version of Tenacity

Fokko Driesprong created AIRFLOW-2876:

Summary: Bump version of Tenacity
Key: AIRFLOW-2876
URL: https://issues.apache.org/jira/browse/AIRFLOW-2876
Project: Apache Airflow
Issue Type: Bug
Reporter: Fokko Driesprong

Since 4.8.0 is not Python 3.7 compatible, we want to bump the version to 4.12.0

[GitHub] codecov-io edited a comment on issue #3648: [AIRFLOW-2786] Fix editing Variable with empty key crashing

codecov-io edited a comment on issue #3648: [AIRFLOW-2786] Fix editing Variable with empty key crashing

URL: https://github.com/apache/incubator-airflow/pull/3648#issuecomment-408263467

# [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3648?src=pr=h1) Report

> Merging [#3648](https://codecov.io/gh/apache/incubator-airflow/pull/3648?src=pr=desc) into [master](https://codecov.io/gh/apache/incubator-airflow/commit/8b04e20709ebeb41aeefc0c5e3f12d35108ea504?src=pr=desc) will **increase** coverage by `<.01%`.
> The diff coverage is `86.66%`.

```diff
@@            Coverage Diff             @@
##           master    #3648      +/-   ##
==========================================
+ Coverage   77.64%   77.64%   +<.01%
==========================================
  Files         204      204
  Lines       15801    15825      +24
==========================================
+ Hits        12268    12287      +19
- Misses       3533     3538       +5
```

| [Impacted Files](https://codecov.io/gh/apache/incubator-airflow/pull/3648?src=pr=tree) | Coverage Δ | |
|---|---|---|
| [airflow/www\_rbac/utils.py](https://codecov.io/gh/apache/incubator-airflow/pull/3648/diff?src=pr=tree#diff-YWlyZmxvdy93d3dfcmJhYy91dGlscy5weQ==) | `67.1% <100%> (+0.88%)` | :arrow_up: |
| [airflow/www/views.py](https://codecov.io/gh/apache/incubator-airflow/pull/3648/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdmlld3MucHk=) | `69.04% <100%> (+0.15%)` | :arrow_up: |
| [airflow/www/utils.py](https://codecov.io/gh/apache/incubator-airflow/pull/3648/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdXRpbHMucHk=) | `88.81% <100%> (+0.28%)` | :arrow_up: |
| [airflow/www\_rbac/views.py](https://codecov.io/gh/apache/incubator-airflow/pull/3648/diff?src=pr=tree#diff-YWlyZmxvdy93d3dfcmJhYy92aWV3cy5weQ==) | `72.72% <60%> (-0.14%)` | :arrow_down: |
| [airflow/models.py](https://codecov.io/gh/apache/incubator-airflow/pull/3648/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMucHk=) | `88.78% <0%> (-0.05%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3648?src=pr=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3648?src=pr=footer). Last update [8b04e20...e8669f1](https://codecov.io/gh/apache/incubator-airflow/pull/3648?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

[GitHub] tedmiston commented on a change in pull request #3703: [AIRFLOW-2857] Fix broken RTD env

tedmiston commented on a change in pull request #3703: [AIRFLOW-2857] Fix broken RTD env

URL: https://github.com/apache/incubator-airflow/pull/3703#discussion_r208734436

## File path: setup.py ##

@@ -161,6 +164,7 @@ def write_version(filename=os.path.join(*['airflow',
 databricks = ['requests>=2.5.1, <3']
 datadog = ['datadog>=0.14.0']
 doc = [
+'mock',

Review comment: @Fokko What Kaxil found is consistent with what I saw as well. There's a bit more info on it in (1) in my comment here https://github.com/apache/incubator-airflow/pull/3703#issuecomment-410836135.

[jira] [Commented] (AIRFLOW-2759) Simplify proxy server based access to external platforms like Google cloud

[ https://issues.apache.org/jira/browse/AIRFLOW-2759?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16573840#comment-16573840 ]

ASF GitHub Bot commented on AIRFLOW-2759:

-

amohan34 opened a new pull request #3722: [AIRFLOW-2759] Add changes to extract proxy details at the base hook …

URL: https://github.com/apache/incubator-airflow/pull/3722

…level

Make sure you have checked _all_ steps below.

### Jira

- [x] My PR addresses the following [Airflow Jira](https://issues.apache.org/jira/browse/AIRFLOW/) issues and references them in the PR title. For example, "\[AIRFLOW-2759\] My Airflow PR"
  - https://issues.apache.org/jira/browse/AIRFLOW-2759
  - In case you are fixing a typo in the documentation you can prepend your commit with \[AIRFLOW-2759\], code changes always need a Jira issue.

### Description

- [x] Here are some details about my PR, including screenshots of any UI changes:

### Tests

- [x] My PR adds the following unit tests __OR__ does not need testing for this extremely good reason:

To test, run the following command within tests/contrib/hooks:

`nosetests test_gcp_api_base_hook_proxy.py --with-coverage --cover-package=airflow.contrib.hooks.gcp_api_base_hook`

### Commits

- [x] My commits all reference Jira issues in their subject lines, and I have squashed multiple commits if they address the same issue. In addition, my commits follow the guidelines from "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)":
  1. Subject is separated from body by a blank line
  1. Subject is limited to 50 characters (not including Jira issue reference)
  1. Subject does not end with a period
  1. Subject uses the imperative mood ("add", not "adding")
  1. Body wraps at 72 characters
  1. Body explains "what" and "why", not "how"

### Documentation

- [x] In case of new functionality, my PR adds documentation that describes how to use it.
  - When adding new operators/hooks/sensors, the autoclass documentation generation needs to be added.

### Code Quality

- [x] Passes `git diff upstream/master -u -- "*.py" | flake8 --diff`

> Simplify proxy server based access to external platforms like Google cloud
> ---
>
> Key: AIRFLOW-2759
> URL: https://issues.apache.org/jira/browse/AIRFLOW-2759
> Project: Apache Airflow
> Issue Type: Improvement
> Components: hooks
> Reporter: Aishwarya Mohan
> Assignee: Aishwarya Mohan
> Priority: Major
> Labels: hooks, proxy
>
> Several companies adopt a proxy-server-based approach to provide an additional layer of security when communicating with external platforms, so that the legitimacy of caller and callee can be established. A potential use case would be writing logs from Airflow to a cloud storage platform like Google Cloud via an intermediary proxy server.
> In the current scenario the proxy details need to be hardcoded and passed to the HTTP client library (httplib2) in the GoogleCloudBaseHook class (gcp_api_base_hook.py). It would be convenient if the proxy details (for example, host and port) could be extracted from the Airflow configuration file as opposed to hardcoding the details at hook level.
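The request in the issue description, reading the proxy host and port from a configuration file rather than hardcoding them in the hook, can be sketched with the standard library alone. This is only an illustration under stated assumptions: the `[proxy]` section, its `host` and `port` keys, and the function `read_proxy_settings` are hypothetical and are not taken from the pull request or from Airflow's actual configuration.

```python
import configparser

# Hypothetical config layout: the [proxy] section and its keys are assumptions
# made for this sketch, not options defined by Airflow.
EXAMPLE_CFG = """
[proxy]
host = proxy.example.com
port = 3128
"""


def read_proxy_settings(cfg_text):
    """Return (host, port) from a [proxy] section, or None if the section is absent."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    if not parser.has_section("proxy"):
        return None
    host = parser.get("proxy", "host")
    port = parser.getint("proxy", "port")  # converts the string value to int
    return host, port


if __name__ == "__main__":
    print(read_proxy_settings(EXAMPLE_CFG))
    print(read_proxy_settings("[core]\n"))
```

A hook could then pass the returned pair to whatever proxy setting its HTTP client accepts, and fall back to a direct connection when the function returns `None`.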

[GitHub] bolkedebruin commented on issue #3560: [AIRFLOW-2697] Drop snakebite in favour of hdfs3

bolkedebruin commented on issue #3560: [AIRFLOW-2697] Drop snakebite in favour of hdfs3

URL: https://github.com/apache/incubator-airflow/pull/3560#issuecomment-411509935

Of course! But the ball needs to get rolling too

[GitHub] Fokko commented on issue #3560: [AIRFLOW-2697] Drop snakebite in favour of hdfs3

Fokko commented on issue #3560: [AIRFLOW-2697] Drop snakebite in favour of hdfs3

URL: https://github.com/apache/incubator-airflow/pull/3560#issuecomment-411506325

@bolkedebruin My preference would be to get rid of the deprecation warnings and target it for Apache Airflow 2.0

[GitHub] feng-tao commented on a change in pull request #3714: [AIRFLOW-2867] Refactor code to conform Python standards & guidelines

feng-tao commented on a change in pull request #3714: [AIRFLOW-2867] Refactor code to conform Python standards & guidelines

URL: https://github.com/apache/incubator-airflow/pull/3714#discussion_r208683154

## File path: airflow/contrib/operators/mongo_to_s3.py ##

@@ -105,7 +106,8 @@ def _stringify(self, iterable, joinable='\n'):
 [json.dumps(doc, default=json_util.default) for doc in iterable]
 )
-def transform(self, docs):
+@staticmethod

Review comment: +1, I would also prefer static methods above instance methods
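The change under review turns a method that never touches instance state into a static method. A small sketch of that pattern follows, assuming a hypothetical class `DocExporter`; it is not the operator from the pull request, and only the method names loosely mirror the diff.

```python
import json


class DocExporter:
    """Hypothetical stand-in for an operator; names are illustrative only."""

    @staticmethod
    def transform(docs):
        # Uses no instance state, so it does not need `self`.
        return list(docs)

    def stringify(self, docs, joinable="\n"):
        # Instance methods can still call the static method through `self`.
        return joinable.join(json.dumps(doc) for doc in self.transform(docs))


if __name__ == "__main__":
    # Callable on the class itself, without constructing an instance.
    print(DocExporter.transform(({"a": 1}, {"b": 2})))
    print(DocExporter().stringify([{"a": 1}, {"b": 2}]))
```

Subclasses can still override a static method, so callers that go through `self.transform(...)` keep working after the change.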

[jira] [Commented] (AIRFLOW-2856) Update Docker Env Setup Docs to Account for verify_gpl_dependency()

[ https://issues.apache.org/jira/browse/AIRFLOW-2856?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16573627#comment-16573627 ]

ASF subversion and git services commented on AIRFLOW-2856:

Commit 55200b48bbc0bf86db72c2b7eab01109bb98102f in incubator-airflow's branch refs/heads/v1-10-stable from [~ajc]

[ https://gitbox.apache.org/repos/asf?p=incubator-airflow.git;h=55200b4 ]

[AIRFLOW-2856] Pass in SLUGIFY_USES_TEXT_UNIDECODE=yes ENV to docker run (#3701)

(cherry picked from commit 8687ab9271b7b93473584a720f225f20fa9a7aa4)
Signed-off-by: Bolke de Bruin
(cherry picked from commit 3670d49a4c5da4769be1df5b4f8ef3504b74a184)
Signed-off-by: Bolke de Bruin

> Update Docker Env Setup Docs to Account for verify_gpl_dependency()
>
> Key: AIRFLOW-2856
> URL: https://issues.apache.org/jira/browse/AIRFLOW-2856
> Project: Apache Airflow
> Issue Type: Improvement
> Reporter: Andy Cooper
> Assignee: Andy Cooper
> Priority: Major

[jira] [Commented] (AIRFLOW-2869) Remove smart quote from default config

[ https://issues.apache.org/jira/browse/AIRFLOW-2869?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16573623#comment-16573623 ]

ASF subversion and git services commented on AIRFLOW-2869:

Commit 26f6faed690846c963f90fe06458b3711d841e25 in incubator-airflow's branch refs/heads/v1-10-stable from [~wdhorton]

[ https://gitbox.apache.org/repos/asf?p=incubator-airflow.git;h=26f6fae ]

[AIRFLOW-2869] Remove smart quote from default config

Closes #3716 from wdhorton/remove-smart-quote-from-cfg

(cherry picked from commit 67e2bb96cdc5ea37226d11332362d3bd3778cea0)
Signed-off-by: Bolke de Bruin
(cherry picked from commit 700f5f088dbead866170c9a3fe7e021e86ab30bb)
Signed-off-by: Bolke de Bruin

> Remove smart quote from default config
>
> Key: AIRFLOW-2869
> URL: https://issues.apache.org/jira/browse/AIRFLOW-2869
> Project: Apache Airflow
> Issue Type: Improvement
> Reporter: Siddharth Anand
> Assignee: Siddharth Anand
> Priority: Trivial
> Fix For: 2.0.0

[jira] [Commented] (AIRFLOW-2859) DateTimes returned from the database are not converted to UTC

[ https://issues.apache.org/jira/browse/AIRFLOW-2859?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16573625#comment-16573625 ]

ASF subversion and git services commented on AIRFLOW-2859:

--

Commit a6d5ee9ce57bcdd22dde3fdf00e3b1cd26274ede in incubator-airflow's branch

refs/heads/v1-10-stable from bolkedebruin

[ https://gitbox.apache.org/repos/asf?p=incubator-airflow.git;h=a6d5ee9 ]

[AIRFLOW-2859] Implement own UtcDateTime (#3708)

The different UtcDateTime implementations all have issues.

Either they replace tzinfo directly without converting

or they do not convert to UTC at all.

We also ensure all mysql connections are in UTC

in order to keep sanity, as mysql will ignore the

timezone of a field when inserting/updating.

(cherry picked from commit 6fd4e6055e36e9867923b0b402363fcd8c30e297)

Signed-off-by: Bolke de Bruin

(cherry picked from commit 8fc8c7ae5483c002f5264b087b26a20fd8ae7b67)

Signed-off-by: Bolke de Bruin

> DateTimes returned from the database are not converted to UTC

> -

>

> Key: AIRFLOW-2859

> URL: https://issues.apache.org/jira/browse/AIRFLOW-2859

> Project: Apache Airflow

> Issue Type: Bug

> Components: database

>Reporter: Bolke de Bruin

>Priority: Blocker

> Fix For: 1.10.0

>

>

> This is due to the fact that sqlalchemy-utcdatetime does not convert to UTC

> when the database returns datetimes with tzinfo.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
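
The AIRFLOW-2859 commit message above distinguishes replacing tzinfo from actually converting to UTC. The sketch below illustrates that difference with the standard library only; it is not the UtcDateTime type from the commit, and the sample value `from_db` and the `cest` offset are assumptions made up for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical value, as a database driver might return it:
# 12:00 wall-clock time at UTC+02:00.
cest = timezone(timedelta(hours=2))
from_db = datetime(2018, 8, 8, 12, 0, tzinfo=cest)

# Replacing tzinfo only swaps the label and keeps the wall-clock
# time, so the result denotes a different instant (12:00 UTC).
relabelled = from_db.replace(tzinfo=timezone.utc)

# astimezone() converts the instant itself, giving 10:00 UTC.
converted = from_db.astimezone(timezone.utc)

print(relabelled.isoformat())
print(converted.isoformat())
```

Here `converted` compares equal to `from_db` because both name the same instant, while `relabelled` is two hours later.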

[jira] [Commented] (AIRFLOW-2856) Update Docker Env Setup Docs to Account for verify_gpl_dependency()

[ https://issues.apache.org/jira/browse/AIRFLOW-2856?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16573619#comment-16573619 ]

ASF subversion and git services commented on AIRFLOW-2856:

--

Commit 3670d49a4c5da4769be1df5b4f8ef3504b74a184 in incubator-airflow's branch

refs/heads/v1-10-test from [~ajc]

[ https://gitbox.apache.org/repos/asf?p=incubator-airflow.git;h=3670d49 ]

[AIRFLOW-2856] Pass in SLUGIFY_USES_TEXT_UNIDECODE=yes ENV to docker run (#3701)

(cherry picked from commit 8687ab9271b7b93473584a720f225f20fa9a7aa4)

Signed-off-by: Bolke de Bruin

> Update Docker Env Setup Docs to Account for verify_gpl_dependency()

> ---

>

> Key: AIRFLOW-2856

> URL: https://issues.apache.org/jira/browse/AIRFLOW-2856

> Project: Apache Airflow

> Issue Type: Improvement

>Reporter: Andy Cooper

>Assignee: Andy Cooper

>Priority: Major

>

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (AIRFLOW-2870) Migrations fail when upgrading from below cc1e65623dc7_add_max_tries_column_to_task_instance

[

https://issues.apache.org/jira/browse/AIRFLOW-2870?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16573618#comment-16573618

]

ASF subversion and git services commented on AIRFLOW-2870:

--

Commit 95aa49a71dcc69d2e9a8e32b69a2a61cacec2b1b in incubator-airflow's branch

refs/heads/v1-10-test from bolkedebruin

[ https://gitbox.apache.org/repos/asf?p=incubator-airflow.git;h=95aa49a ]

[AIRFLOW-2870] Use abstract TaskInstance for migration (#3720)

If we use the full model for migration it can have columns

added that are not available yet in the database. Using

an abstraction ensures only the columns that are required

for data migration are present.

(cherry picked from commit 546f1cdb5208ba8e1cf3bde36bbdbb639fa20b22)

Signed-off-by: Bolke de Bruin

> Migrations fail when upgrading from below

> cc1e65623dc7_add_max_tries_column_to_task_instance

>

>

> Key: AIRFLOW-2870

> URL: https://issues.apache.org/jira/browse/AIRFLOW-2870

> Project: Apache Airflow

> Issue Type: Bug

>Reporter: George Leslie-Waksman

>Priority: Blocker

>

> Running migrations from below

> cc1e65623dc7_add_max_tries_column_to_task_instance.py fail with:

> {noformat}

> INFO [alembic.runtime.migration] Context impl PostgresqlImpl.

> INFO [alembic.runtime.migration] Will assume transactional DDL.

> INFO [alembic.runtime.migration] Running upgrade 127d2bf2dfa7 ->

> cc1e65623dc7, add max tries column to task instance

> Traceback (most recent call last):

> File "/usr/local/lib/python3.6/site-packages/sqlalchemy/engine/base.py",

> line 1182, in _execute_context

> context)

> File "/usr/local/lib/python3.6/site-packages/sqlalchemy/engine/default.py",

> line 470, in do_execute

> cursor.execute(statement, parameters)

> psycopg2.ProgrammingError: column task_instance.executor_config does not exist

> LINE 1: ...ued_dttm, task_instance.pid AS task_instance_pid, task_insta...

> {noformat}

> The failure is occurring because

> cc1e65623dc7_add_max_tries_column_to_task_instance.py imports TaskInstance

> from the current code version, which has changes to the task_instance table

> that are not expected by the migration.

> Specifically, 27c6a30d7c24_add_executor_config_to_task_instance.py adds an

> executor_config column that does not exist as of when

> cc1e65623dc7_add_max_tries_column_to_task_instance.py is run.

> It is worth noting that this will not be observed for new installs because

> the migration branches on table existence/non-existence at a point that will

> hide the issue from new installs.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
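
The AIRFLOW-2870 report above comes down to a query that names a column the old schema does not have yet. The sketch below reproduces that failure mode and the fix (selecting only the columns the migration needs) with the standard-library sqlite3 module as a stand-in; it does not use SQLAlchemy or Alembic, and the column lists and helper names are assumptions invented for illustration, not code from the commit.

```python
import sqlite3

# Hypothetical column lists standing in for ORM models.
# The "full" list mirrors the current code, which already
# knows about executor_config; the "migration" list declares
# only what the data migration actually reads.
FULL_MODEL_COLUMNS = ["task_id", "try_number", "executor_config"]
MIGRATION_COLUMNS = ["task_id", "try_number"]


def make_old_schema():
    """Create a database whose schema predates executor_config."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE task_instance (task_id TEXT, try_number INTEGER)")
    conn.execute("INSERT INTO task_instance VALUES ('t1', 1)")
    return conn


def select_columns(conn, columns):
    """Emulate an ORM query that selects every declared column."""
    query = "SELECT {} FROM task_instance".format(", ".join(columns))
    return conn.execute(query).fetchall()


conn = make_old_schema()

try:
    select_columns(conn, FULL_MODEL_COLUMNS)
    full_model_ok = True
except sqlite3.OperationalError:
    # The old schema has no executor_config column yet.
    full_model_ok = False

# The reduced column list works against the old schema.
rows = select_columns(conn, MIGRATION_COLUMNS)
```

The same idea carries over to an ORM: a model declared inside the migration with only the required columns never asks the database for columns added by later revisions.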

[GitHub] troychen728 commented on a change in pull request #3658: [AIRFLOW-2524] Add Amazon SageMaker Training

troychen728 commented on a change in pull request #3658: [AIRFLOW-2524] Add

Amazon SageMaker Training

URL: https://github.com/apache/incubator-airflow/pull/3658#discussion_r208672024

##

File path: airflow/contrib/hooks/sagemaker_hook.py

##

@@ -0,0 +1,239 @@

+# -*- coding: utf-8 -*-

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+import copy

+import time

+from botocore.exceptions import ClientError

+

+from airflow.exceptions import AirflowException

+from airflow.contrib.hooks.aws_hook import AwsHook

+from airflow.hooks.S3_hook import S3Hook

+

+

+class SageMakerHook(AwsHook):

+"""

+Interact with Amazon SageMaker.

+sagemaker_conn_id is required for using

+the config stored in db for training/tuning

+"""

+

+def __init__(self,

+ sagemaker_conn_id=None,

+ use_db_config=False,

+ region_name=None,

+ check_interval=5,

+ max_ingestion_time=None,

+ *args, **kwargs):

+super(SageMakerHook, self).__init__(*args, **kwargs)

+self.sagemaker_conn_id = sagemaker_conn_id

+self.use_db_config = use_db_config

+self.region_name = region_name

+self.check_interval = check_interval

+self.max_ingestion_time = max_ingestion_time

+self.conn = self.get_conn()

+

+def check_for_url(self, s3url):

+"""

+check if the s3url exists

+:param s3url: S3 url

+:type s3url:str

+:return: bool

+"""

+bucket, key = S3Hook.parse_s3_url(s3url)

+s3hook = S3Hook(aws_conn_id=self.aws_conn_id)

+if not s3hook.check_for_bucket(bucket_name=bucket):

+raise AirflowException(

+"The input S3 Bucket {} does not exist ".format(bucket))

+if not s3hook.check_for_key(key=key, bucket_name=bucket):

+raise AirflowException("The input S3 Key {} does not exist in the Bucket"

+ .format(s3url, bucket))

+return True

+

+def check_valid_training_input(self, training_config):

+"""

+Run checks before a training starts

+:param config: training_config

+:type config: dict

+:return: None

+"""

+for channel in training_config['InputDataConfig']:

+self.check_for_url(channel['DataSource']

+ ['S3DataSource']['S3Uri'])

+

+def check_valid_tuning_input(self, tuning_config):

+"""

+Run checks before a tuning job starts

+:param config: tuning_config

+:type config: dict

+:return: None

+"""

+for channel in tuning_config['TrainingJobDefinition']['InputDataConfig']:

+self.check_for_url(channel['DataSource']

+ ['S3DataSource']['S3Uri'])

+

+def check_status(self, non_terminal_states,

+ failed_state, key,

+ describe_function, *args):

+"""

+:param non_terminal_states: the set of non_terminal states

+:type non_terminal_states: dict

+:param failed_state: the set of failed states

+:type failed_state: dict

+:param key: the key of the response dict

+that points to the state

+:type key: string

+:param describe_function: the function used to retrieve the status

+:type describe_function: python callable

+:param args: the arguments for the function

+:return: None

+"""

+sec = 0

+running = True

+

+while running:

+

+sec = sec + self.check_interval

+

+if self.max_ingestion_time and sec > self.max_ingestion_time:

+# ensure that the job gets killed if the max ingestion time is exceeded

+raise AirflowException("SageMaker job took more than "

+ "%s seconds", self.max_ingestion_time)

+

+time.sleep(self.check_interval)

+try:

+status = describe_function(*args)[key]

+self.log.info("Job still running for %s seconds... "

+ "current status is %s" % (sec, status))

+
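
The quoted hunk is cut off inside check_status, so the rest of the loop is not visible here. As a rough, standalone sketch of the polling pattern it describes (poll a describe call, stop on a terminal or failed state, give up after a maximum wait), something like the following could work. This is not the PR's implementation: `wait_for_status`, `JobTimeout`, the `sleep` parameter and the status strings are all hypothetical names chosen for illustration.

```python
import time


class JobTimeout(Exception):
    """Hypothetical stand-in for AirflowException in this sketch."""


def wait_for_status(describe, key, non_terminal, failed,
                    check_interval=5, max_wait=None, sleep=time.sleep):
    """Poll describe() until the job leaves the non-terminal states.

    describe is any zero-argument callable returning a dict;
    key names the entry of that dict holding the status.
    """
    waited = 0
    while True:
        status = describe()[key]
        if status in failed:
            raise RuntimeError(
                "Job failed with status {}".format(status))
        if status not in non_terminal:
            return status
        if max_wait is not None and waited >= max_wait:
            # Exceptions do not interpolate logging-style arguments,
            # so the message is formatted before raising.
            raise JobTimeout(
                "Job took more than {} seconds".format(max_wait))
        sleep(check_interval)
        waited += check_interval


# Usage with a fake describe function and a no-op sleep.
fake_states = iter(["InProgress", "InProgress", "Completed"])
final = wait_for_status(lambda: {"Status": next(fake_states)},
                        "Status", {"InProgress"}, {"Failed"},
                        check_interval=1, sleep=lambda seconds: None)
```

Passing `sleep` as a parameter is only a convenience so the loop can be exercised without real delays.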

[GitHub] troychen728 commented on a change in pull request #3658: [AIRFLOW-2524] Add Amazon SageMaker Training

troychen728 commented on a change in pull request #3658: [AIRFLOW-2524] Add

Amazon SageMaker Training

URL: https://github.com/apache/incubator-airflow/pull/3658#discussion_r208671964

##

File path: airflow/contrib/hooks/sagemaker_hook.py

##

@@ -0,0 +1,239 @@

+# -*- coding: utf-8 -*-

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+import copy

+import time

+from botocore.exceptions import ClientError

+

+from airflow.exceptions import AirflowException

+from airflow.contrib.hooks.aws_hook import AwsHook

+from airflow.hooks.S3_hook import S3Hook

+

+

+class SageMakerHook(AwsHook):

+"""

+Interact with Amazon SageMaker.

+sagemaker_conn_id is required for using

+the config stored in db for training/tuning

+"""

+

+def __init__(self,

+ sagemaker_conn_id=None,

+ use_db_config=False,

+ region_name=None,

+ check_interval=5,

+ max_ingestion_time=None,

+ *args, **kwargs):

+super(SageMakerHook, self).__init__(*args, **kwargs)

+self.sagemaker_conn_id = sagemaker_conn_id

+self.use_db_config = use_db_config