Zhangg7723 opened a new pull request, #5100:

URL: https://github.com/apache/iceberg/pull/5100

**Purpose**

V2 tables support equality deletes for row-level deletes. When a rewrite or query job runs, the delete rows are loaded into memory, which can cause OOM in real scenarios with many delete rows, especially in Flink upsert mode. As issue #4312 mentioned, Flink rewrite jobs failed with out-of-memory exceptions, and the same happens with Spark or any other engine that supports v2 tables: the delete rows held in a hash set occupy most of the heap.
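To make the memory problem concrete, here is a minimal Python sketch of applying equality deletes by materializing every delete key in a hash set before scanning data rows. It is a simplified model, not Iceberg's actual reader code; the function name `apply_equality_deletes` and the dict-based row representation are hypothetical.

```python
from typing import Dict, Iterable, Iterator, List, Set, Tuple

# Hypothetical row representation: column name -> value.
Row = Dict[str, object]


def apply_equality_deletes(
    data_rows: Iterable[Row], delete_rows: Iterable[Row], eq_fields: List[str]
) -> Iterator[Row]:
    # Every delete key is materialized in memory before any data row is read;
    # with tens of millions of deletes, this set dominates the heap.
    deleted: Set[Tuple[object, ...]] = {
        tuple(d[f] for f in eq_fields) for d in delete_rows
    }
    for row in data_rows:
        # Emit only rows whose equality-field values were not deleted.
        if tuple(row[f] for f in eq_fields) not in deleted:
            yield row
```

The set's size grows with the total number of delete rows, regardless of how many of them can actually match the data file being read.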

**Goal**

Reduce the number of delete rows loaded into memory and improve the performance of delete compaction by using bloom filters. This PR covers only the Parquet format, building on @huaxingao's pull request #4831 that added Parquet bloom filter support (thanks!); we are working on the ORC format.

**How**

<img width="377" alt="image" src="https://user-images.githubusercontent.com/18146312/174741477-02a9fcae-dade-494c-803e-bcd2cb45c61a.png">

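Before reading the equality delete data, load the bloom filter of the current data file, then drop the delete rows whose equality values the bloom filter reports as absent from this data file. Only deletes that may match rows in the file are kept in memory.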
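The pre-filtering step can be sketched in Python as follows. This is an illustration under stated assumptions: `SimpleBloomFilter` is a hypothetical toy bloom filter (SHA-256 with double hashing), not Parquet's split-block bloom filter, and `filter_delete_keys` is a hypothetical helper rather than the API added in this PR.

```python
import hashlib
from typing import Iterable, Iterator, List


class SimpleBloomFilter:
    """Hypothetical stand-in for a data file's bloom filter. Parquet actually
    uses a split-block bloom filter with xxHash, which this does not reproduce."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, value: str) -> Iterator[int]:
        # Derive k bit positions from one SHA-256 digest (double hashing).
        digest = hashlib.sha256(value.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "little")
        h2 = int.from_bytes(digest[8:16], "little") | 1
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, value: str) -> None:
        for pos in self._positions(value):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, value: str) -> bool:
        # False means "definitely absent"; True means "possibly present".
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(value)
        )


def filter_delete_keys(
    delete_keys: Iterable[str], data_file_filter: SimpleBloomFilter
) -> List[str]:
    # Keep only delete keys that may occur in this data file; everything the
    # filter rules out never reaches the in-memory delete set.
    return [key for key in delete_keys if data_file_filter.might_contain(key)]
```

Because a bloom filter has no false negatives, no delete that matches a row in the file is dropped; a false positive only means an irrelevant delete row is still kept in memory.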

**Verification**

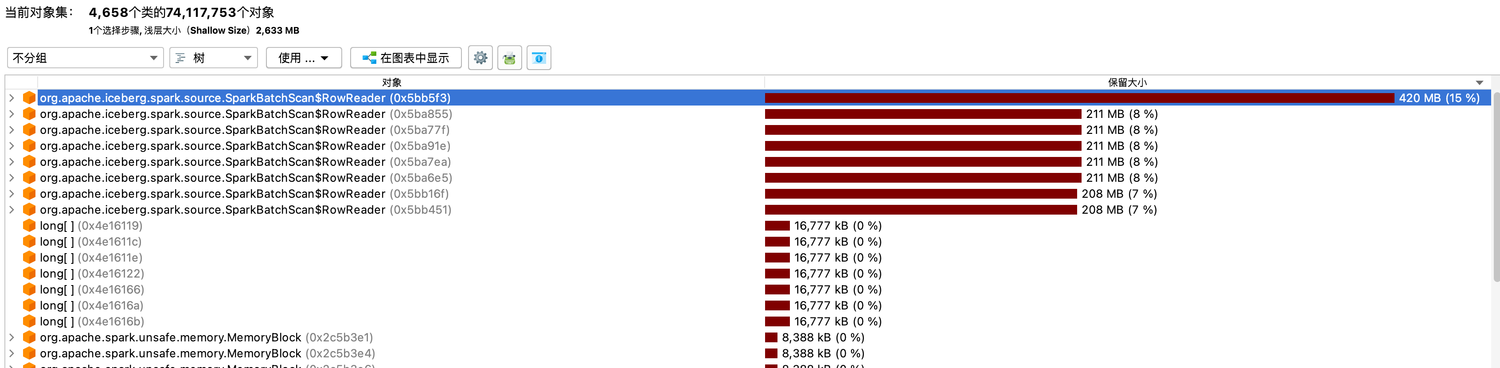

We verified the performance improvement with a test case.

Environment:

- Job: Spark rewrite job with 300 million data rows and 30 million delete rows
- Executor count: 2
- Executor memory: 2 GB
- Executor cores: 8

Before optimization:

<img width="666" alt="image" src="https://user-images.githubusercontent.com/18146312/174747923-a3fb0452-8dab-447b-89ba-2e788a91ece4.png">

The JVM ran full GC frequently, and the job eventually failed.

After optimization:

<img width="666" alt="image" src="https://user-images.githubusercontent.com/18146312/174748078-8763ad9d-d90a-4c49-933b-fc587c0d5610.png">

The memory pressure was clearly reduced, and the job finished successfully.
