(spark-docker) branch master updated: [SPARK-47206][FOLLOWUP] Fix wrong path version

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 4f2d96a [SPARK-47206][FOLLOWUP] Fix wrong path version

4f2d96a is described below

commit 4f2d96a415c89cfe0fde89a55e9034d095224c94

Author: Yikun Jiang

AuthorDate: Thu Feb 29 09:49:01 2024 +0800

[SPARK-47206][FOLLOWUP] Fix wrong path version

### What changes were proposed in this pull request?

Fix the wrong version in the `path` of a `versions.json` entry (`3.5.0/...` changed to `3.5.1/...`).

### Why are the changes needed?

This will be used by https://github.com/docker-library/official-images .

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

```

$ tools/manifest.py manifest

Maintainers: Apache Spark Developers (ApacheSpark)

GitRepo: https://github.com/apache/spark-docker.git

Tags: 3.5.1-scala2.12-java17-python3-ubuntu, 3.5.1-java17-python3, 3.5.1-java17, python3-java17

Architectures: amd64, arm64v8

GitCommit: 8b4329162bbbd1ce5c9d885a1edcd6d61ebcc676

Directory: ./3.5.1/scala2.12-java17-python3-ubuntu

Tags: 3.5.1-scala2.12-java17-r-ubuntu, 3.5.1-java17-r

Architectures: amd64, arm64v8

GitCommit: 8b4329162bbbd1ce5c9d885a1edcd6d61ebcc676

Directory: ./3.5.1/scala2.12-java17-r-ubuntu

Tags: 3.5.1-scala2.12-java17-ubuntu, 3.5.1-java17-scala

Architectures: amd64, arm64v8

GitCommit: 8b4329162bbbd1ce5c9d885a1edcd6d61ebcc676

Directory: ./3.5.1/scala2.12-java17-ubuntu

Tags: 3.5.1-scala2.12-java17-python3-r-ubuntu

Architectures: amd64, arm64v8

GitCommit: 8b4329162bbbd1ce5c9d885a1edcd6d61ebcc676

Directory: ./3.5.1/scala2.12-java17-python3-r-ubuntu

Tags: 3.5.1-scala2.12-java11-python3-ubuntu, 3.5.1-python3, 3.5.1, python3, latest

Architectures: amd64, arm64v8

GitCommit: 8b4329162bbbd1ce5c9d885a1edcd6d61ebcc676

Directory: ./3.5.1/scala2.12-java11-python3-ubuntu

Tags: 3.5.1-scala2.12-java11-r-ubuntu, 3.5.1-r, r

Architectures: amd64, arm64v8

GitCommit: 8b4329162bbbd1ce5c9d885a1edcd6d61ebcc676

Directory: ./3.5.1/scala2.12-java11-r-ubuntu

Tags: 3.5.1-scala2.12-java11-ubuntu, 3.5.1-scala, scala

Architectures: amd64, arm64v8

GitCommit: 8b4329162bbbd1ce5c9d885a1edcd6d61ebcc676

Directory: ./3.5.1/scala2.12-java11-ubuntu

Tags: 3.5.1-scala2.12-java11-python3-r-ubuntu

Architectures: amd64, arm64v8

GitCommit: 8b4329162bbbd1ce5c9d885a1edcd6d61ebcc676

Directory: ./3.5.1/scala2.12-java11-python3-r-ubuntu

```
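The fix only touches the `path` of one `versions.json` entry: it pointed at `3.5.0/...` while every versioned tag in the same entry starts with `3.5.1`. A check along the following lines could catch that kind of mismatch before a manifest is generated. This is a minimal sketch, not part of `tools/`: `find_path_mismatches` is a hypothetical helper, and it assumes each entry is an object with a `path` string and a `tags` list, as in the diff below.

```python
import json


def find_path_mismatches(entries):
    """Return (path, tag) pairs where a versioned tag disagrees with the path.

    Hypothetical helper. Assumes entries shaped like
    {"path": "3.5.1/scala2.12-java11-python3-ubuntu", "tags": ["3.5.1-python3", ...]}.
    Tags that do not start with a dotted version ("latest", "python3") are skipped.
    """
    mismatches = []
    for entry in entries:
        path_version = entry["path"].split("/", 1)[0]
        for tag in entry["tags"]:
            head = tag.split("-", 1)[0]
            is_versioned = head.replace(".", "").isdigit()
            if is_versioned and head != path_version:
                mismatches.append((entry["path"], tag))
    return mismatches


# The entry as it looked before this fix (tags taken from the manifest output above).
before = json.loads("""
[{"path": "3.5.0/scala2.12-java11-python3-ubuntu",
  "tags": ["3.5.1-scala2.12-java11-python3-ubuntu", "3.5.1-python3",
           "3.5.1", "python3", "latest"]}]
""")
for path, tag in find_path_mismatches(before):
    print(f"{path}: tag {tag!r} does not match the path version")
```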

Closes #60 from Yikun/3.5.1-follow.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

versions.json | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/versions.json b/versions.json

index 3d3e3b9..6ea6d71 100644

--- a/versions.json

+++ b/versions.json

@@ -30,7 +30,7 @@

]

},

{

- "path": "3.5.0/scala2.12-java11-python3-ubuntu",

+ "path": "3.5.1/scala2.12-java11-python3-ubuntu",

"tags": [

"3.5.1-scala2.12-java11-python3-ubuntu",

"3.5.1-python3",

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

(spark-docker) branch master updated: [SPARK-47206] Add official image Dockerfile for Apache Spark 3.5.1

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 7216374 [SPARK-47206] Add official image Dockerfile for Apache Spark 3.5.1

7216374 is described below

commit 7216374855ba57ce14c8ddbf56890538f678ec3d

Author: Yikun Jiang

AuthorDate: Thu Feb 29 08:55:47 2024 +0800

[SPARK-47206] Add official image Dockerfile for Apache Spark 3.5.1

### What changes were proposed in this pull request?

Add Apache Spark 3.5.1 Dockerfiles.

- Add 3.5.1 GPG key

- Add .github/workflows/build_3.5.1.yaml

- `./add-dockerfiles.sh 3.5.1` to generate dockerfiles

- Add version and tag info
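The per-release directory layout in the diffstat below follows a fixed pattern: one directory per Java version and image flavour, all on Scala 2.12 and Ubuntu. The sketch below enumerates those names for a release. It only illustrates the naming scheme visible in this commit, not how `add-dockerfiles.sh` is implemented; `dockerfile_dirs` and `FLAVOURS` are hypothetical names.

```python
from itertools import product

# Flavour suffixes as they appear in the 3.5.1 directory names (hypothetical constant).
FLAVOURS = ["", "-python3", "-r", "-python3-r"]


def dockerfile_dirs(version, scala="2.12", javas=(11, 17)):
    """Return the per-variant directories expected for one Spark release."""
    return [
        f"{version}/scala{scala}-java{java}{flavour}-ubuntu"
        for java, flavour in product(javas, FLAVOURS)
    ]


for directory in dockerfile_dirs("3.5.1"):
    print(directory)
```

Passing `javas=(11,)` yields the four Java 11-only directories used by releases before 3.5.0, such as 3.4.2.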

### Why are the changes needed?

Apache Spark 3.5.1 released

### Does this PR introduce _any_ user-facing change?

Docker image will be published.

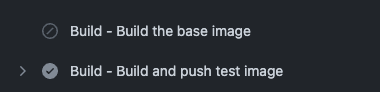

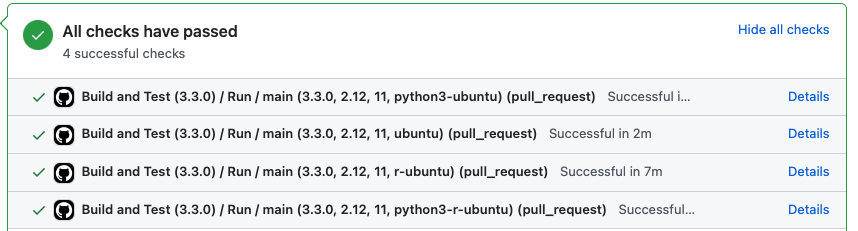

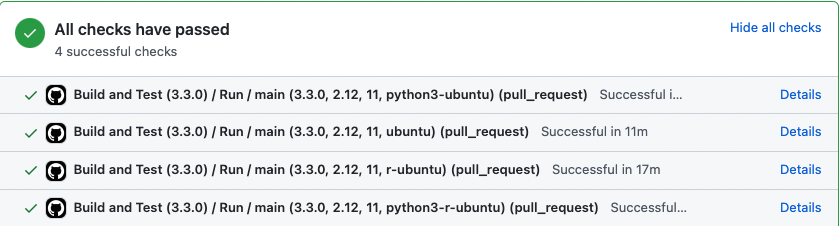

### How was this patch tested?

Add workflow and CI passed

Closes #59 from Yikun/3.5.1.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

.github/workflows/build_3.5.1.yaml | 43 +++

.github/workflows/publish.yml | 4 +-

.github/workflows/test.yml | 3 +-

3.5.1/scala2.12-java11-python3-r-ubuntu/Dockerfile | 29 +

3.5.1/scala2.12-java11-python3-ubuntu/Dockerfile | 26 +

3.5.1/scala2.12-java11-r-ubuntu/Dockerfile | 28 +

3.5.1/scala2.12-java11-ubuntu/Dockerfile | 79 +

3.5.1/scala2.12-java11-ubuntu/entrypoint.sh| 130 +

3.5.1/scala2.12-java17-python3-r-ubuntu/Dockerfile | 29 +

3.5.1/scala2.12-java17-python3-ubuntu/Dockerfile | 26 +

3.5.1/scala2.12-java17-r-ubuntu/Dockerfile | 28 +

3.5.1/scala2.12-java17-ubuntu/Dockerfile | 79 +

3.5.1/scala2.12-java17-ubuntu/entrypoint.sh| 130 +

tools/template.py | 4 +-

versions.json | 74 ++--

15 files changed, 699 insertions(+), 13 deletions(-)

diff --git a/.github/workflows/build_3.5.1.yaml b/.github/workflows/build_3.5.1.yaml

new file mode 100644

index 000..65a8d5d

--- /dev/null

+++ b/.github/workflows/build_3.5.1.yaml

@@ -0,0 +1,43 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+name: "Build and Test (3.5.1)"

+

+on:
+  pull_request:
+    branches:
+      - 'master'
+    paths:
+      - '3.5.1/**'
+
+jobs:
+  run-build:
+    strategy:
+      matrix:
+        image-type: ["all", "python", "scala", "r"]
+        java: [11, 17]
+    name: Run
+    secrets: inherit
+    uses: ./.github/workflows/main.yml
+    with:
+      spark: 3.5.1
+      scala: 2.12
+      java: ${{ matrix.java }}
+      image-type: ${{ matrix.image-type }}

+
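The workflow above relies on GitHub Actions expanding `strategy.matrix` into the Cartesian product of its axes, so the 4 image types and 2 Java versions yield 8 calls to the reusable `main.yml` workflow. A minimal Python sketch of that expansion, ignoring `include`/`exclude` (which this workflow does not use); `expand_matrix` is a hypothetical helper, not a GitHub API.

```python
from itertools import product

# Matrix axes copied from build_3.5.1.yaml.
MATRIX = {
    "image-type": ["all", "python", "scala", "r"],
    "java": [11, 17],
}


def expand_matrix(matrix):
    """Return one dict per job: the Cartesian product of all matrix axes."""
    keys = list(matrix)
    return [dict(zip(keys, combo)) for combo in product(*matrix.values())]


jobs = expand_matrix(MATRIX)
for job in jobs:
    # Inputs passed to ./.github/workflows/main.yml for this job.
    inputs = {"spark": "3.5.1", "scala": "2.12", **job}
    print(inputs)
```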

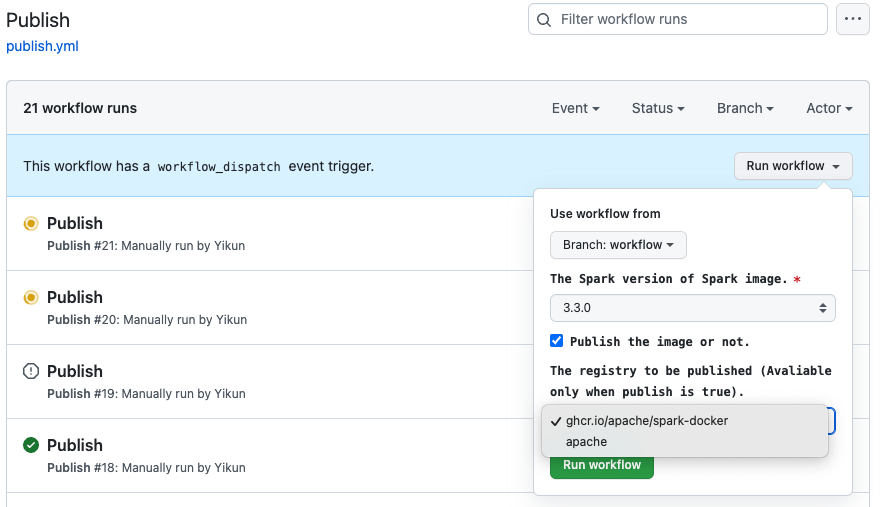

diff --git a/.github/workflows/publish.yml b/.github/workflows/publish.yml

index 2f828a4..5dfc210 100644

--- a/.github/workflows/publish.yml

+++ b/.github/workflows/publish.yml

@@ -25,10 +25,10 @@ on:

spark:

description: 'The Spark version of Spark image.'

required: true

-default: '3.5.0'

+default: '3.5.1'

type: choice

options:

-- 3.5.0

+- 3.5.1

publish:

description: 'Publish the image or not.'

default: false

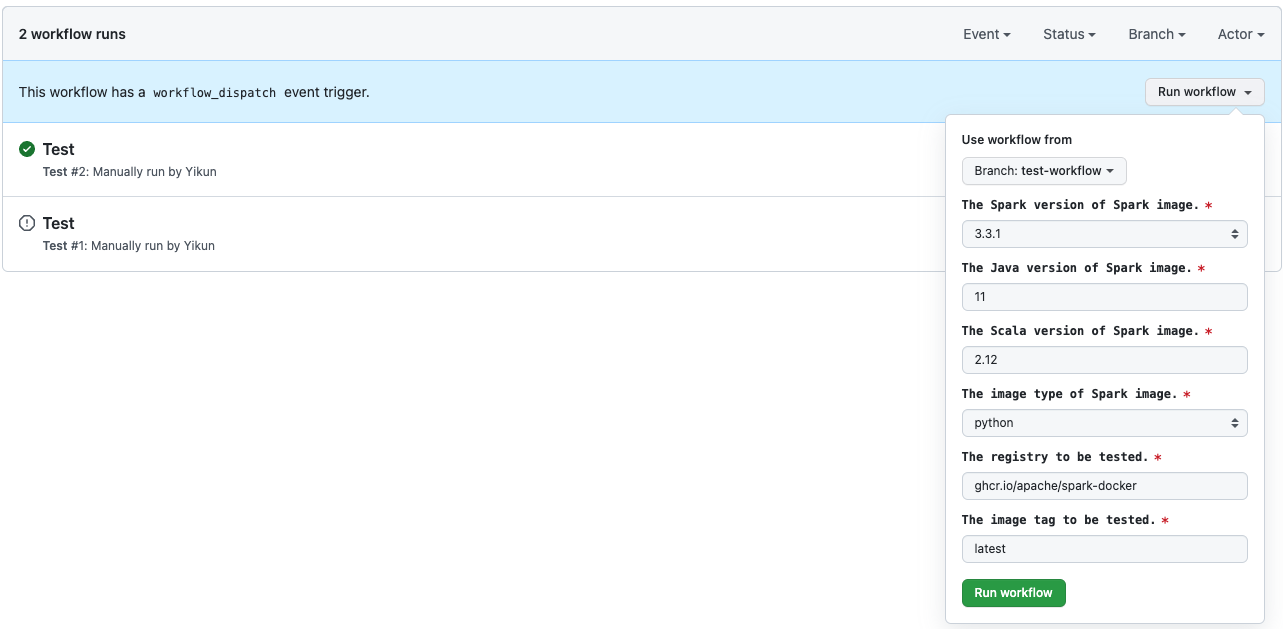

diff --git a/.github/workflows/test.yml b/.github/workflows/test.yml

index df79364..9c08b33 100644

--- a/.github/workflows/test.yml

+++ b/.github/workflows/test.yml

@@ -25,9 +25,10 @@ on:

spark:

description: 'The Spark version of Spark image.'

required: true

-default: '3.5.0'

+default: '3.5.1'

type: choice

options:

+- 3.5.1

- 3.5.0

- 3.4.2

- 3.4.1

diff --git a/3.5.1/scala2.12-java11-python3-r-ubuntu/Dockerfile b/3.5.1/scala2.12-java11-python3-r-ubuntu/Dockerfile

new file mode 100644

index 000..57c044b

--- /dev/null

+++ b/3.5.1/scala2.12-java11-python3-r-ubuntu/Dockerfile

@@ -0,0 +1,29 @@

+#

+# Licensed to the Apa

(spark-docker) branch master updated: [SPARK-46209] Add java 11 only yml for version before 3.5

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 431aa51 [SPARK-46209] Add java 11 only yml for version before 3.5

431aa51 is described below

commit 431aa516ba58985c902bf2d2a07bf0eaa1df6740

Author: Yikun Jiang

AuthorDate: Sat Dec 2 20:36:29 2023 +0800

[SPARK-46209] Add java 11 only yml for version before 3.5

### What changes were proposed in this pull request?

Add a Java 11-only publish workflow for versions before 3.5.0.

### Why are the changes needed?

Otherwise, publishing fails for versions before 3.5.0, because they have no Java 17 Dockerfiles.
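The split comes down to one rule: Java 17 Dockerfiles exist only from Spark 3.5.0 onward, so older releases can only be published for Java 11. As a hedged sketch, the rule behind choosing between `publish.yml` and `publish-java11.yml` could be written as follows; `java_versions` is hypothetical and assumes plain `major.minor.patch` version strings.

```python
def java_versions(spark_version):
    """Java versions with Dockerfiles for a given Spark release.

    Hypothetical helper; assumes plain "major.minor.patch" strings.
    """
    parts = tuple(int(p) for p in spark_version.split("."))
    # Java 17 images were introduced with Spark 3.5.0.
    return [11, 17] if parts >= (3, 5, 0) else [11]


for version in ["3.3.0", "3.4.2", "3.5.0", "3.5.1"]:
    print(version, java_versions(version))
```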

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Test on my repo:

https://github.com/Yikun/spark-docker/actions/workflows/publish-java11.yml

Closes #58 from Yikun/java11-publish.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

.github/workflows/{publish.yml => publish-java11.yml} | 9 -

.github/workflows/publish.yml | 7 ---

2 files changed, 4 insertions(+), 12 deletions(-)

diff --git a/.github/workflows/publish.yml b/.github/workflows/publish-java11.yml

similarity index 96%

copy from .github/workflows/publish.yml

copy to .github/workflows/publish-java11.yml

index ec0d66c..caa3702 100644

--- a/.github/workflows/publish.yml

+++ b/.github/workflows/publish-java11.yml

@@ -17,7 +17,7 @@

# under the License.

#

-name: "Publish"

+name: "Publish (Java 11 only)"

on:

workflow_dispatch:

@@ -25,10 +25,9 @@ on:

spark:

description: 'The Spark version of Spark image.'

required: true

-default: '3.5.0'

+default: '3.4.2'

type: choice

options:

-- 3.5.0

- 3.4.2

- 3.4.1

- 3.4.0

@@ -59,7 +58,7 @@ jobs:

strategy:

matrix:

scala: [2.12]

-java: [11, 17]

+java: [11]

image-type: ["scala"]

permissions:

packages: write

@@ -81,7 +80,7 @@ jobs:

strategy:

matrix:

scala: [2.12]

-java: [11, 17]

+java: [11]

image-type: ["all", "python", "r"]

permissions:

packages: write

diff --git a/.github/workflows/publish.yml b/.github/workflows/publish.yml

index ec0d66c..2f828a4 100644

--- a/.github/workflows/publish.yml

+++ b/.github/workflows/publish.yml

@@ -29,13 +29,6 @@ on:

type: choice

options:

- 3.5.0

-- 3.4.2

-- 3.4.1

-- 3.4.0

-- 3.3.3

-- 3.3.2

-- 3.3.1

-- 3.3.0

publish:

description: 'Publish the image or not.'

default: false


(spark-docker) branch master updated: [SPARK-46185] Add official image Dockerfile for Apache Spark 3.4.2

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new ec69b9c [SPARK-46185] Add official image Dockerfile for Apache Spark 3.4.2

ec69b9c is described below

commit ec69b9c77bc733ed5937f5068d23f7407eb51ea9

Author: Yikun Jiang

AuthorDate: Sat Dec 2 10:00:48 2023 +0800

[SPARK-46185] Add official image Dockerfile for Apache Spark 3.4.2

### What changes were proposed in this pull request?

Add Apache Spark 3.4.2 Dockerfiles.

- Add 3.4.2 GPG key

- Add .github/workflows/build_3.4.2.yaml

- `./add-dockerfiles.sh 3.4.2` to generate dockerfiles (and remove master changes: https://github.com/apache/spark-docker/pull/55/commits/24cbf40abdc252fdcf48303efa33ba7f84adefaf)

- Add version and tag info

### Why are the changes needed?

Apache Spark 3.4.2 released

### Does this PR introduce _any_ user-facing change?

Docker image will be published.

### How was this patch tested?

Add workflow and CI passed

Closes #57 from Yikun/3.4.2.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

.github/workflows/build_3.4.2.yaml | 41 +++

.github/workflows/publish.yml | 1 +

.github/workflows/test.yml | 1 +

3.4.2/scala2.12-java11-python3-r-ubuntu/Dockerfile | 29 +

3.4.2/scala2.12-java11-python3-ubuntu/Dockerfile | 26 +

3.4.2/scala2.12-java11-r-ubuntu/Dockerfile | 28 +

3.4.2/scala2.12-java11-ubuntu/Dockerfile | 79 +

3.4.2/scala2.12-java11-ubuntu/entrypoint.sh| 126 +

tools/template.py | 2 +

versions.json | 28 +

10 files changed, 361 insertions(+)

diff --git a/.github/workflows/build_3.4.2.yaml b/.github/workflows/build_3.4.2.yaml

new file mode 100644

index 000..8ae17d1

--- /dev/null

+++ b/.github/workflows/build_3.4.2.yaml

@@ -0,0 +1,41 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+name: "Build and Test (3.4.2)"

+

+on:
+  pull_request:
+    branches:
+      - 'master'
+    paths:
+      - '3.4.2/**'
+
+jobs:
+  run-build:
+    strategy:
+      matrix:
+        image-type: ["all", "python", "scala", "r"]
+    name: Run
+    secrets: inherit
+    uses: ./.github/workflows/main.yml
+    with:
+      spark: 3.4.2
+      scala: 2.12
+      java: 11
+      image-type: ${{ matrix.image-type }}

diff --git a/.github/workflows/publish.yml b/.github/workflows/publish.yml

index 879a9c2..ec0d66c 100644

--- a/.github/workflows/publish.yml

+++ b/.github/workflows/publish.yml

@@ -29,6 +29,7 @@ on:

type: choice

options:

- 3.5.0

+- 3.4.2

- 3.4.1

- 3.4.0

- 3.3.3

diff --git a/.github/workflows/test.yml b/.github/workflows/test.yml

index 689981a..df79364 100644

--- a/.github/workflows/test.yml

+++ b/.github/workflows/test.yml

@@ -29,6 +29,7 @@ on:

type: choice

options:

- 3.5.0

+- 3.4.2

- 3.4.1

- 3.4.0

- 3.3.3

diff --git a/3.4.2/scala2.12-java11-python3-r-ubuntu/Dockerfile b/3.4.2/scala2.12-java11-python3-r-ubuntu/Dockerfile

new file mode 100644

index 000..7c7e96a

--- /dev/null

+++ b/3.4.2/scala2.12-java11-python3-r-ubuntu/Dockerfile

@@ -0,0 +1,29 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+#http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS"

(spark-docker) branch master updated: Add support for java 17 from spark 3.5.0

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 6f68fe0 Add support for java 17 from spark 3.5.0

6f68fe0 is described below

commit 6f68fe0f7051c10f2bf43a50a7decfce2e97baf0

Author: vakarisbk

AuthorDate: Fri Nov 10 11:33:39 2023 +0800

Add support for java 17 from spark 3.5.0

### What changes were proposed in this pull request?

1. Create Java 17 base images alongside the Java 11 images, starting from Spark 3.5.0

2. Change the Ubuntu version to 22.04 for `scala2.12-java17-*`

### Why are the changes needed?

Spark supports multiple Java versions, but the images are currently built only with Java 11.

### Does this PR introduce _any_ user-facing change?

New images would be available in the repositories.

### How was this patch tested?

Closes #56 from vakarisbk/master.

Authored-by: vakarisbk

Signed-off-by: Yikun Jiang

---

.github/workflows/build_3.5.0.yaml | 3 +-

.github/workflows/main.yml | 20 +++-

.github/workflows/publish.yml | 4 +-

.github/workflows/test.yml | 3 +

3.5.0/scala2.12-java17-python3-r-ubuntu/Dockerfile | 29 +

3.5.0/scala2.12-java17-python3-ubuntu/Dockerfile | 26 +

3.5.0/scala2.12-java17-r-ubuntu/Dockerfile | 28 +

3.5.0/scala2.12-java17-ubuntu/Dockerfile | 79 +

3.5.0/scala2.12-java17-ubuntu/entrypoint.sh| 130 +

add-dockerfiles.sh | 23 +++-

tools/ci_runner_cleaner/free_disk_space.sh | 53 +

.../ci_runner_cleaner/free_disk_space_container.sh | 33 ++

tools/template.py | 2 +-

versions.json | 29 +

14 files changed, 454 insertions(+), 8 deletions(-)

diff --git a/.github/workflows/build_3.5.0.yaml b/.github/workflows/build_3.5.0.yaml

index 6eb3ad6..9f2b2d6 100644

--- a/.github/workflows/build_3.5.0.yaml

+++ b/.github/workflows/build_3.5.0.yaml

@@ -31,11 +31,12 @@ jobs:

strategy:

matrix:

image-type: ["all", "python", "scala", "r"]

+java: [11, 17]

name: Run

secrets: inherit

uses: ./.github/workflows/main.yml

with:

spark: 3.5.0

scala: 2.12

- java: 11

+ java: ${{ matrix.java }}

image-type: ${{ matrix.image-type }}

diff --git a/.github/workflows/main.yml b/.github/workflows/main.yml

index fe755ed..145b529 100644

--- a/.github/workflows/main.yml

+++ b/.github/workflows/main.yml

@@ -79,6 +79,14 @@ jobs:

- name: Checkout Spark Docker repository

uses: actions/checkout@v3

+ - name: Free up disk space

+shell: 'script -q -e -c "bash {0}"'

+run: |

+ chmod +x tools/ci_runner_cleaner/free_disk_space_container.sh

+ tools/ci_runner_cleaner/free_disk_space_container.sh

+ chmod +x tools/ci_runner_cleaner/free_disk_space.sh

+ tools/ci_runner_cleaner/free_disk_space.sh

+

- name: Prepare - Generate tags

run: |

case "${{ inputs.image-type }}" in

@@ -195,7 +203,8 @@ jobs:

- name : Test - Run spark application for standalone cluster on docker

run: testing/run_tests.sh --image-url $IMAGE_URL --scala-version ${{ inputs.scala }} --spark-version ${{ inputs.spark }}

- - name: Test - Checkout Spark repository

+ - name: Test - Checkout Spark repository for Spark 3.3.0 (with fetch-depth 0)

+if: inputs.spark == '3.3.0'

uses: actions/checkout@v3

with:

fetch-depth: 0

@@ -203,6 +212,14 @@ jobs:

ref: v${{ inputs.spark }}

path: ${{ github.workspace }}/spark

+ - name: Test - Checkout Spark repository

+if: inputs.spark != '3.3.0'

+uses: actions/checkout@v3

+with:

+ repository: apache/spark

+ ref: v${{ inputs.spark }}

+ path: ${{ github.workspace }}/spark

+

- name: Test - Cherry pick commits

# Apache Spark enable resource limited k8s IT since v3.3.1, cherry-pick patches for old release

# https://github.com/apache/spark/pull/36087#issuecomment-1251756266

@@ -247,6 +264,7 @@ jobs:

# TODO(SPARK-44495): Resume to use the latest minikube for k8s-integration-tests.

curl -LO https://storage.googleapis.com/minikube/releases/v1.30.1/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

+ rm minikube-linux-amd64

# Github Action limit cpu:2, memory: 6947MB, limit to 2U6G for better resource statistic

minikube start --cpus 2 --memory 6144

diff --git a/.github

[spark-docker] branch master updated: [SPARK-45169] Add official image Dockerfile for Apache Spark 3.5.0

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 028efd4 [SPARK-45169] Add official image Dockerfile for Apache Spark 3.5.0

028efd4 is described below

commit 028efd4637fb2cf791d5bd9ea70b2fca472de4b7

Author: Yikun Jiang

AuthorDate: Thu Sep 14 21:22:32 2023 +0800

[SPARK-45169] Add official image Dockerfile for Apache Spark 3.5.0

### What changes were proposed in this pull request?

Add Apache Spark 3.5.0 Dockerfiles.

- Add 3.5.0 GPG key

- Add .github/workflows/build_3.5.0.yaml

- `./add-dockerfiles.sh 3.5.0` to generate dockerfiles

- Add version and tag info

- Backport https://github.com/apache/spark/commit/1d2c338c867c69987d8ed1f3666358af54a040e3 and https://github.com/apache/spark/commit/0c7b4306c7c5fbdd6c54f8172f82e1d23e3b entrypoint changes

### Why are the changes needed?

Apache Spark 3.5.0 released

### Does this PR introduce _any_ user-facing change?

Docker image will be published.

### How was this patch tested?

Add workflow and CI passed

Closes #55 from Yikun/3.5.0.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

.github/workflows/build_3.5.0.yaml | 41 +++

.github/workflows/publish.yml | 3 +-

.github/workflows/test.yml | 3 +-

3.5.0/scala2.12-java11-python3-r-ubuntu/Dockerfile | 29

3.5.0/scala2.12-java11-python3-ubuntu/Dockerfile | 26 +++

3.5.0/scala2.12-java11-r-ubuntu/Dockerfile | 28

3.5.0/scala2.12-java11-ubuntu/Dockerfile | 79 ++

.../scala2.12-java11-ubuntu/entrypoint.sh | 4 ++

entrypoint.sh.template | 4 ++

tools/template.py | 4 +-

versions.json | 42 ++--

11 files changed, 253 insertions(+), 10 deletions(-)

diff --git a/.github/workflows/build_3.5.0.yaml b/.github/workflows/build_3.5.0.yaml

new file mode 100644

index 000..6eb3ad6

--- /dev/null

+++ b/.github/workflows/build_3.5.0.yaml

@@ -0,0 +1,41 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+name: "Build and Test (3.5.0)"

+

+on:
+  pull_request:
+    branches:
+      - 'master'
+    paths:
+      - '3.5.0/**'
+
+jobs:
+  run-build:
+    strategy:
+      matrix:
+        image-type: ["all", "python", "scala", "r"]
+    name: Run
+    secrets: inherit
+    uses: ./.github/workflows/main.yml
+    with:
+      spark: 3.5.0
+      scala: 2.12
+      java: 11
+      image-type: ${{ matrix.image-type }}

diff --git a/.github/workflows/publish.yml b/.github/workflows/publish.yml

index d213ada..8cfa95d 100644

--- a/.github/workflows/publish.yml

+++ b/.github/workflows/publish.yml

@@ -25,9 +25,10 @@ on:

spark:

description: 'The Spark version of Spark image.'

required: true

-default: '3.4.1'

+default: '3.5.0'

type: choice

options:

+- 3.5.0

- 3.4.1

- 3.4.0

- 3.3.3

diff --git a/.github/workflows/test.yml b/.github/workflows/test.yml

index 4f0f741..47dac20 100644

--- a/.github/workflows/test.yml

+++ b/.github/workflows/test.yml

@@ -25,9 +25,10 @@ on:

spark:

description: 'The Spark version of Spark image.'

required: true

-default: '3.4.1'

+default: '3.5.0'

type: choice

options:

+- 3.5.0

- 3.4.1

- 3.4.0

- 3.3.3

diff --git a/3.5.0/scala2.12-java11-python3-r-ubuntu/Dockerfile b/3.5.0/scala2.12-java11-python3-r-ubuntu/Dockerfile

new file mode 100644

index 000..d6faaa7

--- /dev/null

+++ b/3.5.0/scala2.12-java11-python3-r-ubuntu/Dockerfile

@@ -0,0 +1,29 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

[spark-docker] branch master updated: [SPARK-44494] Pin minikube to v1.30.1 to fix spark-docker K8s CI

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 6fd201e [SPARK-44494] Pin minikube to v1.30.1 to fix spark-docker K8s CI

6fd201e is described below

commit 6fd201e7c6e6a36c7a18e3b5877c3616081a05cf

Author: Yikun Jiang

AuthorDate: Thu Aug 17 15:30:59 2023 +0800

[SPARK-44494] Pin minikube to v1.30.1 to fix spark-docker K8s CI

### What changes were proposed in this pull request?

Pin minikube to v1.30.1 to fix spark-docker K8s CI.

### Why are the changes needed?

Pin minikube to v1.30.1 to fix spark-docker K8s CI

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

CI passed

Closes #53 from Yikun/minikube.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

.github/workflows/main.yml | 4 +++-

1 file changed, 3 insertions(+), 1 deletion(-)

diff --git a/.github/workflows/main.yml b/.github/workflows/main.yml

index 870c8c7..fe755ed 100644

--- a/.github/workflows/main.yml

+++ b/.github/workflows/main.yml

@@ -243,7 +243,9 @@ jobs:

- name: Test - Start minikube

run: |

# See more in "Installation" https://minikube.sigs.k8s.io/docs/start/

- curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

+ # curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

+ # TODO(SPARK-44495): Resume to use the latest minikube for k8s-integration-tests.

+ curl -LO https://storage.googleapis.com/minikube/releases/v1.30.1/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

# Github Action limit cpu:2, memory: 6947MB, limit to 2U6G for better resource statistic

minikube start --cpus 2 --memory 6144
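Both the old and the new `curl` lines use the same URL scheme, where the path segment after `releases/` is either `latest` or a release tag such as `v1.30.1`. A small sketch of that scheme follows, with `minikube_url` as a hypothetical helper; pinning the tag keeps the CI download fixed until the TODO tracked as SPARK-44495 is resolved.

```python
BASE_URL = "https://storage.googleapis.com/minikube/releases"


def minikube_url(version="latest", asset="minikube-linux-amd64"):
    """Build a minikube release download URL (hypothetical helper).

    `version` is "latest" or a release tag such as "v1.30.1".
    """
    return f"{BASE_URL}/{version}/{asset}"


print(minikube_url())           # unpinned, as before this change
print(minikube_url("v1.30.1"))  # pinned, as after this change
```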

[spark-docker] branch master updated: [SPARK-40513] Add --batch to gpg command

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 58d2885 [SPARK-40513] Add --batch to gpg command

58d2885 is described below

commit 58d288546e8419d229f14b62b6a653999e0390f1

Author: Yikun Jiang

AuthorDate: Thu Jun 29 16:05:47 2023 +0800

[SPARK-40513] Add --batch to gpg command

### What changes were proposed in this pull request?

Add `--batch` to the gpg commands, which essentially puts GnuPG into "API mode" instead of "UI mode".

Apply the change to the 3.4.x Dockerfiles.
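For readers scripting the same steps outside a Dockerfile, here is a hedged Python sketch of the sequence in the diffs below: try `keys.openpgp.org`, fall back to `keyserver.ubuntu.com`, then verify the signature, with `--batch` on every gpg call so it never prompts. The helper names are hypothetical. The Dockerfile additionally points `GNUPGHOME` at a throwaway `mktemp -d` directory, which this sketch leaves to the caller.

```python
import subprocess

# Keyservers in the order the Dockerfile tries them.
KEYSERVERS = ["hkps://keys.openpgp.org", "hkps://keyserver.ubuntu.com"]


def recv_key_cmd(keyserver, key):
    # --batch: never ask for user input ("API mode" rather than "UI mode").
    return ["gpg", "--batch", "--keyserver", keyserver, "--recv-keys", key]


def verify_cmd(signature, artifact):
    return ["gpg", "--batch", "--verify", signature, artifact]


def fetch_key_and_verify(key, signature="spark.tgz.asc", artifact="spark.tgz"):
    """Shell equivalent: recv from server 1 || recv from server 2; verify."""
    for server in KEYSERVERS:
        if subprocess.run(recv_key_cmd(server, key)).returncode == 0:
            break
    else:
        raise RuntimeError(f"could not fetch {key} from any keyserver")
    # check=True raises CalledProcessError if the signature does not verify.
    subprocess.run(verify_cmd(signature, artifact), check=True)
```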

### Why are the changes needed?

Address DOI comments:

https://github.com/docker-library/official-images/pull/13089#issuecomment-1611814491

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

CI passed

Closes #51 from Yikun/batch.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

3.4.0/scala2.12-java11-ubuntu/Dockerfile | 4 ++--

3.4.1/scala2.12-java11-ubuntu/Dockerfile | 4 ++--

Dockerfile.template | 4 ++--

3 files changed, 6 insertions(+), 6 deletions(-)

diff --git a/3.4.0/scala2.12-java11-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

index 854f86c..a4b081e 100644

--- a/3.4.0/scala2.12-java11-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

@@ -46,8 +46,8 @@ RUN set -ex; \

wget -nv -O spark.tgz "$SPARK_TGZ_URL"; \

wget -nv -O spark.tgz.asc "$SPARK_TGZ_ASC_URL"; \

export GNUPGHOME="$(mktemp -d)"; \

-gpg --keyserver hkps://keys.openpgp.org --recv-key "$GPG_KEY" || \

-gpg --keyserver hkps://keyserver.ubuntu.com --recv-keys "$GPG_KEY"; \

+gpg --batch --keyserver hkps://keys.openpgp.org --recv-key "$GPG_KEY" || \

+gpg --batch --keyserver hkps://keyserver.ubuntu.com --recv-keys "$GPG_KEY"; \

gpg --batch --verify spark.tgz.asc spark.tgz; \

gpgconf --kill all; \

rm -rf "$GNUPGHOME" spark.tgz.asc; \

diff --git a/3.4.1/scala2.12-java11-ubuntu/Dockerfile b/3.4.1/scala2.12-java11-ubuntu/Dockerfile

index 6d62769..d8bba7e 100644

--- a/3.4.1/scala2.12-java11-ubuntu/Dockerfile

+++ b/3.4.1/scala2.12-java11-ubuntu/Dockerfile

@@ -46,8 +46,8 @@ RUN set -ex; \

wget -nv -O spark.tgz "$SPARK_TGZ_URL"; \

wget -nv -O spark.tgz.asc "$SPARK_TGZ_ASC_URL"; \

export GNUPGHOME="$(mktemp -d)"; \

-gpg --keyserver hkps://keys.openpgp.org --recv-key "$GPG_KEY" || \

-gpg --keyserver hkps://keyserver.ubuntu.com --recv-keys "$GPG_KEY"; \

+gpg --batch --keyserver hkps://keys.openpgp.org --recv-key "$GPG_KEY" || \

+gpg --batch --keyserver hkps://keyserver.ubuntu.com --recv-keys "$GPG_KEY"; \

gpg --batch --verify spark.tgz.asc spark.tgz; \

gpgconf --kill all; \

rm -rf "$GNUPGHOME" spark.tgz.asc; \

diff --git a/Dockerfile.template b/Dockerfile.template

index 80b57e2..3d0aacf 100644

--- a/Dockerfile.template

+++ b/Dockerfile.template

@@ -46,8 +46,8 @@ RUN set -ex; \

wget -nv -O spark.tgz "$SPARK_TGZ_URL"; \

wget -nv -O spark.tgz.asc "$SPARK_TGZ_ASC_URL"; \

export GNUPGHOME="$(mktemp -d)"; \

-gpg --keyserver hkps://keys.openpgp.org --recv-key "$GPG_KEY" || \

-gpg --keyserver hkps://keyserver.ubuntu.com --recv-keys "$GPG_KEY"; \

+gpg --batch --keyserver hkps://keys.openpgp.org --recv-key "$GPG_KEY" || \

+gpg --batch --keyserver hkps://keyserver.ubuntu.com --recv-keys "$GPG_KEY"; \

gpg --batch --verify spark.tgz.asc spark.tgz; \

gpgconf --kill all; \

rm -rf "$GNUPGHOME" spark.tgz.asc; \


[spark-docker] branch master updated: [SPARK-44168][FOLLOWUP] Change v3.4 GPG_KEY to full key fingerprint

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 39264c5 [SPARK-44168][FOLLOWUP] Change v3.4 GPG_KEY to full key fingerprint

39264c5 is described below

commit 39264c502cf21b71a1ab5da71760e5864abce099

Author: Yikun Jiang

AuthorDate: Thu Jun 29 16:04:50 2023 +0800

[SPARK-44168][FOLLOWUP] Change v3.4 GPG_KEY to full key fingerprint

### What changes were proposed in this pull request?

Change the GPG key from `34F0FC5C` to `F28C9C925C188C35E345614DEDA00CE834F0FC5C` to avoid a potential collision.

The full fingerprint can be obtained with the commands below:

```

$ wget https://dist.apache.org/repos/dist/dev/spark/KEYS

$ gpg --import KEYS

$ gpg --fingerprint 34F0FC5C

pub rsa4096 2015-05-05 [SC]

F28C 9C92 5C18 8C35 E345 614D EDA0 0CE8 34F0 FC5C

uid [ unknown] Dongjoon Hyun (CODE SIGNING KEY)

sub rsa4096 2015-05-05 [E]

```
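`gpg --fingerprint` prints the fingerprint in groups of four hex digits; the value used for `GPG_KEY` is the same string with the spaces removed, and the old short ID is simply its last eight digits. A minimal sketch of both facts follows (helper names are hypothetical; for scripting, `gpg --with-colons` output is usually easier to parse than the human-readable form):

```python
import re


def normalize_fingerprint(text):
    """Turn gpg's grouped fingerprint into one 40-hex-digit upper-case string."""
    fpr = re.sub(r"\s+", "", text).upper()
    if not re.fullmatch(r"[0-9A-F]{40}", fpr):
        raise ValueError(f"not a 40-hex-digit fingerprint: {text!r}")
    return fpr


def short_key_id(fingerprint):
    """A short (32-bit) key ID is the last 8 hex digits of a v4 fingerprint."""
    return normalize_fingerprint(fingerprint)[-8:]


full = normalize_fingerprint("F28C 9C92 5C18 8C35 E345 614D EDA0 0CE8 34F0 FC5C")
print(full)                # F28C9C925C188C35E345614DEDA00CE834F0FC5C
print(short_key_id(full))  # 34F0FC5C
```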

### Why are the changes needed?

- A short GPG key ID was added as the v3.4.0 GPG key in https://github.com/apache/spark-docker/pull/46 .

- The short key ID `34F0FC5C` comes from https://dist.apache.org/repos/dist/dev/spark/KEYS

- According to the DOI review comment https://github.com/docker-library/official-images/pull/13089#issuecomment-1609990551 , `this should be the full key fingerprint: F28C9C925C188C35E345614DEDA00CE834F0FC5C (generating a collision for such a short key ID is trivial.`

- We should switch from the short key ID to the full fingerprint.
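To keep a short ID from slipping back in, a guard over `GPG_KEY_DICT` in `tools/template.py` could reject any value that is not a full 40-hex-digit fingerprint. A hedged sketch: the dictionary holds only the entries visible in the diff below, and `short_key_versions` is a hypothetical helper, not existing code in the repository.

```python
import re

# Visible entries of GPG_KEY_DICT after this change (tools/template.py).
GPG_KEY_DICT = {
    "3.4.0": "CC68B3D16FE33A766705160BA7E57908C7A4E1B1",
    "3.4.1": "F28C9C925C188C35E345614DEDA00CE834F0FC5C",
}

FULL_FINGERPRINT = re.compile(r"[0-9A-F]{40}")


def short_key_versions(key_dict):
    """Return the Spark versions whose key is not a full 40-hex-digit fingerprint."""
    return sorted(v for v, key in key_dict.items() if not FULL_FINGERPRINT.fullmatch(key))


print(short_key_versions(GPG_KEY_DICT))                           # []
print(short_key_versions({**GPG_KEY_DICT, "3.4.1": "34F0FC5C"}))  # ['3.4.1']
```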

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

CI passed

Closes #50 from Yikun/gpg_key.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

3.4.1/scala2.12-java11-ubuntu/Dockerfile | 2 +-

tools/template.py| 2 +-

2 files changed, 2 insertions(+), 2 deletions(-)

diff --git a/3.4.1/scala2.12-java11-ubuntu/Dockerfile b/3.4.1/scala2.12-java11-ubuntu/Dockerfile

index bf106a6..6d62769 100644

--- a/3.4.1/scala2.12-java11-ubuntu/Dockerfile

+++ b/3.4.1/scala2.12-java11-ubuntu/Dockerfile

@@ -38,7 +38,7 @@ RUN set -ex; \

# https://downloads.apache.org/spark/KEYS

ENV SPARK_TGZ_URL=https://archive.apache.org/dist/spark/spark-3.4.1/spark-3.4.1-bin-hadoop3.tgz \

SPARK_TGZ_ASC_URL=https://archive.apache.org/dist/spark/spark-3.4.1/spark-3.4.1-bin-hadoop3.tgz.asc \

-GPG_KEY=34F0FC5C

+GPG_KEY=F28C9C925C188C35E345614DEDA00CE834F0FC5C

RUN set -ex; \

export SPARK_TMP="$(mktemp -d)"; \

diff --git a/tools/template.py b/tools/template.py

index 93e842a..cdc167c 100755

--- a/tools/template.py

+++ b/tools/template.py

@@ -31,7 +31,7 @@ GPG_KEY_DICT = {

# issuer "xinr...@apache.org"

"3.4.0": "CC68B3D16FE33A766705160BA7E57908C7A4E1B1",

# issuer "dongj...@apache.org"

-"3.4.1": "34F0FC5C"

+"3.4.1": "F28C9C925C188C35E345614DEDA00CE834F0FC5C"

}

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark-docker] branch master updated: [SPARK-40513][DOCS] Add apache/spark docker image overview

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new d02ff60 [SPARK-40513][DOCS] Add apache/spark docker image overview

d02ff60 is described below

commit d02ff6091835311a32c7ccc73d8ebae1d5817ecc

Author: Yikun Jiang

AuthorDate: Tue Jun 27 14:28:21 2023 +0800

[SPARK-40513][DOCS] Add apache/spark docker image overview

### What changes were proposed in this pull request?

This PR adds `OVERVIEW.md`.

### Why are the changes needed?

This will be used on the https://hub.docker.com/r/apache/spark page to introduce the Spark Docker image and its tags.

### Does this PR introduce _any_ user-facing change?

Yes, doc only

### How was this patch tested?

Doc only, review.

Closes #34 from Yikun/overview.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

OVERVIEW.md | 83 +

1 file changed, 83 insertions(+)

diff --git a/OVERVIEW.md b/OVERVIEW.md

new file mode 100644

index 000..046

--- /dev/null

+++ b/OVERVIEW.md

@@ -0,0 +1,83 @@

+# What is Apache Spark™?

+

+Apache Spark™ is a multi-language engine for executing data engineering, data science, and machine learning on single-node machines or clusters. It provides high-level APIs in Scala, Java, Python, and R, and an optimized engine that supports general computation graphs for data analysis. It also supports a rich set of higher-level tools including Spark SQL for SQL and DataFrames, pandas API on Spark for pandas workloads, MLlib for machine learning, GraphX for graph processing, and Structu [...]

+

+https://spark.apache.org/

+

+## Online Documentation

+

+You can find the latest Spark documentation, including a programming guide, on the [project web page](https://spark.apache.org/documentation.html). This README file only contains basic setup instructions.

+

+## Interactive Scala Shell

+

+The easiest way to start using Spark is through the Scala shell:

+

+```

+docker run -it apache/spark /opt/spark/bin/spark-shell

+```

+

+Try the following command, which should return 1,000,000,000:

+

+```

+scala> spark.range(1000 * 1000 * 1000).count()

+```

+

+## Interactive Python Shell

+

+The easiest way to start using PySpark is through the Python shell:

+

+```

+docker run -it apache/spark /opt/spark/bin/pyspark

+```

+

+And run the following command, which should also return 1,000,000,000:

+

+```

+>>> spark.range(1000 * 1000 * 1000).count()

+```

+

+## Interactive R Shell

+

+The easiest way to start using R on Spark is through the R shell:

+

+```

+docker run -it apache/spark:r /opt/spark/bin/sparkR

+```

+

+## Running Spark on Kubernetes

+

+https://spark.apache.org/docs/latest/running-on-kubernetes.html

+

+## Supported tags and respective Dockerfile links

+

+Currently, the `apache/spark` docker image supports 4 types for each version:

+

+Such as for v3.4.0:

+- [3.4.0-scala2.12-java11-python3-ubuntu, 3.4.0-python3, 3.4.0, python3, latest](https://github.com/apache/spark-docker/tree/fe05e38f0ffad271edccd6ae40a77d5f14f3eef7/3.4.0/scala2.12-java11-python3-ubuntu)

+- [3.4.0-scala2.12-java11-r-ubuntu, 3.4.0-r, r](https://github.com/apache/spark-docker/tree/fe05e38f0ffad271edccd6ae40a77d5f14f3eef7/3.4.0/scala2.12-java11-r-ubuntu)

+- [3.4.0-scala2.12-java11-ubuntu, 3.4.0-scala, scala](https://github.com/apache/spark-docker/tree/fe05e38f0ffad271edccd6ae40a77d5f14f3eef7/3.4.0/scala2.12-java11-ubuntu)

+- [3.4.0-scala2.12-java11-python3-r-ubuntu](https://github.com/apache/spark-docker/tree/fe05e38f0ffad271edccd6ae40a77d5f14f3eef7/3.4.0/scala2.12-java11-python3-r-ubuntu)

+

+## Environment Variable

+

+The environment variables of entrypoint.sh are listed below:

+

+| Environment Variable | Meaning |

+|--|---|

+| SPARK_EXTRA_CLASSPATH | The extra path to be added to the classpath, see also in https://spark.apache.org/docs/latest/running-on-kubernetes.html#dependency-management |

+| PYSPARK_PYTHON | Python binary executable to use for PySpark in both driver and workers (default is python3 if available, otherwise python). Property spark.pyspark.python take precedence if it is set |

+| PYSPARK_DRIVER_PYTHON | Python binary executable to use for PySpark in driver only (default is PYSPARK_PYTHON). Property spark.pyspark.driver.python take precedence if it is set |

+| SPARK_DIST_CLASSPATH | Distribution-defined classpath to add to processes |

+| SPARK_DRIVER_BIND_ADDRESS | Hostname or IP address where to bind listening sockets. See also `spark.driver.bindAddress` |

+| SPARK_EXECUTOR_JAVA_OPTS | The Java opts of Spark Executor |

+| SPARK_APPLICATION_ID | A unique identifier for the Spark application |

+| SPARK_EXECUTOR_POD_IP | The Pod IP address of spark executor |

+| SPARK_RESOURC

[spark-docker] branch master updated: [SPARK-44175] Remove useless lib64 path link in dockerfile

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 5405b49 [SPARK-44175] Remove useless lib64 path link in dockerfile

5405b49 is described below

commit 5405b49b52aa1661d31ac80cdb8c9aad530d6847

Author: Yikun Jiang

AuthorDate: Tue Jun 27 14:09:34 2023 +0800

[SPARK-44175] Remove useless lib64 path link in dockerfile

### What changes were proposed in this pull request?

Remove the useless `/lib64` symlink.

### Why are the changes needed?

Address DOI review comments: https://github.com/docker-library/official-images/pull/13089#issuecomment-1601813499

The `/lib64` symlink was introduced by https://github.com/apache/spark/commit/f13ea15d79fb4752a0a75a05a4a89bd8625ea3d5 to work around a snappy issue on Alpine. Since the base OS has been switched to Ubuntu, the `/lib64` hack can be cleaned up.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

CI passed

Closes #48 from Yikun/rm-lib64-hack.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

3.4.0/scala2.12-java11-ubuntu/Dockerfile | 1 -

3.4.1/scala2.12-java11-ubuntu/Dockerfile | 1 -

Dockerfile.template | 1 -

3 files changed, 3 deletions(-)

diff --git a/3.4.0/scala2.12-java11-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

index 77ace47..854f86c 100644

--- a/3.4.0/scala2.12-java11-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

@@ -23,7 +23,6 @@ RUN groupadd --system --gid=${spark_uid} spark && \

RUN set -ex; \

apt-get update; \

-ln -s /lib /lib64; \

apt-get install -y gnupg2 wget bash tini libc6 libpam-modules krb5-user libnss3 procps net-tools gosu libnss-wrapper; \

mkdir -p /opt/spark; \

mkdir /opt/spark/python; \

diff --git a/3.4.1/scala2.12-java11-ubuntu/Dockerfile b/3.4.1/scala2.12-java11-ubuntu/Dockerfile

index e782686..bf106a6 100644

--- a/3.4.1/scala2.12-java11-ubuntu/Dockerfile

+++ b/3.4.1/scala2.12-java11-ubuntu/Dockerfile

@@ -23,7 +23,6 @@ RUN groupadd --system --gid=${spark_uid} spark && \

RUN set -ex; \

apt-get update; \

-ln -s /lib /lib64; \

apt-get install -y gnupg2 wget bash tini libc6 libpam-modules krb5-user libnss3 procps net-tools gosu libnss-wrapper; \

mkdir -p /opt/spark; \

mkdir /opt/spark/python; \

diff --git a/Dockerfile.template b/Dockerfile.template

index 6fedce9..80b57e2 100644

--- a/Dockerfile.template

+++ b/Dockerfile.template

@@ -23,7 +23,6 @@ RUN groupadd --system --gid=${spark_uid} spark && \

RUN set -ex; \

apt-get update; \

-ln -s /lib /lib64; \

apt-get install -y gnupg2 wget bash tini libc6 libpam-modules krb5-user libnss3 procps net-tools gosu libnss-wrapper; \

mkdir -p /opt/spark; \

mkdir /opt/spark/python; \


[spark-docker] branch master updated: [SPARK-44177] Add 'set -eo pipefail' to entrypoint and quote variables

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 6022289 [SPARK-44177] Add 'set -eo pipefail' to entrypoint and quote variables

6022289 is described below

commit 60222892836549f05c56edd49ac81c688c8e7356

Author: Yikun Jiang

AuthorDate: Tue Jun 27 08:59:03 2023 +0800

[SPARK-44177] Add 'set -eo pipefail' to entrypoint and quote variables

### What changes were proposed in this pull request?

Add 'set -eo pipefail' to entrypoint and quote variables
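Part of the quoting change touches tests such as `if ! [ -z "${PYSPARK_PYTHON+x}" ]`, which check whether a variable is set at all rather than whether it is non-empty. A small bash sketch of that idiom; the function name `report` is illustrative only:

```bash
#!/usr/bin/env bash

# "${VAR+x}" expands to "x" when VAR is set (even to an empty string)
# and to nothing when VAR is unset.
report() {
  if ! [ -z "${PYSPARK_PYTHON+x}" ]; then
    echo "set"
  else
    echo "unset"
  fi
}

unset PYSPARK_PYTHON
report                     # unset
PYSPARK_PYTHON=""
report                     # set
PYSPARK_PYTHON=python3
report                     # set
```

Without the quotes the test still happens to work, because `[ -z ]` with a single argument is true (it only checks that the string `-z` is non-empty), but the quoted form does not rely on that corner of `test`.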

### Why are the changes needed?

Address DOI comments:

1. Have you considered a set -eo pipefail on the entrypoint script to help prevent any errors from being silently ignored?

2. You probably want to quote this (and many of the other variables in this execution); ala --driver-url "$SPARK_DRIVER_URL"

[1] https://github.com/docker-library/official-images/pull/13089#issuecomment-1601334895

[2] https://github.com/docker-library/official-images/pull/13089#issuecomment-1601813499
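Both review points can be reproduced in a few lines of bash. A minimal sketch: the `SPARK_DRIVER_URL` value is made up so that word splitting becomes visible, and `set -e` is left out so the script keeps running after the failing pipeline:

```bash
#!/usr/bin/env bash

# Without pipefail, a pipeline's status is that of its last command,
# so the failure of `false` is silently ignored.
status=0; false | true || status=$?
echo "without pipefail: $status"    # without pipefail: 0

set -o pipefail
status=0; false | true || status=$?
echo "with pipefail: $status"       # with pipefail: 1
set +o pipefail

# Print how many arguments a command actually receives.
count_args() { echo "$#"; }

# Hypothetical value containing a space, for illustration only.
SPARK_DRIVER_URL="spark://driver host:7078"
count_args --driver-url $SPARK_DRIVER_URL     # 3: the value was word-split
count_args --driver-url "$SPARK_DRIVER_URL"   # 2: passed as one argument
```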

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

CI passed

Closes #49 from Yikun/quote.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

3.4.0/scala2.12-java11-ubuntu/entrypoint.sh | 31 -

3.4.1/scala2.12-java11-ubuntu/entrypoint.sh | 31 -

entrypoint.sh.template | 31 -

3 files changed, 51 insertions(+), 42 deletions(-)

diff --git a/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh b/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

index 08fc925..2e3d2a8 100755

--- a/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

+++ b/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

@@ -15,6 +15,9 @@

# See the License for the specific language governing permissions and

# limitations under the License.

#

+# Prevent any errors from being silently ignored

+set -eo pipefail

+

attempt_setup_fake_passwd_entry() {

# Check whether there is a passwd entry for the container UID

local myuid; myuid="$(id -u)"

@@ -51,10 +54,10 @@ if [ -n "$SPARK_EXTRA_CLASSPATH" ]; then

SPARK_CLASSPATH="$SPARK_CLASSPATH:$SPARK_EXTRA_CLASSPATH"

fi

-if ! [ -z ${PYSPARK_PYTHON+x} ]; then

+if ! [ -z "${PYSPARK_PYTHON+x}" ]; then

export PYSPARK_PYTHON

fi

-if ! [ -z ${PYSPARK_DRIVER_PYTHON+x} ]; then

+if ! [ -z "${PYSPARK_DRIVER_PYTHON+x}" ]; then

export PYSPARK_DRIVER_PYTHON

fi

@@ -64,13 +67,13 @@ if [ -n "${HADOOP_HOME}" ] && [ -z "${SPARK_DIST_CLASSPATH}" ]; then

export SPARK_DIST_CLASSPATH="$($HADOOP_HOME/bin/hadoop classpath)"

fi

-if ! [ -z ${HADOOP_CONF_DIR+x} ]; then

+if ! [ -z "${HADOOP_CONF_DIR+x}" ]; then

SPARK_CLASSPATH="$HADOOP_CONF_DIR:$SPARK_CLASSPATH";

fi

-if ! [ -z ${SPARK_CONF_DIR+x} ]; then

+if ! [ -z "${SPARK_CONF_DIR+x}" ]; then

SPARK_CLASSPATH="$SPARK_CONF_DIR:$SPARK_CLASSPATH";

-elif ! [ -z ${SPARK_HOME+x} ]; then

+elif ! [ -z "${SPARK_HOME+x}" ]; then

SPARK_CLASSPATH="$SPARK_HOME/conf:$SPARK_CLASSPATH";

fi

@@ -99,17 +102,17 @@ case "$1" in

CMD=(

${JAVA_HOME}/bin/java

"${SPARK_EXECUTOR_JAVA_OPTS[@]}"

- -Xms$SPARK_EXECUTOR_MEMORY

- -Xmx$SPARK_EXECUTOR_MEMORY

+ -Xms"$SPARK_EXECUTOR_MEMORY"

+ -Xmx"$SPARK_EXECUTOR_MEMORY"

-cp "$SPARK_CLASSPATH:$SPARK_DIST_CLASSPATH"

org.apache.spark.scheduler.cluster.k8s.KubernetesExecutorBackend

- --driver-url $SPARK_DRIVER_URL

- --executor-id $SPARK_EXECUTOR_ID

- --cores $SPARK_EXECUTOR_CORES

- --app-id $SPARK_APPLICATION_ID

- --hostname $SPARK_EXECUTOR_POD_IP

- --resourceProfileId $SPARK_RESOURCE_PROFILE_ID

- --podName $SPARK_EXECUTOR_POD_NAME

+ --driver-url "$SPARK_DRIVER_URL"

+ --executor-id "$SPARK_EXECUTOR_ID"

+ --cores "$SPARK_EXECUTOR_CORES"

+ --app-id "$SPARK_APPLICATION_ID"

+ --hostname "$SPARK_EXECUTOR_POD_IP"

+ --resourceProfileId "$SPARK_RESOURCE_PROFILE_ID"

+ --podName "$SPARK_EXECUTOR_POD_NAME"

)

attempt_setup_fake_passwd_entry

# Execute the container CMD under tini for better hygiene

diff --git a/3.4.1/scala2.12-java11-ubuntu/entrypoint.sh b/3.4.1/scala2.12-java11-ubuntu/entrypoint.sh

index 08fc925..2e3d2a8 100755

--- a/3.4.1/scala2.12-java11-ubuntu/entrypoint.sh

+++ b/3.4.1/scala2.12-java11-ubuntu/entrypoint.sh

@@ -15,6 +15,9 @@

# See the License for the specific language governing permissions and

# limitations under the License.

#

+# Prevent any errors fr

[spark-docker] branch master updated: [SPARK-44176] Change apt to apt-get and remove useless cleanup

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 6f1a0a5 [SPARK-44176] Change apt to apt-get and remove useless cleanup

6f1a0a5 is described below

commit 6f1a0a5fbb8034ebc4ea04e4f0b2fda728a4dd1e

Author: Yikun Jiang

AuthorDate: Tue Jun 27 08:56:54 2023 +0800

[SPARK-44176] Change apt to apt-get and remove useless cleanup

### What changes were proposed in this pull request?

This patch changes `apt` to `apt-get` and removes the unnecessary `rm -rf /var/cache/apt/*; \`.

The same change is also applied to the 3.4.0 and 3.4.1 Dockerfiles.

### Why are the changes needed?

Address comments from DOI:

- `apt install ...`: This should be apt-get (apt is not intended for unattended use, as the warning during build makes clear).

- `rm -rf /var/cache/apt/*; \`: This is harmless, but should be unnecessary (the base image configuration already makes sure this directory stays empty).

See more in:

[1] https://github.com/docker-library/official-images/pull/13089#issuecomment-1601813499
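A check like the following could catch `apt` slipping back into a Dockerfile. It is a hypothetical grep-based sketch (`lint_apt` is not part of the repository) and only covers the subcommands listed in its pattern:

```bash
#!/usr/bin/env bash

# Print "line:text" for each `apt <subcommand>` call in a Dockerfile-like
# file. `apt-get` is not matched, because "apt" must be followed by whitespace.
lint_apt() {
  grep -nE '(^|[[:space:];&|])apt[[:space:]]+(install|update|upgrade|remove|purge)' "$1" || true
}

demo="$(mktemp)"
cat > "$demo" <<'EOF'
RUN set -ex; \
    apt-get update; \
    apt install -y python3 python3-pip; \
    apt-get install -y r-base r-base-dev; \
    rm -rf /var/lib/apt/lists/*
EOF

lint_apt "$demo"   # 3:    apt install -y python3 python3-pip; \
```

A regex is a heuristic here; it does not parse the Dockerfile, so unusual spellings (for example `apt` called through a variable) would slip through.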

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

CI passed

Closes #47 from Yikun/apt-get.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile | 5 ++---

3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile | 3 +--

3.4.0/scala2.12-java11-r-ubuntu/Dockerfile | 3 +--

3.4.0/scala2.12-java11-ubuntu/Dockerfile | 3 +--

3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile | 5 ++---

3.4.1/scala2.12-java11-python3-ubuntu/Dockerfile | 3 +--

3.4.1/scala2.12-java11-r-ubuntu/Dockerfile | 3 +--

3.4.1/scala2.12-java11-ubuntu/Dockerfile | 3 +--

Dockerfile.template| 3 +--

r-python.template | 5 ++---

10 files changed, 13 insertions(+), 23 deletions(-)

diff --git a/3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile

index 0f1962f..10aa23e 100644

--- a/3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile

@@ -20,9 +20,8 @@ USER root

RUN set -ex; \

apt-get update; \

-apt install -y python3 python3-pip; \

-apt install -y r-base r-base-dev; \

-rm -rf /var/cache/apt/*; \

+apt-get install -y python3 python3-pip; \

+apt-get install -y r-base r-base-dev; \

rm -rf /var/lib/apt/lists/*

ENV R_HOME /usr/lib/R

diff --git a/3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile

index 258d806..3240e57 100644

--- a/3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile

@@ -20,8 +20,7 @@ USER root

RUN set -ex; \

apt-get update; \

-apt install -y python3 python3-pip; \

-rm -rf /var/cache/apt/*; \

+apt-get install -y python3 python3-pip; \

rm -rf /var/lib/apt/lists/*

USER spark

diff --git a/3.4.0/scala2.12-java11-r-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-r-ubuntu/Dockerfile

index 4c928c6..266392f 100644

--- a/3.4.0/scala2.12-java11-r-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-r-ubuntu/Dockerfile

@@ -20,8 +20,7 @@ USER root

RUN set -ex; \

apt-get update; \

-apt install -y r-base r-base-dev; \

-rm -rf /var/cache/apt/*; \

+apt-get install -y r-base r-base-dev; \

rm -rf /var/lib/apt/lists/*

ENV R_HOME /usr/lib/R

diff --git a/3.4.0/scala2.12-java11-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

index aa754b7..77ace47 100644

--- a/3.4.0/scala2.12-java11-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

@@ -24,7 +24,7 @@ RUN groupadd --system --gid=${spark_uid} spark && \

RUN set -ex; \

apt-get update; \

ln -s /lib /lib64; \

-apt install -y gnupg2 wget bash tini libc6 libpam-modules krb5-user libnss3 procps net-tools gosu libnss-wrapper; \

+apt-get install -y gnupg2 wget bash tini libc6 libpam-modules krb5-user libnss3 procps net-tools gosu libnss-wrapper; \

mkdir -p /opt/spark; \

mkdir /opt/spark/python; \

mkdir -p /opt/spark/examples; \

@@ -33,7 +33,6 @@ RUN set -ex; \

touch /opt/spark/RELEASE; \

chown -R spark:spark /opt/spark; \

echo "auth required pam_wheel.so use_uid" >> /etc/pam.d/su; \

-rm -rf /var/cache/apt/*; \

rm -rf /var/lib/apt/lists/*

# Install Apache Spark

diff --git a/3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile b/3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile

index 95c98b9..30e6b86 100644

--- a/3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile

+++ b/3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile

@@ -20,9 +20,8 @@ USER root

RUN set -ex; \

apt-get updat

[spark-docker] branch master updated: [SPARK-44168] Add Apache Spark 3.4.1 Dockerfiles

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 6f36415 [SPARK-44168] Add Apache Spark 3.4.1 Dockerfiles

6f36415 is described below

commit 6f3641534a97a80491cba926cc7a5e67972494ea

Author: Yikun Jiang

AuthorDate: Sun Jun 25 10:51:46 2023 +0800

[SPARK-44168] Add Apache Spark 3.4.1 Dockerfiles

### What changes were proposed in this pull request?

Add Apache Spark 3.4.1 Dockerfiles.

- Add 3.4.1 GPG key

- Add .github/workflows/build_3.4.1.yaml

- ./add-dockerfiles.sh 3.4.1

- Add version and tag info

### Why are the changes needed?

Apache Spark 3.4.1 released:

https://spark.apache.org/releases/spark-release-3-4-1.html

### Does this PR introduce _any_ user-facing change?

Docker image will be published.

### How was this patch tested?

Add workflow and CI passed

Closes #46 from Yikun/3.4.1.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

.github/workflows/build_3.4.1.yaml | 41 +++

.github/workflows/publish.yml | 3 +-

.github/workflows/test.yml | 3 +-

3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile | 30 +

3.4.1/scala2.12-java11-python3-ubuntu/Dockerfile | 27 +

3.4.1/scala2.12-java11-r-ubuntu/Dockerfile | 29 +

3.4.1/scala2.12-java11-ubuntu/Dockerfile | 81 ++

3.4.1/scala2.12-java11-ubuntu/entrypoint.sh| 123 +

tools/template.py | 2 +

versions.json | 42 +--

10 files changed, 372 insertions(+), 9 deletions(-)

diff --git a/.github/workflows/build_3.4.1.yaml b/.github/workflows/build_3.4.1.yaml

new file mode 100644

index 000..2eba18e

--- /dev/null

+++ b/.github/workflows/build_3.4.1.yaml

@@ -0,0 +1,41 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+name: "Build and Test (3.4.1)"

+

+on:

+  pull_request:

+    branches:

+      - 'master'

+    paths:

+      - '3.4.1/**'

+

+jobs:

+  run-build:

+    strategy:

+      matrix:

+        image-type: ["all", "python", "scala", "r"]

+    name: Run

+    secrets: inherit

+    uses: ./.github/workflows/main.yml

+    with:

+      spark: 3.4.1

+      scala: 2.12

+      java: 11

+      image-type: ${{ matrix.image-type }}

diff --git a/.github/workflows/publish.yml b/.github/workflows/publish.yml

index 3063bfe..1138a9f 100644

--- a/.github/workflows/publish.yml

+++ b/.github/workflows/publish.yml

@@ -25,9 +25,10 @@ on:

spark:

description: 'The Spark version of Spark image.'

required: true

-default: '3.4.0'

+default: '3.4.1'

type: choice

options:

+- 3.4.1

- 3.4.0

- 3.3.2

- 3.3.1

diff --git a/.github/workflows/test.yml b/.github/workflows/test.yml

index 06e2321..4136f1c 100644

--- a/.github/workflows/test.yml

+++ b/.github/workflows/test.yml

@@ -25,9 +25,10 @@ on:

spark:

description: 'The Spark version of Spark image.'

required: true

-default: '3.4.0'

+default: '3.4.1'

type: choice

options:

+- 3.4.1

- 3.4.0

- 3.3.2

- 3.3.1

diff --git a/3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile b/3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile

new file mode 100644

index 000..95c98b9

--- /dev/null

+++ b/3.4.1/scala2.12-java11-python3-r-ubuntu/Dockerfile

@@ -0,0 +1,30 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+#http://www.apache.org/licenses/LICEN

[spark-docker] branch master updated: [SPARK-43368] Use `libnss_wrapper` to fake passwd entry

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new c07ae18 [SPARK-43368] Use `libnss_wrapper` to fake passwd entry

c07ae18 is described below

commit c07ae18355678370fd270bedb8b39ab2aceb5ac2

Author: Yikun Jiang

AuthorDate: Fri Jun 2 10:27:01 2023 +0800

[SPARK-43368] Use `libnss_wrapper` to fake passwd entry

### What changes were proposed in this pull request?

Use `libnss_wrapper` to fake a passwd entry, instead of modifying `/etc/passwd`, to resolve the random UID problem. The fake passwd entry is only set up for the driver and executor; for other commands such as `bash`, it is not set.

### Why are the changes needed?

Previously, we added an entry for the current UID directly to `/etc/passwd`, mainly for the [OpenShift anonymous random `uid` case](https://github.com/docker-library/official-images/pull/13089#issuecomment-1534706523) (see also https://github.com/apache-spark-on-k8s/spark/pull/404). However, this approach introduces a potential security issue, because `/etc/passwd` had to be made group-writable.

Following the DOI reviewer's [suggestion](https://github.com/docker-library/official-images/pull/13089#issuecomment-1561793792), we resolve this problem by using [libnss_wrapper](https://cwrap.org/nss_wrapper.html), a library that provides a fake passwd entry through the `LD_PRELOAD`, `NSS_WRAPPER_PASSWD` and `NSS_WRAPPER_GROUP` environment variables. For example, if the random UID is `1000`, the environment looks like this:

```

spark@6f41b8e5be9b:/opt/spark/work-dir$ id -u

1000

spark@6f41b8e5be9b:/opt/spark/work-dir$ id -g

1000

spark@6f41b8e5be9b:/opt/spark/work-dir$ whoami

spark

spark@6f41b8e5be9b:/opt/spark/work-dir$ echo $LD_PRELOAD

/usr/lib/libnss_wrapper.so

spark@6f41b8e5be9b:/opt/spark/work-dir$ echo $NSS_WRAPPER_PASSWD

/tmp/tmp.r5x4SMX35B

spark@6f41b8e5be9b:/opt/spark/work-dir$ cat /tmp/tmp.r5x4SMX35B

spark:x:1000:1000:${SPARK_USER_NAME:-anonymous uid}:/opt/spark:/bin/false

spark@6f41b8e5be9b:/opt/spark/work-dir$ echo $NSS_WRAPPER_GROUP

/tmp/tmp.XcnnYuD68r

spark@6f41b8e5be9b:/opt/spark/work-dir$ cat /tmp/tmp.XcnnYuD68r

spark:x:1000:

```
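The files shown above are ordinary passwd/group lines that nss_wrapper reads instead of the real databases. A minimal bash sketch of generating them, assuming the library lives at `/usr/lib/libnss_wrapper.so` (the path varies by distro and architecture). `write_fake_nss_files` and `maybe_fake_passwd_entry` are hypothetical names, not the upstream `attempt_setup_fake_passwd_entry`, and the sketch writes a plain `anonymous uid` comment field:

```bash
#!/usr/bin/env bash
set -eo pipefail

# Hypothetical helper: write the passwd and group lines nss_wrapper should
# report for a UID/GID that has no entry in /etc/passwd.
write_fake_nss_files() {
  local uid="$1" gid="$2" home="$3" passwd_file="$4" group_file="$5"
  printf 'spark:x:%s:%s:anonymous uid:%s:/bin/false\n' \
    "$uid" "$gid" "$home" > "$passwd_file"
  printf 'spark:x:%s:\n' "$gid" > "$group_file"
}

# Fake an entry only if NSS has no name for the current UID and the wrapper
# library exists (assumed path). Defined here but not invoked in the demo.
maybe_fake_passwd_entry() {
  local wrapper=/usr/lib/libnss_wrapper.so
  if ! getent passwd "$(id -u)" > /dev/null 2>&1 && [ -s "$wrapper" ]; then
    NSS_WRAPPER_PASSWD="$(mktemp)"
    NSS_WRAPPER_GROUP="$(mktemp)"
    write_fake_nss_files "$(id -u)" "$(id -g)" /opt/spark \
      "$NSS_WRAPPER_PASSWD" "$NSS_WRAPPER_GROUP"
    export LD_PRELOAD="$wrapper" NSS_WRAPPER_PASSWD NSS_WRAPPER_GROUP
  fi
}

# Demo: generate the files for UID/GID 1000 into temporary paths.
passwd_file="$(mktemp)"
group_file="$(mktemp)"
write_fake_nss_files 1000 1000 /opt/spark "$passwd_file" "$group_file"
cat "$passwd_file"   # spark:x:1000:1000:anonymous uid:/opt/spark:/bin/false
cat "$group_file"    # spark:x:1000:
```

Because the entries live in temporary files referenced by environment variables, `/etc/passwd` itself can stay read-only.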

### Does this PR introduce _any_ user-facing change?

Yes, setup fake ENV rather than changing `/etc/passwd`.

### How was this patch tested?

1. Without `attempt_setup_fake_passwd_entry`, the user is `I have no name!`

```

# docker run -it --rm --user 1000:1000 spark-test bash

groups: cannot find name for group ID 1000

I have no name!@998110cd5a26:/opt/spark/work-dir$

I have no name!@0fea1d27d67d:/opt/spark/work-dir$ id -u

1000

I have no name!@0fea1d27d67d:/opt/spark/work-dir$ id -g

1000

I have no name!@0fea1d27d67d:/opt/spark/work-dir$ whoami

whoami: cannot find name for user ID 1000

```

2. With `attempt_setup_fake_passwd_entry` manually stubbed into the pass-through branch, the user is `spark`.

2.1 Apply a temporary change to the entrypoint template:

```patch

diff --git a/entrypoint.sh.template b/entrypoint.sh.template

index 08fc925..77d5b04 100644

--- a/entrypoint.sh.template

+++ b/entrypoint.sh.template

@@ -118,6 +118,7 @@ case "$1" in

*)

# Non-spark-on-k8s command provided, proceeding in pass-through mode...

+attempt_setup_fake_passwd_entry

exec "$@"

;;

esac

```

2.2 Build and run the image, specify a random UID/GID 1000

```bash

$ docker build . -t spark-test

$ docker run -it --rm --user 1000:1000 spark-test bash

# the user is set to spark rather than an unknown user

spark@6f41b8e5be9b:/opt/spark/work-dir$

spark@6f41b8e5be9b:/opt/spark/work-dir$ id -u

1000

spark@6f41b8e5be9b:/opt/spark/work-dir$ id -g

1000

spark@6f41b8e5be9b:/opt/spark/work-dir$ whoami

spark

```

```

# NSS env is set right

spark@6f41b8e5be9b:/opt/spark/work-dir$ echo $LD_PRELOAD

/usr/lib/libnss_wrapper.so

spark@6f41b8e5be9b:/opt/spark/work-dir$ echo $NSS_WRAPPER_PASSWD

/tmp/tmp.r5x4SMX35B

spark@6f41b8e5be9b:/opt/spark/work-dir$ cat /tmp/tmp.r5x4SMX35B

spark:x:1000:1000:${SPARK_USER_NAME:-anonymous uid}:/opt/spark:/bin/false

spark@6f41b8e5be9b:/opt/spark/work-dir$ echo $NSS_WRAPPER_GROUP

/tmp/tmp.XcnnYuD68r

spark@6f41b8e5be9b:/opt/spark/work-dir$ cat /tmp/tmp.XcnnYuD68r

spark:x:1000:

```

3. If an existing user (such as `spark` or `root`) is specified, no fake passwd entry is set up

```bash

# docker run -it --rm --user 0 spark-test bash

root@e5bf55d4df22:/opt/spark/work-dir# echo $LD_PRELOAD

```

```bash

# docker run -it --rm spark-test bash

spark@def8d8ca4e7d:/opt/spark/work-dir$ echo $LD_PRELOAD

```

Closes #45 from Yikun/SPARK-43368.

Auth

[spark-docker] branch master updated: [SPARK-43370] Switch spark user only when run driver and executor

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 2dc12d9 [SPARK-43370] Switch spark user only when run driver and executor

2dc12d9 is described below

commit 2dc12d96910710aa6ee2d717c4c723ddd75127a1

Author: Yikun Jiang

AuthorDate: Thu Jun 1 14:36:17 2023 +0800

[SPARK-43370] Switch spark user only when run driver and executor

### What changes were proposed in this pull request?

Switch to the `spark` user only when running the driver or executor.
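The entrypoint change in this commit relies on an unquoted command substitution, `exec $(switch_spark_if_root) /usr/bin/tini ...`: when the container runs as root it expands to `gosu spark`, otherwise to nothing at all. A minimal sketch of that expansion, using a hypothetical `run_as_prefix` that takes the UID as an argument (instead of calling `id -u`) so both branches can be shown; it only builds and prints the command and does not run `gosu` or `tini`:

```bash
#!/usr/bin/env bash

# Hypothetical variant of switch_spark_if_root: the UID is passed in
# rather than read from `id -u`.
run_as_prefix() {
  if [ "$1" -eq 0 ]; then
    echo gosu spark
  fi
}

# Build the command the way the entrypoint does, then print it.
build_cmd() {
  local uid="$1"; shift
  # Deliberately unquoted: an empty prefix must disappear entirely
  # instead of becoming an empty "" argument in front of tini.
  # shellcheck disable=SC2046
  local cmd=( $(run_as_prefix "$uid") /usr/bin/tini -s -- "$@" )
  echo "${cmd[*]}"
}

build_cmd 0 java -version      # gosu spark /usr/bin/tini -s -- java -version
build_cmd 1000 java -version   # /usr/bin/tini -s -- java -version
```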

### Why are the changes needed?

Address DOI comments: question 7 [1]

[1] https://github.com/docker-library/official-images/pull/13089#issuecomment-1533540388

[2] https://github.com/docker-library/official-images/pull/13089#issuecomment-1561793792

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

1. Tested manually:

```

cd ~/spark-docker/3.4.0/scala2.12-java11-ubuntu

$ docker build . -t spark-test

$ docker run -ti spark-test bash

spark@afa78af05cf8:/opt/spark/work-dir$

$ docker run --user root -ti spark-test bash

root@095e0d7651fd:/opt/spark/work-dir#

```

2. CI passed

Closes: https://github.com/apache/spark-docker/pull/44

Closes #43 from Yikun/SPARK-43370.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile | 4

3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile | 4

3.4.0/scala2.12-java11-r-ubuntu/Dockerfile | 4

3.4.0/scala2.12-java11-ubuntu/Dockerfile | 2 ++

3.4.0/scala2.12-java11-ubuntu/entrypoint.sh| 23 +++---

Dockerfile.template| 2 ++

entrypoint.sh.template | 23 +++---

r-python.template | 4

8 files changed, 44 insertions(+), 22 deletions(-)

diff --git a/3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile

index 7734100..0f1962f 100644

--- a/3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-python3-r-ubuntu/Dockerfile

@@ -16,6 +16,8 @@

#

FROM spark:3.4.0-scala2.12-java11-ubuntu

+USER root

+

RUN set -ex; \

apt-get update; \

apt install -y python3 python3-pip; \

@@ -24,3 +26,5 @@ RUN set -ex; \

rm -rf /var/lib/apt/lists/*

ENV R_HOME /usr/lib/R

+

+USER spark

diff --git a/3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile

index 6c12c30..258d806 100644

--- a/3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-python3-ubuntu/Dockerfile

@@ -16,8 +16,12 @@

#

FROM spark:3.4.0-scala2.12-java11-ubuntu

+USER root

+

RUN set -ex; \

apt-get update; \

apt install -y python3 python3-pip; \

rm -rf /var/cache/apt/*; \

rm -rf /var/lib/apt/lists/*

+

+USER spark

diff --git a/3.4.0/scala2.12-java11-r-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-r-ubuntu/Dockerfile

index 24cd41a..4c928c6 100644

--- a/3.4.0/scala2.12-java11-r-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-r-ubuntu/Dockerfile

@@ -16,6 +16,8 @@

#

FROM spark:3.4.0-scala2.12-java11-ubuntu

+USER root

+

RUN set -ex; \

apt-get update; \

apt install -y r-base r-base-dev; \

@@ -23,3 +25,5 @@ RUN set -ex; \

rm -rf /var/lib/apt/lists/*

ENV R_HOME /usr/lib/R

+

+USER spark

diff --git a/3.4.0/scala2.12-java11-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

index 205b399..a680106 100644

--- a/3.4.0/scala2.12-java11-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

@@ -77,4 +77,6 @@ ENV SPARK_HOME /opt/spark

WORKDIR /opt/spark/work-dir

+USER spark

+

ENTRYPOINT [ "/opt/entrypoint.sh" ]

diff --git a/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh b/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

index 716f1af..6def3f9 100755

--- a/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

+++ b/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

@@ -69,6 +69,13 @@ elif ! [ -z ${SPARK_HOME+x} ]; then

SPARK_CLASSPATH="$SPARK_HOME/conf:$SPARK_CLASSPATH";

fi

+# Switch to spark if no USER specified (root by default) otherwise use USER directly

+switch_spark_if_root() {

+ if [ $(id -u) -eq 0 ]; then

+echo gosu spark

+ fi

+}

+

case "$1" in

driver)

shift 1

@@ -78,6 +85,8 @@ case "$1" in

--deploy-mode client

"$@"

)

+# Execute the container CMD under tini for better hygiene

+exec $(switch_spark_if_root) /usr/bin/tini -s -- "${CMD[@]}"

;;

executor)

shift 1

@@ -96,20 +105,12 @@ case "$1" in

--resourceProfileId $SPARK_RESOURCE_PROFILE_ID

--podName $SPARK_EXECUTOR_POD_NAME

[spark-docker] branch master updated: [SPARK-43806] Add awesome-spark-docker.md

This is an automated email from the ASF dual-hosted git repository. yikun pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark-docker.git The following commit(s) were added to refs/heads/master by this push: new 9d4c98c [SPARK-43806] Add awesome-spark-docker.md 9d4c98c is described below commit 9d4c98c62c4ce517e69e65d1f6f7bf412d775b75 Author: Yikun Jiang AuthorDate: Fri May 26 09:53:20 2023 +0800 [SPARK-43806] Add awesome-spark-docker.md ### What changes were proposed in this pull request? Add links to more related images and dockerfile reference. ### Why are the changes needed? Something we talked about in "Spark on Kube Coffe Chats“[1] to add links to more related images and dockerfile reference. Init with [2]. [1] https://lists.apache.org/thread/26gpmlhqhk5cp2fhtzrpl5f61p8jc551 [2] https://github.com/awesome-spark/awesome-spark/blob/main/README.md#docker-images ### Does this PR introduce _any_ user-facing change? Doc only ### How was this patch tested? No Closes #28 from Yikun/awesome-spark-docker. Authored-by: Yikun Jiang Signed-off-by: Yikun Jiang --- awesome-spark-docker.md | 7 +++ 1 file changed, 7 insertions(+) diff --git a/awesome-spark-docker.md b/awesome-spark-docker.md new file mode 100644 index 000..c7bb840 --- /dev/null +++ b/awesome-spark-docker.md @@ -0,0 +1,7 @@ +A curated list of awesome Apache Spark Docker resources. + +- [jupyter/docker-stacks/pyspark-notebook](https://github.com/jupyter/docker-stacks/tree/master/pyspark-notebook) - PySpark with Jupyter Notebook. +- [big-data-europe/docker-spark](https://github.com/big-data-europe/docker-spark) - The standalone cluster and spark applications related Dockerfiles. +- [openeuler/spark](https://github.com/openeuler-mirror/openeuler-docker-images/tree/master/spark) - Dockerfile reference for dnf/yum based OS. 
+- [GoogleCloudPlatform/spark-on-k8s-operator](https://github.com/GoogleCloudPlatform/spark-on-k8s-operator) - Kubernetes operator for managing the lifecycle of Apache Spark applications on Kubernetes.

+

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark-docker] branch master updated: [SPARK-43367] Recover sh in dockerfile

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new ce3e122 [SPARK-43367] Recover sh in dockerfile

ce3e122 is described below

commit ce3e12266ef82264b814f6f7823165f7c7ae215a

Author: Yikun Jiang

AuthorDate: Thu May 25 19:07:55 2023 +0800

[SPARK-43367] Recover sh in dockerfile

### What changes were proposed in this pull request?

Recover `sh`. We removed `sh` (replacing it with a symlink to `bash`) because of https://github.com/apache-spark-on-k8s/spark/pull/444/files#r134075892 . The `SPARK_DRIVER_JAVA_OPTS` related code has since moved to `entrypoint.sh`, which starts with `#!/bin/bash`, so this workaround is no longer needed.

See also:

[1] https://github.com/docker-library/official-images/pull/13089#issuecomment-1533540388

[2] https://github.com/docker-library/official-images/pull/13089#issuecomment-1561793792

### Why are the changes needed?

Recover sh

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

CI passed

Closes #41 from Yikun/SPARK-43367.
Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

3.4.0/scala2.12-java11-ubuntu/Dockerfile | 2 --

Dockerfile.template | 2 --

2 files changed, 4 deletions(-)

diff --git a/3.4.0/scala2.12-java11-ubuntu/Dockerfile b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

index 11f997f..205b399 100644

--- a/3.4.0/scala2.12-java11-ubuntu/Dockerfile

+++ b/3.4.0/scala2.12-java11-ubuntu/Dockerfile

@@ -32,8 +32,6 @@ RUN set -ex; \

chmod g+w /opt/spark/work-dir; \

touch /opt/spark/RELEASE; \

chown -R spark:spark /opt/spark; \

-rm /bin/sh; \

-ln -sv /bin/bash /bin/sh; \

echo "auth required pam_wheel.so use_uid" >> /etc/pam.d/su; \

chgrp root /etc/passwd && chmod ug+rw /etc/passwd; \

rm -rf /var/cache/apt/*; \

diff --git a/Dockerfile.template b/Dockerfile.template

index 6e85cd3..8b13e4a 100644

--- a/Dockerfile.template

+++ b/Dockerfile.template

@@ -32,8 +32,6 @@ RUN set -ex; \

chmod g+w /opt/spark/work-dir; \

touch /opt/spark/RELEASE; \

chown -R spark:spark /opt/spark; \

-rm /bin/sh; \

-ln -sv /bin/bash /bin/sh; \

echo "auth required pam_wheel.so use_uid" >> /etc/pam.d/su; \

chgrp root /etc/passwd && chmod ug+rw /etc/passwd; \

rm -rf /var/cache/apt/*; \
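The reasoning above relies on the fact that, when a script is executed directly, its shebang line (not the `/bin/sh` symlink) selects the interpreter. A minimal sketch, assuming `bash` is installed at `/bin/bash` and the temporary directory allows execution; the temporary script and its contents are hypothetical:

```shell
#!/bin/bash
# Sketch: a file starting with "#!/bin/bash" runs under bash when executed
# directly, whatever /bin/sh points to (it may be dash or bash).
# The temporary script and its contents are hypothetical.

script=$(mktemp)
cat > "$script" <<'EOF'
#!/bin/bash
# Arrays are a bash feature that POSIX sh does not guarantee.
opts=( "a b" "c" )
echo "${#opts[@]}"
EOF
chmod +x "$script"

# Run the file itself (not "sh file"), so the kernel honours the shebang.
result=$("$script")
rm -f "$script"

echo "array elements seen by the script: $result"
# Show what /bin/sh resolves to on this system, for comparison.
readlink -f /bin/sh
```

On an image where `/bin/sh` is dash, the script should still report 2 elements, which is why the `rm /bin/sh; ln -sv /bin/bash /bin/sh` step can be dropped once the bash-specific logic lives in a `#!/bin/bash` script.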

[spark-docker] branch master updated: [SPARK-43793] Fix SPARK_EXECUTOR_JAVA_OPTS assignment bug

This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new 006e8fa [SPARK-43793] Fix SPARK_EXECUTOR_JAVA_OPTS assignment bug

006e8fa is described below

commit 006e8fade69f148a05fc73f591f52c7678e48f04

Author: Yikun Jiang

AuthorDate: Thu May 25 19:05:26 2023 +0800

[SPARK-43793] Fix SPARK_EXECUTOR_JAVA_OPTS assignment bug

### What changes were proposed in this pull request?

The previous code was susceptible to a few bugs, particularly around newlines in values:

```
env | grep SPARK_JAVA_OPT_ | sort -t_ -k4 -n | sed 's/[^=]*=\(.*\)/\1/g' > /tmp/java_opts.txt
readarray -t SPARK_EXECUTOR_JAVA_OPTS < /tmp/java_opts.txt
```
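To make the newline problem concrete, here is a minimal sketch comparing the two approaches on a single option whose value spans two lines. The option value `-Dmsg=...` is made up, and process substitution stands in for the `/tmp/java_opts.txt` file only to keep the example self-contained:

```shell
#!/bin/bash
# Sketch: collect SPARK_JAVA_OPT_* values the old way and the new way,
# using a hypothetical option whose value contains an embedded newline.
export SPARK_JAVA_OPT_0=$'-Dmsg=line1\nline2'

# Old approach: `env` prints the value over two lines; grep keeps only the
# first one (the line with the variable name), so "line2" is silently lost.
readarray -t OLD_OPTS < <(env | grep SPARK_JAVA_OPT_ | sort -t_ -k4 -n | sed 's/[^=]*=\(.*\)/\1/g')

# New approach: list variable names by prefix, then expand each one
# indirectly, so every value is copied into the array unchanged.
NEW_OPTS=()
for v in "${!SPARK_JAVA_OPT_@}"; do
  NEW_OPTS+=( "${!v}" )
done

printf 'old[0]=<%s>\n' "${OLD_OPTS[0]}"
printf 'new[0]=<%s>\n' "${NEW_OPTS[0]}"
```

Here `old[0]` ends at `line1`, while `new[0]` keeps both lines. A continuation line that itself contained `SPARK_JAVA_OPT_` would be worse: grep would keep it, and it would end up as an extra array element.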

### Why are the changes needed?

To address question 6 of the DOI (Docker Official Images) review comments:

https://github.com/docker-library/official-images/pull/13089#issuecomment-1533540388

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

1. Tested manually:

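```
export SPARK_JAVA_OPT_0="foo=bar"
export SPARK_JAVA_OPT_1="foo1=bar1"
# Collect the value of every variable whose name starts with SPARK_JAVA_OPT_
for v in "${!SPARK_JAVA_OPT_@}"; do
  SPARK_EXECUTOR_JAVA_OPTS+=( "${!v}" )
done
# Print each array element on its own line
for v in "${SPARK_EXECUTOR_JAVA_OPTS[@]}"; do
  echo "$v"
done
# foo=bar
# foo1=bar1
```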

2. CI passed
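When the collected array is expanded, quoting `"${SPARK_EXECUTOR_JAVA_OPTS[@]}"` is what keeps an option containing spaces as a single argument. A minimal sketch, with hypothetical option values:

```shell
#!/bin/bash
# Sketch: build the array as in the new entrypoint loop, then compare quoted
# and unquoted expansion. The option values are hypothetical.
export SPARK_JAVA_OPT_0="-Dfoo=bar"
export SPARK_JAVA_OPT_1="-Dgreeting=hello world"

SPARK_EXECUTOR_JAVA_OPTS=()
for v in "${!SPARK_JAVA_OPT_@}"; do
  SPARK_EXECUTOR_JAVA_OPTS+=( "${!v}" )
done

# Quoted: each array element stays one word.
quoted=( "${SPARK_EXECUTOR_JAVA_OPTS[@]}" )
# Unquoted: word splitting breaks "-Dgreeting=hello world" into two words.
unquoted=( ${SPARK_EXECUTOR_JAVA_OPTS[@]} )

echo "quoted: ${#quoted[@]} words, unquoted: ${#unquoted[@]} words"
printf '<%s>\n' "${quoted[@]}"
```

With these values the quoted form yields 2 words and the unquoted form 3, so a command built from the unquoted expansion would receive `world` as a separate argument.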

Closes #42 from Yikun/SPARK-43793.

Authored-by: Yikun Jiang

Signed-off-by: Yikun Jiang

---

3.4.0/scala2.12-java11-ubuntu/entrypoint.sh | 5 +++--

entrypoint.sh.template | 5 +++--

2 files changed, 6 insertions(+), 4 deletions(-)

diff --git a/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh b/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

index 4bb1557..716f1af 100755

--- a/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

+++ b/3.4.0/scala2.12-java11-ubuntu/entrypoint.sh

@@ -38,8 +38,9 @@ if [ -z "$JAVA_HOME" ]; then

fi

SPARK_CLASSPATH="$SPARK_CLASSPATH:${SPARK_HOME}/jars/*"

-env | grep SPARK_JAVA_OPT_ | sort -t_ -k4 -n | sed 's/[^=]*=\(.*\)/\1/g' > /tmp/java_opts.txt

-readarray -t SPARK_EXECUTOR_JAVA_OPTS < /tmp/java_opts.txt

+for v in "${!SPARK_JAVA_OPT_@}"; do

+SPARK_EXECUTOR_JAVA_OPTS+=( "${!v}" )

+done

if [ -n "$SPARK_EXTRA_CLASSPATH" ]; then

SPARK_CLASSPATH="$SPARK_CLASSPATH:$SPARK_EXTRA_CLASSPATH"

diff --git a/entrypoint.sh.template b/entrypoint.sh.template

index 4bb1557..716f1af 100644

--- a/entrypoint.sh.template

+++ b/entrypoint.sh.template

@@ -38,8 +38,9 @@ if [ -z "$JAVA_HOME" ]; then

fi

SPARK_CLASSPATH="$SPARK_CLASSPATH:${SPARK_HOME}/jars/*"

-env | grep SPARK_JAVA_OPT_ | sort -t_ -k4 -n | sed 's/[^=]*=\(.*\)/\1/g' > /tmp/java_opts.txt

-readarray -t SPARK_EXECUTOR_JAVA_OPTS < /tmp/java_opts.txt

+for v in "${!SPARK_JAVA_OPT_@}"; do

+SPARK_EXECUTOR_JAVA_OPTS+=( "${!v}" )

+done

if [ -n "$SPARK_EXTRA_CLASSPATH" ]; then

SPARK_CLASSPATH="$SPARK_CLASSPATH:$SPARK_EXTRA_CLASSPATH"

-