[jira] [Updated] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HIVE-26131:

--

Labels: pull-request-available (was: )

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Labels: pull-request-available

> Fix For: 4.0.0-alpha-2

>

> Attachments: image-2022-04-12-13-07-09-647.png

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

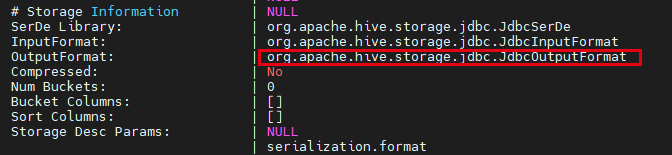

> You can see incorrect OuptputFormat info:

> !image-2022-04-12-13-07-09-647.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?focusedWorklogId=755609=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755609

]

ASF GitHub Bot logged work on HIVE-26131:

-

Author: ASF GitHub Bot

Created on: 12/Apr/22 05:33

Start Date: 12/Apr/22 05:33

Worklog Time Spent: 10m

Work Description: zhangbutao opened a new pull request, #3200:

URL: https://github.com/apache/hive/pull/3200

### What changes were proposed in this pull request?

Use correct OutputFormat when describing jdbc connector table

### Why are the changes needed?

Incorrect OutputFormat when describing jdbc connector table

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Small fix, just local cluster test.

After the fixing:

Issue Time Tracking

---

Worklog Id: (was: 755609)

Remaining Estimate: 0h

Time Spent: 10m

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Fix For: 4.0.0-alpha-2

>

> Attachments: image-2022-04-12-13-07-09-647.png

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

> You can see incorrect OuptputFormat info:

> !image-2022-04-12-13-07-09-647.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

zhangbutao updated HIVE-26131:

--

Attachment: (was: image-2022-04-12-13-07-36-876.png)

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Fix For: 4.0.0-alpha-2

>

> Attachments: image-2022-04-12-13-07-09-647.png

>

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

> You can see incorrect OuptputFormat info:

> !image-2022-04-12-13-07-09-647.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work started] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Work on HIVE-26131 started by zhangbutao.

-

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Attachments: image-2022-04-12-13-07-09-647.png,

> image-2022-04-12-13-07-36-876.png

>

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

> You can see incorrect OuptputFormat info:

> !image-2022-04-12-13-07-09-647.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

zhangbutao updated HIVE-26131:

--

Fix Version/s: 4.0.0-alpha-2

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Fix For: 4.0.0-alpha-2

>

> Attachments: image-2022-04-12-13-07-09-647.png,

> image-2022-04-12-13-07-36-876.png

>

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

> You can see incorrect OuptputFormat info:

> !image-2022-04-12-13-07-09-647.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

zhangbutao updated HIVE-26131:

--

Description:

Step to repro:

{code:java}

CREATE CONNECTOR mysql_qtest

TYPE 'mysql'

URL 'jdbc:mysql://localhost:3306/testdb'

WITH DCPROPERTIES (

"hive.sql.dbcp.username"="root",

"hive.sql.dbcp.password"="");

CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

DBPROPERTIES("connector.remoteDbName"="testdb");

describe formatted db_mysql.test;{code}

You can see incorrect OuptputFormat info:

!image-2022-04-12-13-07-09-647.png!

was:

Step to repro:

{code:java}

CREATE CONNECTOR mysql_qtest

TYPE 'mysql'

URL 'jdbc:mysql://localhost:3306/testdb'

WITH DCPROPERTIES (

"hive.sql.dbcp.username"="root",

"hive.sql.dbcp.password"="");

CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

DBPROPERTIES("connector.remoteDbName"="testdb");

describe formatted db_mysql.test;{code}

You can see incorrect

!image-2022-04-12-13-07-09-647.png!

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Attachments: image-2022-04-12-13-07-09-647.png,

> image-2022-04-12-13-07-36-876.png

>

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

> You can see incorrect OuptputFormat info:

> !image-2022-04-12-13-07-09-647.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

zhangbutao updated HIVE-26131:

--

Description:

Step to repro:

{code:java}

CREATE CONNECTOR mysql_qtest

TYPE 'mysql'

URL 'jdbc:mysql://localhost:3306/testdb'

WITH DCPROPERTIES (

"hive.sql.dbcp.username"="root",

"hive.sql.dbcp.password"="");

CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

DBPROPERTIES("connector.remoteDbName"="testdb");

describe formatted db_mysql.test;{code}

You can see incorrect

!image-2022-04-12-13-07-09-647.png!

was:

Step to repro:

{code:java}

CREATE CONNECTOR mysql_qtest

TYPE 'mysql'

URL 'jdbc:mysql://localhost:3306/testdb'

WITH DCPROPERTIES (

"hive.sql.dbcp.username"="root",

"hive.sql.dbcp.password"="");

CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

DBPROPERTIES("connector.remoteDbName"="testdb");

describe formatted db_mysql.test;{code}

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Attachments: image-2022-04-12-13-07-09-647.png,

> image-2022-04-12-13-07-36-876.png

>

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

> You can see incorrect

> !image-2022-04-12-13-07-09-647.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

zhangbutao updated HIVE-26131:

--

Attachment: image-2022-04-12-13-07-09-647.png

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Attachments: image-2022-04-12-13-07-09-647.png,

> image-2022-04-12-13-07-36-876.png

>

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

zhangbutao updated HIVE-26131:

--

Attachment: image-2022-04-12-13-07-36-876.png

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

> Attachments: image-2022-04-12-13-07-09-647.png,

> image-2022-04-12-13-07-36-876.png

>

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

> You can see incorrect

> !image-2022-04-12-13-07-09-647.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Assigned] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

zhangbutao reassigned HIVE-26131:

-

Assignee: zhangbutao

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Minor

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HIVE-26131) Incorrect OutputFormat when describing jdbc connector table

[

https://issues.apache.org/jira/browse/HIVE-26131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

zhangbutao updated HIVE-26131:

--

Description:

Step to repro:

{code:java}

CREATE CONNECTOR mysql_qtest

TYPE 'mysql'

URL 'jdbc:mysql://localhost:3306/testdb'

WITH DCPROPERTIES (

"hive.sql.dbcp.username"="root",

"hive.sql.dbcp.password"="");

CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

DBPROPERTIES("connector.remoteDbName"="testdb");

describe formatted db_mysql.test;{code}

> Incorrect OutputFormat when describing jdbc connector table

>

>

> Key: HIVE-26131

> URL: https://issues.apache.org/jira/browse/HIVE-26131

> Project: Hive

> Issue Type: Bug

> Components: JDBC storage handler

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Priority: Minor

>

> Step to repro:

> {code:java}

> CREATE CONNECTOR mysql_qtest

> TYPE 'mysql'

> URL 'jdbc:mysql://localhost:3306/testdb'

> WITH DCPROPERTIES (

> "hive.sql.dbcp.username"="root",

> "hive.sql.dbcp.password"="");

> CREATE REMOTE DATABASE db_mysql USING mysql_qtest with

> DBPROPERTIES("connector.remoteDbName"="testdb");

> describe formatted db_mysql.test;{code}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-21456) Hive Metastore Thrift over HTTP

[ https://issues.apache.org/jira/browse/HIVE-21456?focusedWorklogId=755376=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755376 ] ASF GitHub Bot logged work on HIVE-21456: - Author: ASF GitHub Bot Created on: 11/Apr/22 18:49 Start Date: 11/Apr/22 18:49 Worklog Time Spent: 10m Work Description: sourabh912 commented on code in PR #3105: URL: https://github.com/apache/hive/pull/3105#discussion_r847635937 ## standalone-metastore/pom.xml: ## @@ -361,6 +362,12 @@ runtime true + Hive Metastore Thrift over HTTP > --- > > Key: HIVE-21456 > URL: https://issues.apache.org/jira/browse/HIVE-21456 > Project: Hive > Issue Type: New Feature > Components: Metastore, Standalone Metastore >Reporter: Amit Khanna >Assignee: Sourabh Goyal >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21456.2.patch, HIVE-21456.3.patch, > HIVE-21456.4.patch, HIVE-21456.patch > > Time Spent: 5h > Remaining Estimate: 0h > > Hive Metastore currently doesn't have support for HTTP transport because of > which it is not possible to access it via Knox. Adding support for Thrift > over HTTP transport will allow the clients to access via Knox -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Work logged] (HIVE-21456) Hive Metastore Thrift over HTTP

[

https://issues.apache.org/jira/browse/HIVE-21456?focusedWorklogId=755368=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755368

]

ASF GitHub Bot logged work on HIVE-21456:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 18:20

Start Date: 11/Apr/22 18:20

Worklog Time Spent: 10m

Work Description: sourabh912 commented on code in PR #3105:

URL: https://github.com/apache/hive/pull/3105#discussion_r847613439

##

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/HiveMetaStore.java:

##

@@ -343,21 +366,162 @@ public static void startMetaStore(int port,

HadoopThriftAuthBridge bridge,

startMetaStore(port, bridge, conf, false, null);

}

- /**

- * Start Metastore based on a passed {@link HadoopThriftAuthBridge}.

- *

- * @param port The port on which the Thrift server will start to serve

- * @param bridge

- * @param conf Configuration overrides

- * @param startMetaStoreThreads Start the background threads (initiator,

cleaner, statsupdater, etc.)

- * @param startedBackgroundThreads If startMetaStoreThreads is true, this

AtomicBoolean will be switched to true,

- * when all of the background threads are scheduled. Useful for testing

purposes to wait

- * until the MetaStore is fully initialized.

- * @throws Throwable

- */

- public static void startMetaStore(int port, HadoopThriftAuthBridge bridge,

- Configuration conf, boolean startMetaStoreThreads, AtomicBoolean

startedBackgroundThreads) throws Throwable {

-isMetaStoreRemote = true;

+ public static boolean isThriftServerRunning() {

+return thriftServer != null && thriftServer.isRunning();

+ }

+

+ // TODO: Is it worth trying to use a server that supports HTTP/2?

+ // Does the Thrift http client support this?

+

+ public static ThriftServer startHttpMetastore(int port, Configuration conf)

+ throws Exception {

+LOG.info("Attempting to start http metastore server on port: {}", port);

Review Comment:

@pvary : Thanks for the pointers. I have addressed disabling TRACE for HMS

http server.

Issue Time Tracking

---

Worklog Id: (was: 755368)

Time Spent: 4h 50m (was: 4h 40m)

> Hive Metastore Thrift over HTTP

> ---

>

> Key: HIVE-21456

> URL: https://issues.apache.org/jira/browse/HIVE-21456

> Project: Hive

> Issue Type: New Feature

> Components: Metastore, Standalone Metastore

>Reporter: Amit Khanna

>Assignee: Sourabh Goyal

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-21456.2.patch, HIVE-21456.3.patch,

> HIVE-21456.4.patch, HIVE-21456.patch

>

> Time Spent: 4h 50m

> Remaining Estimate: 0h

>

> Hive Metastore currently doesn't have support for HTTP transport because of

> which it is not possible to access it via Knox. Adding support for Thrift

> over HTTP transport will allow the clients to access via Knox

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-21456) Hive Metastore Thrift over HTTP

[

https://issues.apache.org/jira/browse/HIVE-21456?focusedWorklogId=755367=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755367

]

ASF GitHub Bot logged work on HIVE-21456:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 18:19

Start Date: 11/Apr/22 18:19

Worklog Time Spent: 10m

Work Description: sourabh912 commented on code in PR #3105:

URL: https://github.com/apache/hive/pull/3105#discussion_r847612658

##

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/HmsThriftHttpServlet.java:

##

@@ -0,0 +1,116 @@

+/* * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.hive.metastore;

+

+import java.io.IOException;

+import java.security.PrivilegedExceptionAction;

+import java.util.Enumeration;

+

+import javax.servlet.ServletException;

+import javax.servlet.http.HttpServletRequest;

+import javax.servlet.http.HttpServletResponse;

+import org.apache.hadoop.hive.metastore.utils.MetaStoreUtils;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import org.apache.hadoop.security.UserGroupInformation;

+import org.apache.thrift.TProcessor;

+import org.apache.thrift.protocol.TProtocolFactory;

+import org.apache.thrift.server.TServlet;

+

+public class HmsThriftHttpServlet extends TServlet {

+

+ private static final Logger LOG = LoggerFactory

+ .getLogger(HmsThriftHttpServlet.class);

+

+ private static final String X_USER = MetaStoreUtils.USER_NAME_HTTP_HEADER;

+

+ private final boolean isSecurityEnabled;

+

+ public HmsThriftHttpServlet(TProcessor processor,

+ TProtocolFactory inProtocolFactory, TProtocolFactory outProtocolFactory)

{

+super(processor, inProtocolFactory, outProtocolFactory);

+// This should ideally be reveiving an instance of the Configuration which

is used for the check

+isSecurityEnabled = UserGroupInformation.isSecurityEnabled();

+ }

+

+ public HmsThriftHttpServlet(TProcessor processor,

+ TProtocolFactory protocolFactory) {

+super(processor, protocolFactory);

+isSecurityEnabled = UserGroupInformation.isSecurityEnabled();

+ }

+

+ @Override

+ protected void doPost(HttpServletRequest request,

+ HttpServletResponse response) throws ServletException, IOException {

+

+Enumeration headerNames = request.getHeaderNames();

+if (LOG.isDebugEnabled()) {

+ LOG.debug("Logging headers in request");

+ while (headerNames.hasMoreElements()) {

+String headerName = headerNames.nextElement();

+LOG.debug("Header: [{}], Value: [{}]", headerName,

+request.getHeader(headerName));

+ }

+}

+String userFromHeader = request.getHeader(X_USER);

+if (userFromHeader == null || userFromHeader.isEmpty()) {

+ LOG.error("No user header: {} found", X_USER);

+ response.sendError(HttpServletResponse.SC_FORBIDDEN,

+ "User Header missing");

+ return;

+}

+

+// TODO: These should ideally be in some kind of a Cache with Weak

referencse.

+// If HMS were to set up some kind of a session, this would go into the

session by having

+// this filter work with a custom Processor / or set the username into the

session

+// as is done for HS2.

+// In case of HMS, it looks like each request is independent, and there is

no session

+// information, so the UGI needs to be set up in the Connection layer

itself.

+UserGroupInformation clientUgi;

+// Temporary, and useless for now. Here only to allow this to work on an

otherwise kerberized

+// server.

+if (isSecurityEnabled) {

+ LOG.info("Creating proxy user for: {}", userFromHeader);

+ clientUgi = UserGroupInformation.createProxyUser(userFromHeader,

UserGroupInformation.getLoginUser());

+} else {

+ LOG.info("Creating remote user for: {}", userFromHeader);

+ clientUgi = UserGroupInformation.createRemoteUser(userFromHeader);

+}

+

+

+PrivilegedExceptionAction action = new

PrivilegedExceptionAction() {

+ @Override

+ public Void run() throws Exception {

+HmsThriftHttpServlet.super.doPost(request, response);

+return

[jira] [Work logged] (HIVE-21456) Hive Metastore Thrift over HTTP

[

https://issues.apache.org/jira/browse/HIVE-21456?focusedWorklogId=755363=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755363

]

ASF GitHub Bot logged work on HIVE-21456:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 18:15

Start Date: 11/Apr/22 18:15

Worklog Time Spent: 10m

Work Description: sourabh912 commented on code in PR #3105:

URL: https://github.com/apache/hive/pull/3105#discussion_r847609721

##

standalone-metastore/metastore-server/src/test/java/org/apache/hadoop/hive/metastore/TestRemoteHiveHttpMetaStore.java:

##

@@ -0,0 +1,47 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.hadoop.hive.metastore;

+

+import org.apache.hadoop.hive.metastore.annotation.MetastoreUnitTest;

+import org.junit.experimental.categories.Category;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import org.apache.hadoop.hive.metastore.annotation.MetastoreCheckinTest;

+import org.apache.hadoop.hive.metastore.conf.MetastoreConf;

+import org.apache.hadoop.hive.metastore.conf.MetastoreConf.ConfVars;

+

+@Category(MetastoreCheckinTest.class)

+public class TestRemoteHiveHttpMetaStore extends TestRemoteHiveMetaStore {

+

+ private static final Logger LOG =

LoggerFactory.getLogger(TestRemoteHiveHttpMetaStore.class);

+

+ @Override

+ public void start() throws Exception {

+MetastoreConf.setVar(conf, ConfVars.THRIFT_TRANSPORT_MODE, "http");

+LOG.info("Attempting to start test remote metastore in http mode");

+super.start();

+LOG.info("Successfully started test remote metastore in http mode");

+ }

+

+ @Override

+ protected HiveMetaStoreClient createClient() throws Exception {

+MetastoreConf.setVar(conf,

ConfVars.METASTORE_CLIENT_THRIFT_TRANSPORT_MODE, "http");

+return super.createClient();

+ }

+}

Review Comment:

Done

##

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/HmsThriftHttpServlet.java:

##

@@ -0,0 +1,116 @@

+/* * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.hive.metastore;

+

+import java.io.IOException;

+import java.security.PrivilegedExceptionAction;

+import java.util.Enumeration;

+

+import javax.servlet.ServletException;

+import javax.servlet.http.HttpServletRequest;

+import javax.servlet.http.HttpServletResponse;

+import org.apache.hadoop.hive.metastore.utils.MetaStoreUtils;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import org.apache.hadoop.security.UserGroupInformation;

+import org.apache.thrift.TProcessor;

+import org.apache.thrift.protocol.TProtocolFactory;

+import org.apache.thrift.server.TServlet;

+

+public class HmsThriftHttpServlet extends TServlet {

+

+ private static final Logger LOG = LoggerFactory

+ .getLogger(HmsThriftHttpServlet.class);

+

+ private static final String X_USER = MetaStoreUtils.USER_NAME_HTTP_HEADER;

+

+ private final boolean isSecurityEnabled;

+

+ public HmsThriftHttpServlet(TProcessor processor,

+ TProtocolFactory inProtocolFactory, TProtocolFactory outProtocolFactory)

{

+super(processor, inProtocolFactory, outProtocolFactory);

+// This should ideally be reveiving an instance of the Configuration which

is used for the check

+isSecurityEnabled = UserGroupInformation.isSecurityEnabled();

+ }

+

+ public HmsThriftHttpServlet(TProcessor

[jira] [Work logged] (HIVE-21456) Hive Metastore Thrift over HTTP

[ https://issues.apache.org/jira/browse/HIVE-21456?focusedWorklogId=755362=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755362 ] ASF GitHub Bot logged work on HIVE-21456: - Author: ASF GitHub Bot Created on: 11/Apr/22 18:14 Start Date: 11/Apr/22 18:14 Worklog Time Spent: 10m Work Description: sourabh912 commented on code in PR #3105: URL: https://github.com/apache/hive/pull/3105#discussion_r847609041 ## standalone-metastore/pom.xml: ## @@ -361,6 +362,12 @@ runtime true + Hive Metastore Thrift over HTTP > --- > > Key: HIVE-21456 > URL: https://issues.apache.org/jira/browse/HIVE-21456 > Project: Hive > Issue Type: New Feature > Components: Metastore, Standalone Metastore >Reporter: Amit Khanna >Assignee: Sourabh Goyal >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21456.2.patch, HIVE-21456.3.patch, > HIVE-21456.4.patch, HIVE-21456.patch > > Time Spent: 4h 20m > Remaining Estimate: 0h > > Hive Metastore currently doesn't have support for HTTP transport because of > which it is not possible to access it via Knox. Adding support for Thrift > over HTTP transport will allow the clients to access via Knox -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Work logged] (HIVE-21456) Hive Metastore Thrift over HTTP

[

https://issues.apache.org/jira/browse/HIVE-21456?focusedWorklogId=755360=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755360

]

ASF GitHub Bot logged work on HIVE-21456:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 18:14

Start Date: 11/Apr/22 18:14

Worklog Time Spent: 10m

Work Description: sourabh912 commented on code in PR #3105:

URL: https://github.com/apache/hive/pull/3105#discussion_r847608730

##

itests/hive-unit/src/test/java/org/apache/hive/jdbc/TestSSL.java:

##

@@ -437,15 +439,36 @@ public void testConnectionWrongCertCN() throws Exception {

* Test HMS server with SSL

* @throws Exception

*/

+ @Ignore

@Test

public void testMetastoreWithSSL() throws Exception {

testSSLHMS(true);

}

+ /**

+ * Test HMS server with Http + SSL

+ * @throws Exception

+ */

+ @Test

+ public void testMetastoreWithHttps() throws Exception {

+// MetastoreConf.setBoolVar(conf,

MetastoreConf.ConfVars.EVENT_DB_NOTIFICATION_API_AUTH, false);

+//MetastoreConf.setVar(conf,

MetastoreConf.ConfVars.METASTORE_CLIENT_TRANSPORT_MODE, "http");

+SSLTestUtils.setMetastoreHttpsConf(conf);

+MetastoreConf.setVar(conf,

MetastoreConf.ConfVars.SSL_TRUSTMANAGERFACTORY_ALGORITHM,

+KEY_MANAGER_FACTORY_ALGORITHM);

+MetastoreConf.setVar(conf, MetastoreConf.ConfVars.SSL_TRUSTSTORE_TYPE,

KEY_STORE_TRUST_STORE_TYPE);

+MetastoreConf.setVar(conf, MetastoreConf.ConfVars.SSL_KEYSTORE_TYPE,

KEY_STORE_TRUST_STORE_TYPE);

+MetastoreConf.setVar(conf,

MetastoreConf.ConfVars.SSL_KEYMANAGERFACTORY_ALGORITHM,

+KEY_MANAGER_FACTORY_ALGORITHM);

+

+testSSLHMS(false);

Review Comment:

Thanks for pointing it out. I am setting the conf

`MetastoreConf.ConfVars.SSL_KEYSTORE_TYPE` in testSSLHMS(false) now.

Issue Time Tracking

---

Worklog Id: (was: 755360)

Time Spent: 4h 10m (was: 4h)

> Hive Metastore Thrift over HTTP

> ---

>

> Key: HIVE-21456

> URL: https://issues.apache.org/jira/browse/HIVE-21456

> Project: Hive

> Issue Type: New Feature

> Components: Metastore, Standalone Metastore

>Reporter: Amit Khanna

>Assignee: Sourabh Goyal

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-21456.2.patch, HIVE-21456.3.patch,

> HIVE-21456.4.patch, HIVE-21456.patch

>

> Time Spent: 4h 10m

> Remaining Estimate: 0h

>

> Hive Metastore currently doesn't have support for HTTP transport because of

> which it is not possible to access it via Knox. Adding support for Thrift

> over HTTP transport will allow the clients to access via Knox

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-26102) Implement DELETE statements for Iceberg tables

[

https://issues.apache.org/jira/browse/HIVE-26102?focusedWorklogId=755303=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755303

]

ASF GitHub Bot logged work on HIVE-26102:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 16:37

Start Date: 11/Apr/22 16:37

Worklog Time Spent: 10m

Work Description: pvary commented on code in PR #3131:

URL: https://github.com/apache/hive/pull/3131#discussion_r847528556

##

ql/src/java/org/apache/hadoop/hive/ql/io/IOContext.java:

##

@@ -187,6 +188,14 @@ public void parseRecordIdentifier(Configuration

configuration) {

}

}

+ public void parsePositionDeleteInfo(Configuration configuration) {

+this.pdi = PositionDeleteInfo.parseFromConf(configuration);

Review Comment:

Would it worth to set the `pdi` fields one-by-one instead of creating a new

object for every row?

Issue Time Tracking

---

Worklog Id: (was: 755303)

Time Spent: 17.5h (was: 17h 20m)

> Implement DELETE statements for Iceberg tables

> --

>

> Key: HIVE-26102

> URL: https://issues.apache.org/jira/browse/HIVE-26102

> Project: Hive

> Issue Type: New Feature

>Reporter: Marton Bod

>Assignee: Marton Bod

>Priority: Major

> Labels: pull-request-available

> Time Spent: 17.5h

> Remaining Estimate: 0h

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-26102) Implement DELETE statements for Iceberg tables

[ https://issues.apache.org/jira/browse/HIVE-26102?focusedWorklogId=755302=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755302 ] ASF GitHub Bot logged work on HIVE-26102: - Author: ASF GitHub Bot Created on: 11/Apr/22 16:34 Start Date: 11/Apr/22 16:34 Worklog Time Spent: 10m Work Description: pvary commented on code in PR #3131: URL: https://github.com/apache/hive/pull/3131#discussion_r847525880 ## ql/src/java/org/apache/hadoop/hive/ql/exec/MapOperator.java: ## @@ -673,7 +674,31 @@ private String toErrorMessage(Writable value, Object row, ObjectInspector inspec ctx.getIoCxt().setRecordIdentifier(null);//so we don't accidentally cache the value; shouldn't //happen since IO layer either knows how to produce ROW__ID or not - but to be safe } - break; + break; +case PARTITION_SPEC_ID: Review Comment: Ok.. I would have accepted the change in the `Deserializer` for this, but I do not see how can we extend the `VirtualColumn` to allow columns from the Deserializer... Any ideas are welcome, until then we will work with this Issue Time Tracking --- Worklog Id: (was: 755302) Time Spent: 17h 20m (was: 17h 10m) > Implement DELETE statements for Iceberg tables > -- > > Key: HIVE-26102 > URL: https://issues.apache.org/jira/browse/HIVE-26102 > Project: Hive > Issue Type: New Feature >Reporter: Marton Bod >Assignee: Marton Bod >Priority: Major > Labels: pull-request-available > Time Spent: 17h 20m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Work logged] (HIVE-26102) Implement DELETE statements for Iceberg tables

[

https://issues.apache.org/jira/browse/HIVE-26102?focusedWorklogId=755298=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755298

]

ASF GitHub Bot logged work on HIVE-26102:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 16:23

Start Date: 11/Apr/22 16:23

Worklog Time Spent: 10m

Work Description: pvary commented on code in PR #3131:

URL: https://github.com/apache/hive/pull/3131#discussion_r847515950

##

iceberg/iceberg-handler/src/main/java/org/apache/iceberg/mr/mapreduce/IcebergInputFormat.java:

##

@@ -468,14 +475,17 @@ private CloseableIterable newOrcIterable(InputFile

inputFile, FileScanTask ta

Set idColumns = spec.identitySourceIds();

Schema partitionSchema = TypeUtil.select(expectedSchema, idColumns);

boolean projectsIdentityPartitionColumns =

!partitionSchema.columns().isEmpty();

- if (projectsIdentityPartitionColumns) {

+ if (expectedSchema.findField(MetadataColumns.PARTITION_COLUMN_ID) !=

null) {

Review Comment:

Why is this change needed?

Issue Time Tracking

---

Worklog Id: (was: 755298)

Time Spent: 17h 10m (was: 17h)

> Implement DELETE statements for Iceberg tables

> --

>

> Key: HIVE-26102

> URL: https://issues.apache.org/jira/browse/HIVE-26102

> Project: Hive

> Issue Type: New Feature

>Reporter: Marton Bod

>Assignee: Marton Bod

>Priority: Major

> Labels: pull-request-available

> Time Spent: 17h 10m

> Remaining Estimate: 0h

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-26102) Implement DELETE statements for Iceberg tables

[

https://issues.apache.org/jira/browse/HIVE-26102?focusedWorklogId=755280=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755280

]

ASF GitHub Bot logged work on HIVE-26102:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 15:44

Start Date: 11/Apr/22 15:44

Worklog Time Spent: 10m

Work Description: marton-bod commented on code in PR #3131:

URL: https://github.com/apache/hive/pull/3131#discussion_r847473881

##

iceberg/iceberg-handler/src/main/java/org/apache/iceberg/mr/hive/HiveIcebergOutputCommitter.java:

##

@@ -325,9 +327,40 @@ private void commitTable(FileIO io, ExecutorService

executor, JobContext jobCont

"numReduceTasks/numMapTasks", jobContext.getJobID(), name);

return conf.getNumReduceTasks() > 0 ? conf.getNumReduceTasks() :

conf.getNumMapTasks();

});

-Collection dataFiles = dataFiles(numTasks, executor, location,

jobContext, io, true);

-boolean isOverwrite = conf.getBoolean(InputFormatConfig.IS_OVERWRITE,

false);

+if (HiveIcebergStorageHandler.isDelete(conf, name)) {

+ Collection writeResults = collectResults(numTasks,

executor, location, jobContext, io, true);

+ commitDelete(jobContext, table, startTime, writeResults);

+} else if (HiveIcebergStorageHandler.isWrite(conf, name)) {

+ Collection writeResults = collectResults(numTasks,

executor, location, jobContext, io, true);

+ boolean isOverwrite = conf.getBoolean(InputFormatConfig.IS_OVERWRITE,

false);

+ commitInsert(jobContext, table, startTime, writeResults, isOverwrite);

+} else {

+ LOG.info("Unable to determine commit operation type for table: {},

jobID: {}. Will not create a commit.",

+ table, jobContext.getJobID());

+}

+ }

+

+ private void commitDelete(JobContext jobContext, Table table, long

startTime, Collection results) {

Review Comment:

That should allow you to do something like:

```

// update

Transaction transaction = table.newTransaction();

commitDelete(table, Optional.of(transaction), startTime, deleteWriteResults);

commitInsert(table, Optional.of(transaction), startTime, insertWriteResults,

isOverwrite);

transaction.commitTransaction();

```

Issue Time Tracking

---

Worklog Id: (was: 755280)

Time Spent: 17h (was: 16h 50m)

> Implement DELETE statements for Iceberg tables

> --

>

> Key: HIVE-26102

> URL: https://issues.apache.org/jira/browse/HIVE-26102

> Project: Hive

> Issue Type: New Feature

>Reporter: Marton Bod

>Assignee: Marton Bod

>Priority: Major

> Labels: pull-request-available

> Time Spent: 17h

> Remaining Estimate: 0h

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-26102) Implement DELETE statements for Iceberg tables

[

https://issues.apache.org/jira/browse/HIVE-26102?focusedWorklogId=755279=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755279

]

ASF GitHub Bot logged work on HIVE-26102:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 15:44

Start Date: 11/Apr/22 15:44

Worklog Time Spent: 10m

Work Description: marton-bod commented on code in PR #3131:

URL: https://github.com/apache/hive/pull/3131#discussion_r847473881

##

iceberg/iceberg-handler/src/main/java/org/apache/iceberg/mr/hive/HiveIcebergOutputCommitter.java:

##

@@ -325,9 +327,40 @@ private void commitTable(FileIO io, ExecutorService

executor, JobContext jobCont

"numReduceTasks/numMapTasks", jobContext.getJobID(), name);

return conf.getNumReduceTasks() > 0 ? conf.getNumReduceTasks() :

conf.getNumMapTasks();

});

-Collection dataFiles = dataFiles(numTasks, executor, location,

jobContext, io, true);

-boolean isOverwrite = conf.getBoolean(InputFormatConfig.IS_OVERWRITE,

false);

+if (HiveIcebergStorageHandler.isDelete(conf, name)) {

+ Collection writeResults = collectResults(numTasks,

executor, location, jobContext, io, true);

+ commitDelete(jobContext, table, startTime, writeResults);

+} else if (HiveIcebergStorageHandler.isWrite(conf, name)) {

+ Collection writeResults = collectResults(numTasks,

executor, location, jobContext, io, true);

+ boolean isOverwrite = conf.getBoolean(InputFormatConfig.IS_OVERWRITE,

false);

+ commitInsert(jobContext, table, startTime, writeResults, isOverwrite);

+} else {

+ LOG.info("Unable to determine commit operation type for table: {},

jobID: {}. Will not create a commit.",

+ table, jobContext.getJobID());

+}

+ }

+

+ private void commitDelete(JobContext jobContext, Table table, long

startTime, Collection results) {

Review Comment:

That should allow you to do something like:

```

// update

Transaction transaction = table.newTransaction();

commitDelete(table, Optional.of(transaction), startTime, deleteWriteResults);

commitInsert(table, Optional.of(transaction), startTime, insertWriteResults);

transaction.commitTransaction();

```

Issue Time Tracking

---

Worklog Id: (was: 755279)

Time Spent: 16h 50m (was: 16h 40m)

> Implement DELETE statements for Iceberg tables

> --

>

> Key: HIVE-26102

> URL: https://issues.apache.org/jira/browse/HIVE-26102

> Project: Hive

> Issue Type: New Feature

>Reporter: Marton Bod

>Assignee: Marton Bod

>Priority: Major

> Labels: pull-request-available

> Time Spent: 16h 50m

> Remaining Estimate: 0h

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HIVE-26129) Non blocking DROP CONNECTOR

[ https://issues.apache.org/jira/browse/HIVE-26129?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HIVE-26129: -- Labels: pull-request-available (was: ) > Non blocking DROP CONNECTOR > --- > > Key: HIVE-26129 > URL: https://issues.apache.org/jira/browse/HIVE-26129 > Project: Hive > Issue Type: Task >Reporter: Denys Kuzmenko >Priority: Major > Labels: pull-request-available > Time Spent: 10m > Remaining Estimate: 0h > > Use a less restrictive lock for data connectors, they do not have any > dependencies on other tables. -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Work logged] (HIVE-26129) Non blocking DROP CONNECTOR

[ https://issues.apache.org/jira/browse/HIVE-26129?focusedWorklogId=755238=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755238 ] ASF GitHub Bot logged work on HIVE-26129: - Author: ASF GitHub Bot Created on: 11/Apr/22 13:59 Start Date: 11/Apr/22 13:59 Worklog Time Spent: 10m Work Description: nrg4878 commented on code in PR #3173: URL: https://github.com/apache/hive/pull/3173#discussion_r847360100 ## ql/src/java/org/apache/hadoop/hive/ql/ddl/dataconnector/drop/DropDataConnectorAnalyzer.java: ## @@ -18,13 +18,15 @@ package org.apache.hadoop.hive.ql.ddl.dataconnector.drop; +import org.apache.hadoop.hive.conf.HiveConf; import org.apache.hadoop.hive.metastore.api.DataConnector; import org.apache.hadoop.hive.ql.QueryState; import org.apache.hadoop.hive.ql.exec.TaskFactory; import org.apache.hadoop.hive.ql.ddl.DDLSemanticAnalyzerFactory.DDLType; import org.apache.hadoop.hive.ql.ddl.DDLWork; import org.apache.hadoop.hive.ql.hooks.ReadEntity; import org.apache.hadoop.hive.ql.hooks.WriteEntity; +import org.apache.hadoop.hive.ql.io.AcidUtils; Review Comment: nit: Appears to be unnecessary import ## ql/src/java/org/apache/hadoop/hive/ql/ddl/dataconnector/drop/DropDataConnectorAnalyzer.java: ## @@ -18,13 +18,15 @@ package org.apache.hadoop.hive.ql.ddl.dataconnector.drop; +import org.apache.hadoop.hive.conf.HiveConf; Review Comment: nit: Appears to be unnecessary import. Issue Time Tracking --- Worklog Id: (was: 755238) Remaining Estimate: 0h Time Spent: 10m > Non blocking DROP CONNECTOR > --- > > Key: HIVE-26129 > URL: https://issues.apache.org/jira/browse/HIVE-26129 > Project: Hive > Issue Type: Task >Reporter: Denys Kuzmenko >Priority: Major > Time Spent: 10m > Remaining Estimate: 0h > > Use a less restrictive lock for data connectors, they do not have any > dependencies on other tables. -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Work logged] (HIVE-25941) Long compilation time of complex query due to analysis for materialized view rewrite

[

https://issues.apache.org/jira/browse/HIVE-25941?focusedWorklogId=755236=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755236

]

ASF GitHub Bot logged work on HIVE-25941:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 13:58

Start Date: 11/Apr/22 13:58

Worklog Time Spent: 10m

Work Description: kasakrisz commented on code in PR #3014:

URL: https://github.com/apache/hive/pull/3014#discussion_r847360214

##

ql/src/java/org/apache/hadoop/hive/ql/optimizer/calcite/HiveMaterializedViewASTSubQueryRewriteShuttle.java:

##

@@ -0,0 +1,189 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.hadoop.hive.ql.optimizer.calcite;

+

+import org.apache.calcite.plan.RelOptCluster;

+import org.apache.calcite.rel.RelNode;

+import org.apache.calcite.rex.RexNode;

+import org.apache.calcite.tools.RelBuilder;

+import org.apache.hadoop.hive.common.TableName;

+import org.apache.hadoop.hive.ql.lockmgr.HiveTxnManager;

+import org.apache.hadoop.hive.ql.metadata.Hive;

+import org.apache.hadoop.hive.ql.metadata.HiveException;

+import org.apache.hadoop.hive.ql.metadata.HiveRelOptMaterialization;

+import org.apache.hadoop.hive.ql.metadata.Table;

+import org.apache.hadoop.hive.ql.optimizer.calcite.reloperators.HiveFilter;

+import org.apache.hadoop.hive.ql.optimizer.calcite.reloperators.HiveProject;

+import

org.apache.hadoop.hive.ql.optimizer.calcite.rules.views.HiveMaterializedViewUtils;

+import org.apache.hadoop.hive.ql.parse.ASTNode;

+import org.apache.hadoop.hive.ql.parse.CalcitePlanner;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.EnumSet;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.Stack;

+import java.util.function.Predicate;

+

+import static java.util.Collections.singletonList;

+import static java.util.Collections.unmodifiableMap;

+import static java.util.Collections.unmodifiableSet;

+import static

org.apache.hadoop.hive.ql.metadata.HiveRelOptMaterialization.RewriteAlgorithm.NON_CALCITE;

+import static

org.apache.hadoop.hive.ql.optimizer.calcite.rules.views.HiveMaterializedViewUtils.extractTable;

+

+/**

+ * Traverse the plan and tries to rewrite subtrees of the plan to materialized

view scans.

+ *

+ * The rewrite depends on whether the subtree's corresponding AST match with

any materialized view

+ * definitions AST.

+ */

+public class HiveMaterializedViewASTSubQueryRewriteShuttle extends

HiveRelShuttleImpl {

+

+ private static final Logger LOG =

LoggerFactory.getLogger(HiveMaterializedViewASTSubQueryRewriteShuttle.class);

+

+ private final Map subQueryMap;

+ private final ASTNode originalAST;

+ private final ASTNode expandedAST;

+ private final RelBuilder relBuilder;

+ private final Hive db;

+ private final Set tablesUsedByOriginalPlan;

+ private final HiveTxnManager txnManager;

+

+ public HiveMaterializedViewASTSubQueryRewriteShuttle(

+ Map subQueryMap,

+ ASTNode originalAST,

+ ASTNode expandedAST,

+ RelBuilder relBuilder,

+ Hive db,

+ Set tablesUsedByOriginalPlan,

+ HiveTxnManager txnManager) {

+this.subQueryMap = unmodifiableMap(subQueryMap);

+this.originalAST = originalAST;

+this.expandedAST = expandedAST;

+this.relBuilder = relBuilder;

+this.db = db;

+this.tablesUsedByOriginalPlan = unmodifiableSet(tablesUsedByOriginalPlan);

+this.txnManager = txnManager;

+ }

+

+ public RelNode rewrite(RelNode relNode) {

+return relNode.accept(this);

+ }

+

+ @Override

+ public RelNode visit(HiveProject project) {

+if (!subQueryMap.containsKey(project)) {

+ // No AST is found for this subtree

+ return super.visit(project);

+}

+

+// The AST associated to the RelNode is part of the original AST, but we

need the expanded one

+// 1. Collect the path elements of this node in the original AST

+Stack path = new Stack<>();

+ASTNode curr = subQueryMap.get(project);

+while (curr != null && curr != originalAST) {

+ path.push(curr.getType());

+ curr = (ASTNode)

[jira] [Work logged] (HIVE-25941) Long compilation time of complex query due to analysis for materialized view rewrite

[

https://issues.apache.org/jira/browse/HIVE-25941?focusedWorklogId=755235=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755235

]

ASF GitHub Bot logged work on HIVE-25941:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 13:58

Start Date: 11/Apr/22 13:58

Worklog Time Spent: 10m

Work Description: kasakrisz commented on code in PR #3014:

URL: https://github.com/apache/hive/pull/3014#discussion_r847359651

##

ql/src/java/org/apache/hadoop/hive/ql/optimizer/calcite/HiveMaterializedViewASTSubQueryRewriteShuttle.java:

##

@@ -0,0 +1,189 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.hadoop.hive.ql.optimizer.calcite;

+

+import org.apache.calcite.plan.RelOptCluster;

+import org.apache.calcite.rel.RelNode;

+import org.apache.calcite.rex.RexNode;

+import org.apache.calcite.tools.RelBuilder;

+import org.apache.hadoop.hive.common.TableName;

+import org.apache.hadoop.hive.ql.lockmgr.HiveTxnManager;

+import org.apache.hadoop.hive.ql.metadata.Hive;

+import org.apache.hadoop.hive.ql.metadata.HiveException;

+import org.apache.hadoop.hive.ql.metadata.HiveRelOptMaterialization;

+import org.apache.hadoop.hive.ql.metadata.Table;

+import org.apache.hadoop.hive.ql.optimizer.calcite.reloperators.HiveFilter;

+import org.apache.hadoop.hive.ql.optimizer.calcite.reloperators.HiveProject;

+import

org.apache.hadoop.hive.ql.optimizer.calcite.rules.views.HiveMaterializedViewUtils;

+import org.apache.hadoop.hive.ql.parse.ASTNode;

+import org.apache.hadoop.hive.ql.parse.CalcitePlanner;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.EnumSet;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.Stack;

+import java.util.function.Predicate;

+

+import static java.util.Collections.singletonList;

+import static java.util.Collections.unmodifiableMap;

+import static java.util.Collections.unmodifiableSet;

+import static

org.apache.hadoop.hive.ql.metadata.HiveRelOptMaterialization.RewriteAlgorithm.NON_CALCITE;

+import static

org.apache.hadoop.hive.ql.optimizer.calcite.rules.views.HiveMaterializedViewUtils.extractTable;

+

+/**

+ * Traverse the plan and tries to rewrite subtrees of the plan to materialized

view scans.

+ *

+ * The rewrite depends on whether the subtree's corresponding AST match with

any materialized view

+ * definitions AST.

+ */

+public class HiveMaterializedViewASTSubQueryRewriteShuttle extends

HiveRelShuttleImpl {

+

+ private static final Logger LOG =

LoggerFactory.getLogger(HiveMaterializedViewASTSubQueryRewriteShuttle.class);

+

+ private final Map subQueryMap;

+ private final ASTNode originalAST;

+ private final ASTNode expandedAST;

+ private final RelBuilder relBuilder;

+ private final Hive db;

+ private final Set tablesUsedByOriginalPlan;

+ private final HiveTxnManager txnManager;

+

+ public HiveMaterializedViewASTSubQueryRewriteShuttle(

+ Map subQueryMap,

+ ASTNode originalAST,

+ ASTNode expandedAST,

+ RelBuilder relBuilder,

+ Hive db,

+ Set tablesUsedByOriginalPlan,

+ HiveTxnManager txnManager) {

+this.subQueryMap = unmodifiableMap(subQueryMap);

+this.originalAST = originalAST;

+this.expandedAST = expandedAST;

+this.relBuilder = relBuilder;

+this.db = db;

+this.tablesUsedByOriginalPlan = unmodifiableSet(tablesUsedByOriginalPlan);

+this.txnManager = txnManager;

+ }

+

+ public RelNode rewrite(RelNode relNode) {

+return relNode.accept(this);

+ }

+

+ @Override

+ public RelNode visit(HiveProject project) {

+if (!subQueryMap.containsKey(project)) {

Review Comment:

Added check

Issue Time Tracking

---

Worklog Id: (was: 755235)

Time Spent: 1.5h (was: 1h 20m)

> Long compilation time of complex query due to analysis for materialized view

> rewrite

>

>

> Key: HIVE-25941

> URL: https://issues.apache.org/jira/browse/HIVE-25941

> Project:

[jira] [Work logged] (HIVE-26102) Implement DELETE statements for Iceberg tables

[ https://issues.apache.org/jira/browse/HIVE-26102?focusedWorklogId=755231=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755231 ] ASF GitHub Bot logged work on HIVE-26102: - Author: ASF GitHub Bot Created on: 11/Apr/22 13:46 Start Date: 11/Apr/22 13:46 Worklog Time Spent: 10m Work Description: marton-bod commented on PR #3131: URL: https://github.com/apache/hive/pull/3131#issuecomment-1095073865 @pvary I've refactored the `UpdateDeleteSemanticAnalyzer` to obtain the selectColumns and sortColumns during query rewriting from the `HiveStorageHandler` (see HiveStorageHandler#acidSelectColumns and HiveStorageHandler#acidSortColumns in [509c58b](https://github.com/apache/hive/pull/3131/commits/509c58b94693394e031b8780d3e6805286c85262)) Issue Time Tracking --- Worklog Id: (was: 755231) Time Spent: 16h 40m (was: 16.5h) > Implement DELETE statements for Iceberg tables > -- > > Key: HIVE-26102 > URL: https://issues.apache.org/jira/browse/HIVE-26102 > Project: Hive > Issue Type: New Feature >Reporter: Marton Bod >Assignee: Marton Bod >Priority: Major > Labels: pull-request-available > Time Spent: 16h 40m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Work logged] (HIVE-26102) Implement DELETE statements for Iceberg tables

[

https://issues.apache.org/jira/browse/HIVE-26102?focusedWorklogId=755230=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755230

]

ASF GitHub Bot logged work on HIVE-26102:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 13:43

Start Date: 11/Apr/22 13:43

Worklog Time Spent: 10m

Work Description: marton-bod commented on code in PR #3131:

URL: https://github.com/apache/hive/pull/3131#discussion_r847343603

##

iceberg/iceberg-handler/src/main/java/org/apache/iceberg/mr/hive/HiveIcebergOutputCommitter.java:

##

@@ -325,9 +327,40 @@ private void commitTable(FileIO io, ExecutorService

executor, JobContext jobCont

"numReduceTasks/numMapTasks", jobContext.getJobID(), name);

return conf.getNumReduceTasks() > 0 ? conf.getNumReduceTasks() :

conf.getNumMapTasks();

});

-Collection dataFiles = dataFiles(numTasks, executor, location,

jobContext, io, true);

-boolean isOverwrite = conf.getBoolean(InputFormatConfig.IS_OVERWRITE,

false);

+if (HiveIcebergStorageHandler.isDelete(conf, name)) {

+ Collection writeResults = collectResults(numTasks,

executor, location, jobContext, io, true);

+ commitDelete(jobContext, table, startTime, writeResults);

+} else if (HiveIcebergStorageHandler.isWrite(conf, name)) {

+ Collection writeResults = collectResults(numTasks,

executor, location, jobContext, io, true);

+ boolean isOverwrite = conf.getBoolean(InputFormatConfig.IS_OVERWRITE,

false);

+ commitInsert(jobContext, table, startTime, writeResults, isOverwrite);

+} else {

+ LOG.info("Unable to determine commit operation type for table: {},

jobID: {}. Will not create a commit.",

+ table, jobContext.getJobID());

+}

+ }

+

+ private void commitDelete(JobContext jobContext, Table table, long

startTime, Collection results) {

Review Comment:

Thanks for checking! I've refactored the `commitDelete` and `commitInsert`

to use an optional Transaction object, which can be passed in case of an update

or merge query.

Issue Time Tracking

---

Worklog Id: (was: 755230)

Time Spent: 16.5h (was: 16h 20m)

> Implement DELETE statements for Iceberg tables

> --

>

> Key: HIVE-26102

> URL: https://issues.apache.org/jira/browse/HIVE-26102

> Project: Hive

> Issue Type: New Feature

>Reporter: Marton Bod

>Assignee: Marton Bod

>Priority: Major

> Labels: pull-request-available

> Time Spent: 16.5h

> Remaining Estimate: 0h

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-26102) Implement DELETE statements for Iceberg tables

[ https://issues.apache.org/jira/browse/HIVE-26102?focusedWorklogId=755228=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755228 ] ASF GitHub Bot logged work on HIVE-26102: - Author: ASF GitHub Bot Created on: 11/Apr/22 13:39 Start Date: 11/Apr/22 13:39 Worklog Time Spent: 10m Work Description: marton-bod commented on code in PR #3131: URL: https://github.com/apache/hive/pull/3131#discussion_r847339367 ## ql/src/java/org/apache/hadoop/hive/ql/exec/MapOperator.java: ## @@ -673,7 +674,31 @@ private String toErrorMessage(Writable value, Object row, ObjectInspector inspec ctx.getIoCxt().setRecordIdentifier(null);//so we don't accidentally cache the value; shouldn't //happen since IO layer either knows how to produce ROW__ID or not - but to be safe } - break; + break; +case PARTITION_SPEC_ID: Review Comment: Unfortunately we don't have the Table object anywhere around this area as far as I can tell, so I'm not sure how we could inject the logic using the storage handler. Besides, this method is called `populateVirtualColumns` where all the other virtual cols are filled out too, so right now I don't see a better place to put it Issue Time Tracking --- Worklog Id: (was: 755228) Time Spent: 16h 20m (was: 16h 10m) > Implement DELETE statements for Iceberg tables > -- > > Key: HIVE-26102 > URL: https://issues.apache.org/jira/browse/HIVE-26102 > Project: Hive > Issue Type: New Feature >Reporter: Marton Bod >Assignee: Marton Bod >Priority: Major > Labels: pull-request-available > Time Spent: 16h 20m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Work logged] (HIVE-26130) Incorrect matching of external table when validating NOT NULL constraints

[

https://issues.apache.org/jira/browse/HIVE-26130?focusedWorklogId=755225=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755225

]

ASF GitHub Bot logged work on HIVE-26130:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 13:37

Start Date: 11/Apr/22 13:37

Worklog Time Spent: 10m

Work Description: zhangbutao commented on PR #3199:

URL: https://github.com/apache/hive/pull/3199#issuecomment-1095063659

Failed tests unrelated

Issue Time Tracking

---

Worklog Id: (was: 755225)

Time Spent: 20m (was: 10m)

> Incorrect matching of external table when validating NOT NULL constraints

> -

>

> Key: HIVE-26130

> URL: https://issues.apache.org/jira/browse/HIVE-26130

> Project: Hive

> Issue Type: Bug

>Affects Versions: 4.0.0-alpha-1, 4.0.0-alpha-2

>Reporter: zhangbutao

>Assignee: zhangbutao

>Priority: Major

> Labels: pull-request-available

> Fix For: 4.0.0-alpha-2

>

> Time Spent: 20m

> Remaining Estimate: 0h

>

> _AbstractAlterTablePropertiesAnalyzer.validate_ uses incorrect external table

> judgment statement:

> {code:java}

> else if (entry.getKey().equals("external") && entry.getValue().equals("true")

> {code}

> In current hive code, we use hive tblproperties('EXTERNAL'='true' or

> 'EXTERNAL'='TRUE) to validate external table.

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-25941) Long compilation time of complex query due to analysis for materialized view rewrite

[

https://issues.apache.org/jira/browse/HIVE-25941?focusedWorklogId=755214=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755214

]

ASF GitHub Bot logged work on HIVE-25941:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 13:06

Start Date: 11/Apr/22 13:06

Worklog Time Spent: 10m

Work Description: kasakrisz commented on code in PR #3014:

URL: https://github.com/apache/hive/pull/3014#discussion_r847305506

##

ql/src/java/org/apache/hadoop/hive/ql/metadata/MaterializedViewsCache.java:

##

@@ -205,4 +212,52 @@ HiveRelOptMaterialization get(String dbName, String

viewName) {

public boolean isEmpty() {

return materializedViews.isEmpty();

}

+

+

+ private static class ASTKey {

+private final ASTNode root;

+

+public ASTKey(ASTNode root) {

+ this.root = root;

+}

+

+@Override

+public boolean equals(Object o) {

+ if (this == o) return true;

+ if (o == null || getClass() != o.getClass()) return false;

+ ASTKey that = (ASTKey) o;

+ return equals(root, that.root);

+}

+

+private boolean equals(ASTNode astNode1, ASTNode astNode2) {

+ if (!(astNode1.getType() == astNode2.getType() &&

+ astNode1.getText().equals(astNode2.getText()) &&

+ astNode1.getChildCount() == astNode2.getChildCount())) {

+return false;

+ }

+

+ for (int i = 0; i < astNode1.getChildCount(); ++i) {

+if (!equals((ASTNode) astNode1.getChild(i), (ASTNode)

astNode2.getChild(i))) {

+ return false;

+}

+ }

+

+ return true;

+}

+

+@Override

+public int hashCode() {

+ return hashcode(root);

Review Comment:

* Hashcode of the ASTs stored in the `MaterializedViewCache` calculated only

once: when the MVs are loaded when hs2 starts or a new MV is created because

Java hashmap implementation caches the key's hashcode.

* When we look-up a Materialization the hashcode of the key is calculated

every time the get method is called. This is called only once for the entire

tree per query.

* To find sub-query rewrites the look-up is done by sub AST-s and the

hashcode is also calculated for the subTrees but when I did some performance

tests locally I didn't found this as a bottleneck.

This solution is still much faster then generating the expanded query text

of every possible sub-query using `UnparseTranslator` and `TokenRewriteStream`.

Issue Time Tracking

---

Worklog Id: (was: 755214)

Time Spent: 1h 20m (was: 1h 10m)

> Long compilation time of complex query due to analysis for materialized view

> rewrite

>

>

> Key: HIVE-25941

> URL: https://issues.apache.org/jira/browse/HIVE-25941

> Project: Hive

> Issue Type: Bug

> Components: Materialized views

>Reporter: Krisztian Kasa

>Assignee: Krisztian Kasa

>Priority: Major

> Labels: pull-request-available

> Attachments: sample.png

>

> Time Spent: 1h 20m

> Remaining Estimate: 0h

>

> When compiling query the optimizer tries to rewrite the query plan or

> subtrees of the plan to use materialized view scans.

> If

> {code}

> set hive.materializedview.rewriting.sql.subquery=false;

> {code}

> the compilation succeed in less then 10 sec otherwise it takes several

> minutes (~ 5min) depending on the hardware.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Work logged] (HIVE-26093) Deduplicate org.apache.hadoop.hive.metastore.annotation package-info.java

[

https://issues.apache.org/jira/browse/HIVE-26093?focusedWorklogId=755195=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755195

]

ASF GitHub Bot logged work on HIVE-26093:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 12:07

Start Date: 11/Apr/22 12:07

Worklog Time Spent: 10m

Work Description: pvary commented on code in PR #3168:

URL: https://github.com/apache/hive/pull/3168#discussion_r847253069

##

standalone-metastore/pom.xml:

##

@@ -531,6 +531,29 @@

+

+ javadoc

+

+

+

+org.apache.maven.plugins

+maven-javadoc-plugin

Review Comment:

Since the javadoc generation is a big mess ATM, I would suggest to keep it

as it is, and if the tests are failing then we can decide what we want to do

with them.

See also: #3185

Issue Time Tracking

---

Worklog Id: (was: 755195)

Time Spent: 2h 10m (was: 2h)

> Deduplicate org.apache.hadoop.hive.metastore.annotation package-info.java

> -

>

> Key: HIVE-26093

> URL: https://issues.apache.org/jira/browse/HIVE-26093

> Project: Hive

> Issue Type: Task

>Reporter: Peter Vary

>Assignee: Peter Vary

>Priority: Major

> Labels: pull-request-available

> Fix For: 4.0.0

>

> Time Spent: 2h 10m

> Remaining Estimate: 0h

>

> Currently we define

> org.apache.hadoop.hive.metastore.annotation.MetastoreVersionAnnotation in 2

> places:

> -

> ./standalone-metastore/metastore-common/src/gen/version/org/apache/hadoop/hive/metastore/annotation/package-info.java

> -

> ./standalone-metastore/metastore-server/src/gen/version/org/apache/hadoop/hive/metastore/annotation/package-info.java

> This causes javadoc generation to fail with:

> {code}

> [ERROR] Failed to execute goal

> org.apache.maven.plugins:maven-javadoc-plugin:3.0.1:aggregate (default-cli)

> on project hive: An error has occurred in Javadoc report generation:

> [ERROR] Exit code: 1 -

> /Users/pvary/dev/upstream/hive/standalone-metastore/metastore-server/src/gen/version/org/apache/hadoop/hive/metastore/annotation/package-info.java:8:

> warning: a package-info.java file has already been seen for package

> org.apache.hadoop.hive.metastore.annotation

> [ERROR] package org.apache.hadoop.hive.metastore.annotation;

> [ERROR] ^

> [ERROR] javadoc: warning - Multiple sources of package comments found for

> package "org.apache.hive.streaming"

> [ERROR]

> /Users/pvary/dev/upstream/hive/ql/src/java/org/apache/hadoop/hive/ql/exec/SerializationUtilities.java:556:

> error: type MapSerializer does not take parameters

> [ERROR] com.esotericsoftware.kryo.serializers.MapSerializer {

> [ERROR] ^

> [ERROR]

> /Users/pvary/dev/upstream/hive/standalone-metastore/metastore-server/src/gen/version/org/apache/hadoop/hive/metastore/annotation/package-info.java:4:

> error: package org.apache.hadoop.hive.metastore.annotation has already been

> annotated

> [ERROR] @MetastoreVersionAnnotation(version="4.0.0-alpha-1",

> shortVersion="4.0.0-alpha-1",

> [ERROR] ^

> [ERROR] java.lang.AssertionError

> [ERROR] at com.sun.tools.javac.util.Assert.error(Assert.java:126)

> [ERROR] at com.sun.tools.javac.util.Assert.check(Assert.java:45)

> [ERROR] at

> com.sun.tools.javac.code.SymbolMetadata.setDeclarationAttributesWithCompletion(SymbolMetadata.java:177)

> [ERROR] at

> com.sun.tools.javac.code.Symbol.setDeclarationAttributesWithCompletion(Symbol.java:215)

> [ERROR] at

> com.sun.tools.javac.comp.MemberEnter.actualEnterAnnotations(MemberEnter.java:952)

> [ERROR] at

> com.sun.tools.javac.comp.MemberEnter.access$600(MemberEnter.java:64)

> [ERROR] at

> com.sun.tools.javac.comp.MemberEnter$5.run(MemberEnter.java:876)

> [ERROR] at com.sun.tools.javac.comp.Annotate.flush(Annotate.java:143)

> [ERROR] at

> com.sun.tools.javac.comp.Annotate.enterDone(Annotate.java:129)

> [ERROR] at com.sun.tools.javac.comp.Enter.complete(Enter.java:512)

> [ERROR] at com.sun.tools.javac.comp.Enter.main(Enter.java:471)

> [ERROR] at com.sun.tools.javadoc.JavadocEnter.main(JavadocEnter.java:78)

> [ERROR] at

> com.sun.tools.javadoc.JavadocTool.getRootDocImpl(JavadocTool.java:186)

> [ERROR] at com.sun.tools.javadoc.Start.parseAndExecute(Start.java:346)