[jira] [Commented] (NIFI-7856) Provenance failed to be compressed after nifi upgrade to 1.12

[

https://issues.apache.org/jira/browse/NIFI-7856?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219396#comment-17219396

]

Mengze Li commented on NIFI-7856:

-

Hi Mark,

I see your PR hasn't been merged yet; however, the status of this ticket is

"PATCH AVAILABLE".

Does that mean there will be a patch version including the fix?

> Provenance failed to be compressed after nifi upgrade to 1.12

> -

>

> Key: NIFI-7856

> URL: https://issues.apache.org/jira/browse/NIFI-7856

> Project: Apache NiFi

> Issue Type: Bug

>Affects Versions: 1.12.0

>Reporter: Mengze Li

>Assignee: Mark Payne

>Priority: Major

> Fix For: 1.13.0

>

> Attachments: 1683472.prov, NIFI-7856.xml, ls.png, screenshot-1.png,

> screenshot-2.png, screenshot-3.png

>

> Time Spent: 20m

> Remaining Estimate: 0h

>

> We upgraded our nifi cluster from 1.11.3 to 1.12.0.

> The nodes come up and everything looks to be functional. I can see 1.12.0 is

> running.

> Later on, we discovered that the data provenance is missing. From checking

> our logs, we see tons of errors compressing the logs.

> {code}

> 2020-09-28 03:38:35,205 ERROR [Compress Provenance Logs-1-thread-1]

> o.a.n.p.s.EventFileCompressor Failed to compress

> ./provenance_repository/2752821.prov on rollover

> {code}

> This didn't happen in 1.11.3.

> Is this a known issue? We are considering reverting back if there is no

> solution for this since we can't go prod with no/broken data provenance.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [nifi] adamfisher commented on pull request #3317: NIFI-6047 Add DetectDuplicateRecord Processor

adamfisher commented on pull request #3317:

URL: https://github.com/apache/nifi/pull/3317#issuecomment-714820284

Last I remember, I got the tests running, but it was really hard to get the whole framework to build. It's super close. Please let me know if you need anything. I did add commit permissions to this branch if you want to work on this one. If I recall correctly, it's just some test tweaking and then it's ready for merge.

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [nifi] MikeThomsen commented on pull request #3317: NIFI-6047 Add DetectDuplicateRecord Processor

MikeThomsen commented on pull request #3317:

URL: https://github.com/apache/nifi/pull/3317#issuecomment-714777665

@mattyb149 @joewitt Been a while since I looked at this, but I think I was mostly +1 on this. We have a strong need for this, so I'll take over the PR and close the gaps.

[GitHub] [nifi] pkelly-nifi commented on a change in pull request #4576: NIFI-7886: FetchAzureBlobStorage, FetchS3Object, and FetchGCSObject processors should be able to fetch ranges

pkelly-nifi commented on a change in pull request #4576:

URL: https://github.com/apache/nifi/pull/4576#discussion_r510465823

##

File path:

nifi-nar-bundles/nifi-aws-bundle/nifi-aws-processors/src/main/java/org/apache/nifi/processors/aws/s3/FetchS3Object.java

##

@@ -147,6 +171,11 @@ public void onTrigger(final ProcessContext context, final

ProcessSession session

request = new GetObjectRequest(bucket, key, versionId);

}

request.setRequesterPays(requesterPays);

+if(rangeLength != null) {

Review comment:

Thanks for your feedback. I just tested it again against real AWS S3

and you are absolutely right. This is apparently a bug in a third party

product we've been using. I will update with the -1 tomorrow.

As for the validation errors you mentioned, this is using DataSize

validation rather than pure long values, which allows shortcuts such as Range

start: 0B, Range Length: 1GB, followed by Range start: 1GB, Range length: 1GB.

So to use plain bytes, it requires adding a 'B' to the end. It seemed slightly

cleaner than requiring someone to calculate out to the exact byte. Do you have

a strong opinion against this syntax? Or a suggestion for making it clearer in

the documentation? I'd be happy to update it.
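
To make the DataSize syntax discussed above concrete, here is a minimal sketch of how values such as `0B` and `1GB` could map to byte counts. `DataSizeSketch` and `toBytes` are hypothetical names for illustration and are not NiFi's own validator or `DataUnit` code; the sketch also assumes binary multiples (1 KB = 1024 B).

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration only; not part of NiFi.
final class DataSizeSketch {
    // A non-negative integer, optional whitespace, then a unit.
    private static final Pattern FORMAT =
            Pattern.compile("(\\d+)\\s*(B|KB|MB|GB|TB)", Pattern.CASE_INSENSITIVE);

    // Converts strings such as "0B" or "1GB" to a byte count.
    // Assumption: binary multiples, so 1 KB is 1024 bytes.
    static long toBytes(String value) {
        Matcher m = FORMAT.matcher(value.trim());
        if (!m.matches()) {
            // A bare number such as "1" ends up here, which mirrors the
            // validation warning reported in this thread.
            throw new IllegalArgumentException("Expected <size><unit>, got: " + value);
        }
        long size = Long.parseLong(m.group(1));
        switch (m.group(2).toUpperCase(Locale.ROOT)) {
            case "B":  return size;
            case "KB": return size << 10;
            case "MB": return size << 20;
            case "GB": return size << 30;
            case "TB": return size << 40;
            default:   throw new IllegalStateException("Unreachable unit");
        }
    }

    public static void main(String[] args) {
        // Two consecutive 1 GB ranges, as in the example above.
        System.out.println("first range starts at  " + toBytes("0B"));
        System.out.println("second range starts at " + toBytes("1GB"));
    }
}
```

With this reading, "Range Start: 1GB, Range Length: 1GB" begins at byte 1073741824, which is the calculation the shortcut syntax saves the user from doing by hand.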

[GitHub] [nifi] mtien-apache commented on a change in pull request #4593: NIFI-7584 Added OIDC logout mechanism.

mtien-apache commented on a change in pull request #4593:

URL: https://github.com/apache/nifi/pull/4593#discussion_r510450932

##

File path:

nifi-nar-bundles/nifi-framework-bundle/nifi-framework/nifi-web/nifi-web-api/src/main/java/org/apache/nifi/web/api/AccessResource.java

##

@@ -329,24 +359,221 @@ public Response oidcExchange(@Context HttpServletRequest

httpServletRequest, @Co

)

public void oidcLogout(@Context HttpServletRequest httpServletRequest,

@Context HttpServletResponse httpServletResponse) throws Exception {

if (!httpServletRequest.isSecure()) {

-throw new IllegalStateException("User authentication/authorization

is only supported when running over HTTPS.");

+throw new IllegalStateException(AUTHENTICATION_NOT_ENABLED_MSG);

}

if (!oidcService.isOidcEnabled()) {

-throw new IllegalStateException("OpenId Connect is not

configured.");

+throw new

IllegalStateException(OPEN_ID_CONNECT_SUPPORT_IS_NOT_CONFIGURED_MSG);

Review comment:

@thenatog This request is invoked as an XHR and it can't direct the

browser. So, it can't forward an error message either.

[jira] [Updated] (NIFI-7941) Add options and example(s) for NiFi Registry mode to the Encrypt-Config section of the Toolkit Guide

[ https://issues.apache.org/jira/browse/NIFI-7941?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Andrew M. Lim updated NIFI-7941:

    Summary: Add options and example(s) for NiFi Registry mode to the Encrypt-Config section of the Toolkit Guide (was: Add an example for NiFi Registry mode to the Encrypt-Config section of the Toolkit Guide)

> Add options and example(s) for NiFi Registry mode to the Encrypt-Config section of the Toolkit Guide
>
> Key: NIFI-7941
> URL: https://issues.apache.org/jira/browse/NIFI-7941
> Project: Apache NiFi
> Issue Type: Improvement
> Components: Documentation Website
> Reporter: Andrew M. Lim
> Priority: Trivial
>
> The Encrypt-Config tool has a NiFi Registry mode/flag (./encrypt-config.sh --nifiRegistry). Should add the related options to the Toolkit Guide and example usage.

[jira] [Assigned] (NIFI-7941) Add options and example(s) for NiFi Registry mode to the Encrypt-Config section of the Toolkit Guide

[ https://issues.apache.org/jira/browse/NIFI-7941?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Andrew M. Lim reassigned NIFI-7941:

    Assignee: Andrew M. Lim

> Add options and example(s) for NiFi Registry mode to the Encrypt-Config section of the Toolkit Guide
>
> Key: NIFI-7941
> URL: https://issues.apache.org/jira/browse/NIFI-7941
> Project: Apache NiFi
> Issue Type: Improvement
> Components: Documentation Website
> Reporter: Andrew M. Lim
> Assignee: Andrew M. Lim
> Priority: Trivial
>
> The Encrypt-Config tool has a NiFi Registry mode/flag (./encrypt-config.sh --nifiRegistry). Should add the related options to the Toolkit Guide and example usage.

[jira] [Created] (NIFI-7941) Add an example for NiFi Registry mode to the Encrypt-Config section of the Toolkit Guide

Andrew M. Lim created NIFI-7941:

    Summary: Add an example for NiFi Registry mode to the Encrypt-Config section of the Toolkit Guide
    Key: NIFI-7941
    URL: https://issues.apache.org/jira/browse/NIFI-7941
    Project: Apache NiFi
    Issue Type: Improvement
    Components: Documentation Website
    Reporter: Andrew M. Lim

The Encrypt-Config tool has a NiFi Registry mode/flag (./encrypt-config.sh --nifiRegistry). Should add the related options to the Toolkit Guide and example usage.

[jira] [Resolved] (NIFIREG-349) Bootstrap Properties section in Registry Admin Guide missing nifi.registry.bootstrap.sensitive.key

[ https://issues.apache.org/jira/browse/NIFIREG-349?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Andrew M. Lim resolved NIFIREG-349.

    Fix Version/s: 0.9.0
    Resolution: Fixed

> Bootstrap Properties section in Registry Admin Guide missing nifi.registry.bootstrap.sensitive.key
>
> Key: NIFIREG-349
> URL: https://issues.apache.org/jira/browse/NIFIREG-349
> Project: NiFi Registry
> Issue Type: Improvement
> Reporter: Andrew M. Lim
> Assignee: Andrew M. Lim
> Priority: Trivial
> Fix For: 0.9.0
>
> Time Spent: 20m
> Remaining Estimate: 0h
>
> Here is the section where this property should be added:
> https://nifi.apache.org/docs/nifi-registry-docs/html/administration-guide.html#bootstrap_properties

[jira] [Resolved] (NIFIREG-425) Automated UI tests fail due to incorrect URLs

[ https://issues.apache.org/jira/browse/NIFIREG-425?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Andrew M. Lim resolved NIFIREG-425.

    Fix Version/s: 0.9.0
    Resolution: Fixed

> Automated UI tests fail due to incorrect URLs
>
> Key: NIFIREG-425
> URL: https://issues.apache.org/jira/browse/NIFIREG-425
> Project: NiFi Registry
> Issue Type: Bug
> Reporter: Andrew M. Lim
> Assignee: Andrew M. Lim
> Priority: Major
> Fix For: 0.9.0
>
> Time Spent: 20m
> Remaining Estimate: 0h
>
> The automated UI tests were failing because the "#" in the URL is now required.
> For example, references to the Buckets administration page URL should be:
> [http://localhost:18080/nifi-registry/#/administration/workflow|http://localhost:18080/nifi-registry/*#*/administration/workflow]

[GitHub] [nifi-registry] kevdoran commented on pull request #287: NIFIREG-399 Support chown docker in docker

kevdoran commented on pull request #287:

URL: https://github.com/apache/nifi-registry/pull/287#issuecomment-714697537

Closing for now. @nkininge please let me know if you have found a solution for this.

[GitHub] [nifi-registry] kevdoran closed pull request #307: NIFIREG-425 Update automated UI tests with correct URL

kevdoran closed pull request #307: URL: https://github.com/apache/nifi-registry/pull/307

[GitHub] [nifi-registry] kevdoran closed pull request #287: NIFIREG-399 Support chown docker in docker

kevdoran closed pull request #287: URL: https://github.com/apache/nifi-registry/pull/287

[GitHub] [nifi-registry] kevdoran merged pull request #308: NIFIREG-349 Add nifi.registry.bootstrap.sensitive.key to Bootstrap Pr…

kevdoran merged pull request #308: URL: https://github.com/apache/nifi-registry/pull/308

[GitHub] [nifi] lucasmoten commented on a change in pull request #4576: NIFI-7886: FetchAzureBlobStorage, FetchS3Object, and FetchGCSObject processors should be able to fetch ranges

lucasmoten commented on a change in pull request #4576:

URL: https://github.com/apache/nifi/pull/4576#discussion_r510368352

##

File path:

nifi-nar-bundles/nifi-aws-bundle/nifi-aws-processors/src/main/java/org/apache/nifi/processors/aws/s3/FetchS3Object.java

##

@@ -147,6 +171,11 @@ public void onTrigger(final ProcessContext context, final

ProcessSession session

request = new GetObjectRequest(bucket, key, versionId);

}

request.setRequesterPays(requesterPays);

+if(rangeLength != null) {

Review comment:

Maybe change

```

request.setRange(rangeStart, rangeStart + rangeLength);

```

to

```

request.setRange(rangeStart, rangeStart + rangeLength - 1);

```
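
The reason for the `- 1` can be sketched as follows: HTTP byte ranges name the first and last byte, and both ends are inclusive, so a start/length pair has to be converted before it is sent. `RangeHeaderSketch`, `lastByte`, and `headerValue` are hypothetical names used only for this illustration and are not part of the pull request.

```java
// Hypothetical helper for illustration only; not code from the pull request.
final class RangeHeaderSketch {
    // Returns the inclusive index of the last byte covered by start/length.
    static long lastByte(long start, long length) {
        if (start < 0 || length < 1) {
            throw new IllegalArgumentException("start must be >= 0 and length must be >= 1");
        }
        return start + length - 1;
    }

    // Formats the value of an HTTP Range header; both ends are inclusive.
    static String headerValue(long start, long length) {
        return "bytes=" + start + "-" + lastByte(start, length);
    }

    public static void main(String[] args) {
        // One byte starting at offset 0 is the range 0-0, not 0-1.
        System.out.println(headerValue(0, 1));
        // One byte starting at offset 1 is the range 1-1.
        System.out.println(headerValue(1, 1));
    }
}
```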

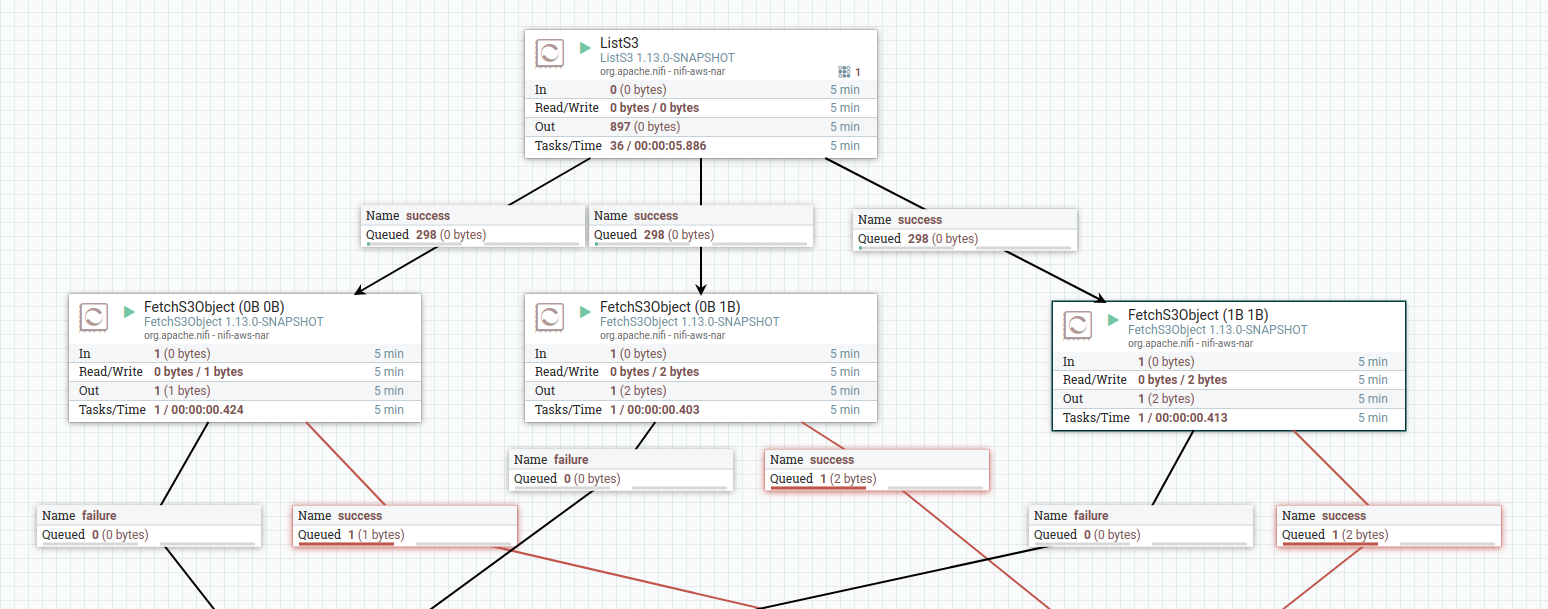

[GitHub] [nifi] lucasmoten commented on a change in pull request #4576: NIFI-7886: FetchAzureBlobStorage, FetchS3Object, and FetchGCSObject processors should be able to fetch ranges

lucasmoten commented on a change in pull request #4576:

URL: https://github.com/apache/nifi/pull/4576#discussion_r510366644

##

File path:

nifi-nar-bundles/nifi-aws-bundle/nifi-aws-processors/src/main/java/org/apache/nifi/processors/aws/s3/FetchS3Object.java

##

@@ -147,6 +171,11 @@ public void onTrigger(final ProcessContext context, final

ProcessSession session

request = new GetObjectRequest(bucket, key, versionId);

}

request.setRequesterPays(requesterPays);

+if(rangeLength != null) {

Review comment:

At the time of writing, I'm testing this with a small file in an S3 bucket

and three different FetchS3Object calls on that same file.

See this image of a flow depicting the three FetchS3Object processors,

which differ only in the range start and range length requested.

The following hex representation shows the source file being

retrieved:

```

0x0000  5B 2E 53 68 65 6C 6C 43 6C 61 73 73 49 6E 66 6F  [.ShellClassInfo
0x0010  5D 0D 0A 43 4C 53 49 44 3D 7B 36 34 35 46 46 30  ]..CLSID={645FF0
0x0020  34 30 2D 35 30 38 31 2D 31 30 31 42 2D 39 46 30  40-5081-101B-9F0
0x0030  38 2D 30 30 41 41 30 30 32 46 39 35 34 45 7D 0D  8-00AA002F954E}.
0x0040  0A 4C 6F 63 61 6C 69 7A 65 64 52 65 73 6F 75 72  .LocalizedResour
0x0050  63 65 4E 61 6D 65 3D 40 25 53 79 73 74 65 6D 52  ceName=@%SystemR
0x0060  6F 6F 74 25 5C 73 79 73 74 65 6D 33 32 5C 73 68  oot%\system32\sh
0x0070  65 6C 6C 33 32 2E 64 6C 6C 2C 2D 38 39 36 34 0D  ell32.dll,-8964.
0x0080  0A                                               .

```

from left to right ...

- start 0, length 0 ... yields 1 byte ... viewing queue in nifi, content in

hex mode has `0x5B 10[.`

- I'm not sure whether this should error (returning nothing) or return the

entire file.

- start 0, length 1 ... yields 2 bytes .. viewing queue in nifi, content in

hex mode has `0x5B 2E[.`

- I was expecting this to return `5B`

- start 1, length 1 ... yields 2 bytes .. viewing queue in nifi, content in

hex mode has `0x2E 53.S`

- I was expecting this to return `2E`
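
The three results above are consistent with the end of the range being computed as start + length and then treated as an inclusive index. The following sketch reproduces both the observed and the expected bytes on the first four bytes of the file; `RangeReadSketch` and its methods are hypothetical names for illustration, and the in-memory array merely stands in for the S3 object.

```java
import java.util.Arrays;

// Hypothetical simulation for illustration only; it does not call S3.
final class RangeReadSketch {
    // First four bytes of the file shown in the hex dump: "[.Sh"
    static final byte[] FILE = {0x5B, 0x2E, 0x53, 0x68};

    // Reads bytes first..last, both inclusive, like an HTTP byte range.
    static byte[] readInclusive(byte[] data, int first, int last) {
        return Arrays.copyOfRange(data, first, last + 1);
    }

    // End computed as start + length, which returns one byte too many.
    static byte[] observed(int start, int length) {
        return readInclusive(FILE, start, start + length);
    }

    // End computed as start + length - 1, which returns exactly length bytes.
    static byte[] expected(int start, int length) {
        return readInclusive(FILE, start, start + length - 1);
    }

    // Renders bytes as space-separated upper-case hex pairs.
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) {
                sb.append(' ');
            }
            sb.append(String.format("%02X", b));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println("start 0, length 1: observed " + hex(observed(0, 1))
                + ", expected " + hex(expected(0, 1)));
        System.out.println("start 1, length 1: observed " + hex(observed(1, 1))
                + ", expected " + hex(expected(1, 1)));
    }
}
```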

[GitHub] [nifi] lucasmoten commented on a change in pull request #4576: NIFI-7886: FetchAzureBlobStorage, FetchS3Object, and FetchGCSObject processors should be able to fetch ranges

lucasmoten commented on a change in pull request #4576:

URL: https://github.com/apache/nifi/pull/4576#discussion_r510351426

##

File path:

nifi-nar-bundles/nifi-aws-bundle/nifi-aws-processors/src/main/java/org/apache/nifi/processors/aws/s3/FetchS3Object.java

##

@@ -147,6 +171,11 @@ public void onTrigger(final ProcessContext context, final

ProcessSession session

request = new GetObjectRequest(bucket, key, versionId);

}

request.setRequesterPays(requesterPays);

+if(rangeLength != null) {

Review comment:

As a follow-up, if I set

- Range Start = 1B

- Range Length = 1B

What currently gets returned is bytes 1 and 2, which is 1 more

byte than requested.

[GitHub] [nifi] lucasmoten commented on a change in pull request #4576: NIFI-7886: FetchAzureBlobStorage, FetchS3Object, and FetchGCSObject processors should be able to fetch ranges

lucasmoten commented on a change in pull request #4576:

URL: https://github.com/apache/nifi/pull/4576#discussion_r510346462

##

File path:

nifi-nar-bundles/nifi-aws-bundle/nifi-aws-processors/src/main/java/org/apache/nifi/processors/aws/s3/FetchS3Object.java

##

@@ -147,6 +171,11 @@ public void onTrigger(final ProcessContext context, final

ProcessSession session

request = new GetObjectRequest(bucket, key, versionId);

}

request.setRequesterPays(requesterPays);

+if(rangeLength != null) {

Review comment:

I'm trying to test from your recent changes on the branch and ran into

an issue when I set these property values for FetchS3Object

- Range Start = 1

- Range Length = 1

The processor can't validate with that setting as it produces this

validation warning:

- 's3-object-range-start' validated against '1' is invalid because Must be

of format <Data Size> <Data Unit> where <Data Size> is a non-negative integer

and <Data Unit> is a supported Data Unit, such as: B, KB, MB, GB, TB

- Same validation error for 's3-object-range-length'.

To clarify my original concern: the processor should require Range Length to

be > 0 if Range Start is set to a value.

Since range requests start at byte 0, it should be valid to have

- Range Start = 0

- Range Length = 1

with a result indicating that this is requesting just the first byte from

the resource.
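
The rule proposed above could be sketched as a small check. `RangeValidationSketch` and `validate` are hypothetical names for illustration and are not the processor's actual validation code; treating an unset Range Length as valid is an assumption of this sketch, since the comment does not say how that case should behave.

```java
import java.util.Optional;

// Hypothetical check for illustration only; not the processor's validator.
final class RangeValidationSketch {
    // Returns an explanation when the combination is invalid, or empty when
    // it is acceptable. A null argument means the property is not set.
    static Optional<String> validate(Long rangeStart, Long rangeLength) {
        if (rangeStart != null && rangeStart < 0) {
            return Optional.of("Range Start must be >= 0");
        }
        if (rangeStart != null && rangeLength != null && rangeLength < 1) {
            return Optional.of("Range Length must be > 0 when Range Start is set");
        }
        // Assumption: an unset Range Length is left for the caller to interpret.
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Start 0 with length 1 asks for just the first byte and is valid.
        System.out.println(validate(0L, 1L).orElse("valid"));
        // Start 0 with length 0 is rejected under the proposed rule.
        System.out.println(validate(0L, 0L).orElse("valid"));
    }
}
```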

[jira] [Resolved] (NIFI-7549) Addign Hazelcast based implementation for DistributedMapCacheClient

[ https://issues.apache.org/jira/browse/NIFI-7549?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Peter Turcsanyi resolved NIFI-7549.

    Fix Version/s: 1.13.0
    Resolution: Fixed

> Addign Hazelcast based implementation for DistributedMapCacheClient
>
> Key: NIFI-7549
> URL: https://issues.apache.org/jira/browse/NIFI-7549
> Project: Apache NiFi
> Issue Type: New Feature
> Components: Extensions
> Reporter: Simon Bence
> Assignee: Simon Bence
> Priority: Major
> Fix For: 1.13.0
>
> Time Spent: 5h 40m
> Remaining Estimate: 0h
>
> Adding Hazelcast support in the same fashion as in case of Redis for example would be useful. Even further: in order to make it easier to use, embedded Hazelcast support should be added, which makes it unnecessary to start Hazelcast cluster manually.

[jira] [Commented] (NIFI-7549) Addign Hazelcast based implementation for DistributedMapCacheClient

[ https://issues.apache.org/jira/browse/NIFI-7549?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219213#comment-17219213 ]

ASF subversion and git services commented on NIFI-7549:

Commit b980a8ea8caf077be8046de3f7b5c1bd337864d8 in nifi's branch refs/heads/main from Bence Simon
[ https://gitbox.apache.org/repos/asf?p=nifi.git;h=b980a8e ]

NIFI-7549 Adding Hazelcast based DistributedMapCacheClient support
NIFI-7549 Refining documentation; Changing explicit HA mode; Smaller review comments
NIFI-7549 Code review responses about license, documentation and dependencies
NIFI-7549 Fixing issue when explicit HA; Some further review based adjustments
NIFI-7549 Response to code review comments
NIFI-7549 Adding extra serialization test
NIFI-7549 Minor changes based on review comments
NIFI-7549 Adding hook point to the shutdown

This closes #4510.

Signed-off-by: Peter Turcsanyi

> Addign Hazelcast based implementation for DistributedMapCacheClient
>
> Key: NIFI-7549
> URL: https://issues.apache.org/jira/browse/NIFI-7549
> Project: Apache NiFi
> Issue Type: New Feature
> Components: Extensions
> Reporter: Simon Bence
> Assignee: Simon Bence
> Priority: Major
> Time Spent: 5.5h
> Remaining Estimate: 0h
>
> Adding Hazelcast support in the same fashion as in case of Redis for example would be useful. Even further: in order to make it easier to use, embedded Hazelcast support should be added, which makes it unnecessary to start Hazelcast cluster manually.

[GitHub] [nifi] asfgit closed pull request #4510: NIFI-7549 Adding Hazelcast based DistributedMapCacheClient support

asfgit closed pull request #4510:
URL: https://github.com/apache/nifi/pull/4510

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[GitHub] [nifi-minifi-cpp] szaszm edited a comment on pull request #900: MINIFICPP-1350 - Explicit serializer for MergeContent

szaszm edited a comment on pull request #900:
URL: https://github.com/apache/nifi-minifi-cpp/pull/900#issuecomment-714642657

I did some pre-merge manual testing of FFv3. For a while everything worked fine and just as I removed the GenerateFlowFile -> MergeContent connection, NiFi started spewing out these exceptions:
```
2020-10-22 19:16:28,318 ERROR [Timer-Driven Process Thread-6] o.a.n.processors.standard.UnpackContent UnpackContent[id=514003df-0175-1000-9df2-b9696fdbaa96] Unable to unpack StandardFlowFileRecord[uuid=6803473c-2f9c-443f-900a-1f58f8721af2,claim=,offset=0,name=1603386311828679904,size=0] due to org.apache.nifi.processor.exception.ProcessException: IOException thrown from UnpackContent[id=514003df-0175-1000-9df2-b9696fdbaa96]: java.io.IOException: Not in FlowFile-v3 format; routing to failure: org.apache.nifi.processor.exception.ProcessException: IOException thrown from UnpackContent[id=514003df-0175-1000-9df2-b9696fdbaa96]: java.io.IOException: Not in FlowFile-v3 format
org.apache.nifi.processor.exception.ProcessException: IOException thrown from UnpackContent[id=514003df-0175-1000-9df2-b9696fdbaa96]: java.io.IOException: Not in FlowFile-v3 format
    at org.apache.nifi.controller.repository.StandardProcessSession.write(StandardProcessSession.java:2770)
    at org.apache.nifi.processors.standard.UnpackContent$FlowFileStreamUnpacker$1.process(UnpackContent.java:441)
[...]
```
Apparently 0 sized flow files with the mime.type of `application/flowfile-v3` appeared in NiFi after I stopped feeding flow files to MiNiFi C++ MergeContent. If this is expected, then fine, otherwise could you check it?

[GitHub] [nifi-registry] andrewmlim opened a new pull request #308: NIFIREG-349 Add nifi.registry.bootstrap.sensitive.key to Bootstrap Pr…

andrewmlim opened a new pull request #308:
URL: https://github.com/apache/nifi-registry/pull/308

…operties section in Admin Guide

[GitHub] [nifi] kevdoran commented on a change in pull request #4510: NIFI-7549 Adding Hazelcast based DistributedMapCacheClient support

kevdoran commented on a change in pull request #4510:

URL: https://github.com/apache/nifi/pull/4510#discussion_r510242994

##

File path:

nifi-nar-bundles/nifi-hazelcast-bundle/nifi-hazelcast-services/src/main/resources/docs/org.apache.nifi.hazelcast.services.cachemanager.EmbeddedHazelcastCacheManager/additionalDetails.html

##

@@ -0,0 +1,89 @@

+

+

+

+

+

+EmbeddedHazelcastCacheManager

+

+

+

+

+EmbeddedHazelcastCacheManager

Review comment:

Excellent documentation!

##

File path:

nifi-nar-bundles/nifi-hazelcast-bundle/nifi-hazelcast-services/src/main/java/org/apache/nifi/hazelcast/services/cache/IMapBasedHazelcastCache.java

##

@@ -0,0 +1,128 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.nifi.hazelcast.services.cache;

+

+import com.hazelcast.map.IMap;

+import com.hazelcast.map.ReachedMaxSizeException;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+import java.util.function.Predicate;

+

+/**

+ * Implementation of {@link HazelcastCache} backed by Hazelcast's IMap data structure. It's purpose is to wrap Hazelcast implementation specific details in order to

+ * make it possible to easily change version or data structure.

+ */

+public class IMapBasedHazelcastCache implements HazelcastCache {

+private static final Logger LOGGER = LoggerFactory.getLogger(IMapBasedHazelcastCache.class);

+

+private final long ttlInMillis;

+private final IMap<String, byte[]> storage;

+

+/**

+ * @param storage Reference to the actual storage. It should be the IMap with the same identifier as cache name.

+ * @param ttlInMillis The guaranteed lifetime of a cache entry in milliseconds.

+ */

+public IMapBasedHazelcastCache(

+final IMap<String, byte[]> storage,

+final long ttlInMillis) {

+this.ttlInMillis = ttlInMillis;

+this.storage = storage;

+}

+

+@Override

+public String name() {

+return storage.getName();

+}

+

+@Override

+public byte[] get(final String key) {

+return storage.get(key);

+}

+

+@Override

+public byte[] putIfAbsent(final String key, final byte[] value) {

+return storage.putIfAbsent(key, value, ttlInMillis, TimeUnit.MILLISECONDS);

+}

+

+@Override

+public boolean put(final String key, final byte[] value) {

+try {

+storage.put(key, value, ttlInMillis, TimeUnit.MILLISECONDS);

+return true;

+} catch (final ReachedMaxSizeException e) {

+LOGGER.error("Cache {} reached the maximum allowed size!", storage.getName());

+return false;

+}

Review comment:

If Hazelcast 4 is similar to 3 in this regard, then I believe there are some other runtime exceptions that IMap data structures can throw. One in particular is a SplitBrainProtectionException, which can occur on an external HZ cluster if nodes lose connectivity to each other, for example through unwanted network partitioning. It might be worth adding handling for that. Usually the solution would be a configurable number of retries, as the same operation can succeed if called on a HZ instance that is part of the majority/quorum above the split brain protection threshold.
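
The retry idea in the comment above could look roughly like the following. This is only a sketch under stated assumptions, not code from the PR: `TransientCacheException` is a hypothetical stand-in for whatever runtime exception Hazelcast raises on a split brain, and `RetrySketch.withRetries` is an illustrative helper name. No Hazelcast classes are used, so the snippet compiles on its own.

```java
import java.util.function.Supplier;

/** Hypothetical stand-in for a transient cluster error such as a split-brain rejection. */
class TransientCacheException extends RuntimeException {
    TransientCacheException(final String message) {
        super(message);
    }
}

final class RetrySketch {
    private RetrySketch() {
    }

    /**
     * Runs the operation and retries it up to maxRetries additional times when it fails
     * with TransientCacheException. Any other runtime exception propagates immediately.
     */
    static <T> T withRetries(final Supplier<T> operation, final int maxRetries) {
        if (maxRetries < 0) {
            throw new IllegalArgumentException("maxRetries must not be negative");
        }
        TransientCacheException last = null;
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                return operation.get();
            } catch (final TransientCacheException e) {
                last = e; // remember the failure and try again
            }
        }
        throw last; // every attempt failed, so surface the last failure
    }
}
```

In a cache wrapper, a call such as `storage.put(...)` would be passed in as the supplier, with the retry count coming from a configurable property. A real implementation would probably also wait between attempts, which this sketch leaves out.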

[jira] [Created] (MINIFICPP-1398) EL date functions are not available on Windows

Marton Szasz created MINIFICPP-1398:

---

Summary: EL date functions are not available on Windows

Key: MINIFICPP-1398

URL: https://issues.apache.org/jira/browse/MINIFICPP-1398

Project: Apache NiFi MiNiFi C++

Issue Type: Bug

Reporter: Marton Szasz

in Expression.h:

{{#define EXPRESSION_LANGUAGE_USE_DATE}}

{{// Disable date in EL for incompatible compilers}}

{{#if __GNUC__ < 5}}

{{#undef EXPRESSION_LANGUAGE_USE_DATE}}

{{#endif}}

I assume __GNUC__ is evaluated as 0 on Windows and that's the cause.

[GitHub] [nifi] tlsmith109 opened a new pull request #4615: NIFI-5629 Optimize listing before applying batching.

tlsmith109 opened a new pull request #4615:

URL: https://github.com/apache/nifi/pull/4615

Thank you for submitting a contribution to Apache NiFi.

Please provide a short description of the PR here:

Description of PR

Modified standard processor GetFile to prevent blocking on "large directories". Added threaded listing using file input stream and control of listing size to process. This pull request addresses NIFI-5629.
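
To illustrate the "control of listing size" part of the description, here is a minimal sketch that caps how many entries are collected from a directory. It is an assumption about the general technique, not the PR's implementation: it uses `java.nio.file.DirectoryStream` and leaves out the threading, and `BoundedListingSketch` and `listUpTo` are hypothetical names.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

final class BoundedListingSketch {
    private BoundedListingSketch() {
    }

    /**
     * Returns at most maxEntries regular files from the directory. DirectoryStream is
     * iterated incrementally, so the loop can stop early instead of collecting every
     * entry of a very large directory first.
     */
    static List<Path> listUpTo(final Path directory, final int maxEntries) throws IOException {
        final List<Path> batch = new ArrayList<>();
        if (maxEntries <= 0) {
            return batch;
        }
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(directory)) {
            for (final Path entry : stream) {
                if (Files.isRegularFile(entry)) {
                    batch.add(entry);
                    if (batch.size() >= maxEntries) {
                        break; // cap reached, leave the rest for a later run
                    }
                }
            }
        }
        return batch;
    }
}
```

The order in which `DirectoryStream` returns entries is not specified, so a processor relying on this would still need its own sorting or filtering on the returned batch.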

In order to streamline the review of the contribution we ask you to ensure the following steps have been taken:

### For all changes:

- [ ] Is there a JIRA ticket associated with this PR? Is it referenced in the commit message?

- [ ] Does your PR title start with **NIFI-XXXX** where **XXXX** is the JIRA number you are trying to resolve? Pay particular attention to the hyphen "-" character.

- [ ] Has your PR been rebased against the latest commit within the target branch (typically `main`)?

- [ ] Is your initial contribution a single, squashed commit? _Additional commits in response to PR reviewer feedback should be made on this branch and pushed to allow change tracking. Do not `squash` or use `--force` when pushing to allow for clean monitoring of changes._

### For code changes:

- [ ] Have you ensured that the full suite of tests is executed via `mvn -Pcontrib-check clean install` at the root `nifi` folder?

- [ ] Have you written or updated unit tests to verify your changes?

- [ ] Have you verified that the full build is successful on JDK 8?

- [ ] Have you verified that the full build is successful on JDK 11?

- [ ] If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under [ASF 2.0](http://www.apache.org/legal/resolved.html#category-a)?

- [ ] If applicable, have you updated the `LICENSE` file, including the main `LICENSE` file under `nifi-assembly`?

- [ ] If applicable, have you updated the `NOTICE` file, including the main `NOTICE` file found under `nifi-assembly`?

- [ ] If adding new Properties, have you added `.displayName` in addition to .name (programmatic access) for each of the new properties?

### For documentation related changes:

- [ ] Have you ensured that format looks appropriate for the output in which it is rendered?

### Note:

Please ensure that once the PR is submitted, you check GitHub Actions CI for build issues and submit an update to your PR as soon as possible.

[jira] [Updated] (NIFI-7939) Github CI/Actions has changed - requires updates to build config

[ https://issues.apache.org/jira/browse/NIFI-7939?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Joe Witt updated NIFI-7939:
---
Fix Version/s: 1.13.0

> Github CI/Actions has changed - requires updates to build config
> ---
>
> Key: NIFI-7939
> URL: https://issues.apache.org/jira/browse/NIFI-7939
> Project: Apache NiFi
> Issue Type: Task
> Reporter: Joe Witt
> Assignee: Joe Witt
> Priority: Major
> Fix For: 1.13.0
>
> In https://github.com/apache/nifi/actions/runs/311573127 we see a lot of things like
>
> github-actions / Windows - JDK 1.8
> .github#L1
> The `set-env` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / Windows - JDK 1.8
> .github#L1
> The `set-env` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / Windows - JDK 1.8
> .github#L1
> The `add-path` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / Ubuntu - JDK 11 EN
> .github#L1
> The `set-env` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / Ubuntu - JDK 11 EN
> .github#L1
> The `set-env` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / Ubuntu - JDK 11 EN
> .github#L1
> The `add-path` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / Ubuntu - JDK 1.8 FR
> .github#L1
> The `set-env` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / Ubuntu - JDK 1.8 FR
> .github#L1
> The `set-env` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / Ubuntu - JDK 1.8 FR
> .github#L1
> The `add-path` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / MacOS - JDK 1.8 JP
> .github#L1
> The `set-env` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / MacOS - JDK 1.8 JP
> .github#L1
> The `set-env` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/
> Check warning on line 1 in .github
> @github-actions
> github-actions / MacOS - JDK 1.8 JP
> .github#L1
> The `add-path` command is deprecated and will be disabled soon. Please upgrade to using Environment Files. For more information see: https://github.blog/changelog/2020-10-01-github-actions-deprecating-set-env-and-add-path-commands/

[jira] [Resolved] (NIFI-7939) Github CI/Actions has changed - requires updates to build config

[ https://issues.apache.org/jira/browse/NIFI-7939?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Joe Witt resolved NIFI-7939.
Resolution: Fixed

[jira] [Commented] (NIFI-7939) Github CI/Actions has changed - requires updates to build config

[ https://issues.apache.org/jira/browse/NIFI-7939?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219041#comment-17219041 ]

ASF subversion and git services commented on NIFI-7939:
---

Commit d1bfa679276e4d73f10a2d0287ca76b4791c8a00 in nifi's branch refs/heads/main from Joe Witt
[ https://gitbox.apache.org/repos/asf?p=nifi.git;h=d1bfa67 ]

NIFI-7939 improving version ranges to fix usage of now deprecated github actions commands

self merging given nature of change

Signed-off-by: Joe Witt

[jira] [Updated] (NIFI-7940) Create a ScriptedPartitionRecord

[ https://issues.apache.org/jira/browse/NIFI-7940?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Mark Payne updated NIFI-7940:
-
Description:

In 1.12.0, we introduced the ScriptedTransformRecord. This has worked very well for many different transformations that are simple in code but very difficult with the DSLs that NiFi supports.

In addition to transforming records, another use case that can be made dramatically easier with scripting is partitioning records. The PartitionRecord processor is very powerful and easy to use, but RecordPath is somewhat limited in the functions that it provides.

For example, recently in the Apache Slack channel, we had someone asking about how to route data based on whether or not the "timestamp" field matches a given regular expression. Attempts were made using QueryRecord with RPATH, but that didn't work because the timestamp field is a top-level field, not a Record. UpdateRecord was tried next, but that failed because the matchesRegex function of RecordPath is a predicate and so can't be used to partition on. Eventually a pattern was found with QueryRecord using `SIMILAR TO`, but that function does not support regular expressions. A scripted processor would likely make this far more trivial to handle.

This processor should be focused around making it dead simple to partition (and subsequently route based on added attributes) records with a scripting language. So, it will be important, like ScriptedTransformRecord, to make the processor geared more toward ease of use than being given the full power of FlowFiles, sessions, etc.

The script should have the same bindings as ScriptedTransformRecord:
* attributes
* log
* record
* recordIndex

Unlike ScriptedTransformRecord, though, the ScriptedPartitionRecord should return one of three things:
* A string (or a primitive value such as an int, that can be turned into a String) representing the partition for the Record
* A collection of strings/primitives representing multiple partitions that the Record should go to (indicating that the Record should be added to multiple Record Writers)
* A null value or an empty collection indicating that the Record should be dropped.

The processor should then write the Record to a FlowFile for each of the Partitions returned. For each outbound FlowFile, an attribute should be added indicating the partition for that FlowFile for easy follow-on routing via RouteOnAttribute, etc.

The processor should keep a counter for how many Records were dropped. The processor should keep counters for how many Records were routed to each Partition.

The processor should include additionalDetails.html to provide sufficient documentation. Similar to ScriptedTransformRecord, the documentation should include several examples, spanning at least Groovy and Python (since those are by far the most often used languages we see used in script processors). Each example provided in the additionalDetails.html should also have an accompanying unit test to verify the behavior.

Given the similarities to the ScriptedTransformRecord processor, there's a high likelihood that the ScriptedTransformRecord processor could be refactored into an AbstractScriptedRecord processor with both ScriptedTransformRecord and ScriptedPartitionRecord extending from it.

[jira] [Created] (NIFI-7940) Create a ScriptedPartitionRecord

Mark Payne created NIFI-7940:

Summary: Create a ScriptedPartitionRecord
Key: NIFI-7940
URL: https://issues.apache.org/jira/browse/NIFI-7940
Project: Apache NiFi
Issue Type: Bug
Components: Extensions
Reporter: Mark Payne

In 1.12.0, we introduced the ScriptedTransformRecord. This has worked very well for many different transformations that are simple in code but very difficult with the DSLs that NiFi supports.

In addition to transforming records, another use case that can be made dramatically easier with scripting is partitioning records. The PartitionRecord processor is very powerful and easy to use, but RecordPath is somewhat limited in the functions that it provides.

For example, recently in the Apache Slack channel, someone asked how to route data based on whether or not the "timestamp" field matches a given regular expression. Attempts were made using QueryRecord with RPATH, but that didn't work because the timestamp field is a top-level field, not a Record. An attempt with UpdateRecord failed because the matchesRegex function of RecordPath is a predicate and so can't be used to partition on. Eventually a pattern was found with QueryRecord using `SIMILAR TO`, but that function does not support regular expressions. A Scripted processor would likely make this far more trivial to handle.

This processor should be focused on making it dead simple to partition records (and subsequently route them based on added attributes) with a scripting language. So it will be important, as with ScriptedTransformRecord, to gear the processor more toward ease of use than toward exposing the full power of FlowFiles, sessions, etc.
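For illustration only, the "timestamp" use case described in this issue might reduce to a script along these lines. This is a minimal sketch and not the proposed processor's actual contract: the `partition_for` function, the partition names, the timestamp pattern, and the use of a plain dict in place of the `record` binding are all assumptions made for the example.

```python
import re

# Hypothetical pattern: timestamps shaped like "YYYY-MM-DD hh:mm:ss".
TIMESTAMP_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}")


def partition_for(record):
    """Return a partition name based on the record's "timestamp" field.

    `record` is a plain dict standing in for the `record` binding;
    the real binding would be a NiFi Record object.
    """
    value = record.get("timestamp")
    # fullmatch requires the whole field value to match the pattern.
    if value is not None and TIMESTAMP_PATTERN.fullmatch(str(value)):
        return "valid-timestamp"
    return "invalid-timestamp"
```

The returned string would then become the partition attribute on the outbound FlowFile, which a downstream RouteOnAttribute could match on.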
The script should have the same bindings as ScriptedTransformRecord:
* attributes
* log
* record
* recordIndex

Unlike ScriptedTransformRecord, though, the ScriptedPartitionRecord should return one of three things:
* A string (or a primitive value, such as an int, that can be turned into a String) representing the partition for the Record
* A collection of strings/primitives representing multiple partitions that the Record should go to (indicating that the Record should be added to multiple Record Writers)
* A null value or an empty collection indicating that the Record should be dropped.

The processor should then write the Record to a FlowFile for each of the Partitions returned. For each outbound FlowFile, an attribute should be added indicating the partition for that FlowFile for easy follow-on routing via RouteOnAttribute, etc.

The processor should keep a counter for how many Records were dropped. The processor should keep counters for how many Records were routed to each Partition.

The processor should include additionalDetails.html to provide sufficient documentation. Similar to ScriptedTransformRecord, the documentation should include several examples, spanning at least Groovy and Python (since those are by far the languages we see used most often in script processors). Each example provided in the additionalDetails.html should also have an accompanying unit test to verify the behavior.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)
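As a rough sketch of the return-value rules in the issue above (a single value, a collection, or null/empty meaning "drop"), the handling could look like the following. The function names `normalize_partitions` and `route`, the in-memory lists standing in for FlowFiles, and the two-argument `script(record, record_index)` callable are assumptions for illustration; they are not NiFi APIs.

```python
from collections import Counter, defaultdict


def normalize_partitions(result):
    """Turn a script's return value into a list of partition names."""
    if result is None:
        return []
    # A string or a non-iterable primitive names exactly one partition.
    if isinstance(result, str) or not hasattr(result, "__iter__"):
        return [str(result)]
    # Any other iterable is treated as a collection of partition names.
    return [str(item) for item in result]


def route(records, script):
    """Apply `script` to each record and group records by partition.

    Returns (partitions, routed_counts, dropped_count), where each
    partition's list stands in for one outbound FlowFile.
    """
    partitions = defaultdict(list)
    routed_counts = Counter()
    dropped_count = 0
    for record_index, record in enumerate(records):
        names = normalize_partitions(script(record, record_index))
        if not names:
            dropped_count += 1
            continue
        for name in names:
            partitions[name].append(record)
            routed_counts[name] += 1
    return dict(partitions), routed_counts, dropped_count
```

Keeping the dropped count separate from the per-partition counters avoids a clash if a script ever returns a partition literally named "dropped".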

[GitHub] [nifi] naddym commented on pull request #4588: NIFI-7904: Support for validation query timeout in DBCP,Hive and HBas…

naddym commented on pull request #4588:

URL: https://github.com/apache/nifi/pull/4588#issuecomment-714372371

Sure @mattyb149. We have a REST API based dataflow that accepts requests and returns responses after a series of manipulations, some of which involve database connections. Sometimes we see the connection validation wait forever when a database access error occurs because of a database refresh/clone. Our DBA team generally does a database refresh/clone once or twice a month. This is a rare scenario, but when it happens we never get a response for the failed request because it hangs waiting. Adding a timeout to the connection validation forces it to time out, so a failure response is returned and the request completes.

Thanks for pointing out Hive_1.1ConnectionPool, I completely missed it. I have changed that as well. Thanks again.

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org
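The general idea of bounding a validation check with a timeout can be sketched as below. This is only a stand-in built on Python's `concurrent.futures`; it is not the DBCP, Hive, or HBase configuration that the pull request changes, and `validate_with_timeout` and `slow_validation` are hypothetical names used for the example.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def validate_with_timeout(validate, timeout_seconds):
    """Run `validate()` and return its result as a bool.

    Returns False if the check raises or does not finish within
    `timeout_seconds`, so the caller can fail fast instead of hanging.
    """
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(validate)
    try:
        return bool(future.result(timeout=timeout_seconds))
    except Exception:
        # Covers both a timeout and an error raised by the check itself.
        return False
    finally:
        # Do not block on a validation that is still running.
        executor.shutdown(wait=False)


def slow_validation():
    """Simulate a validation query that takes too long to answer."""
    time.sleep(0.5)
    return True
```

With a timeout shorter than the simulated delay, for example `validate_with_timeout(slow_validation, 0.05)`, the caller gets a failure result promptly and can return an error response rather than waiting on the hung check.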

[GitHub] [nifi] simonbence commented on a change in pull request #4510: NIFI-7549 Adding Hazelcast based DistributedMapCacheClient support

simonbence commented on a change in pull request #4510:

URL: https://github.com/apache/nifi/pull/4510#discussion_r509949183

##

File path:

nifi-nar-bundles/nifi-hazelcast-bundle/nifi-hazelcast-services/src/main/java/org/apache/nifi/hazelcast/services/cachemanager/EmbeddedHazelcastCacheManager.java

##

@@ -0,0 +1,244 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.nifi.hazelcast.services.cachemanager;

+

+import com.hazelcast.config.Config;

+import com.hazelcast.config.NetworkConfig;

+import com.hazelcast.config.TcpIpConfig;

+import com.hazelcast.core.Hazelcast;

+import com.hazelcast.core.HazelcastInstance;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.components.AllowableValue;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.components.ValidationContext;

+import org.apache.nifi.components.ValidationResult;

+import org.apache.nifi.context.PropertyContext;

+import org.apache.nifi.controller.ConfigurationContext;

+import org.apache.nifi.expression.ExpressionLanguageScope;

+import org.apache.nifi.processor.exception.ProcessException;

+import org.apache.nifi.processor.util.StandardValidators;

+

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Set;

+import java.util.UUID;

+import java.util.concurrent.TimeUnit;

+import java.util.stream.Collectors;

+

+@Tags({"hazelcast", "cache"})

+@CapabilityDescription("A service that runs embedded Hazelcast and provides cache instances backed by that." +

+        " The server does not ask for authentication, it is recommended to run it within secured network.")

+public class EmbeddedHazelcastCacheManager extends IMapBasedHazelcastCacheManager {

+

+    private static final int DEFAULT_HAZELCAST_PORT = 5701;

+    private static final String PORT_SEPARATOR = ":";

+    private static final String INSTANCE_CREATION_LOG = "Embedded Hazelcast server instance with instance name %s has been created successfully";

+    private static final String MEMBER_LIST_LOG = "Hazelcast cluster will be created based on the NiFi cluster with the following members: %s";

+

+    private static final AllowableValue CLUSTER_NONE = new AllowableValue("none", "None", "No high availability or data replication is provided," +

+            " every node has access only to the data stored locally.");

+    private static final AllowableValue CLUSTER_ALL_NODES = new AllowableValue("all_nodes", "All Nodes", "Creates Hazelcast cluster based on the NiFi cluster:" +

+            " It expects every NiFi nodes to have a running Hazelcast instance on the same port as specified in the Hazelcast Port property. No explicit listing of the" +

+            " instances is needed.");

+    private static final AllowableValue CLUSTER_EXPLICIT = new AllowableValue("explicit", "Explicit", "Works with an explicit list of Hazelcast instances," +

+            " creating a cluster using the listed instances. This provides greater control, making it possible to utilize only certain nodes as Hazelcast servers." +

+            " The list of Hazelcast instances can be set in the property \"Hazelcast Instances\". The list items must refer to hosts within the NiFi cluster, no external Hazelcast" +

+            " is allowed. NiFi nodes are not listed will be join to the Hazelcast cluster as clients.");

+

+    private static final PropertyDescriptor HAZELCAST_PORT = new PropertyDescriptor.Builder()

+            .name("hazelcast-port")

+            .displayName("Hazelcast Port")

+            .description("Port for the Hazelcast instance to use.")

+            .required(true)

+            .defaultValue(String.valueOf(DEFAULT_HAZELCAST_PORT))

+            .addValidator(StandardValidators.PORT_VALIDATOR)

+            .expressionLanguageSupported(ExpressionLanguageScope.VARIABLE_REGISTRY)

+            .build();

+

+    private static final PropertyDescriptor HAZELCAST_CLUSTERING_STRATEGY = new PropertyDescriptor.Builder()

+            .name("hazelcast-clustering-strategy")

+

[GitHub] [nifi] simonbence commented on a change in pull request #4510: NIFI-7549 Adding Hazelcast based DistributedMapCacheClient support

simonbence commented on a change in pull request #4510:

URL: https://github.com/apache/nifi/pull/4510#discussion_r509948525

##

File path:

nifi-nar-bundles/nifi-hazelcast-bundle/nifi-hazelcast-services/src/main/java/org/apache/nifi/hazelcast/services/cachemanager/EmbeddedHazelcastCacheManager.java

##

@@ -0,0 +1,244 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.nifi.hazelcast.services.cachemanager;

+

+import com.hazelcast.config.Config;

+import com.hazelcast.config.NetworkConfig;

+import com.hazelcast.config.TcpIpConfig;

+import com.hazelcast.core.Hazelcast;

+import com.hazelcast.core.HazelcastInstance;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.components.AllowableValue;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.components.ValidationContext;

+import org.apache.nifi.components.ValidationResult;

+import org.apache.nifi.context.PropertyContext;

+import org.apache.nifi.controller.ConfigurationContext;

+import org.apache.nifi.expression.ExpressionLanguageScope;

+import org.apache.nifi.processor.exception.ProcessException;

+import org.apache.nifi.processor.util.StandardValidators;

+

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.HashSet;