[GitHub] spark pull request #18429: [SPARK-21222] Move elimination of Distinct clause...

Github user rxin commented on a diff in the pull request:

https://github.com/apache/spark/pull/18429#discussion_r124457557

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala ---

@@ -152,6 +153,19 @@ abstract class Optimizer(sessionCatalog: SessionCatalog, conf: SQLConf)

}

/**

+ * Remove useless DISTINCT for MAX and MIN.

+ * This rule should be applied before RewriteDistinctAggregates.

+ */

+object EliminateDistinct extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformExpressions {

+case ae: AggregateExpression if ae.isDistinct =>

+ ae.aggregateFunction match {

+case _: Max | _: Min => ae.copy(isDistinct = false)

--- End diff --

Actually, I made a mistake earlier. You'd also need to match the other cases

and return the `ae` itself.
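
To illustrate the point, here is a minimal sketch, assuming simplified stand-in types rather than the real Catalyst classes (`EliminateDistinctSketch`, its `AggregateExpression` and the `Sum` case are hypothetical). It shows why the inner `match` needs a default case: without it, a DISTINCT aggregate other than `Max` or `Min` would fail with a `scala.MatchError` instead of being left unchanged.

```scala
// Simplified stand-ins for Catalyst classes; these are NOT the real Spark types.
object EliminateDistinctSketch {
  sealed trait AggregateFunction
  case object Max extends AggregateFunction
  case object Min extends AggregateFunction
  case object Sum extends AggregateFunction

  final case class AggregateExpression(
      aggregateFunction: AggregateFunction,
      isDistinct: Boolean)

  // A partial function, in the spirit of the one passed to transformExpressions.
  val rule: PartialFunction[AggregateExpression, AggregateExpression] = {
    case ae: AggregateExpression if ae.isDistinct =>
      ae.aggregateFunction match {
        // DISTINCT does not change the result of MAX or MIN, so drop it.
        case Max | Min => ae.copy(isDistinct = false)
        // Every other function must be returned untouched; omitting this
        // case would throw scala.MatchError for e.g. a DISTINCT Sum.
        case _ => ae
      }
  }
}
```

An alternative with the same effect would be to move the `Max`/`Min` check into the outer pattern guard, so that the partial function is simply not defined for other aggregate functions.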

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18371: [SPARK-20889][SparkR] Grouped documentation for M...

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18371#discussion_r124457521

--- Diff: R/pkg/R/functions.R ---

@@ -41,14 +41,21 @@ NULL

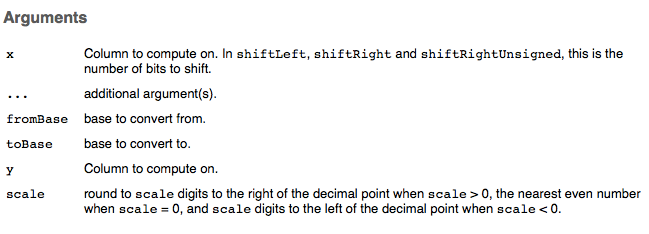

#' @param x Column to compute on. In \code{shiftLeft}, \code{shiftRight} and \code{shiftRightUnsigned},

#' this is the number of bits to shift.

#' @param y Column to compute on.

-#' @param ... additional argument(s). For example, it could be used to pass additional Columns.

--- End diff --

Right, `...` is not used in any of these functions here.

But it is still documented because one of the generic methods `bround` has

it.

[GitHub] spark pull request #18421: [SPARK-21213][SQL] Support collecting partition-l...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/18421#discussion_r124457412

--- Diff: sql/core/src/test/scala/org/apache/spark/sql/execution/SparkSqlParserSuite.scala ---

@@ -239,18 +239,20 @@ class SparkSqlParserSuite extends AnalysisTest {

AnalyzeTableCommand(TableIdentifier("t"), noscan = false))

assertEqual("analyze table t compute statistics noscan",

AnalyzeTableCommand(TableIdentifier("t"), noscan = true))

-assertEqual("analyze table t partition (a) compute statistics nOscAn",

+assertEqual("analyze table t compute statistics nOscAn",

AnalyzeTableCommand(TableIdentifier("t"), noscan = true))

-// Partitions specified - we currently parse them but don't do anything with it

+// Partitions specified

assertEqual("ANALYZE TABLE t PARTITION(ds='2008-04-09', hr=11) COMPUTE STATISTICS",

- AnalyzeTableCommand(TableIdentifier("t"), noscan = false))

+ AnalyzeTableCommand(TableIdentifier("t"), noscan = false,

+partitionSpec = Some(Map("ds" -> "2008-04-09", "hr" -> "11"

assertEqual("ANALYZE TABLE t PARTITION(ds='2008-04-09', hr=11) COMPUTE STATISTICS noscan",

- AnalyzeTableCommand(TableIdentifier("t"), noscan = true))

-assertEqual("ANALYZE TABLE t PARTITION(ds, hr) COMPUTE STATISTICS",

- AnalyzeTableCommand(TableIdentifier("t"), noscan = false))

-assertEqual("ANALYZE TABLE t PARTITION(ds, hr) COMPUTE STATISTICS noscan",

- AnalyzeTableCommand(TableIdentifier("t"), noscan = true))

+ AnalyzeTableCommand(TableIdentifier("t"), noscan = true,

+partitionSpec = Some(Map("ds" -> "2008-04-09", "hr" -> "11"

+intercept("ANALYZE TABLE t PARTITION(ds, hr) COMPUTE STATISTICS",

--- End diff --

I am fine with this. Please document it in your PR description and in the

descriptions of `visitAnalyze` and `AnalyzeTableCommand`.

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18301 Merged build finished. Test FAILed.

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18301 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78748/ Test FAILed.

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18301 **[Test build #78748 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78748/testReport)** for PR 18301 at commit [`6c5b318`](https://github.com/apache/spark/commit/6c5b3188c10a7aa066d4212e98fc93b09cea7b16). * This patch **fails PySpark pip packaging tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #18431: [SPARK-21224][R] Specify a schema by using a DDL-formatt...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18431 **[Test build #78757 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78757/testReport)** for PR 18431 at commit [`7c47934`](https://github.com/apache/spark/commit/7c4793473dec38f1d5da185ab743e0bd21afa5c9).

[GitHub] spark issue #18366: [SPARK-20889][SparkR] Grouped documentation for STRING c...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18366 **[Test build #78759 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78759/testReport)** for PR 18366 at commit [`0ba66fd`](https://github.com/apache/spark/commit/0ba66fd00b76874adbc80c483cfad735bdaf11f5).

[GitHub] spark issue #18429: [SPARK-21222] Move elimination of Distinct clause from a...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18429 **[Test build #78758 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78758/testReport)** for PR 18429 at commit [`536aae2`](https://github.com/apache/spark/commit/536aae27b2e85ecf7f6e3d1e38fd4c93afe8ab4b).

[GitHub] spark issue #18366: [SPARK-20889][SparkR] Grouped documentation for STRING c...

Github user actuaryzhang commented on the issue: https://github.com/apache/spark/pull/18366 OK. Incorporated your suggested changes now.

[GitHub] spark issue #18334: [SPARK-21127] [SQL] Update statistics after data changin...

Github user wzhfy commented on the issue: https://github.com/apache/spark/pull/18334 @cloud-fan OK. I'll create another ticket for invalidating stats.

[GitHub] spark issue #18440: [SPARK-21229][SQL] remove QueryPlan.preCanonicalized

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/18440 How about improving [the description](https://github.com/apache/spark/blob/master/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/QueryPlan.scala#L191) of `canonicalized` in `QueryPlan`? We might forget to normalize the expression when overriding `canonicalized`. We already have a few PRs to fix this issue. For example, [SPARK-20718](https://issues.apache.org/jira/browse/SPARK-20718).

[GitHub] spark issue #18442: [SS][Minor] Fix flaky test in DatastreamReaderWriterSuit...

Github user tdas commented on the issue: https://github.com/apache/spark/pull/18442 jenkins retest this please

[GitHub] spark pull request #18431: [SPARK-21224][R] Specify a schema by using a DDL-...

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18431#discussion_r124456659

--- Diff: R/pkg/R/SQLContext.R ---

@@ -584,7 +584,7 @@ tableToDF <- function(tableName) {

#'

#' @param path The path of files to load

#' @param source The name of external data source

-#' @param schema The data schema defined in structType

+#' @param schema The data schema defined in structType or a DDL-formatted string.

--- End diff --

ok, a fair point

[GitHub] spark pull request #18334: [SPARK-21127] [SQL] Update statistics after data ...

Github user wzhfy commented on a diff in the pull request:

https://github.com/apache/spark/pull/18334#discussion_r124456568

--- Diff: sql/hive/src/test/scala/org/apache/spark/sql/hive/StatisticsSuite.scala ---

@@ -448,6 +433,145 @@ class StatisticsSuite extends StatisticsCollectionTestBase with TestHiveSingleto

"ALTER TABLE unset_prop_table UNSET TBLPROPERTIES ('prop1')")

}

+ /**

+ * To see if stats exist, we need to check spark's stats properties instead of catalog

+ * statistics, because hive would change stats in metastore and thus change catalog statistics.

--- End diff --

Hive updates stats for some formats, e.g. in the test `test statistics of

LogicalRelation converted from Hive serde tables`, Hive updates stats for the ORC

table but not the Parquet table.

I think it's tricky and fussy to rely on Hive's stats; we should rely on

Spark's stats. Here I just eliminate Hive's influence on the check. Because

`CatalogStatistics` is first filled with Hive's stats and then overridden with

Spark's stats, we can't tell where the stats come from if we check

`CatalogStatistics`.
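
The fill-then-override behaviour described above can be sketched as follows. This is only an illustration under stated assumptions: `StatsOverrideSketch`, `Stats` and `catalogStats` are hypothetical names and do not reproduce Spark's actual `CatalogStatistics` handling. The point is that once the two sources are merged, the merged value carries no trace of its origin.

```scala
// Hypothetical model of "fill with Hive's stats, then override with Spark's".
object StatsOverrideSketch {
  final case class Stats(sizeInBytes: BigInt, rowCount: Option[BigInt])

  // Spark's stats, when present, replace Hive's. The returned value does
  // not record which of the two sources it came from.
  def catalogStats(hive: Option[Stats], spark: Option[Stats]): Option[Stats] =
    spark.orElse(hive)
}
```

With this model, `catalogStats(Some(s), None)` and `catalogStats(None, Some(s))` return the same value, so a test that wants to know whether Spark's stats exist has to look at Spark's own stats properties directly.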

[GitHub] spark issue #18429: [SPARK-21222] Move elimination of Distinct clause from a...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18429 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78747/ Test FAILed.

[GitHub] spark issue #18429: [SPARK-21222] Move elimination of Distinct clause from a...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18429 Merged build finished. Test FAILed.

[GitHub] spark issue #18429: [SPARK-21222] Move elimination of Distinct clause from a...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18429 **[Test build #78747 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78747/testReport)** for PR 18429 at commit [`08f61f4`](https://github.com/apache/spark/commit/08f61f40db06174043a58c4dc6dc9e20c9107aef). * This patch **fails PySpark pip packaging tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #18431: [SPARK-21224][R] Specify a schema by using a DDL-...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/18431#discussion_r124456111

--- Diff: R/pkg/R/SQLContext.R ---

@@ -584,7 +584,7 @@ tableToDF <- function(tableName) {

#'

#' @param path The path of files to load

#' @param source The name of external data source

-#' @param schema The data schema defined in structType

+#' @param schema The data schema defined in structType or a DDL-formatted string.

--- End diff --

But it is `data schema ... in a DDL-formatted string`. I get the point, but

I am quite sure users would probably be less confused (and an example is also

provided). I actually don't know how I can explain this more briefly and cleanly ...

[GitHub] spark pull request #18023: [SPARK-12139] [SQL] REGEX Column Specification

Github user janewangfb commented on a diff in the pull request:

https://github.com/apache/spark/pull/18023#discussion_r124456075

--- Diff: sql/core/src/test/scala/org/apache/spark/sql/DatasetSuite.scala ---

@@ -244,6 +244,71 @@ class DatasetSuite extends QueryTest with SharedSQLContext {

("a", ClassData("a", 1)), ("b", ClassData("b", 2)), ("c", ClassData("c", 3)))

}

+ test("REGEX column specification") {

+val ds = Seq(("a", 1), ("b", 2), ("c", 3)).toDS()

+

+intercept[AnalysisException] {

+ ds.select(expr("`(_1)?+.+`").as[Int])

+}

+

+intercept[AnalysisException] {

+ ds.select(expr("`(_1|_2)`").as[Int])

+}

+

+intercept[AnalysisException] {

+ ds.select(ds("`(_1)?+.+`"))

+}

+

+intercept[AnalysisException] {

+ ds.select(ds("`(_1|_2)`"))

+}

--- End diff --

updated with message

[GitHub] spark pull request #18023: [SPARK-12139] [SQL] REGEX Column Specification

Github user janewangfb commented on a diff in the pull request:

https://github.com/apache/spark/pull/18023#discussion_r124456024

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/unresolved.scala ---

@@ -123,7 +124,14 @@ case class UnresolvedAttribute(nameParts: Seq[String]) extends Attribute with Un

override def toString: String = s"'$name"

- override def sql: String = quoteIdentifier(name)

+ override def sql: String = {

+name match {

+ case ParserUtils.escapedIdentifier(_) |

+ ParserUtils.qualifiedEscapedIdentifier(_, _) => name

--- End diff --

shortened

[GitHub] spark pull request #18023: [SPARK-12139] [SQL] REGEX Column Specification

Github user janewangfb commented on a diff in the pull request:

https://github.com/apache/spark/pull/18023#discussion_r124455888

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -1189,8 +1189,24 @@ class Dataset[T] private[sql](

case "*" =>

Column(ResolvedStar(queryExecution.analyzed.output))

case _ =>

- val expr = resolve(colName)

- Column(expr)

+ if (sqlContext.conf.supportQuotedRegexColumnName) {

+colRegex(colName)

+ } else {

+val expr = resolve(colName)

+Column(expr)

+ }

+ }

+

+ /**

+ * Selects column based on the column name specified as a regex and return it as [[Column]].

--- End diff --

added

[GitHub] spark pull request #18429: [SPARK-21222] Move elimination of Distinct clause...

Github user gengliangwang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18429#discussion_r124455883

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala ---

@@ -152,6 +153,19 @@ abstract class Optimizer(sessionCatalog: SessionCatalog, conf: SQLConf)

}

/**

+ * Remove useless DISTINCT for MAX and MIN.

+ * This rule should be applied before RewriteDistinctAggregates.

+ */

+object EliminateDistinct extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformExpressions {

+case AggregateExpression(max: Max, mode: AggregateMode, true, _) =>

--- End diff --

Got it, appreciate your help!

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/18445 To run the Scala style check, you can run this command on your computer: > dev/lint-scala

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18445 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78755/ Test FAILed.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18445 **[Test build #78755 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78755/testReport)** for PR 18445 at commit [`dde9123`](https://github.com/apache/spark/commit/dde91236ecf54d9ede76bf888dda1499c7cd225f). * This patch **fails Scala style tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #18438: [SPARK-18294][CORE] Implement commit protocol to support...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18438 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78746/ Test FAILed.

[GitHub] spark pull request #18445: [Spark-19726][SQL] Faild to insert null timestamp...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/18445#discussion_r124455697

--- Diff: sql/core/src/test/scala/org/apache/spark/sql/jdbc/JDBCWriteSuite.scala ---

@@ -20,10 +20,10 @@ package org.apache.spark.sql.jdbc

import java.sql.{Date, DriverManager, Timestamp}

import java.util.Properties

-import scala.collection.JavaConverters.propertiesAsScalaMapConverter

+import org.apache.spark.SparkException

--- End diff --

Move this to line 27

[GitHub] spark issue #18438: [SPARK-18294][CORE] Implement commit protocol to support...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18438 Merged build finished. Test FAILed.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18445 Merged build finished. Test FAILed.

[GitHub] spark issue #18438: [SPARK-18294][CORE] Implement commit protocol to support...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18438 **[Test build #78746 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78746/testReport)** for PR 18438 at commit [`80884b7`](https://github.com/apache/spark/commit/80884b7de35758e17a3b2f17656cbe8cc0785d94). * This patch **fails PySpark pip packaging tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #18023: [SPARK-12139] [SQL] REGEX Column Specification

Github user janewangfb commented on a diff in the pull request:

https://github.com/apache/spark/pull/18023#discussion_r124455687

--- Diff: sql/core/src/test/resources/sql-tests/inputs/query_regex_column.sql ---

@@ -0,0 +1,24 @@
+CREATE OR REPLACE TEMPORARY VIEW testData AS SELECT * FROM VALUES
+(1, "1", "11"), (2, "2", "22"), (3, "3", "33"), (4, "4", "44"), (5, "5", "55"), (6, "6", "66")
+AS testData(key, value1, value2);
+
+CREATE OR REPLACE TEMPORARY VIEW testData2 AS SELECT * FROM VALUES
+(1, 1, 1, 2), (1, 2, 1, 2), (2, 1, 2, 3), (2, 2, 2, 3), (3, 1, 3, 4), (3, 2, 3, 4)
+AS testData2(a, b, c, d);
+
+-- AnalysisException
+SELECT `(a)?+.+` FROM testData2 WHERE a = 1;
+SELECT t.`(a)?+.+` FROM testData2 t WHERE a = 1;
+SELECT `(a|b)` FROM testData2 WHERE a = 2;
+SELECT `(a|b)?+.+` FROM testData2 WHERE a = 2;
+
+set spark.sql.parser.quotedRegexColumnNames=true;
+
+-- Regex columns
+SELECT `(a)?+.+` FROM testData2 WHERE a = 1;
+SELECT t.`(a)?+.+` FROM testData2 t WHERE a = 1;
+SELECT `(a|b)` FROM testData2 WHERE a = 2;
+SELECT `(a|b)?+.+` FROM testData2 WHERE a = 2;
+SELECT `(e|f)` FROM testData2;
+SELECT t.`(e|f)` FROM testData2 t;
+SELECT p.`(key)?+.+`, b, testdata2.`(b)?+.+` FROM testData p join testData2 ON p.key = testData2.a WHERE key < 3;

--- End diff --

added
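
For readers unfamiliar with the patterns used in these test queries, the sketch below shows how plain `java.util.regex` treats them when matched against whole column names. It is an illustration only: `RegexColumnSketch` and `selectByRegex` are hypothetical helpers, and nothing here claims to reproduce how Spark resolves quoted regex column names internally.

```scala
import java.util.regex.Pattern

// Hypothetical helper: keeps the column names that the regex matches in full.
object RegexColumnSketch {
  def selectByRegex(columns: Seq[String], regex: String): Seq[String] = {
    val pattern = Pattern.compile(regex)
    // matches() requires the whole name to match, not just a substring.
    columns.filter(name => pattern.matcher(name).matches())
  }
}
```

Under these assumptions, `selectByRegex(Seq("a", "b", "c", "d"), "(a)?+.+")` keeps `b`, `c` and `d`: the possessive `?+` consumes the `a` and never gives it back, so the trailing `.+` has nothing left to match on the name `a`. The pattern `(a|b)` keeps only `a` and `b`, and `(e|f)` keeps nothing for this column list.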

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18445 **[Test build #78755 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78755/testReport)** for PR 18445 at commit [`dde9123`](https://github.com/apache/spark/commit/dde91236ecf54d9ede76bf888dda1499c7cd225f).

[GitHub] spark pull request #18445: [Spark-19726][SQL] Faild to insert null timestamp...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/18445#discussion_r124455651

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala

---

@@ -273,6 +273,10 @@ object JdbcUtils extends Logging {

val rsmd = resultSet.getMetaData

val ncols = rsmd.getColumnCount

val fields = new Array[StructField](ncols)

+// if true, spark will propagate null to underlying

+// DB engine instead of using type default value

+val alwaysNullable = true

--- End diff --

put it as the parameter of `getSchema`
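A minimal sketch of what the suggestion could look like, using toy types rather than Spark's `StructField` or the real `JdbcUtils.getSchema` signature. The parameter name follows the `alwaysNullable` value in the diff; the default value and the shape of the input are assumptions made for illustration.

```scala
// Toy stand-in for illustration only; this is not Spark's StructField.
final case class Field(name: String, nullable: Boolean)

object SchemaSketch {
  // `reported` pairs each column name with the nullability the driver reports.
  // With alwaysNullable = true every field is marked nullable regardless of that.
  def getSchema(reported: Seq[(String, Boolean)], alwaysNullable: Boolean = false): Seq[Field] =
    reported.map { case (name, isNullable) =>
      Field(name, nullable = alwaysNullable || isNullable)
    }
}
```

Passing the flag as an argument lets each caller decide, instead of hard-coding `true` inside the function body.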


[GitHub] spark issue #18443: [SPARK-21231] Disable installing pyarrow during run pip ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18443 **[Test build #78756 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78756/testReport)** for PR 18443 at commit [`d92fe2b`](https://github.com/apache/spark/commit/d92fe2b6d77c405a5d2cb37e875a24d826de050d).

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user shuangshuangwang commented on the issue: https://github.com/apache/spark/pull/18445 Sorry for the Scala style check errors again.

[GitHub] spark pull request #18440: [SPARK-21229][SQL] remove QueryPlan.preCanonicali...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/18440#discussion_r124455418

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

---

@@ -403,13 +404,11 @@ object CatalogTypes {

/**

* A [[LogicalPlan]] that represents a table.

*/

-case class CatalogRelation(

+case class CatalogRelation private(

tableMeta: CatalogTable,

dataCols: Seq[AttributeReference],

partitionCols: Seq[AttributeReference]) extends LeafNode with

MultiInstanceRelation {

assert(tableMeta.identifier.database.isDefined)

- assert(tableMeta.partitionSchema.sameType(partitionCols.toStructType))

- assert(tableMeta.dataSchema.sameType(dataCols.toStructType))

--- End diff --

But users might still be able to call the constructor of `CatalogRelation`, because it is a `case class` rather than a plain `class`.
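To make the concern concrete: a case class also gets a compiler-generated `apply` and `copy`, and how a `private` constructor affects their visibility depends on the Scala version, which is worth checking for the compiler in use. The sketch below does not rely on either behaviour. It uses a toy `Relation` class (not Spark's `CatalogRelation`) to show that checks kept in the class body run on every construction path.

```scala
import scala.util.Try

// Toy stand-in for a relation; not Spark's CatalogRelation.
final case class Relation(expectedWidth: Int, cols: Seq[String]) {
  // Runs on every construction path: `new`, the companion `apply`, and `copy`.
  require(cols.length == expectedWidth,
    s"expected $expectedWidth columns but got ${cols.length}")
}

object RelationDemo {
  val ok: Relation = Relation(2, Seq("a", "b"))
  // Both of these violate the check and fail at construction time.
  val viaApply: Try[Relation] = Try(Relation(2, Seq("a")))
  val viaCopy: Try[Relation] = Try(ok.copy(cols = Seq("a")))
}
```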


[GitHub] spark pull request #18431: [SPARK-21224][R] Specify a schema by using a DDL-...

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18431#discussion_r124455176

--- Diff: R/pkg/R/SQLContext.R ---

@@ -584,7 +584,7 @@ tableToDF <- function(tableName) {

#'

#' @param path The path of files to load

#' @param source The name of external data source

-#' @param schema The data schema defined in structType

+#' @param schema The data schema defined in structType or a DDL-formatted

string.

--- End diff --

Hmm, saying `DDL` here might be too broad. What if someone passes a whole CREATE statement?


[GitHub] spark issue #18443: [SPARK-21231] Disable installing pyarrow during run pip ...

Github user BryanCutler commented on the issue: https://github.com/apache/spark/pull/18443 Jenkins retest this please

[GitHub] spark pull request #18429: [SPARK-21222] Move elimination of Distinct clause...

Github user rxin commented on a diff in the pull request:

https://github.com/apache/spark/pull/18429#discussion_r124455032

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

---

@@ -152,6 +153,19 @@ abstract class Optimizer(sessionCatalog:

SessionCatalog, conf: SQLConf)

}

/**

+ * Remove useless DISTINCT for MAX and MIN.

+ * This rule should be applied before RewriteDistinctAggregates.

+ */

+object EliminateDistinct extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan = plan

transformExpressions {

+case AggregateExpression(max: Max, mode: AggregateMode, true, _) =>

--- End diff --

In general I'm not a big fan of using extractors unless we need almost all of the arguments; they make refactoring a lot more complicated. Extractors are overused and abused in Catalyst (there is some code that uses extractors when it is simply doing type matching).
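A small sketch of the two styles being compared, using toy classes rather than Catalyst's `AggregateExpression`, `Max` and `Min`. All names here are stand-ins for illustration. The type-match version also includes the default case in the inner match, which the rule needs so that other distinct aggregates are returned unchanged.

```scala
// Toy stand-ins for Catalyst classes, for illustration only.
sealed trait AggFunction
case object Max extends AggFunction
case object Min extends AggFunction
case object Sum extends AggFunction

final case class AggExpr(function: AggFunction, mode: String, isDistinct: Boolean, id: Long)

object EliminateDistinctSketch {
  // Extractor style: lists all four fields positionally, so adding or
  // reordering a constructor parameter breaks this pattern.
  def viaExtractor(e: AggExpr): AggExpr = e match {
    case AggExpr(Max | Min, _, true, _) => e.copy(isDistinct = false)
    case other => other
  }

  // Type-match style: names only the fields it reads. The inner match needs
  // a default case, otherwise a distinct Sum would throw a MatchError.
  def viaTypeMatch(e: AggExpr): AggExpr = e match {
    case ae: AggExpr if ae.isDistinct =>
      ae.function match {
        case Max | Min => ae.copy(isDistinct = false)
        case _ => ae
      }
    case other => other
  }
}
```

Both functions behave the same on these toy inputs; the difference is how much of the constructor's shape each pattern depends on.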


[GitHub] spark pull request #18429: [SPARK-21222] Move elimination of Distinct clause...

Github user rxin commented on a diff in the pull request:

https://github.com/apache/spark/pull/18429#discussion_r124455104

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

---

@@ -152,6 +153,19 @@ abstract class Optimizer(sessionCatalog:

SessionCatalog, conf: SQLConf)

}

/**

+ * Remove useless DISTINCT for MAX and MIN.

+ * This rule should be applied before RewriteDistinctAggregates.

+ */

+object EliminateDistinct extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan = plan

transformExpressions {

+case AggregateExpression(max: Max, mode: AggregateMode, true, _) =>

--- End diff --

See https://github.com/databricks/scala-style-guide#pattern-matching


[GitHub] spark pull request #18429: [SPARK-21222] Move elimination of Distinct clause...

Github user gengliangwang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18429#discussion_r124454937

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

---

@@ -152,6 +153,19 @@ abstract class Optimizer(sessionCatalog:

SessionCatalog, conf: SQLConf)

}

/**

+ * Remove useless DISTINCT for MAX and MIN.

+ * This rule should be applied before RewriteDistinctAggregates.

+ */

+object EliminateDistinct extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan = plan

transformExpressions {

+case AggregateExpression(max: Max, mode: AggregateMode, true, _) =>

--- End diff --

I think your version is more readable, because the first parameter, "aggregateFunction", is specified explicitly.


[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18445 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78754/ Test FAILed.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18445

**[Test build #78754 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78754/testReport)** for PR 18445 at commit [`d9526f1`](https://github.com/apache/spark/commit/d9526f1aa6f31bd802905023fcbd01c669b58b86).

* This patch **fails Scala style tests**.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18445 Merged build finished. Test FAILed.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user shuangshuangwang commented on the issue: https://github.com/apache/spark/pull/18445 Fixed the Scala style test errors.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18445 **[Test build #78754 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78754/testReport)** for PR 18445 at commit [`d9526f1`](https://github.com/apache/spark/commit/d9526f1aa6f31bd802905023fcbd01c669b58b86).

[GitHub] spark pull request #18371: [SPARK-20889][SparkR] Grouped documentation for M...

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18371#discussion_r124454234

--- Diff: R/pkg/R/functions.R ---

@@ -41,14 +41,21 @@ NULL

#' @param x Column to compute on. In \code{shiftLeft}, \code{shiftRight}

and \code{shiftRightUnsigned},

#' this is the number of bits to shift.

#' @param y Column to compute on.

-#' @param ... additional argument(s). For example, it could be used to

pass additional Columns.

--- End diff --

We don't have a math function that takes more than 2 columns?


[GitHub] spark issue #18023: [SPARK-12139] [SQL] REGEX Column Specification

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/18023

Yes. We need to consider it.

> (?i) makes the regex case insensitive.
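For reference, the effect of the inline `(?i)` flag can be seen with plain `java.util.regex`, independent of Spark. How it should interact with Spark's own case-sensitivity handling for column names is the open question in this thread, and the sketch below does not answer it.

```scala
// Plain java.util.regex behaviour; how Spark would apply it to column names
// is not settled in this thread.
object CaseInsensitiveRegex {
  // Without the flag, case must match exactly.
  val sensitive: Boolean = "KEY".matches("key")
  // The inline flag makes the match ignore ASCII case.
  val insensitive: Boolean = "KEY".matches("(?i)key")
}
```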

[GitHub] spark pull request #18366: [SPARK-20889][SparkR] Grouped documentation for S...

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18366#discussion_r124453861

--- Diff: R/pkg/R/functions.R ---

@@ -90,8 +90,11 @@ NULL

#'

#' String functions defined for \code{Column}.

#'

-#' @param x Column to compute on. In \code{instr}, it is the substring to

check. In \code{format_number},

-#' it is the number of decimal place to format to.

+#' @param x Column to compute on except in the following methods:

+#' \itemize{

+#' \item \code{instr}: class "character", the substring to check.

--- End diff --

The form `class "character"` might not be commonly used around here. How about following https://stat.ethz.ch/R-manual/R-devel/library/utils/html/read.table.html and just saying

```
instr: character, the substring to check.
```


[GitHub] spark pull request #18429: [SPARK-21222] Move elimination of Distinct clause...

Github user gengliangwang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18429#discussion_r124453937

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

---

@@ -152,6 +153,19 @@ abstract class Optimizer(sessionCatalog:

SessionCatalog, conf: SQLConf)

}

/**

+ * Remove useless DISTINCT for MAX and MIN.

+ * This rule should be applied before RewriteDistinctAggregates.

+ */

+object EliminateDistinct extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan = plan

transformExpressions {

+case AggregateExpression(max: Max, mode: AggregateMode, true, _) =>

--- End diff --

Makes sense. I will remember that and revise the current patch.

Also, how about this:

```scala
case ae @ AggregateExpression(_: Max | _: Min, _, isDistinct, _) if isDistinct =>
  ae.copy(isDistinct = false)
```

Is it too long?


[GitHub] spark issue #17227: [SPARK-19507][PySpark][SQL] Show field name in _verify_t...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/17227 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78740/ Test PASSed.

[GitHub] spark issue #17227: [SPARK-19507][PySpark][SQL] Show field name in _verify_t...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/17227 Build finished. Test PASSed.

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18301 **[Test build #78753 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78753/testReport)** for PR 18301 at commit [`59a2ba8`](https://github.com/apache/spark/commit/59a2ba82f0ede328433eccc398197e1bebccce2e).

[GitHub] spark pull request #18431: [SPARK-21224][R] Specify a schema by using a DDL-...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/18431#discussion_r124453665

--- Diff: R/pkg/R/SQLContext.R ---

@@ -623,15 +625,20 @@ read.df.default <- function(path = NULL, source =

NULL, schema = NULL, na.string

if (source == "csv" && is.null(options[["nullValue"]])) {

options[["nullValue"]] <- na.strings

}

+ read <- callJMethod(sparkSession, "read")

+ read <- callJMethod(read, "format", source)

if (!is.null(schema)) {

-stopifnot(class(schema) == "structType")

-sdf <- handledCallJStatic("org.apache.spark.sql.api.r.SQLUtils",

"loadDF", sparkSession,

- source, schema$jobj, options)

- } else {

-sdf <- handledCallJStatic("org.apache.spark.sql.api.r.SQLUtils",

"loadDF", sparkSession,

- source, options)

+if (class(schema) == "structType") {

+ read <- callJMethod(read, "schema", schema$jobj)

+} else if (is.character(schema)) {

+ read <- callJMethod(read, "schema", schema)

+} else {

+ stop("schema should be structType or character.")

+}

}

- dataFrame(sdf)

+ read <- callJMethod(read, "options", options)

+ sdf <- handledCallJMethod(read, "load")

+ dataFrame(callJMethod(sdf, "toDF"))

--- End diff --

Definitely.


[GitHub] spark issue #17293: [SPARK-19950][SQL] Fix to ignore nullable when df.load()...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/17293

My main concern is how to validate it. This is one pain point of Spark: we don't control the storage layer, so we can't trust constraints such as nullable, primary key, etc. I think it's a safe choice to always treat input data as nullable. It all comes down to performance: validation also has a performance penalty, which may eliminate the benefit of the non-nullable optimization. BTW, another reason is that Spark doesn't work well if the nullable information is wrong; we may return wrong results, which are very hard to debug.

[GitHub] spark issue #17227: [SPARK-19507][PySpark][SQL] Show field name in _verify_t...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/17227

**[Test build #78740 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78740/testReport)** for PR 17227 at commit [`6c1e0b6`](https://github.com/apache/spark/commit/6c1e0b690bdd1914b5056c8b2934614534c622cb).

* This patch passes all tests.
* This patch **does not merge cleanly**.
* This patch adds no public classes.

[GitHub] spark pull request #18431: [SPARK-21224][R] Specify a schema by using a DDL-...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/18431#discussion_r124453592

--- Diff: R/pkg/R/SQLContext.R ---

@@ -584,7 +584,7 @@ tableToDF <- function(tableName) {

#'

#' @param path The path of files to load

#' @param source The name of external data source

-#' @param schema The data schema defined in structType

+#' @param schema The data schema defined in structType or a DDL-formatted

string.

--- End diff --

Ah... to my knowledge, this is actually loosely related to https://issues.apache.org/jira/browse/SPARK-14764. I will keep it in mind and add a link if that one is resolved.


[GitHub] spark issue #18366: [SPARK-20889][SparkR] Grouped documentation for STRING c...

Github user felixcheung commented on the issue: https://github.com/apache/spark/pull/18366

Thanks. Re https://github.com/apache/spark/pull/18366#issuecomment-310974184, I think I'm going to suggest adding `See Details` at the end of the param doc for `x`, as is done in https://stat.ethz.ch/R-manual/R-devel/library/utils/html/read.table.html

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18445

**[Test build #78752 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78752/testReport)** for PR 18445 at commit [`60b007d`](https://github.com/apache/spark/commit/60b007d18ef09c170ddca17d5567bf30b5b8b993).

* This patch **fails Scala style tests**.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18445 Merged build finished. Test FAILed.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user shuangshuangwang commented on the issue: https://github.com/apache/spark/pull/18445 Hi @gatorsmile, I have just pushed code changes.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18445 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78752/ Test FAILed.

[GitHub] spark issue #18445: [Spark-19726][SQL] Faild to insert null timestamp value ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18445 **[Test build #78752 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78752/testReport)** for PR 18445 at commit [`60b007d`](https://github.com/apache/spark/commit/60b007d18ef09c170ddca17d5567bf30b5b8b993).

[GitHub] spark pull request #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/18301#discussion_r124453286

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/aggregate/TungstenAggregationIterator.scala ---

@@ -422,8 +422,7 @@ class TungstenAggregationIterator(
metrics.incPeakExecutionMemory(maxMemory)
// Update average hashmap probe if this is the last record.
-val averageProbes = hashMap.getAverageProbesPerLookup()
-avgHashmapProbe.add(averageProbes.ceil.toLong)
+avgHashProbe.set(hashMap.getAverageProbesPerLookup())

--- End diff --

The probe metric for non-whole-stage HashAggregate is updated here. Actually, I now think we should move this update to the end of processing the input rows, instead of doing it at the last record, because even if the iterator is not consumed to the end (e.g., a Limit after Aggregate), we should still show the metric.
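The difference between the two update points can be sketched with a toy iterator. Nothing below is Spark code: `Metric`, the `1.5` probe value and both helper functions are hypothetical stand-ins, and `distinct` merely plays the role of the aggregation step.

```scala
// Toy model, not Spark's SQLMetric or TungstenAggregationIterator.
final class Metric {
  var value: Double = 0.0
  def set(v: Double): Unit = { value = v }
}

object ProbeMetricSketch {
  // Stand-in for the statistic read from the hash map after aggregation.
  private val avgProbes = 1.5

  // Sets the metric only when the last output row is handed out.
  def updateAtLastRecord(input: Seq[Int], metric: Metric): Iterator[Int] = {
    val underlying = input.distinct.iterator
    new Iterator[Int] {
      def hasNext: Boolean = underlying.hasNext
      def next(): Int = {
        val row = underlying.next()
        if (!underlying.hasNext) metric.set(avgProbes)
        row
      }
    }
  }

  // Sets the metric as soon as all input rows have been processed.
  def updateAfterInput(input: Seq[Int], metric: Metric): Iterator[Int] = {
    val aggregated = input.distinct
    metric.set(avgProbes)
    aggregated.iterator
  }
}
```

With a consumer that stops early, such as `take(1)` standing in for a Limit, the first variant leaves the metric at its initial value while the second one has already recorded it.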

[GitHub] spark pull request #18431: [SPARK-21224][R] Specify a schema by using a DDL-...

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18431#discussion_r124452703

--- Diff: R/pkg/R/SQLContext.R ---

@@ -623,15 +625,20 @@ read.df.default <- function(path = NULL, source = NULL, schema = NULL, na.string

if (source == "csv" && is.null(options[["nullValue"]])) {

options[["nullValue"]] <- na.strings

}

+ read <- callJMethod(sparkSession, "read")

+ read <- callJMethod(read, "format", source)

if (!is.null(schema)) {

-stopifnot(class(schema) == "structType")

-sdf <- handledCallJStatic("org.apache.spark.sql.api.r.SQLUtils", "loadDF", sparkSession,

- source, schema$jobj, options)

- } else {

-sdf <- handledCallJStatic("org.apache.spark.sql.api.r.SQLUtils", "loadDF", sparkSession,

- source, options)

+if (class(schema) == "structType") {

+ read <- callJMethod(read, "schema", schema$jobj)

+} else if (is.character(schema)) {

+ read <- callJMethod(read, "schema", schema)

+} else {

+ stop("schema should be structType or character.")

+}

}

- dataFrame(sdf)

+ read <- callJMethod(read, "options", options)

+ sdf <- handledCallJMethod(read, "load")

+ dataFrame(callJMethod(sdf, "toDF"))

--- End diff --

I'm fairly sure we don't need to call toDF here - could you check?

[GitHub] spark pull request #18431: [SPARK-21224][R] Specify a schema by using a DDL-...

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18431#discussion_r124453132

--- Diff: R/pkg/R/SQLContext.R ---

@@ -584,7 +584,7 @@ tableToDF <- function(tableName) {

#'

#' @param path The path of files to load

#' @param source The name of external data source

-#' @param schema The data schema defined in structType

+#' @param schema The data schema defined in structType or a DDL-formatted string.

--- End diff --

is `DDL-formatted` a Spark specific thing? do we have a doc page on what that looks like? perhaps we need to link to it?

[GitHub] spark issue #18431: [SPARK-21224][R] Specify a schema by using a DDL-formatt...

Github user felixcheung commented on the issue: https://github.com/apache/spark/pull/18431 fair enough - IMO, good idea to add to scala api but I'd defer to @cloud-fan

[GitHub] spark issue #16699: [SPARK-18710][ML] Add offset in GLM

Github user felixcheung commented on the issue: https://github.com/apache/spark/pull/16699 this is a known issue in test runs currently - it's mentioned in d...@spark.apache.org, just so you know.

[GitHub] spark issue #17681: [SPARK-20383][SQL] Supporting Create [temporary] Functio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/17681 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78745/ Test FAILed.

[GitHub] spark issue #17681: [SPARK-20383][SQL] Supporting Create [temporary] Functio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/17681 Merged build finished. Test FAILed.

[GitHub] spark issue #17681: [SPARK-20383][SQL] Supporting Create [temporary] Functio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/17681

**[Test build #78745 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78745/testReport)** for PR 17681 at commit [`68a9d08`](https://github.com/apache/spark/commit/68a9d082f6a665d338b0a17005df07d1535fc433).

* This patch **fails Spark unit tests**.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] spark pull request #18429: [SPARK-21222] Move elimination of Distinct clause...

Github user rxin commented on a diff in the pull request:

https://github.com/apache/spark/pull/18429#discussion_r124452275

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala ---

@@ -152,6 +153,19 @@ abstract class Optimizer(sessionCatalog: SessionCatalog, conf: SQLConf)

}

/**

+ * Remove useless DISTINCT for MAX and MIN.

+ * This rule should be applied before RewriteDistinctAggregates.

+ */

+object EliminateDistinct extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformExpressions {

+case AggregateExpression(max: Max, mode: AggregateMode, true, _) =>

--- End diff --

it's unclear what the "true" is. I'd either use named argument, or rewrite it to something like

```

case ae: AggregateExpression if ae.isDistinct =>

ae.aggregateFunction match {

case _: Max | _: Min => ae.copy(isDistinct = false)

}

}

```
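As the reviewer noted in a follow-up comment on the same pull request, the inner match in this suggestion also needs a case for other aggregate functions that returns `ae` itself. A minimal self-contained sketch of that shape follows. The case classes are toy stand-ins that only mirror the names in the diff; they are not the real Catalyst types, and `EliminateDistinctSketch.rewrite` is a hypothetical helper, not the rule as merged.

```scala
// Toy stand-ins for Catalyst's classes; the names mirror the diff, but these
// are NOT the real Spark types.
sealed trait AggregateFunction
case class Max(column: String) extends AggregateFunction
case class Min(column: String) extends AggregateFunction
case class Sum(column: String) extends AggregateFunction

case class AggregateExpression(aggregateFunction: AggregateFunction, isDistinct: Boolean)

object EliminateDistinctSketch {
  // DISTINCT cannot change the result of MAX or MIN, so the flag is dropped
  // there; every other distinct aggregate is returned unchanged.
  def rewrite(expr: AggregateExpression): AggregateExpression = expr match {
    case ae if ae.isDistinct =>
      ae.aggregateFunction match {
        case _: Max | _: Min => ae.copy(isDistinct = false)
        // Without this case a distinct Sum would throw scala.MatchError.
        case _ => ae
      }
    case other => other
  }
}
```

For example, `EliminateDistinctSketch.rewrite(AggregateExpression(Sum("a"), isDistinct = true))` returns its argument unchanged, whereas the same call with `Max("a")` returns a copy with `isDistinct = false`.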

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user viirya commented on the issue: https://github.com/apache/spark/pull/18301 non-whole-stage HashAggregate is performed by `TungstenAggregationIterator`. The update to hash probe is only set at the end of the iterator. So I think it is consistent with whole stage HashAggregate?

[GitHub] spark issue #18388: [SPARK-21175] Reject OpenBlocks when memory shortage on ...