[GitHub] spark pull request #22314: [SPARK-25307][SQL] ArraySort function may return ...

Github user dilipbiswal commented on a diff in the pull request:

https://github.com/apache/spark/pull/22314#discussion_r214582048

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/collectionOperations.scala

---

@@ -1047,7 +1047,8 @@ trait ArraySortLike extends ExpectsInputTypes {

s"int $c = ${ctx.genComp(elementType, s"(($jt) $o1)", s"(($jt)

$o2)")};"

}

val nonNullPrimitiveAscendingSort =

-if (CodeGenerator.isPrimitiveType(elementType) && !containsNull) {

+if (CodeGenerator.isPrimitiveType(elementType) && elementType !=

BooleanType

+ && !containsNull) {

--- End diff --

will make a change

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22314: [SPARK-25307][SQL] ArraySort function may return ...

Github user dilipbiswal commented on a diff in the pull request:

https://github.com/apache/spark/pull/22314#discussion_r214581948

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/collectionOperations.scala

---

@@ -1047,7 +1047,8 @@ trait ArraySortLike extends ExpectsInputTypes {

s"int $c = ${ctx.genComp(elementType, s"(($jt) $o1)", s"(($jt)

$o2)")};"

}

val nonNullPrimitiveAscendingSort =

--- End diff --

@maropu Lets keep the variable name the same .. but add a private def like

you suggest in the following comment.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22319 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21638: [SPARK-22357][CORE] SparkContext.binaryFiles ignore minP...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21638 @bomeng Could you submit a follow-up PR to add a test case? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22319 **[Test build #95599 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95599/testReport)** for PR 22319 at commit [`5acb1df`](https://github.com/apache/spark/commit/5acb1dfe912d580413882ed86ee18b1350b2eea1). * This patch **fails to build**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22319 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/95599/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22319: [SPARK-25044][SQL][followup] add back UserDefined...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22319#discussion_r214581734

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/expressions/UserDefinedFunction.scala

---

@@ -129,3 +135,11 @@ case class UserDefinedFunction protected[sql] (

}

}

}

+

+object UserDefinedFunction {

--- End diff --

`private[sql]` since we don't explicitly mention `expressions` package is

meant to be internal.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22319 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/2775/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22319 **[Test build #95600 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95600/testReport)** for PR 22319 at commit [`2245395`](https://github.com/apache/spark/commit/224539524446f69efe8e10375de6ba5ad3ae80c3). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22319 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21638: [SPARK-22357][CORE] SparkContext.binaryFiles igno...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21638#discussion_r214581248

--- Diff:

core/src/main/scala/org/apache/spark/input/PortableDataStream.scala ---

@@ -47,7 +47,7 @@ private[spark] abstract class StreamFileInputFormat[T]

def setMinPartitions(sc: SparkContext, context: JobContext,

minPartitions: Int) {

val defaultMaxSplitBytes =

sc.getConf.get(config.FILES_MAX_PARTITION_BYTES)

val openCostInBytes = sc.getConf.get(config.FILES_OPEN_COST_IN_BYTES)

-val defaultParallelism = sc.defaultParallelism

+val defaultParallelism = Math.max(sc.defaultParallelism, minPartitions)

--- End diff --

BTW, it is easy to add such a test case. We can even test the behaviors of

the boundary cases. cc @srowen @HyukjinKwon @MaxGekk @jiangxb1987

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22319: [SPARK-25044][SQL][followup] add back UserDefined...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22319#discussion_r214581204

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/expressions/UserDefinedFunction.scala

---

@@ -129,3 +135,11 @@ case class UserDefinedFunction protected[sql] (

}

}

}

+

+object UserDefinedFunction {

+ // This is to keep backward compatibility for this case class.

+ // TODO: revisit this case class in Spark 3.0, and narrow down the

public surface.

+ def unapply(arg: UserDefinedFunction): Option[(AnyRef, DataType,

Option[Seq[DataType]])] = {

--- End diff --

Doesn't this still break binary compatibility since we bind to another

signature?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21638: [SPARK-22357][CORE] SparkContext.binaryFiles igno...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21638#discussion_r214581076

--- Diff:

core/src/main/scala/org/apache/spark/input/PortableDataStream.scala ---

@@ -47,7 +47,7 @@ private[spark] abstract class StreamFileInputFormat[T]

def setMinPartitions(sc: SparkContext, context: JobContext,

minPartitions: Int) {

val defaultMaxSplitBytes =

sc.getConf.get(config.FILES_MAX_PARTITION_BYTES)

val openCostInBytes = sc.getConf.get(config.FILES_OPEN_COST_IN_BYTES)

-val defaultParallelism = sc.defaultParallelism

+val defaultParallelism = Math.max(sc.defaultParallelism, minPartitions)

--- End diff --

We should have a test case; otherwise, we could hit the same issue again.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22319 **[Test build #95599 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95599/testReport)** for PR 22319 at commit [`5acb1df`](https://github.com/apache/spark/commit/5acb1dfe912d580413882ed86ee18b1350b2eea1). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22319: [SPARK-25044][SQL][followup] add back UserDefined...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22319#discussion_r214577826

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/expressions/UserDefinedFunction.scala

---

@@ -41,12 +41,18 @@ import org.apache.spark.sql.types.DataType

case class UserDefinedFunction protected[sql] (

f: AnyRef,

dataType: DataType,

-inputTypes: Option[Seq[ScalaReflection.Schema]]) {

+inputSchemas: Option[Seq[ScalaReflection.Schema]]) {

private var _nameOption: Option[String] = None

private var _nullable: Boolean = true

private var _deterministic: Boolean = true

+ // This is to keep backward compatibility for this case class.

+ // TODO: revisit this case class in Spark 3.0, and narrow down the

public surface.

+ def inputTypes: Option[Seq[DataType]] = {

--- End diff --

@cloud-fan, I think this break compatibility when `UserDefinedFunction`'s

used in a pattern match.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22319 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22319 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/95598/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22319 **[Test build #95598 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95598/testReport)** for PR 22319 at commit [`e9c6fbc`](https://github.com/apache/spark/commit/e9c6fbc1b7298d7055d91a1a118eea3abd246d27). * This patch **fails to build**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22313: [SPARK-25306][SQL] Use cache to speed up `createFilter` ...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/22313 Do you know why `createFilter` function has exponential time complexity? Let's make sure the algorithm is good before adding the cache. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22319 **[Test build #95598 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95598/testReport)** for PR 22319 at commit [`e9c6fbc`](https://github.com/apache/spark/commit/e9c6fbc1b7298d7055d91a1a118eea3abd246d27). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22319 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/2774/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22319 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22319: [SPARK-25044][SQL][followup] add back UserDefinedFunctio...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/22319 cc @srowen @gatorsmile --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22319: [SPARK-25044][SQL][followup] add back UserDefined...

GitHub user cloud-fan opened a pull request: https://github.com/apache/spark/pull/22319 [SPARK-25044][SQL][followup] add back UserDefinedFunction.inputTypes ## What changes were proposed in this pull request? This is a followup of https://github.com/apache/spark/pull/22259 . Scala case class has a wide surface: apply, accessors, copy, etc. In https://github.com/apache/spark/pull/22259 , we change the type of `UserDefinedFunctions.inputTypes` from `Option[Seq[DataType]]` to `Option[Seq[Schema]]`. This breaks backward compatibility. This PR adds back `UserDefinedFunctions.inputTypes: Option[Seq[DataType]]`, but does not add back the `apply` and `copy` methods, for 2 reasons. 1. the `apply` and `copy` methods are not meant to be public to end users. 2. Users can't call `apply` and `copy` with input types anymore. They must provide the nullability information to get corrected result. ## How was this patch tested? N/A You can merge this pull request into a Git repository by running: $ git pull https://github.com/cloud-fan/spark revert Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/22319.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #22319 commit e9c6fbc1b7298d7055d91a1a118eea3abd246d27 Author: Wenchen Fan Date: 2018-09-03T04:33:18Z add back UserDefinedFunction.inputTypes --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22307: [SPARK-25301][SQL] When a view uses an UDF from a non de...

Github user vinodkc commented on the issue: https://github.com/apache/spark/pull/22307 @HyukjinKwon , even with this ```create function d100.udf100 as 'org.apache.hadoop.hive.ql.udf.generic.GenericUDFUpper'; ``` we can simulate this issue. I've updated PR description. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22317: [SPARK-25310][SQL] ArraysOverlap may throw a Compilation...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22317 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22317: [SPARK-25310][SQL] ArraysOverlap may throw a Compilation...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22317 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/95594/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22317: [SPARK-25310][SQL] ArraysOverlap may throw a Compilation...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22317 **[Test build #95594 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95594/testReport)** for PR 22317 at commit [`7e2f327`](https://github.com/apache/spark/commit/7e2f327d21527ac036f78d6ee2e3bc49ca97a143). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22010: [SPARK-21436][CORE] Take advantage of known parti...

Github user holdenk commented on a diff in the pull request:

https://github.com/apache/spark/pull/22010#discussion_r214575455

--- Diff: core/src/main/scala/org/apache/spark/rdd/RDD.scala ---

@@ -396,7 +396,16 @@ abstract class RDD[T: ClassTag](

* Return a new RDD containing the distinct elements in this RDD.

*/

def distinct(numPartitions: Int)(implicit ord: Ordering[T] = null):

RDD[T] = withScope {

-map(x => (x, null)).reduceByKey((x, y) => x, numPartitions).map(_._1)

+// If the data is already approriately partitioned with a known

partitioner we can work locally.

+def removeDuplicatesInPartition(itr: Iterator[T]): Iterator[T] = {

+ val set = new mutable.HashSet[T]()

+ itr.filter(set.add(_))

--- End diff --

So to reuse `reduceByKey` I'd write a custom partitioner which uses the

existing partioner as it's base but takes in the combined key type as input and

drop it down to the original key.

Sound right?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22311: [SPARK-25305][SQL] Respect attribute name in CollapsePro...

Github user maropu commented on the issue: https://github.com/apache/spark/pull/22311 ok, thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22318: [SPARK-25150][SQL] Fix attribute deduplication in...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22318#discussion_r214573505

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/DataFrameJoinSuite.scala ---

@@ -295,4 +295,17 @@ class DataFrameJoinSuite extends QueryTest with

SharedSQLContext {

df.join(df, df("id") <=> df("id")).queryExecution.optimizedPlan

}

}

+

+ test("SPARK-25150: Attribute deduplication handles attributes in join

condition properly") {

+withSQLConf(SQLConf.CROSS_JOINS_ENABLED.key -> "false") {

+ val a = spark.range(1, 5)

+ val b = spark.range(10)

+ val c = b.filter($"id" % 2 === 0)

+

+ val r = a.join(b, a("id") === b("id"), "inner").join(c, a("id") ===

c("id"), "inner")

--- End diff --

Do we need a df `a` for this test? I think a simple test is better.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22318: [SPARK-25150][SQL] Fix attribute deduplication in...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22318#discussion_r214573271

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/DataFrameJoinSuite.scala ---

@@ -295,4 +295,17 @@ class DataFrameJoinSuite extends QueryTest with

SharedSQLContext {

df.join(df, df("id") <=> df("id")).queryExecution.optimizedPlan

}

}

+

+ test("SPARK-25150: Attribute deduplication handles attributes in join

condition properly") {

+withSQLConf(SQLConf.CROSS_JOINS_ENABLED.key -> "false") {

+ val a = spark.range(1, 5)

+ val b = spark.range(10)

+ val c = b.filter($"id" % 2 === 0)

+

+ val r = a.join(b, a("id") === b("id"), "inner").join(c, a("id") ===

c("id"), "inner")

+

+ checkAnswer(r, Row(2, 2, 2) :: Row(4, 4, 4) :: Nil)

+}

+ }

+

--- End diff --

nit: remove this empty line

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22318: [SPARK-25150][SQL] Fix attribute deduplication in...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22318#discussion_r214573288

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/DataFrameJoinSuite.scala ---

@@ -295,4 +295,17 @@ class DataFrameJoinSuite extends QueryTest with

SharedSQLContext {

df.join(df, df("id") <=> df("id")).queryExecution.optimizedPlan

}

}

+

+ test("SPARK-25150: Attribute deduplication handles attributes in join

condition properly") {

+withSQLConf(SQLConf.CROSS_JOINS_ENABLED.key -> "false") {

--- End diff --

`withSQLConf(SQLConf.CROSS_JOINS_ENABLED.key -> "true") {`

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22318: [SPARK-25150][SQL] Fix attribute deduplication in join

Github user maropu commented on the issue: https://github.com/apache/spark/pull/22318 Could you describe more in the PR description?; what's the root cause of this issue? How did you solve this by this pr? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22281: [SPARK-25280][SQL] Add support for USING syntax for Data...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/22281 Would you guys mind if I ask to point out some concerns that I might better have to double check by myself? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18447: [SPARK-21232][SQL][SparkR][PYSPARK] New built-in SQL fun...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/18447 ping @mmolimar to close --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22234: [SPARK-25241][SQL] Configurable empty values when...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22234#discussion_r214572617

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/csv/CSVInferSchema.scala

---

@@ -79,7 +79,8 @@ private[csv] object CSVInferSchema {

* point checking if it is an Int, as the final type must be Double or

higher.

*/

def inferField(typeSoFar: DataType, field: String, options: CSVOptions):

DataType = {

-if (field == null || field.isEmpty || field == options.nullValue) {

+if (field == null || field.isEmpty || field == options.nullValue ||

+ field == options.emptyValueInRead) {

--- End diff --

I wouldn't do this for now. It needs another review iteration. Let's revert

this back.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22234: [SPARK-25241][SQL] Configurable empty values when...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22234#discussion_r214572625

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/csv/CSVDataSource.scala

---

@@ -91,9 +91,10 @@ abstract class CSVDataSource extends Serializable {

}

row.zipWithIndex.map { case (value, index) =>

-if (value == null || value.isEmpty || value == options.nullValue) {

- // When there are empty strings or the values set in

`nullValue`, put the

- // index as the suffix.

+if (value == null || value.isEmpty || value == options.nullValue ||

+ value == options.emptyValueInRead) {

--- End diff --

ditto for excluding.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22226: [SPARK-25252][SQL] Support arrays of any types by...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/6#discussion_r214572336

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/json/JacksonUtils.scala

---

@@ -32,29 +32,29 @@ object JacksonUtils {

}

}

- /**

- * Verify if the schema is supported in JSON parsing.

- */

- def verifySchema(schema: StructType): Unit = {

-def verifyType(name: String, dataType: DataType): Unit = dataType

match {

- case NullType | BooleanType | ByteType | ShortType | IntegerType |

LongType | FloatType |

- DoubleType | StringType | TimestampType | DateType | BinaryType

| _: DecimalType =>

+ def verifyType(name: String, dataType: DataType): Unit = dataType match {

--- End diff --

We can do:

```

def verifyType(name: String, dataType: DataType): Unit = {

dataType match {

case ...

}

}

```

to reduce the diff.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22226: [SPARK-25252][SQL] Support arrays of any types by...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/6#discussion_r214572178

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/json/JacksonGenerator.scala

---

@@ -43,20 +42,22 @@ private[sql] class JacksonGenerator(

// we can directly access data in `ArrayData` without the help of

`SpecificMutableRow`.

private type ValueWriter = (SpecializedGetters, Int) => Unit

- // `JackGenerator` can only be initialized with a `StructType` or a

`MapType`.

- require(dataType.isInstanceOf[StructType] ||

dataType.isInstanceOf[MapType],

-s"JacksonGenerator only supports to be initialized with a

${StructType.simpleString} " +

- s"or ${MapType.simpleString} but got ${dataType.catalogString}")

+ // `JackGenerator` can only be initialized with a `StructType`, a

`MapType` or a `ArrayType`.

+ require(dataType.isInstanceOf[StructType] ||

dataType.isInstanceOf[MapType]

+|| dataType.isInstanceOf[ArrayType],

+s"JacksonGenerator only supports to be initialized with a

${StructType.simpleString}, " +

+ s"${MapType.simpleString} or ${ArrayType.simpleString} but got

${dataType.catalogString}")

// `ValueWriter`s for all fields of the schema

private lazy val rootFieldWriters: Array[ValueWriter] = dataType match {

case st: StructType => st.map(_.dataType).map(makeWriter).toArray

case _ => throw new UnsupportedOperationException(

- s"Initial type ${dataType.catalogString} must be a struct")

+ s"Initial type ${dataType.catalogString} must be a

${StructType.simpleString}")

}

// `ValueWriter` for array data storing rows of the schema.

private lazy val arrElementWriter: ValueWriter = dataType match {

+case at: ArrayType => makeWriter(at.elementType)

case st: StructType =>

--- End diff --

Can we do `case _: StructType | _: MapType => makeWriter(dataType)`?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22311: [SPARK-25305][SQL] Respect attribute name in CollapsePro...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/22311 > This behaivour depends on spark.sql.caseSensitive? No. It's writing not resolving a column, so Spark should be case-preserving. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22311: [SPARK-25305][SQL] Respect attribute name in Coll...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22311#discussion_r214570600

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

---

@@ -515,8 +515,7 @@ object PushProjectionThroughUnion extends

Rule[LogicalPlan] with PredicateHelper

*/

object ColumnPruning extends Rule[LogicalPlan] {

private def sameOutput(output1: Seq[Attribute], output2:

Seq[Attribute]): Boolean =

-output1.size == output2.size &&

- output1.zip(output2).forall(pair => pair._1.semanticEquals(pair._2))

+output1.size == output2.size && output1.zip(output2).forall(pair =>

pair._1 == pair._2)

--- End diff --

I think still we need to check if the exprIds that they refere to are the

same.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22311: [SPARK-25305][SQL] Respect attribute name in CollapsePro...

Github user maropu commented on the issue: https://github.com/apache/spark/pull/22311 This behaivour depends on `spark.sql.caseSensitive`? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22112: [SPARK-23243][Core] Fix RDD.repartition() data correctne...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/22112 Update, according to the discussion in https://github.com/apache/spark/pull/9214 , the current behavior of shuffle writing is: "first write wins". We can't simply change it to "last write wins", as we may have concurrent read-write issues. To switch to "last write wins", we have to finish https://github.com/apache/spark/pull/6648 first. Since it's not realistic to complete https://github.com/apache/spark/pull/6648 before Spark 2.4, in this PR I fail the job directly if we hit a fetch failure and the preceding map stage is indeterminate. The error message asks users to do checkpoint to avoid this issue. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21273: [SPARK-17916][SQL] Fix empty string being parsed as null...

Github user koertkuipers commented on the issue: https://github.com/apache/spark/pull/21273 it would provide a workaround i think, yes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22063: [WIP][SPARK-25044][SQL] Address translation of LM...

Github user srowen commented on a diff in the pull request:

https://github.com/apache/spark/pull/22063#discussion_r214567945

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/expressions/UserDefinedFunction.scala

---

@@ -40,7 +41,7 @@ import org.apache.spark.sql.types.DataType

case class UserDefinedFunction protected[sql] (

f: AnyRef,

dataType: DataType,

-inputTypes: Option[Seq[DataType]]) {

+inputTypes: Option[Seq[ScalaReflection.Schema]]) {

--- End diff --

(BTW it was PR https://github.com/apache/spark/pull/22259 that was merged)

We can add back accessors, constructors, if it would make life easier for

callers. But if this is protected, who are the callers of this code we're

accommodating? maybe some hacky but important integration?

We'd have to rename `inputTypes` and then add back an accessor with the old

type.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22112: [SPARK-23243][Core] Fix RDD.repartition() data correctne...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22112 **[Test build #95597 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95597/testReport)** for PR 22112 at commit [`9a3b8f4`](https://github.com/apache/spark/commit/9a3b8f42c6f9f992fa870e0c7e35ef4be533b561). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22112: [SPARK-23243][Core] Fix RDD.repartition() data correctne...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22112 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/2773/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22112: [SPARK-23243][Core] Fix RDD.repartition() data correctne...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22112 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22308: [SPARK-25304][SQL][TEST] Fix HiveSparkSubmitSuite...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/22308 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22308: [SPARK-25304][SQL][TEST] Fix HiveSparkSubmitSuite SPARK-...

Github user srowen commented on the issue: https://github.com/apache/spark/pull/22308 Merged to master --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21330: [SPARK-22234] Support distinct window functions

Github user jinxing64 commented on the issue: https://github.com/apache/spark/pull/21330 If this feature is interested, could you please help start the review @jiangxb1987 Thanks a lot. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22316: [SPARK-25048][SQL] Pivoting by multiple columns i...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22316#discussion_r214566503

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/DataFramePivotSuite.scala ---

@@ -308,4 +308,27 @@ class DataFramePivotSuite extends QueryTest with

SharedSQLContext {

assert(exception.getMessage.contains("aggregate functions are not

allowed"))

}

+

+ test("pivoting column list with values") {

+val expected = Row(2012, 1.0, null) :: Row(2013, 48000.0, 3.0)

:: Nil

+val df = trainingSales

+ .groupBy($"sales.year")

+ .pivot(struct(lower($"sales.course"), $"training"), Seq(

+struct(lit("dotnet"), lit("Experts")),

+struct(lit("java"), lit("Dummies")))

+ ).agg(sum($"sales.earnings"))

+

+checkAnswer(df, expected)

+ }

+

+ test("pivoting column list") {

+val exception = intercept[RuntimeException] {

+ trainingSales

+.groupBy($"sales.year")

+.pivot(struct(lower($"sales.course"), $"training"))

+.agg(sum($"sales.earnings"))

+.collect()

--- End diff --

Don't need this `.collect()` to cactch `RuntimeException`? btw, IMHO

`AnalysisException` is better than `RuntimeException` in this case? Can't we?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214566285

--- Diff: R/pkg/R/functions.R ---

@@ -3410,13 +3410,15 @@ setMethod("collect_set",

#' \dontrun{

#' head(select(df, split_string(df$Sex, "a")))

#' head(select(df, split_string(df$Class, "\\d")))

+#' head(select(df, split_string(df$Class, "\\d", 2)))

--- End diff --

The current build failure:

```

Undocumented arguments in documentation object 'column_string_functions'

'limit'

Functions with \usage entries need to have the appropriate \alias

entries, and all their arguments documented.

The \usage entries must correspond to syntactically valid R code.

See the chapter 'Writing R documentation files' in the 'Writing R

```

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22316: [SPARK-25048][SQL] Pivoting by multiple columns i...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22316#discussion_r214566083

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/RelationalGroupedDataset.scala ---

@@ -406,6 +407,14 @@ class RelationalGroupedDataset protected[sql](

* df.groupBy($"year").pivot($"course", Seq("dotNET",

"Java")).sum($"earnings")

* }}}

*

+ * For pivoting by multiple columns, use the `struct` function to

combine the columns and values:

--- End diff --

Since the documentation states it's an overloaded version of ``` the

`pivot` method with `pivotColumn` of the `String` type. ```, shall we move this

contents to that method?

Also, I would document this, for instance,

From Spark 2.4.0, values can be literal columns, for instance, `struct`.

For pivoting by multiple columns, use the `struct` function to combine the

columns and values.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22302: [SPARK-21786][SQL][FOLLOWUP] Add compressionCodec test f...

Github user fjh100456 commented on the issue: https://github.com/apache/spark/pull/22302 @maropu I'd update the PR description, thank you! --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22314: [SPARK-25307][SQL] ArraySort function may return ...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22314#discussion_r214565371

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/collectionOperations.scala

---

@@ -1047,7 +1047,8 @@ trait ArraySortLike extends ExpectsInputTypes {

s"int $c = ${ctx.genComp(elementType, s"(($jt) $o1)", s"(($jt)

$o2)")};"

}

val nonNullPrimitiveAscendingSort =

-if (CodeGenerator.isPrimitiveType(elementType) && !containsNull) {

+if (CodeGenerator.isPrimitiveType(elementType) && elementType !=

BooleanType

+ && !containsNull) {

--- End diff --

How about making this condition a separate method like

`isFastSortSupported`?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22314: [SPARK-25307][SQL] ArraySort function may return ...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22314#discussion_r214565311

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/collectionOperations.scala

---

@@ -1047,7 +1047,8 @@ trait ArraySortLike extends ExpectsInputTypes {

s"int $c = ${ctx.genComp(elementType, s"(($jt) $o1)", s"(($jt)

$o2)")};"

}

val nonNullPrimitiveAscendingSort =

--- End diff --

`nonNullPrimitiveNumericAscendingSort` or `fastSortCode` preferred?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22306: [SPARK-25300][CORE]Unified the configuration parameter `...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22306 **[Test build #95596 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95596/testReport)** for PR 22306 at commit [`8d7baee`](https://github.com/apache/spark/commit/8d7baee91199141f5999f0e49ab3092fb121cc41). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22310: [Spark-25298][Build] Improve build definition for Scala ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22310 **[Test build #95595 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95595/testReport)** for PR 22310 at commit [`77edff4`](https://github.com/apache/spark/commit/77edff4b8d3a2a2a32d7b4ba7d7cc9c8175ea231). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22306: [SPARK-25300][CORE]Unified the configuration parameter `...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22306 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/2772/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22306: [SPARK-25300][CORE]Unified the configuration parameter `...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22306 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22310: [Spark-25298][Build] Improve build definition for Scala ...

Github user sadhen commented on the issue: https://github.com/apache/spark/pull/22310 The problem of package hierarchy is fixed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22315: [SPARK-25308][SQL] ArrayContains function may ret...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22315#discussion_r214564677

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/collectionOperations.scala

---

@@ -1464,17 +1464,35 @@ case class ArrayContains(left: Expression, right:

Expression)

nullSafeCodeGen(ctx, ev, (arr, value) => {

val i = ctx.freshName("i")

val getValue = CodeGenerator.getValue(arr, right.dataType, i)

- s"""

- for (int $i = 0; $i < $arr.numElements(); $i ++) {

-if ($arr.isNullAt($i)) {

- ${ev.isNull} = true;

-} else if (${ctx.genEqual(right.dataType, value, getValue)}) {

- ${ev.isNull} = false;

- ${ev.value} = true;

- break;

+ def checkAndSetIsNullCode(body: String) = {

--- End diff --

nit: How about ` def checkAndSetIsNullCode(body: String) = if

(nullable) {`?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22270: [SPARK-25267][SQL][TEST] Disable ConvertToLocalRe...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22270#discussion_r214564426

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/DataFrameFunctionsSuite.scala ---

@@ -85,12 +85,12 @@ class DataFrameFunctionsSuite extends QueryTest with

SharedSQLContext {

}

val df5 = Seq((Seq("a", null), Seq(1, 2))).toDF("k", "v")

-intercept[RuntimeException] {

+intercept[Exception] {

--- End diff --

Shall we also catch specific exception per

https://github.com/databricks/scala-style-guide#testing-intercepting

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22315: [SPARK-25308][SQL] ArrayContains function may ret...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22315#discussion_r214564353

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/collectionOperations.scala

---

@@ -1464,17 +1464,35 @@ case class ArrayContains(left: Expression, right:

Expression)

nullSafeCodeGen(ctx, ev, (arr, value) => {

val i = ctx.freshName("i")

val getValue = CodeGenerator.getValue(arr, right.dataType, i)

- s"""

- for (int $i = 0; $i < $arr.numElements(); $i ++) {

-if ($arr.isNullAt($i)) {

- ${ev.isNull} = true;

-} else if (${ctx.genEqual(right.dataType, value, getValue)}) {

- ${ev.isNull} = false;

- ${ev.value} = true;

- break;

+ def checkAndSetIsNullCode(body: String) = {

+if (nullable) {

+ s"""

+ |if ($arr.isNullAt($i)) {

--- End diff --

How about the case `right.nullable = true` and

`left.nullable = false AND

left.dataType.asInstanceOf[ArrayType].containsNull = false`?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22318: [SPARK-25150][SQL] Fix attribute deduplication in join

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22318 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22318: [SPARK-25150][SQL] Fix attribute deduplication in join

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22318 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22310: [Spark-25298][Build] Improve build definition for Scala ...

Github user sadhen commented on the issue: https://github.com/apache/spark/pull/22310 @srowen Sorry I should have explained why I made these changes. The follow steps failed to compile: ``` $ ./dev/change-scala-version.sh 2.12 $ ./build/sbt -Dscala-2.12 -Dscala.version=2.12.6 > project repl > compile ``` After these changes, the commands are simplified and the compile works: ``` $ ./dev/change-scala-version.sh 2.12 $ ./build/sbt -Dscala.version=2.12.6 > project repl > compile ``` --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22312: [SPARK-17916][SQL] Fix new behavior when quote is set an...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/22312 #22234 was already open. Wouldn't it be able to workaround if it's configurable? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22318: [SPARK-25150][SQL] Fix attribute deduplication in join

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22318 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21273: [SPARK-17916][SQL] Fix empty string being parsed as null...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/21273 https://github.com/apache/spark/pull/22234 was already open. Wouldn't it be able to workaround if it's configurable? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22318: [SPARK-25150][SQL] Fix attribute deduplication in...

GitHub user peter-toth opened a pull request: https://github.com/apache/spark/pull/22318 [SPARK-25150][SQL] Fix attribute deduplication in join ## What changes were proposed in this pull request? Fixes attribute deduplication in join conditions. ## How was this patch tested? Added unit test. Please review http://spark.apache.org/contributing.html before opening a pull request. You can merge this pull request into a Git repository by running: $ git pull https://github.com/peter-toth/spark SPARK-25150 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/22318.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #22318 commit be7d8e7fb8439e3bb3238269263a37556e6bf9b1 Author: Peter Toth Date: 2018-09-02T17:56:18Z [SPARK-25150][SQL] Fix attribute deduplication in join --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

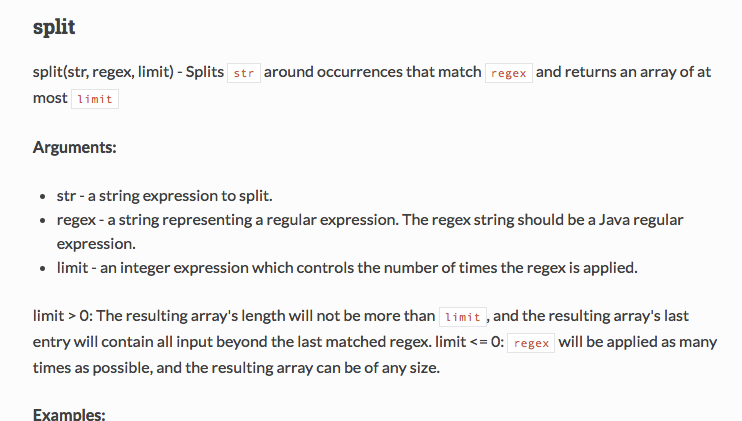

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request: https://github.com/apache/spark/pull/7#discussion_r214563659 --- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala --- @@ -229,36 +229,58 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress /** - * Splits str around pat (pattern is a regular expression). + * Splits str around matches of the given regex. */ @ExpressionDescription( - usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.", + usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`" + +" and returns an array of at most `limit`", + arguments = """ +Arguments: + * str - a string expression to split. + * regex - a string representing a regular expression. The regex string should be a +Java regular expression. + * limit - an integer expression which controls the number of times the regex is applied. + +limit > 0: The resulting array's length will not be more than `limit`, + and the resulting array's last entry will contain all input + beyond the last matched regex. +limit <= 0: `regex` will be applied as many times as possible, and +the resulting array can be of any size. --- End diff -- Current bullt doc is:  which doesn;t look quite nice. Please verify the output by referring https://github.com/apache/spark/tree/master/docs --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214563339

--- Diff: R/pkg/R/functions.R ---

@@ -3410,13 +3410,15 @@ setMethod("collect_set",

#' \dontrun{

#' head(select(df, split_string(df$Sex, "a")))

#' head(select(df, split_string(df$Class, "\\d")))

+#' head(select(df, split_string(df$Class, "\\d", 2)))

--- End diff --

We should add documentation for R side too. Please document `limit` here

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22308: [SPARK-25304][SQL][TEST] Fix HiveSparkSubmitSuite SPARK-...

Github user sadhen commented on the issue: https://github.com/apache/spark/pull/22308 @srowen The 2.12 jar is compiled and packaged from `Main.scala` and `MyCoolClass.scala`. Not a copy of 2.10 jar. Diff it, you will verify it. The steps to generate it: ``` mvn install // install the 2.4.0-SNAPSHOT at project root sbt package // package at sql/hive/src/test/resources/regression-test-SPARK-8489 ``` This is the build.sbt: ``` scalaVersion := 2.12.6 libraryDependencies ++= Seq( "org.apache.spark" %% "spark-sql" % "2.4.0-SNAPSHOT" ) ``` --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214562895

--- Diff: sql/core/src/test/resources/sql-tests/inputs/string-functions.sql

---

@@ -46,4 +46,10 @@ FROM (

encode(string(id + 2), 'utf-8') col3,

encode(string(id + 3), 'utf-8') col4

FROM range(10)

-)

+);

+

+-- split function

+select split('aa1cc2ee3', '[1-9]+');

+select split('aa1cc2ee3', '[1-9]+', -1);

+select split('aa1cc2ee3', '[1-9]+', 0);

+select split('aa1cc2ee3', '[1-9]+', 2);

--- End diff --

Likewise, I don't think we should add test cases for all cases. Just one

test case and check if they are called fine.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request: https://github.com/apache/spark/pull/7#discussion_r214562760 --- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala --- @@ -229,36 +229,58 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress /** - * Splits str around pat (pattern is a regular expression). + * Splits str around matches of the given regex. */ @ExpressionDescription( - usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.", + usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`" + +" and returns an array of at most `limit`", + arguments = """ +Arguments: + * str - a string expression to split. + * regex - a string representing a regular expression. The regex string should be a +Java regular expression. + * limit - an integer expression which controls the number of times the regex is applied. + +limit > 0: The resulting array's length will not be more than `limit`, + and the resulting array's last entry will contain all input + beyond the last matched regex. +limit <= 0: `regex` will be applied as many times as possible, and +the resulting array can be of any size. --- End diff -- I would do this: ``` * limit > 0: The resulting array's length will not be more than `limit`, and the resulting array's last entry will contain all input beyond the last matched regex. * limit <= 0: `regex` will be applied as many times as possible, and the resulting array can be of any size. ``` This is just markdown rendered by mkdocs; so probably, it's better to make it consistent with other docs. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22300: [SPARK-25296][SQL][TEST] Create ExplainSuite

Github user maropu commented on the issue: https://github.com/apache/spark/pull/22300 @kiszk ping, could you do that? https://github.com/apache/spark/pull/22300#issuecomment-417706754 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22198: [SPARK-25121][SQL] Supports multi-part table names for b...

Github user maropu commented on the issue: https://github.com/apache/spark/pull/22198 ping --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22204: [SPARK-25196][SQL] Analyze column statistics in cached q...

Github user maropu commented on the issue: https://github.com/apache/spark/pull/22204 @dongjoon-hyun could you check again? thanks! (btw, congrats, committer!) --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214562525

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala

---

@@ -229,36 +229,58 @@ case class RLike(left: Expression, right: Expression)

extends StringRegexExpress

/**

- * Splits str around pat (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match

`regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences

that match `regex`" +

+" and returns an array of at most `limit`",

+ arguments = """

+Arguments:

+ * str - a string expression to split.

+ * regex - a string representing a regular expression. The regex

string should be a

+Java regular expression.

+ * limit - an integer expression which controls the number of times

the regex is applied.

+

+limit > 0: The resulting array's length will not be more than

`limit`,

+ and the resulting array's last entry will contain all

input

+ beyond the last matched regex.

+limit <= 0: `regex` will be applied as many times as possible, and

+the resulting array can be of any size.

+ """,

examples = """

Examples:

> SELECT _FUNC_('oneAtwoBthreeC', '[ABC]');

["one","two","three",""]

+ > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', -1);

+ ["one","two","three",""]

+ > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', 2);

+ ["one","twoBthreeC"]

""")

-case class StringSplit(str: Expression, pattern: Expression)

- extends BinaryExpression with ImplicitCastInputTypes {

+case class StringSplit(str: Expression, regex: Expression, limit:

Expression)

+ extends TernaryExpression with ImplicitCastInputTypes {

- override def left: Expression = str

- override def right: Expression = pattern

override def dataType: DataType = ArrayType(StringType)

- override def inputTypes: Seq[DataType] = Seq(StringType, StringType)

+ override def inputTypes: Seq[DataType] = Seq(StringType, StringType,

IntegerType)

+ override def children: Seq[Expression] = str :: regex :: limit :: Nil

+

+ def this(exp: Expression, regex: Expression) = this(exp, regex,

Literal(-1));

- override def nullSafeEval(string: Any, regex: Any): Any = {

-val strings =

string.asInstanceOf[UTF8String].split(regex.asInstanceOf[UTF8String], -1)

+ override def nullSafeEval(string: Any, regex: Any, limit: Any): Any = {

+val strings = string.asInstanceOf[UTF8String].split(

+ regex.asInstanceOf[UTF8String], limit.asInstanceOf[Int])

new GenericArrayData(strings.asInstanceOf[Array[Any]])

}

override def doGenCode(ctx: CodegenContext, ev: ExprCode): ExprCode = {

val arrayClass = classOf[GenericArrayData].getName

-nullSafeCodeGen(ctx, ev, (str, pattern) =>

+nullSafeCodeGen(ctx, ev, (str, regex, limit) => {

// Array in java is covariant, so we don't need to cast UTF8String[]

to Object[].

- s"""${ev.value} = new $arrayClass($str.split($pattern, -1));""")

+ s"""${ev.value} = new

$arrayClass($str.split($regex,$limit));""".stripMargin

+})

}

override def prettyName: String = "split"

+

--- End diff --

Not a big deal but let's revert unrelated newline change.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214562493

--- Diff:

common/unsafe/src/main/java/org/apache/spark/unsafe/types/UTF8String.java ---

@@ -952,6 +952,11 @@ public static UTF8String concatWs(UTF8String

separator, UTF8String... inputs) {

}

public UTF8String[] split(UTF8String pattern, int limit) {

+// Java String's split method supports "ignore empty string" behavior

when the limit is 0.

+// To avoid this, we fall back to -1 when the limit is 0.

--- End diff --

I also would leave a short justification for this given

https://github.com/apache/spark/pull/7#issuecomment-417471241

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214562429

--- Diff:

common/unsafe/src/test/java/org/apache/spark/unsafe/types/UTF8StringSuite.java

---

@@ -394,12 +394,14 @@ public void substringSQL() {

@Test

public void split() {

-

assertTrue(Arrays.equals(fromString("ab,def,ghi").split(fromString(","), -1),

- new UTF8String[]{fromString("ab"), fromString("def"),

fromString("ghi")}));

-

assertTrue(Arrays.equals(fromString("ab,def,ghi").split(fromString(","), 2),

- new UTF8String[]{fromString("ab"), fromString("def,ghi")}));

-

assertTrue(Arrays.equals(fromString("ab,def,ghi").split(fromString(","), 2),

- new UTF8String[]{fromString("ab"), fromString("def,ghi")}));

+UTF8String[] negativeAndZeroLimitCase =

+new UTF8String[]{fromString("ab"), fromString("def"),

fromString("ghi"), fromString("")};

+

assertTrue(Arrays.equals(fromString("ab,def,ghi,").split(fromString(","), 0),

+negativeAndZeroLimitCase));

+

assertTrue(Arrays.equals(fromString("ab,def,ghi,").split(fromString(","), -1),

--- End diff --

Why should we change the existing tests? Just add one test to check

```

if (limit == 0) {

limit = -1;

}

```

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214562374

--- Diff:

common/unsafe/src/main/java/org/apache/spark/unsafe/types/UTF8String.java ---

@@ -952,6 +952,11 @@ public static UTF8String concatWs(UTF8String

separator, UTF8String... inputs) {

}

public UTF8String[] split(UTF8String pattern, int limit) {

+// Java String's split method supports "ignore empty string" behavior

when the limit is 0.

+// To avoid this, we fall back to -1 when the limit is 0.

--- End diff --

Could you narrow down why we avoid the -1 case for others? thanks.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214562388

--- Diff: R/pkg/tests/fulltests/test_sparkSQL.R ---

@@ -1803,6 +1803,18 @@ test_that("string operators", {

collect(select(df4, split_string(df4$a, "")))[1, 1],

list(list("a.b@c.d 1", "b"))

)

+ expect_equal(

+collect(select(df4, split_string(df4$a, "\\.", 2)))[1, 1],

+list(list("a", "b@c.d 1\\b"))

+ )

+ expect_equal(

+collect(select(df4, split_string(df4$a, "b", -2)))[1, 1],

+list(list("a.", "@c.d 1\\", ""))

+ )

+ expect_equal(

+collect(select(df4, split_string(df4$a, "b", 0)))[1, 1],

--- End diff --

I wouldn't add all of those cases for R. One test case to check if they can

be called should be good enough.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214562340

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,39 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex

string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

-StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

+StringSplit(str.expr, Literal(regex), Literal(-1))

+ }

+

+ /**

+ * Splits str around matches of the given regex.

+ *

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex

string should be

+ * a Java regular expression.

+ * @param limit an integer expression which controls the number of times

the regex is applied.

+ *

+ *limit greater than 0: The resulting array's length will not be

more than `limit`,

+ * and the resulting array's last entry

will contain all input beyond

+ * the last matched regex.

+ *

+ *limit less than or equal to 0: `regex` will be applied as many

times as possible, and

+ * the resulting array can be of

any size.

--- End diff --

I think you can refer

https://github.com/apache/spark/blob/e754887182304ad0d622754e33192ebcdd515965/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala#L338-L386

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22306: [SPARK-25300][CORE]Unified the configuration parameter `...

Github user 10110346 commented on the issue: https://github.com/apache/spark/pull/22306 Thanks,I will apply them to test cases @kiszk --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request: https://github.com/apache/spark/pull/7#discussion_r214562121 --- Diff: python/pyspark/sql/functions.py --- @@ -1669,20 +1669,33 @@ def repeat(col, n): return Column(sc._jvm.functions.repeat(_to_java_column(col), n)) -@since(1.5) +@since(2.4) @ignore_unicode_prefix -def split(str, pattern): +def split(str, pattern, limit=-1): """ -Splits str around pattern (pattern is a regular expression). +Splits str around matches of the given pattern. + +:param str: a string expression to split +:param pattern: a string representing a regular expression. The regex string should be + a Java regular expression. +:param limit: an integer expression which controls the number of times the pattern is applied. -.. note:: pattern is a string represent the regular expression. +* ``limit > 0``: The resulting array's length will not be more than `limit`, and the + resulting array's last entry will contain all input beyond the last + matched pattern. +* ``limit <= 0``: `pattern` will be applied as many times as possible, and the resulting + array can be of any size. --- End diff -- Indentation: ```diff -* ``limit > 0``: The resulting array's length will not be more than `limit`, and the - resulting array's last entry will contain all input beyond the last - matched pattern. -* ``limit <= 0``: `pattern` will be applied as many times as possible, and the resulting - array can be of any size. +* ``limit > 0``: The resulting array's length will not be more than `limit`, and the + resulting array's last entry will contain all input beyond the last + matched pattern. +* ``limit <= 0``: `pattern` will be applied as many times as possible, and the resulting + array can be of any size. ``` Did you check the HTML output? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request: https://github.com/apache/spark/pull/7#discussion_r214562034 --- Diff: python/pyspark/sql/functions.py --- @@ -1669,20 +1669,33 @@ def repeat(col, n): return Column(sc._jvm.functions.repeat(_to_java_column(col), n)) -@since(1.5) +@since(2.4) --- End diff -- I wouldn't change `since`. You can describe the behaviour changed by, for instance: ```python .. versionchanged:: 2.4 The ``limit`` parameter blah blah.. ``` --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22218: [SPARK-25228][CORE]Add executor CPU time metric.

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22218#discussion_r214561873

--- Diff:

core/src/main/scala/org/apache/spark/executor/ExecutorSource.scala ---

@@ -73,6 +76,28 @@ class ExecutorSource(threadPool: ThreadPoolExecutor,

executorId: String) extends

registerFileSystemStat(scheme, "write_ops", _.getWriteOps(), 0)

}

+ // Dropwizard metrics gauge measuring the executor's process CPU time.

+ // This Gauge will try to get and return the JVM Process CPU time or

return -1 otherwise.

+ // The CPU time value is returned in nanoseconds.

+ // It will use proprietary extensions such as

com.sun.management.OperatingSystemMXBean or

+ // com.ibm.lang.management.OperatingSystemMXBean, if available.

+ metricRegistry.register(MetricRegistry.name("jvmCpuTime"), new

Gauge[Long] {

+val mBean: MBeanServer = ManagementFactory.getPlatformMBeanServer

+val name = new ObjectName("java.lang", "type", "OperatingSystem")

+override def getValue: Long = {

+ try {

+val attribute = mBean.getAttribute(name, "ProcessCpuTime")

+if (attribute != null) {

--- End diff --

I checked the doc for `getAttribute though, when does it return null?

https://docs.oracle.com/javase/8/docs/api/javax/management/MBeanServerConnection.html#getAttribute-javax.management.ObjectName-java.lang.String-

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214561691

--- Diff: sql/core/src/test/resources/sql-tests/inputs/string-functions.sql

---

@@ -46,4 +46,10 @@ FROM (

encode(string(id + 2), 'utf-8') col3,

encode(string(id + 3), 'utf-8') col4

FROM range(10)

-)

+);

+

+-- split function

+select split('aa1cc2ee3', '[1-9]+');

--- End diff --

I would use upper cases here for keywords.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214561410

--- Diff: python/pyspark/sql/functions.py ---

@@ -1669,20 +1669,33 @@ def repeat(col, n):

return Column(sc._jvm.functions.repeat(_to_java_column(col), n))

-@since(1.5)

+@since(2.4)

@ignore_unicode_prefix

-def split(str, pattern):

+def split(str, pattern, limit=-1):

"""

-Splits str around pattern (pattern is a regular expression).

+Splits str around matches of the given pattern.

+

+:param str: a string expression to split

+:param pattern: a string representing a regular expression. The regex

string should be

+ a Java regular expression.

+:param limit: an integer expression which controls the number of times

the pattern is applied.

-.. note:: pattern is a string represent the regular expression.

+* ``limit > 0``: The resulting array's length will not be more

than `limit`, and the

+ resulting array's last entry will contain all

input beyond the last

+ matched pattern.

+* ``limit <= 0``: `pattern` will be applied as many times as

possible, and the resulting

+ array can be of any size.

->>> df = spark.createDataFrame([('ab12cd',)], ['s',])

->>> df.select(split(df.s, '[0-9]+').alias('s')).collect()

-[Row(s=[u'ab', u'cd'])]

+>>> df = spark.createDataFrame([('oneAtwoBthreeC',)], ['s',])

+>>> df.select(split(df.s, '[ABC]', 2).alias('s')).collect()

+[Row(s=[u'one', u'twoBthreeC'])]

+>>> df.select(split(df.s, '[ABC]', -1).alias('s')).collect()

+[Row(s=[u'one', u'two', u'three', u''])]

+>>> df.select(split(df.s, '[ABC]', 0).alias('s')).collect()

--- End diff --

I wouldn't have this test since we now don't have a specific behaviour to 0.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/7#discussion_r214561362

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,39 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex

string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

-StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

--- End diff --

Shall we just keep it as `pattern`? I think we don't better change the

name. Doesn;t `pattern` also make sense?

---

-