[GitHub] [spark] viirya commented on a change in pull request #24675: [SPARK-27803][SQL][PYTHON] Fix column pruning for Python UDF

viirya commented on a change in pull request #24675: [SPARK-27803][SQL][PYTHON] Fix column pruning for Python UDF

URL: https://github.com/apache/spark/pull/24675#discussion_r286786053

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/pythonLogicalOperators.scala

##

@@ -38,3 +38,30 @@ case class FlatMapGroupsInPandas(

*/

override val producedAttributes = AttributeSet(output)

}

+

+trait BaseEvalPython extends UnaryNode {

Review comment:

Is `producedAttributes` missing from this? Previously, `BatchEvalPython` and `ArrowEvalPython` had it defined.
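The following toy model shows why `producedAttributes` can matter. It is not Catalyst's actual classes: `Attr`, `Node`, `Leaf`, and `EvalNode` are hypothetical stand-ins. It loosely follows the Spark 2.4-era rule that a node's missing input is its references, minus its input set, minus its produced attributes. The exact definition differs between Spark versions. In this model, a node whose references include the result attributes it appends reports those attributes as missing unless it declares them as produced.

```scala
// Toy stand-ins for Catalyst attributes and plan nodes (hypothetical, not Spark's API).
final case class Attr(name: String)

trait Node {
  def children: Seq[Node]
  def output: Seq[Attr]
  // Attributes this node's expressions refer to.
  def references: Set[Attr]
  // Attributes this node creates itself rather than receiving from a child.
  def producedAttributes: Set[Attr] = Set.empty
  def inputSet: Set[Attr] = children.flatMap(_.output).toSet
  // Modeled loosely on the Spark 2.4-era QueryPlan.missingInput.
  def missingInput: Set[Attr] = references -- inputSet -- producedAttributes
}

final case class Leaf(output: Seq[Attr]) extends Node {
  def children: Seq[Node] = Nil
  def references: Set[Attr] = Set.empty
}

// An eval-Python-like node whose references come from all of its expressions,
// including the result attributes it appends to the child output.
final case class EvalNode(
    udfInputs: Seq[Attr],
    resultAttrs: Seq[Attr],
    child: Node,
    declareProduced: Boolean) extends Node {
  def children: Seq[Node] = Seq(child)
  def output: Seq[Attr] = child.output ++ resultAttrs
  def references: Set[Attr] = (udfInputs ++ resultAttrs).toSet
  override def producedAttributes: Set[Attr] =
    if (declareProduced) resultAttrs.toSet else Set.empty
}

val a = Attr("a")
val b = Attr("b")
val pyResult = Attr("pythonUDF0")
val scan = Leaf(Seq(a, b))

val withoutProduced = EvalNode(Seq(a), Seq(pyResult), scan, declareProduced = false)
val withProduced = EvalNode(Seq(a), Seq(pyResult), scan, declareProduced = true)
```

In this model, `withoutProduced.missingInput` contains `pythonUDF0`, and `withProduced.missingInput` is empty. Suppose a node's `references` covered only the UDF inputs. Then the result attributes would never enter `references`, so `missingInput` would be fine without the declaration. `producedAttributes` may still be consulted elsewhere in the real planner, though.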

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] viirya commented on a change in pull request #24675: [SPARK-27803][SQL][PYTHON] Fix column pruning for Python UDF

viirya commented on a change in pull request #24675: [SPARK-27803][SQL][PYTHON] Fix column pruning for Python UDF

URL: https://github.com/apache/spark/pull/24675#discussion_r286785463

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/pythonLogicalOperators.scala

##

@@ -38,3 +38,30 @@ case class FlatMapGroupsInPandas(

*/

override val producedAttributes = AttributeSet(output)

}

+

+trait BaseEvalPython extends UnaryNode {

+

+ def udfs: Seq[PythonUDF]

+

+ def resultAttrs: Seq[Attribute]

+

+ override def output: Seq[Attribute] = child.output ++ resultAttrs

+

+ override def references: AttributeSet = AttributeSet(udfs.flatMap(_.references))

Review comment:

If `references` only covers references in `udfs`, will output attributes from the child that aren't referred to by `udfs` be pruned from `BaseEvalPython`?
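Here is a toy model of the concern. Suppose a pruning step trimmed the child of an eval-Python-like node down to the UDF references only. Child columns that a parent operator still needs would then disappear. A safe rule keeps the union of what the parent needs and what the UDFs read. Columns are plain strings, and `naivePrune` and `pruneChildColumns` are hypothetical helpers, not Spark's `ColumnPruning` rule.

```scala
// Columns are plain strings in this toy model (hypothetical, not Catalyst).
// childOutput: columns produced by the child
// udfRefs: columns the Python UDFs read
// parentRequired: columns the operator above needs from this node's output

// Keeps only what the UDFs reference (the behavior the question worries about).
def naivePrune(childOutput: Seq[String], udfRefs: Set[String]): Seq[String] =
  childOutput.filter(udfRefs.contains)

def pruneChildColumns(
    childOutput: Seq[String],
    udfRefs: Set[String],
    parentRequired: Set[String]): Seq[String] = {
  // Keep a child column if the UDFs read it or the parent needs it passed through.
  val needed = udfRefs ++ parentRequired
  childOutput.filter(needed.contains)
}

val childOutput = Seq("a", "b", "c", "d")
val udfRefs = Set("b")
// The parent selects one pass-through column plus the UDF result.
val parentRequired = Set("a", "pythonUDF0")
```

With the naive version, column `a` is dropped even though the parent reads it. Whether Spark's optimizer can actually reach that state depends on how its pruning rules use `references`, which is the question being raised.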


[GitHub] [spark] dongjoon-hyun edited a comment on issue #24680: [SPARK-26045][BUILD] Leave avro, avro-ipc dependendencies as compile scope even for hadoop-provided usages

dongjoon-hyun edited a comment on issue #24680: [SPARK-26045][BUILD] Leave avro, avro-ipc dependendencies as compile scope even for hadoop-provided usages URL: https://github.com/apache/spark/pull/24680#issuecomment-495068392 I'll leave this PR here since @vanzin's review is requested. We need this in `master/2.4` branches.

[GitHub] [spark] dongjoon-hyun commented on issue #24640: [SPARK-27770] [SQL] [TEST] Port AGGREGATES.sql [Part 1]

dongjoon-hyun commented on issue #24640: [SPARK-27770] [SQL] [TEST] Port AGGREGATES.sql [Part 1] URL: https://github.com/apache/spark/pull/24640#issuecomment-495074398 Could you fix the UT failure?

```
[info] - aggregates_part1.sql *** FAILED *** (3 seconds, 720 milliseconds)
```

[GitHub] [spark] pengbo removed a comment on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page

pengbo removed a comment on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page URL: https://github.com/apache/spark/pull/24666#issuecomment-495049729 retest this please

[GitHub] [spark] pengbo commented on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page

pengbo commented on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page URL: https://github.com/apache/spark/pull/24666#issuecomment-495073870 retest this please

[GitHub] [spark] HyukjinKwon commented on issue #24675: [SPARK-27803][SQL][PYTHON] Fix column pruning for Python UDF

HyukjinKwon commented on issue #24675: [SPARK-27803][SQL][PYTHON] Fix column pruning for Python UDF URL: https://github.com/apache/spark/pull/24675#issuecomment-495073865 makes sense to me.

[GitHub] [spark] cloud-fan commented on issue #24344: [SPARK-27440][SQL] Optimize uncorrelated predicate subquery

cloud-fan commented on issue #24344: [SPARK-27440][SQL] Optimize uncorrelated predicate subquery URL: https://github.com/apache/spark/pull/24344#issuecomment-495070324 I think @dilipbiswal has a good point here. For non-correlated EXISTS/IN, it's a bad idea to collect all the data of a table to the driver side and do the calculation there. That is, we should not have a physical version of EXISTS/IN; they always need to be converted to a join (sorry for the back and forth!). But we do have a chance to optimize non-correlated EXISTS/IN. More generally, if a left semi/anti join has a condition that only refers to attributes from the right side, we can probably turn this join into a filter operator.
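The last point can be checked on plain Scala collections. When the condition of a left semi/anti join refers only to right-side columns, whether a left row survives does not depend on that row. The join therefore reduces to a single existence test applied as a filter. This is an illustrative sketch with hypothetical row types and helper names, not an optimizer rule from Spark.

```scala
final case class LeftRow(id: Int)
final case class RightRow(v: Int)

// Reference semantics: evaluate the join condition for every (left, right) pair.
def leftSemi(left: Seq[LeftRow], right: Seq[RightRow])(cond: (LeftRow, RightRow) => Boolean): Seq[LeftRow] =
  left.filter(l => right.exists(r => cond(l, r)))

def leftAnti(left: Seq[LeftRow], right: Seq[RightRow])(cond: (LeftRow, RightRow) => Boolean): Seq[LeftRow] =
  left.filterNot(l => right.exists(r => cond(l, r)))

// Rewrite for a condition that only reads the right side: compute the
// existence test once, then use it as a constant filter predicate.
def semiAsFilter(left: Seq[LeftRow], right: Seq[RightRow])(rightCond: RightRow => Boolean): Seq[LeftRow] = {
  val matched = right.exists(rightCond)
  left.filter(_ => matched)
}

def antiAsFilter(left: Seq[LeftRow], right: Seq[RightRow])(rightCond: RightRow => Boolean): Seq[LeftRow] = {
  val matched = right.exists(rightCond)
  left.filter(_ => !matched)
}

val left = Seq(LeftRow(1), LeftRow(2), LeftRow(3))
val right = Seq(RightRow(5), RightRow(10))
val rightOnly: RightRow => Boolean = _.v > 7
```

In a real plan, the existence test would presumably still need to be evaluated once, for example as a non-correlated subquery. The sketch only shows why the per-row join work is unnecessary.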

[GitHub] [spark] AmplabJenkins removed a comment on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API

AmplabJenkins removed a comment on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API URL: https://github.com/apache/spark/pull/24559#issuecomment-495068453 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/105709/ Test PASSed.

[GitHub] [spark] AmplabJenkins removed a comment on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API

AmplabJenkins removed a comment on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API URL: https://github.com/apache/spark/pull/24559#issuecomment-495068451 Merged build finished. Test PASSed.

[GitHub] [spark] dongjoon-hyun commented on issue #24680: [SPARK-26045][BUILD] Leave avro, avro-ipc dependendencies as compile scope even for hadoop-provided usages

dongjoon-hyun commented on issue #24680: [SPARK-26045][BUILD] Leave avro, avro-ipc dependendencies as compile scope even for hadoop-provided usages URL: https://github.com/apache/spark/pull/24680#issuecomment-495068392 I'll leave this PR here since @vanzin's review is requested. We need this in `master/2.4` branch.

[GitHub] [spark] AmplabJenkins commented on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API

AmplabJenkins commented on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API URL: https://github.com/apache/spark/pull/24559#issuecomment-495068451 Merged build finished. Test PASSed.

[GitHub] [spark] AmplabJenkins commented on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API

AmplabJenkins commented on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API URL: https://github.com/apache/spark/pull/24559#issuecomment-495068453 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/105709/ Test PASSed.

[GitHub] [spark] SparkQA removed a comment on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API

SparkQA removed a comment on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API URL: https://github.com/apache/spark/pull/24559#issuecomment-495039716 **[Test build #105709 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105709/testReport)** for PR 24559 at commit [`21a5f07`](https://github.com/apache/spark/commit/21a5f074e3b564a353da28901c8d6cb107ec04c2).

[GitHub] [spark] SparkQA commented on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API

SparkQA commented on issue #24559: [SPARK-27658][SQL] Add FunctionCatalog API URL: https://github.com/apache/spark/pull/24559#issuecomment-495068179 **[Test build #105709 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105709/testReport)** for PR 24559 at commit [`21a5f07`](https://github.com/apache/spark/commit/21a5f074e3b564a353da28901c8d6cb107ec04c2).

* This patch passes all tests.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] [spark] AmplabJenkins commented on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation.

AmplabJenkins commented on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation. URL: https://github.com/apache/spark/pull/24617#issuecomment-495067333 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/105708/ Test PASSed.

[GitHub] [spark] AmplabJenkins commented on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation.

AmplabJenkins commented on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation. URL: https://github.com/apache/spark/pull/24617#issuecomment-495067331 Merged build finished. Test PASSed.

[GitHub] [spark] AmplabJenkins removed a comment on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation.

AmplabJenkins removed a comment on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation. URL: https://github.com/apache/spark/pull/24617#issuecomment-495067331 Merged build finished. Test PASSed.

[GitHub] [spark] AmplabJenkins removed a comment on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation.

AmplabJenkins removed a comment on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation. URL: https://github.com/apache/spark/pull/24617#issuecomment-495067333 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/105708/ Test PASSed.

[GitHub] [spark] SparkQA commented on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation.

SparkQA commented on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation. URL: https://github.com/apache/spark/pull/24617#issuecomment-495067035 **[Test build #105708 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105708/testReport)** for PR 24617 at commit [`47d89d3`](https://github.com/apache/spark/commit/47d89d37a196e75173996adc6feb475a5c8ce87b).

* This patch passes all tests.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] [spark] SparkQA removed a comment on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation.

SparkQA removed a comment on issue #24617: [SPARK-27732][SQL] Add v2 CreateTable implementation. URL: https://github.com/apache/spark/pull/24617#issuecomment-495038346 **[Test build #105708 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105708/testReport)** for PR 24617 at commit [`47d89d3`](https://github.com/apache/spark/commit/47d89d37a196e75173996adc6feb475a5c8ce87b).

[GitHub] [spark] AmplabJenkins removed a comment on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

AmplabJenkins removed a comment on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495066701 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/105710/ Test PASSed.

[GitHub] [spark] AmplabJenkins removed a comment on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

AmplabJenkins removed a comment on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495066698 Merged build finished. Test PASSed.

[GitHub] [spark] AmplabJenkins commented on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

AmplabJenkins commented on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495066698 Merged build finished. Test PASSed.

[GitHub] [spark] AmplabJenkins commented on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

AmplabJenkins commented on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495066701 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/105710/ Test PASSed.

[GitHub] [spark] SparkQA removed a comment on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

SparkQA removed a comment on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495045176 **[Test build #105710 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105710/testReport)** for PR 24671 at commit [`3f79e89`](https://github.com/apache/spark/commit/3f79e89e00f920af959a6b979e736af5a43a93c7).

[GitHub] [spark] SparkQA commented on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

SparkQA commented on issue #24671: [SPARK-27811][Core][Docs]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495066402 **[Test build #105710 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105710/testReport)** for PR 24671 at commit [`3f79e89`](https://github.com/apache/spark/commit/3f79e89e00f920af959a6b979e736af5a43a93c7).

* This patch passes all tests.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] [spark] viirya commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

viirya commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#discussion_r286774379

##

File path:

external/avro/src/test/scala/org/apache/spark/sql/avro/AvroSuite.scala

##

@@ -930,6 +930,33 @@ class AvroSuite extends QueryTest with SharedSQLContext with SQLTestUtils {

}

}

+ test("support user provided non-nullable avro schema " +

Review comment:

Have we documented this behavior for the `avroSchema` option?


[GitHub] [spark] viirya commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

viirya commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#discussion_r286774235

##

File path:

external/avro/src/test/scala/org/apache/spark/sql/avro/AvroSuite.scala

##

@@ -930,6 +930,33 @@ class AvroSuite extends QueryTest with SharedSQLContext with SQLTestUtils {

}

}

+ test("support user provided non-nullable avro schema " +

+"for nullable catalyst schema without any null record") {

Review comment:

It sounds good to have warning messages for this case, so that users know they are actually writing data with a nullable Catalyst schema into a non-nullable Avro schema.
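Here is a minimal sketch of the kind of check being suggested. It compares each field's nullability in the Catalyst schema against the user-provided Avro schema. It then collects one warning for every field that is nullable on the Catalyst side but non-nullable on the Avro side. `FieldNullability`, `nullabilityWarnings`, and the message text are hypothetical; a real implementation would have to walk Spark's `StructType` and Avro's `Schema`, including nested fields.

```scala
// Hypothetical flattened view of one top-level field in both schemas.
final case class FieldNullability(name: String, catalystNullable: Boolean, avroNullable: Boolean)

// One warning per field whose Catalyst type allows nulls but whose Avro type does not.
def nullabilityWarnings(fields: Seq[FieldNullability]): Seq[String] =
  fields.collect {
    case f if f.catalystNullable && !f.avroNullable =>
      s"Field '${f.name}' is nullable in the Catalyst schema but non-nullable in the " +
        "Avro schema; the write may fail if a null value is encountered."
  }

val fields = Seq(
  FieldNullability("id", catalystNullable = false, avroNullable = false),
  FieldNullability("name", catalystNullable = true, avroNullable = false),
  FieldNullability("note", catalystNullable = true, avroNullable = true))
```

Only `name` produces a warning here. Emitting warnings up front, rather than only failing on the first null record, is what lets users see the risk before any data is written.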


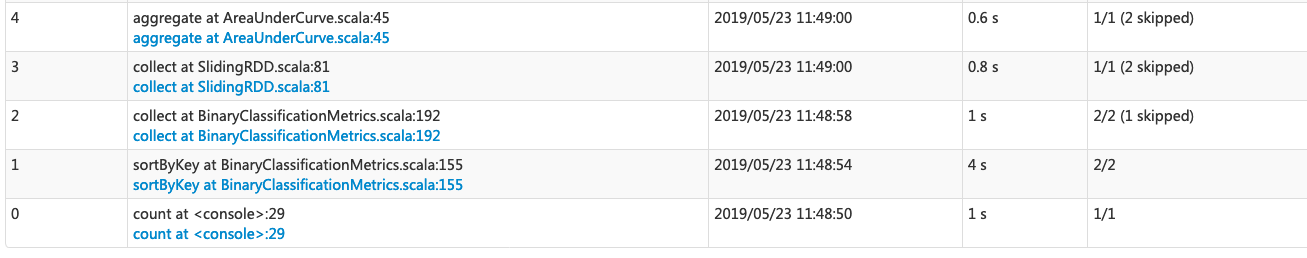

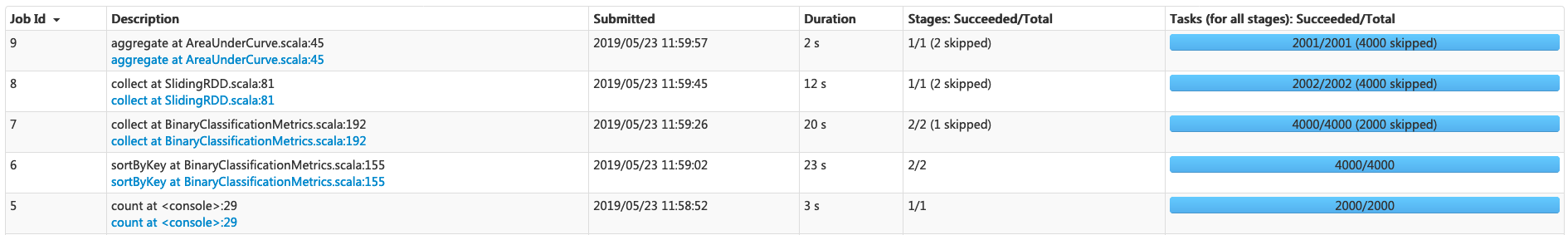

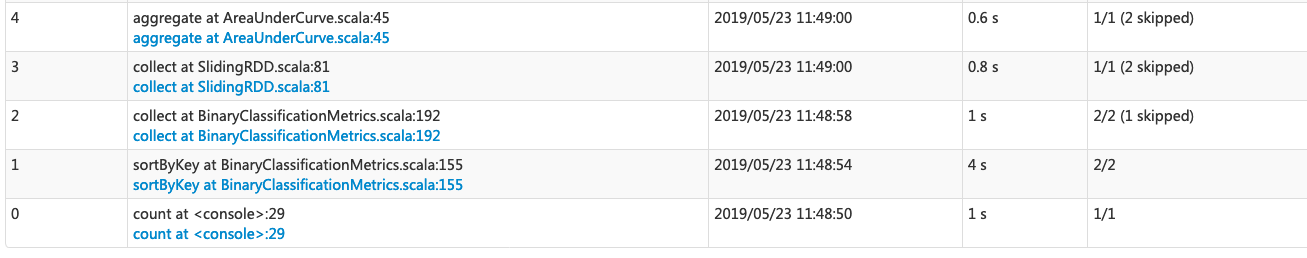

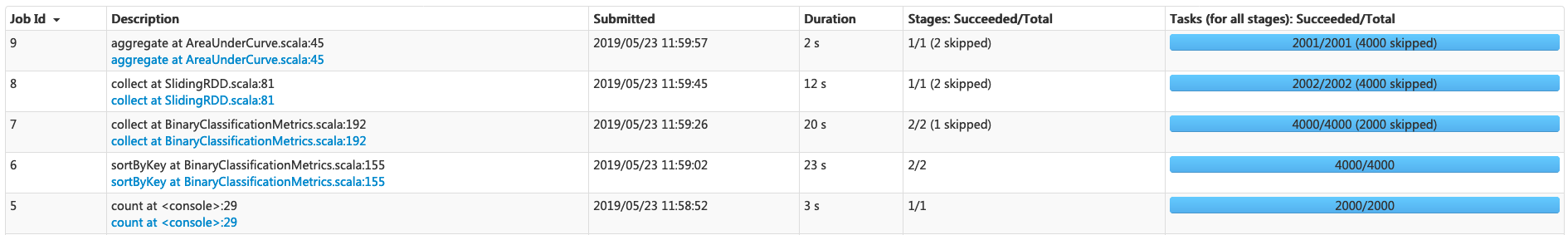

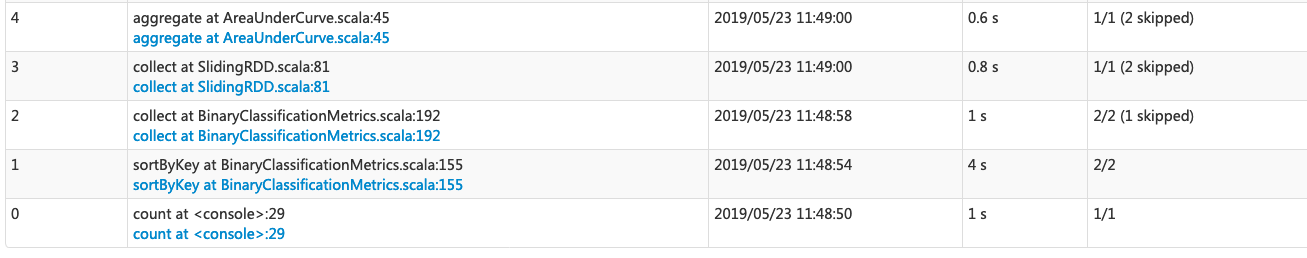

[GitHub] [spark] zhengruifeng edited a comment on issue #24648: [SPARK-27777][ML] Eliminate uncessary sliding job in AreaUnderCurve

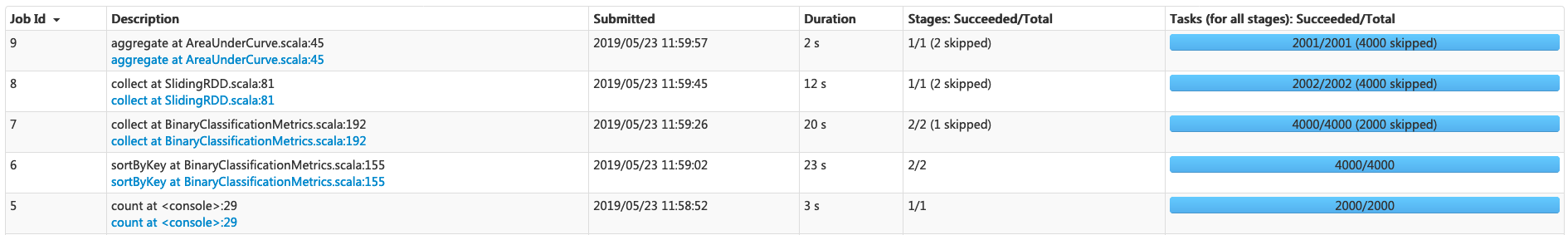

zhengruifeng edited a comment on issue #24648: [SPARK-27777][ML] Eliminate uncessary sliding job in AreaUnderCurve URL: https://github.com/apache/spark/pull/24648#issuecomment-495060029 @srowen Oh, not a pass. I expressed it incorrectly. Sliding needs a separate job to collect the head rows of each partition, and that job can be eliminated. When the number of points is small, e.g. 1000, the difference is tiny: as shown in the first figure, only 0.8 sec is saved. Several situations result in more points on the curve: (1) when I want a more accurate score; (2) when we evaluate on a big dataset, the number of points easily exceeds 1000 even if we set `numBins`=1000, because the grouping in the curve is done within partitions, so each partition contributes at least one point. In many practical cases there are tens of thousands of partitions, so there are tens of thousands of points. As shown in the second figure, we set `numBins` to its default value and repartition the input data into 2000 partitions. In that case, the sliding job cannot be ignored.
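The idea of avoiding the separate sliding job can be illustrated with partitions modeled as nested sequences. Each partition computes the trapezoid areas between its own consecutive points and reports its first and last point in the same pass. The driver then adds the trapezoids that span partition boundaries. This is a sketch of one possible approach under that assumption, not necessarily what the PR implements. `PartitionSummary` and the helper names are hypothetical.

```scala
// A point on the curve: (x, y), e.g. (FPR, TPR) for ROC.
type Point = (Double, Double)

def trapezoid(p: Point, q: Point): Double = (q._1 - p._1) * (p._2 + q._2) / 2.0

// Reference: one sliding pass over all points in order.
// collect skips the short window that sliding(2) yields for a single-point input.
def aucSliding(points: Seq[Point]): Double =
  points.sliding(2).collect { case Seq(p, q) => trapezoid(p, q) }.sum

// What each partition would emit in the same pass: local area plus boundary points.
final case class PartitionSummary(area: Double, first: Point, last: Point)

def summarize(part: Seq[Point]): Option[PartitionSummary] =
  if (part.isEmpty) None
  else Some(PartitionSummary(aucSliding(part), part.head, part.last))

def aucPartitioned(partitions: Seq[Seq[Point]]): Double = {
  // In Spark, this per-partition step would run inside a job that already scans the data.
  val summaries = partitions.flatMap(summarize)
  val inner = summaries.map(_.area).sum
  // Stitch the segments between consecutive non-empty partitions on the driver.
  val boundary = summaries.sliding(2).collect {
    case Seq(a, b) => trapezoid(a.last, b.first)
  }.sum
  inner + boundary
}

val parts: Seq[Seq[Point]] = Seq(
  Seq((0.0, 0.0), (0.1, 0.4)),
  Seq((0.3, 0.7)),
  Seq.empty,
  Seq((0.6, 0.9), (1.0, 1.0)))
```

The driver only receives one small summary per partition. That keeps the extra work proportional to the number of partitions rather than requiring an additional Spark job over the data.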

[GitHub] [spark] zhengruifeng edited a comment on issue #24648: [SPARK-27777][ML] Eliminate uncessary sliding job in AreaUnderCurve

zhengruifeng edited a comment on issue #24648: [SPARK-27777][ML] Eliminate uncessary sliding job in AreaUnderCurve URL: https://github.com/apache/spark/pull/24648#issuecomment-495060029 @srowen Oh, not a pass. I expressed it incorrectly. Sliding needs a separate job to collect the head rows of each partition, and that job can be eliminated. When the number of points is small, e.g. 1000, the difference is tiny: as shown in the first figure, only 0.8 sec is saved. Several situations result in more points on the curve: (1) when I want a more accurate score; (2) when we evaluate on a big dataset, the number of points easily exceeds 1000 even if we set `numBins`=1000, because the grouping in the curve is done within partitions, so each partition contributes at least one point. In many practical cases there are tens of thousands of partitions, so there are tens of thousands of points. As shown in the second figure, we set `numBins` to its default value and repartition the input data into 2000 partitions. Then the sliding job takes 12 sec, which is much longer than the computation time of the AUC itself (2 sec).

[GitHub] [spark] gengliangwang edited a comment on issue #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

gengliangwang edited a comment on issue #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide URL: https://github.com/apache/spark/pull/24682#issuecomment-495059043 This should not be a big concern. The file writing job is almost transactional, since Spark follows the `FileCommitProtocol`. If a failure happens during the write, the intermediate output files won't show up in the target path.
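The "almost transactional" behavior rests on one idea: write into a staging location, and move files into the target path only on commit. The following is a heavily simplified, single-process model of that idea using `java.nio.file`. It is not Spark's `FileCommitProtocol`; `writeAll`, the `failAt` parameter, and the staging layout are hypothetical.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path, StandardCopyOption}
import scala.util.control.NonFatal

// Writes every (fileName, contents) pair into a staging directory first and
// moves the files into `target` only if all writes succeed.
// `failAt` simulates a task failure at the given file index.
def writeAll(target: Path, files: Seq[(String, String)], failAt: Option[Int] = None): Boolean = {
  val staging = Files.createTempDirectory("staging-")
  try {
    files.zipWithIndex.foreach { case ((name, contents), i) =>
      if (failAt.contains(i)) throw new RuntimeException(s"task $i failed")
      Files.write(staging.resolve(name), contents.getBytes(StandardCharsets.UTF_8))
    }
    // "Commit": publish the staged files into the target directory.
    Files.createDirectories(target)
    files.foreach { case (name, _) =>
      Files.move(staging.resolve(name), target.resolve(name), StandardCopyOption.REPLACE_EXISTING)
    }
    true
  } catch {
    // "Abort": nothing was published to `target`.
    case NonFatal(_) => false
  } finally {
    // Remove whatever is left in the staging directory, then the directory itself.
    Option(staging.toFile.listFiles()).getOrElse(Array.empty).foreach(f => Files.delete(f.toPath))
    Files.delete(staging)
  }
}
```

Even in this model, the commit loop itself is not atomic: a crash between two `Files.move` calls would leave a partial result. That is one reason such a scheme can only be described as almost transactional.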

[GitHub] [spark] zhengruifeng commented on issue #24648: [SPARK-27777][ML] Eliminate uncessary sliding job in AreaUnderCurve

zhengruifeng commented on issue #24648: [SPARK-27777][ML] Eliminate uncessary sliding job in AreaUnderCurve URL: https://github.com/apache/spark/pull/24648#issuecomment-495060029 @srowen Oh, not a pass. I expressed it incorrectly. Sliding needs a separate job to collect the head rows of each partition, and that job can be eliminated. When the number of points is small, e.g. 1000, the difference is tiny: as shown in the first figure, only 0.8 sec is saved. Several situations result in more points on the curve: (1) when I want a more accurate score; (2) when we evaluate on a big dataset, the number of points easily exceeds 1000 even if we set `numBins`=1000, because the grouping in the curve is done within partitions, so each partition contributes at least one point. In many practical cases there are tens of thousands of partitions, so there are tens of thousands of points. As shown in the second figure, we set `numBins` to its default value and repartition the input data into 2000 partitions. Then the sliding job takes 12 sec, which is much longer than the computation time of the AUC itself (2 sec).

[GitHub] [spark] gengliangwang commented on issue #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

gengliangwang commented on issue #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide URL: https://github.com/apache/spark/pull/24682#issuecomment-495059043 This should not be a big concern. The file writing job is almost transactional since Spark follows the `FileCommitProtocol`. If a failure happens during the write, the existing output file won't show up in the target path.

[GitHub] [spark] gengliangwang commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

gengliangwang commented on a change in pull request #24682: [SPARK-27762][SQL]

[FOLLOWUP] Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#discussion_r286765012

##

File path:

external/avro/src/test/scala/org/apache/spark/sql/avro/AvroSuite.scala

##

@@ -930,6 +930,33 @@ class AvroSuite extends QueryTest with SharedSQLContext with SQLTestUtils {

}

}

+ test("support user provided non-nullable avro schema " +

+"for nullable catalyst schema without any null record") {

Review comment:

@cloud-fan I think this is fine. Otherwise, there is no way for users to

write with a non-nullable schema.

But should we show a warning message in such a case, so that users are

aware of the risk?
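
The following plain-Scala model illustrates the trade-off under discussion. The user's non-nullable Avro schema is accepted for a nullable Catalyst column, and a warning is surfaced (as suggested above). The write fails only if a null actually shows up. `AvroNullabilitySketch` and its types are hypothetical stand-ins, not spark-avro code.

```scala
object AvroNullabilitySketch {
  // Hypothetical stand-ins: only the nullability of one column matters here.
  final case class CatalystField(name: String, nullable: Boolean)
  final case class AvroField(name: String, nullable: Boolean)

  // Schema-time check: nullable Catalyst -> non-nullable Avro is accepted,
  // but flagged so the user can be warned about the risk.
  def schemaWarning(c: CatalystField, a: AvroField): Option[String] =
    if (c.nullable && !a.nullable)
      Some(s"Column '${c.name}' is nullable but Avro field '${a.name}' is not; " +
        "writing a null value will fail at runtime.")
    else None

  // Write-time check: values pass through unchanged until the first null that
  // targets a non-nullable Avro field, which aborts the write.
  def write(a: AvroField, values: Seq[Option[Int]]): Seq[Option[Int]] =
    values.zipWithIndex.map {
      case (None, i) if !a.nullable =>
        throw new IllegalArgumentException(
          s"Row $i: null value for non-nullable Avro field '${a.name}'")
      case (v, _) => v
    }
}
```

With data that contains no nulls, the write succeeds, which matches the test name "without any null record". A single null fails the write, and the failure surfaces only when the row is reached.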

[GitHub] [spark] dongjoon-hyun edited a comment on issue #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

dongjoon-hyun edited a comment on issue #24682: [SPARK-27762][SQL] [FOLLOWUP]

Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#issuecomment-495054805

I understand the concern about the difference from our default `.schema`

option. I believe this is the main reason why we added `.option("avroSchema", ...)`.

For Avro, a `nullable` column type is `"type": ["int", "null"]` and a

non-nullable column type is explicitly `"type": "int"`.

For ORC/Parquet (DSv1/v2), everything is always nullable by default when

reading. So, please don't worry about `.schema` use cases. This is a different

option for different use cases.
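
The sketch below renders the two Avro field shapes described above as schema JSON strings. `AvroFieldTypeSketch.fieldJson` is a hypothetical helper, not part of spark-avro. A full record schema built from such fields is the kind of string a user would pass through `.option("avroSchema", ...)`.

```scala
object AvroFieldTypeSketch {
  // Avro records nullability in the field type itself: a nullable column is a
  // union with "null", a non-nullable column is the bare primitive type.
  def fieldJson(name: String, primitive: String, nullable: Boolean): String = {
    val quoted = "\"" + primitive + "\""
    val tpe = if (nullable) "[" + quoted + ", \"null\"]" else quoted
    "{\"name\": \"" + name + "\", \"type\": " + tpe + "}"
  }
}
```

For example, `fieldJson("a", "int", nullable = true)` gives `{"name": "a", "type": ["int", "null"]}`, and `nullable = false` gives `{"name": "a", "type": "int"}`.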

[GitHub] [spark] JkSelf commented on issue #21899: [SPARK-24912][SQL] Don't obscure source of OOM during broadcast join

JkSelf commented on issue #21899: [SPARK-24912][SQL] Don't obscure source of OOM during broadcast join URL: https://github.com/apache/spark/pull/21899#issuecomment-495055518 @beliefer Thanks for your work. Before we allocate the new page in `val newPage = new Array[Long](newNumWords.toInt)`, we already check the available memory with `ensureAcquireMemory(newNumWords * 8L)`, and we only create `newPage` if there is enough memory. So if the memory is enough, why is the OOM exception thrown at `val newPage = new Array[Long](newNumWords.toInt)`? Thanks for your help.

[GitHub] [spark] francis0407 commented on a change in pull request #24344: [SPARK-27440][SQL] Optimize uncorrelated predicate subquery

francis0407 commented on a change in pull request #24344: [SPARK-27440][SQL]

Optimize uncorrelated predicate subquery

URL: https://github.com/apache/spark/pull/24344#discussion_r286764851

##

File path:

sql/core/src/main/scala/org/apache/spark/sql/execution/subquery.scala

##

@@ -55,6 +55,112 @@ object ExecSubqueryExpression {

}

}

+/**

+ * Exists is used to test for the existence of any record in a subquery.

+ *

+ * This is the physical copy of Exists to be used inside SparkPlan.

+ */

+case class Exists(

+plan: BaseSubqueryExec,

+exprId: ExprId)

+ extends ExecSubqueryExpression {

+

+ override def dataType: DataType = BooleanType

+ override def children: Seq[Expression] = Nil

+ override def nullable: Boolean = false

+ override def toString: String = plan.simpleString(SQLConf.get.maxToStringFields)

+ override def withNewPlan(plan: BaseSubqueryExec): Exists = copy(plan = plan)

+

+ // Whether the subquery returns one or more records

+ @volatile private var result: Boolean = _

+ @volatile private var updated: Boolean = false

+

+ def updateResult(): Unit = {

+val rows = plan.executeCollect()

+result = rows.nonEmpty

+updated = true

+ }

+

+ override def eval(input: InternalRow): Boolean = {

+require(updated, s"$this has not finished")

+result

+ }

+

+ override protected def doGenCode(ctx: CodegenContext, ev: ExprCode): ExprCode = {

+require(updated, s"$this has not finished")

+Literal.create(result, BooleanType).doGenCode(ctx, ev)

+ }

+}

+

+/**

+ * Evaluates to `true` if `values` are returned in the subquery's result set.

+ * If `values` are not found in the subquery's result set, and there are nulls in

+ * `values` or the result set, it should return null.

+ * This is the physical copy of InSubquery to be used inside SparkPlan.

+ */

+case class InSubquery(

+values: Seq[Literal],

+plan: BaseSubqueryExec,

+exprId: ExprId)

+ extends ExecSubqueryExpression {

+ override def dataType: DataType = BooleanType

+ override def children: Seq[Expression] = Nil

+ override def nullable: Boolean = true

+ override def toString: String =

plan.simpleString(SQLConf.get.maxToStringFields)

+ override def withNewPlan(plan: BaseSubqueryExec): InSubquery = copy(plan = plan)

+

+ @volatile private var result: Boolean = _

+ @volatile private var isNull: Boolean = false

+ @volatile private var updated: Boolean = false

+

+ def updateResult(): Unit = {

+val rows = plan.executeCollect()

+// The semantic of '(a,b) in ((x1, y1), (x2, y2), ...)' is

+// '(a = x1 and b = y1) or (a = x2 and b = y2) or ...'

+val expression = rows.map(row => {

Review comment:

I have updated this; could you please help check it? cc @dilipbiswal @cloud-fan
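
The diff's comment spells out the semantics: `(a, b) in ((x1, y1), ...)` expands to an OR of ANDs. This can be checked with a small three-valued-logic model in which `None` stands for SQL NULL. `InSubquerySemanticsSketch` is hypothetical and works on plain `Option[Int]` values rather than Catalyst `Literal`s or `InternalRow`s.

```scala
object InSubquerySemanticsSketch {
  // SQL three-valued logic: Some(true), Some(false), or None for NULL.
  type TriBool = Option[Boolean]

  def and3(a: TriBool, b: TriBool): TriBool = (a, b) match {
    case (Some(false), _) | (_, Some(false)) => Some(false)
    case (Some(true), Some(true))            => Some(true)
    case _                                   => None
  }

  def or3(a: TriBool, b: TriBool): TriBool = (a, b) match {
    case (Some(true), _) | (_, Some(true)) => Some(true)
    case (Some(false), Some(false))        => Some(false)
    case _                                 => None
  }

  // Comparing anything with NULL yields NULL; otherwise plain equality.
  def equalTo(a: Option[Int], b: Option[Int]): TriBool =
    for (x <- a; y <- b) yield x == y

  // '(a, b) IN ((x1, y1), (x2, y2), ...)' is evaluated as
  // '(a = x1 AND b = y1) OR (a = x2 AND b = y2) OR ...'.
  // An empty result set yields false, even when `values` contains NULL.
  def isIn(values: Seq[Option[Int]], rows: Seq[Seq[Option[Int]]]): TriBool =
    rows.foldLeft[TriBool](Some(false)) { (acc, row) =>
      val rowMatch = values.zip(row).foldLeft[TriBool](Some(true)) {
        case (m, (v, r)) => and3(m, equalTo(v, r))
      }
      or3(acc, rowMatch)
    }
}
```

Under this expansion, `(1, NULL) IN ((1, 2))` evaluates to NULL. In contrast, `(1, NULL) IN ((3, 2))` is false, because the first column already rules that row out.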

[GitHub] [spark] dongjoon-hyun edited a comment on issue #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

dongjoon-hyun edited a comment on issue #24682: [SPARK-27762][SQL] [FOLLOWUP]

Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#issuecomment-495054805

I understand the concern about the difference from our default `.schema`

option. I believe this is the main reason why we added `.option("avroSchema", ...)`.

For Avro, a `nullable` column type is `"type": ["int", "null"]` and a

non-nullable column type is explicitly `"type": "int"`.

For ORC/Parquet (DSv1/v2), everything is always nullable by default when

reading. So, please don't worry about `.schema` use cases. This is a different

use case.

[GitHub] [spark] dongjoon-hyun commented on issue #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

dongjoon-hyun commented on issue #24682: [SPARK-27762][SQL] [FOLLOWUP] Add

behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#issuecomment-495054805

I understand the concern about the difference from our default `.schema`

option. I believe this is the main reason why we added `.option("avroSchema", ...)`.

For Avro, a `nullable` column type is `"type": ["int", "null"]` and a

non-nullable column type is explicitly `"type": "int"`.

[GitHub] [spark] dongjoon-hyun commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

dongjoon-hyun commented on a change in pull request #24682: [SPARK-27762][SQL]

[FOLLOWUP] Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#discussion_r286763767

##

File path:

external/avro/src/test/scala/org/apache/spark/sql/avro/AvroSuite.scala

##

@@ -930,6 +930,33 @@ class AvroSuite extends QueryTest with SharedSQLContext with SQLTestUtils {

}

}

+ test("support user provided non-nullable avro schema " +

Review comment:

The `catalyst` schema is always nullable when we read from the file. This is

special support for `.option("avroSchema", ...)` in Avro.

[GitHub] [spark] dongjoon-hyun commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

dongjoon-hyun commented on a change in pull request #24682: [SPARK-27762][SQL]

[FOLLOWUP] Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#discussion_r286763619

##

File path:

external/avro/src/test/scala/org/apache/spark/sql/avro/AvroSuite.scala

##

@@ -930,6 +930,33 @@ class AvroSuite extends QueryTest with SharedSQLContext with SQLTestUtils {

}

}

+ test("support user provided non-nullable avro schema " +

+"for nullable catalyst schema without any null record") {

Review comment:

For the `.schema()` option, we always enforce `nullable` by using

`dataSchema.asNullable` in `FileTable`. To me, this is special support for

`.option("avroSchema", "")`.
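
The following is a simplified model of what `asNullable` does to a read schema. Every field and every nested element is marked nullable, so any non-nullable flags in a user-supplied `.schema()` are dropped on this path. The type names mirror Catalyst's but are redefined here as hypothetical stand-ins. Spark's own `asNullable` is internal and covers more types.

```scala
object AsNullableSketch {
  // Hypothetical, simplified stand-ins for Catalyst data types.
  sealed trait DataType { def asNullable: DataType }

  case object IntegerType extends DataType {
    def asNullable: DataType = this
  }

  final case class ArrayType(elementType: DataType, containsNull: Boolean) extends DataType {
    def asNullable: DataType = ArrayType(elementType.asNullable, containsNull = true)
  }

  final case class StructField(name: String, dataType: DataType, nullable: Boolean)

  final case class StructType(fields: Seq[StructField]) extends DataType {
    // Recursively marks every field and nested element as nullable.
    def asNullable: StructType =
      StructType(fields.map(f => f.copy(dataType = f.dataType.asNullable, nullable = true)))
  }
}
```

The original schema object is left unchanged, and a relaxed copy is returned. This is why a non-nullable declaration only survives on code paths that skip this step.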

[GitHub] [spark] AmplabJenkins commented on issue #23791: [SPARK-20597][SQL][SS][WIP] KafkaSourceProvider falls back on path as synonym for topic

AmplabJenkins commented on issue #23791: [SPARK-20597][SQL][SS][WIP] KafkaSourceProvider falls back on path as synonym for topic URL: https://github.com/apache/spark/pull/23791#issuecomment-495053513 Can one of the admins verify this patch?

[GitHub] [spark] HyukjinKwon commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

HyukjinKwon commented on a change in pull request #24682: [SPARK-27762][SQL]

[FOLLOWUP] Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#discussion_r286763265

##

File path:

external/avro/src/test/scala/org/apache/spark/sql/avro/AvroSuite.scala

##

@@ -930,6 +930,33 @@ class AvroSuite extends QueryTest with SharedSQLContext with SQLTestUtils {

}

}

+ test("support user provided non-nullable avro schema " +

Review comment:

BTW, note that we don't currently support non-nullable schemas in file format

sources because they are turned into nullable ones in the SQL batch code path.

Non-nullable can be set in SS, though. Both code paths should be consistent;

it's a long-standing issue.

[GitHub] [spark] AmplabJenkins removed a comment on issue #23791: [SPARK-20597][SQL][SS][WIP] KafkaSourceProvider falls back on path as synonym for topic

AmplabJenkins removed a comment on issue #23791: [SPARK-20597][SQL][SS][WIP] KafkaSourceProvider falls back on path as synonym for topic URL: https://github.com/apache/spark/pull/23791#issuecomment-463663060 Can one of the admins verify this patch?

[GitHub] [spark] HyukjinKwon commented on a change in pull request #24682: [SPARK-27762][SQL] [FOLLOWUP] Add behavior change for Avro writer in migration guide

HyukjinKwon commented on a change in pull request #24682: [SPARK-27762][SQL]

[FOLLOWUP] Add behavior change for Avro writer in migration guide

URL: https://github.com/apache/spark/pull/24682#discussion_r286762917

##

File path:

external/avro/src/test/scala/org/apache/spark/sql/avro/AvroSuite.scala

##

@@ -930,6 +930,33 @@ class AvroSuite extends QueryTest with SharedSQLContext with SQLTestUtils {

}

}

+ test("support user provided non-nullable avro schema " +

Review comment:

This doesn't quite make sense to me. It looks like, if the catalyst schema is

nullable, it should reject a non-nullable Avro schema.

[GitHub] [spark] wangyum commented on issue #24672: [SPARK-27801] Improve performance of InMemoryFileIndex.listLeafFiles for HDFS directories with many files

wangyum commented on issue #24672: [SPARK-27801] Improve performance of InMemoryFileIndex.listLeafFiles for HDFS directories with many files URL: https://github.com/apache/spark/pull/24672#issuecomment-495052170 Thank you @rrusso2007 @JoshRosen. I did a simple benchmark in our production environment (Hadoop version 2.7.1):
```
19/05/22 19:53:18 WARN InMemoryFileIndex: Elements: 10. default time token: 41, SPARK-27801 time token: 18, SPARK-27807 time token: 30
19/05/22 19:53:29 WARN InMemoryFileIndex: Elements: 20. default time token: 22, SPARK-27801 time token: 10, SPARK-27807 time token: 24
19/05/22 19:53:30 WARN InMemoryFileIndex: Elements: 50. default time token: 47, SPARK-27801 time token: 13, SPARK-27807 time token: 25
19/05/22 19:53:33 WARN InMemoryFileIndex: Elements: 100. default time token: 54, SPARK-27801 time token: 10, SPARK-27807 time token: 30
19/05/22 19:53:42 WARN InMemoryFileIndex: Elements: 200. default time token: 86, SPARK-27801 time token: 19, SPARK-27807 time token: 40
19/05/22 19:53:52 WARN InMemoryFileIndex: Elements: 500. default time token: 254, SPARK-27801 time token: 30, SPARK-27807 time token: 90
19/05/22 19:54:06 WARN InMemoryFileIndex: Elements: 1000. default time token: 507, SPARK-27801 time token: 165, SPARK-27807 time token: 117
19/05/22 19:54:18 WARN InMemoryFileIndex: Elements: 2000. default time token: 1193, SPARK-27801 time token: 114, SPARK-27807 time token: 216
19/05/22 19:54:34 WARN InMemoryFileIndex: Elements: 5000. default time token: 2401, SPARK-27801 time token: 430, SPARK-27807 time token: 565
19/05/22 19:54:56 WARN InMemoryFileIndex: Elements: 1. default time token: 4831, SPARK-27801 time token: 646, SPARK-27807 time token: 1202
19/05/22 19:55:40 WARN InMemoryFileIndex: Elements: 2. default time token: 9121, SPARK-27801 time token: 1535, SPARK-27807 time token: 1920
19/05/22 19:56:45 WARN InMemoryFileIndex: Elements: 4. default time token: 18873, SPARK-27801 time token: 2784, SPARK-27807 time token: 3997
19/05/22 19:58:18 WARN InMemoryFileIndex: Elements: 8. default time token: 33658, SPARK-27801 time token: 6476, SPARK-27807 time token: 8326
```

[GitHub] [spark] wangyum closed pull request #24679: [SPARK-27807][SQL] Parallel resolve leaf statuses InMemoryFileIndex

wangyum closed pull request #24679: [SPARK-27807][SQL] Parallel resolve leaf statuses InMemoryFileIndex URL: https://github.com/apache/spark/pull/24679

[GitHub] [spark] pengbo removed a comment on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page

pengbo removed a comment on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page URL: https://github.com/apache/spark/pull/24666#issuecomment-495044115 Retest this please

[GitHub] [spark] pengbo commented on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page

pengbo commented on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page URL: https://github.com/apache/spark/pull/24666#issuecomment-495049729 retest this please

[GitHub] [spark] pengbo removed a comment on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page

pengbo removed a comment on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page URL: https://github.com/apache/spark/pull/24666#issuecomment-495042381 retest this please

[GitHub] [spark] AmplabJenkins removed a comment on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver

AmplabJenkins removed a comment on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver URL: https://github.com/apache/spark/pull/24628#issuecomment-495046736 Merged build finished. Test PASSed.

[GitHub] [spark] AmplabJenkins removed a comment on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver

AmplabJenkins removed a comment on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver URL: https://github.com/apache/spark/pull/24628#issuecomment-495046742 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/105707/ Test PASSed.

[GitHub] [spark] AmplabJenkins commented on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver

AmplabJenkins commented on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver URL: https://github.com/apache/spark/pull/24628#issuecomment-495046736 Merged build finished. Test PASSed.

[GitHub] [spark] AmplabJenkins commented on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver

AmplabJenkins commented on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver URL: https://github.com/apache/spark/pull/24628#issuecomment-495046742 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/105707/ Test PASSed.

[GitHub] [spark] SparkQA removed a comment on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver

SparkQA removed a comment on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver URL: https://github.com/apache/spark/pull/24628#issuecomment-495024200 **[Test build #105707 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105707/testReport)** for PR 24628 at commit [`a0e52aa`](https://github.com/apache/spark/commit/a0e52aae93fd8c1b3a3b1931b2102943cb0202a4).

[GitHub] [spark] SparkQA commented on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver

SparkQA commented on issue #24628: [SPARK-27749][SQL][test-hadoop3.2] hadoop-3.2 support hive-thriftserver URL: https://github.com/apache/spark/pull/24628#issuecomment-495046418 **[Test build #105707 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105707/testReport)** for PR 24628 at commit [`a0e52aa`](https://github.com/apache/spark/commit/a0e52aae93fd8c1b3a3b1931b2102943cb0202a4). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] [spark] SparkQA commented on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

SparkQA commented on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495045176 **[Test build #105710 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/105710/testReport)** for PR 24671 at commit [`3f79e89`](https://github.com/apache/spark/commit/3f79e89e00f920af959a6b979e736af5a43a93c7).

[GitHub] [spark] AmplabJenkins removed a comment on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

AmplabJenkins removed a comment on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495044831 Merged build finished. Test PASSed.

[GitHub] [spark] AmplabJenkins removed a comment on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

AmplabJenkins removed a comment on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495044835 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/10966/ Test PASSed.

[GitHub] [spark] AmplabJenkins commented on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

AmplabJenkins commented on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495044835 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/10966/ Test PASSed.

[GitHub] [spark] AmplabJenkins commented on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

AmplabJenkins commented on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495044831 Merged build finished. Test PASSed.

[GitHub] [spark] beliefer commented on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead.

beliefer commented on issue #24671: [MINOR][DOCS]Improve docs about spark.driver.memoryOverhead and spark.executor.memoryOverhead. URL: https://github.com/apache/spark/pull/24671#issuecomment-495044178 Retest this please.

[GitHub] [spark] pengbo commented on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page

pengbo commented on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page URL: https://github.com/apache/spark/pull/24666#issuecomment-495044115 Retest this please

[GitHub] [spark] beliefer commented on a change in pull request #24647: [SPARK-27776][SQL]Avoid duplicate Java reflection in DataSource.

beliefer commented on a change in pull request #24647: [SPARK-27776][SQL]Avoid duplicate Java reflection in DataSource. URL: https://github.com/apache/spark/pull/24647#discussion_r286753702 ## File path: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DataSource.scala ## @@ -105,6 +105,8 @@ case class DataSource( case _ => cls } } + private def providingInstance = providingClass.getConstructor().newInstance() Review comment: If we add a return type, it could only be `Any` here. Since this method is private, can the return type be omitted?

[GitHub] [spark] habren commented on issue #24663: [SPARK-27792][SQL] SkewJoin--handle only skewed keys with broadcastjoin

habren commented on issue #24663: [SPARK-27792][SQL] SkewJoin--handle only skewed keys with broadcastjoin URL: https://github.com/apache/spark/pull/24663#issuecomment-495043133 @viirya Could you please review this pull request?

[GitHub] [spark] pengbo removed a comment on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page

pengbo removed a comment on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page URL: https://github.com/apache/spark/pull/24666#issuecomment-494819839 retest this please

[GitHub] [spark] pengbo commented on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page

pengbo commented on issue #24666: [SPARK-27482][SQL][WEBUI] Show BroadcastHashJoinExec numOutputRows statistics info on SparkSQL UI page URL: https://github.com/apache/spark/pull/24666#issuecomment-495042381 retest this please

[GitHub] [spark] sjrand edited a comment on issue #24645: [SPARK-27773][Shuffle] add metrics for number of exceptions caught in ExternalShuffleBlockHandler

sjrand edited a comment on issue #24645: [SPARK-27773][Shuffle] add metrics for number of exceptions caught in ExternalShuffleBlockHandler

URL: https://github.com/apache/spark/pull/24645#issuecomment-495040379

On the client (executor) side we were seeing lots of timeouts, e.g.:

```

ERROR [2019-05-16T18:34:57.782Z] org.apache.spark.storage.BlockManager: Failed to connect to external shuffle server, will retry 2 more times after waiting 5 seconds...
java.io.IOException: Failed to connect to /:7337
	at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:250)
	at org.apache.spark.network.client.TransportClientFactory.createUnmanagedClient(TransportClientFactory.java:206)
	at org.apache.spark.network.shuffle.ExternalShuffleClient.registerWithShuffleServer(ExternalShuffleClient.java:142)
	at org.apache.spark.storage.BlockManager$$anonfun$registerWithExternalShuffleServer$1.apply$mcVI$sp(BlockManager.scala:300)
	at scala.collection.immutable.Range.foreach$mVc$sp(Range.scala:160)
	at org.apache.spark.storage.BlockManager.registerWithExternalShuffleServer(BlockManager.scala:297)
	at org.apache.spark.storage.BlockManager.initialize(BlockManager.scala:271)
	at org.apache.spark.executor.Executor.<init>(Executor.scala:121)
	at org.apache.spark.executor.CoarseGrainedExecutorBackend$$anonfun$receive$1.applyOrElse(CoarseGrainedExecutorBackend.scala:92)
	at org.apache.spark.rpc.netty.Inbox$$anonfun$process$1.apply$mcV$sp(Inbox.scala:117)
	at org.apache.spark.rpc.netty.Inbox.safelyCall(Inbox.scala:205)
	at org.apache.spark.rpc.netty.Inbox.process(Inbox.scala:101)
	at org.apache.spark.rpc.netty.Dispatcher$MessageLoop.run(Dispatcher.scala:222)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)
Caused by: io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection timed out: /:7337
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)
	at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:327)
	at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:340)
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:632)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)
	at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:897)
	at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)
	at java.lang.Thread.run(Thread.java:748)
Caused by: java.net.ConnectException: Connection timed out
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)
	at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:327)
	at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:340)
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:632)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)
at