[GitHub] spark issue #17455: [Spark-20044][Web UI] Support Spark UI behind front-end ...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/17455 @elgalu feel free to try picking it back up, I was a fan of it when reviewing it "back in the day" --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21688: [SPARK-21809] : Change Stage Page to use datatables to s...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/21688 Sorry, I have not. I've been really busy with other projects and haven't been in the spark code for a few months. I'll take a look when/if I have time, but it'd be faster for @vanzin or @tgravescs to review at this point. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21688: [SPARK-21809] : Change Stage Page to use datatables to s...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/21688 I'll see if I get a chance to look at this this week, but with the holiday it may have to wait a week. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21213: [SPARK-24120] Show `Jobs` page when `jobId` is missing

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/21213 So I just ran it and have a couple minor issues: 1. The immediate refresh is a bit jarring, maybe put it on a few second delay (long enough to read the redirect message?) 2. The automatic refresh is also a maybe for me, it may make more sense to not refresh automatically but instead provide a link with a message, but I'll leave this up to others which make more sense. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21213: [SPARK-24120] Show `Jobs` page when `jobId` is missing

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/21213 Code-wsie I think this looks fine, I'll check it out and test it later. But it seems the Utils file has some old style error that's making the GitHub diff crazy, mind just fixing it in this pr? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20891: [SPARK-23782][CORE][UI] SHS should list only application...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20891 jerry is correct, the community rejected a similar pr in the past --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20570: [spark-23382][WEB-UI]Spark Streaming ui about the...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/20570#discussion_r167770721

--- Diff: core/src/main/resources/org/apache/spark/ui/static/webui.js ---

@@ -80,4 +80,6 @@ $(function() {

collapseTablePageLoad('collapse-aggregated-poolActiveStages','aggregated-poolActiveStages');

collapseTablePageLoad('collapse-aggregated-tasks','aggregated-tasks');

collapseTablePageLoad('collapse-aggregated-rdds','aggregated-rdds');

+

collapseTablePageLoad('collapse-aggregated-activeBatches','aggregated-activeBatches');

--- End diff --

This function just makes sure to persist collapsed tables on page reload

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20570: [spark-23382][WEB-UI]Spark Streaming ui about the...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/20570#discussion_r167668944

--- Diff: core/src/main/resources/org/apache/spark/ui/static/webui.js ---

@@ -80,4 +80,6 @@ $(function() {

collapseTablePageLoad('collapse-aggregated-poolActiveStages','aggregated-poolActiveStages');

collapseTablePageLoad('collapse-aggregated-tasks','aggregated-tasks');

collapseTablePageLoad('collapse-aggregated-rdds','aggregated-rdds');

+

collapseTablePageLoad('collapse-aggregated-activeBatches','aggregated-activeBatches');

--- End diff --

I would agree there's no reason to, but this simply enables user to if they

want. And I would prefer the uniform look and feel of have all tables

collapsible like the other pages.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20408: [SPARK-23189][Core][Web UI] Reflect stage level blacklis...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20408 Saw the new screenshots, they look good --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20335: [SPARK-23088][CORE] History server not showing incomplet...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20335 @jiangxb1987 I agree that this is a behavior change, but it's a behavior change back to how it was before we switched the page from scala to js. This LGTM --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20408: [SPARK-23189][Core][Web UI] Reflect stage level blacklis...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20408 I agree that despite being wordy `Active (Blacklisted in Stages: [...])` looks best, could you update it and post screenshots to confirm? And if @squito says the code looks good I trust him (I'm a bit busy atm to do a proper code review). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20408: [SPARK-23189][Core][Web UI] Reflect stage level blacklis...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20408 I'll take a look at the code when I have a moment, but from a UI perspective only have one issue. Having the status of `Blacklisted in Stages: [...]` when an exec is Active could be easily misunderstood. I think it should still say `Active`, though `Active (Blacklisted in Stages: [...])` is a bit wordy so I'm not sure how best to do so. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20203: [SPARK-22577] [core] executor page blacklist status shou...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20203 @squito thanks, I missed the img link and misread ExecutorTable as ExecutorPage. On that note the UI portion of this change LGTM. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20203: [SPARK-22577] [core] executor page blacklist status shou...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20203 From a code pov the UI change looks fine, but could you upload a few screenshots of the change? Also the UI simply says if the exec is blacklisted for the whole app or just a stage, but doesn't specify which stage. Is knowing the stage which blacklisted the node important? If so we should try to raise that to the UI as well. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20216: [SPARK-23024][WEB-UI]Spark ui about the contents of the ...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20216 LGTM now --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20216: [SPARK-23024][WEB-UI]Spark ui about the contents of the ...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20216 Thanks for updating the first one, but I stand by the second. The UI should be consistent and in the previous use of this feature it persisted so it should here, even if it's not necessary every time. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20138: [SPARK-20664][core] Delete stale application data from S...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20138 Ok, no problems here on that front then. If I have time later to do a proper review and this has't been merged yet I'll take better a look at the whole PR --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20138: [SPARK-20664][core] Delete stale application data from S...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20138 I haven't had a chance to read though your code, but as @squito said, I am against any default feature that deletes files from the eventLog dir. Many users, such as myself, use one log dir for both the event log as well as their Spark logs. I believe it is a great feature for most use cases and should be available as a option defaulted to off. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20216: [SPARK-23024][WEB-UI]Spark ui about the contents of the ...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20216 Hiding Tables like this has been done elsewhere in the UI (can't remember where right now though). In that case there's a triangle marker next to the Header that shows that it's collapsable and it also persists across page load. If we decided to do this I think we should emulate that previous example --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20216: [SPARK-23024][WEB-UI]Spark ui about the contents of the ...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20216 Since this is a UI change can you post screenshots, and I'm not 100% convinced on the necessity of this when we already have jump links at the top of most pages. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20013: [SPARK-20657][core] Speed up rendering of the stages pag...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20013 I did a read through, I didn't see any issues from a code standpoint but I haven't spent much time in your new code so another pair of eyes would be good. I also didn't check out and run the code either, if you want me too I can do that tomorrow. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20013: [SPARK-20657][core] Speed up rendering of the stages pag...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/20013 I'll take a look at this either today or tomorrow --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19770: [SPARK-21571][WEB UI] Spark history server leaves incomp...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19770 This look a bit cleaner this time around. Since this is left off by default it LGTM --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19750: [SPARK-20650][core] Remove JobProgressListener.

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19750#discussion_r151288247

--- Diff:

core/src/main/scala/org/apache/spark/deploy/history/FsHistoryProvider.scala ---

@@ -315,48 +315,43 @@ private[history] class FsHistoryProvider(conf:

SparkConf, clock: Clock)

(new InMemoryStore(), true)

}

-val listener = if (needReplay) {

- val _listener = new AppStatusListener(kvstore, conf, false,

+if (needReplay) {

+ val replayBus = new ReplayListenerBus()

--- End diff --

It's still there in this PR though (line 297)

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19750: [SPARK-20650][core] Remove JobProgressListener.

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19750#discussion_r151288302

--- Diff:

core/src/main/scala/org/apache/spark/status/AppStatusListener.scala ---

@@ -563,7 +575,7 @@ private[spark] class AppStatusListener(

/** Flush all live entities' data to the underlying store. */

def flush(): Unit = {

val now = System.nanoTime()

-liveStages.values.foreach { stage =>

+liveStages.values.asScala.foreach { stage =>

--- End diff --

Ah I missed that that class change was scala -> java

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19750: [SPARK-20650][core] Remove JobProgressListener.

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19750#discussion_r151283135

--- Diff:

core/src/main/scala/org/apache/spark/status/AppStatusListener.scala ---

@@ -563,7 +575,7 @@ private[spark] class AppStatusListener(

/** Flush all live entities' data to the underlying store. */

def flush(): Unit = {

val now = System.nanoTime()

-liveStages.values.foreach { stage =>

+liveStages.values.asScala.foreach { stage =>

--- End diff --

Why add `asScala`?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19750: [SPARK-20650][core] Remove JobProgressListener.

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19750#discussion_r151281491

--- Diff: core/src/main/scala/org/apache/spark/SparkStatusTracker.scala ---

@@ -89,39 +83,33 @@ class SparkStatusTracker private[spark] (sc:

SparkContext) {

* garbage collected.

*/

def getStageInfo(stageId: Int): Option[SparkStageInfo] = {

-jobProgressListener.synchronized {

- for (

-info <- jobProgressListener.stageIdToInfo.get(stageId);

-data <- jobProgressListener.stageIdToData.get((stageId,

info.attemptId))

- ) yield {

-new SparkStageInfoImpl(

- stageId,

- info.attemptId,

- info.submissionTime.getOrElse(0),

- info.name,

- info.numTasks,

- data.numActiveTasks,

- data.numCompleteTasks,

- data.numFailedTasks)

- }

+store.asOption(store.lastStageAttempt(stageId)).map { stage =>

+ new SparkStageInfoImpl(

+stageId,

+stage.attemptId,

+stage.submissionTime.map(_.getTime()).getOrElse(0L),

+stage.name,

+stage.numTasks,

+stage.numActiveTasks,

+stage.numCompleteTasks,

+stage.numFailedTasks)

}

}

/**

* Returns information of all known executors, including host, port,

cacheSize, numRunningTasks.

*/

def getExecutorInfos: Array[SparkExecutorInfo] = {

-val executorIdToRunningTasks: Map[String, Int] =

-

sc.taskScheduler.asInstanceOf[TaskSchedulerImpl].runningTasksByExecutors

-

-sc.getExecutorStorageStatus.map { status =>

- val bmId = status.blockManagerId

+store.executorList(true).map { exec =>

+ val (host, port) = exec.hostPort.split(":", 2) match {

+case Array(h, p) => (h, p.toInt)

+case Array(h) => (h, -1)

+ }

new SparkExecutorInfoImpl(

-bmId.host,

-bmId.port,

-status.cacheSize,

-executorIdToRunningTasks.getOrElse(bmId.executorId, 0)

- )

-}

+host,

+port,

+exec.maxMemory,

--- End diff --

Why this change from cacheSize to maxMemory, are they synonymous?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19750: [SPARK-20650][core] Remove JobProgressListener.

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19750#discussion_r151281951

--- Diff: core/src/main/scala/org/apache/spark/TestUtils.scala ---

@@ -232,6 +232,30 @@ private[spark] object TestUtils {

}

}

+ /**

+ * Wait until at least `numExecutors` executors are up, or throw

`TimeoutException` if the waiting

+ * time elapsed before `numExecutors` executors up. Exposed for testing.

+ *

+ * @param numExecutors the number of executors to wait at least

+ * @param timeout time to wait in milliseconds

+ */

+ private[spark] def waitUntilExecutorsUp(

+ sc: SparkContext,

+ numExecutors: Int,

+ timeout: Long): Unit = {

+val finishTime = System.nanoTime() +

TimeUnit.MILLISECONDS.toNanos(timeout)

+while (System.nanoTime() < finishTime) {

+ if (sc.statusTracker.getExecutorInfos.length > numExecutors) {

--- End diff --

Based on your comment `at least` above, shouldn't this be `>=`?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19750: [SPARK-20650][core] Remove JobProgressListener.

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19750#discussion_r151282422

--- Diff:

core/src/main/scala/org/apache/spark/deploy/history/FsHistoryProvider.scala ---

@@ -315,48 +315,43 @@ private[history] class FsHistoryProvider(conf:

SparkConf, clock: Clock)

(new InMemoryStore(), true)

}

-val listener = if (needReplay) {

- val _listener = new AppStatusListener(kvstore, conf, false,

+if (needReplay) {

+ val replayBus = new ReplayListenerBus()

--- End diff --

Doesn't this val already exist?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19640: [SPARK-16986][WEB-UI] Converter Started, Completed and L...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19640 I'm still not 100% convinced this is the best change, but the implementation LGTM. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19640: [SPARK-16986][WEB-UI] Converter Started, Complete...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19640#discussion_r151253170

--- Diff: core/src/main/resources/org/apache/spark/ui/static/utils.js ---

@@ -46,3 +46,31 @@ function formatBytes(bytes, type) {

var i = Math.floor(Math.log(bytes) / Math.log(k));

return parseFloat((bytes / Math.pow(k, i)).toFixed(dm)) + ' ' +

sizes[i];

}

+

+function padZeroes(num) {

+ return ("0" + num).slice(-2);

+}

+

+function formatTimeMillis(timeMillis) {

+ if (timeMillis <= 0) {

+return "-";

+ } else {

+var dt = new Date(timeMillis);

+return dt.getFullYear() + "-" +

+ padZeroes(dt.getMonth() + 1) + "-" +

+ padZeroes(dt.getDate()) + " " +

+ padZeroes(dt.getHours()) + ":" +

+ padZeroes(dt.getMinutes()) + ":" +

+ padZeroes(dt.getSeconds());

+ }

+}

+

+function getTimeZone() {

+ try {

--- End diff --

Spark does not have a list of supported browsers, from my experience most

of us just test new UI changes on whatever browsers we have (In my case the

latest Safari, Chrome and Firefox ESR). Though I'd like to think we don't have

any IE users, we can't make that assumption while IE 11 is still supported by

MS.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19640: [SPARK-16986][WEB-UI] Converter Started, Completed and L...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19640 I'd be ok with that --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19640: [SPARK-16986][WEB-UI] Converter Started, Completed and L...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19640 I would tend to agree with @srowen on this, but from an implication side this is pretty good. I would suggest adding the timezone abbreviation to the end so the user knows it's in local time not GMT like previous. Also it may be better to move both the `padZeros` and `formateDate` functions to `utils.js` since they seem like they be used elsewhere eventually. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19532: [CORE]Modify the duration real-time calculation and upda...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19532 Thanks, if you could update the title and description to match as well. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19532: [CORE]Modify the duration real-time calculation a...

Github user ajbozarth commented on a diff in the pull request: https://github.com/apache/spark/pull/19532#discussion_r147795197 --- Diff: core/src/main/scala/org/apache/spark/ui/SparkUI.scala --- @@ -120,7 +120,7 @@ private[spark] class SparkUI private ( attemptId = None, startTime = new Date(startTime), endTime = new Date(-1), -duration = 0, +duration = System.currentTimeMillis() - startTime, --- End diff -- I'm on the fence on this change, I would tend to agree with @srowen but as @cloud-fan said, the consistency with the UI would make sense. If we did keep this change I thing removing the redundant code would also make sense. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19520: [SPARK-22298][WEB-UI] url encode APP id before generatin...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19520 I'm ok either way but if we add this we should probably make sure we cover this issue anywhere else it may pop up --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19520: [SPARK-22298][WEB-UI] url encode APP id before generatin...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19520 I don't think this is something to "fix" in Spark, having slashes in the appId seems like a generally bad idea and this may not be the only place that it breaks things. Instead it may be better for Nomad to check appIds for slashes and reject them on creation. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19270: [SPARK-21809] : Change Stage Page to use datatables to s...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19270 After reading through Tom and Marcello's comments I'll defer to them on whether this should be merged in or not. I will note that moving from a hard-coded, server-side, scala generated web ui to a dynamic, client-side, js generated web ui utilizing the metrics api is the direction we've been moving the ui for some time, first with the SHS summery page then with the executors page. I still think this is the right direction, but of all the remaining pages to convert this page at the same time has both the most to gain and the most potential to drag down the UI. If you guys think holding off on this until Marcello's work is done and re-optimizing then is better I'll stand by that, and even though I never did heavy testing for speed I'll stand by Tom that this still needs optimization work before merging. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19520: [SPARK-22298][WEB-UI] url encode APP id before generatin...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19520 I'm a bit confused by the issue this is addressing, how do you get an appId with a `/` in it to beh=gin with, last I checked appId formats were hard coded inside Spark --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19507: [WEB-UI] Add count in fair scheduler pool page

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19507 This change for UI consistency is fine by me, but looking at the actually code I'm not sure where the parenthesis in the screen shots come from given all you did was move the number from the front to the end of each header. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r144163298

--- Diff: core/src/main/resources/org/apache/spark/ui/static/stagepage.js

---

@@ -0,0 +1,468 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Stage Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+// This function will only parse the URL under certain format

+// e.g.

https://axonitered-jt1.red.ygrid.yahoo.com:50509/history/application_1502220952225_59143/stages/stage/?id=0&attempt=0

+function stageEndPoint(appId) {

+var words = document.baseURI.split('/');

+var words2 = document.baseURI.split('?');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind + 2).join('/');

+return newBaseURI + "/api/v1/applications/" + appId + "/stages/" +

stageId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+var attemptId = words[ind + 2];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind).join('/');

+if (isNaN(attemptId) || attemptId == "0") {

+return newBaseURI + "/api/v1/applications/" + appId +

"/stages/" + stageId;

+} else {

+return newBaseURI + "/api/v1/applications/" + appId + "/" +

attemptId + "/stages/" + stageId;

+}

+}

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+return location.origin + "/api/v1/applications/" + appId + "/stages/"

+ stageId;

+}

+

+function sortNumber(a,b) {

+return a - b;

+}

+

+function quantile(array, percentile) {

+index = percentile/100. * (array.length-1);

+if (Math.floor(index) == index) {

+ result = array[index];

+} else {

+var i = Math.floor(index);

+fraction = index - i;

+result = array[i];

+}

+return result;

+}

+

+$(document).ready(function () {

+setDataTableDefaults();

+

+$("#showAdditionalMetrics").append(

+"" +

+"" +

+" Show Additional Metrics" +

+"" +

+"" +

+" Select All" +

+" Scheduler Delay" +

+" Task Deserialization Time" +

+" Shuffle Read Blocked Time" +

+" Shuffle Remote Reads" +

+" Result Serialization Time" +

+" Getting Result Time" +

+" Peak Execution Memory" +

+"");

+

+tasksSummary = $("#active-tasks");

+getStandAloneAppId(function (appId) {

+

+var endPoint = stageEndPoint(appId);

+

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r144162168

--- Diff: core/src/main/resources/org/apache/spark/ui/static/stagepage.js

---

@@ -0,0 +1,468 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Stage Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+// This function will only parse the URL under certain format

+// e.g.

https://axonitered-jt1.red.ygrid.yahoo.com:50509/history/application_1502220952225_59143/stages/stage/?id=0&attempt=0

+function stageEndPoint(appId) {

+var words = document.baseURI.split('/');

+var words2 = document.baseURI.split('?');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind + 2).join('/');

+return newBaseURI + "/api/v1/applications/" + appId + "/stages/" +

stageId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+var attemptId = words[ind + 2];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind).join('/');

+if (isNaN(attemptId) || attemptId == "0") {

+return newBaseURI + "/api/v1/applications/" + appId +

"/stages/" + stageId;

+} else {

+return newBaseURI + "/api/v1/applications/" + appId + "/" +

attemptId + "/stages/" + stageId;

+}

+}

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+return location.origin + "/api/v1/applications/" + appId + "/stages/"

+ stageId;

+}

+

+function sortNumber(a,b) {

+return a - b;

+}

+

+function quantile(array, percentile) {

+index = percentile/100. * (array.length-1);

+if (Math.floor(index) == index) {

+ result = array[index];

+} else {

+var i = Math.floor(index);

+fraction = index - i;

+result = array[i];

+}

+return result;

+}

+

+$(document).ready(function () {

+setDataTableDefaults();

+

+$("#showAdditionalMetrics").append(

+"" +

+"" +

+" Show Additional Metrics" +

+"" +

+"" +

+" Select All" +

+" Scheduler Delay" +

+" Task Deserialization Time" +

+" Shuffle Read Blocked Time" +

+" Shuffle Remote Reads" +

+" Result Serialization Time" +

+" Getting Result Time" +

+" Peak Execution Memory" +

+"");

+

+tasksSummary = $("#active-tasks");

+getStandAloneAppId(function (appId) {

+

+var endPoint = stageEndPoint(appId);

+

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r143865206

--- Diff: core/src/main/resources/org/apache/spark/ui/static/stagepage.js

---

@@ -0,0 +1,471 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Stage Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+// This function will only parse the URL under certain formate

+// e.g.

https://axonitered-jt1.red.ygrid.yahoo.com:50509/history/application_1502220952225_59143/stages/stage/?id=0&attempt=0

+function stageEndPoint(appId) {

+var words = document.baseURI.split('/');

+var words2 = document.baseURI.split('?');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind + 2).join('/');

+return newBaseURI + "/api/v1/applications/" + appId + "/stages/" +

stageId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+var attemptId = words[ind + 2];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind).join('/');

+if (isNaN(attemptId) || attemptId == "0") {

+return newBaseURI + "/api/v1/applications/" + appId +

"/stages/" + stageId;

+} else {

+return newBaseURI + "/api/v1/applications/" + appId + "/" +

attemptId + "/stages/" + stageId;

+}

+}

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+return location.origin + "/api/v1/applications/" + appId + "/stages/"

+ stageId;

+}

+

+function sortNumber(a,b) {

+return a - b;

+}

+

+function quantile(array, percentile) {

+index = percentile/100. * (array.length-1);

+if (Math.floor(index) == index) {

+ result = array[index];

+} else {

+var i = Math.floor(index);

+fraction = index - i;

+result = array[i];

+}

+return result;

+}

+

+$(document).ready(function () {

+setDataTableDefaults();

+

+$("#showAdditionalMetrics").append(

+"" +

+"" +

+" Show Additional Metrics" +

+"" +

+"" +

+" Select All" +

+" Scheduler Delay" +

+" Task Deserialization Time" +

+" Shuffle Read Blocked Time" +

+" Shuffle Remote Reads" +

+" Result Serialization Time" +

+" Getting Result Time" +

+" Peak Execution Memory" +

+"");

+

+tasksSummary = $("#active-tasks");

+var app_id = getStandAloneAppId();

+buildStageDataTables(app_i

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r143862026

--- Diff: core/src/main/resources/org/apache/spark/ui/static/stagepage.js

---

@@ -0,0 +1,471 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Stage Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+// This function will only parse the URL under certain formate

--- End diff --

typo: `format`

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r143864647

--- Diff: core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala ---

@@ -340,261 +259,19 @@ private[ui] class StagePage(parent: StagesTab)

extends WebUIPage("stage") {

// Excludes tasks which failed and have incomplete metrics

val validTasks = tasks.filter(t => t.taskInfo.status == "SUCCESS" &&

t.metrics.isDefined)

- val summaryTable: Option[Seq[Node]] =

-if (validTasks.isEmpty) {

- None

-}

-else {

- def getDistributionQuantiles(data: Seq[Double]):

IndexedSeq[Double] =

-Distribution(data).get.getQuantiles()

- def getFormattedTimeQuantiles(times: Seq[Double]): Seq[Node] = {

-getDistributionQuantiles(times).map { millis =>

- {UIUtils.formatDuration(millis.toLong)}

-}

- }

- def getFormattedSizeQuantiles(data: Seq[Double]): Seq[Elem] = {

-getDistributionQuantiles(data).map(d =>

{Utils.bytesToString(d.toLong)})

- }

-

- val deserializationTimes = validTasks.map { taskUIData:

TaskUIData =>

-taskUIData.metrics.get.executorDeserializeTime.toDouble

- }

- val deserializationQuantiles =

-

-

-Task Deserialization Time

-

- +: getFormattedTimeQuantiles(deserializationTimes)

-

- val serviceTimes = validTasks.map { taskUIData: TaskUIData =>

-taskUIData.metrics.get.executorRunTime.toDouble

- }

- val serviceQuantiles = Duration +:

getFormattedTimeQuantiles(serviceTimes)

-

- val gcTimes = validTasks.map { taskUIData: TaskUIData =>

-taskUIData.metrics.get.jvmGCTime.toDouble

- }

- val gcQuantiles =

-

- GC Time

-

- +: getFormattedTimeQuantiles(gcTimes)

-

- val serializationTimes = validTasks.map { taskUIData: TaskUIData

=>

-taskUIData.metrics.get.resultSerializationTime.toDouble

- }

- val serializationQuantiles =

-

-

-Result Serialization Time

-

- +: getFormattedTimeQuantiles(serializationTimes)

-

- val gettingResultTimes = validTasks.map { taskUIData: TaskUIData

=>

-getGettingResultTime(taskUIData.taskInfo, currentTime).toDouble

- }

- val gettingResultQuantiles =

-

-

-Getting Result Time

-

- +:

-getFormattedTimeQuantiles(gettingResultTimes)

-

- val peakExecutionMemory = validTasks.map { taskUIData:

TaskUIData =>

-taskUIData.metrics.get.peakExecutionMemory.toDouble

- }

- val peakExecutionMemoryQuantiles = {

-

-

-Peak Execution Memory

-

- +: getFormattedSizeQuantiles(peakExecutionMemory)

- }

-

- // The scheduler delay includes the network delay to send the

task to the worker

- // machine and to send back the result (but not the time to

fetch the task result,

- // if it needed to be fetched from the block manager on the

worker).

- val schedulerDelays = validTasks.map { taskUIData: TaskUIData =>

-getSchedulerDelay(taskUIData.taskInfo, taskUIData.metrics.get,

currentTime).toDouble

- }

- val schedulerDelayTitle = Scheduler Delay

- val schedulerDelayQuantiles = schedulerDelayTitle +:

-getFormattedTimeQuantiles(schedulerDelays)

- def getFormattedSizeQuantilesWithRecords(data: Seq[Double],

records: Seq[Double])

-: Seq[Elem] = {

-val recordDist = getDistributionQuantiles(records).iterator

-getDistributionQuantiles(data).map(d =>

- {s"${Utils.bytesToString(d.toLong)} /

${recordDist.next().toLong}"}

-)

- }

-

- val inputSizes = validTasks.map { taskUIData: TaskUIData =>

-taskUIData.metrics.get.inputMetrics.bytesRead.toDouble

- }

-

- val inputRecords = validTasks.map { taskUIData: TaskUIData =>

-taskUIData.metrics.get.inputMetrics.recordsRead.toDouble

- }

-

- val inputQuantiles = Input Size / Records +:

-getFormattedSizeQuanti

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r143861205

--- Diff: core/src/main/resources/org/apache/spark/ui/static/utils.js ---

@@ -46,3 +46,84 @@ function formatBytes(bytes, type) {

var i = Math.floor(Math.log(bytes) / Math.log(k));

return parseFloat((bytes / Math.pow(k, i)).toFixed(dm)) + ' ' +

sizes[i];

}

+

+function formatLogsCells(execLogs, type) {

+if (type !== 'display') return Object.keys(execLogs);

+if (!execLogs) return;

+var result = '';

+$.each(execLogs, function (logName, logUrl) {

+result += '' + logName + ''

+});

+return result;

+}

+

+function getStandAloneAppId() {

+var words = document.baseURI.split('/');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+return appId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+return appId;

+}

+//Looks like Web UI is running in standalone mode

+//Let's get application-id using REST End Point

+$.getJSON(location.origin + "/api/v1/applications", function(response,

status, jqXHR) {

+if (response && response.length > 0) {

+var appId = response[0].id

+return appId;

--- End diff --

Since this is inside a getJSON this value will never return. This line here

is why this function took in a function before. So we'll need to change it back.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r143861262

--- Diff: core/src/main/resources/org/apache/spark/ui/static/utils.js ---

@@ -46,3 +46,84 @@ function formatBytes(bytes, type) {

var i = Math.floor(Math.log(bytes) / Math.log(k));

return parseFloat((bytes / Math.pow(k, i)).toFixed(dm)) + ' ' +

sizes[i];

}

+

+function formatLogsCells(execLogs, type) {

+if (type !== 'display') return Object.keys(execLogs);

+if (!execLogs) return;

+var result = '';

+$.each(execLogs, function (logName, logUrl) {

+result += '' + logName + ''

+});

+return result;

+}

+

+function getStandAloneAppId() {

+var words = document.baseURI.split('/');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+return appId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+return appId;

+}

+//Looks like Web UI is running in standalone mode

+//Let's get application-id using REST End Point

+$.getJSON(location.origin + "/api/v1/applications", function(response,

status, jqXHR) {

+if (response && response.length > 0) {

+var appId = response[0].id

--- End diff --

missing `;`

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19399: [SPARK-22175][WEB-UI] Add status column to history page

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19399 With @jerryshao comments I'm going to get off the fence firmly against this, we already have too many things slowing down the SHS as it is --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

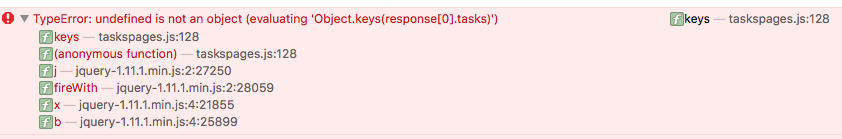

[GitHub] spark issue #19270: [SPARK-21809] : Change Stage Page to use datatables to s...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19270 So I think I know why the appId was handled the way it was, the live app ui no longer works because the appId var is "undefined" in all the api calls --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19270: [SPARK-21809] : Change Stage Page to use datatables to s...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19270 Also could you update the description with new (and more) screen shots? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19270: [SPARK-21809] : Change Stage Page to use datatables to s...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19270 Thanks, I'll take a look later today --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r143287894

--- Diff: core/src/main/resources/org/apache/spark/ui/static/utils.js ---

@@ -46,3 +46,64 @@ function formatBytes(bytes, type) {

var i = Math.floor(Math.log(bytes) / Math.log(k));

return parseFloat((bytes / Math.pow(k, i)).toFixed(dm)) + ' ' +

sizes[i];

}

+

+function formatLogsCells(execLogs, type) {

+if (type !== 'display') return Object.keys(execLogs);

+if (!execLogs) return;

+var result = '';

+$.each(execLogs, function (logName, logUrl) {

+result += '' + logName + ''

+});

+return result;

+}

+

+function getStandAloneAppId(cb) {

--- End diff --

exactly, I'm still not sure why it was implemented this way in the first

place

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19270: [SPARK-21809] : Change Stage Page to use datatables to s...

Github user ajbozarth commented on the issue: https://github.com/apache/spark/pull/19270 Thanks, I must've missed that in the description. You've take care of all but my last comment, I'm not seeing the accumulators table, have you checked it shows up when theres accumulators? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r143286538

--- Diff: core/src/main/resources/org/apache/spark/ui/static/utils.js ---

@@ -46,3 +46,64 @@ function formatBytes(bytes, type) {

var i = Math.floor(Math.log(bytes) / Math.log(k));

return parseFloat((bytes / Math.pow(k, i)).toFixed(dm)) + ' ' +

sizes[i];

}

+

+function formatLogsCells(execLogs, type) {

+if (type !== 'display') return Object.keys(execLogs);

+if (!execLogs) return;

+var result = '';

+$.each(execLogs, function (logName, logUrl) {

+result += '' + logName + ''

+});

+return result;

+}

+

+function getStandAloneAppId(cb) {

--- End diff --

I was thinking it should return the appId rather than take in a function as

a param

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r142782246

--- Diff: core/src/main/resources/org/apache/spark/ui/static/taskspages.js

---

@@ -0,0 +1,474 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Tasks Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+function createTemplateURI(appId) {

+var words = document.baseURI.split('/');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var baseURI = words.slice(0, ind + 1).join('/') + '/' + appId +

'/static/stagespage-template.html';

+return baseURI;

+}

+ind = words.indexOf("history");

+if(ind > 0) {

+var baseURI = words.slice(0, ind).join('/') +

'/static/stagespage-template.html';

+return baseURI;

+}

+return location.origin + "/static/stagespage-template.html";

+}

+

+// This function will only parse the URL under certain formate

+// e.g.

https://axonitered-jt1.red.ygrid.yahoo.com:50509/history/application_1502220952225_59143/stages/stage/?id=0&attempt=0

+function StageEndPoint(appId) {

+var words = document.baseURI.split('/');

+var words2 = document.baseURI.split('?');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind + 2).join('/');

+return newBaseURI + "/api/v1/applications/" + appId + "/stages/" +

stageId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+var attemptId = words[ind + 2];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind).join('/');

+if (isNaN(attemptId) || attemptId == "0") {

+return newBaseURI + "/api/v1/applications/" + appId +

"/stages/" + stageId;

+} else {

+return newBaseURI + "/api/v1/applications/" + appId + "/" +

attemptId + "/stages/" + stageId;

+}

+}

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+return location.origin + "/api/v1/applications/" + appId + "/stages/"

+ stageId;

+}

+

+function sortNumber(a,b) {

+return a - b;

+}

+

+function quantile(array, percentile) {

+index = percentile/100. * (array.length-1);

+if (Math.floor(index) == index) {

+ result = array[index];

+} else {

+var i = Math.floor(index);

+fraction = index - i;

+result = array[i];

+}

+return result;

+}

+

+$(document).ready(function () {

+$.extend($.fn.dataTable.defaults, {

+stateSave: true,

+lengthMenu: [[20, 40, 60, 100, -1], [20, 40, 60, 100, "All"]],

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r142781908

--- Diff: core/src/main/resources/org/apache/spark/ui/static/utils.js ---

@@ -46,3 +46,64 @@ function formatBytes(bytes, type) {

var i = Math.floor(Math.log(bytes) / Math.log(k));

return parseFloat((bytes / Math.pow(k, i)).toFixed(dm)) + ' ' +

sizes[i];

}

+

+function formatLogsCells(execLogs, type) {

+if (type !== 'display') return Object.keys(execLogs);

+if (!execLogs) return;

+var result = '';

+$.each(execLogs, function (logName, logUrl) {

+result += '' + logName + ''

+});

+return result;

+}

+

+function getStandAloneAppId(cb) {

--- End diff --

So I know you just copied this function over, but why doesn't this just

return the appId rather than taking in a function to run on an appId? It seems

to me the later would make more sense. If changing it makes sense to you as

well we should update it here.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r142547222

--- Diff: core/src/main/resources/org/apache/spark/ui/static/taskspages.js

---

@@ -0,0 +1,474 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Tasks Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+function createTemplateURI(appId) {

+var words = document.baseURI.split('/');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var baseURI = words.slice(0, ind + 1).join('/') + '/' + appId +

'/static/stagespage-template.html';

+return baseURI;

+}

+ind = words.indexOf("history");

+if(ind > 0) {

+var baseURI = words.slice(0, ind).join('/') +

'/static/stagespage-template.html';

+return baseURI;

+}

+return location.origin + "/static/stagespage-template.html";

+}

+

+// This function will only parse the URL under certain formate

+// e.g.

https://axonitered-jt1.red.ygrid.yahoo.com:50509/history/application_1502220952225_59143/stages/stage/?id=0&attempt=0

+function StageEndPoint(appId) {

+var words = document.baseURI.split('/');

+var words2 = document.baseURI.split('?');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind + 2).join('/');

+return newBaseURI + "/api/v1/applications/" + appId + "/stages/" +

stageId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+var attemptId = words[ind + 2];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind).join('/');

+if (isNaN(attemptId) || attemptId == "0") {

+return newBaseURI + "/api/v1/applications/" + appId +

"/stages/" + stageId;

+} else {

+return newBaseURI + "/api/v1/applications/" + appId + "/" +

attemptId + "/stages/" + stageId;

+}

+}

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+return location.origin + "/api/v1/applications/" + appId + "/stages/"

+ stageId;

+}

+

+function sortNumber(a,b) {

+return a - b;

+}

+

+function quantile(array, percentile) {

+index = percentile/100. * (array.length-1);

+if (Math.floor(index) == index) {

+ result = array[index];

+} else {

+var i = Math.floor(index);

+fraction = index - i;

+result = array[i];

+}

+return result;

+}

+

+$(document).ready(function () {

+$.extend($.fn.dataTable.defaults, {

--- End diff --

this might also be worth moving to `utils.js` since we set the same

defaults on all our pages

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r142546728

--- Diff: core/src/main/resources/org/apache/spark/ui/static/taskspages.js

---

@@ -0,0 +1,474 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Tasks Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+function createTemplateURI(appId) {

+var words = document.baseURI.split('/');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var baseURI = words.slice(0, ind + 1).join('/') + '/' + appId +

'/static/stagespage-template.html';

+return baseURI;

+}

+ind = words.indexOf("history");

+if(ind > 0) {

+var baseURI = words.slice(0, ind).join('/') +

'/static/stagespage-template.html';

+return baseURI;

+}

+return location.origin + "/static/stagespage-template.html";

+}

+

+// This function will only parse the URL under certain formate

+// e.g.

https://axonitered-jt1.red.ygrid.yahoo.com:50509/history/application_1502220952225_59143/stages/stage/?id=0&attempt=0

+function StageEndPoint(appId) {

+var words = document.baseURI.split('/');

+var words2 = document.baseURI.split('?');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind + 2).join('/');

+return newBaseURI + "/api/v1/applications/" + appId + "/stages/" +

stageId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+var attemptId = words[ind + 2];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind).join('/');

+if (isNaN(attemptId) || attemptId == "0") {

+return newBaseURI + "/api/v1/applications/" + appId +

"/stages/" + stageId;

+} else {

+return newBaseURI + "/api/v1/applications/" + appId + "/" +

attemptId + "/stages/" + stageId;

+}

+}

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

--- End diff --

iiuc this doesn't account for anchors in the url (`#tasks-section`), I'd

look into some url parsing js functions libs, I've seen some good ones before.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r142539880

--- Diff:

core/src/main/scala/org/apache/spark/status/api/v1/AllStagesResource.scala ---

@@ -138,21 +155,61 @@ private[v1] object AllStagesResource {

}

}

- def convertTaskData(uiData: TaskUIData, lastUpdateTime: Option[Long]):

TaskData = {

+ private def getGettingResultTime(info: TaskInfo, currentTime: Long):

Long = {

--- End diff --

`currentTime` is never used

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r142546939

--- Diff: core/src/main/resources/org/apache/spark/ui/static/taskspages.js

---

@@ -0,0 +1,474 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Tasks Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+function createTemplateURI(appId) {

+var words = document.baseURI.split('/');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var baseURI = words.slice(0, ind + 1).join('/') + '/' + appId +

'/static/stagespage-template.html';

+return baseURI;

+}

+ind = words.indexOf("history");

+if(ind > 0) {

+var baseURI = words.slice(0, ind).join('/') +

'/static/stagespage-template.html';

+return baseURI;

+}

+return location.origin + "/static/stagespage-template.html";

+}

+

+// This function will only parse the URL under certain formate

+// e.g.

https://axonitered-jt1.red.ygrid.yahoo.com:50509/history/application_1502220952225_59143/stages/stage/?id=0&attempt=0

+function StageEndPoint(appId) {

+var words = document.baseURI.split('/');

+var words2 = document.baseURI.split('?');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var appId = words[ind + 1];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind + 2).join('/');

+return newBaseURI + "/api/v1/applications/" + appId + "/stages/" +

stageId;

+}

+ind = words.indexOf("history");

+if (ind > 0) {

+var appId = words[ind + 1];

+var attemptId = words[ind + 2];

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

+var newBaseURI = words.slice(0, ind).join('/');

+if (isNaN(attemptId) || attemptId == "0") {

+return newBaseURI + "/api/v1/applications/" + appId +

"/stages/" + stageId;

+} else {

+return newBaseURI + "/api/v1/applications/" + appId + "/" +

attemptId + "/stages/" + stageId;

+}

+}

+var stageIdLen = words2[1].indexOf('&');

+var stageId = words2[1].substr(3, stageIdLen - 3);

--- End diff --

Also I'm not quite sure what the `3`s are doing here

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r142545431

--- Diff: core/src/main/resources/org/apache/spark/ui/static/taskspages.js

---

@@ -0,0 +1,474 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Tasks Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? 1 : ((a > b) ? -1 : 0));

+}

+} );

+

+function createTemplateURI(appId) {

+var words = document.baseURI.split('/');

+var ind = words.indexOf("proxy");

+if (ind > 0) {

+var baseURI = words.slice(0, ind + 1).join('/') + '/' + appId +

'/static/stagespage-template.html';

+return baseURI;

+}

+ind = words.indexOf("history");

+if(ind > 0) {

+var baseURI = words.slice(0, ind).join('/') +

'/static/stagespage-template.html';

+return baseURI;

+}

+return location.origin + "/static/stagespage-template.html";

+}

+

+// This function will only parse the URL under certain formate

+// e.g.

https://axonitered-jt1.red.ygrid.yahoo.com:50509/history/application_1502220952225_59143/stages/stage/?id=0&attempt=0

+function StageEndPoint(appId) {

--- End diff --

style: `stageEndPoint`

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19270: [SPARK-21809] : Change Stage Page to use datatabl...

Github user ajbozarth commented on a diff in the pull request:

https://github.com/apache/spark/pull/19270#discussion_r142547431

--- Diff: core/src/main/resources/org/apache/spark/ui/static/taskspages.js

---

@@ -0,0 +1,474 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+$(document).ajaxStop($.unblockUI);

+$(document).ajaxStart(function () {

+$.blockUI({message: 'Loading Tasks Page...'});

+});

+

+$.extend( $.fn.dataTable.ext.type.order, {

+"file-size-pre": ConvertDurationString,

+

+"file-size-asc": function ( a, b ) {

+a = ConvertDurationString( a );

+b = ConvertDurationString( b );

+return ((a < b) ? -1 : ((a > b) ? 1 : 0));

+},

+

+"file-size-desc": function ( a, b ) {