[GitHub] spark issue #23144: [SPARK-26172][ML][WIP] Unify String Params' case-insensi...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/23144 Using an optional `normalize` function argument may be OK; I will give it a try. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #23144: [SPARK-26172][ML][WIP] Unify String Params' case-insensi...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/23144 @srowen To adopt an optional `normalize` function argument, we may need to create a new class `StringParam` and add the argument to it. But this would be a breaking change, since existing string params are of type `Param[String]`.
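To make the `normalize` idea from this thread concrete, here is a minimal sketch in plain Scala. It does not use Spark: `StringParam` below is a hypothetical stand-in rather than Spark's `Param[String]`, and the names `getNormalized` and `lower` are assumptions introduced only for illustration. It shows one way a getter could return the caller's original value while internal code matches on a normalized form.

```scala
import java.util.Locale

object StringParamSketch {
  // Hypothetical minimal stand-in for a string param; NOT Spark's Param[String].
  final class StringParam(
      val name: String,
      isValid: String => Boolean,
      normalize: String => String = identity) {

    private var raw: Option[String] = None

    // Validation runs on the normalized value, but the raw value is what gets stored.
    def set(value: String): this.type = {
      require(isValid(normalize(value)), s"Invalid value for $name: $value")
      raw = Some(value)
      this
    }

    // Returns exactly what the caller passed to `set`.
    def get: String = raw.getOrElse(throw new NoSuchElementException(name))

    // Returns the normalized form that internal code would match on (assumed name).
    def getNormalized: String = normalize(get)
  }

  // Locale-independent lower-casing, usable as the `normalize` argument.
  val lower: String => String = _.toLowerCase(Locale.ROOT)
}
```

With `new StringParam("handleInvalid", v => Set("keep", "error").contains(v), lower)`, calling `set("Keep")` succeeds, `get` returns `"Keep"` and `getNormalized` returns `"keep"`.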

[GitHub] spark issue #23144: [SPARK-26172][ML][WIP] Unify String Params' case-insensi...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/23144 I am not sure about `$$` or `%%`; we can replace them with other names. I want to resolve the confusion around case-insensitivity, and wonder whether a new flag could do this. If we want the getter to return exactly the value passed to the setter, we will need two versions of the getter: one returning the original value, the other returning the lower-/upper-cased value. Another issue is that there is no `StringParam` trait, so we would have to modify the `Param` trait directly.

[GitHub] spark pull request #23122: [MINOR][ML] add missing params to Instr

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/23122#discussion_r236537309

--- Diff: mllib/src/main/scala/org/apache/spark/ml/recommendation/ALS.scala ---
@@ -671,7 +671,7 @@ class ALS(@Since("1.4.0") override val uid: String) extends Estimator[ALSModel]
     instr.logDataset(dataset)
     instr.logParams(this, rank, numUserBlocks, numItemBlocks, implicitPrefs, alpha, userCol,
       itemCol, ratingCol, predictionCol, maxIter, regParam, nonnegative, checkpointInterval,
-      seed, intermediateStorageLevel, finalStorageLevel)
+      seed, intermediateStorageLevel, finalStorageLevel, coldStartStrategy)
--- End diff --

Yes, we could leave coldStartStrategy alone. BTW, I did a quick scan and found that some algorithms do not log the columns.


[GitHub] spark pull request #23144: [SPARK-26172][ML][WIP] Unify String Params' case-...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/23144

[SPARK-26172][ML][WIP] Unify String Params' case-insensitivity in ML

## What changes were proposed in this pull request?

1. Methods `lowerCaseInArray` and `upperCaseInArray` are created in `ParamValidators` to check case-insensitivity.
2. Methods `$$(param: Param[String])` and `%%(param: Param[String])` are created in trait `Params` to conveniently lower-/upper-case the param value.
3. In `SharedParamsCodeGen`, `handleInvalid` and `distanceMeasure` are updated to use `lowerCaseInArray`.
4. String params (except column names) in ml are made case-insensitive.

## How was this patch tested?

Updated suites.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark case_insensitive_params

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/23144.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #23144

commit 85a5fcb5d7d20b3adc97c59b726c941ef071d5a6
Author: zhengruifeng
Date: 2018-11-05T10:33:34Z

    init

commit e8f0cb40a822c124d7bba03765064bba8725315b
Author: zhengruifeng
Date: 2018-11-22T10:20:13Z

    fix conflict

commit 8be289ebb5e0118669514e3e2c531623ed698b4e
Author: zhengruifeng
Date: 2018-11-23T03:19:22Z

    use 2418 as lowercase

commit 810d8556beef0a27bd39723c2e5733da1d546621
Author: zhengruifeng
Date: 2018-11-23T03:28:32Z

    update CodeGen

commit e55244afa41e959f99c02f1afbe916fc7d1ffec3
Author: zhengruifeng
Date: 2018-11-26T09:17:18Z

    update suites
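The validator named `lowerCaseInArray` in the pull request description could look roughly like the sketch below. This is an assumption about its shape, not the code from the PR: it is written in plain Scala without Spark, borrows only the name from the description, and assumes the allowed values are already stored in lower case.

```scala
import java.util.Locale

object CaseInsensitiveValidators {
  // Hypothetical helper modeled on the name in the PR description.
  // Accepts a value if its lower-cased form is one of `allowed`
  // (assumed to contain lower-case entries only).
  def lowerCaseInArray(allowed: Array[String]): String => Boolean = {
    (value: String) => allowed.contains(value.toLowerCase(Locale.ROOT))
  }
}
```

For example, `lowerCaseInArray(Array("euclidean", "cosine"))` accepts `"Cosine"` and rejects `"manhattan"`. Lower-casing with `Locale.ROOT` keeps the check independent of the JVM's default locale.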

[GitHub] spark pull request #22991: [SPARK-25989][ML] OneVsRestModel handle empty out...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/22991#discussion_r236110139

--- Diff: mllib/src/main/scala/org/apache/spark/ml/classification/OneVsRest.scala ---
@@ -219,14 +225,20 @@ final class OneVsRestModel private[ml] (
       Vectors.dense(predArray)
     }
-    // output the index of the classifier with highest confidence as prediction
-    val labelUDF = udf { (rawPredictions: Vector) => rawPredictions.argmax.toDouble }
-
-    // output confidence as raw prediction, label and label metadata as prediction
-    aggregatedDataset
-      .withColumn(getRawPredictionCol, rawPredictionUDF(col(accColName)))
-      .withColumn(getPredictionCol, labelUDF(col(getRawPredictionCol)), labelMetadata)
-      .drop(accColName)
+    if (getPredictionCol != "") {
--- End diff --

I had implemented this in another way: `ClassificationModel` updates the output dataset, while I directly returned the output in each `if` clause. I have now updated the code to follow `ClassificationModel`, updating the output columns in each clause, and `withColumns` is used to return the output columns.
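The pattern described in this comment (collect the non-empty output columns clause by clause, then add them in one step) can be sketched in plain Scala as follows. This is only an illustration under stated assumptions: a "row" is modeled as a `Map` from column name to value, `appendOutputs` is a hypothetical name, the raw prediction is assumed to be non-empty, and no Spark API is used.

```scala
import scala.collection.mutable.ArrayBuffer

object OutputColumnsSketch {
  // Hypothetical stand-in for a DataFrame row: column name -> value.
  type Row = Map[String, Any]

  def appendOutputs(
      row: Row,
      rawPredictionCol: String,
      predictionCol: String,
      rawPrediction: Vector[Double]): Row = {
    val names = ArrayBuffer.empty[String]
    val values = ArrayBuffer.empty[Any]

    // Each clause only records a column when its name is non-empty.
    if (rawPredictionCol.nonEmpty) {
      names += rawPredictionCol
      values += rawPrediction
    }
    if (predictionCol.nonEmpty) {
      // Index of the largest raw score, as a Double label.
      names += predictionCol
      values += rawPrediction.indexOf(rawPrediction.max).toDouble
    }

    // Add every collected column in one step, analogous to a single `withColumns` call.
    row ++ names.zip(values)
  }
}
```

Calling `appendOutputs(Map("id" -> 1), "", "prediction", Vector(0.1, 0.7, 0.2))` yields a row with `id` and `prediction` only; the empty raw-prediction column name is skipped.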


[GitHub] spark issue #22991: [SPARK-25989][ML] OneVsRestModel handle empty outputCols...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22991 friendly ping @srowen @jkbradley @MLnick

[GitHub] spark pull request #23100: [SPARK-26133][ML] Remove deprecated OneHotEncoder...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/23100#discussion_r235886910

--- Diff: mllib/src/main/scala/org/apache/spark/ml/feature/OneHotEncoder.scala ---
@@ -17,126 +17,512 @@
 package org.apache.spark.ml.feature
+import org.apache.hadoop.fs.Path
+
+import org.apache.spark.SparkException
 import org.apache.spark.annotation.Since
-import org.apache.spark.ml.Transformer
+import org.apache.spark.ml.{Estimator, Model}
 import org.apache.spark.ml.attribute._
 import org.apache.spark.ml.linalg.Vectors
 import org.apache.spark.ml.param._
-import org.apache.spark.ml.param.shared.{HasInputCol, HasOutputCol}
+import org.apache.spark.ml.param.shared.{HasHandleInvalid, HasInputCols, HasOutputCols}
 import org.apache.spark.ml.util._
 import org.apache.spark.sql.{DataFrame, Dataset}
-import org.apache.spark.sql.functions.{col, udf}
-import org.apache.spark.sql.types.{DoubleType, NumericType, StructType}
+import org.apache.spark.sql.expressions.UserDefinedFunction
+import org.apache.spark.sql.functions.{col, lit, udf}
+import org.apache.spark.sql.types.{DoubleType, StructField, StructType}
+
+/** Private trait for params and common methods for OneHotEncoder and OneHotEncoderModel */
+private[ml] trait OneHotEncoderBase extends Params with HasHandleInvalid
+    with HasInputCols with HasOutputCols {
+
+  /**
+   * Param for how to handle invalid data during transform().
+   * Options are 'keep' (invalid data presented as an extra categorical feature) or
+   * 'error' (throw an error).
+   * Note that this Param is only used during transform; during fitting, invalid data
+   * will result in an error.
+   * Default: "error"
+   * @group param
+   */
+  @Since("2.3.0")
+  override val handleInvalid: Param[String] = new Param[String](this, "handleInvalid",
+    "How to handle invalid data during transform(). " +
+    "Options are 'keep' (invalid data presented as an extra categorical feature) " +
+    "or error (throw an error). Note that this Param is only used during transform; " +
+    "during fitting, invalid data will result in an error.",
+    ParamValidators.inArray(OneHotEncoder.supportedHandleInvalids))
+
+  setDefault(handleInvalid, OneHotEncoder.ERROR_INVALID)
+
+  /**
+   * Whether to drop the last category in the encoded vector (default: true)
+   * @group param
+   */
+  @Since("2.3.0")
+  final val dropLast: BooleanParam =
+    new BooleanParam(this, "dropLast", "whether to drop the last category")
+  setDefault(dropLast -> true)
+
+  /** @group getParam */
+  @Since("2.3.0")
+  def getDropLast: Boolean = $(dropLast)
+
+  protected def validateAndTransformSchema(
+      schema: StructType,
+      dropLast: Boolean,
+      keepInvalid: Boolean): StructType = {
+    val inputColNames = $(inputCols)
+    val outputColNames = $(outputCols)
+
+    require(inputColNames.length == outputColNames.length,
+      s"The number of input columns ${inputColNames.length} must be the same as the number of " +
+        s"output columns ${outputColNames.length}.")
+
+    // Input columns must be NumericType.
+    inputColNames.foreach(SchemaUtils.checkNumericType(schema, _))
+
+    // Prepares output columns with proper attributes by examining input columns.
+    val inputFields = $(inputCols).map(schema(_))
+
+    val outputFields = inputFields.zip(outputColNames).map { case (inputField, outputColName) =>
+      OneHotEncoderCommon.transformOutputColumnSchema(
+        inputField, outputColName, dropLast, keepInvalid)
+    }
+    outputFields.foldLeft(schema) { case (newSchema, outputField) =>
+      SchemaUtils.appendColumn(newSchema, outputField)
+    }
+  }
+}
 /**
  * A one-hot encoder that maps a column of category indices to a column of binary vectors, with
  * at most a single one-value per row that indicates the input category index.
  * For example with 5 categories, an input value of 2.0 would map to an output vector of
  * `[0.0, 0.0, 1.0, 0.0]`.
- * The last category is not included by default (configurable via `OneHotEncoder!.dropLast`
+ * The last category is not included by default (configurable via `dropLast`),
  * because it makes the vector entries sum up to one, and hence linearly dependent.
  * So an input value of 4.0 maps to `[0.0, 0.0, 0.0, 0.0]`.
  *
  * @note This is different from scikit-learn's OneHotEncoder, which keeps all categories.
  * The output vectors are sparse.
  *

[GitHub] spark pull request #23123: [SPARK-26153][ML] GBT & RandomForest avoid unnece...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/23123

[SPARK-26153][ML] GBT & RandomForest avoid unnecessary `first` job to compute `numFeatures`

## What changes were proposed in this pull request?

Use the base models' `numFeatures` instead of a `first` job.

## How was this patch tested?

Existing tests.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark avoid_first_job

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/23123.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #23123

commit 28a0a923703e2d751d409773ec8995bbc731440b
Author: zhengruifeng
Date: 2018-11-23T03:55:01Z

    init

[GitHub] spark pull request #23122: [MINOR][ML] add missing params to Instr

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/23122

[MINOR][ML] add missing params to Instr

## What changes were proposed in this pull request?

Add the following params to instr:

- GBTC: validationTol
- GBTR: validationTol, validationIndicatorCol
- ALS: coldStartStrategy

## How was this patch tested?

Existing tests.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark instr_append_missing_params

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/23122.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #23122

commit de3aa789490e87e44da7a998455c31d03ffe2aa3
Author: zhengruifeng
Date: 2018-11-23T03:41:03Z

    init

[GitHub] spark issue #22974: [SPARK-22450][WIP][Core][MLLib][FollowUp] Safely registe...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 retest this please

[GitHub] spark issue #22974: [SPARK-22450][WIP][Core][MLLib][FollowUp] Safely registe...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 @srowen Yes, this is the problem. I have to register `Param*` before any prediction model, but there are too many anonymous classes in `ParamValidators` and other places, and I have not found an efficient way to list those anonymous ones. So I wonder whether we could continue to register all of them, or only list simple classes like `MultivariateGaussian`?

[GitHub] spark issue #22974: [SPARK-22450][WIP][Core][MLLib][FollowUp] Safely registe...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 retest this please

[GitHub] spark issue #22974: [SPARK-22450][WIP][Core][MLLib][FollowUp] Safely registe...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 retest this please

[GitHub] spark issue #22087: [SPARK-25097][ML] Support prediction on single instance ...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22087 I also exposed GMM's `predictProbability`. Could you please make a final pass? @srowen @felixcheung

[GitHub] spark issue #22974: [SPARK-22450][Core][MLLib][FollowUp] Safely register Mul...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 @srowen I have some spare time, and will work on it.

[GitHub] spark pull request #19927: [SPARK-22737][ML][WIP] OVR transform optimization

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/19927

[GitHub] spark issue #22974: [SPARK-22450][Core][MLLib][FollowUp] Safely register Mul...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 retest this please

[GitHub] spark issue #22975: [SPARK-20156][SQL][ML][FOLLOW-UP] Java String toLowerCas...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22975 retest this please

[GitHub] spark pull request #22991: [SPARK-25989][ML] OneVsRestModel handle empty out...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/22991

[SPARK-25989][ML] OneVsRestModel handle empty outputCols incorrectly

## What changes were proposed in this pull request?

Ignore empty output columns.

## How was this patch tested?

Added tests.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark ovrm_empty_outcol

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22991.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #22991

commit 035362d9ab6d04ff04e3060edd941fdbd0c26222
Author: zhengruifeng
Date: 2018-11-09T07:47:30Z

    lint

[GitHub] spark issue #22975: [SPARK-20156][SQL][ML][FOLLOW-UP] Java String toLowerCas...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22975 retest this please

[GitHub] spark issue #22974: [SPARK-22450][Core][MLLib][FollowUp] Safely register Mul...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 Not all public serializable classes need to be registered. Only those that actually go through serialization/deserialization should be registered; one important group is transformers and prediction models.

[GitHub] spark issue #22974: [SPARK-22450][Core][MLLib][FollowUp] Safely register Mul...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 I am not sure, but maybe all serializable classes need to be registered. Since `MultivariateGaussian` is a public class, I think we need to add it. I also wonder whether a test is needed. If not, I can list all the other public ones in ML in this PR.

[GitHub] spark issue #22974: [SPARK-22450][Core][MLLib][FollowUp] Safely register Mul...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22974 Do you mean the failure in this PR? It was caused by a non-registered field, `BDM[Double]`. `MultivariateGaussian` is used in GMM, so Kryo registration should help performance. As for mllib-local's dependency, that is a separate matter: currently Kryo-registered classes such as `ml.linalg.Vector` and `ml.linalg.Matrix` do not have Kryo tests in their test suites.

[GitHub] spark issue #22974: [SPARK-22450][Core][MLLib][FollowUp] Safely register Mul...

Github user zhengruifeng commented on the issue:

https://github.com/apache/spark/pull/22974

@srowen The existing Kryo registration test suites need to import spark-core:

```
import org.apache.spark.SparkConf
import org.apache.spark.serializer.KryoSerializer

// Fail fast if any serialized class has not been registered with Kryo.
val conf = new SparkConf(false)
conf.set("spark.kryo.registrationRequired", "true")
val ser = new KryoSerializer(conf).newInstance()
```

Since mllib-local does not depend on spark-core, the classes currently in mllib-local do not test Kryo serialization at all. E.g. `mllib.linalg.VectorsSuite` contains `test("kryo class register")`, while `ml.linalg.VectorsSuite` does not.


[GitHub] spark issue #22975: [SPARK-20156][SQL][ML][FOLLOW-UP] Java String toLowerCas...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22975 @srowen Yes, we should keep user input data and column names as they are. Thanks for your explanation!

[GitHub] spark pull request #22975: [SPARK-20156][SQL][ML][FOLLOW-UP] Java String toL...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/22975

[SPARK-20156][SQL][ML][FOLLOW-UP] Java String toLowerCase with Locale.ROOT

## What changes were proposed in this pull request?

Add `Locale.ROOT` to all internal calls to String `toLowerCase` and `toUpperCase`.

## How was this patch tested?

Existing tests.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark Tokenizer_Locale

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22975.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #22975

commit 69fa7e5fb3709a8d04ead77e8316c70782dc271c
Author: zhengruifeng
Date: 2018-11-08T09:31:03Z

    init

commit 898e8260dd834de386120eddf160f7995661b3ff
Author: zhengruifeng
Date: 2018-11-08T09:57:51Z

    init
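The motivation for passing `Locale.ROOT` can be illustrated with a small plain-Scala sketch. It is not taken from the PR; the object and value names are assumptions. It relies on the well-known behaviour of `java.lang.String.toLowerCase(Locale)` under the Turkish locale, where an upper-case `I` maps to a dotless `ı` (U+0131).

```scala
import java.util.Locale

object LocaleRootSketch {
  private val turkish = Locale.forLanguageTag("tr")

  // Locale-sensitive: under Turkish rules 'I' lower-cases to the dotless 'ı' (U+0131).
  val localeSensitive: String = "TITLE".toLowerCase(turkish)

  // Locale-neutral: the result does not depend on the JVM's default locale.
  val localeNeutral: String = "TITLE".toLowerCase(Locale.ROOT)
}
```

Here `localeNeutral` equals `"title"`, while `localeSensitive` does not, so a comparison against a lower-case keyword would fail on a JVM whose default locale is Turkish if the no-argument `toLowerCase` were used.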

[GitHub] spark pull request #22974: [SPARK-22450][Core][MLLib][FollowUp] Safely regis...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/22974

[SPARK-22450][Core][MLLib][FollowUp] Safely register MultivariateGaussian

## What changes were proposed in this pull request?

Register the following classes in Kryo: "org.apache.spark.ml.stat.distribution.MultivariateGaussian", "org.apache.spark.mllib.stat.distribution.MultivariateGaussian"

## How was this patch tested?

Added tests.

Due to the existing module dependency, I cannot import spark-core in mllib-local's test suites, so I did not add a test suite in `org.apache.spark.ml.stat.distribution.MultivariateGaussianSuite`.

I also notice that class `ClusterStats` in `ClusteringEvaluator` is registered in a different way. Should it be modified to be in line with the others in ML? @srowen

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark kryo_MultivariateGaussian

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22974.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #22974

commit 0b9ed17837414b4cd0a788d48be9817723084da2
Author: zhengruifeng
Date: 2018-11-08T08:16:57Z

    init

[GitHub] spark issue #22971: [SPARK-25970][ML] Add Instrumentation to PrefixSpan

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22971 retest this please

[GitHub] spark pull request #22971: [SPARK-25970][ML] Add Instrumentation to PrefixSp...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/22971

[SPARK-25970][ML] Add Instrumentation to PrefixSpan

## What changes were proposed in this pull request?

Add Instrumentation to PrefixSpan.

## How was this patch tested?

Existing tests.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark log_PrefixSpan

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22971.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #22971

commit fd15a57823efc2c8d3c4fa0883452c0e1815bd73
Author: zhengruifeng
Date: 2018-11-08T06:45:36Z

    init

[GitHub] spark issue #22087: [SPARK-25097][ML] Support prediction on single instance ...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22087 Designing a universal prediction model as a super-class sounds good. BTW, I think we can also create a new class `ProbabilisticPredictionModel` (as a subclass of `PredictionModel`), so that soft-clustering models can extend it to expose the method `predictProbability`.
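The class hierarchy proposed in this comment might be sketched as follows. This is a simplified, hypothetical model in plain Scala: the traits below only borrow the names `PredictionModel`, `ProbabilisticPredictionModel` and `predictProbability` from the comment, their signatures are assumptions and differ from Spark's real classes, and `ToySoftClustering` is an invented one-dimensional example.

```scala
object PredictionHierarchySketch {
  // Hypothetical simplified trait; Spark's real PredictionModel has a different signature.
  trait PredictionModel[F] {
    def predict(features: F): Int
  }

  // Proposed subclass: hard predictions are derived from the probabilities.
  trait ProbabilisticPredictionModel[F] extends PredictionModel[F] {
    def predictProbability(features: F): Vector[Double]

    override def predict(features: F): Int = {
      val p = predictProbability(features)
      p.indexOf(p.max) // index of the most probable class/cluster
    }
  }

  // Toy soft-clustering model over 1-D points (invented for illustration):
  // membership weight decays with squared distance to each center.
  final class ToySoftClustering(centers: Vector[Double])
      extends ProbabilisticPredictionModel[Double] {
    def predictProbability(x: Double): Vector[Double] = {
      val weights = centers.map(c => math.exp(-(x - c) * (x - c)))
      val total = weights.sum
      weights.map(_ / total)
    }
  }
}
```

With centers `Vector(0.0, 10.0)`, the point `9.0` is assigned to cluster 1, and its membership probabilities sum to one.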

[GitHub] spark issue #19927: [SPARK-22737][ML][WIP] OVR transform optimization

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/19927 @srowen What do you think about this? The current OVR model's transform is too slow. Thanks.

[GitHub] spark issue #22087: [SPARK-25097][ML] Support prediction on single instance ...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22087 @imatiach-msft Updated according to your comments! Thanks for reviewing!

[GitHub] spark pull request #21561: [SPARK-24555][ML] logNumExamples in KMeans/BiKM/G...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/21561#discussion_r210468639

--- Diff: mllib/src/main/scala/org/apache/spark/mllib/clustering/BisectingKMeans.scala ---
@@ -246,6 +245,16 @@ class BisectingKMeans private (
     new BisectingKMeansModel(root, this.distanceMeasure)
   }
+  /**
+   * Runs the bisecting k-means algorithm.
+   * @param input RDD of vectors
+   * @return model for the bisecting kmeans
+   */
+  @Since("1.6.0")
--- End diff --

`def run(input: RDD[Vector]): BisectingKMeansModel` has been a public API since 1.6, and users can call it.


[GitHub] spark pull request #21561: [SPARK-24555][ML] logNumExamples in KMeans/BiKM/G...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/21561#discussion_r210467653

--- Diff: mllib/src/main/scala/org/apache/spark/mllib/clustering/BisectingKMeans.scala ---
@@ -246,6 +245,16 @@ class BisectingKMeans private (
     new BisectingKMeansModel(root, this.distanceMeasure)
   }
+  /**
+   * Runs the bisecting k-means algorithm.
+   * @param input RDD of vectors
+   * @return model for the bisecting kmeans
+   */
+  @Since("1.6.0")
--- End diff --

This API has existed since 1.6.0, so should we keep the `@Since` annotation?


[GitHub] spark pull request #21561: [SPARK-24555][ML] logNumExamples in KMeans/BiKM/G...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/21561#discussion_r210158840

--- Diff: mllib/src/main/scala/org/apache/spark/mllib/clustering/KMeans.scala ---
@@ -299,7 +299,7 @@ class KMeans private (
       val bcCenters = sc.broadcast(centers)
       // Find the new centers
-      val newCenters = data.mapPartitions { points =>
+      val collected = data.mapPartitions { points =>
--- End diff --

I am neutral on this.


[GitHub] spark issue #22087: [SPARK-25097][ML] Support prediction on single instance ...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/22087 @felixcheung Test suites have been added. Thanks for reviewing!

[GitHub] spark pull request #22087: [SPARK-25097][Support prediction on single instan...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/22087

[SPARK-25097][Support prediction on single instance in KMeans/BiKMeans/GMM] Support prediction on single instance in KMeans/BiKMeans/GMM

## What changes were proposed in this pull request?

Expose method `predict` in KMeans/BiKMeans/GMM.

## How was this patch tested?

NA

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark clu_pre_instance

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22087.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #22087

commit f6cbb46f46f039d6168de382a18018a37e0e3ee7
Author: zhengruifeng
Date: 2018-08-13T06:10:35Z

    init

[GitHub] spark pull request #21561: [SPARK-24555][ML] logNumExamples in KMeans/BiKM/G...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/21561#discussion_r209498032

--- Diff: mllib/src/main/scala/org/apache/spark/mllib/clustering/BisectingKMeans.scala ---
@@ -151,13 +152,9 @@ class BisectingKMeans private (
     this
   }
-  /**
-   * Runs the bisecting k-means algorithm.
-   * @param input RDD of vectors
-   * @return model for the bisecting kmeans
-   */
-  @Since("1.6.0")
-  def run(input: RDD[Vector]): BisectingKMeansModel = {
+
+  private[spark] def run(input: RDD[Vector],
+      instr: Option[Instrumentation]): BisectingKMeansModel = {
--- End diff --

`instrumented` creates a new `Instrumentation`, and `instrumented` is only used in ml. When mllib's implementation is called, the `Instrumentation` is passed in as a parameter, as KMeans does (https://github.com/apache/spark/blob/master/mllib/src/main/scala/org/apache/spark/ml/clustering/KMeans.scala#L362).


[GitHub] spark pull request #21561: [SPARK-24555][ML] logNumExamples in KMeans/BiKM/G...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/21561#discussion_r209496789

--- Diff: mllib/src/main/scala/org/apache/spark/ml/classification/NaiveBayes.scala ---
@@ -157,11 +157,15 @@ class NaiveBayes @Since("1.5.0") (
     instr.logNumFeatures(numFeatures)
     val w = if (!isDefined(weightCol) || $(weightCol).isEmpty) lit(1.0) else col($(weightCol))
+    val countAccum = dataset.sparkSession.sparkContext.longAccumulator
+
     // Aggregates term frequencies per label.
     // TODO: Calling aggregateByKey and collect creates two stages, we can implement something
     // TODO: similar to reduceByKeyLocally to save one stage.
     val aggregated = dataset.select(col($(labelCol)), w, col($(featuresCol))).rdd
-      .map { row => (row.getDouble(0), (row.getDouble(1), row.getAs[Vector](2)))
+      .map { row =>
+        countAccum.add(1L)
--- End diff --

This should work correctly; however, to guarantee correctness, I updated the PR to compute the number without an accumulator.


[GitHub] spark pull request #19084: [SPARK-20711][ML]MultivariateOnlineSummarizer/Sum...

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/19084

[GitHub] spark issue #21563: [SPARK-24557][ML] ClusteringEvaluator support array inpu...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/21563 @mengxr I noticed that you opened a ticket for supporting integer-type labels in ClusteringEvaluator. Would you like to shepherd this PR too?

[GitHub] spark pull request #19186: [SPARK-21972][ML] Add param handlePersistence

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/19186

[GitHub] spark pull request #20918: [SPARK-23805][ML][WIP] Features alg support vecto...

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/20918

[GitHub] spark pull request #18589: [SPARK-16872][ML] Add Gaussian NB

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/18589

[GitHub] spark pull request #18389: [SPARK-14174][ML] Add minibatch kmeans

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/18389

[GitHub] spark issue #21563: [SPARK-24557][ML] ClusteringEvaluator support array inpu...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/21563 retest this please

[GitHub] spark issue #20028: [SPARK-19053][ML]Supporting multiple evaluation metrics ...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20028 LGTM, except for the since annotations.

[GitHub] spark issue #21788: [SPARK-24609][ML][DOC] PySpark/SparkR doc doesn't explai...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/21788 retest this please

[GitHub] spark issue #21563: [SPARK-24557][ML] ClusteringEvaluator support array inpu...

Github user zhengruifeng commented on the issue:

https://github.com/apache/spark/pull/21563

@mgaido91 I am sorry for the force push; I did it to update my git username in this

PR,

since I found that my current PRs were not linked to my account, which made them

troublesome to track.

I also found that `.expr.sql` may not be a good way to get the output column

name:

```

val df = Seq((1,2),(3,4),(1,1),(2,-1),(3,1)).toDF("a","b")

val z = (col("a") + col("b")).as("x").as("z")

scala> z.expr.sql

res15: String = (`a` + `b`) AS `x` AS `z`

```

So I still keep the original method.

@MLnick @mengxr @jkbradley Would you please help review this? Thanks!


[GitHub] spark issue #21788: [SPARK-24609][ML][DOC] PySpark/SparkR doc doesn't explai...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/21788 retest this please

[GitHub] spark issue #21788: [SPARK-24609][ML][DOC] PySpark/SparkR doc doesn't explai...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/21788 @felixcheung I had to force push in order to change the git username. I will look into what happened.

[GitHub] spark issue #21792: [SPARK-23231][ML][DOC] Add doc for string indexer orderi...

Github user zhengruifeng commented on the issue:

https://github.com/apache/spark/pull/21792

@srowen I think we need to update the docs:

1. The current doc in `StringIndexer` is somewhat misleading: "The indices are in `[0, numLabels)`, ordered by label frequencies, so the most frequent label gets index `0`." This is true only with the default ordering type.

2. In RFormula, `stringOrderType` only affects feature columns, not the label column. This needs to be emphasized, since it is somewhat unexpected.

@MLnick your thoughts?
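To illustrate why the quoted doc sentence only holds for the default ordering, here is a minimal plain-Scala sketch. It is not Spark's implementation: `indexLabels` is a hypothetical helper, the ordering names are assumed to mirror the `stringOrderType` values (`frequencyDesc`, `frequencyAsc`, `alphabetDesc`, `alphabetAsc`), and the tie-breaking rule (by label) is chosen here only to keep the result deterministic.

```scala
object LabelOrderingSketch {
  // Hypothetical helper, not Spark API: assigns each distinct label an index
  // in [0, numLabels) according to the requested ordering.
  def indexLabels(labels: Seq[String], orderType: String): Map[String, Int] = {
    val counts: Seq[(String, Int)] =
      labels.groupBy(identity).map { case (label, ls) => (label, ls.size) }.toSeq
    val ordered = orderType match {
      // Most frequent label first; ties broken alphabetically (an assumption).
      case "frequencyDesc" => counts.sortBy { case (label, c) => (-c, label) }
      case "frequencyAsc"  => counts.sortBy { case (label, c) => (c, label) }
      case "alphabetDesc"  => counts.sortBy(_._1).reverse
      case "alphabetAsc"   => counts.sortBy(_._1)
      case other => throw new IllegalArgumentException(s"Unknown ordering: $other")
    }
    ordered.map(_._1).zipWithIndex.toMap
  }

  def main(args: Array[String]): Unit = {
    val labels = Seq("a", "b", "b", "c", "c", "c")
    println(indexLabels(labels, "frequencyDesc"))
    println(indexLabels(labels, "alphabetAsc"))
  }
}
```

With the frequency-descending ordering the most frequent label `c` gets index 0, while with the ascending alphabetical ordering index 0 goes to `a`, so "the most frequent label gets index `0`" is not true in general.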

[GitHub] spark pull request #21792: [SPARK-23231][ML][DOC] Add doc for string indexer...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/21792

[SPARK-23231][ML][DOC] Add doc for string indexer ordering to user guide (also to RFormula guide)

## What changes were proposed in this pull request?

add doc for string indexer ordering

## How was this patch tested?

existing tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark doc_string_indexer_ordering

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21792.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #21792

commit e93c2cefd60383f4dde085d4c668a462bdbda895
Author: zhengruifeng3
Date: 2018-07-17T10:43:05Z

init

commit febd66fb6bf5bff5ce377bd5f2899d10d7da6ccc
Author: zhengruifeng3
Date: 2018-07-17T10:58:25Z

add ordering example

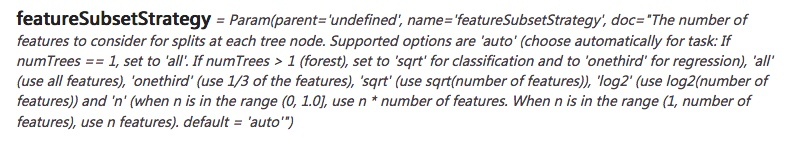

[GitHub] spark pull request #21788: [SPARK-24609][ML][DOC] PySpark/SparkR doc doesn't...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/21788

[SPARK-24609][ML][DOC] PySpark/SparkR doc doesn't explain RandomForestClassifier.featureSubsetStrategy well

## What changes were proposed in this pull request?

update doc of RandomForestClassifier.featureSubsetStrategy

## How was this patch tested?

local built doc (rdoc and pydoc)

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark rf_doc_py_r

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21788.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #21788

commit b53d14f129bdce0f7a4b6495edb86e515c18a162
Author: zhengruifeng3
Date: 2018-07-17T09:08:13Z

init

[GitHub] spark issue #21562: [Trivial][ML] GMM unpersist RDD after training

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/21562 @felixcheung Would you mind making a final pass? Thanks!

[GitHub] spark pull request #21563: [SPARK-24557][ML] ClusteringEvaluator support arr...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/21563#discussion_r197600500

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/evaluation/ClusteringEvaluator.scala

---

@@ -107,15 +106,18 @@ class ClusteringEvaluator @Since("2.3.0")

(@Since("2.3.0") override val uid: Str

@Since("2.3.0")

override def evaluate(dataset: Dataset[_]): Double = {

-SchemaUtils.checkColumnType(dataset.schema, $(featuresCol), new

VectorUDT)

+SchemaUtils.validateVectorCompatibleColumn(dataset.schema,

$(featuresCol))

SchemaUtils.checkNumericType(dataset.schema, $(predictionCol))

+val vectorCol = DatasetUtils.columnToVector(dataset, $(featuresCol))

+val df = dataset.select(col($(predictionCol)),

--- End diff --

@mgaido91 I think it may be nice to first add a name getter for columns.


[GitHub] spark pull request #21563: [SPARK-24557][ML] ClusteringEvaluator support arr...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/21563#discussion_r195618344

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/evaluation/ClusteringEvaluator.scala

---

@@ -107,15 +106,18 @@ class ClusteringEvaluator @Since("2.3.0")

(@Since("2.3.0") override val uid: Str

@Since("2.3.0")

override def evaluate(dataset: Dataset[_]): Double = {

-SchemaUtils.checkColumnType(dataset.schema, $(featuresCol), new

VectorUDT)

+SchemaUtils.validateVectorCompatibleColumn(dataset.schema,

$(featuresCol))

SchemaUtils.checkNumericType(dataset.schema, $(predictionCol))

+val vectorCol = DatasetUtils.columnToVector(dataset, $(featuresCol))

+val df = dataset.select(col($(predictionCol)),

--- End diff --

@mgaido91 Thanks for reviewing!

I have considered this; however, there is a problem:

if we want to attach metadata to the transformed column (for example with the

method `.as(alias: String, metadata: Metadata)`) in

`DatasetUtils.columnToVector`, how can we get the name of the transformed column?

The only way I know to do this is:

```

val metadata = ...

val vectorCol = ...

val vectorName = dataset.select(vectorCol).schema.head.name

vectorCol.as(vectorName, metadata)

```


[GitHub] spark issue #16171: [SPARK-18739][ML][PYSPARK] Classification and regression...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/16171 This PR is out of date, so I will close it.

[GitHub] spark pull request #16171: [SPARK-18739][ML][PYSPARK] Classification and reg...

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/16171

[GitHub] spark pull request #16763: [SPARK-19422][ML][WIP] Cache input data in algori...

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/16763

[GitHub] spark issue #16763: [SPARK-19422][ML][WIP] Cache input data in algorithms

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/16763 This PR is out of date. I will close it.

[GitHub] spark issue #19084: [SPARK-20711][ML]MultivariateOnlineSummarizer/Summarizer...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/19084 @srowen Could you please give a final review? Thanks

[GitHub] spark issue #19927: [SPARK-22737][ML][WIP] OVR transform optimization

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/19927 @mengxr @holdenk What do you think about this? Thanks.

[GitHub] spark pull request #21563: [SPARK-24557][ML] ClusteringEvaluator support arr...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/21563

[SPARK-24557][ML] ClusteringEvaluator support array input

## What changes were proposed in this pull request?

ClusteringEvaluator support array input

## How was this patch tested?

added tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark clu_eval_support_array

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21563.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #21563

commit b126bd4f410ab4a01bbe7a980042704ea7420c6f
Author: 郑瑞峰
Date: 2018-06-14T08:15:43Z

init pr

[GitHub] spark pull request #21562: [Trivial][ML] GMM unpersist RDD after training

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/21562

[Trivial][ML] GMM unpersist RDD after training

## What changes were proposed in this pull request?

unpersist `instances` after training

## How was this patch tested?

existing tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark gmm_unpersist

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21562.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #21562

commit 1945ff4adf3423b324c02e7b7f799cb137a385fb
Author: 郑瑞峰
Date: 2018-06-14T03:46:13Z

init pr

[GitHub] spark pull request #18154: [SPARK-20932][ML]CountVectorizer support handle p...

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/18154

[GitHub] spark issue #18154: [SPARK-20932][ML]CountVectorizer support handle persiste...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/18154 This PR is out of date. I will close it.

[GitHub] spark pull request #20164: [SPARK-22971][ML] OneVsRestModel should use tempo...

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/20164

[GitHub] spark issue #20164: [SPARK-22971][ML] OneVsRestModel should use temporary Ra...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20164 This PR is out of date, so I will close it.

[GitHub] spark pull request #21561: [SPARK-24555][ML] logNumExamples in KMeans/BiKM/G...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/21561

[SPARK-24555][ML] logNumExamples in KMeans/BiKM/GMM/AFT/NB

## What changes were proposed in this pull request?

logNumExamples in KMeans/BiKM/GMM/AFT/NB

## How was this patch tested?

existing tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark alg_logNumExamples

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21561.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #21561

commit ec2171d77456554961558028c654293bea159cc7
Author: 郑瑞峰
Date: 2018-06-14T06:15:27Z

init pr

commit 6ec59d2c2f61ebf05136660388b6887c9d452aca
Author: 郑瑞峰
Date: 2018-06-14T06:50:42Z

add bikm

commit 61b95a35ecea4ae21e95fb8370bc4b4525370435
Author: 郑瑞峰
Date: 2018-06-14T07:00:12Z

_

[GitHub] spark issue #19927: [SPARK-22737][ML][WIP] OVR transform optimization

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/19927 @MLnick @jkbradley What are your thoughts?

[GitHub] spark pull request #19381: [SPARK-10884][ML] Support prediction on single in...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/19381#discussion_r180997645

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/classification/Classifier.scala ---

@@ -192,12 +192,12 @@ abstract class ClassificationModel[FeaturesType, M <:

ClassificationModel[Featur

/**

* Predict label for the given features.

- * This internal method is used to implement `transform()` and output

[[predictionCol]].

+ * This method is used to implement `transform()` and output

[[predictionCol]].

*

* This default implementation for classification predicts the index of

the maximum value

* from `predictRaw()`.

*/

- override protected def predict(features: FeaturesType): Double = {

+ override def predict(features: FeaturesType): Double = {

--- End diff --

Is a `@Since` annotation needed here? @WeichenXu123


[GitHub] spark issue #20956: [SPARK-23841][ML] NodeIdCache should unpersist the last ...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20956 @srowen Could you please help review this? Thanks in advance

[GitHub] spark pull request #20956: [SPARK-23841][ML] NodeIdCache should unpersist th...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/20956#discussion_r180064831

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/tree/impl/NodeIdCache.scala ---

@@ -166,9 +166,13 @@ private[spark] class NodeIdCache(

}

}

}

+if (nodeIdsForInstances != null) {

+ // Unpersist current one if one exists.

+ nodeIdsForInstances.unpersist(false)

+}

if (prevNodeIdsForInstances != null) {

// Unpersist the previous one if one exists.

- prevNodeIdsForInstances.unpersist()

+ prevNodeIdsForInstances.unpersist(false)

--- End diff --

For now `deleteAllCheckpoints` is only called once in the whole of MLlib, and the

current `unpersist` of `prevNodeIdsForInstances` is in it. So I think we do not

need to implement another method to unpersist datasets (like `PeriodicCheckpointer`).


[GitHub] spark pull request #20956: [SPARK-23841][ML] NodeIdCache should unpersist th...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/20956#discussion_r180063562

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/tree/impl/NodeIdCache.scala ---

@@ -95,7 +95,7 @@ private[spark] class NodeIdCache(

splits: Array[Array[Split]]): Unit = {

if (prevNodeIdsForInstances != null) {

// Unpersist the previous one if one exists.

- prevNodeIdsForInstances.unpersist()

+ prevNodeIdsForInstances.unpersist(false)

--- End diff --

This is not required, but it is usually safe to unpersist without blocking

in MLLIB.


[GitHub] spark pull request #20956: [SPARK-23841][ML] NodeIdCache should unpersist th...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/20956

[SPARK-23841][ML] NodeIdCache should unpersist the last cached nodeIdsForInstances

## What changes were proposed in this pull request?

unpersist the last cached nodeIdsForInstances in `deleteAllCheckpoints`

## How was this patch tested?

existing tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark NodeIdCache_cleanup

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20956.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20956

commit 96529235a9ce7279ec3eee7ad58b4c7e3c8119ae
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-04-02T04:01:20Z

init pr

[GitHub] spark pull request #20918: [SPARK-23805][ML][WIP] Features alg support vecto...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/20918

[SPARK-23805][ML][WIP] Features alg support vector-size validation and Inference

## What changes were proposed in this pull request?

support vector-size validation and Inference in features algorithms

## How was this patch tested?

existing tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark features_alg_transform_hint_numFeatures

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20918.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20918

commit daa3452abcdbde10e6890913bf1f5abd6206487a
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-03-26T11:06:24Z

init pr

commit 491bb7dd5ae26217553eef572deb9a5af89aabd7
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-03-26T11:11:41Z

init pr

commit 87f5f2cfd5c73377e0b15547649b346008d055bb
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-03-26T11:24:28Z

init pr

commit dc4006989144eaee36b37b494a327f9727c4c56d
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-03-26T11:24:49Z

init pr

commit 2098d088caa493924e218a8f9185ede234c1a2be
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-03-28T06:59:04Z

init pr

[GitHub] spark pull request #20539: [SPARK-22700][ML] Bucketizer.transform incorrectl...

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/20539

[GitHub] spark pull request #20518: [SPARK-22119][FOLLOWUP][ML] Use spherical KMeans ...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/20518#discussion_r167417459

--- Diff:

mllib/src/main/scala/org/apache/spark/mllib/clustering/KMeans.scala ---

@@ -745,4 +763,27 @@ private[spark] class CosineDistanceMeasure extends

DistanceMeasure {

override def distance(v1: VectorWithNorm, v2: VectorWithNorm): Double = {

1 - dot(v1.vector, v2.vector) / v1.norm / v2.norm

}

+

+ /**

+ * Updates the value of `sum` adding the `point` vector.

+ * @param point a `VectorWithNorm` to be added to `sum` of a cluster

+ * @param sum the `sum` for a cluster to be updated

+ */

+ override def updateClusterSum(point: VectorWithNorm, sum: Vector): Unit

= {

+axpy(1.0 / point.norm, point.vector, sum)

--- End diff --

In Scala, `1.0 / 0.0` evaluates to `Infinity`. What about throwing an

exception directly instead?
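The behaviour mentioned here can be checked in plain Scala. The sketch below is only an illustration under stated assumptions: `scaleFactor` is a hypothetical helper (not part of Spark) that computes the factor passed to `axpy` in the diff above and rejects a zero norm instead of letting it turn into `Infinity`.

```scala
object ZeroNormCheck {
  // Hypothetical helper, not Spark API: the scaling factor 1.0 / norm,
  // guarded so that a zero-norm point fails fast.
  def scaleFactor(norm: Double): Double = {
    // require throws IllegalArgumentException when the condition is false.
    require(norm > 0.0, s"Cosine distance is undefined for a zero-norm vector (norm = $norm)")
    1.0 / norm
  }

  def main(args: Array[String]): Unit = {
    // Floating-point division by zero does not throw; it yields positive infinity.
    println((1.0 / 0.0).isPosInfinity)
    println(scaleFactor(2.0))
  }
}
```

Whether to throw or to silently skip such points is a design choice; throwing surfaces bad input early, while skipping keeps training running on data that contains zero vectors.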


[GitHub] spark issue #20539: [SPARK-22700][ML] Bucketizer.transform incorrectly drops...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20539 ping @jkbradley

[GitHub] spark pull request #20539: [SPARK-22700][ML] Bucketizer.transform incorrectl...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/20539

[SPARK-22700][ML] Bucketizer.transform incorrectly drops row containing NaN - for branch-2.2

## What changes were proposed in this pull request?

for branch-2.2

only drops the rows containing NaN in the input columns

## How was this patch tested?

existing tests and added tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark bucketizer_nan_2.2

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20539.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20539

commit 6ab7a35e22bd315d30a10526b4f538dd66fc42e0
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-02-08T03:31:51Z

create pr

[GitHub] spark pull request #20518: [SPARK-22119][FOLLOWUP][ML] Use spherical KMeans ...

Github user zhengruifeng commented on a diff in the pull request:

https://github.com/apache/spark/pull/20518#discussion_r166813909

--- Diff:

mllib/src/main/scala/org/apache/spark/mllib/clustering/KMeans.scala ---

@@ -745,4 +763,27 @@ private[spark] class CosineDistanceMeasure extends

DistanceMeasure {

override def distance(v1: VectorWithNorm, v2: VectorWithNorm): Double = {

1 - dot(v1.vector, v2.vector) / v1.norm / v2.norm

}

+

+ /**

+ * Updates the value of `sum` adding the `point` vector.

+ * @param point a `VectorWithNorm` to be added to `sum` of a cluster

+ * @param sum the `sum` for a cluster to be updated

+ */

+ override def updateClusterSum(point: VectorWithNorm, sum: Vector): Unit

= {

+axpy(1.0 / point.norm, point.vector, sum)

--- End diff --

Do we need to ignore zero-norm points here?


[GitHub] spark issue #19340: [SPARK-22119][ML] Add cosine distance to KMeans

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/19340 @mgaido91 I agree that it is better to normalize the centers.

[GitHub] spark issue #20164: [SPARK-22971][ML] OneVsRestModel should use temporary Ra...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20164 @WeichenXu123 Yes, my concern is that it is confusing if the transform failure is caused by a column conflict with an "invisible" column. @srowen I agree that it is not ideal to alter the output's raw prediction column. I think it may be better to revert `rawPrediction` of the base models after transform, and I will give it a try.

[GitHub] spark issue #20164: [SPARK-22971][ML] OneVsRestModel should use temporary Ra...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20164 @srowen Unlike the base model (such as LoR), OVR and OVRModel do not have the param `rawPredictionCol`. So if the input dataframe contains a column with the same name as the base model's `getRawPredictionCol`, then OVRModel cannot transform the input.

[GitHub] spark issue #19340: [SPARK-22119][ML] Add cosine distance to KMeans

Github user zhengruifeng commented on the issue:

https://github.com/apache/spark/pull/19340

The updating of centers should be viewed as the **M-step** in the EM algorithm,

in which some objective is optimized.

Since cosine similarity does not take the vector norm into account:

1. the optimal solution for the normalized points (`V`) is also optimal for the

original points

2. a scaled solution (`k*V, k>0`) is also optimal for both the normalized points and

the original points

If we want to optimize intra-cluster cosine similarity (like Matlab), then the

arithmetic mean of the normalized points should be a better solution than the arithmetic

mean of the original points.

Suppose we have two 2D points, (x=0,y=1) and (x=100,y=0):

1. If we choose the arithmetic mean (x=50,y=0.5) as the center, the sum of

cosine similarities is about 1.0;

2. If we choose the arithmetic mean of the normalized points (x=0.5,y=0.5), the

sum of cosine similarities is about 1.414;

3. this center can then be normalized for computational convenience in the

following assignment (E-step) or prediction.

Since `VectorWithNorm` is used as the input, the norms of the vectors are already

computed, so I think we only need to update [this

line](https://github.com/apache/spark/blob/master/mllib/src/main/scala/org/apache/spark/mllib/clustering/KMeans.scala#L314)

to

```

if (point.norm > 0) {

axpy(1.0 / point.norm, point.vector, sum)

}

```
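The two-point example above can be checked numerically. The following is a minimal plain-Scala sketch, not Spark code: all names (`CosineCenterExample`, `mean`, `meanOfNormalized`, `totalCosine`) are hypothetical, and vectors are plain `Array[Double]` rather than `VectorWithNorm`.

```scala
object CosineCenterExample {
  type Vec = Array[Double]

  def dot(a: Vec, b: Vec): Double = a.zip(b).map { case (x, y) => x * y }.sum
  def norm(a: Vec): Double = math.sqrt(dot(a, a))
  def cosine(a: Vec, b: Vec): Double = dot(a, b) / (norm(a) * norm(b))

  // Arithmetic mean of the points as given.
  def mean(points: Seq[Vec]): Vec = {
    val dim = points.head.length
    Array.tabulate(dim)(i => points.map(_(i)).sum / points.size)
  }

  // Mean of the points after scaling each to unit length. Zero-norm points are
  // skipped, in the spirit of the `point.norm > 0` guard; the overall scale of
  // the result does not affect cosine similarity.
  def meanOfNormalized(points: Seq[Vec]): Vec =
    mean(points.filter(p => norm(p) > 0).map { p => val n = norm(p); p.map(_ / n) })

  // Sum of cosine similarities between a candidate center and all points.
  def totalCosine(center: Vec, points: Seq[Vec]): Double =
    points.map(cosine(center, _)).sum

  def main(args: Array[String]): Unit = {
    val points = Seq(Array(0.0, 1.0), Array(100.0, 0.0))
    println(totalCosine(mean(points), points))             // roughly 1.01
    println(totalCosine(meanOfNormalized(points), points)) // roughly 1.414
  }
}
```

The plain mean is dominated by the long vector (x=100,y=0), so it is nearly orthogonal to (x=0,y=1); normalizing first gives both points equal weight in the center's direction.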


[GitHub] spark issue #19340: [SPARK-22119][ML] Add cosine distance to KMeans

Github user zhengruifeng commented on the issue:

https://github.com/apache/spark/pull/19340

@mgaido91 @srowen I have the same concern as @Kevin-Ferret and @viirya.

I cannot find any normalization of the vectors before training, and the update of the centers seems incorrect.

The arithmetic mean of all points in a cluster is not automatically the new cluster center:

For EUCLIDEAN distance, we need to update the center to minimize the squared loss, and the arithmetic mean is the closed-form solution;

For COSINE similarity, we need to update the center to *maximize the cosine similarity*, and the solution is the arithmetic mean only if all vectors are of unit length.

Matlab's doc for KMeans says: "One minus the cosine of the included angle between points (treated as vectors). Each centroid is the mean of the points in that cluster, after *normalizing those points to unit Euclidean length*."

I think RapidMiner's implementation of KMeans with cosine similarity is wrong if it just assigns the arithmetic mean as the new center.

Some references:

[Spherical k-Means Clustering](https://www.jstatsoft.org/article/view/v050i10/v50i10.pdf)

[Scikit-Learn's example: Clustering text documents using k-means](http://scikit-learn.org/dev/auto_examples/text/plot_document_clustering.html)

https://stats.stackexchange.com/questions/299013/cosine-distance-as-similarity-measure-in-kmeans

https://www.quora.com/How-can-I-use-cosine-similarity-in-clustering-For-example-K-means-clustering

[GitHub] spark issue #19892: [SPARK-22797][PySpark] Bucketizer support multi-column

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/19892 @MLnick Thanks for your review and suggestions. I have updated this PR.

[GitHub] spark pull request #20275: [SPARK-23085][ML] API parity for mllib.linalg.Vec...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/20275

[SPARK-23085][ML] API parity for mllib.linalg.Vectors.sparse

## What changes were proposed in this pull request?

`ML.Vectors#sparse(size: Int, elements: Seq[(Int, Double)])` support zero-length

## How was this patch tested?

existing tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark SparseVector_size

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20275.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20275

commit 8b2876e5c5059a1fb258bff53ae6667df80d3205
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-01-16T01:46:47Z

nit

commit 1a3cd3aab355a00a73993979896624a8684a9aad
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-01-16T05:49:40Z

update pr

[GitHub] spark issue #20164: [SPARK-22971][ML] OneVsRestModel should use temporary Ra...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20164 retest this please

[GitHub] spark issue #20164: [SPARK-22971][ML] OneVsRestModel should use temporary Ra...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20164 retest this please

[GitHub] spark pull request #20164: [SPARK-22971][ML] OneVsRestModel should use tempo...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/20164

[SPARK-22971][ML] OneVsRestModel should use temporary RawPredictionCol

## What changes were proposed in this pull request?

use temporary RawPredictionCol in `OneVsRestModel#transform`

## How was this patch tested?

existing tests and added tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark ovr_not_use_getRawPredictionCol

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20164.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20164

commit f155e1cc6b175ac06a5f2ab710d4c053b0776507
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-01-05T09:29:25Z

create pr

commit 9b0dcc69535b6731c9b6cdc0030c846c3352a5de
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-01-05T10:19:59Z

create pr

commit 6c567ffb02738346fc83e467752add0d00a42e07
Author: Zheng RuiFeng <ruifengz@...>
Date: 2018-01-05T10:26:16Z

add test

[GitHub] spark issue #20113: [SPARK-22905][ML][FollowUp] Fix GaussianMixtureModel sav...

Github user zhengruifeng commented on the issue:

https://github.com/apache/spark/pull/20113

@WeichenXu123 I used this command to list all implementations of model.save, and the others look OK.

`find mllib/src/main/scala -name '*.scala' | xargs -i bash -c 'egrep -in "repartition\(1\)" {} && echo {}'`


[GitHub] spark issue #19892: [SPARK-22797][PySpark] Bucketizer support multi-column

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/19892 ping @MLnick ?

[GitHub] spark pull request #20113: [SPARK-22905][ML][FollowUp] Fix GaussianMixtureMo...

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/20113

[SPARK-22905][ML][FollowUp] Fix GaussianMixtureModel save

## What changes were proposed in this pull request?

make sure model data is stored in order. @WeichenXu123

## How was this patch tested?

existing tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark gmm_save

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20113.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20113

commit 408bfed88cd237e5adbf42bd5b4fd2ccf875b5bd
Author: Zheng RuiFeng <ruifengz@...>
Date: 2017-12-29T06:01:51Z

create pr

[GitHub] spark pull request #20030: [SPARK-10496][CORE] Efficient RDD cumulative sum

Github user zhengruifeng closed the pull request at: https://github.com/apache/spark/pull/20030

[GitHub] spark pull request #20030: [SPARK-10496][CORE] Efficient RDD cumulative sum

GitHub user zhengruifeng opened a pull request:

https://github.com/apache/spark/pull/20030

[SPARK-10496][CORE] Efficient RDD cumulative sum

## What changes were proposed in this pull request?

impl Efficient RDD cumulative sum

## How was this patch tested?

existing tests and added tests

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/zhengruifeng/spark cum_scan

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20030.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20030

commit f32240cf07923f86fd1717dcdece6f94001a662a
Author: Zheng RuiFeng <ruifengz@...>
Date: 2017-12-20T09:37:03Z

create pr

commit 4f1d5e269c5f84f6126fea97c201b6cd6fef461f
Author: Zheng RuiFeng <ruifengz@...>
Date: 2017-12-20T09:48:51Z

update tests
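The PR description above does not say how the cumulative sum is computed. As a rough illustration only, one common way to do a prefix sum over partitioned data is a two-pass scan: first collect one total per partition, then run a local scan inside each partition shifted by the totals of the partitions before it. The sketch below uses plain Scala collections in place of an RDD, and `CumulativeSumSketch` and `cumulativeSum` are hypothetical names, not the PR's API.

```scala
object CumulativeSumSketch {
  // Each inner Seq stands in for one partition of a distributed collection.
  def cumulativeSum(partitions: Seq[Seq[Double]]): Seq[Seq[Double]] = {
    // Pass 1: one total per partition (small enough to gather in one place).
    val totals = partitions.map(_.sum)
    // Offset of partition i is the sum of the totals of all earlier partitions.
    val offsets = totals.scanLeft(0.0)(_ + _).init
    // Pass 2: running sum inside each partition, starting from its offset.
    partitions.zip(offsets).map { case (part, offset) =>
      part.scanLeft(offset)(_ + _).tail
    }
  }
}
```

The second pass touches each partition independently, so in a distributed setting only the per-partition totals would need to be shared between workers.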

[GitHub] spark issue #19950: [SPARK-22450][Core][MLLib][FollowUp] safely register cla...

Github user zhengruifeng commented on the issue:

https://github.com/apache/spark/pull/19950

@WeichenXu123 I am not very sure, but it seems that `Kryo` will automatically serialize/deserialize a `Tuple2[A, B]` if both `A` and `B` have been registered:

```
scala> import org.apache.spark.SparkConf
import org.apache.spark.SparkConf

scala> import org.apache.spark.mllib.linalg.{DenseVector, SparseVector, Vector, Vectors}
import org.apache.spark.mllib.linalg.{DenseVector, SparseVector, Vector, Vectors}

scala> import org.apache.spark.serializer.KryoSerializer
import org.apache.spark.serializer.KryoSerializer

scala> val conf = new SparkConf(false)
conf: org.apache.spark.SparkConf = org.apache.spark.SparkConf@71b0289b

scala> conf.set("spark.kryo.registrationRequired", "true")
res0: org.apache.spark.SparkConf = org.apache.spark.SparkConf@71b0289b

scala> val ser = new KryoSerializer(conf).newInstance()
ser: org.apache.spark.serializer.SerializerInstance = org.apache.spark.serializer.KryoSerializerInstance@33430fc

scala> class X (val values: Array[Double])
defined class X

scala> val x = new X(Array(1.0,2.0))
x: X = X@69d58731

scala> val x2 = ser.deserialize[X](ser.serialize(x))
java.lang.IllegalArgumentException: Class is not registered: X
Note: To register this class use: kryo.register(X.class);
  at com.esotericsoftware.kryo.Kryo.getRegistration(Kryo.java:488)
  at com.twitter.chill.KryoBase.getRegistration(KryoBase.scala:52)
  at com.esotericsoftware.kryo.util.DefaultClassResolver.writeClass(DefaultClassResolver.java:97)
  at com.esotericsoftware.kryo.Kryo.writeClass(Kryo.java:517)
  at com.esotericsoftware.kryo.Kryo.writeClassAndObject(Kryo.java:622)
  at org.apache.spark.serializer.KryoSerializerInstance.serialize(KryoSerializer.scala:346)
  ... 49 elided

scala> val t1 = (1.0, Vectors.dense(Array(1.0, 2.0)))
t1: (Double, org.apache.spark.mllib.linalg.Vector) = (1.0,[1.0,2.0])

scala> val t2 = ser.deserialize[(Double, Vector)](ser.serialize(t1))
t2: (Double, org.apache.spark.mllib.linalg.Vector) = (1.0,[1.0,2.0])
```


[GitHub] spark issue #20017: [SPARK-22832][ML] BisectingKMeans unpersist unused datas...

Github user zhengruifeng commented on the issue: https://github.com/apache/spark/pull/20017 ping @srowen