[GitHub] [hadoop] hadoop-yetus commented on issue #903: HDDS-1490. Support configurable container placement policy through 'o…

hadoop-yetus commented on issue #903: HDDS-1490. Support configurable container placement policy through 'o…
URL: https://github.com/apache/hadoop/pull/903#issuecomment-499355283

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| 0 | reexec | 32 | Docker mode activated. |
||| _ Prechecks _ |
| +1 | dupname | 1 | No case conflicting files found. |
| +1 | @author | 0 | The patch does not contain any @author tags. |
| +1 | test4tests | 0 | The patch appears to include 9 new or modified test files. |
||| _ trunk Compile Tests _ |
| 0 | mvndep | 23 | Maven dependency ordering for branch |
| +1 | mvninstall | 492 | trunk passed |
| +1 | compile | 293 | trunk passed |
| +1 | checkstyle | 88 | trunk passed |
| +1 | mvnsite | 0 | trunk passed |
| +1 | shadedclient | 875 | branch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 154 | trunk passed |
| 0 | spotbugs | 334 | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 | findbugs | 521 | trunk passed |
||| _ Patch Compile Tests _ |
| 0 | mvndep | 28 | Maven dependency ordering for patch |
| +1 | mvninstall | 441 | the patch passed |
| +1 | compile | 274 | the patch passed |
| +1 | javac | 274 | the patch passed |
| +1 | checkstyle | 64 | the patch passed |
| +1 | mvnsite | 0 | the patch passed |
| +1 | whitespace | 0 | The patch has no whitespace issues. |
| +1 | xml | 5 | The patch has no ill-formed XML file. |
| +1 | shadedclient | 626 | patch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 156 | the patch passed |
| +1 | findbugs | 523 | the patch passed |
||| _ Other Tests _ |
| +1 | unit | 233 | hadoop-hdds in the patch passed. |
| -1 | unit | 1357 | hadoop-ozone in the patch failed. |
| +1 | asflicense | 40 | The patch does not generate ASF License warnings. |
| | | 6380 | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.ozone.client.rpc.TestCommitWatcher |
| | hadoop.ozone.client.rpc.TestOzoneAtRestEncryption |
| | hadoop.ozone.client.rpc.TestOzoneRpcClient |
| | hadoop.ozone.client.rpc.TestFailureHandlingByClient |
| | hadoop.ozone.client.rpc.TestSecureOzoneRpcClient |
| | hadoop.ozone.client.rpc.TestOzoneRpcClientWithRatis |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | Client=17.05.0-ce Server=17.05.0-ce base: https://builds.apache.org/job/hadoop-multibranch/job/PR-903/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/903 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle xml |
| uname | Linux 20e99d05d7c3 4.4.0-138-generic #164-Ubuntu SMP Tue Oct 2 17:16:02 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | personality/hadoop.sh |
| git revision | trunk / 73954c1 |
| Default Java | 1.8.0_212 |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-903/2/artifact/out/patch-unit-hadoop-ozone.txt |
| Test Results | https://builds.apache.org/job/hadoop-multibranch/job/PR-903/2/testReport/ |
| Max. process+thread count | 4953 (vs. ulimit of 5500) |
| modules | C: hadoop-hdds/common hadoop-hdds/server-scm hadoop-ozone/integration-test hadoop-ozone/ozone-manager U: . |
| Console output | https://builds.apache.org/job/hadoop-multibranch/job/PR-903/2/console |
| versions | git=2.7.4 maven=3.3.9 findbugs=3.1.0-RC1 |
| Powered by | Apache Yetus 0.10.0 http://yetus.apache.org |

This message was automatically generated.

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

With regards,
Apache Git Services

-
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Commented] (HADOOP-16117) Upgrade to latest AWS SDK

[ https://issues.apache.org/jira/browse/HADOOP-16117?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16857270#comment-16857270 ]

Aaron Fabbri commented on HADOOP-16117:
---

+1 LGTM for trunk. Agreed extended testing is to be expected as AWS SDK has a history of subtle bugs

> Upgrade to latest AWS SDK
> -
>
> Key: HADOOP-16117
> URL: https://issues.apache.org/jira/browse/HADOOP-16117
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/s3
> Reporter: Steve Loughran
> Assignee: Steve Loughran
> Priority: Major
> Original Estimate: 24h
> Remaining Estimate: 24h
>
> Upgrade to the most recent AWS SDK. That's 1.11; even though there's a 2.0
> out it'll be more significant an upgrade, with impact downstream.
> The new [AWS SDK update
> process|https://github.com/apache/hadoop/blob/trunk/hadoop-tools/hadoop-aws/src/site/markdown/tools/hadoop-aws/testing.md#-qualifying-an-aws-sdk-update]
> *must* be followed, and we should plan for 1-2 surprises afterwards anyway.

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

-
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Updated] (HADOOP-16350) Ability to tell Hadoop not to request KMS Information from Remote NN

[

https://issues.apache.org/jira/browse/HADOOP-16350?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Greg Senia updated HADOOP-16350:

Fix Version/s: 3.3.0

Status: Patch Available (was: Open)

> Ability to tell Hadoop not to request KMS Information from Remote NN

> -

>

> Key: HADOOP-16350

> URL: https://issues.apache.org/jira/browse/HADOOP-16350

> Project: Hadoop Common

> Issue Type: Improvement

> Components: common, kms

>Affects Versions: 3.1.2, 2.7.6, 3.0.0, 2.8.3

>Reporter: Greg Senia

>Priority: Major

> Fix For: 3.3.0

>

> Attachments: HADOOP-16350.patch

>

>

> Before HADOOP-14104, remote KMSServer URIs and their associated remote

> KMSServer delegation tokens were not requested from the remote NameNode. Many

> customers were using this as a security feature to prevent TDE/Encryption

> Zone data from being distcped to remote clusters. But there was still a use

> case to allow distcp of data residing in folders that are not encrypted

> with a KMSProvider/Encrypted Zone.

> So after upgrading to a version of Hadoop that contains HADOOP-14104, distcp

> now fails, as we, along with other customers (HDFS-13696), DO NOT allow

> KMSServer endpoints to be exposed outside our cluster network, because these

> TDE/Zones contain very critical data that cannot be distcped between

> clusters.

> I propose adding a new code block controlled by the custom property

> "hadoop.security.kms.client.allow.remote.kms". It will default to "true",

> keeping the current behavior of HADOOP-14104, but if set to "false" it will

> allow this area of code to operate as it did before HADOOP-14104. I can see

> the value in HADOOP-14104, but the change should have at least included an

> option to let the Hadoop/KMS code operate as it did before, by not

> requesting remote KMSServer URIs, which would then attempt to get a

> delegation token even if not operating on encrypted zones.

> The error below occurs when KMS Server traffic is not allowed between cluster

> networks per an enterprise security standard that cannot be changed. The

> request for an exception was denied, so the only solution is a feature that

> does not attempt to request tokens.
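
The switch proposed in the description above might look roughly like the following. This is only a minimal sketch, not the attached patch: the property name and its "true" default come from the description, while the class `RemoteKmsSwitch`, its methods, and the use of a plain `Map` in place of Hadoop's `Configuration` are hypothetical and chosen to keep the example self-contained.

```java
import java.util.Map;

/** Hypothetical illustration of the proposed opt-out; not code from HADOOP-16350.patch. */
final class RemoteKmsSwitch {
  // Property name and default as stated in the issue description.
  static final String ALLOW_REMOTE_KMS_KEY =
      "hadoop.security.kms.client.allow.remote.kms";
  static final boolean ALLOW_REMOTE_KMS_DEFAULT = true;

  private RemoteKmsSwitch() {
  }

  /** Reads the flag from a plain map that stands in for the client configuration. */
  static boolean allowRemoteKms(Map<String, String> conf) {
    String raw = conf.get(ALLOW_REMOTE_KMS_KEY);
    return raw == null ? ALLOW_REMOTE_KMS_DEFAULT : Boolean.parseBoolean(raw.trim());
  }

  /**
   * Decides whether a client would go on to ask the remote KMS for a delegation
   * token. With the flag set to "false" the remote KMS URI is ignored, which is
   * the pre-HADOOP-14104 behavior the reporter asks for.
   */
  static boolean shouldRequestKmsToken(Map<String, String> conf, String remoteKmsUri) {
    return allowRemoteKms(conf) && remoteKmsUri != null && !remoteKmsUri.isEmpty();
  }
}
```

Under these assumptions, a distcp between clusters with unreachable KMS endpoints would set the property to "false" on the client, and unset configurations would keep today's behavior.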

> {code:java}

> $ hadoop distcp -Ddfs.namenode.kerberos.principal.pattern=*

> -Dmapreduce.job.hdfs-servers.token-renewal.exclude=tech

> hdfs:///processed/public/opendata/samples/distcp_test/distcp_file.txt

> hdfs://tech/processed/public/opendata/samples/distcp_test/distcp_file2.txt

> 19/05/29 14:06:09 INFO tools.DistCp: Input Options: DistCpOptions

> {atomicCommit=false, syncFolder=false, deleteMissing=false,

> ignoreFailures=false, overwrite=false, append=false, useDiff=false,

> fromSnapshot=null, toSnapshot=null, skipCRC=false, blocking=true,

> numListstatusThreads=0, maxMaps=20, mapBandwidth=100,

> sslConfigurationFile='null', copyStrategy='uniformsize', preserveStatus=[],

> preserveRawXattrs=false, atomicWorkPath=null, logPath=null,

> sourceFileListing=null,

> sourcePaths=[hdfs:/processed/public/opendata/samples/distcp_test/distcp_file.txt],

>

> targetPath=hdfs://tech/processed/public/opendata/samples/distcp_test/distcp_file2.txt,

> targetPathExists=true, filtersFile='null', verboseLog=false}

> 19/05/29 14:06:09 INFO client.AHSProxy: Connecting to Application History

> server at ha21d53mn.unit.hdp.example.com/10.70.49.2:10200

> 19/05/29 14:06:10 INFO hdfs.DFSClient: Created HDFS_DELEGATION_TOKEN token

> 5093920 for gss2002 on ha-hdfs:unit

> 19/05/29 14:06:10 INFO security.TokenCache: Got dt for hdfs://unit; Kind:

> HDFS_DELEGATION_TOKEN, Service: ha-hdfs:unit, Ident: (HDFS_DELEGATION_TOKEN

> token 5093920 for gss2002)

> 19/05/29 14:06:10 INFO security.TokenCache: Got dt for hdfs://unit; Kind:

> kms-dt, Service: ha21d53en.unit.hdp.example.com:9292, Ident: (owner=gss2002,

> renewer=yarn, realUser=, issueDate=1559153170120, maxDate=1559757970120,

> sequenceNumber=237, masterKeyId=2)

> 19/05/29 14:06:10 INFO tools.SimpleCopyListing: Paths (files+dirs) cnt = 1;

> dirCnt = 0

> 19/05/29 14:06:10 INFO tools.SimpleCopyListing: Build file listing completed.

> 19/05/29 14:06:10 INFO tools.DistCp: Number of paths in the copy list: 1

> 19/05/29 14:06:10 INFO tools.DistCp: Number of paths in the copy list: 1

> 19/05/29 14:06:10 INFO client.AHSProxy: Connecting to Application History

> server at ha21d53mn.unit.hdp.example.com/10.70.49.2:10200

> 19/05/29 14:06:10 INFO hdfs.DFSClient: Created HDFS_DELEGATION_TOKEN token

> 556079 for gss2002 on ha-hdfs:tech

> 19/05/29 14:06:10 ERROR tools.DistCp: Exception encountered

> java.io.IOException: java.net.NoRouteToHostException: No route to host (Host

> unreachable)

> at

>

[jira] [Updated] (HADOOP-16350) Ability to tell Hadoop not to request KMS Information from Remote NN

[

https://issues.apache.org/jira/browse/HADOOP-16350?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Greg Senia updated HADOOP-16350:

Attachment: HADOOP-16350.patch

> Ability to tell Hadoop not to request KMS Information from Remote NN

> -

>

> Key: HADOOP-16350

> URL: https://issues.apache.org/jira/browse/HADOOP-16350

> Project: Hadoop Common

> Issue Type: Improvement

> Components: common, kms

>Affects Versions: 2.8.3, 3.0.0, 2.7.6, 3.1.2

>Reporter: Greg Senia

>Priority: Major

> Attachments: HADOOP-16350.patch

>

>

> Before HADOOP-14104, remote KMSServer URIs and their associated remote

> KMSServer delegation tokens were not requested from the remote NameNode. Many

> customers were using this as a security feature to prevent TDE/Encryption

> Zone data from being distcped to remote clusters. But there was still a use

> case to allow distcp of data residing in folders that are not encrypted

> with a KMSProvider/Encrypted Zone.

> So after upgrading to a version of Hadoop that contains HADOOP-14104, distcp

> now fails, as we, along with other customers (HDFS-13696), DO NOT allow

> KMSServer endpoints to be exposed outside our cluster network, because these

> TDE/Zones contain very critical data that cannot be distcped between

> clusters.

> I propose adding a new code block controlled by the custom property

> "hadoop.security.kms.client.allow.remote.kms". It will default to "true",

> keeping the current behavior of HADOOP-14104, but if set to "false" it will

> allow this area of code to operate as it did before HADOOP-14104. I can see

> the value in HADOOP-14104, but the change should have at least included an

> option to let the Hadoop/KMS code operate as it did before, by not

> requesting remote KMSServer URIs, which would then attempt to get a

> delegation token even if not operating on encrypted zones.

> The error below occurs when KMS Server traffic is not allowed between cluster

> networks per an enterprise security standard that cannot be changed. The

> request for an exception was denied, so the only solution is a feature that

> does not attempt to request tokens.

> {code:java}

> $ hadoop distcp -Ddfs.namenode.kerberos.principal.pattern=*

> -Dmapreduce.job.hdfs-servers.token-renewal.exclude=tech

> hdfs:///processed/public/opendata/samples/distcp_test/distcp_file.txt

> hdfs://tech/processed/public/opendata/samples/distcp_test/distcp_file2.txt

> 19/05/29 14:06:09 INFO tools.DistCp: Input Options: DistCpOptions

> {atomicCommit=false, syncFolder=false, deleteMissing=false,

> ignoreFailures=false, overwrite=false, append=false, useDiff=false,

> fromSnapshot=null, toSnapshot=null, skipCRC=false, blocking=true,

> numListstatusThreads=0, maxMaps=20, mapBandwidth=100,

> sslConfigurationFile='null', copyStrategy='uniformsize', preserveStatus=[],

> preserveRawXattrs=false, atomicWorkPath=null, logPath=null,

> sourceFileListing=null,

> sourcePaths=[hdfs:/processed/public/opendata/samples/distcp_test/distcp_file.txt],

>

> targetPath=hdfs://tech/processed/public/opendata/samples/distcp_test/distcp_file2.txt,

> targetPathExists=true, filtersFile='null', verboseLog=false}

> 19/05/29 14:06:09 INFO client.AHSProxy: Connecting to Application History

> server at ha21d53mn.unit.hdp.example.com/10.70.49.2:10200

> 19/05/29 14:06:10 INFO hdfs.DFSClient: Created HDFS_DELEGATION_TOKEN token

> 5093920 for gss2002 on ha-hdfs:unit

> 19/05/29 14:06:10 INFO security.TokenCache: Got dt for hdfs://unit; Kind:

> HDFS_DELEGATION_TOKEN, Service: ha-hdfs:unit, Ident: (HDFS_DELEGATION_TOKEN

> token 5093920 for gss2002)

> 19/05/29 14:06:10 INFO security.TokenCache: Got dt for hdfs://unit; Kind:

> kms-dt, Service: ha21d53en.unit.hdp.example.com:9292, Ident: (owner=gss2002,

> renewer=yarn, realUser=, issueDate=1559153170120, maxDate=1559757970120,

> sequenceNumber=237, masterKeyId=2)

> 19/05/29 14:06:10 INFO tools.SimpleCopyListing: Paths (files+dirs) cnt = 1;

> dirCnt = 0

> 19/05/29 14:06:10 INFO tools.SimpleCopyListing: Build file listing completed.

> 19/05/29 14:06:10 INFO tools.DistCp: Number of paths in the copy list: 1

> 19/05/29 14:06:10 INFO tools.DistCp: Number of paths in the copy list: 1

> 19/05/29 14:06:10 INFO client.AHSProxy: Connecting to Application History

> server at ha21d53mn.unit.hdp.example.com/10.70.49.2:10200

> 19/05/29 14:06:10 INFO hdfs.DFSClient: Created HDFS_DELEGATION_TOKEN token

> 556079 for gss2002 on ha-hdfs:tech

> 19/05/29 14:06:10 ERROR tools.DistCp: Exception encountered

> java.io.IOException: java.net.NoRouteToHostException: No route to host (Host

> unreachable)

> at

> org.apache.hadoop.crypto.key.kms.KMSClientProvider.addDelegationTokens(KMSClientProvider.java:1029)

> at

>

[GitHub] [hadoop] ChenSammi commented on issue #903: HDDS-1490. Support configurable container placement policy through 'o…

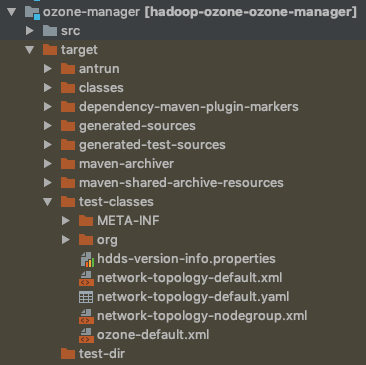

ChenSammi commented on issue #903: HDDS-1490. Support configurable container

placement policy through 'o…

URL: https://github.com/apache/hadoop/pull/903#issuecomment-499336056

@xiaoyuyao, thanks for the information. I didn't realize that, because I

explicitly added testResources to the pom.xml, the resources under

${basedir}/src/test/resources get ignored. I will upload a new commit shortly.


[GitHub] [hadoop] xiaoyuyao merged pull request #910: HDDS-1612. Add 'scmcli printTopology' shell command to print datanode…

xiaoyuyao merged pull request #910: HDDS-1612. Add 'scmcli printTopology' shell command to print datanode…
URL: https://github.com/apache/hadoop/pull/910

[GitHub] [hadoop] xiaoyuyao commented on a change in pull request #910: HDDS-1612. Add 'scmcli printTopology' shell command to print datanode…

xiaoyuyao commented on a change in pull request #910: HDDS-1612. Add 'scmcli

printTopology' shell command to print datanode…

URL: https://github.com/apache/hadoop/pull/910#discussion_r291007246

##

File path:

hadoop-hdds/tools/src/main/java/org/apache/hadoop/hdds/scm/cli/TopologySubcommand.java

##

@@ -0,0 +1,80 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.hdds.scm.cli;

+

+import org.apache.hadoop.hdds.cli.HddsVersionProvider;

+import org.apache.hadoop.hdds.protocol.proto.HddsProtos;

+import org.apache.hadoop.hdds.scm.client.ScmClient;

+import picocli.CommandLine;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DEAD;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DECOMMISSIONED;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DECOMMISSIONING;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.HEALTHY;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.STALE;

+

+import java.util.ArrayList;

+import java.util.List;

+import java.util.concurrent.Callable;

+

+/**

+ * Handler of printTopology command.

+ */

+@CommandLine.Command(

+name = "printTopology",

+description = "Print a tree of the network topology as reported by SCM",

+mixinStandardHelpOptions = true,

+versionProvider = HddsVersionProvider.class)

+public class TopologySubcommand implements Callable<Void> {

+

+ @CommandLine.ParentCommand

+ private SCMCLI parent;

+

+ private static List<HddsProtos.NodeState> stateArray = new ArrayList<>();

+

+ static {

+stateArray.add(HEALTHY);

+stateArray.add(STALE);

+stateArray.add(DEAD);

+stateArray.add(DECOMMISSIONING);

+stateArray.add(DECOMMISSIONED);

+ }

+

+ @Override

+ public Void call() throws Exception {

+try (ScmClient scmClient = parent.createScmClient()) {

+ for (HddsProtos.NodeState state : stateArray) {

+List<HddsProtos.Node> nodes = scmClient.queryNode(state,

+HddsProtos.QueryScope.CLUSTER, "");

+if (nodes != null && nodes.size() > 0) {

+ // show node state

+ System.out.println("State = " + state.toString());

Review comment:

Agreed, let's add that in a follow-up JIRA. I will merge it shortly.


[GitHub] [hadoop] hadoop-yetus commented on issue #818: HADOOP-16117 Update AWS SDK to 1.11.563

hadoop-yetus commented on issue #818: HADOOP-16117 Update AWS SDK to 1.11.563
URL: https://github.com/apache/hadoop/pull/818#issuecomment-499326011

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| 0 | reexec | 32 | Docker mode activated. |
||| _ Prechecks _ |
| +1 | dupname | 0 | No case conflicting files found. |
| +1 | @author | 0 | The patch does not contain any @author tags. |
| -1 | test4tests | 0 | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
||| _ trunk Compile Tests _ |
| 0 | mvndep | 65 | Maven dependency ordering for branch |
| +1 | mvninstall | 1042 | trunk passed |
| +1 | compile | 1014 | trunk passed |
| +1 | mvnsite | 844 | trunk passed |
| +1 | shadedclient | 3636 | branch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 342 | trunk passed |
||| _ Patch Compile Tests _ |
| 0 | mvndep | 23 | Maven dependency ordering for patch |
| +1 | mvninstall | 1073 | the patch passed |
| +1 | compile | 965 | the patch passed |
| +1 | javac | 965 | the patch passed |
| +1 | mvnsite | 828 | the patch passed |
| -1 | whitespace | 0 | The patch has 1 line(s) that end in whitespace. Use git apply --whitespace=fix <>. Refer https://git-scm.com/docs/git-apply |
| +1 | xml | 2 | The patch has no ill-formed XML file. |
| +1 | shadedclient | 668 | patch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 379 | the patch passed |
||| _ Other Tests _ |
| -1 | unit | 8185 | root in the patch failed. |
| +1 | asflicense | 46 | The patch does not generate ASF License warnings. |
| | | 16342 | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestMultipleNNPortQOP |
| | hadoop.hdfs.web.TestWebHdfsTimeouts |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | Client=17.05.0-ce Server=17.05.0-ce base: https://builds.apache.org/job/hadoop-multibranch/job/PR-818/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/818 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient xml |
| uname | Linux 69a492540875 4.4.0-139-generic #165-Ubuntu SMP Wed Oct 24 10:58:50 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | personality/hadoop.sh |
| git revision | trunk / 3b1c257 |
| Default Java | 1.8.0_212 |
| whitespace | https://builds.apache.org/job/hadoop-multibranch/job/PR-818/4/artifact/out/whitespace-eol.txt |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-818/4/artifact/out/patch-unit-root.txt |
| Test Results | https://builds.apache.org/job/hadoop-multibranch/job/PR-818/4/testReport/ |
| Max. process+thread count | 4427 (vs. ulimit of 5500) |
| modules | C: hadoop-project hadoop-tools/hadoop-aws . U: . |
| Console output | https://builds.apache.org/job/hadoop-multibranch/job/PR-818/4/console |
| versions | git=2.7.4 maven=3.3.9 |
| Powered by | Apache Yetus 0.10.0 http://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] ChenSammi commented on a change in pull request #910: HDDS-1612. Add 'scmcli printTopology' shell command to print datanode…

ChenSammi commented on a change in pull request #910: HDDS-1612. Add 'scmcli

printTopology' shell command to print datanode…

URL: https://github.com/apache/hadoop/pull/910#discussion_r290995013

##

File path:

hadoop-hdds/tools/src/main/java/org/apache/hadoop/hdds/scm/cli/TopologySubcommand.java

##

@@ -0,0 +1,80 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.hdds.scm.cli;

+

+import org.apache.hadoop.hdds.cli.HddsVersionProvider;

+import org.apache.hadoop.hdds.protocol.proto.HddsProtos;

+import org.apache.hadoop.hdds.scm.client.ScmClient;

+import picocli.CommandLine;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DEAD;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DECOMMISSIONED;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DECOMMISSIONING;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.HEALTHY;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.STALE;

+

+import java.util.ArrayList;

+import java.util.List;

+import java.util.concurrent.Callable;

+

+/**

+ * Handler of printTopology command.

+ */

+@CommandLine.Command(

+name = "printTopology",

+description = "Print a tree of the network topology as reported by SCM",

+mixinStandardHelpOptions = true,

+versionProvider = HddsVersionProvider.class)

+public class TopologySubcommand implements Callable<Void> {

+

+ @CommandLine.ParentCommand

+ private SCMCLI parent;

+

+ private static List<HddsProtos.NodeState> stateArray = new ArrayList<>();

+

+ static {

+stateArray.add(HEALTHY);

+stateArray.add(STALE);

+stateArray.add(DEAD);

+stateArray.add(DECOMMISSIONING);

+stateArray.add(DECOMMISSIONED);

+ }

+

+ @Override

+ public Void call() throws Exception {

+try (ScmClient scmClient = parent.createScmClient()) {

+ for (HddsProtos.NodeState state : stateArray) {

+List<HddsProtos.Node> nodes = scmClient.queryNode(state,

+HddsProtos.QueryScope.CLUSTER, "");

+if (nodes != null && nodes.size() > 0) {

+ // show node state

+ System.out.println("State = " + state.toString());

Review comment:

@xiaoyuyao, we can add an option to order the output according to the topology
layer. For example, for a /rack/node topology, we can show:

State = HEALTHY
/default-rack:
  ozone_datanode_1.ozone_default/172.18.0.3
  ozone_datanode_2.ozone_default/172.18.0.2
  ozone_datanode_3.ozone_default/172.18.0.4
/rack1:
  ozone_datanode_4.ozone_default/172.18.0.5
  ozone_datanode_5.ozone_default/172.18.0.6

For a /dc/rack/node topology, we can either show

State = HEALTHY
/default-dc/default-rack:
  ozone_datanode_1.ozone_default/172.18.0.3
  ozone_datanode_2.ozone_default/172.18.0.2
  ozone_datanode_3.ozone_default/172.18.0.4
/dc1/rack1:
  ozone_datanode_4.ozone_default/172.18.0.5
  ozone_datanode_5.ozone_default/172.18.0.6

or

State = HEALTHY
default-dc:
  default-rack:
    ozone_datanode_1.ozone_default/172.18.0.3
    ozone_datanode_2.ozone_default/172.18.0.2
    ozone_datanode_3.ozone_default/172.18.0.4
dc1:
  rack1:
    ozone_datanode_4.ozone_default/172.18.0.5
    ozone_datanode_5.ozone_default/172.18.0.6

This can be done in a follow-on JIRA. Currently, this plain format just
meets our needs.
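
The flat per-rack layout suggested in this comment could be produced by grouping nodes on their network location before printing. The following is a rough sketch under stated assumptions, not code from the pull request: `TopologyGrouping`, `NodeInfo`, and `format` are hypothetical names, and a node is reduced to a display name plus a location string such as `/default-rack` rather than the real `HddsProtos.Node` type.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper that groups datanodes by network location for printing. */
final class TopologyGrouping {

  /** Simplified stand-in for a datanode entry; not the real HDDS node type. */
  static final class NodeInfo {
    final String name;
    final String location;

    NodeInfo(String name, String location) {
      this.name = name;
      this.location = location;
    }
  }

  private TopologyGrouping() {
  }

  /** Builds the "State = ..." header followed by one indented node list per location. */
  static String format(String state, List<NodeInfo> nodes) {
    // TreeMap keeps locations in lexicographic order so the output is stable.
    Map<String, List<String>> byLocation = new TreeMap<>();
    for (NodeInfo node : nodes) {
      byLocation.computeIfAbsent(node.location, k -> new ArrayList<>()).add(node.name);
    }
    StringBuilder out = new StringBuilder("State = ").append(state).append('\n');
    for (Map.Entry<String, List<String>> entry : byLocation.entrySet()) {
      out.append(entry.getKey()).append(":\n");
      for (String name : entry.getValue()) {
        out.append("  ").append(name).append('\n');
      }
    }
    return out.toString();
  }
}
```

Because the grouping key is the full location string, the same sketch covers the `/dc/rack` variant with keys like `/default-dc/default-rack`; the nested tree variant would need the location split into levels first.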


[GitHub] [hadoop] ChenSammi commented on a change in pull request #910: HDDS-1612. Add 'scmcli printTopology' shell command to print datanode…

ChenSammi commented on a change in pull request #910: HDDS-1612. Add 'scmcli

printTopology' shell command to print datanode…

URL: https://github.com/apache/hadoop/pull/910#discussion_r290995013

##

File path:

hadoop-hdds/tools/src/main/java/org/apache/hadoop/hdds/scm/cli/TopologySubcommand.java

##

@@ -0,0 +1,80 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.hdds.scm.cli;

+

+import org.apache.hadoop.hdds.cli.HddsVersionProvider;

+import org.apache.hadoop.hdds.protocol.proto.HddsProtos;

+import org.apache.hadoop.hdds.scm.client.ScmClient;

+import picocli.CommandLine;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DEAD;

+import static

org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DECOMMISSIONED;

+import static

org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.DECOMMISSIONING;

+import static

org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.HEALTHY;

+import static org.apache.hadoop.hdds.protocol.proto.HddsProtos.NodeState.STALE;

+

+import java.util.ArrayList;

+import java.util.List;

+import java.util.concurrent.Callable;

+

+/**

+ * Handler of printTopology command.

+ */

+@CommandLine.Command(

+name = "printTopology",

+description = "Print a tree of the network topology as reported by SCM",

+mixinStandardHelpOptions = true,

+versionProvider = HddsVersionProvider.class)

+public class TopologySubcommand implements Callable {

+

+ @CommandLine.ParentCommand

+ private SCMCLI parent;

+

+ private static List stateArray = new ArrayList<>();

+

+ static {

+stateArray.add(HEALTHY);

+stateArray.add(STALE);

+stateArray.add(DEAD);

+stateArray.add(DECOMMISSIONING);

+stateArray.add(DECOMMISSIONED);

+ }

+

+ @Override

+ public Void call() throws Exception {

+try (ScmClient scmClient = parent.createScmClient()) {

+ for (HddsProtos.NodeState state : stateArray) {

+List nodes = scmClient.queryNode(state,

+HddsProtos.QueryScope.CLUSTER, "");

+if (nodes != null && nodes.size() > 0) {

+ // show node state

+ System.out.println("State = " + state.toString());

Review comment:

@xiaoyuyao , we can add option to order the output acccording to topology

layer. For example, for /rack/node topolgy, we can show,

State = HEALTHY
/default-rack:
    ozone_datanode_1.ozone_default/172.18.0.3
    ozone_datanode_2.ozone_default/172.18.0.2
    ozone_datanode_3.ozone_default/172.18.0.4
/rack1:
    ozone_datanode_4.ozone_default/172.18.0.5
    ozone_datanode_5.ozone_default/172.18.0.6

For /dc/rack/node topology, we can either show

State = HEALTHY
/default-dc/default-rack:
    ozone_datanode_1.ozone_default/172.18.0.3
    ozone_datanode_2.ozone_default/172.18.0.2
    ozone_datanode_3.ozone_default/172.18.0.4
/dc1/rack1:
    ozone_datanode_4.ozone_default/172.18.0.5
    ozone_datanode_5.ozone_default/172.18.0.6

or

State = HEALTHY
default-dc:
    default-rack:
        ozone_datanode_1.ozone_default/172.18.0.3
        ozone_datanode_2.ozone_default/172.18.0.2
        ozone_datanode_3.ozone_default/172.18.0.4
dc1:
    rack1:
        ozone_datanode_4.ozone_default/172.18.0.5
        ozone_datanode_5.ozone_default/172.18.0.6

This can be done in a follow-on JIRA. The current plain format is enough for
our needs.
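
The per-rack layout proposed above could be sketched roughly as follows. This is only an illustration under stated assumptions: `TopologyPrinter`, `NodeEntry`, `groupByLocation` and `render` are hypothetical names that do not appear in the patch, and the sketch takes plain strings instead of the `HddsProtos` node type, whose accessors are not shown in this thread.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper; not part of the patch under review. */
public class TopologyPrinter {

  /** Minimal stand-in for a datanode: display name plus network location. */
  public static final class NodeEntry {
    final String name;
    final String location;

    public NodeEntry(String name, String location) {
      this.name = name;
      this.location = location;
    }
  }

  /** Groups node names by network location; TreeMap keeps locations sorted. */
  public static Map<String, List<String>> groupByLocation(List<NodeEntry> nodes) {
    Map<String, List<String>> grouped = new TreeMap<>();
    for (NodeEntry node : nodes) {
      grouped.computeIfAbsent(node.location, k -> new ArrayList<>()).add(node.name);
    }
    return grouped;
  }

  /** Renders the nodes of one state, one location block at a time. */
  public static String render(String state, List<NodeEntry> nodes) {
    StringBuilder sb = new StringBuilder("State = ").append(state).append('\n');
    for (Map.Entry<String, List<String>> e : groupByLocation(nodes).entrySet()) {
      sb.append(e.getKey()).append(":\n");
      for (String name : e.getValue()) {
        sb.append("  ").append(name).append('\n');
      }
    }
    return sb.toString();
  }

  public static void main(String[] args) {
    List<NodeEntry> nodes = Arrays.asList(
        new NodeEntry("ozone_datanode_4.ozone_default/172.18.0.5", "/rack1"),
        new NodeEntry("ozone_datanode_1.ozone_default/172.18.0.3", "/default-rack"),
        new NodeEntry("ozone_datanode_2.ozone_default/172.18.0.2", "/default-rack"));
    System.out.print(render("HEALTHY", nodes));
  }
}
```

Because the grouping key is the full location string, the same code would also produce the flat `/dc/rack:` variant; the nested variant would need the location split on `/` first.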

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] hadoop-yetus commented on issue #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.

hadoop-yetus commented on issue #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.
URL: https://github.com/apache/hadoop/pull/867#issuecomment-499316838

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| 0 | reexec | 38 | Docker mode activated. |
||| _ Prechecks _ |
| +1 | dupname | 0 | No case conflicting files found. |
| +1 | @author | 0 | The patch does not contain any @author tags. |
| +1 | test4tests | 0 | The patch appears to include 3 new or modified test files. |
||| _ trunk Compile Tests _ |
| 0 | mvndep | 25 | Maven dependency ordering for branch |
| +1 | mvninstall | 515 | trunk passed |
| +1 | compile | 294 | trunk passed |
| +1 | checkstyle | 72 | trunk passed |
| +1 | mvnsite | 0 | trunk passed |
| +1 | shadedclient | 801 | branch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 157 | trunk passed |
| 0 | spotbugs | 344 | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 | findbugs | 541 | trunk passed |
||| _ Patch Compile Tests _ |
| 0 | mvndep | 65 | Maven dependency ordering for patch |
| +1 | mvninstall | 466 | the patch passed |
| +1 | compile | 283 | the patch passed |
| +1 | javac | 283 | the patch passed |
| +1 | checkstyle | 71 | the patch passed |
| +1 | mvnsite | 0 | the patch passed |
| +1 | whitespace | 0 | The patch has no whitespace issues. |
| +1 | shadedclient | 616 | patch has no errors when building and testing our client artifacts. |
| -1 | javadoc | 98 | hadoop-ozone generated 1 new + 8 unchanged - 0 fixed = 9 total (was 8) |
| +1 | findbugs | 533 | the patch passed |
||| _ Other Tests _ |
| +1 | unit | 237 | hadoop-hdds in the patch passed. |
| -1 | unit | 1389 | hadoop-ozone in the patch failed. |
| +1 | asflicense | 59 | The patch does not generate ASF License warnings. |
| | | 6519 | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.ozone.client.rpc.TestWatchForCommit |
| | hadoop.ozone.client.rpc.TestOzoneAtRestEncryption |
| | hadoop.ozone.client.rpc.TestFailureHandlingByClient |
| | hadoop.hdds.scm.pipeline.TestRatisPipelineProvider |
| | hadoop.ozone.client.rpc.TestSecureOzoneRpcClient |
| | hadoop.ozone.client.rpc.TestOzoneRpcClientWithRatis |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | Client=17.05.0-ce Server=17.05.0-ce base: https://builds.apache.org/job/hadoop-multibranch/job/PR-867/5/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/867 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle |
| uname | Linux 4809e1ca7ff6 4.4.0-138-generic #164-Ubuntu SMP Tue Oct 2 17:16:02 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | personality/hadoop.sh |
| git revision | trunk / 294695d |
| Default Java | 1.8.0_212 |
| javadoc | https://builds.apache.org/job/hadoop-multibranch/job/PR-867/5/artifact/out/diff-javadoc-javadoc-hadoop-ozone.txt |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-867/5/artifact/out/patch-unit-hadoop-ozone.txt |
| Test Results | https://builds.apache.org/job/hadoop-multibranch/job/PR-867/5/testReport/ |
| Max. process+thread count | 5238 (vs. ulimit of 5500) |
| modules | C: hadoop-hdds/common hadoop-ozone/ozone-manager U: . |
| Console output | https://builds.apache.org/job/hadoop-multibranch/job/PR-867/5/console |
| versions | git=2.7.4 maven=3.3.9 findbugs=3.1.0-RC1 |
| Powered by | Apache Yetus 0.10.0 http://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] bharatviswa504 commented on issue #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.

bharatviswa504 commented on issue #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.
URL: https://github.com/apache/hadoop/pull/867#issuecomment-499305685

/retest

[GitHub] [hadoop] hadoop-yetus commented on issue #912: HDDS-1201. Reporting Corruptions in Containers to SCM

hadoop-yetus commented on issue #912: HDDS-1201. Reporting Corruptions in Containers to SCM
URL: https://github.com/apache/hadoop/pull/912#issuecomment-499303878

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| 0 | reexec | 95 | Docker mode activated. |
||| _ Prechecks _ |
| +1 | dupname | 0 | No case conflicting files found. |
| +1 | @author | 0 | The patch does not contain any @author tags. |
| -1 | test4tests | 0 | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
||| _ trunk Compile Tests _ |
| +1 | mvninstall | 622 | trunk passed |
| +1 | compile | 340 | trunk passed |
| +1 | checkstyle | 84 | trunk passed |
| +1 | mvnsite | 0 | trunk passed |
| +1 | shadedclient | 977 | branch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 185 | trunk passed |
| 0 | spotbugs | 391 | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 | findbugs | 618 | trunk passed |
||| _ Patch Compile Tests _ |
| +1 | mvninstall | 545 | the patch passed |
| +1 | compile | 332 | the patch passed |
| +1 | javac | 332 | the patch passed |
| -0 | checkstyle | 48 | hadoop-hdds: The patch generated 1 new + 0 unchanged - 0 fixed = 1 total (was 0) |
| +1 | mvnsite | 0 | the patch passed |
| +1 | whitespace | 0 | The patch has no whitespace issues. |
| +1 | shadedclient | 790 | patch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 195 | the patch passed |
| +1 | findbugs | 629 | the patch passed |
||| _ Other Tests _ |
| -1 | unit | 367 | hadoop-hdds in the patch failed. |
| -1 | unit | 2607 | hadoop-ozone in the patch failed. |
| +1 | asflicense | 94 | The patch does not generate ASF License warnings. |
| | | 8769 | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdds.scm.block.TestBlockManager |
| | hadoop.ozone.container.common.statemachine.commandhandler.TestBlockDeletion |
| | hadoop.ozone.om.TestOzoneManager |
| | hadoop.hdds.scm.pipeline.TestRatisPipelineCreateAndDestory |
| | hadoop.ozone.container.common.impl.TestContainerPersistence |
| | hadoop.ozone.client.rpc.TestOzoneAtRestEncryption |
| | hadoop.ozone.om.TestOzoneManagerHA |
| | hadoop.ozone.TestMiniOzoneCluster |
| | hadoop.ozone.container.common.statemachine.commandhandler.TestCloseContainerByPipeline |
| | hadoop.ozone.client.rpc.TestReadRetries |
| | hadoop.ozone.ozShell.TestOzoneShell |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | Client=17.05.0-ce Server=17.05.0-ce base: https://builds.apache.org/job/hadoop-multibranch/job/PR-912/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/912 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle |
| uname | Linux 7d60aa1bbb08 4.4.0-138-generic #164-Ubuntu SMP Tue Oct 2 17:16:02 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | personality/hadoop.sh |
| git revision | trunk / 3b1c257 |
| Default Java | 1.8.0_212 |
| checkstyle | https://builds.apache.org/job/hadoop-multibranch/job/PR-912/2/artifact/out/diff-checkstyle-hadoop-hdds.txt |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-912/2/artifact/out/patch-unit-hadoop-hdds.txt |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-912/2/artifact/out/patch-unit-hadoop-ozone.txt |
| Test Results | https://builds.apache.org/job/hadoop-multibranch/job/PR-912/2/testReport/ |
| Max. process+thread count | 3198 (vs. ulimit of 5500) |
| modules | C: hadoop-hdds/container-service U: hadoop-hdds/container-service |
| Console output | https://builds.apache.org/job/hadoop-multibranch/job/PR-912/2/console |
| versions | git=2.7.4 maven=3.3.9 findbugs=3.1.0-RC1 |
| Powered by | Apache Yetus 0.10.0 http://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] hadoop-yetus commented on issue #916: HDDS-1652. HddsDispatcher should not shutdown volumeSet. Contributed …

hadoop-yetus commented on issue #916: HDDS-1652. HddsDispatcher should not shutdown volumeSet. Contributed …
URL: https://github.com/apache/hadoop/pull/916#issuecomment-499299860

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| 0 | reexec | 36 | Docker mode activated. |
||| _ Prechecks _ |
| +1 | dupname | 0 | No case conflicting files found. |
| +1 | @author | 0 | The patch does not contain any @author tags. |
| -1 | test4tests | 0 | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
||| _ trunk Compile Tests _ |
| 0 | mvndep | 25 | Maven dependency ordering for branch |
| +1 | mvninstall | 490 | trunk passed |
| +1 | compile | 294 | trunk passed |
| +1 | checkstyle | 90 | trunk passed |
| +1 | mvnsite | 0 | trunk passed |
| +1 | shadedclient | 879 | branch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 175 | trunk passed |
| 0 | spotbugs | 337 | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 | findbugs | 526 | trunk passed |
||| _ Patch Compile Tests _ |
| 0 | mvndep | 29 | Maven dependency ordering for patch |
| +1 | mvninstall | 465 | the patch passed |
| +1 | compile | 281 | the patch passed |
| +1 | javac | 281 | the patch passed |
| +1 | checkstyle | 83 | the patch passed |
| +1 | mvnsite | 0 | the patch passed |
| +1 | whitespace | 0 | The patch has no whitespace issues. |
| +1 | shadedclient | 685 | patch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 175 | the patch passed |
| +1 | findbugs | 536 | the patch passed |
||| _ Other Tests _ |
| +1 | unit | 229 | hadoop-hdds in the patch passed. |
| -1 | unit | 1612 | hadoop-ozone in the patch failed. |
| +1 | asflicense | 59 | The patch does not generate ASF License warnings. |
| | | 6864 | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.ozone.client.rpc.TestOzoneRpcClientWithRatis |
| | hadoop.ozone.client.rpc.TestOzoneAtRestEncryption |
| | hadoop.ozone.client.rpc.TestFailureHandlingByClient |
| | hadoop.ozone.client.rpc.TestOzoneRpcClient |
| | hadoop.ozone.client.rpc.TestSecureOzoneRpcClient |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | Client=17.05.0-ce Server=17.05.0-ce base: https://builds.apache.org/job/hadoop-multibranch/job/PR-916/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/916 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle |
| uname | Linux 2a664afc4c9d 4.4.0-138-generic #164-Ubuntu SMP Tue Oct 2 17:16:02 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | personality/hadoop.sh |
| git revision | trunk / 3b1c257 |
| Default Java | 1.8.0_212 |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-916/1/artifact/out/patch-unit-hadoop-ozone.txt |
| Test Results | https://builds.apache.org/job/hadoop-multibranch/job/PR-916/1/testReport/ |
| Max. process+thread count | 5404 (vs. ulimit of 5500) |
| modules | C: hadoop-hdds/container-service hadoop-ozone/tools U: . |
| Console output | https://builds.apache.org/job/hadoop-multibranch/job/PR-916/1/console |
| versions | git=2.7.4 maven=3.3.9 findbugs=3.1.0-RC1 |
| Powered by | Apache Yetus 0.10.0 http://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] bharatviswa504 commented on a change in pull request #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.

bharatviswa504 commented on a change in pull request #867: HDDS-1605. Implement

AuditLogging for OM HA Bucket write requests.

URL: https://github.com/apache/hadoop/pull/867#discussion_r290978411

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/request/bucket/OMBucketCreateRequest.java

##

@@ -152,27 +160,35 @@ public OMClientResponse validateAndUpdateCache(OzoneManager ozoneManager,
             OMException.ResultCodes.BUCKET_ALREADY_EXISTS);
       }

-      LOG.debug("created bucket: {} in volume: {}", bucketName, volumeName);
-      omMetrics.incNumBuckets();
-
       // Update table cache.
       metadataManager.getBucketTable().addCacheEntry(new CacheKey<>(bucketKey),
           new CacheValue<>(Optional.of(omBucketInfo), transactionLogIndex));

-      // return response.
+
+    } catch (IOException ex) {
+      exception = ex;
+    } finally {
+      metadataManager.getLock().releaseBucketLock(volumeName, bucketName);
+      metadataManager.getLock().releaseVolumeLock(volumeName);
+
+      // Performing audit logging outside of the lock.
+      auditLog(auditLogger, buildAuditMessage(OMAction.CREATE_BUCKET,
+          omBucketInfo.toAuditMap(), exception, userInfo));
+    }

Review comment:

Done. Addressed it.


[GitHub] [hadoop] bharatviswa504 commented on a change in pull request #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.

bharatviswa504 commented on a change in pull request #867: HDDS-1605. Implement

AuditLogging for OM HA Bucket write requests.

URL: https://github.com/apache/hadoop/pull/867#discussion_r290978023

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/request/bucket/OMBucketCreateRequest.java

##

@@ -152,27 +160,35 @@ public OMClientResponse validateAndUpdateCache(OzoneManager ozoneManager,
             OMException.ResultCodes.BUCKET_ALREADY_EXISTS);
       }

-      LOG.debug("created bucket: {} in volume: {}", bucketName, volumeName);
-      omMetrics.incNumBuckets();
-
       // Update table cache.
       metadataManager.getBucketTable().addCacheEntry(new CacheKey<>(bucketKey),
           new CacheValue<>(Optional.of(omBucketInfo), transactionLogIndex));

-      // return response.
+
+    } catch (IOException ex) {
+      exception = ex;
+    } finally {
+      metadataManager.getLock().releaseBucketLock(volumeName, bucketName);
+      metadataManager.getLock().releaseVolumeLock(volumeName);
+
+      // Performing audit logging outside of the lock.
+      auditLog(auditLogger, buildAuditMessage(OMAction.CREATE_BUCKET,
+          omBucketInfo.toAuditMap(), exception, userInfo));
+    }

Review comment:

Yes, it can be done. I will update it if you prefer it that way.


[GitHub] [hadoop] hanishakoneru commented on a change in pull request #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.

hanishakoneru commented on a change in pull request #867: HDDS-1605. Implement

AuditLogging for OM HA Bucket write requests.

URL: https://github.com/apache/hadoop/pull/867#discussion_r290977673

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/request/bucket/OMBucketCreateRequest.java

##

@@ -152,27 +160,35 @@ public OMClientResponse validateAndUpdateCache(OzoneManager ozoneManager,
             OMException.ResultCodes.BUCKET_ALREADY_EXISTS);
       }

-      LOG.debug("created bucket: {} in volume: {}", bucketName, volumeName);
-      omMetrics.incNumBuckets();
-
       // Update table cache.
       metadataManager.getBucketTable().addCacheEntry(new CacheKey<>(bucketKey),
           new CacheValue<>(Optional.of(omBucketInfo), transactionLogIndex));

-      // return response.
+
+    } catch (IOException ex) {
+      exception = ex;
+    } finally {
+      metadataManager.getLock().releaseBucketLock(volumeName, bucketName);
+      metadataManager.getLock().releaseVolumeLock(volumeName);
+
+      // Performing audit logging outside of the lock.
+      auditLog(auditLogger, buildAuditMessage(OMAction.CREATE_BUCKET,
+          omBucketInfo.toAuditMap(), exception, userInfo));
+    }

Review comment:

Yes, I meant: why not do the audit logging outside the finally block? Generally
we keep only the must-do things (like lock releases) in the finally block.
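
The arrangement suggested in this comment can be sketched as below. It is a simplified illustration, not the code from the pull request: `AuditAfterUnlock`, `createBucket` and `getAuditTrail` are hypothetical names, a `ReentrantLock` stands in for the OM metadata lock, and an in-memory list stands in for the audit logger.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

/** Hypothetical stand-in for the request handler; names are not from the patch. */
public class AuditAfterUnlock {
  private final ReentrantLock bucketLock = new ReentrantLock();
  private final List<String> auditTrail = new ArrayList<>();

  public boolean createBucket(String volume, String bucket, boolean alreadyExists) {
    IOException exception = null;
    bucketLock.lock();
    try {
      if (alreadyExists) {
        throw new IOException("BUCKET_ALREADY_EXISTS");
      }
      // The cache update would happen here, while the lock is held.
    } catch (IOException ex) {
      exception = ex;
    } finally {
      // Only the must-run cleanup lives in the finally block.
      bucketLock.unlock();
    }
    // Audit logging runs after the lock is released and outside finally.
    String result = (exception == null) ? "SUCCESS" : "FAILURE";
    auditTrail.add("CREATE_BUCKET " + volume + "/" + bucket + " " + result
        + " lockHeld=" + bucketLock.isHeldByCurrentThread());
    return exception == null;
  }

  public List<String> getAuditTrail() {
    return auditTrail;
  }

  public static void main(String[] args) {
    AuditAfterUnlock handler = new AuditAfterUnlock();
    handler.createBucket("vol1", "bucket1", false);
    handler.createBucket("vol1", "bucket1", true);
    handler.getAuditTrail().forEach(System.out::println);
  }
}
```

One trade-off to keep in mind: code placed after the finally block is skipped if an exception that is not caught (for example a RuntimeException) escapes the try block, whereas code inside finally would still run.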


[GitHub] [hadoop] hadoop-yetus commented on issue #915: HDDS-1650. Fix Ozone tests leaking volume checker thread. Contributed…

hadoop-yetus commented on issue #915: HDDS-1650. Fix Ozone tests leaking volume checker thread. Contributed…
URL: https://github.com/apache/hadoop/pull/915#issuecomment-499295177

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| 0 | reexec | 156 | Docker mode activated. |
||| _ Prechecks _ |
| +1 | dupname | 0 | No case conflicting files found. |
| +1 | @author | 0 | The patch does not contain any @author tags. |
| +1 | test4tests | 0 | The patch appears to include 6 new or modified test files. |
||| _ trunk Compile Tests _ |
| +1 | mvninstall | 670 | trunk passed |
| +1 | compile | 323 | trunk passed |
| +1 | checkstyle | 90 | trunk passed |
| +1 | mvnsite | 0 | trunk passed |
| +1 | shadedclient | 987 | branch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 174 | trunk passed |
| 0 | spotbugs | 376 | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 | findbugs | 603 | trunk passed |
||| _ Patch Compile Tests _ |
| +1 | mvninstall | 472 | the patch passed |
| +1 | compile | 277 | the patch passed |
| +1 | javac | 277 | the patch passed |
| +1 | checkstyle | 84 | the patch passed |
| +1 | mvnsite | 0 | the patch passed |
| +1 | whitespace | 0 | The patch has no whitespace issues. |
| +1 | shadedclient | 727 | patch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 163 | the patch passed |
| +1 | findbugs | 522 | the patch passed |
||| _ Other Tests _ |
| -1 | unit | 166 | hadoop-hdds in the patch failed. |
| -1 | unit | 1217 | hadoop-ozone in the patch failed. |
| +1 | asflicense | 50 | The patch does not generate ASF License warnings. |
| | | 6883 | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.ozone.container.common.impl.TestHddsDispatcher |
| | hadoop.ozone.client.rpc.TestBCSID |
| | hadoop.ozone.client.rpc.TestOzoneRpcClient |
| | hadoop.ozone.client.rpc.TestSecureOzoneRpcClient |
| | hadoop.ozone.client.rpc.TestOzoneRpcClientWithRatis |
| | hadoop.ozone.client.rpc.TestOzoneAtRestEncryption |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | Client=18.09.5 Server=18.09.5 base: https://builds.apache.org/job/hadoop-multibranch/job/PR-915/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/915 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle |
| uname | Linux 6fb421f8a9b4 4.15.0-48-generic #51-Ubuntu SMP Wed Apr 3 08:28:49 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | personality/hadoop.sh |
| git revision | trunk / 3b1c257 |
| Default Java | 1.8.0_212 |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-915/1/artifact/out/patch-unit-hadoop-hdds.txt |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-915/1/artifact/out/patch-unit-hadoop-ozone.txt |
| Test Results | https://builds.apache.org/job/hadoop-multibranch/job/PR-915/1/testReport/ |
| Max. process+thread count | 4416 (vs. ulimit of 5500) |
| modules | C: hadoop-hdds/container-service U: hadoop-hdds/container-service |
| Console output | https://builds.apache.org/job/hadoop-multibranch/job/PR-915/1/console |
| versions | git=2.7.4 maven=3.3.9 findbugs=3.1.0-RC1 |
| Powered by | Apache Yetus 0.10.0 http://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] hadoop-yetus commented on issue #703: HDDS-1371. Download RocksDB checkpoint from OM Leader to Follower.

hadoop-yetus commented on issue #703: HDDS-1371. Download RocksDB checkpoint from OM Leader to Follower.
URL: https://github.com/apache/hadoop/pull/703#issuecomment-499291766

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| 0 | reexec | 47 | Docker mode activated. |
||| _ Prechecks _ |
| +1 | dupname | 1 | No case conflicting files found. |
| +1 | @author | 0 | The patch does not contain any @author tags. |
| +1 | test4tests | 0 | The patch appears to include 2 new or modified test files. |
||| _ trunk Compile Tests _ |
| 0 | mvndep | 24 | Maven dependency ordering for branch |
| +1 | mvninstall | 540 | trunk passed |
| +1 | compile | 309 | trunk passed |
| +1 | checkstyle | 84 | trunk passed |
| +1 | mvnsite | 0 | trunk passed |
| +1 | shadedclient | 941 | branch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 167 | trunk passed |
| 0 | spotbugs | 365 | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 | findbugs | 567 | trunk passed |
||| _ Patch Compile Tests _ |
| 0 | mvndep | 34 | Maven dependency ordering for patch |
| +1 | mvninstall | 507 | the patch passed |
| +1 | compile | 331 | the patch passed |
| +1 | javac | 331 | the patch passed |
| -0 | checkstyle | 44 | hadoop-ozone: The patch generated 1 new + 0 unchanged - 0 fixed = 1 total (was 0) |
| +1 | mvnsite | 0 | the patch passed |
| +1 | whitespace | 0 | The patch has no whitespace issues. |
| +1 | xml | 2 | The patch has no ill-formed XML file. |
| +1 | shadedclient | 797 | patch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 199 | the patch passed |
| +1 | findbugs | 584 | the patch passed |
||| _ Other Tests _ |
| +1 | unit | 251 | hadoop-hdds in the patch passed. |
| -1 | unit | 1293 | hadoop-ozone in the patch failed. |
| +1 | asflicense | 54 | The patch does not generate ASF License warnings. |
| | | 7000 | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.ozone.client.rpc.TestBCSID |
| | hadoop.ozone.client.rpc.TestOzoneRpcClientWithRatis |
| | hadoop.ozone.TestSecureOzoneCluster |
| | hadoop.ozone.client.rpc.TestWatchForCommit |
| | hadoop.ozone.om.TestOzoneManagerHA |
| | hadoop.ozone.TestOzoneConfigurationFields |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | Client=17.05.0-ce Server=17.05.0-ce base: https://builds.apache.org/job/hadoop-multibranch/job/PR-703/8/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/703 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle xml |
| uname | Linux 78c75eb7ce10 4.4.0-141-generic #167~14.04.1-Ubuntu SMP Mon Dec 10 13:20:24 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | personality/hadoop.sh |
| git revision | trunk / 0b1e288 |
| Default Java | 1.8.0_212 |
| checkstyle | https://builds.apache.org/job/hadoop-multibranch/job/PR-703/8/artifact/out/diff-checkstyle-hadoop-ozone.txt |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-703/8/artifact/out/patch-unit-hadoop-ozone.txt |
| Test Results | https://builds.apache.org/job/hadoop-multibranch/job/PR-703/8/testReport/ |
| Max. process+thread count | 4197 (vs. ulimit of 5500) |
| modules | C: hadoop-hdds/common hadoop-hdds/framework hadoop-ozone/client hadoop-ozone/common hadoop-ozone/integration-test hadoop-ozone/ozone-manager hadoop-ozone/ozone-recon U: . |
| Console output | https://builds.apache.org/job/hadoop-multibranch/job/PR-703/8/console |
| versions | git=2.7.4 maven=3.3.9 findbugs=3.1.0-RC1 |
| Powered by | Apache Yetus 0.10.0 http://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] hadoop-yetus commented on issue #914: HDDS-1647 : Recon config tag does not show up on Ozone UI.

hadoop-yetus commented on issue #914: HDDS-1647 : Recon config tag does not show up on Ozone UI.
URL: https://github.com/apache/hadoop/pull/914#issuecomment-499289367

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| 0 | reexec | 44 | Docker mode activated. |
||| _ Prechecks _ |
| +1 | dupname | 0 | No case conflicting files found. |
| +1 | @author | 0 | The patch does not contain any @author tags. |
| -1 | test4tests | 0 | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
||| _ trunk Compile Tests _ |
| +1 | mvninstall | 528 | trunk passed |
| +1 | compile | 297 | trunk passed |
| +1 | mvnsite | 0 | trunk passed |
| +1 | shadedclient | 1674 | branch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 167 | trunk passed |
||| _ Patch Compile Tests _ |
| +1 | mvninstall | 470 | the patch passed |
| +1 | compile | 290 | the patch passed |
| +1 | javac | 290 | the patch passed |
| +1 | mvnsite | 0 | the patch passed |
| +1 | whitespace | 0 | The patch has no whitespace issues. |
| +1 | xml | 1 | The patch has no ill-formed XML file. |
| +1 | shadedclient | 747 | patch has no errors when building and testing our client artifacts. |
| +1 | javadoc | 169 | the patch passed |
||| _ Other Tests _ |
| +1 | unit | 264 | hadoop-hdds in the patch passed. |
| -1 | unit | 1987 | hadoop-ozone in the patch failed. |
| +1 | asflicense | 48 | The patch does not generate ASF License warnings. |
| | | 6067 | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.ozone.om.TestScmSafeMode |
| | hadoop.ozone.om.TestOzoneManagerHA |
| | hadoop.hdds.scm.pipeline.TestRatisPipelineProvider |
| | hadoop.ozone.client.rpc.TestBCSID |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | Client=17.05.0-ce Server=17.05.0-ce base: https://builds.apache.org/job/hadoop-multibranch/job/PR-914/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/914 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient xml |
| uname | Linux 8f64a2330199 4.4.0-144-generic #170~14.04.1-Ubuntu SMP Mon Mar 18 15:02:05 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | personality/hadoop.sh |
| git revision | trunk / 0b1e288 |
| Default Java | 1.8.0_212 |
| unit | https://builds.apache.org/job/hadoop-multibranch/job/PR-914/1/artifact/out/patch-unit-hadoop-ozone.txt |
| Test Results | https://builds.apache.org/job/hadoop-multibranch/job/PR-914/1/testReport/ |
| Max. process+thread count | 5165 (vs. ulimit of 5500) |
| modules | C: hadoop-hdds/common U: hadoop-hdds/common |
| Console output | https://builds.apache.org/job/hadoop-multibranch/job/PR-914/1/console |
| versions | git=2.7.4 maven=3.3.9 |
| Powered by | Apache Yetus 0.10.0 http://yetus.apache.org |

This message was automatically generated.

[jira] [Commented] (HADOOP-16314) Make sure all end point URL is covered by the same AuthenticationFilter

[ https://issues.apache.org/jira/browse/HADOOP-16314?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16857143#comment-16857143 ]

Hudson commented on HADOOP-16314:
-

SUCCESS: Integrated in Jenkins build Hadoop-trunk-Commit #16683 (See [https://builds.apache.org/job/Hadoop-trunk-Commit/16683/])
HADOOP-16314. Make sure all web end points are covered by the same (eyang: rev 294695dd57cb75f2756a31a54264bdd37b32bb01)
* (add) hadoop-common-project/hadoop-common/src/test/java/org/apache/hadoop/http/TestHttpServerWithSpnego.java
* (edit) hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/web/TestWebHdfsWithAuthenticationFilter.java
* (edit) hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/http/HttpServer2.java
* (edit) hadoop-common-project/hadoop-common/src/test/java/org/apache/hadoop/log/TestLogLevel.java
* (edit) hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-resourcemanager/src/main/java/org/apache/hadoop/yarn/server/resourcemanager/webapp/RMWebAppUtil.java
* (edit) hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-common/src/main/java/org/apache/hadoop/yarn/server/util/timeline/TimelineServerUtils.java
* (edit) hadoop-common-project/hadoop-common/src/site/markdown/HttpAuthentication.md
* (edit) hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/qjournal/TestSecureNNWithQJM.java
* (edit) hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/TestDFSInotifyEventInputStreamKerberized.java
* (edit) hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/NameNodeHttpServer.java
* (edit) hadoop-common-project/hadoop-common/src/test/java/org/apache/hadoop/http/TestServletFilter.java
* (edit) hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-web-proxy/src/test/java/org/apache/hadoop/yarn/server/webproxy/amfilter/TestSecureAmFilter.java
* (add) hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/http/WebServlet.java
* (edit) hadoop-common-project/hadoop-common/src/test/java/org/apache/hadoop/http/TestGlobalFilter.java
* (edit) hadoop-common-project/hadoop-common/src/test/java/org/apache/hadoop/http/TestPathFilter.java
* (edit) hadoop-yarn-project/hadoop-yarn/hadoop-yarn-common/src/main/java/org/apache/hadoop/yarn/webapp/Dispatcher.java
* (edit) hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-timelineservice/src/main/java/org/apache/hadoop/yarn/server/timelineservice/reader/TimelineReaderServer.java
* (edit) hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/web/TestWebHdfsTokens.java

> Make sure all end point URL is covered by the same AuthenticationFilter
> ---
>
> Key: HADOOP-16314
> URL: https://issues.apache.org/jira/browse/HADOOP-16314
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: security
> Reporter: Eric Yang
> Assignee: Prabhu Joseph
> Priority: Major
> Fix For: 3.3.0
>
> Attachments: HADOOP-16314-001.patch, HADOOP-16314-002.patch,
> HADOOP-16314-003.patch, HADOOP-16314-004.patch, HADOOP-16314-005.patch,
> HADOOP-16314-006.patch, HADOOP-16314-007.patch, Hadoop Web Security.xlsx,
> scan.txt
>
>
> The enclosed spreadsheet lists the web applications deployed by Hadoop
> and the filters applied to each entry point.
> Hadoop web protocol impersonation has been inconsistent. Most entry points
> do not support the ?doAs parameter. This creates a problem for a secure
> gateway like Knox that proxies the Hadoop web interface on behalf of the end
> user. When the receiving end does not check for the ?doAs flag, the web
> interface is accessed using the proxy user's credentials. This can lead to
> all kinds of security holes that use path traversal to exploit Hadoop.
> In HADOOP-16287, ProxyUserAuthenticationFilter is proposed as a solution to
> the web impersonation problem. This task tracks the changes required in the
> Hadoop code base to apply the authentication filter globally to each web
> service port.

--
This message was sent by Atlassian JIRA (v7.6.3#76005)

-
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org
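
The impersonation gap described in the HADOOP-16314 message above can be illustrated with a small sketch. This is not the ProxyUserAuthenticationFilter implementation; the class name `DoAsSketch`, the method `effectiveUser`, and the simple allow list of proxy users are all assumptions made for illustration. It only shows the idea that an endpoint which ignores `doAs` ends up running the request as the gateway's own user, whereas an endpoint that honors it runs as the end user, provided the caller is permitted to impersonate.

```java
import java.util.Collections;
import java.util.Set;

// Hypothetical sketch; names and rules are illustrative, not Hadoop's API.
final class DoAsSketch {

  /**
   * Decides which user a web request should run as.
   *
   * @param authenticatedUser user established by authentication,
   *                          for example the gateway's service user
   * @param doAs              value of the doAs query parameter, or null
   * @param proxyUsers        assumed allow list of users that may impersonate
   */
  public static String effectiveUser(String authenticatedUser, String doAs,
      Set<String> proxyUsers) {
    if (doAs == null || doAs.isEmpty()) {
      // No impersonation requested: run as the authenticated caller.
      return authenticatedUser;
    }
    if (!proxyUsers.contains(authenticatedUser)) {
      // Only configured proxy users may act on behalf of someone else.
      throw new SecurityException(
          authenticatedUser + " is not allowed to impersonate " + doAs);
    }
    return doAs;
  }

  public static void main(String[] args) {
    Set<String> proxyUsers = Collections.singleton("knox");
    // The gateway authenticates as "knox" and asks to act as "alice".
    System.out.println(effectiveUser("knox", "alice", proxyUsers));
    // A direct request without doAs runs as the caller.
    System.out.println(effectiveUser("alice", null, proxyUsers));
  }
}
```

An endpoint that skipped this check entirely would behave like the first branch for every request, which is the inconsistency the issue sets out to remove by applying one filter to every web service port.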

[GitHub] [hadoop] bharatviswa504 commented on a change in pull request #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.

bharatviswa504 commented on a change in pull request #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.
URL: https://github.com/apache/hadoop/pull/867#discussion_r290969230

##
File path: hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/request/bucket/OMBucketCreateRequest.java
##

@@ -152,27 +160,35 @@ public OMClientResponse validateAndUpdateCache(OzoneManager ozoneManager,
             OMException.ResultCodes.BUCKET_ALREADY_EXISTS);
       }
-      LOG.debug("created bucket: {} in volume: {}", bucketName, volumeName);
-      omMetrics.incNumBuckets();
-
       // Update table cache.
       metadataManager.getBucketTable().addCacheEntry(new CacheKey<>(bucketKey),
           new CacheValue<>(Optional.of(omBucketInfo), transactionLogIndex));
-      // return response.
+
+    } catch (IOException ex) {
+      exception = ex;
+    } finally {
+      metadataManager.getLock().releaseBucketLock(volumeName, bucketName);
+      metadataManager.getLock().releaseVolumeLock(volumeName);
+
+      // Performing audit logging outside of the lock.
+      auditLog(auditLogger, buildAuditMessage(OMAction.CREATE_BUCKET,
+          omBucketInfo.toAuditMap(), exception, userInfo));
+    }

Review comment:

Yes, the intent is to avoid logging or other expensive work inside the lock. Once the response has been added to the cache, we can release the lock so that other threads waiting for it can acquire it. (That way we should not later find that audit logging is causing performance issues.)

As a side note, doing audit logging outside the lock should not cause any side effects, in my view. Let me know if I am missing anything here.

Edit:

Now I get it: we could also do this outside the finally block. But I think it should be fine either way.
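
The ordering discussed in this review comment can be sketched in isolation. The following is a minimal, self-contained illustration, not the Ozone Manager code: `AuditOutsideLockSketch`, `createBucket`, the single `ReentrantLock`, and the event list standing in for the cache and the audit logger are all hypothetical. It shows the variant mentioned in the edit note, where the lock is released in `finally` and the audit entry is written after the whole try/catch/finally statement.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch; it only mirrors the ordering discussed in the review.
final class AuditOutsideLockSketch {

  // Stand-in for the volume/bucket locks held during the cache update.
  private static final ReentrantLock BUCKET_LOCK = new ReentrantLock();

  /** Returns the ordered list of steps so the sequencing can be inspected. */
  public static List<String> createBucket(String volume, String bucket,
      boolean alreadyExists) {
    List<String> events = new ArrayList<>();
    IOException exception = null;

    BUCKET_LOCK.lock();
    events.add("lock");
    try {
      if (alreadyExists) {
        throw new IOException(
            "bucket " + volume + "/" + bucket + " already exists");
      }
      // Cheap, in-memory work that must happen under the lock.
      events.add("cache-update");
    } catch (IOException ex) {
      // Remember the failure so it can be audited after the lock is released.
      exception = ex;
    } finally {
      BUCKET_LOCK.unlock();
      events.add("unlock");
    }

    // Potentially slow audit logging runs after the lock has been released.
    String status = (exception == null) ? "SUCCESS" : "FAILURE";
    events.add("audit:" + status
        + ":lockHeld=" + BUCKET_LOCK.isHeldByCurrentThread());
    return events;
  }

  public static void main(String[] args) {
    System.out.println(createBucket("vol1", "bucket1", false));
    System.out.println(createBucket("vol1", "bucket1", true));
  }
}
```

Placing the audit call as the last statement of the `finally` block, after both releases, gives the same ordering; moving it after the statement mainly keeps `finally` limited to cleanup.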

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] hanishakoneru commented on a change in pull request #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.

hanishakoneru commented on a change in pull request #867: HDDS-1605. Implement AuditLogging for OM HA Bucket write requests.
URL: https://github.com/apache/hadoop/pull/867#discussion_r290968001

##
File path: hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/request/bucket/OMBucketCreateRequest.java
##

@@ -152,27 +160,35 @@ public OMClientResponse

validateAndUpdateCache(OzoneManager ozoneManager,

OMException.ResultCodes.BUCKET_ALREADY_EXISTS);

}

- LOG.debug("created bucket: {} in volume: {}", bucketName, volumeName);

- omMetrics.incNumBuckets();

-

// Update table cache.

metadataManager.getBucketTable().addCacheEntry(new CacheKey<>(bucketKey),

new CacheValue<>(Optional.of(omBucketInfo), transactionLogIndex));

- // return response.

+

+} catch (IOException ex) {

+ exception = ex;

+} finally {

+ metadataManager.getLock().releaseBucketLock(volumeName, bucketName);

+ metadataManager.getLock().releaseVolumeLock(volumeName);

+

+ // Performing audit logging outside of the lock.