[GitHub] [spark] ulysses-you commented on pull request #30029: [SPARK-33131][SQL] Fix grouping sets with having clause can not resolve qualified col name

ulysses-you commented on pull request #30029: URL: https://github.com/apache/spark/pull/30029#issuecomment-708171740 @maropu @cloud-fan do you have time to take a look ? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on pull request #30011: [WIP][SPARK-32281][SQL] Spark keep SORTED spec in metastore

cloud-fan commented on pull request #30011: URL: https://github.com/apache/spark/pull/30011#issuecomment-708167152 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

HeartSaVioR commented on pull request #30033: URL: https://github.com/apache/spark/pull/30033#issuecomment-708160066 >> We can't get the information about the reason why the operator is considered as unresolved > This is a good point. CheckAnalysis provides better error messages for many cases, and the unresolved operator error is the last resort. I think we should provide better error message for this case as well. Yeah. It looks to be a bit tricky to generalize as any operator can make a decision on resolvable arbitrary. Probably the operator needs to have some method to provide additional information on `resolve` so that Analyzer can call to print out? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

HeartSaVioR commented on a change in pull request #30033:

URL: https://github.com/apache/spark/pull/30033#discussion_r504403294

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/v2Commands.scala

##

@@ -45,7 +45,7 @@ trait V2WriteCommand extends Command {

case (inAttr, outAttr) =>

// names and types must match, nullability must be compatible

inAttr.name == outAttr.name &&

- DataType.equalsIgnoreCompatibleNullability(outAttr.dataType,

inAttr.dataType) &&

+ DataType.equalsIgnoreCompatibleNullability(inAttr.dataType,

outAttr.dataType) &&

Review comment:

The usage of AppendData is reverted in

https://github.com/apache/spark/commit/b6e4aca0be7f3b863c326063a3c02aa8a1c266a3

for branch-2.4 and shipped to Spark 2.4.0. So while the code in AppendData is

broken, it's a dead code.

We seem to have three options: 1) revert remaining part of AppendData in

branch-2.4 2) fix the code but leave it as dead 3) leave it as it is. What's

our preference?

cc. @cloud-fan @HyukjinKwon

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #30011: [WIP][SPARK-32281][SQL] Spark keep SORTED spec in metastore

AngersZh commented on pull request #30011: URL: https://github.com/apache/spark/pull/30011#issuecomment-708158773 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

HeartSaVioR commented on a change in pull request #30033:

URL: https://github.com/apache/spark/pull/30033#discussion_r504403294

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/v2Commands.scala

##

@@ -45,7 +45,7 @@ trait V2WriteCommand extends Command {

case (inAttr, outAttr) =>

// names and types must match, nullability must be compatible

inAttr.name == outAttr.name &&

- DataType.equalsIgnoreCompatibleNullability(outAttr.dataType,

inAttr.dataType) &&

+ DataType.equalsIgnoreCompatibleNullability(inAttr.dataType,

outAttr.dataType) &&

Review comment:

The usage of AppendData is reverted in

https://github.com/apache/spark/commit/b6e4aca0be7f3b863c326063a3c02aa8a1c266a3

for branch-2.4 and shipped to Spark 2.4.0. So while the code in AppendData for

branch-2.4 is broken as well, it's a dead code.

We seem to have three options: 1) revert remaining part of AppendData in

branch-2.4 2) fix the code but leave it as dead 3) leave it as it is. What's

our preference?

cc. @cloud-fan @HyukjinKwon

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

AngersZh commented on a change in pull request #30027:

URL: https://github.com/apache/spark/pull/30027#discussion_r504402399

##

File path:

sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveParquetSourceSuite.scala

##

@@ -230,6 +230,13 @@ class HiveParquetSourceSuite extends

ParquetPartitioningTest {

withTempPath { path =>

withTable("parq_tbl1", "parq_tbl2", "parq_tbl3",

"tbl1", "tbl2", "tbl3", "tbl4", "tbl5", "tbl6") {

+

+def errorMsg(path: String): String = {

Review comment:

Done

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

AngersZh commented on a change in pull request #30027:

URL: https://github.com/apache/spark/pull/30027#discussion_r504402354

##

File path: core/src/main/scala/org/apache/spark/rdd/HadoopRDD.scala

##

@@ -232,6 +232,11 @@ class HadoopRDD[K, V](

logWarning(s"${jobConf.get(FileInputFormat.INPUT_DIR)} doesn't exist

and no" +

s" partitions returned from this path.", e)

Array.empty[Partition]

+ case e: IOException if e.getMessage.contains("Not a file") =>

+val path = e.getMessage.split(":").map(_.trim).apply(2)

+throw new IOException(s"Path: ${path} is a directory, it is not" +

+ s" allowed for `serde` reader when" +

Review comment:

> How about:

>

> ```

> s"Path: ${path} is a directory, which is not supported by the record

reader when `mapreduce.input.fileinputformat.input.dir.recursive` is false."

> ```

updated

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

HyukjinKwon commented on pull request #30027: URL: https://github.com/apache/spark/pull/30027#issuecomment-708156797 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #29818: [WIP][SPARK-32953][PYTHON] Add Arrow self_destruct support to toPandas

HyukjinKwon commented on pull request #29818: URL: https://github.com/apache/spark/pull/29818#issuecomment-708156469 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #29818: [WIP][SPARK-32953][PYTHON] Add Arrow self_destruct support to toPandas

AmplabJenkins removed a comment on pull request #29818: URL: https://github.com/apache/spark/pull/29818#issuecomment-696117614 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #29818: [WIP][SPARK-32953][PYTHON] Add Arrow self_destruct support to toPandas

HyukjinKwon commented on a change in pull request #29818: URL: https://github.com/apache/spark/pull/29818#discussion_r504401223 ## File path: python/pyspark/sql/pandas/serializers.py ## @@ -90,7 +90,10 @@ def load_stream(self, stream): import pyarrow as pa reader = pa.ipc.open_stream(stream) for batch in reader: -yield batch +split_batch = pa.RecordBatch.from_arrays([ +pa.concat_arrays([array]) for array in batch +], schema=batch.schema) Review comment: Sorry for asking a question without taking a close look but would you mind elaborating why we should do this? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #29818: [WIP][SPARK-32953][PYTHON] Add Arrow self_destruct support to toPandas

HyukjinKwon commented on a change in pull request #29818:

URL: https://github.com/apache/spark/pull/29818#discussion_r504400977

##

File path: python/pyspark/sql/pandas/conversion.py

##

@@ -103,10 +103,18 @@ def toPandas(self):

batches = self.toDF(*tmp_column_names)._collect_as_arrow()

if len(batches) > 0:

table = pyarrow.Table.from_batches(batches)

+del batches

# Pandas DataFrame created from PyArrow uses

datetime64[ns] for date type

# values, but we should use datetime.date to match the

behavior with when

# Arrow optimization is disabled.

-pdf = table.to_pandas(date_as_object=True)

+pandas_options = {'date_as_object': True}

+if self_destruct:

+pandas_options.update({

+'self_destruct': True,

Review comment:

Would you mind leaving a comment on the codes about this set of

parameter configurations?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #29818: [WIP][SPARK-32953][PYTHON] Add Arrow self_destruct support to toPandas

HyukjinKwon commented on a change in pull request #29818: URL: https://github.com/apache/spark/pull/29818#discussion_r504400867 ## File path: python/pyspark/sql/pandas/conversion.py ## @@ -34,7 +34,7 @@ class PandasConversionMixin(object): """ @since(1.3) -def toPandas(self): +def toPandas(self, self_destruct=False): Review comment: Let's name it as `selfDestruct` to make the naming role consistent. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gengliangwang commented on pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

gengliangwang commented on pull request #30027: URL: https://github.com/apache/spark/pull/30027#issuecomment-708154528 LGTM otherwise. Thanks for doing this work. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gengliangwang commented on a change in pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

gengliangwang commented on a change in pull request #30027:

URL: https://github.com/apache/spark/pull/30027#discussion_r504399096

##

File path:

sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveParquetSourceSuite.scala

##

@@ -230,6 +230,13 @@ class HiveParquetSourceSuite extends

ParquetPartitioningTest {

withTempPath { path =>

withTable("parq_tbl1", "parq_tbl2", "parq_tbl3",

"tbl1", "tbl2", "tbl3", "tbl4", "tbl5", "tbl6") {

+

+def errorMsg(path: String): String = {

Review comment:

nit: `checkErrorMsg`

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gengliangwang commented on a change in pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

gengliangwang commented on a change in pull request #30027:

URL: https://github.com/apache/spark/pull/30027#discussion_r504398990

##

File path: core/src/main/scala/org/apache/spark/rdd/HadoopRDD.scala

##

@@ -232,6 +232,11 @@ class HadoopRDD[K, V](

logWarning(s"${jobConf.get(FileInputFormat.INPUT_DIR)} doesn't exist

and no" +

s" partitions returned from this path.", e)

Array.empty[Partition]

+ case e: IOException if e.getMessage.contains("Not a file") =>

+val path = e.getMessage.split(":").map(_.trim).apply(2)

+throw new IOException(s"Path: ${path} is a directory, it is not" +

+ s" allowed for `serde` reader when" +

Review comment:

How about:

```

s"Path: ${path} is a directory, which is not supported by the record reader

when `mapreduce.input.fileinputformat.input.dir.recursive` is false."

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #29831: [SPARK-32351][SQL] Show partially pushed down partition filters in explain()

cloud-fan commented on a change in pull request #29831:

URL: https://github.com/apache/spark/pull/29831#discussion_r504397253

##

File path:

sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/PrunePartitionSuiteBase.scala

##

@@ -46,30 +47,59 @@ abstract class PrunePartitionSuiteBase extends QueryTest

with SQLTestUtils with

}

assertPrunedPartitions(

- "SELECT * FROM t WHERE p = '1' OR (p = '2' AND i = 1)", 2)

+ "SELECT * FROM t WHERE p = '1' OR (p = '2' AND i = 1)", 2,

+ Set("`p`='1'", "`p`='2'"))

assertPrunedPartitions(

- "SELECT * FROM t WHERE (p = '1' AND i = 2) OR (i = 1 OR p = '2')", 4)

+ "SELECT * FROM t WHERE (p = '1' AND i = 2) OR (i = 1 OR p = '2')", 4,

+ Set())

assertPrunedPartitions(

- "SELECT * FROM t WHERE (p = '1' AND i = 2) OR (p = '3' AND i = 3 )",

2)

+ "SELECT * FROM t WHERE (p = '1' AND i = 2) OR (p = '3' AND i = 3 )",

2,

+ Set("`p`='1'", "`p`='3'"))

assertPrunedPartitions(

- "SELECT * FROM t WHERE (p = '1' AND i = 2) OR (p = '2' OR p = '3')",

3)

+ "SELECT * FROM t WHERE (p = '1' AND i = 2) OR (p = '2' OR p = '3')",

3,

+ Set("`p`='1'", "`p`='2'", "`p`='3'"))

assertPrunedPartitions(

- "SELECT * FROM t", 4)

+ "SELECT * FROM t", 4,

+ Set())

assertPrunedPartitions(

- "SELECT * FROM t WHERE p = '1' AND i = 2", 1)

+ "SELECT * FROM t WHERE p = '1' AND i = 2", 1,

+ Set("`p`='1'"))

assertPrunedPartitions(

"""

|SELECT i, COUNT(1) FROM (

|SELECT * FROM t WHERE p = '1' OR (p = '2' AND i = 1)

|) tmp GROUP BY i

- """.stripMargin, 2)

+ """.stripMargin, 2, Set("`p`='1'", "`p`='2'"))

}

}

}

- protected def assertPrunedPartitions(query: String, expected: Long): Unit = {

-val plan = sql(query).queryExecution.sparkPlan

-assert(getScanExecPartitionSize(plan) == expected)

+ private def collectEqualExp(

+ expression: Expression,

+ currentSet: Set[String]): Set[String] = expression match {

+case e: EqualTo =>

+ currentSet + (e.left.sql + e.symbol + e.right.sql)

+.replaceAll("spark_catalog.default.t.", "")

+case _ =>

+ expression.children.foldLeft(currentSet)((s, exp) =>

collectEqualExp(exp, s))

Review comment:

can we put `and`, `or` in the result? I think the test should check

`and/or` as well, not just the leaf predicates.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #29831: [SPARK-32351][SQL] Show partially pushed down partition filters in explain()

cloud-fan commented on a change in pull request #29831:

URL: https://github.com/apache/spark/pull/29831#discussion_r504397419

##

File path:

sql/hive/src/main/scala/org/apache/spark/sql/hive/HiveStrategies.scala

##

@@ -256,20 +256,21 @@ private[hive] trait HiveStrategies {

*/

object HiveTableScans extends Strategy {

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

- case ScanOperation(projectList, predicates, relation: HiveTableRelation)

=>

+ case ScanOperation(projectList, filters, relation: HiveTableRelation) =>

// Filter out all predicates that only deal with partition keys, these

are given to the

// hive table scan operator to be used for partition pruning.

val partitionKeyIds = AttributeSet(relation.partitionCols)

-val (pruningPredicates, otherPredicates) = predicates.partition {

predicate =>

- !predicate.references.isEmpty &&

- predicate.references.subsetOf(partitionKeyIds)

-}

+val normalizedFilters = DataSourceStrategy.normalizeExprs(

+ filters.filter(_.deterministic), relation.output)

+

+val partitionKeyFilters =

DataSourceStrategy.getPushedDownFilters(relation.partitionCols,

+ normalizedFilters)

pruneFilterProject(

projectList,

- otherPredicates,

+ filters.filter(f => f.references.isEmpty ||

!f.references.subsetOf(partitionKeyIds)),

Review comment:

It's OK for now to be the same as before, but it's better to make it

consistent with `FileSourceStrategy`. We can do it in followup.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] maropu commented on a change in pull request #29933: [SPARK-26533][SQL] Support query auto timeout cancel on thriftserver

maropu commented on a change in pull request #29933:

URL: https://github.com/apache/spark/pull/29933#discussion_r504391675

##

File path:

sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkExecuteStatementOperation.scala

##

@@ -45,11 +45,18 @@ private[hive] class SparkExecuteStatementOperation(

parentSession: HiveSession,

statement: String,

confOverlay: JMap[String, String],

-runInBackground: Boolean = true)

+runInBackground: Boolean = true,

+queryTimeout: Long)

extends ExecuteStatementOperation(parentSession, statement, confOverlay,

runInBackground)

with SparkOperation

with Logging {

+ private val queryTimeoutValue = if (queryTimeout <= 0) {

Review comment:

Ah, that's what I was looking for. Thanks! @AngersZh @HyukjinKwon

Updated, could you check the latest fix?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #26194: [SPARK-29536][PYTHON] Upgrade cloudpickle to 1.1.1 to support Python 3.8

HyukjinKwon commented on pull request #26194: URL: https://github.com/apache/spark/pull/26194#issuecomment-708132184 Thanks @dongjoon-hyun. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on pull request #26194: [SPARK-29536][PYTHON] Upgrade cloudpickle to 1.1.1 to support Python 3.8

dongjoon-hyun commented on pull request #26194: URL: https://github.com/apache/spark/pull/26194#issuecomment-708131463 I updated SPARK-29536 by adding `2.4.7` into `Affected Versions`. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon closed pull request #30032: [SPARK-33134][SQL][3.0] Return partial results only for root JSON objects

HyukjinKwon closed pull request #30032: URL: https://github.com/apache/spark/pull/30032 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #30032: [SPARK-33134][SQL][3.0] Return partial results only for root JSON objects

HyukjinKwon commented on pull request #30032: URL: https://github.com/apache/spark/pull/30032#issuecomment-708129068 Merged to branch-3.0. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon closed pull request #30031: [SPARK-33134][SQL] Return partial results only for root JSON objects

HyukjinKwon closed pull request #30031: URL: https://github.com/apache/spark/pull/30031 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #29972: [SPARK-33081][SQL] Support ALTER TABLE in JDBC v2 Table Catalog: update type and nullability of columns (DB2 dialect)

cloud-fan commented on a change in pull request #29972:

URL: https://github.com/apache/spark/pull/29972#discussion_r504374789

##

File path:

external/docker-integration-tests/src/test/scala/org/apache/spark/sql/jdbc/v2/V2JDBCTest.scala

##

@@ -0,0 +1,98 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.jdbc.v2

+

+import org.apache.spark.sql.AnalysisException

+import org.apache.spark.sql.test.SharedSparkSession

+import org.apache.spark.sql.types._

+import org.apache.spark.tags.DockerTest

+

+@DockerTest

+trait V2JDBCTest extends SharedSparkSession {

+ val catalogName: String

+ // dialect specific update column type test

+ def testUpdateColumnType(tbl: String): Unit

+

+ test("SPARK-33034: ALTER TABLE ... add new columns") {

+withTable(s"$catalogName.alt_table") {

+ sql(s"CREATE TABLE $catalogName.alt_table (ID STRING) USING _")

+ var t = spark.table(s"$catalogName.alt_table")

+ var expectedSchema = new StructType().add("ID", StringType)

+ assert(t.schema === expectedSchema)

+ sql(s"ALTER TABLE $catalogName.alt_table ADD COLUMNS (C1 STRING, C2

STRING)")

+ t = spark.table(s"$catalogName.alt_table")

+ expectedSchema = expectedSchema.add("C1", StringType).add("C2",

StringType)

+ assert(t.schema === expectedSchema)

+ sql(s"ALTER TABLE $catalogName.alt_table ADD COLUMNS (C3 STRING)")

+ t = spark.table(s"$catalogName.alt_table")

+ expectedSchema = expectedSchema.add("C3", StringType)

+ assert(t.schema === expectedSchema)

+ // Add already existing column

+ val msg = intercept[AnalysisException] {

+sql(s"ALTER TABLE $catalogName.alt_table ADD COLUMNS (C3 DOUBLE)")

+ }.getMessage

+ assert(msg.contains("Cannot add column, because C3 already exists"))

+}

+// Add a column to not existing table

+val msg = intercept[AnalysisException] {

+ sql(s"ALTER TABLE $catalogName.not_existing_table ADD COLUMNS (C4

STRING)")

+}.getMessage

+assert(msg.contains("Table not found"))

+ }

+

+ test("SPARK-33034: ALTER TABLE ... update column type") {

+withTable(s"$catalogName.alt_table") {

+ testUpdateColumnType(s"$catalogName.alt_table")

+ // Update not existing column

+ val msg2 = intercept[AnalysisException] {

+sql(s"ALTER TABLE $catalogName.alt_table ALTER COLUMN bad_column TYPE

DOUBLE")

+ }.getMessage

+ assert(msg2.contains("Cannot update missing field bad_column"))

+}

+// Update column type in not existing table

+val msg = intercept[AnalysisException] {

+ sql(s"ALTER TABLE $catalogName.not_existing_table ALTER COLUMN id TYPE

DOUBLE")

+}.getMessage

+assert(msg.contains("Table not found"))

+ }

+

+ test("SPARK-33034: ALTER TABLE ... update column nullability") {

+withTable(s"$catalogName.alt_table") {

+ sql(s"CREATE TABLE $catalogName.alt_table (ID STRING NOT NULL) USING _")

+ var t = spark.table(s"$catalogName.alt_table")

+ // nullable is true in the expecteSchema because Spark always sets

nullable to true

+ // regardless of the JDBC metadata

https://github.com/apache/spark/pull/18445

Review comment:

This does expose a problem in Spark: most databases allow to write

nullable data to non-nullable column, and fail at runtime if they see null

values. I think Spark shouldn't block it at compile time. After all,

nullability is more like a constraint, not data type itself. cc @rdblue

@dongjoon-hyun @viirya @maropu @MaxGekk

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #30031: [SPARK-33134][SQL] Return partial results only for root JSON objects

HyukjinKwon commented on pull request #30031: URL: https://github.com/apache/spark/pull/30031#issuecomment-708128835 Merged to master. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #30031: [SPARK-33134][SQL] Return partial results only for root JSON objects

HyukjinKwon commented on pull request #30031: URL: https://github.com/apache/spark/pull/30031#issuecomment-708128011 We probably need to redesign/refactoring JSON parsing logic here .. it's now pretty convoluted .. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

HeartSaVioR commented on a change in pull request #30033:

URL: https://github.com/apache/spark/pull/30033#discussion_r504373710

##

File path:

sql/core/src/test/scala/org/apache/spark/sql/DataFrameWriterV2Suite.scala

##

@@ -101,6 +100,86 @@ class DataFrameWriterV2Suite extends QueryTest with

SharedSparkSession with Befo

assert(v2.catalog.exists(_ == catalogPlugin))

}

+ case class FakeV2WriteCommand(table: NamedRelation, query: LogicalPlan)

extends V2WriteCommand

+

+ test("SPARK-33136 output resolved on complex types for V2 write commands") {

Review comment:

Yes, according to the implementation of

`equalsIgnoreCompatibleNullability`.

```

private[sql] def equalsIgnoreCompatibleNullability(from: DataType, to:

DataType): Boolean = {

(from, to) match {

case (ArrayType(fromElement, fn), ArrayType(toElement, tn)) =>

(tn || !fn) && equalsIgnoreCompatibleNullability(fromElement,

toElement)

case (MapType(fromKey, fromValue, fn), MapType(toKey, toValue, tn)) =>

(tn || !fn) &&

equalsIgnoreCompatibleNullability(fromKey, toKey) &&

equalsIgnoreCompatibleNullability(fromValue, toValue)

case (StructType(fromFields), StructType(toFields)) =>

fromFields.length == toFields.length &&

fromFields.zip(toFields).forall { case (fromField, toField) =>

fromField.name == toField.name &&

(toField.nullable || !fromField.nullable) &&

equalsIgnoreCompatibleNullability(fromField.dataType,

toField.dataType)

}

case (fromDataType, toDataType) => fromDataType == toDataType

}

}

```

For primitive types the order doesn't affect the result. `outputResolved`

itself does the right comparison, just except the swapped parameters.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

HyukjinKwon commented on pull request #30027: URL: https://github.com/apache/spark/pull/30027#issuecomment-708127310 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

cloud-fan commented on pull request #30033: URL: https://github.com/apache/spark/pull/30033#issuecomment-708126677 > We can't get the information about the reason why the operator is considered as unresolved This is a good point. `CheckAnalysis` provides better error messages for many cases, and the `unresolved operator` error is the last resort. I think we should provide better error message for this case as well. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

HeartSaVioR commented on a change in pull request #30033:

URL: https://github.com/apache/spark/pull/30033#discussion_r504372431

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/v2Commands.scala

##

@@ -45,7 +45,7 @@ trait V2WriteCommand extends Command {

case (inAttr, outAttr) =>

// names and types must match, nullability must be compatible

inAttr.name == outAttr.name &&

- DataType.equalsIgnoreCompatibleNullability(outAttr.dataType,

inAttr.dataType) &&

+ DataType.equalsIgnoreCompatibleNullability(inAttr.dataType,

outAttr.dataType) &&

Review comment:

Ah thanks for noticing. Nice finding. I found it from V2WriteCommand so

thought it was added later. Will check the code path in branch-2.4 and test it.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

cloud-fan commented on a change in pull request #30033:

URL: https://github.com/apache/spark/pull/30033#discussion_r504372457

##

File path:

sql/core/src/test/scala/org/apache/spark/sql/DataFrameWriterV2Suite.scala

##

@@ -101,6 +100,86 @@ class DataFrameWriterV2Suite extends QueryTest with

SharedSparkSession with Befo

assert(v2.catalog.exists(_ == catalogPlugin))

}

+ case class FakeV2WriteCommand(table: NamedRelation, query: LogicalPlan)

extends V2WriteCommand

+

+ test("SPARK-33136 output resolved on complex types for V2 write commands") {

Review comment:

does this bug only affects complex types?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a change in pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

dongjoon-hyun commented on a change in pull request #30033:

URL: https://github.com/apache/spark/pull/30033#discussion_r504365454

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/v2Commands.scala

##

@@ -45,7 +45,7 @@ trait V2WriteCommand extends Command {

case (inAttr, outAttr) =>

// names and types must match, nullability must be compatible

inAttr.name == outAttr.name &&

- DataType.equalsIgnoreCompatibleNullability(outAttr.dataType,

inAttr.dataType) &&

+ DataType.equalsIgnoreCompatibleNullability(inAttr.dataType,

outAttr.dataType) &&

Review comment:

Hi, @HeartSaVioR . The original code looks like the same with

`branch-2.4` but the issue is reported at 3.0.0+. Could you confirm that this

is 3.0.0-only issue or not?

```scala

override lazy val resolved: Boolean = {

table.resolved && query.resolved && query.output.size ==

table.output.size &&

query.output.zip(table.output).forall {

case (inAttr, outAttr) =>

// names and types must match, nullability must be compatible

inAttr.name == outAttr.name &&

DataType.equalsIgnoreCompatibleNullability(outAttr.dataType,

inAttr.dataType) &&

(outAttr.nullable || !inAttr.nullable)

}

}

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a change in pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved

dongjoon-hyun commented on a change in pull request #30033:

URL: https://github.com/apache/spark/pull/30033#discussion_r504365454

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/v2Commands.scala

##

@@ -45,7 +45,7 @@ trait V2WriteCommand extends Command {

case (inAttr, outAttr) =>

// names and types must match, nullability must be compatible

inAttr.name == outAttr.name &&

- DataType.equalsIgnoreCompatibleNullability(outAttr.dataType,

inAttr.dataType) &&

+ DataType.equalsIgnoreCompatibleNullability(inAttr.dataType,

outAttr.dataType) &&

Review comment:

Hi, @HeartSaVioR . This looks like the same with `branch-2.4` but the

issue is reported at 3.0.0+. Could you confirm that this is 3.0.0-only issue or

not?

```scala

override lazy val resolved: Boolean = {

table.resolved && query.resolved && query.output.size ==

table.output.size &&

query.output.zip(table.output).forall {

case (inAttr, outAttr) =>

// names and types must match, nullability must be compatible

inAttr.name == outAttr.name &&

DataType.equalsIgnoreCompatibleNullability(outAttr.dataType,

inAttr.dataType) &&

(outAttr.nullable || !inAttr.nullable)

}

}

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #29964: [SPARK-32047][SQL]Add JDBC connection provider disable possibility

HyukjinKwon commented on pull request #29964: URL: https://github.com/apache/spark/pull/29964#issuecomment-708116061 @gaborgsomogyi, just a question. When do we disable some providers? Would you mind elaborating the usecase? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

AngersZh commented on pull request #30027: URL: https://github.com/apache/spark/pull/30027#issuecomment-708114538 @dongjoon-hyun Seems jenkins down because of this pr? em This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29933: [SPARK-26533][SQL] Support query auto timeout cancel on thriftserver

AngersZh commented on a change in pull request #29933:

URL: https://github.com/apache/spark/pull/29933#discussion_r504360835

##

File path:

sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkExecuteStatementOperation.scala

##

@@ -200,6 +207,12 @@ private[hive] class SparkExecuteStatementOperation(

parentSession.getUsername)

setHasResultSet(true) // avoid no resultset for async run

+if (queryTimeoutValue > 0) {

+ Executors.newSingleThreadScheduledExecutor.schedule(new Runnable {

+ override def run(): Unit = timeoutCancel()

+}, queryTimeoutValue, TimeUnit.SECONDS)

+}

+

Review comment:

Maybe we need to call shutdown for this executor ?

https://github.com/apache/hive/blob/940ee46d3a7a9300a47e5beae7c3ca6eb32fd759/service/src/java/org/apache/hive/service/cli/operation/SQLOperation.java#L170-L184

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29933: [SPARK-26533][SQL] Support query auto timeout cancel on thriftserver

AngersZh commented on a change in pull request #29933:

URL: https://github.com/apache/spark/pull/29933#discussion_r504358168

##

File path:

sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkExecuteStatementOperation.scala

##

@@ -45,11 +45,18 @@ private[hive] class SparkExecuteStatementOperation(

parentSession: HiveSession,

statement: String,

confOverlay: JMap[String, String],

-runInBackground: Boolean = true)

+runInBackground: Boolean = true,

+queryTimeout: Long)

extends ExecuteStatementOperation(parentSession, statement, confOverlay,

runInBackground)

with SparkOperation

with Logging {

+ private val queryTimeoutValue = if (queryTimeout <= 0) {

Review comment:

> @wangyum @AngersZh Is This behaviour the same with the current

Hive one?

When `THRIFTSERVER_QUERY_TIMEOUT ` less then user defined, we respect

`THRIFTSERVER_QUERY_TIMEOUT `

https://github.com/apache/hive/blob/940ee46d3a7a9300a47e5beae7c3ca6eb32fd759/service/src/java/org/apache/hive/service/cli/operation/SQLOperation.java#L118-L122

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #29992: [SPARK-32881][CORE] Catch some race condition errors and log them more clearly

HyukjinKwon commented on pull request #29992: URL: https://github.com/apache/spark/pull/29992#issuecomment-708110379 How did we test this, @holdenk? I can make a quick followup for that. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #29933: [SPARK-26533][SQL] Support query auto timeout cancel on thriftserver

AngersZh commented on a change in pull request #29933:

URL: https://github.com/apache/spark/pull/29933#discussion_r504358168

##

File path:

sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkExecuteStatementOperation.scala

##

@@ -45,11 +45,18 @@ private[hive] class SparkExecuteStatementOperation(

parentSession: HiveSession,

statement: String,

confOverlay: JMap[String, String],

-runInBackground: Boolean = true)

+runInBackground: Boolean = true,

+queryTimeout: Long)

extends ExecuteStatementOperation(parentSession, statement, confOverlay,

runInBackground)

with SparkOperation

with Logging {

+ private val queryTimeoutValue = if (queryTimeout <= 0) {

Review comment:

> @wangyum @AngersZh Is This behaviour the same with the current

Hive one?

When `THRIFTSERVER_QUERY_TIMEOUT ` less then user defined, we respect

`THRIFTSERVER_QUERY_TIMEOUT `

https://github.com/apache/hive/blob/940ee46d3a7a9300a47e5beae7c3ca6eb32fd759/service/src/java/org/apache/hive/service/cli/operation/SQLOperation.java#L118-L122

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #29992: [SPARK-32881][CORE] Catch some race condition errors and log them more clearly

HyukjinKwon commented on a change in pull request #29992:

URL: https://github.com/apache/spark/pull/29992#discussion_r504358081

##

File path: core/src/main/scala/org/apache/spark/MapOutputTracker.scala

##

@@ -125,14 +125,19 @@ private class ShuffleStatus(numPartitions: Int) extends

Logging {

* Update the map output location (e.g. during migration).

*/

def updateMapOutput(mapId: Long, bmAddress: BlockManagerId): Unit =

withWriteLock {

-val mapStatusOpt = mapStatuses.find(_.mapId == mapId)

-mapStatusOpt match {

- case Some(mapStatus) =>

-logInfo(s"Updating map output for ${mapId} to ${bmAddress}")

-mapStatus.updateLocation(bmAddress)

-invalidateSerializedMapOutputStatusCache()

- case None =>

-logError(s"Asked to update map output ${mapId} for untracked map

status.")

+try {

+ val mapStatusOpt = mapStatuses.find(_.mapId == mapId)

+ mapStatusOpt match {

+case Some(mapStatus) =>

+ logInfo(s"Updating map output for ${mapId} to ${bmAddress}")

+ mapStatus.updateLocation(bmAddress)

+ invalidateSerializedMapOutputStatusCache()

+case None =>

+ logWarning(s"Asked to update map output ${mapId} for untracked map

status.")

+ }

+} catch {

+ case e: java.lang.NullPointerException =>

Review comment:

Quick question: can we avoid catching `NullPointerException`? It's a bit

odd that we catch `NullPointerException`. We could just switch to if-else I

guess.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

AngersZh commented on pull request #30027: URL: https://github.com/apache/spark/pull/30027#issuecomment-708109066 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #29933: [SPARK-26533][SQL] Support query auto timeout cancel on thriftserver

HyukjinKwon commented on a change in pull request #29933:

URL: https://github.com/apache/spark/pull/29933#discussion_r504356369

##

File path:

sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkExecuteStatementOperation.scala

##

@@ -45,11 +45,18 @@ private[hive] class SparkExecuteStatementOperation(

parentSession: HiveSession,

statement: String,

confOverlay: JMap[String, String],

-runInBackground: Boolean = true)

+runInBackground: Boolean = true,

+queryTimeout: Long)

extends ExecuteStatementOperation(parentSession, statement, confOverlay,

runInBackground)

with SparkOperation

with Logging {

+ private val queryTimeoutValue = if (queryTimeout <= 0) {

Review comment:

I am

https://github.com/apache/hive/commit/b6218275b00b64aed7efaf470784cc0441464f67#diff-b0085fe84239ae927dfe7464a2174a1c80169bbb60a295e78aeb8df3747e4443R149.

0 seems default meaning no timeout that's matched with the current default's

and behaviour here.

##

File path:

sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkExecuteStatementOperation.scala

##

@@ -45,11 +45,18 @@ private[hive] class SparkExecuteStatementOperation(

parentSession: HiveSession,

statement: String,

confOverlay: JMap[String, String],

-runInBackground: Boolean = true)

+runInBackground: Boolean = true,

+queryTimeout: Long)

extends ExecuteStatementOperation(parentSession, statement, confOverlay,

runInBackground)

with SparkOperation

with Logging {

+ private val queryTimeoutValue = if (queryTimeout <= 0) {

Review comment:

I just took a cursory look for

https://github.com/apache/hive/commit/b6218275b00b64aed7efaf470784cc0441464f67#diff-b0085fe84239ae927dfe7464a2174a1c80169bbb60a295e78aeb8df3747e4443R149.

0 seems default meaning no timeout that's matched with the current default's

and behaviour here.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #30022: [SPARK-33090][BUILD][test-hadoop2.7] Upgrade Google Guava to 29.0-jre

AngersZh commented on pull request #30022: URL: https://github.com/apache/spark/pull/30022#issuecomment-708106738 > After [SPARK-29250](https://issues.apache.org/jira/browse/SPARK-29250), I guess this PR will be a general Guava version upgrade PR without any relation to `Hadoop 3.2.1`. IMO, if spark-3 with hadoop3.2 can work well in Hadoop cluster (2.6/2/7/2.8. etc), it's ok just use hadoop 3.2 client. In our hadoop cluster, we use spark-2.4-hadoop-2.6 run in hadoop-3.2.1 cluster, works well. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #29933: [SPARK-26533][SQL] Support query auto timeout cancel on thriftserver

HyukjinKwon commented on pull request #29933: URL: https://github.com/apache/spark/pull/29933#issuecomment-708104164 cc @juliuszsompolski as well (from #28991) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

AngersZh commented on a change in pull request #30027:

URL: https://github.com/apache/spark/pull/30027#discussion_r504350520

##

File path:

sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveParquetSourceSuite.scala

##

@@ -287,7 +287,11 @@ class HiveParquetSourceSuite extends

ParquetPartitioningTest {

val msg = intercept[IOException] {

sql("SELECT * FROM tbl1").show()

}.getMessage

- assert(msg.contains("Not a file:"))

+ assert(msg.contains(

Review comment:

> how about creating a method for checking the exception message?

Done

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

AngersZh commented on a change in pull request #30027:

URL: https://github.com/apache/spark/pull/30027#discussion_r504350436

##

File path: core/src/main/scala/org/apache/spark/rdd/HadoopRDD.scala

##

@@ -232,6 +232,11 @@ class HadoopRDD[K, V](

logWarning(s"${jobConf.get(FileInputFormat.INPUT_DIR)} doesn't exist

and no" +

s" partitions returned from this path.", e)

Array.empty[Partition]

+ case e: IOException if e.getMessage.contains("Not a file") =>

Review comment:

> Nit: if e.getMessage.startsWith("Not a file:")

Done

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #30027: [SPARK-32069][CORE][SQL] Improve error message on reading unexpected directory

AngersZh commented on a change in pull request #30027:

URL: https://github.com/apache/spark/pull/30027#discussion_r504350493

##

File path: core/src/main/scala/org/apache/spark/rdd/HadoopRDD.scala

##

@@ -232,6 +232,11 @@ class HadoopRDD[K, V](

logWarning(s"${jobConf.get(FileInputFormat.INPUT_DIR)} doesn't exist

and no" +

s" partitions returned from this path.", e)

Array.empty[Partition]

+ case e: IOException if e.getMessage.contains("Not a file") =>

+val path = e.getMessage.split(":").map(_.trim).apply(2)

+throw new IOException(s"Path: ${path} is a directory, it is not" +

+ s" allowed for `serde` reader when" +

Review comment:

> The code path here is in core module. The reader may not be `serde`

reader.

How about current?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #30011: [WIP][SPARK-32281][SQL] Spark keep SORTED spec in metastore

AngersZh commented on pull request #30011: URL: https://github.com/apache/spark/pull/30011#issuecomment-708096869 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] github-actions[bot] closed pull request #28860: [SPARK-32002][SQL]Support ExtractValue from nested ArrayStruct

github-actions[bot] closed pull request #28860: URL: https://github.com/apache/spark/pull/28860 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] github-actions[bot] closed pull request #28821: [SPARK-31981][SQL] Keep TimestampType when taking an average of a Timestamp

github-actions[bot] closed pull request #28821: URL: https://github.com/apache/spark/pull/28821 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Victsm commented on a change in pull request #29855: [SPARK-32915][CORE] Network-layer and shuffle RPC layer changes to support push shuffle blocks

Victsm commented on a change in pull request #29855:

URL: https://github.com/apache/spark/pull/29855#discussion_r504333803

##

File path:

common/network-shuffle/src/test/java/org/apache/spark/network/shuffle/ErrorHandlerSuite.java

##

@@ -0,0 +1,51 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.network.shuffle;

+

+import java.net.ConnectException;

+

+import org.junit.Test;

+

+import static org.junit.Assert.*;

+

+/**

+ * Test suite for {@link ErrorHandler}

+ */

+public class ErrorHandlerSuite {

+

+ @Test

+ public void testPushErrorRetry() {

+ErrorHandler.BlockPushErrorHandler handler = new

ErrorHandler.BlockPushErrorHandler();

+assertFalse(handler.shouldRetryError(new RuntimeException(new

IllegalArgumentException(

+ ErrorHandler.BlockPushErrorHandler.TOO_LATE_MESSAGE_SUFFIX;

+assertFalse(handler.shouldRetryError(new RuntimeException(new

ConnectException(;

+assertTrue(handler.shouldRetryError(new RuntimeException(new

IllegalArgumentException(

+

ErrorHandler.BlockPushErrorHandler.BLOCK_APPEND_COLLISION_DETECTED_MSG_PREFIX;

Review comment:

We actually distinguish between a block duplication and a block

collision on the server side.

Block duplication is when the exact same shuffle partition block gets pushed

by different executors, due to speculation or maybe task retry.

The server side is able to tell when block duplication happens, whether is

one duplicate block sent after the first has been successfully merged or when

both blocks are received at the same time.

With duplicate block, the server will actually respond success to the

client, so the client won't retry sending it.

In the case of speculation, when 2 clients might be sending the same block

at the same time, the server will respond success to 1 of the two and let the

other write, and if that write fails the corresponding client will retry if

it's retriable.

On the other hand, a block collision is not about the exact same shuffle

partition block, but 2 different blocks belonging to the same shuffle partition

being sent to the same shuffle service at the same time.

Since the shuffle service need to append 1 block completely before appending

the content of the next block belonging to the same shuffle partition, when

these blocks arrive at one shuffle service at the same time, we would encounter

a block collision.

A block collision might not immediately lead to the collision failure sent

back to the client, since the server will buffer the blocks for a short period

of time and make a few attempts before giving up.

When the shuffle service gives up, the client will receive the collision

failure.

If it receives the collision failure, it's an indication that this block

hasn't been merged yet, and thus it's OK to retry.

Of course, it's totally possible that by the time the retry happens, a

speculative task has already pushed the block and successfully merged it.

If that's the case, the retry would be treated as a block duplication

instead of a block collision, and the client will receive success response.

I hope this servers as an overview of what's to come in the next PR.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] kyprifog commented on a change in pull request #29180: [SPARK-17333][PYSPARK] Enable mypy on the repository

kyprifog commented on a change in pull request #29180:

URL: https://github.com/apache/spark/pull/29180#discussion_r504330439

##

File path: dev/lint-python

##

@@ -122,6 +123,31 @@ function pycodestyle_test {

fi

}

+function mypy_test {

+local MYPY_REPORT=

+local MYPY_STATUS=

+

+if ! hash "$MYPY_BUILD" 2> /dev/null; then

+echo "The mypy command was not found."

+echo "mypy checks failed."

+exit 1

+fi

+

+echo "starting $MYPY_BUILD test..."

+MYPY_REPORT=$( ($MYPY_BUILD --ignore-missing-imports --config-file

python/mypy.ini python/) 2>&1)

Review comment:

Second this, I think it should be in the mypy.ini under specific

sections like is suggested here: http://calpaterson.com/mypy-hints.html

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] sunchao commented on pull request #29843: [SPARK-29250][BUILD][test-maven][test-hadoop2.7] Upgrade to Hadoop 3.2.1 and move to shaded client

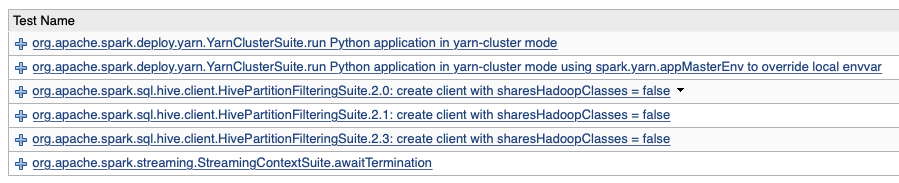

sunchao commented on pull request #29843: URL: https://github.com/apache/spark/pull/29843#issuecomment-708071828 > Among the following, `HivePartitionFilteringSuite.2.2: create client with sharesHadoopClasses = false` seems to fail at Github Action, too. Is this a new after the last dependency change commit? Yes this is new after I rebase. I also couldn't reproduce the error locally. Still trying to find the root cause. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] maropu commented on a change in pull request #29933: [SPARK-26533][SQL] Support query auto timeout cancel on thriftserver

maropu commented on a change in pull request #29933:

URL: https://github.com/apache/spark/pull/29933#discussion_r504312420

##

File path:

sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkExecuteStatementOperation.scala

##

@@ -45,11 +45,18 @@ private[hive] class SparkExecuteStatementOperation(

parentSession: HiveSession,

statement: String,

confOverlay: JMap[String, String],

-runInBackground: Boolean = true)

+runInBackground: Boolean = true,

+queryTimeout: Long)

extends ExecuteStatementOperation(parentSession, statement, confOverlay,

runInBackground)

with SparkOperation

with Logging {

+ private val queryTimeoutValue = if (queryTimeout <= 0) {

Review comment:

@wangyum @AngersZh Is This behaviour the same with the current Hive

one?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] CodingCat commented on a change in pull request #29831: [SPARK-32351][SQL] Show partially pushed down partition filters in explain()

CodingCat commented on a change in pull request #29831:

URL: https://github.com/apache/spark/pull/29831#discussion_r504307502

##

File path:

sql/hive/src/main/scala/org/apache/spark/sql/hive/HiveStrategies.scala

##

@@ -256,20 +256,21 @@ private[hive] trait HiveStrategies {

*/

object HiveTableScans extends Strategy {

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

- case ScanOperation(projectList, predicates, relation: HiveTableRelation)

=>

+ case ScanOperation(projectList, filters, relation: HiveTableRelation) =>

// Filter out all predicates that only deal with partition keys, these

are given to the

// hive table scan operator to be used for partition pruning.

val partitionKeyIds = AttributeSet(relation.partitionCols)

-val (pruningPredicates, otherPredicates) = predicates.partition {

predicate =>

- !predicate.references.isEmpty &&

- predicate.references.subsetOf(partitionKeyIds)

-}

+val normalizedFilters = DataSourceStrategy.normalizeExprs(

+ filters.filter(_.deterministic), relation.output)

+

+val partitionKeyFilters =

DataSourceStrategy.getPushedDownFilters(relation.partitionCols,

+ normalizedFilters)

pruneFilterProject(

projectList,

- otherPredicates,

+ filters.filter(f => f.references.isEmpty ||

!f.references.subsetOf(partitionKeyIds)),

Review comment:

I am actually just following the original behavior of HiveStrategies,

regarding scalar subquery, is it in partition filter , I missed something in

`FileSourceStrategy `?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on pull request #30033: [SPARK-33136][SQL] Fix mistakenly swapped parameter in V2WriteCommand.outputResolved