[GitHub] [lucene-solr] interma opened a new pull request #2289: Title: add timestamp in default gc_log name

interma opened a new pull request #2289: URL: https://github.com/apache/lucene-solr/pull/2289 # Description https://issues.apache.org/jira/browse/SOLR-15104 When restarting Solr, it will overwrite the gc log, this behavior is not friendly for debugging OOM issues. # Solution Add timestamp in default gc_log name, so it doesn't overwrite the previous one. # Tests Please describe the tests you've developed or run to confirm this patch implements the feature or solves the problem. # Checklist Please review the following and check all that apply: - [ ] I have reviewed the guidelines for [How to Contribute](https://wiki.apache.org/solr/HowToContribute) and my code conforms to the standards described there to the best of my ability. - [ ] I have created a Jira issue and added the issue ID to my pull request title. - [ ] I have given Solr maintainers [access](https://help.github.com/en/articles/allowing-changes-to-a-pull-request-branch-created-from-a-fork) to contribute to my PR branch. (optional but recommended) - [ ] I have developed this patch against the `master` branch. - [ ] I have run `./gradlew check`. - [ ] I have added tests for my changes. - [ ] I have added documentation for the [Ref Guide](https://github.com/apache/lucene-solr/tree/master/solr/solr-ref-guide) (for Solr changes only). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] sbeniwal12 commented on a change in pull request #2282: LUCENE-9615: Expose HnswGraphBuilder index-time hyperparameters as FieldType attributes

sbeniwal12 commented on a change in pull request #2282:

URL: https://github.com/apache/lucene-solr/pull/2282#discussion_r568327126

##

File path:

lucene/core/src/java/org/apache/lucene/codecs/lucene90/Lucene90VectorWriter.java

##

@@ -188,9 +190,29 @@ private void writeGraph(

RandomAccessVectorValuesProducer vectorValues,

long graphDataOffset,

long[] offsets,

- int count)

+ int count,

+ String maxConnStr,

+ String beamWidthStr)

throws IOException {

-HnswGraphBuilder hnswGraphBuilder = new HnswGraphBuilder(vectorValues);

+int maxConn, beamWidth;

+if (maxConnStr == null) {

+ maxConn = HnswGraphBuilder.DEFAULT_MAX_CONN;

+} else if (!maxConnStr.matches("[0-9]+")) {

Review comment:

Thanks for pointing out, threw `NumberFormatException` with message

describing which attribute caused the exception.

##

File path: lucene/core/src/java/org/apache/lucene/document/VectorField.java

##

@@ -53,6 +54,44 @@ private static FieldType getType(float[] v,

VectorValues.SearchStrategy searchSt

return type;

}

+ /**

+ * Public method to create HNSW field type with the given max-connections

and beam-width

+ * parameters that would be used by HnswGraphBuilder while constructing HNSW

graph.

+ *

+ * @param dimension dimension of vectors

+ * @param searchStrategy a function defining vector proximity.

+ * @param maxConn max-connections at each HNSW graph node

+ * @param beamWidth size of list to be used while constructing HNSW graph

+ * @throws IllegalArgumentException if any parameter is null, or has

dimension 1024.

+ */

+ public static FieldType createHnswType(

+ int dimension, VectorValues.SearchStrategy searchStrategy, int maxConn,

int beamWidth) {

+if (dimension == 0) {

+ throw new IllegalArgumentException("cannot index an empty vector");

+}

+if (dimension > VectorValues.MAX_DIMENSIONS) {

+ throw new IllegalArgumentException(

+ "cannot index vectors with dimension greater than " +

VectorValues.MAX_DIMENSIONS);

+}

+if (searchStrategy == null) {

+ throw new IllegalArgumentException("search strategy must not be null");

Review comment:

Added this check and also added a unit test for this check.

##

File path: lucene/core/src/test/org/apache/lucene/util/hnsw/KnnGraphTester.java

##

@@ -132,13 +135,13 @@ private void run(String... args) throws Exception {

if (iarg == args.length - 1) {

throw new IllegalArgumentException("-beamWidthIndex requires a

following number");

}

- HnswGraphBuilder.DEFAULT_BEAM_WIDTH = Integer.parseInt(args[++iarg]);

Review comment:

Made them final and also made changes to `TestKnnGraph.java` to

accommodate this change.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] zacharymorn commented on pull request #2258: LUCENE-9686: Fix read past EOF handling in DirectIODirectory

zacharymorn commented on pull request #2258: URL: https://github.com/apache/lucene-solr/pull/2258#issuecomment-771322610 > Hi Zach. Sorry for belated reply. Please take a look at my comments attached to the context. I have some doubts whether EOF should leave the channel undrained. Maybe I'm paranoid here though. Hi Dawid, no worry and thanks for the review! I've replied to the comment and added some tests to verify, please let me know if they look good to you. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] zacharymorn commented on a change in pull request #2258: LUCENE-9686: Fix read past EOF handling in DirectIODirectory

zacharymorn commented on a change in pull request #2258:

URL: https://github.com/apache/lucene-solr/pull/2258#discussion_r568294041

##

File path:

lucene/misc/src/java/org/apache/lucene/misc/store/DirectIODirectory.java

##

@@ -381,17 +377,18 @@ public long length() {

@Override

public byte readByte() throws IOException {

if (!buffer.hasRemaining()) {

-refill();

+refill(1);

}

+

return buffer.get();

}

-private void refill() throws IOException {

+private void refill(int byteToRead) throws IOException {

filePos += buffer.capacity();

// BaseDirectoryTestCase#testSeekPastEOF test for consecutive read past

EOF,

// hence throwing EOFException early to maintain buffer state (position

in particular)

- if (filePos > channel.size()) {

+ if (filePos > channel.size() || (channel.size() - filePos < byteToRead))

{

Review comment:

If I understand your comment correctly, your concern is about the

consistency of directory's internal state after EOF is raised right? I think

DirectIODirectory already handles that actually (by manipulating `filePos`, but

not `channel.position` per se), and I have added some more tests to confirm

that to be the case in the latest commit.

Please note that for the additional tests, I was originally adding them into

`BaseDirectoryTestCase#testSeekPastEOF`, but that would fail some existing

tests for other directory implementations, as read immediately after seek past

EOF doesn't raise EOFException for them:

* TestHardLinkCopyDirectoryWrapper

* TestMmapDirectory

* TestByteBuffersDirectory

* TestMultiMMap

However, according to java doc here

https://github.com/apache/lucene-solr/blob/15aaec60d9bfa96f2837c38b7ca83e2c87c66d8d/lucene/core/src/java/org/apache/lucene/store/IndexInput.java#L66-L73,

this seems to be an unspecified state in general.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] zacharymorn commented on a change in pull request #2258: LUCENE-9686: Fix read past EOF handling in DirectIODirectory

zacharymorn commented on a change in pull request #2258:

URL: https://github.com/apache/lucene-solr/pull/2258#discussion_r568293170

##

File path:

lucene/misc/src/java/org/apache/lucene/misc/store/DirectIODirectory.java

##

@@ -381,17 +377,18 @@ public long length() {

@Override

public byte readByte() throws IOException {

if (!buffer.hasRemaining()) {

-refill();

+refill(1);

}

+

return buffer.get();

}

-private void refill() throws IOException {

+private void refill(int byteToRead) throws IOException {

Review comment:

Updated.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Commented] (LUCENE-9718) REGEX Pattern Search, character classes with quantifiers do not work

[

https://issues.apache.org/jira/browse/LUCENE-9718?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17276798#comment-17276798

]

Michael Sokolov commented on LUCENE-9718:

-

Thanks Brian, contributions in those areas would be welcome!

> REGEX Pattern Search, character classes with quantifiers do not work

>

>

> Key: LUCENE-9718

> URL: https://issues.apache.org/jira/browse/LUCENE-9718

> Project: Lucene - Core

> Issue Type: Bug

> Components: core/search

>Affects Versions: 7.7.3, 8.6.3

>Reporter: Brian Feldman

>Priority: Minor

> Labels: Documentation, RegEx

>

> Character classes with a quantifier do not work, no error is given and no

> results are returned. For example \d\{2} or \d\{2,3} as is commonly written

> in most languages supporting regular expressions, simply and quietly does not

> work. A user work around is to write them fully out such as \d\d or

> [0-9][0-9] or as [0-9]\{2,3} .

>

> This inconsistency or limitation is not documented, wasting the time of users

> as they have to figure this out themselves. I believe this inconsistency

> should be clearly documented and an effort to fixing the inconsistency would

> improve pattern searching.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Updated] (LUCENE-9718) REGEX Pattern Search, character classes with quantifiers do not work

[

https://issues.apache.org/jira/browse/LUCENE-9718?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Michael Sokolov updated LUCENE-9718:

Issue Type: Improvement (was: Bug)

> REGEX Pattern Search, character classes with quantifiers do not work

>

>

> Key: LUCENE-9718

> URL: https://issues.apache.org/jira/browse/LUCENE-9718

> Project: Lucene - Core

> Issue Type: Improvement

> Components: core/search

>Affects Versions: 7.7.3, 8.6.3

>Reporter: Brian Feldman

>Priority: Minor

> Labels: Documentation, RegEx

>

> Character classes with a quantifier do not work, no error is given and no

> results are returned. For example \d\{2} or \d\{2,3} as is commonly written

> in most languages supporting regular expressions, simply and quietly does not

> work. A user work around is to write them fully out such as \d\d or

> [0-9][0-9] or as [0-9]\{2,3} .

>

> This inconsistency or limitation is not documented, wasting the time of users

> as they have to figure this out themselves. I believe this inconsistency

> should be clearly documented and an effort to fixing the inconsistency would

> improve pattern searching.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] jaisonbi edited a comment on pull request #2213: LUCENE-9663: Adding compression to terms dict from SortedSet/Sorted DocValues

jaisonbi edited a comment on pull request #2213:

URL: https://github.com/apache/lucene-solr/pull/2213#issuecomment-771296030

If I understood correctly, the route via PerFieldDocValuesFormat need to

change the usage of SortedSetDocValues.

The idea is adding another constructor for enabling terms dict compression,

as below:

```

public SortedSetDocValuesField(String name, BytesRef bytes, boolean

compression) {

super(name, compression ? COMPRESSION_TYPE: TYPE);

fieldsData = bytes;

}

```

And below is the definition of COMPRESSION_TYPE:

```

public static final FieldType COMPRESSION_TYPE = new FieldType();

static {

COMPRESSION_TYPE.setDocValuesType(DocValuesType.SORTED_SET);

// add one new attribute for telling PerFieldDocValuesFormat that terms

dict compression is enabled for this field

COMPRESSION_TYPE.putAttribute("docvalue.sortedset.compression", "true");

COMPRESSION_TYPE.freeze();

}

```

Not sure if I've got it right :)

@msokolov @bruno-roustant

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] jaisonbi commented on pull request #2213: LUCENE-9663: Adding compression to terms dict from SortedSet/Sorted DocValues

jaisonbi commented on pull request #2213:

URL: https://github.com/apache/lucene-solr/pull/2213#issuecomment-771296030

If I understood correctly, the route via PerFieldDocValuesFormat need to

change the usage of SortedSetDocValues.

The idea is adding another constructor for enabling terms dict compression,

as below:

```

public SortedSetDocValuesField(String name, BytesRef bytes, boolean

compression) {

super(name, compression ? COMPRESSION_TYPE: TYPE);

fieldsData = bytes;

}

```

In COMPRESSION_TYPE, add one new attribute for telling

PerFieldDocValuesFormat that terms dict compression is enabled for this field.

Not sure if I've got it right :)

@msokolov @bruno-roustant

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Commented] (SOLR-15124) Remove node/container level admin handlers from ImplicitPlugins.json (core level).

[

https://issues.apache.org/jira/browse/SOLR-15124?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17276789#comment-17276789

]

David Smiley commented on SOLR-15124:

-

{quote}Should we consider setting up redirect paths for the old handlers? Or a

better error message with a hint that they have moved?

{quote}

No; this is 9.0 and the fact that these are registered here is an obscure

oddity. Let's not make removal of tech-debt too hard please, or we will

increasingly won't bother because it's too much of a PITA, and then we're left

with an even worse tech-debt problem in the years to come (from my experience

here, looking back 10+ years).

{quote}Also, will need to update SolrCoreTest.testImplicitPlugins, which I

don't think the existing PR did.

{quote}

[~nazerke] did you run tests?

> Remove node/container level admin handlers from ImplicitPlugins.json (core

> level).

> --

>

> Key: SOLR-15124

> URL: https://issues.apache.org/jira/browse/SOLR-15124

> Project: Solr

> Issue Type: Task

> Security Level: Public(Default Security Level. Issues are Public)

>Reporter: David Smiley

>Priority: Blocker

> Labels: newdev

> Fix For: master (9.0)

>

> Time Spent: 50m

> Remaining Estimate: 0h

>

> There are many very old administrative RequestHandlers registered in a

> SolrCore that are actually JVM / node / CoreContainer level in nature. These

> pre-dated CoreContainer level handlers. We should (1) remove them from

> ImplictPlugins.json, and (2) make simplifying tweaks to them to remove that

> they work at the core level. For example LoggingHandler has two constructors

> and a non-final Watcher because it works in these two modalities. It need

> only have the one that takes a CoreContainer, and Watcher will then be final.

> /admin/threads

> /admin/properties

> /admin/logging

> Should stay because has core-level stuff:

> /admin/plugins

> /admin/mbeans

> This one:

> /admin/system -- SystemInfoHandler

> returns "core" level information, and also node level stuff. I propose

> splitting this one to a CoreInfoHandler to split the logic. Maybe a separate

> issue.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Updated] (LUCENE-9722) Aborted merge can leak readers if the output is empty

[ https://issues.apache.org/jira/browse/LUCENE-9722?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Nhat Nguyen updated LUCENE-9722: Description: We fail to close the merged readers of an aborted merge if its output segment contains no document. This bug was discovered by a test in Elasticsearch ([elastic/elasticsearch#67884|https://github.com/elastic/elasticsearch/issues/67884]). was:We fail to close merged readers if the output segment contains no document. > Aborted merge can leak readers if the output is empty > - > > Key: LUCENE-9722 > URL: https://issues.apache.org/jira/browse/LUCENE-9722 > Project: Lucene - Core > Issue Type: Bug > Components: core/index >Affects Versions: master (9.0), 8.7 >Reporter: Nhat Nguyen >Assignee: Nhat Nguyen >Priority: Major > Time Spent: 10m > Remaining Estimate: 0h > > We fail to close the merged readers of an aborted merge if its output segment > contains no document. > This bug was discovered by a test in Elasticsearch > ([elastic/elasticsearch#67884|https://github.com/elastic/elasticsearch/issues/67884]). -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Updated] (LUCENE-9722) Aborted merge can leak readers if the output is empty

[ https://issues.apache.org/jira/browse/LUCENE-9722?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Nhat Nguyen updated LUCENE-9722: Status: Patch Available (was: Open) > Aborted merge can leak readers if the output is empty > - > > Key: LUCENE-9722 > URL: https://issues.apache.org/jira/browse/LUCENE-9722 > Project: Lucene - Core > Issue Type: Bug > Components: core/index >Affects Versions: master (9.0), 8.7 >Reporter: Nhat Nguyen >Assignee: Nhat Nguyen >Priority: Major > Time Spent: 10m > Remaining Estimate: 0h > > We fail to close merged readers if the output segment contains no document. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] dnhatn opened a new pull request #2288: LUCENE-9722: Close merged readers on abort

dnhatn opened a new pull request #2288: URL: https://github.com/apache/lucene-solr/pull/2288 We fail to close merged readers if the output segment contains no document. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Created] (LUCENE-9722) Aborted merge can leak readers if the output is empty

Nhat Nguyen created LUCENE-9722: --- Summary: Aborted merge can leak readers if the output is empty Key: LUCENE-9722 URL: https://issues.apache.org/jira/browse/LUCENE-9722 Project: Lucene - Core Issue Type: Bug Components: core/index Affects Versions: 8.7, master (9.0) Reporter: Nhat Nguyen Assignee: Nhat Nguyen We fail to close merged readers if the output segment contains no document. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] zhaih commented on a change in pull request #2213: LUCENE-9663: Adding compression to terms dict from SortedSet/Sorted DocValues

zhaih commented on a change in pull request #2213:

URL: https://github.com/apache/lucene-solr/pull/2213#discussion_r568246420

##

File path:

lucene/core/src/java/org/apache/lucene/codecs/lucene80/Lucene80DocValuesConsumer.java

##

@@ -736,49 +736,92 @@ private void doAddSortedField(FieldInfo field,

DocValuesProducer valuesProducer)

private void addTermsDict(SortedSetDocValues values) throws IOException {

final long size = values.getValueCount();

meta.writeVLong(size);

-meta.writeInt(Lucene80DocValuesFormat.TERMS_DICT_BLOCK_SHIFT);

+boolean compress =

+Lucene80DocValuesFormat.Mode.BEST_COMPRESSION == mode

Review comment:

Sorry for late response. I agree we could solve it in a follow-up issue.

And I could still test this via a customized PerFieldDocValuesFormat, thank you!

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] zhaih commented on pull request #2213: LUCENE-9663: Adding compression to terms dict from SortedSet/Sorted DocValues

zhaih commented on pull request #2213: URL: https://github.com/apache/lucene-solr/pull/2213#issuecomment-771265310 I see, so I think for now I could test it via a customized PerFieldDocValuesFormat, I'll give PerFieldDocValuesFormat route a try then. Tho IMO I would prefer a simpler configuration (as proposed by @jaisonbi) rather than customize using PerFieldDocValuesFormat in the future, if these 2 compression are showing different performance characteristic. Since if my understand is correct, to enable only TermDictCompression using PerFieldDOcValuesFormat we need to enumerate all SSDV field names in that class? Which sounds not quite maintainable if there's regularly field addition/deletion. Please correct me if I'm wrong as I'm not quite familiar with codec part... This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Commented] (SOLR-14330) Return docs with null value in expand for field when collapse has nullPolicy=collapse

[

https://issues.apache.org/jira/browse/SOLR-14330?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17276749#comment-17276749

]

ASF subversion and git services commented on SOLR-14330:

Commit 4a21f594c203fb219942dcbaebbd872dcb2cfd4d in lucene-solr's branch

refs/heads/branch_8x from Chris M. Hostetter

[ https://gitbox.apache.org/repos/asf?p=lucene-solr.git;h=4a21f59 ]

SOLR-14330: ExpandComponent now supports an expand.nullGroup=true option

(cherry picked from commit 15aaec60d9bfa96f2837c38b7ca83e2c87c66d8d)

> Return docs with null value in expand for field when collapse has

> nullPolicy=collapse

> -

>

> Key: SOLR-14330

> URL: https://issues.apache.org/jira/browse/SOLR-14330

> Project: Solr

> Issue Type: Wish

>Reporter: Munendra S N

>Assignee: Chris M. Hostetter

>Priority: Major

> Attachments: SOLR-14330.patch, SOLR-14330.patch

>

>

> When documents doesn't contain value for field then, with collapse either

> those documents could be either ignored(default), collapsed(one document is

> chosen) or expanded(all are returned). This is controlled by {{nullPolicy}}

> When {{nullPolicy}} is {{collapse}}, it would be nice to return all documents

> with {{null}} value in expand block if {{expand=true}}

> Also, when used with {{expand.field}}, even then we should return such

> documents

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Resolved] (SOLR-14330) Return docs with null value in expand for field when collapse has nullPolicy=collapse

[

https://issues.apache.org/jira/browse/SOLR-14330?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Chris M. Hostetter resolved SOLR-14330.

---

Fix Version/s: 8.9

master (9.0)

Resolution: Fixed

> Return docs with null value in expand for field when collapse has

> nullPolicy=collapse

> -

>

> Key: SOLR-14330

> URL: https://issues.apache.org/jira/browse/SOLR-14330

> Project: Solr

> Issue Type: Wish

>Reporter: Munendra S N

>Assignee: Chris M. Hostetter

>Priority: Major

> Fix For: master (9.0), 8.9

>

> Attachments: SOLR-14330.patch, SOLR-14330.patch

>

>

> When documents doesn't contain value for field then, with collapse either

> those documents could be either ignored(default), collapsed(one document is

> chosen) or expanded(all are returned). This is controlled by {{nullPolicy}}

> When {{nullPolicy}} is {{collapse}}, it would be nice to return all documents

> with {{null}} value in expand block if {{expand=true}}

> Also, when used with {{expand.field}}, even then we should return such

> documents

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Commented] (SOLR-14330) Return docs with null value in expand for field when collapse has nullPolicy=collapse

[

https://issues.apache.org/jira/browse/SOLR-14330?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17276725#comment-17276725

]

ASF subversion and git services commented on SOLR-14330:

Commit 15aaec60d9bfa96f2837c38b7ca83e2c87c66d8d in lucene-solr's branch

refs/heads/master from Chris M. Hostetter

[ https://gitbox.apache.org/repos/asf?p=lucene-solr.git;h=15aaec6 ]

SOLR-14330: ExpandComponent now supports an expand.nullGroup=true option

> Return docs with null value in expand for field when collapse has

> nullPolicy=collapse

> -

>

> Key: SOLR-14330

> URL: https://issues.apache.org/jira/browse/SOLR-14330

> Project: Solr

> Issue Type: Wish

>Reporter: Munendra S N

>Assignee: Chris M. Hostetter

>Priority: Major

> Attachments: SOLR-14330.patch, SOLR-14330.patch

>

>

> When documents doesn't contain value for field then, with collapse either

> those documents could be either ignored(default), collapsed(one document is

> chosen) or expanded(all are returned). This is controlled by {{nullPolicy}}

> When {{nullPolicy}} is {{collapse}}, it would be nice to return all documents

> with {{null}} value in expand block if {{expand=true}}

> Also, when used with {{expand.field}}, even then we should return such

> documents

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] dsmiley commented on a change in pull request #2230: SOLR-15011: /admin/logging handler is configured logs to all nodes

dsmiley commented on a change in pull request #2230: URL: https://github.com/apache/lucene-solr/pull/2230#discussion_r568204361 ## File path: solr/CHANGES.txt ## @@ -69,6 +69,8 @@ Improvements * SOLR-14949: Docker: Ability to customize the FROM image when building. (Houston Putman) +* SOLR-15011: /admin/logging handler should be able to configure logs on all nodes (Nazerke Seidan, David Smiley) Review comment: ```suggestion * SOLR-15011: /admin/logging handler will now propagate setLevel (log threshold) to all nodes when told to. The admin UI now tells it to. (Nazerke Seidan, David Smiley) ``` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Comment Edited] (LUCENE-9705) Move all codec formats to the o.a.l.codecs.Lucene90 package

[

https://issues.apache.org/jira/browse/LUCENE-9705?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17276599#comment-17276599

]

Julie Tibshirani edited comment on LUCENE-9705 at 2/1/21, 11:02 PM:

{quote}It's especially clear here where we must copy a lot of classes with no

change at all, merely to clearly and consistently document the index version

change.

{quote}

I’ll try to add some context since I suspect there might be misunderstanding.

In general when there is a new version like 9.1, we *do not* plan to create all

new index format classes. We only copy a class and move it to backwards-codecs

when there is a change to that specific format, for example {{PointsFormat}}.

This proposal applies only to the 9.0 release, and its main purpose is to

support the work in LUCENE-9047 to move all formats to little endian. My

understanding is that moving to little endian impacts all the formats and will

be much cleaner if we used these fresh {{Lucene90*Format}}.

{quote}I wonder if we (eventually) should consider shifting to a versioning

system that doesn't require new classes. Is this somehow a feature of the

service discovery API that we use?

{quote}

We indeed load codecs (with their formats) through service discovery. If a user

wants to read indices from a previous version, they can depend on

backwards-codecs so Lucene loads the correct older codec. As of LUCENE-9669, we

allow reading indices back to major version N-2.

I personally really like the current "copy-on-write" system for formats.

There’s code duplication, but it has advantages over combining different

version logic in the same file:

* It’s really clear how each version behaves. Having a direct copy like

{{Lucene70Codec}} is almost as if we were pulling in jars from Lucene 7.0.

* It decreases risk of introducing bugs or accidental changes. If you’re

making an enhancement to a new format, there’s little chance of changing the

logic for an old format (since it lives in a separate class). This is

especially important since older formats are not tested as thoroughly.

I started to appreciate it after experiencing the alternative in Elasticsearch,

where we’re constantly bumping into if/ else version checks when making changes.

was (Author: julietibs):

{quote}It's especially clear here where we must copy a lot of classes with no

change at all, merely to clearly and consistently document the index version

change.

{quote}

I’ll try to add some context since I suspect there might be misunderstanding.

In general when there is a new major version, we *do not* plan to create all

new index format classes. We only copy a class and move it to backwards-codecs

when there is a change to that specific format, for example {{PointsFormat}}.

This proposal applies only to the 9.0 release, and its main purpose is to

support the work in LUCENE-9047 to move all formats to little endian. My

understanding is that moving to little endian impacts all the formats and will

be much cleaner if we used these fresh {{Lucene90*Format}}.

{quote}I wonder if we (eventually) should consider shifting to a versioning

system that doesn't require new classes. Is this somehow a feature of the

service discovery API that we use?

{quote}

We indeed load codecs (with their formats) through service discovery. If a user

wants to read indices from a previous version, they can depend on

backwards-codecs so Lucene loads the correct older codec. As of LUCENE-9669, we

allow reading indices back to major version N-2.

I personally really like the current "copy-on-write" system for formats.

There’s code duplication, but it has advantages over combining different

version logic in the same file:

* It’s really clear how each version behaves. Having a direct copy like

{{Lucene70Codec}} is almost as if we were pulling in jars from Lucene 7.0.

* It decreases risk of introducing bugs or accidental changes. If you’re

making an enhancement to a new format, there’s little chance of changing the

logic for an old format (since it lives in a separate class). This is

especially important since older formats are not tested as thoroughly.

I started to appreciate it after experiencing the alternative in Elasticsearch,

where we’re constantly bumping into if/ else version checks when making changes.

> Move all codec formats to the o.a.l.codecs.Lucene90 package

> ---

>

> Key: LUCENE-9705

> URL: https://issues.apache.org/jira/browse/LUCENE-9705

> Project: Lucene - Core

> Issue Type: Wish

>Reporter: Ignacio Vera

>Priority: Major

> Time Spent: 50m

> Remaining Estimate: 0h

>

> Current formats are distributed in different packages, prefixed with the

> Lucene version they were created. With the upcoming release of Lucene 9.0, it

> would be nice

[jira] [Commented] (SOLR-8393) Component for Solr resource usage planning

[ https://issues.apache.org/jira/browse/SOLR-8393?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17276715#comment-17276715 ] Isabelle Giguere commented on SOLR-8393: New patch, off current master Parameter 'sizeUnit' is supported for both the SizeComponent, and ClusterSizing. If parameter 'sizeUnit' is present, values will be output as 'double', according to the chosen size unit. Value of 'estimated-num-docs' remains a 'long'. Default behavior, if 'sizeUnit' is not present is the human-readable format. Valid values for 'sizeUnit' are : GB, MB, KB, bytes ** Note about the implementation : ClusterSizing calls the SizeComponent via HTTP. So the returned results per collection are already formatted according to 'sizeUnit' (or lack of it). As a consequence, ClusterSizing needs to toggle back and forth between human-readable values, and raw long values, to support the requested 'sizeUnit'. I don't know how we could intercept the SizeComponent response, and receive just the long values, to make the conversion to some 'sizeUnit' just once in ClusterSizing, while keeping the formatting in SizeComponent, for use cases that would call it directly. A response transformer ? Would that be the right approach ? > Component for Solr resource usage planning > -- > > Key: SOLR-8393 > URL: https://issues.apache.org/jira/browse/SOLR-8393 > Project: Solr > Issue Type: Improvement >Reporter: Steve Molloy >Priority: Major > Attachments: SOLR-8393.patch, SOLR-8393.patch, SOLR-8393.patch, > SOLR-8393.patch, SOLR-8393.patch, SOLR-8393.patch, SOLR-8393.patch, > SOLR-8393.patch, SOLR-8393.patch, SOLR-8393_tag_7.5.0.patch > > > One question that keeps coming back is how much disk and RAM do I need to run > Solr. The most common response is that it highly depends on your data. While > true, it makes for frustrated users trying to plan their deployments. > The idea I'm bringing is to create a new component that will attempt to > extrapolate resources needed in the future by looking at resources currently > used. By adding a parameter for the target number of documents, current > resources are adapted by a ratio relative to current number of documents. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Updated] (SOLR-8393) Component for Solr resource usage planning

[ https://issues.apache.org/jira/browse/SOLR-8393?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Isabelle Giguere updated SOLR-8393: --- Attachment: SOLR-8393.patch > Component for Solr resource usage planning > -- > > Key: SOLR-8393 > URL: https://issues.apache.org/jira/browse/SOLR-8393 > Project: Solr > Issue Type: Improvement >Reporter: Steve Molloy >Priority: Major > Attachments: SOLR-8393.patch, SOLR-8393.patch, SOLR-8393.patch, > SOLR-8393.patch, SOLR-8393.patch, SOLR-8393.patch, SOLR-8393.patch, > SOLR-8393.patch, SOLR-8393.patch, SOLR-8393_tag_7.5.0.patch > > > One question that keeps coming back is how much disk and RAM do I need to run > Solr. The most common response is that it highly depends on your data. While > true, it makes for frustrated users trying to plan their deployments. > The idea I'm bringing is to create a new component that will attempt to > extrapolate resources needed in the future by looking at resources currently > used. By adding a parameter for the target number of documents, current > resources are adapted by a ratio relative to current number of documents. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] dweiss commented on a change in pull request #2267: LUCENE-9707: Hunspell: check Lucene's implementation against Hunspel's test data

dweiss commented on a change in pull request #2267:

URL: https://github.com/apache/lucene-solr/pull/2267#discussion_r568187636

##

File path:

lucene/analysis/common/src/test/org/apache/lucene/analysis/hunspell/SpellCheckerTest.java

##

@@ -61,59 +61,74 @@ public void needAffixOnAffixes() throws Exception {

doTest("needaffix5");

}

+ @Test

public void testBreak() throws Exception {

doTest("break");

}

- public void testBreakDefault() throws Exception {

+ @Test

+ public void breakDefault() throws Exception {

doTest("breakdefault");

}

- public void testBreakOff() throws Exception {

+ @Test

+ public void breakOff() throws Exception {

doTest("breakoff");

}

- public void testCompoundrule() throws Exception {

+ @Test

+ public void compoundrule() throws Exception {

doTest("compoundrule");

}

- public void testCompoundrule2() throws Exception {

+ @Test

+ public void compoundrule2() throws Exception {

doTest("compoundrule2");

}

- public void testCompoundrule3() throws Exception {

+ @Test

+ public void compoundrule3() throws Exception {

doTest("compoundrule3");

}

- public void testCompoundrule4() throws Exception {

+ @Test

+ public void compoundrule4() throws Exception {

doTest("compoundrule4");

}

- public void testCompoundrule5() throws Exception {

+ @Test

+ public void compoundrule5() throws Exception {

doTest("compoundrule5");

}

- public void testCompoundrule6() throws Exception {

+ @Test

+ public void compoundrule6() throws Exception {

doTest("compoundrule6");

}

- public void testCompoundrule7() throws Exception {

+ @Test

+ public void compoundrule7() throws Exception {

doTest("compoundrule7");

}

- public void testCompoundrule8() throws Exception {

+ @Test

+ public void compoundrule8() throws Exception {

doTest("compoundrule8");

}

- public void testGermanCompounding() throws Exception {

+ @Test

+ public void germanCompounding() throws Exception {

doTest("germancompounding");

}

protected void doTest(String name) throws Exception {

-InputStream affixStream =

-Objects.requireNonNull(getClass().getResourceAsStream(name + ".aff"),

name);

-InputStream dictStream =

-Objects.requireNonNull(getClass().getResourceAsStream(name + ".dic"),

name);

+checkSpellCheckerExpectations(

Review comment:

Ah... can't push to your repo (there is a checkbox to enable committers

to do so - please use it, makes edits easier :). Here is the commit:

https://github.com/dweiss/lucene-solr/commit/618a2d3b5bb51eb0e35322a9c56b97bdce7d728b

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] dweiss commented on a change in pull request #2267: LUCENE-9707: Hunspell: check Lucene's implementation against Hunspel's test data

dweiss commented on a change in pull request #2267:

URL: https://github.com/apache/lucene-solr/pull/2267#discussion_r568186778

##

File path:

lucene/analysis/common/src/test/org/apache/lucene/analysis/hunspell/SpellCheckerTest.java

##

@@ -61,59 +61,74 @@ public void needAffixOnAffixes() throws Exception {

doTest("needaffix5");

}

+ @Test

public void testBreak() throws Exception {

doTest("break");

}

- public void testBreakDefault() throws Exception {

+ @Test

+ public void breakDefault() throws Exception {

doTest("breakdefault");

}

- public void testBreakOff() throws Exception {

+ @Test

+ public void breakOff() throws Exception {

doTest("breakoff");

}

- public void testCompoundrule() throws Exception {

+ @Test

+ public void compoundrule() throws Exception {

doTest("compoundrule");

}

- public void testCompoundrule2() throws Exception {

+ @Test

+ public void compoundrule2() throws Exception {

doTest("compoundrule2");

}

- public void testCompoundrule3() throws Exception {

+ @Test

+ public void compoundrule3() throws Exception {

doTest("compoundrule3");

}

- public void testCompoundrule4() throws Exception {

+ @Test

+ public void compoundrule4() throws Exception {

doTest("compoundrule4");

}

- public void testCompoundrule5() throws Exception {

+ @Test

+ public void compoundrule5() throws Exception {

doTest("compoundrule5");

}

- public void testCompoundrule6() throws Exception {

+ @Test

+ public void compoundrule6() throws Exception {

doTest("compoundrule6");

}

- public void testCompoundrule7() throws Exception {

+ @Test

+ public void compoundrule7() throws Exception {

doTest("compoundrule7");

}

- public void testCompoundrule8() throws Exception {

+ @Test

+ public void compoundrule8() throws Exception {

doTest("compoundrule8");

}

- public void testGermanCompounding() throws Exception {

+ @Test

+ public void germanCompounding() throws Exception {

doTest("germancompounding");

}

protected void doTest(String name) throws Exception {

-InputStream affixStream =

-Objects.requireNonNull(getClass().getResourceAsStream(name + ".aff"),

name);

-InputStream dictStream =

-Objects.requireNonNull(getClass().getResourceAsStream(name + ".dic"),

name);

+checkSpellCheckerExpectations(

Review comment:

You can't really convert resource URLs to paths with url.getPath. This

breaks, as I suspected. On Windows you get:

```

java.nio.file.InvalidPathException: Illegal char <:> at index 2:

/C:/Work/apache/lucene/lucene.master/lucene/analysis/common/build/classes/java/test/org/apache/lucene/analysis/hunspell/i53643.aff

> at

__randomizedtesting.SeedInfo.seed([FE61D482FAEDBB53:CE18D8B46A2785A8]:0)

> at

java.base/sun.nio.fs.WindowsPathParser.normalize(WindowsPathParser.java:182)

> at

java.base/sun.nio.fs.WindowsPathParser.parse(WindowsPathParser.java:153)

> at

java.base/sun.nio.fs.WindowsPathParser.parse(WindowsPathParser.java:77)

> at java.base/sun.nio.fs.WindowsPath.parse(WindowsPath.java:92)

> at

java.base/sun.nio.fs.WindowsFileSystem.getPath(WindowsFileSystem.java:229)

> at java.base/java.nio.file.Path.of(Path.java:147)

```

A better method is to go through the URI - Path.of(url.toUri()). I've

modified the code slightly, please take a look.

Also, can you rename tests to follow TestXXX convention? This may be

enforced in the future and will spare somebody some work to rename.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Updated] (SOLR-15127) All-In-One Dockerfile for building local images as well as reproducible release builds directly from (remote) git tags

[

https://issues.apache.org/jira/browse/SOLR-15127?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Chris M. Hostetter updated SOLR-15127:

--

Attachment: SOLR-15127.patch

Status: Open (was: Open)

The attached patch implements this idea, and seems to work well -- there are

some nocommits, but they aren't neccessarily problems that need "fixed", so

much as comments to draw attention to some specific changes for discussion.

Basic usage is spelled out in the Dockerfile comments...

{noformat}

# This Dockerfile can be used in 2 distinct ways:

# 1) For Solr developers with a java/gradle development env, this file is used

by gradle to build docker images

#from your local builds (with or w/o local modifications). When doing

this, gradle will use a docker build context

#containing pre-built artifacts from previous gradle targets

# EX: ./gradlew -p solr/docker dockerBuild

#

# 2) Solr users, with or w/o a local java/gradle development env, can pass this

Dockerfile directly to docker build,

#using the root level checkout of the the project -- or a remote git URL --

as the docker build context. When doing

#this, docker will invoke gradle to build all neccessary artifacts

# EX: docker build --file solr/docker/Dockerfile .

# docker build --file solr/docker/Dockerfile

https://gitbox.apache.org/repos/asf/lucene-solr.git

# docker build --file solr/docker/Dockerfile

https://gitbox.apache.org/repos/asf/lucene-solr.git#branch_9x

#

# This last format is the method used by Solr Release Managers to build the

official apache/solr images uploaded to hub.docker.com

#

# EX: docker build --build-arg SOLR_VERSION=9.0.0 \

# --tag apache/solr:9.0.0 \

# --file solr/docker/Dockerfile \

#

https://gitbox.apache.org/repos/asf/lucene-solr.git#releases/lucene-solr/9.0.0

{noformat}

...allthough the direct "docker build" usage could be drastically simplified

once solr has it's own TLP/git repo if we're willing to keep the Dockerfile in

the root of the repo.

[~houstonputman] / [~dsmiley] / [~janhoy]: what do you guys think of this

overall approach?

> All-In-One Dockerfile for building local images as well as reproducible

> release builds directly from (remote) git tags

> --

>

> Key: SOLR-15127

> URL: https://issues.apache.org/jira/browse/SOLR-15127

> Project: Solr

> Issue Type: Sub-task

> Security Level: Public(Default Security Level. Issues are Public)

>Reporter: Chris M. Hostetter

>Priority: Major

> Attachments: SOLR-15127.patch

>

>

> There was a recent dev@lucene discussion about the future of the

> github/docker-solr repo and (Apache) "official" solr docker images and using

> the "apache/solr" nameing vs (docker-library official) "_/solr" names...

> http://mail-archives.apache.org/mod_mbox/lucene-dev/202101.mbox/%3CCAD4GwrNCPEnAJAjy4tY%3DpMeX5vWvnFyLe9ZDaXmF4J8XchA98Q%40mail.gmail.com%3E

> In that disussion, mak pointed out that docker-library evidently allows for

> some more flexibility in the way "official" docker-library packages can be

> built (compared to the rules that were evidnlty in place when the mak setup

> the current docker-solr image building process/tooling), pointing out how the

> "docker official" elasticsearch images are current built from the "elastic

> official" elasticsearch images...

> http://mail-archives.apache.org/mod_mbox/lucene-dev/202101.mbox/%3C3CED9683-1DD2-4F08-97F9-4FC549EDE47D%40greenhills.co.uk%3E

> Based on this, I proposed that we could probably restructure the Solr

> Dockerfile so that it could be useful for both "local development" -- using

> the current repo checkout -- as well as for "apache official" apache/solr

> images that could be reproducibly built directly from pristine git tags using

> the remote git URL syntax supported by "docker build" (and then -- evidently

> -- extended by trivial one line Dockerfiles for the "docker-library official"

> _/solr images)...

> http://mail-archives.apache.org/mod_mbox/lucene-dev/202101.mbox/%3Calpine.DEB.2.21.2101221423340.16298%40slate%3E

> This jira tracks this idea.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] dweiss commented on a change in pull request #2267: LUCENE-9707: Hunspell: check Lucene's implementation against Hunspel's test data

dweiss commented on a change in pull request #2267:

URL: https://github.com/apache/lucene-solr/pull/2267#discussion_r568176360

##

File path:

lucene/analysis/common/src/test/org/apache/lucene/analysis/hunspell/TestsFromOriginalHunspellRepository.java

##

@@ -0,0 +1,71 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.lucene.analysis.hunspell;

+

+import java.io.IOException;

+import java.nio.file.DirectoryStream;

+import java.nio.file.Files;

+import java.nio.file.Path;

+import java.text.ParseException;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.Set;

+import java.util.TreeSet;

+import java.util.stream.Collectors;

+import org.junit.Test;

+import org.junit.runner.RunWith;

+import org.junit.runners.Parameterized;

+

+/**

+ * Same as {@link SpellCheckerTest}, but checks all Hunspell's test data. The

path to the checked

+ * out Hunspell repository should be in {@code -Dhunspell.repo.path=...}

system property.

+ */

+@RunWith(Parameterized.class)

Review comment:

Filed an issue for myself here:

https://github.com/randomizedtesting/randomizedtesting/issues/295.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Created] (SOLR-15127) All-In-One Dockerfile for building local images as well as reproducible release builds directly from (remote) git tags

Chris M. Hostetter created SOLR-15127: - Summary: All-In-One Dockerfile for building local images as well as reproducible release builds directly from (remote) git tags Key: SOLR-15127 URL: https://issues.apache.org/jira/browse/SOLR-15127 Project: Solr Issue Type: Sub-task Security Level: Public (Default Security Level. Issues are Public) Reporter: Chris M. Hostetter There was a recent dev@lucene discussion about the future of the github/docker-solr repo and (Apache) "official" solr docker images and using the "apache/solr" nameing vs (docker-library official) "_/solr" names... http://mail-archives.apache.org/mod_mbox/lucene-dev/202101.mbox/%3CCAD4GwrNCPEnAJAjy4tY%3DpMeX5vWvnFyLe9ZDaXmF4J8XchA98Q%40mail.gmail.com%3E In that disussion, mak pointed out that docker-library evidently allows for some more flexibility in the way "official" docker-library packages can be built (compared to the rules that were evidnlty in place when the mak setup the current docker-solr image building process/tooling), pointing out how the "docker official" elasticsearch images are current built from the "elastic official" elasticsearch images... http://mail-archives.apache.org/mod_mbox/lucene-dev/202101.mbox/%3C3CED9683-1DD2-4F08-97F9-4FC549EDE47D%40greenhills.co.uk%3E Based on this, I proposed that we could probably restructure the Solr Dockerfile so that it could be useful for both "local development" -- using the current repo checkout -- as well as for "apache official" apache/solr images that could be reproducibly built directly from pristine git tags using the remote git URL syntax supported by "docker build" (and then -- evidently -- extended by trivial one line Dockerfiles for the "docker-library official" _/solr images)... http://mail-archives.apache.org/mod_mbox/lucene-dev/202101.mbox/%3Calpine.DEB.2.21.2101221423340.16298%40slate%3E This jira tracks this idea. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

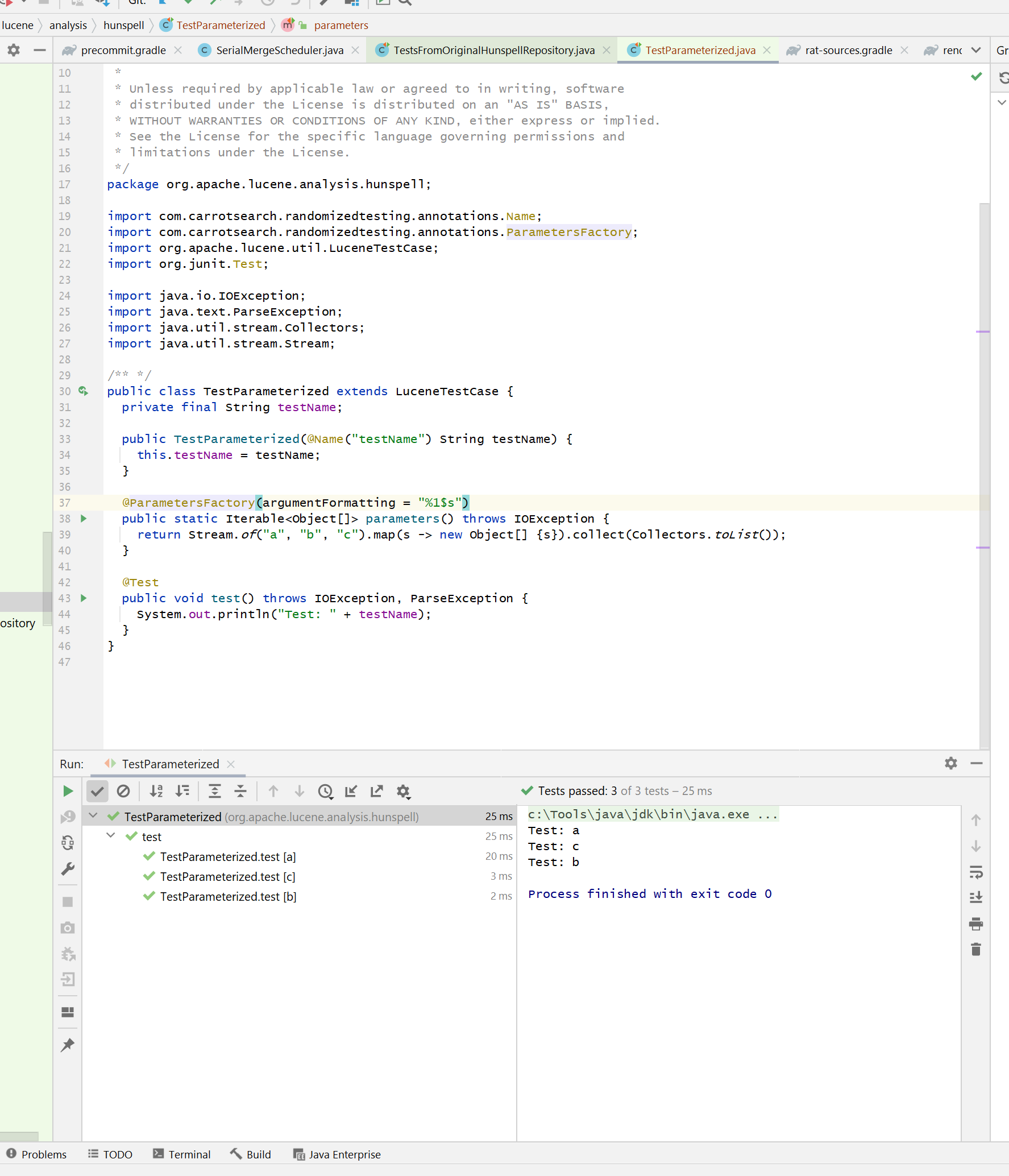

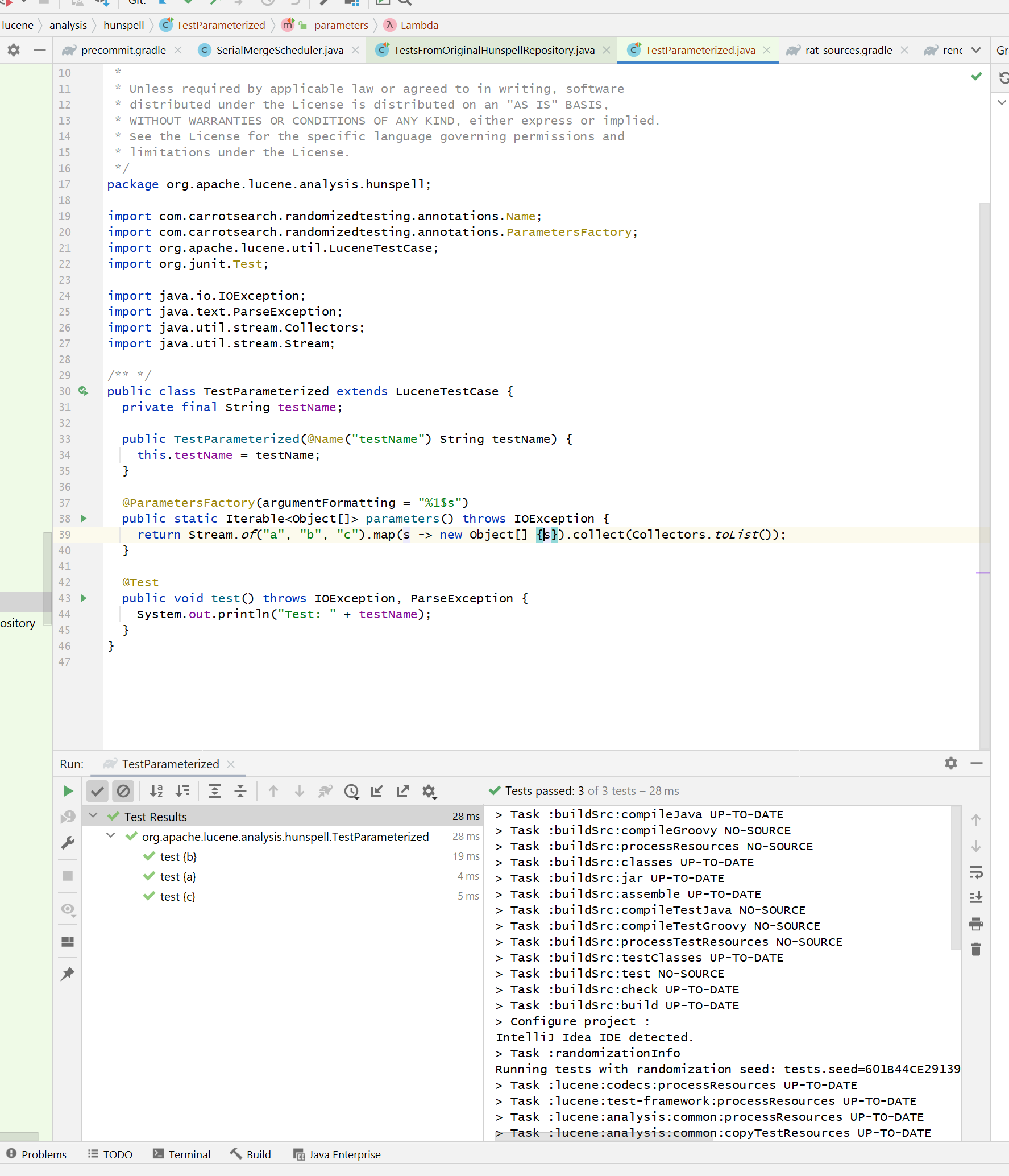

[GitHub] [lucene-solr] dweiss commented on a change in pull request #2267: LUCENE-9707: Hunspell: check Lucene's implementation against Hunspel's test data

dweiss commented on a change in pull request #2267:

URL: https://github.com/apache/lucene-solr/pull/2267#discussion_r568175124

##

File path:

lucene/analysis/common/src/test/org/apache/lucene/analysis/hunspell/TestsFromOriginalHunspellRepository.java

##

@@ -0,0 +1,71 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.lucene.analysis.hunspell;

+

+import java.io.IOException;

+import java.nio.file.DirectoryStream;

+import java.nio.file.Files;

+import java.nio.file.Path;

+import java.text.ParseException;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.Set;

+import java.util.TreeSet;

+import java.util.stream.Collectors;

+import org.junit.Test;

+import org.junit.runner.RunWith;

+import org.junit.runners.Parameterized;

+

+/**

+ * Same as {@link SpellCheckerTest}, but checks all Hunspell's test data. The

path to the checked

+ * out Hunspell repository should be in {@code -Dhunspell.repo.path=...}

system property.

+ */

+@RunWith(Parameterized.class)

Review comment:

I checked intellij and parameterized tests tonight. It's what I was

afraid of - test descriptions are emitted correctly (in my opinion) but they're

*interepreted* differently depending on the tool (and time when you check...).

The reason why you see the class name and test method before each actual

test is because these supposedly "hidden" elements allowed tools to go back to

the source code of a test with an arbitrary name (if you double-click on a test

in IntelliJ it will take you back to the test method). Relaunching of a single

test must have changed at some point because it used to be an exact name

filter... but now I it just reruns all tests under a test method (all parameter

variations).

It's worth mentioning that this isn't consistent even in IntelliJ itself -

if I run a simple(r) parameterized test via IntelliJ launcher, I get this test

suite tree:

But when I run the same test via gradle launcher (from within the IDE), I

get this tree:

I don't know if there is a way to make all the tools happy; test

descriptions and nesting is broken in JUnit 4.x itself.

Given the above, please feel free to revert back to what works for you. I'd

name the test class TestHunspellRepositoryTestCases for clarity. Also, this

test will not run under Lucene test framework because the security manager

won't let you access arbitrary paths outside the build location. You'd need to

add this to tests.policy:

```

permission java.io.FilePermission "${hunspell.repo.path}${/}-", "read";

```

Don't know whether it's worth it at the moment though.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Resolved] (SOLR-15125) Link to docs is brroken

[ https://issues.apache.org/jira/browse/SOLR-15125?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Cassandra Targett resolved SOLR-15125. -- Resolution: Fixed The problem has been fixed and the docs are available again. > Link to docs is brroken > --- > > Key: SOLR-15125 > URL: https://issues.apache.org/jira/browse/SOLR-15125 > Project: Solr > Issue Type: Bug > Security Level: Public(Default Security Level. Issues are Public) > Components: website >Reporter: Thomas Güttler >Priority: Minor > > [On this page: > https://lucene.apache.org/solr/guide/|https://lucene.apache.org/solr/guide/] > the link to [https://lucene.apache.org/solr/guide/8_8/] > is broken. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Commented] (LUCENE-9718) REGEX Pattern Search, character classes with quantifiers do not work

[

https://issues.apache.org/jira/browse/LUCENE-9718?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17276685#comment-17276685

]

Brian Feldman commented on LUCENE-9718:

---

1) User level documentation upstream in Solr or ElasticSearch there is limited

documentation. Receiving no error or results back from a search system, some

users might simply believe no matches exist, and not that their syntax is not

supported. I did not realize it was an issue until playing around with it.

2) Besides being documented, the code can be improved, only the initial parsing

code would need updating. It does not affect logic for the running of the

automaton. And since there is already code to support the character classes,

logically the parsing code should be completed to support the trailing

quantifiers, in order to finish the implementation for character classes.

> REGEX Pattern Search, character classes with quantifiers do not work

>

>

> Key: LUCENE-9718

> URL: https://issues.apache.org/jira/browse/LUCENE-9718

> Project: Lucene - Core

> Issue Type: Bug

> Components: core/search

>Affects Versions: 7.7.3, 8.6.3

>Reporter: Brian Feldman

>Priority: Minor

> Labels: Documentation, RegEx

>

> Character classes with a quantifier do not work, no error is given and no

> results are returned. For example \d\{2} or \d\{2,3} as is commonly written

> in most languages supporting regular expressions, simply and quietly does not

> work. A user work around is to write them fully out such as \d\d or

> [0-9][0-9] or as [0-9]\{2,3} .

>

> This inconsistency or limitation is not documented, wasting the time of users

> as they have to figure this out themselves. I believe this inconsistency

> should be clearly documented and an effort to fixing the inconsistency would

> improve pattern searching.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr-operator] thelabdude commented on a change in pull request #151: Integrate with cert-manager to issue TLS certs for Solr

thelabdude commented on a change in pull request #151:

URL:

https://github.com/apache/lucene-solr-operator/pull/151#discussion_r568152014

##

File path: main.go

##

@@ -65,6 +69,7 @@ func init() {

_ = solrv1beta1.AddToScheme(scheme)

_ = zkv1beta1.AddToScheme(scheme)

+ _ = certv1.AddToScheme(scheme)

// +kubebuilder:scaffold:scheme

flag.BoolVar(, "zk-operator", true, "The operator will

not use the zk operator & crd when this flag is set to false.")

Review comment:

From a reconcile perspective, we really only care about the TLS secret

that cert-manager creates once the Certificate is issued. The "watching" of the

Certificate to come online is really for status reporting while the cert is

issuing as it can take several minutes for the cert to be issued. Notice the

`isCertificateReady` is mostly about checking for the TLS secret.

The operator does create a Certificate for `autoCreate` mode but in that

case, the cert definition should come from the SolrCloud CRD and we don't want

to let users edit the Certificate externally; this is similar to the default

`solr.xml` ConfigMap and any direct edits to that cm are lost, same with

`autoCreate` certs.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] mrsoong closed pull request #1589: SOLR-13195: added check for missing shards param in SearchHandler

mrsoong closed pull request #1589: URL: https://github.com/apache/lucene-solr/pull/1589 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] mrsoong closed pull request #1472: SOLR-13184: Added some input validation in ValueSourceParser

mrsoong closed pull request #1472: URL: https://github.com/apache/lucene-solr/pull/1472 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr-operator] HoustonPutman commented on a change in pull request #151: Integrate with cert-manager to issue TLS certs for Solr

HoustonPutman commented on a change in pull request #151:

URL:

https://github.com/apache/lucene-solr-operator/pull/151#discussion_r568148914

##

File path: main.go

##

@@ -65,6 +69,7 @@ func init() {

_ = solrv1beta1.AddToScheme(scheme)

_ = zkv1beta1.AddToScheme(scheme)

+ _ = certv1.AddToScheme(scheme)

// +kubebuilder:scaffold:scheme

flag.BoolVar(, "zk-operator", true, "The operator will

not use the zk operator & crd when this flag is set to false.")

Review comment:

Ahh sorry for the confusion.

Yeah, it looks like Solr Operator is creating its own Secrets as well as

finding secrets created by CertManager. If that's the case, then I think we

will need both "Owns" and "Watches" with similar logic to the ConfigMaps. But I

may be wrong there, this is uncharted territory.

Are we sure we don't need to own Certificates? We wait for them to come

online, so we want to be notified when they have condition changes, right?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr-operator] thelabdude commented on a change in pull request #151: Integrate with cert-manager to issue TLS certs for Solr

thelabdude commented on a change in pull request #151:

URL:

https://github.com/apache/lucene-solr-operator/pull/151#discussion_r568141856

##

File path: main.go

##

@@ -65,6 +69,7 @@ func init() {

_ = solrv1beta1.AddToScheme(scheme)

_ = zkv1beta1.AddToScheme(scheme)

+ _ = certv1.AddToScheme(scheme)

// +kubebuilder:scaffold:scheme

flag.BoolVar(, "zk-operator", true, "The operator will

not use the zk operator & crd when this flag is set to false.")

Review comment:

Ok I see, I was confused because you put the comment on the

`AddToScheme` line so thought the problem was about that line of code.

I don't think the Solr operator needs to own `Certificate` objects ... all

it cares about is the TLS secret that gets created by the cert-manager in

response to a change to the `Certificate`. It seems like the secret changing

does trigger a reconcile in my testing but maybe we need to add a specific

watch for that secret changing like you did for user-provided ConfigMaps?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[GitHub] [lucene-solr] dweiss commented on a change in pull request #2258: LUCENE-9686: Fix read past EOF handling in DirectIODirectory

dweiss commented on a change in pull request #2258:

URL: https://github.com/apache/lucene-solr/pull/2258#discussion_r568136570

##

File path:

lucene/misc/src/java/org/apache/lucene/misc/store/DirectIODirectory.java

##

@@ -381,17 +377,18 @@ public long length() {

@Override

public byte readByte() throws IOException {

if (!buffer.hasRemaining()) {

-refill();

+refill(1);

}

+

return buffer.get();

}

-private void refill() throws IOException {

+private void refill(int byteToRead) throws IOException {

Review comment:

Should it be plural (bytesToRead)?

##

File path:

lucene/misc/src/java/org/apache/lucene/misc/store/DirectIODirectory.java

##

@@ -381,17 +377,18 @@ public long length() {

@Override

public byte readByte() throws IOException {

if (!buffer.hasRemaining()) {

-refill();

+refill(1);

}

+

return buffer.get();

}

-private void refill() throws IOException {

+private void refill(int byteToRead) throws IOException {

filePos += buffer.capacity();

// BaseDirectoryTestCase#testSeekPastEOF test for consecutive read past

EOF,

// hence throwing EOFException early to maintain buffer state (position

in particular)

- if (filePos > channel.size()) {

+ if (filePos > channel.size() || (channel.size() - filePos < byteToRead))

{

Review comment:

I wonder if we should move the channel's position to actually point

after the last byte, then throw EOFException... so that not only we indicate an

EOF but also leave the channel pointing at the end. I have a scenario in my

mind when somebody tries to read a bulk of bytes, hits an eof but then a

single-byte read() succeeds. That would be awkward, wouldn't it?

A refill should try to read as many bytes as it can (min(channel.size()

-filePos, bytesToRead)), then potentially fail if bytesToRead is still >0 and

channel is at EOF. Or is my thinking flawed somewhere?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: issues-unsubscr...@lucene.apache.org

For additional commands, e-mail: issues-h...@lucene.apache.org

[jira] [Commented] (SOLR-8319) NPE when creating pivot